In a paper published in Nature, we introduce FunSearch, a method that searches for “functions” written in computer code and uses them to find new solutions in mathematics and computer science. FunSearch works by pairing a pre-trained LLM, whose goal is to provide creative solutions in the form of computer code, with an automated “evaluator”, which guards against hallucinations and incorrect ideas.
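To make the pairing concrete, here is a minimal Python sketch of a propose-and-evaluate loop. It is an illustration under stated assumptions, not the FunSearch implementation: `propose` is a hypothetical stand-in for the LLM that simply cycles through a fixed list of candidate programs, the toy objective (match `x * x` on a few inputs) is invented for the example, and `evaluate` runs candidates with `exec` without any sandboxing.

```python
from typing import Optional, Tuple

# Hypothetical candidate programs standing in for LLM output.
CANDIDATES = [
    "def f(x): return x + x",
    "def f(x): return undefined_name",  # runs, but fails when called
    "def f(x) return x",                # does not even parse
    "def f(x): return x * x",
    "def f(x): return x ** 3",
]


def propose(step: int) -> str:
    """Stand-in for the LLM (assumption): return one candidate program."""
    return CANDIDATES[step % len(CANDIDATES)]


def evaluate(program_src: str) -> Optional[float]:
    """Run a candidate and score it; return None if it is invalid.

    Toy objective (assumption): f should equal x * x for x in 0..4.
    Higher scores are better, and 0 is a perfect score.
    """
    namespace: dict = {}
    try:
        exec(program_src, namespace)  # no sandboxing in this sketch
        f = namespace["f"]
        return -sum(abs(f(x) - x * x) for x in range(5))
    except Exception:
        # Broken or "hallucinated" code is rejected instead of trusted.
        return None


def search(iterations: int = 10) -> Tuple[str, float]:
    """Keep the best-scoring valid program seen so far."""
    best_src = "def f(x): return 0"
    best_score = evaluate(best_src)
    for step in range(iterations):
        candidate = propose(step)
        score = evaluate(candidate)
        if score is not None and score > best_score:
            best_src, best_score = candidate, score
    return best_src, best_score


if __name__ == "__main__":
    print(search())
```

The point of the sketch is the division of labour: the proposer is free to be wrong, because only programs that actually run and score well under the evaluator are kept.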

Google DeepMind at NeurIPS 2023

The Conference on Neural Information Processing Systems (NeurIPS) is the largest artificial intelligence (AI) conference in the world. NeurIPS 2023 takes place December 10-16 in New Orleans, USA. Teams from across Google DeepMind are presenting more than 150 papers at the main conference and workshops.

Introducing Gemini: our largest and most capable AI model

Making AI more helpful for everyone

Millions of new materials discovered with deep learning

We share the discovery of 2.2 million new crystals – equivalent to nearly 800 years’ worth of knowledge. We introduce Graph Networks for Materials Exploration (GNoME), our new deep learning tool that dramatically increases the speed and efficiency of discovery by predicting the stability of new materials.

Transforming the future of music creation

Announcing our most advanced music generation model and two new AI experiments, designed to open a new playground for creativity

Empowering the next generation for an AI-enabled world

Experience AI’s course and resources are expanding on a global scale

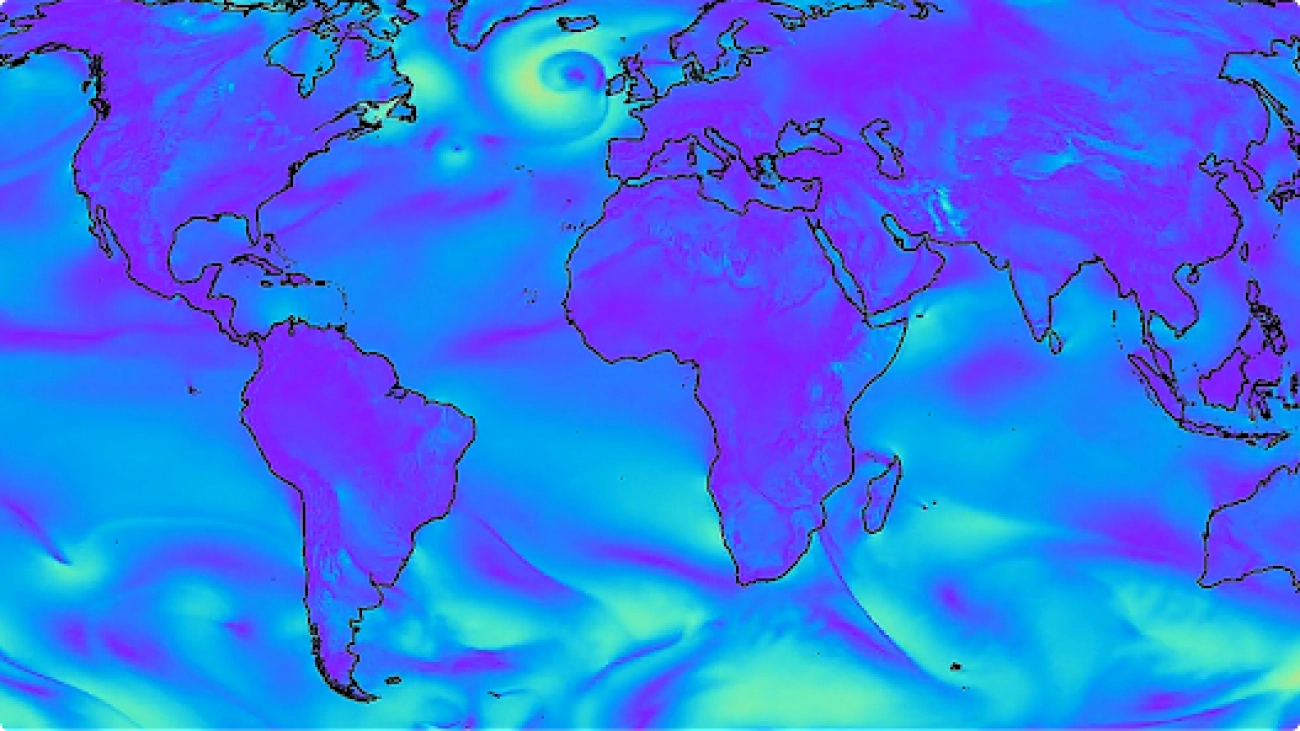

GraphCast: AI model for faster and more accurate global weather forecasting

We introduce GraphCast, a state-of-the-art AI model able to make medium-range weather forecasts with unprecedented accuracy

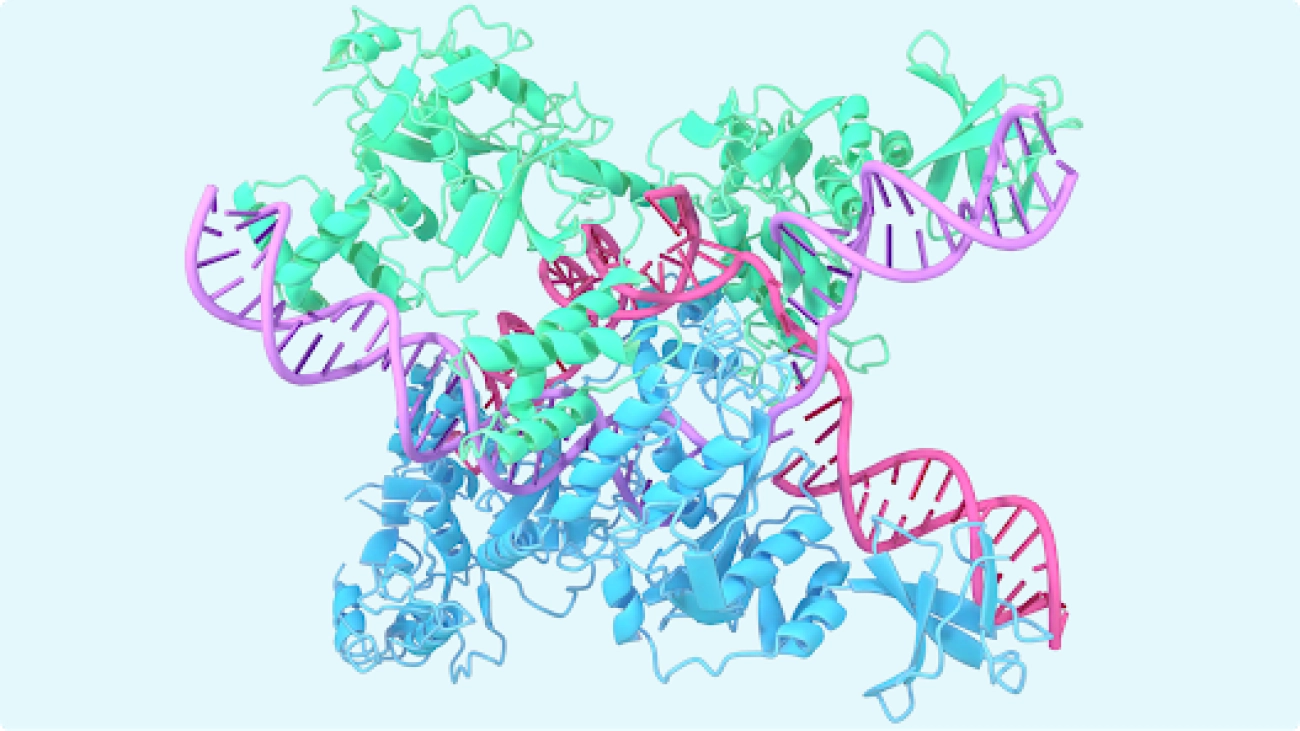

A glimpse of the next generation of AlphaFold

Progress update: Our latest AlphaFold model shows significantly improved accuracy and expands coverage beyond proteins to other biological molecules, including ligands.

Evaluating social and ethical risks from generative AI

Introducing a context-based framework for comprehensively evaluating the social and ethical risks of AI systems

Generative AI systems are already being used to write books, create graphic designs, and assist medical practitioners, and they are becoming increasingly capable. Ensuring these systems are developed and deployed responsibly requires carefully evaluating the potential ethical and social risks they may pose.

In our paper, we propose a three-layered framework for evaluating the social and ethical risks of AI systems. This framework includes evaluations of AI system capability, human interaction, and systemic impacts.

We also map the current state of safety evaluations and find three main gaps: context, specific risks, and multimodality. To help close these gaps, we call for repurposing existing evaluation methods for generative AI and for implementing a comprehensive approach to evaluation, as in our case study on misinformation. This approach integrates findings such as how likely the AI system is to provide factually incorrect information with insights on how people use that system, and in what context. Multi-layered evaluations can draw conclusions beyond model capability and indicate whether harm (in this case, misinformation) actually occurs and spreads.

To make any technology work as intended, both social and technical challenges must be solved. So to better assess AI system safety, these different layers of context must be taken into account. Here, we build upon earlier research identifying the potential risks of large-scale language models, such as privacy leaks, job automation, and misinformation, and introduce a way of comprehensively evaluating these risks going forward.