The future of healthcare is software-defined and AI-enabled. Around 700 FDA-cleared, AI-enabled medical devices are now on the market — more than 10x the number available in 2020.

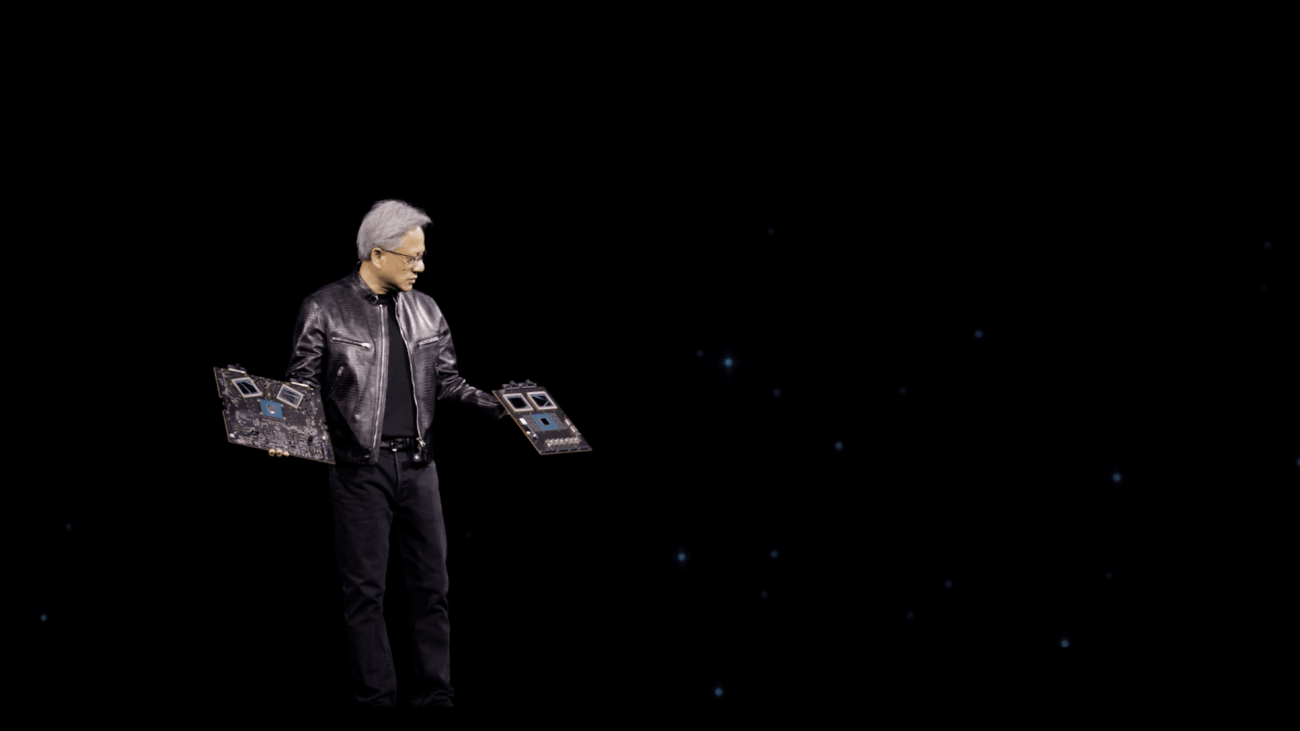

Many of the innovators behind this boom announced their latest AI-powered solutions at NVIDIA GTC, a global conference that last week attracted more than 16,000 business leaders, developers and researchers in Silicon Valley — and many more online.

Designed to make healthcare more efficient and help improve patient outcomes, these new technologies include foundation models to accelerate ultrasound analysis, augmented and virtual reality solutions for cardiac imaging, and generative AI software to support surgeons.

Shifting From Hardware to Software-Defined Medical Devices

Medical devices have long been hardware-centric, relying on intricate designs and precise engineering. They’re now shifting to be software-defined, meaning they can be enhanced over time through software updates — the same way that smartphones can be upgraded with new apps and features for years before a user upgrades to a new device.

This new approach, supported by NVIDIA’s domain-specific platforms for real-time accelerated computing, is taking center stage because of its potential to transform patient care, increase efficiencies, enhance the clinician experience and drive better outcomes.

Leading medtech companies such as GE Healthcare are using NVIDIA technology to develop, fine-tune and deploy AI for software-defined medical imaging applications.

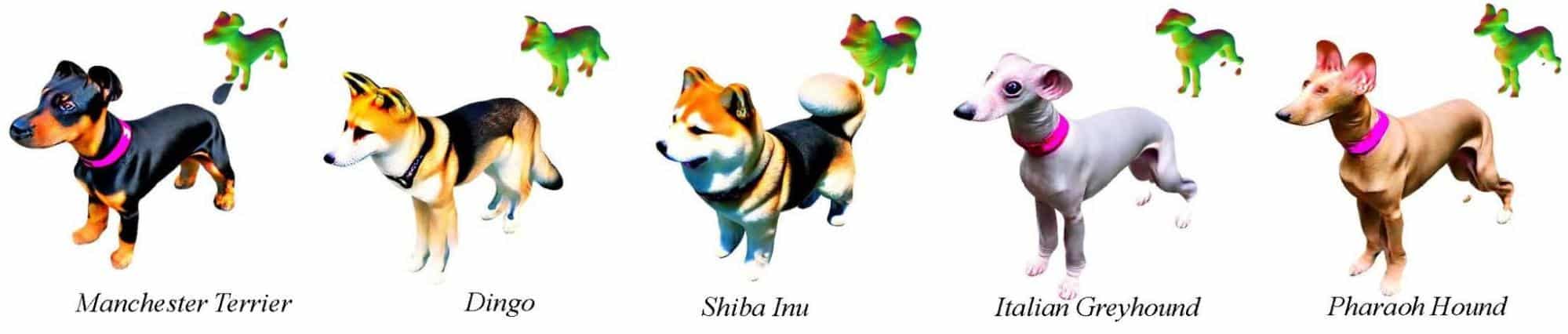

GE Healthcare announced at GTC that it used NVIDIA tools including the TensorRT software development kit to develop and optimize SonoSAMTrack, a recent research foundation model that delineates and tracks organs, structures or lesions across medical images with just a few clicks. The research model has the potential to simplify and speed up ultrasound analysis for healthcare professionals.

Powering the Next Generation of Digital Surgery

With the NVIDIA IGX edge computing platform and NVIDIA Holoscan medical-grade edge AI platform, medical device companies are accelerating the development and deployment of AI-powered innovation in the operating room.

Johnson & Johnson MedTech is working with NVIDIA to test new AI capabilities for the company’s connected digital ecosystem for surgery. It aims to enable open innovation and accelerate the delivery of real-time insights at scale to support medical professionals before, during and after procedures.

Paris-based robotic surgery company Moon Surgical is using Holoscan and IGX to power its Maestro System, which is used in laparoscopy, a technique where surgeons operate through small incisions with an internal camera and instruments.

Maestro’s ScoPilot enables surgeons to control a laparoscope without taking their hands off other surgical tools during an operation. To date, it has been used to successfully treat more than 200 patients.

Moon Surgical and NVIDIA are also collaborating to bring generative AI features to the operating room using Maestro and Holoscan.

NVIDIA Platforms Power Thriving Medtech Ecosystem

A growing number of medtech companies and solution providers are making it easier for customers to adopt NVIDIA’s edge AI platforms to enhance and accelerate healthcare.

Arrow Electronics is delivering IGX as a subscription-like platform-as-a-service for industrial and medical customers. Customers who have adopted Arrow’s business model to accelerate application deployment include Kaliber AI, a company developing AI tools to assist minimally invasive surgery. At GTC, Kaliber showcased AI-generated insights for surgeons and a large language model to respond to patient questions.

Global visualization leader Barco is adopting Holoscan and IGX to build a turnkey surgical AI platform for customers seeking an off-the-shelf offering that allows them to focus their engineering resources on application development. The company is working with SoftAcuity on two Holoscan-based products that will include generative AI voice control and AI-powered data analytics.

And Magic Leap has integrated Holoscan in its extended reality software stack, enhancing the capabilities of customers like Medical iSight — a software developer building real-time, intraoperative support for minimally invasive treatments of stroke and neurovascular conditions.

Learn more about NVIDIA-accelerated medtech.

Get started on NVIDIA NGC or visit ai.nvidia.com to experiment with more than two dozen healthcare microservices.
