On June 7, 2025, PyTorch Day China was held in Beijing, co-hosted by PyTorch Foundation and the Beijing Academy of Artificial Intelligence (BAAI). The one-day conference featured 16 talks and averaged 160 participants per session. Explore the full YouTube playlist to find sessions that interest you.

Matt White, Executive Director of the PyTorch Foundation, delivered key insights into PyTorch Foundation’s commitment to accelerating open source AI. Since its establishment two years ago, the foundation has grown to 30 members and evolved into an umbrella foundation capable of hosting open source projects beyond PyTorch core. vLLM and DeepSpeed became the first projects under the Foundation umbrella, BAAI’s open source project FlagGems also joined the PyTorch Ecosystem. The PyTorch Ambassador Program, launched to support local community development, received over 200 applications within a month. Matt also introduced the new PyTorch website, as well as the schedules for PyTorch Conference and Open Source AI Week. He mentioned the Foundation’s upcoming initiatives, including the Speaker Bureau, university collaborations, and training certifications, thanked the attendees, and expressed anticipation for the day’s speeches.

2. Running Large Models on Diverse AI Chips: PyTorch + Open Source Stack (FlagOS) for Architecture-Free Deployment

Yonghua Lin, Vice President of the Beijing Academy of Artificial Intelligence, discussed the current status of running large models on diverse AI chips. She explained the rationale behind building a unified open source system software stack: large models face challenges such as high costs, massive resource demands, and expensive training/inference, while the fragmented global AI accelerator ecosystem creates additional issues. She then introduced FlagOS, developed by BAAI in collaboration with multiple partners, including core components and essential tools, supporting various underlying chips and system deployment architectures, as well as multiple large models. It has gained support from various architectures and demonstrated outstanding performance in operator efficiency and compatibility. Finally, she called for more teams to participate in building this open source ecosystem.

3. Diving in Hugging Face Hub; Share Your Model Weights on the #1 AI Hub, Home of 700k+ PyTorch Models

Tiezhen Wang from HuggingFace introduced the HuggingFace Hub, an open source AI community often referred to as the “GitHub of AI.” It hosts a vast number of open source models and datasets, along with diverse features: spaces for easily testing models, kernels, API provider gateways, social communication functions, and open source-related metrics. Its model library offers convenient filtering by popularity and task, with a trending models page featuring various hot models. Each model has a dedicated page displaying model cards, code, and structured data. For datasets, it supports git repositories, provides visualization and SQL query functions, and offers a powerful programming interface.

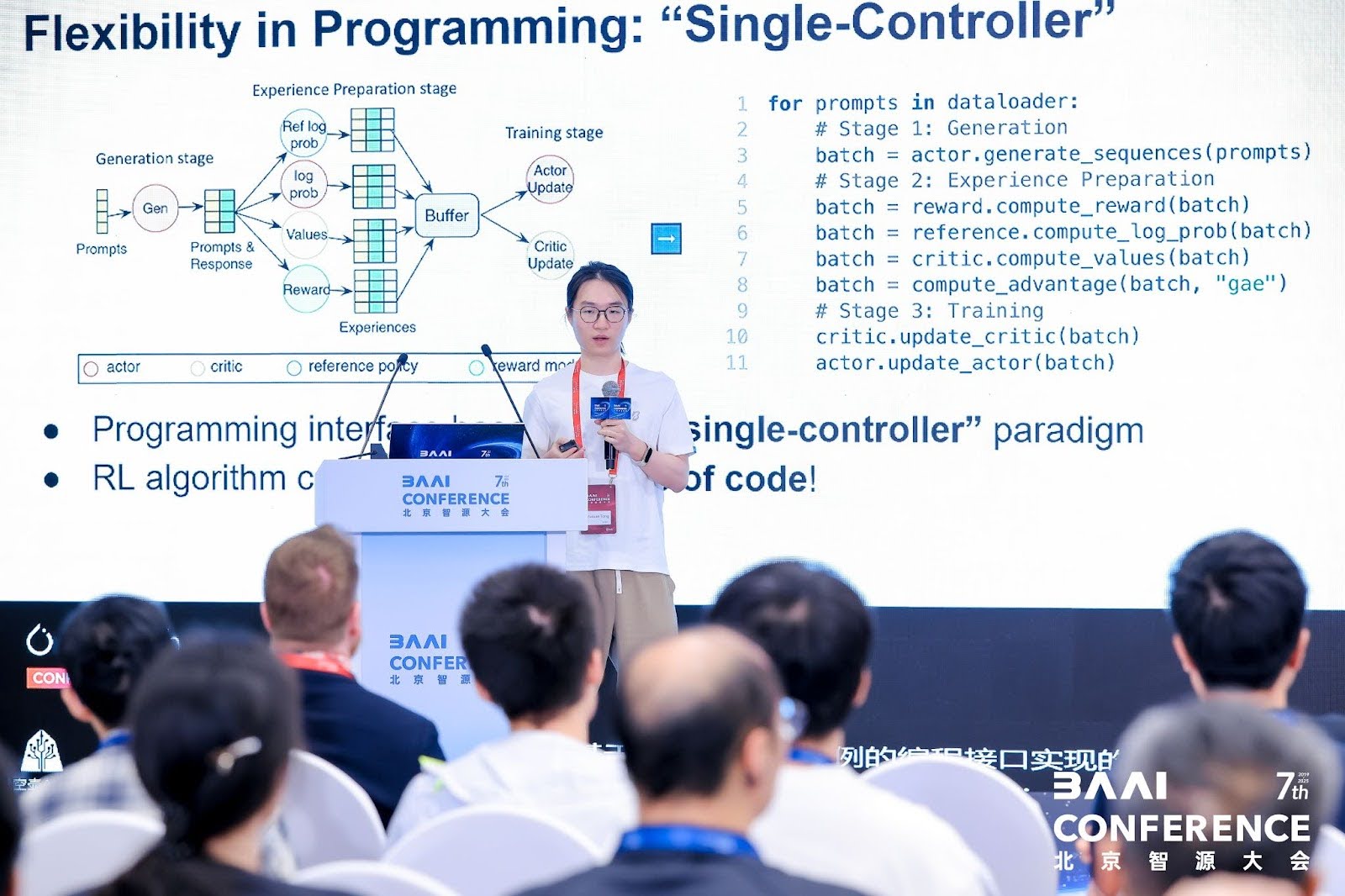

4. verl: An Open Source Large Scale LLM RL Framework for Agentic Tasks

Yuxuan Tong from ByteDance introduced verl, an open source large-scale LLM Reinforcement Learning framework. He first emphasized the importance of large-scale RL, which significantly enhances language model performance and has wide applications in real-world tasks. However, it faces challenges such as complex data flows (involving multiple models, stages, and workloads), distributed workloads, and the need to balance data dependencies and resource constraints. Verl’s strengths lie in balancing flexibility and efficiency: it achieves programming flexibility through a single-controller paradigm, allowing core logic to be described with minimal code and supporting multiple algorithms, and it features a hybrid engine to optimize resource utilization. The framework has an active open source community, with several popular projects built on it. Finally, he shared the community’s future roadmap and welcomed new members.

5. PyTorch in China: Community Growth, Localization, and Interaction

Zesheng Zong from Huawei discussed the development of the PyTorch community in China. As a globally popular framework, PyTorch has a large number of contributors from China, ranking among the top globally. To address the lack of localized resources for beginners, they translated PyTorch’s official website, built a community homepage, and translated tutorials from beginner to advanced levels. They also actively engaged with users through chat channels (established late last year), published over 60 technical blogs, and gained 2,500 subscribers. Future plans include further automating translations, providing more high-quality resources and events, and inviting users to participate.

6. The Development of AI Open Source and Its Influence on the AI Ecosystem

Jianzhong Li, Senior Vice President of CSDN and Boulon technical expert, shared insights into the development of AI open source and its impact on the AI ecosystem. He compared global and Chinese AI technology ecosystems, noting that Chinese AI open source is gaining increasing global importance, and drew parallels between AI development and the evolution of biological intelligence on Earth. He then discussed the development of reasoning models, which enable large models to “think slowly” and reduce reliance on weak reasoning signals in training corpora, with machine-synthesized data in reinforcement learning playing a key role. He analyzed open source’s impact on the ecosystem, including drastically reducing model training and inference costs, and driving the evolution of AI applications toward agents capable of planning, collaboration, and action.

7. torch.accelerator: A Unified, Device-Agnostic Runtime API for Stream-Based Accelerators

Guangye Yu from Intel introduced the torch.accelerator APIs launched in PyTorch 2.6, a unified, device-agnostic runtime API for stream-based accelerators. While PyTorch, a widely used machine learning framework, supports various acceleration hardware, existing runtimes are coupled with specific device modules (e.g., `torch.cuda.current_device` only works for CUDA devices), limiting code portability and creating challenges for hardware vendors integrating new backends. PyTorch 2.5 introduced the concept of accelerators, and 2.6 proposed a unified device-agnostic runtime API, with functionality mapping closely to existing device-specific APIs to minimize code migration changes. Future plans include adding memory-related APIs and universal unit tests. He concluded by thanking the community and contributors for these improvements.

8. vLLM: Easy, Fast, and Cheap LLM Serving for Everyone

Kaichao You from Tsinghua University introduced vLLM, which aims to provide accessible, fast, and affordable language model inference services for everyone. Open-sourced in June 2023, it has gained widespread attention with nearly 48.3K GitHub stars. It is easy to use, supporting offline batch inference and an OpenAI-compatible API server, and works with various model types. As an official partner of major language model companies, it enables immediate deployment upon model release. vLLM supports a wide range of hardware, explores plugin-based integrations, and is used in daily life and enterprise applications. It prioritizes user experience with packages, Docker images, precompiled wheels, and a robust continuous integration system. Finally, he thanks over 1,100 contributors in the vLLM community.

9. A torch.fx Based Compression Toolkit Empowered by torch_musa

Fan Mo from Moore Threads introduced torch_musa, a PyTorch plugin enabling PyTorch to run natively on its platform with highly optimized features and operators. He then detailed the compression toolkit, explaining the choice of FX (debuggable, easy to modify graphs, easy to integrate). Its workflow involves inputting models and configuration files, capturing complete model graphs in the tracing phase, and optimizing/reducing via the backend. He also covered customized optimizations and support for multiple data types. Future work includes making large language and vision models traceable, accelerating inference, and building fault-tolerant systems.

10. Efficient Training of Video Generation Foundation Model at ByteDance

Heng Zhang from ByteDance shared ByteDance’s experience in large-scale, high-performance training of video generation foundation models, including applications in advertising, film, and animation. He introduced the video generation model structure (VE encoding, MMDIT diffusion, VE decoding) and training process (phased training, with VE encoding offline to optimize storage and preprocessing). He also discussed the challenges of load imbalance in video generation models and solutions.

11. torch.compile Practice and Optimization in Different Scenarios

Yichen Yan from Alibaba Cloud shared the team’s experience with `torch.compile` practice and optimization. `torch.compile` accelerates models with one line of code through components like graph capturing, fallback handling, and optimized kernel generation, but faces challenges in production environments. To address these, the team resolved compatibility between Dynamo and DeepSpeed ZeRO/gradient checkpointing, submitting integration solutions to relevant libraries; identified and rewrote attention computation patterns via pattern matching for better fusion and performance; and optimized input alignment to reduce unnecessary recompilations. He also mentioned unresolved issues and future directions: compilation strategies for dynamic shapes, startup latency optimization, reducing overhead, and improving kernel caching mechanisms.

12. PyTorch in Production: Boosting LLM Training and Inferencing on Ascend NPU

Jiawei Li and Jing Li from Huawei introduced advancements in Ascend NPU(torch_npu) within the PyTorch ecosystem. Focusing on upstream diversity support for PyTorch, they explained the third-party device Integration mechanism: using the CPU-based simulation backend OpenRag as a test backend to monitor interface functionality, and establishing mechanisms for downstream hardware vendors to identify risks before community PR merges.

Jing Li shared Ascend NPU’s performance and ecosystem support. He introduced torch_npu architecture for high performance and reliability. Currently already supports more than 20+ popular libraries, including vLLM, torchtune, torchtitan etc. He also explained the mechanism of torch_npu work with NPUGraph and torch.compile, to provide high-performance computation. Finally, he invites everyone to join the community and attend periodic meetings.

13. Hetu-Galvatron: An Automatic Distributed System for Efficient Large-Scale Foundation Model Training

Xinyi Liu and Yujie Wang, from Peking University, detailed Hetu-Galvatron, an innovative PyTorch-based system with key features: automatic optimization, versatility, and user-friendliness. For model conversion, it builds on native PyTorch, transforming single-GPU training models into multi-parallel-supported models by replacing layers supporting tensors and synchronization comparison. For automatic optimization, it has an engine based on cost models and search algorithms. It supports diverse model architectures and hardware backends, ensuring integration with GPU and NPU via PyTorch. It demonstrates superior efficiency on different clusters and models, with verified performance and accuracy. Future plans include integrating torch FSDP2, supporting more parallelism strategies, more models and attention types, and optimizing post-training workflows.

14. Intel’s PyTorch Journey: Promoting AI Performance and Optimizing Open-Source Software

Mingfei Ma from Intel’s PyTorch team introduced Intel’s work in PyTorch. For PyTorch optimization on Intel GPUs, Intel provides support on Linux and Windows, covering runtime, operator support, `torch.compile`, and distributed training. For CPU backend optimization in `torch.compile`, the team participated in architecture design, expanded data type support, implemented automatic tuning of gemm templates, supported Windows, and continuously improved performance speedups. For DeepSeek 671B full-version performance optimization, the team completed CPU backend development with significant speedups(14x performance boost for prefill and 2.9x for decode), supporting multiple data types, meeting real-time requirements at low cost.

15. FlagTree: Unified AI Compiler for Diverse AI Chips

Chunlei Men from the Beijing Academy of Artificial Intelligence introduced FlagTree, a unified AI compiler supporting diverse AI chips and a key component of the FlagOS open source stack. FlagOS, developed by BAAI with multiple partners, includes FlagGems (a general operator library for large models), FlagCX (multi-chip communication), and parallel training/inference frameworks, supporting large model training and inference. He also introduced FlagTree’s architecture for multi-backend integration, and features under development: annotation-based programming paradigms, refactored Triton compiler runtime, etc., with significant performance improvements via related optimizations.

16. KTransformers: Unleashing the Full Potential of CPU/GPU Hybrid Inference for MoE Models

Dr. Mingxing Zhang from Tsinghua University introduced KTransformers, which stands for Quick Transformers, a library built on HuggingFace’s Transformers, aiming to unlock CPU/GPU hybrid inference potential for MoE models via optimized operator integration and data layout strategies. Initially designed as a flexible framework for integrating various operator optimizations, it addresses rising inference costs due to larger models and longer contexts. For scenarios with low throughput and concurrency, it enables low-threshold model operation by offloading compute-intensive parts to GPUs and sparse parts to CPUs (tailored to models like DeepSeek), with flexible configuration. Future focus includes attention layer sparsification, adding local fine-tuning, and maintaining the Mooncake project for distributed inference, welcoming community exchanges.

17. SGLang: An Efficient Open Source Framework for Large-Scale LLM Serving

Liangsheng Yin, a graduate student from Shanghai Jiao Tong University, introduced SGLang, an efficient open source framework for large-scale LLM serving. As a leading-performance open source engine with an elegant, lightweight, and customizable design, it is adopted by academia and companies like Microsoft and AMD, offering high-performance RL solutions. Its core is the PD disaggregation design, solving issues in non-decoupled modes: latency, unbalanced computation-communication, and scheduling incompatibility. It routes requests via load balancers, enabling KV cache transmission between prefetching and decoding instances. Future plans include latency optimization, longer sequence support, and integrating data-parallel attention. With over 400 contributors, it is used by multiple enterprises.