(Embedded image: a tweet from Charlie Marsh, creator of uv.)

PyTorch is the leading machine learning framework for developing and deploying some of the largest AI products in the world. However, talk to most PyTorch users and one major wart comes up again and again: packaging.

This isn’t a new problem. Python packaging is notoriously difficult, and as more packages ship compiled and hardware-specialized components, the packaging ecosystem has needed a better answer for how to improve the experience. (If you’re interested in learning more about these difficulties, I’d highly recommend reading through pypackaging-native.)

With that in mind, we’ve launched experimental support for wheel variants in PyTorch 2.8. To install them, you can use the following commands:

Linux (x86 and aarch64) and macOS

curl -LsSf https://astral.sh/uv/install.sh | INSTALLER_DOWNLOAD_URL=https://wheelnext.astral.sh sh
uv pip install torch

Windows x86

powershell -ExecutionPolicy Bypass -c "$env:INSTALLER_DOWNLOAD_URL='https://wheelnext.astral.sh'; irm https://astral.sh/uv/install.ps1 | iex"
uv pip install torch

This post focuses on the problems that wheel variants aim to solve and how they could shape the future of packaging for PyTorch and for the broader Python ecosystem.

More details on the proposal, along with install instructions, can be found in the following resources:

- Astral: Wheel Variants

- NVIDIA: Streamline CUDA Accelerated Python Install and Packaging Workflows with Wheel Variants

- Quansight: Python Wheels from Tags to Variants

- PyTorch DevDiscuss: Discover a simpler way to install PyTorch tailored to your hardware: Wheel variants for Release 2.8 are now available for testing on the PyTorch test channel

What are the problems?

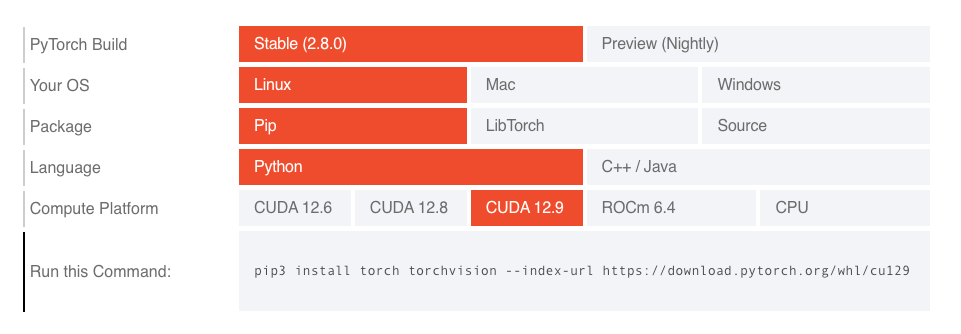

Currently, the matrix for installing PyTorch is manifested as a modal on the PyTorch website, which looks like:

This modal has more than 10 buttons dedicated to installing different builds of PyTorch compiled for specialized hardware, and most of these paths lead to an install command that looks like:

pip install torch torchvision --index-url https://download.pytorch.org/whl/cu129

While the command itself doesn’t look terrible, getting to it takes several steps, including:

- Understanding which accelerator you are using

- Understanding which version of that accelerator’s software stack you are using

- Knowing which index URL maps to your accelerator and version, where the naming conventions can be non-standard (for example, cu129 for CUDA 12.9)
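To make that manual work concrete, here is a minimal sketch of what a user (or a project’s setup script) effectively has to do today: read the CUDA version reported in the `nvidia-smi` header and turn it into an index URL. The helpers `parse_cuda_version` and `index_url_for` are hypothetical, and the "cu" plus digits rule is inferred from the cu129 example above rather than taken from any official API.

```python
import re
from typing import Optional

# Base URL from the install command above. The "cu<major><minor>" suffix rule
# is an assumption inferred from the cu129 example, not an official API.
PYTORCH_INDEX_BASE = "https://download.pytorch.org/whl"


def parse_cuda_version(nvidia_smi_header: str) -> Optional[str]:
    """Extract the 'CUDA Version: X.Y' field that nvidia-smi prints in its header.

    Returns None when no such field is present (e.g. a CPU-only machine).
    """
    match = re.search(r"CUDA Version:\s*(\d+)\.(\d+)", nvidia_smi_header)
    if match is None:
        return None
    return f"{match.group(1)}.{match.group(2)}"


def index_url_for(cuda_version: Optional[str]) -> str:
    """Map a CUDA version such as '12.9' to an index URL ending in /cu129.

    Hypothetical helper: it does NOT check whether PyTorch actually publishes
    a build for that CUDA version; knowing that is still left to the user.
    """
    if cuda_version is None:
        return f"{PYTORCH_INDEX_BASE}/cpu"
    major, minor = cuda_version.split(".")
    return f"{PYTORCH_INDEX_BASE}/cu{major}{minor}"


if __name__ == "__main__":
    # Illustrative header fragment; in practice you would capture nvidia-smi output.
    sample = "| NVIDIA-SMI ...   Driver Version: ...   CUDA Version: 12.9 |"
    url = index_url_for(parse_cuda_version(sample))
    print(f"pip install torch torchvision --index-url {url}")
```

Note that `nvidia-smi` reports the newest CUDA version the driver supports, which is not necessarily a version PyTorch ships a build for. Bridging that gap is exactly the knowledge users currently have to carry in their heads.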

This has frustrated PyTorch users and, even worse, created churn for developers who use PyTorch in their own projects and need to support multiple accelerators (see example).

The future of PyTorch Packaging

This is the future of PyTorch packaging. Yes, really, that’s it.

More seriously, we have worked alongside engineers from the WheelNext community to deliver experimental binaries that:

- Automatically identify which accelerator and accelerator version you are using (e.g., CUDA 12.8)

- Install the best-fitting variant of PyTorch automatically based on these software and hardware parameters
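As a rough mental model of that selection step, the sketch below picks the newest compatible CUDA build for a detected CUDA version and falls back to a CPU build otherwise. It is a toy illustration only: the `Variant` type, the compatibility rule (same major version, build minor version no newer than the system’s), and the list of builds are all assumptions, and it does not reflect how the wheel variants proposal or uv actually detect hardware or rank variants.

```python
from dataclasses import dataclass
from typing import Optional, Sequence, Tuple

Version = Tuple[int, int]  # (major, minor), e.g. (12, 8) for CUDA 12.8


@dataclass(frozen=True)
class Variant:
    """A hypothetical published build. cuda=None marks a CPU-only build."""
    label: str
    cuda: Optional[Version]


def is_compatible(variant: Variant, system_cuda: Optional[Version]) -> bool:
    """Toy rule (assumption): CPU builds always run; a CUDA build runs when the
    system has the same major version and at least the build's minor version."""
    if variant.cuda is None:
        return True
    if system_cuda is None:
        return False
    return variant.cuda[0] == system_cuda[0] and variant.cuda[1] <= system_cuda[1]


def select_variant(variants: Sequence[Variant], system_cuda: Optional[Version]) -> Variant:
    """Return the best-fitting variant: the newest compatible CUDA build, else CPU."""
    compatible = [v for v in variants if is_compatible(v, system_cuda)]
    if not compatible:
        raise LookupError("no compatible variant is published")
    # CUDA builds rank above the CPU build; among CUDA builds, newer ranks higher.
    return max(compatible, key=lambda v: (v.cuda is not None, v.cuda or (0, 0)))


if __name__ == "__main__":
    # Illustrative set of builds, not an authoritative list for any release.
    available = [
        Variant("cpu", None),
        Variant("cu126", (12, 6)),
        Variant("cu128", (12, 8)),
        Variant("cu129", (12, 9)),
    ]
    print(select_variant(available, (12, 8)).label)  # cu128
    print(select_variant(available, None).label)  # cpu
```

The point of the sketch is the division of labor: the detection and ranking that the user did by hand in the index-URL workflow move into the installer, so the user-facing command can shrink to `uv pip install torch`.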

NOTE: This feature is experimental and based on the wheel variants proposal (PEP pending).

The PyTorch team views wheel variants as a promising way for Python packages to mark specific builds as supporting specialized hardware and software, and will support the proposal’s development as it makes its way through the PEP process.

We’d love your feedback!

As we push the frontier of Python packaging, we’d love to hear how you are using PyTorch’s wheel variants. More importantly, if you have any feedback, we invite you to post it to our issue tracker on pytorch/pytorch.