A better path to pruning large language models

A new philosophy for developing LLM architectures reduces energy requirements, speeds up runtime, and preserves pretrained-model performance.

Conversational AI

Kai Zhen | August 08, 02:06 PM | August 09, 11:22 AM

In recent years, large language models (LLMs) have revolutionized the field of natural-language processing and made significant contributions to computer vision, speech recognition, and language translation. One of the keys to LLMs' effectiveness has been the exceedingly large datasets they're trained on. The trade-off is exceedingly large model sizes, which lead to slower runtimes and higher consumption of computational resources. AI researchers know these challenges well, and many of us are seeking ways to make large models more compact while maintaining their performance.

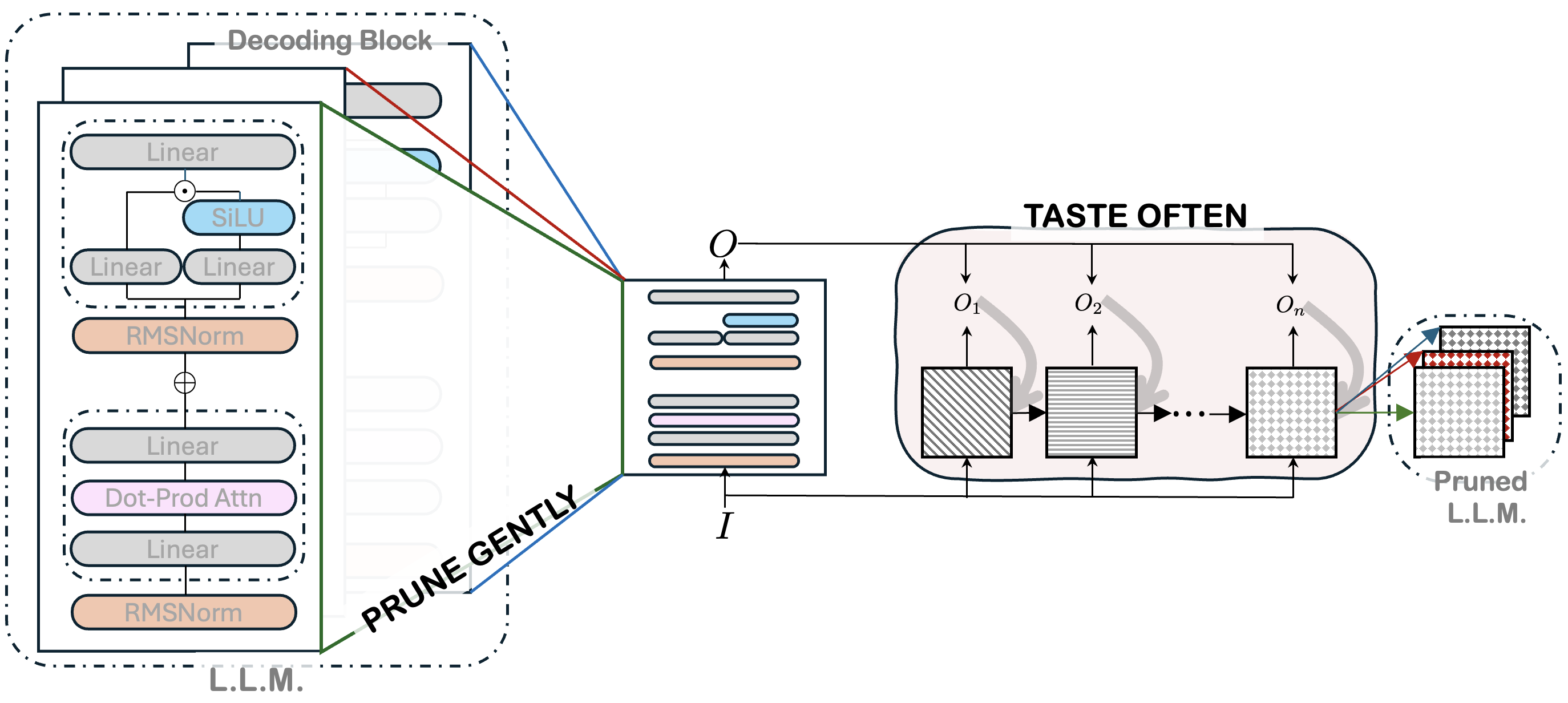

To this end, we'd like to present a novel philosophy, "Prune Gently, Taste Often," which focuses on a new way to do pruning, a compression process that removes unimportant connections within the layers of an LLM's neural network. In a paper we presented at this year's meeting of the Association for Computational Linguistics (ACL), we describe our framework, Wanda++, which can compress a model with seven billion parameters in under 10 minutes on a single GPU.

Measured according to perplexity, or how well a probability distribution predicts a given sample, our approach improves the model's performance by 32 percent over its leading predecessor, called Wanda.
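For readers less familiar with the metric, here is a minimal sketch of how perplexity is commonly computed: the exponential of the average negative log-likelihood per token. The function name and the toy probabilities are illustrative assumptions, not values from the paper.

```python
import math


def perplexity(token_probs):
    """Perplexity from the probabilities a model assigned to the tokens that actually occurred."""
    if not token_probs:
        raise ValueError("need at least one token probability")
    # Average negative log-likelihood per token (natural log).
    avg_nll = -sum(math.log(p) for p in token_probs) / len(token_probs)
    return math.exp(avg_nll)


# Hypothetical per-token probabilities from two models on the same text.
confident = perplexity([0.5, 0.5, 0.5, 0.5])
uncertain = perplexity([0.1, 0.1, 0.1, 0.1])
print(f"confident model: {confident:.2f}, uncertain model: {uncertain:.2f}")
```

Lower is better: a model that assigns higher probability to the observed text gets a lower perplexity, so a pruning method that hurts the model less will show a smaller increase in this number.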

A brief history of pruning

Pruning is challenging for a number of reasons. First, training huge LLMs is expensive, and once they're trained, runtime is expensive too. While pruning can make runtime cheaper, if it's done later in the build process, it hurts performance. But if it's done too early in the build process, it further exacerbates the first problem: increasing the cost of training.

When a model is trained, it builds a map of semantic connections gleaned from the training data. These connections, called parameters, gain or lose importance, or weight, as more training data is introduced. Pruning during the training stage, called pruning-aware training, is baked into the training recipe and performs model-wide scans of weights, at a high computational cost. What's worse, pruning-aware training comes with a heavy trial burden of full-scale runs. Researchers must decide when to prune, how often, and what criteria to use to keep pretraining performance viable. Tuning such hyperparameters requires repeated model-wide culling experiments, further driving up cost.

The other approach to pruning is to do it after the LLM is trained. This tends to be cheaper, taking somewhere between a few minutes and a few hours compared to the weeks that training can take. And post-training pruning doesn't require a large number of GPUs.

In this approach, engineers scan the model layer by layer for unimportant weights, as measured by a combination of factors such as how big the weight is and how frequently it factors into the model's final output. If either number is low, the weight is more likely to be pruned. The problem with this approach is that it isn't gentle: it shocks the structure of the model, which loses accuracy since it doesn't learn anything from the absence of those weights, as it would have if they had been removed during training.
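To make the layer-by-layer scan concrete, here is a rough sketch of one way to combine the two factors: multiply each weight's magnitude by the norm of the input feature it acts on, then zero the lowest-scoring weights in each output row. The scoring rule, the per-row pruning, the function names, and the toy numbers are all assumptions for illustration, not the exact procedure from the paper.

```python
import math


def importance_scores(weights, activations):
    """score[i][j] = |W[i][j]| * L2 norm of input feature j over the calibration samples."""
    n_in = len(weights[0])
    feature_norms = [
        math.sqrt(sum(sample[j] ** 2 for sample in activations))
        for j in range(n_in)
    ]
    return [[abs(w) * feature_norms[j] for j, w in enumerate(row)] for row in weights]


def prune_rows(weights, scores, sparsity=0.5):
    """Zero the lowest-scoring fraction of weights in each output row."""
    pruned = []
    for row, row_scores in zip(weights, scores):
        n_drop = int(len(row) * sparsity)
        # Indices of the n_drop smallest scores in this row.
        drop = set(sorted(range(len(row)), key=lambda j: row_scores[j])[:n_drop])
        pruned.append([0.0 if j in drop else w for j, w in enumerate(row)])
    return pruned


# Toy layer: 2 outputs, 4 inputs. The last input feature is almost never active.
W = [[0.9, -0.1, 0.4, 2.0],
     [0.2, 0.8, -0.7, 1.5]]
X = [[1.0, 2.0, 1.0, 0.01],
     [1.0, -2.0, 1.0, 0.00]]

W_pruned = prune_rows(W, importance_scores(W, X), sparsity=0.5)
for row in W_pruned:
    print(row)
```

In this toy case the largest weights (2.0 and 1.5) are removed because the input they multiply is nearly always zero, while smaller weights on active inputs survive. Nothing is retrained afterward, which is the "shock" described above.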

Striking a balance

Here's where our philosophy presents a third path. After a model is fully trained, we scan it piece by piece, analyzing weights neither at the whole-model level nor at the layer level but at the level of decoder blocks: smaller, repeating building blocks that make up most of an LLM.

Within each decoder block, we feed in a small amount of data and collect the output to calibrate the weights, pruning the unimportant ones and updating the surviving ones for a few iterations. Since decoder blocks are small (a fraction of the size of the entire model), this approach requires only a single GPU, which can scan a block within minutes.
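The prune-then-update loop can be illustrated with a deliberately tiny stand-in. In the sketch below, a single linear layer plays the role of a decoder block, the pruning mask is given by hand, and the surviving weights are updated by plain gradient descent so that the pruned block's output moves back toward the dense block's output on the calibration data. All of these choices (the linear stand-in, the mask, the loss, the step size, the function names) are simplifying assumptions, not the Wanda++ implementation.

```python
def forward(weights, x):
    """Output of a linear layer: one dot product per output row."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in weights]


def mse(weights, inputs, targets):
    """Mean squared error between the layer's outputs and the target outputs."""
    errors = [
        (y - t) ** 2
        for x, target in zip(inputs, targets)
        for y, t in zip(forward(weights, x), target)
    ]
    return sum(errors) / len(errors)


def prune_and_update(dense, mask, inputs, steps=20, lr=0.5):
    """Apply a pruning mask, then nudge the surviving weights toward the dense outputs."""
    targets = [forward(dense, x) for x in inputs]  # what the unpruned block produced
    weights = [[w * m for w, m in zip(w_row, m_row)] for w_row, m_row in zip(dense, mask)]
    loss_before = mse(weights, inputs, targets)
    n_terms = len(inputs) * len(dense)
    for _ in range(steps):
        grads = [[0.0] * len(row) for row in weights]
        for x, target in zip(inputs, targets):
            for i, (y, t) in enumerate(zip(forward(weights, x), target)):
                for j, xj in enumerate(x):
                    grads[i][j] += 2.0 * (y - t) * xj / n_terms
        for i, row in enumerate(weights):
            for j in range(len(row)):
                # Multiplying by the mask keeps pruned weights at zero.
                row[j] -= lr * grads[i][j] * mask[i][j]
    return weights, loss_before, mse(weights, inputs, targets)


dense = [[1.0, 0.5, -0.3],
         [0.2, -0.8, 0.6]]
mask = [[1, 0, 1],
        [0, 1, 1]]
calibration_inputs = [[1.0, 1.0, 0.0],
                      [0.0, 1.0, 1.0],
                      [1.0, 0.0, 1.0],
                      [1.0, 1.0, 1.0]]

updated, loss_before, loss_after = prune_and_update(dense, mask, calibration_inputs)
print(f"block output error: {loss_before:.4f} after pruning, {loss_after:.4f} after updating")
```

Because only one block's weights and a handful of calibration samples are in play at a time, the working set stays small, which is what makes the single-GPU claim plausible.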

We liken our approach to the way an expert chef spices a complex dish. In cooking, spices are easy to overlook, hard to add at the right moment, and even risky if handled poorly. One simply cannot add a heap of tarragon, pepper, and salt at the beginning (pruning-aware training) or at the end (layer-wide pruning) and expect to have the same results as if spices had been added carefully throughout. Similarly, our approach finds a balance between two extremes. Pruning block by block, as we do, is more like spicing a dish throughout the process. Hence the motto of our approach: "Prune Gently, Taste Often."

From a technical perspective, the key is focusing on decoder blocks, which are composed of a few neural-network layers such as attention layers, multihead attention layers, and multilayer perceptrons. Even an LLM with seven billion parameters might have just 32 decoder blocks. Each block is small enough (say, 200 million parameters) to easily be scanned by a single GPU. Pruning a model at the block level saves resources by not consuming much GPU memory.
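A quick back-of-the-envelope calculation shows where the "say, 200 million" figure comes from and why a single block fits comfortably on one GPU. The 16-bit weight size is an assumption, and the estimate ignores embeddings, activations, and optimizer state.

```python
total_params = 7_000_000_000  # seven-billion-parameter model
num_blocks = 32               # decoder blocks, as in the example above
bytes_per_param = 2           # assumption: weights stored in 16 bits

# Simplification: treat all parameters as evenly spread across the blocks.
params_per_block = total_params / num_blocks
block_gib = params_per_block * bytes_per_param / 2**30
model_gib = total_params * bytes_per_param / 2**30

print(f"parameters per block: {params_per_block:,.0f}")
print(f"weights per block: {block_gib:.2f} GiB, whole model: {model_gib:.2f} GiB")
```

Under these assumptions a block's weights occupy well under half a gibibyte, against roughly 13 GiB for the full model.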

And while all pruning processes initially diminish performance, ours actually brings it back. Every time we scan a block, we balance pruning with performance until they're optimized. Then we move on to the next block. This preserves both performance at the block level and overall model quality. With Wanda++, we're offering a practical, scalable middle path for the LLM optimization process, especially for teams that don't control the full training pipeline or budget.

What's more, we believe our philosophy also helps address a pain point of LLM development at large companies. Before the era of LLMs, each team built its own models, with the services that a single LLM now provides achieved via orchestration of those models. Since none of the models was huge, each model development team received its own allocation of GPUs. Nowadays, however, computational resources tend to get soaked up by the teams actually training LLMs. With our philosophy, teams working on runtime performance optimization, for instance, could reclaim more GPUs, effectively expanding what they can explore.

Further implementations of "Prune Gently, Taste Often" could apply to other architectural optimizations. For instance, calibrating a model at the decoder-block level could convert a neural network with a dense structure, called a dense multilayer perceptron, to a less computationally intensive neural network known as a mixture of experts (MoE). In essence, per-decoder-block calibration can enable a surgical redesign of the model by replacing generic components with more efficient and better-performing alternatives such as Kolmogorov-Arnold Networks (KAN). While the Wanda++ philosophy isn't a cure-all, we believe it opens up an exciting new path for rethinking model compression and exploring future LLM architectures.

Research areas: Conversational AI, Machine learning

Tags: Generative AI, Large language models (LLMs), Compression