People have the remarkable ability to take in a tremendous amount of information (estimated to be ~10^10 bits/s entering the retina) and selectively attend to a few task-relevant and interesting regions for further processing (e.g., memory, comprehension, action). Modeling human attention (the result of which is often called a saliency model) has therefore been of interest across the fields of neuroscience, psychology, human-computer interaction (HCI) and computer vision. The ability to predict which regions are likely to attract attention has numerous important applications in areas like graphics, photography, image compression and processing, and the measurement of visual quality.

We’ve previously discussed the possibility of accelerating eye movement research using machine learning and smartphone-based gaze estimation, which earlier required specialized hardware costing up to $30,000 per unit. Related research includes “Look to Speak”, which helps users with accessibility needs (e.g., people with ALS) to communicate with their eyes, and the recently published “Differentially private heatmaps” technique to compute heatmaps, like those for attention, while protecting users’ privacy.

In this blog post, we present two papers (one from CVPR 2022, and one just accepted to CVPR 2023) that highlight our recent research in the area of human attention modeling: “Deep Saliency Prior for Reducing Visual Distraction” and “Learning from Unique Perspectives: User-aware Saliency Modeling”, together with recent research on saliency-driven progressive loading for image compression (1, 2). We showcase how predictive models of human attention can enable delightful user experiences such as image editing to minimize visual clutter, distraction or artifacts, image compression for faster loading of webpages or apps, and guiding ML models towards more intuitive human-like interpretation and model performance. We focus on image editing and image compression, and discuss recent advances in modeling in the context of these applications.

Attention-guided image editing

Human attention models usually take an image as input (e.g., a natural image or a screenshot of a webpage), and predict a heatmap as output. The predicted heatmap is evaluated against ground-truth attention data, which are typically collected by an eye tracker or approximated via mouse hovering/clicking. Previous models leveraged handcrafted features for visual cues, like color/brightness contrast, edges, and shape, while more recent approaches automatically learn discriminative features based on deep neural networks, from convolutional and recurrent neural networks to more recent vision transformer networks.
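To make the evaluation step concrete, here is a minimal sketch of scoring a predicted heatmap against ground truth. The text above does not say which metric is used, so the Pearson correlation coefficient (a commonly used saliency metric) is an assumption here, and the tiny 3x3 heatmaps and the function name `pearson_cc` are purely illustrative.

```python
import math


def pearson_cc(predicted, ground_truth):
    """Pearson correlation between two equally sized 2D heatmaps."""
    p = [v for row in predicted for v in row]
    g = [v for row in ground_truth for v in row]
    if len(p) != len(g):
        raise ValueError("heatmaps must have the same number of pixels")
    mean_p = sum(p) / len(p)
    mean_g = sum(g) / len(g)
    cov = sum((a - mean_p) * (b - mean_g) for a, b in zip(p, g))
    var_p = sum((a - mean_p) ** 2 for a in p)
    var_g = sum((b - mean_g) ** 2 for b in g)
    if var_p == 0 or var_g == 0:
        # Correlation is undefined for a constant map; this sketch reports 0.
        return 0.0
    return cov / math.sqrt(var_p * var_g)


# Illustrative data: attention concentrated on the center pixel.
ground_truth = [[0.0, 0.1, 0.0], [0.1, 0.9, 0.1], [0.0, 0.1, 0.0]]
good_prediction = [[0.1, 0.2, 0.1], [0.2, 0.8, 0.2], [0.1, 0.2, 0.1]]
bad_prediction = [[0.9, 0.1, 0.0], [0.1, 0.0, 0.1], [0.0, 0.1, 0.0]]

print("good:", pearson_cc(good_prediction, ground_truth))
print("bad:", pearson_cc(bad_prediction, ground_truth))
```

A prediction that peaks where viewers actually looked scores close to 1, while one that highlights the wrong region scores low or negative.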

In “Deep Saliency Prior for Reducing Visual Distraction” (more information on this project site), we leverage deep saliency models for dramatic yet visually realistic edits, which can significantly change an observer’s attention to different image regions. For example, removing distracting objects in the background can reduce clutter in photos, leading to increased user satisfaction. Similarly, in video conferencing, reducing clutter in the background may increase focus on the main speaker (example demo here).

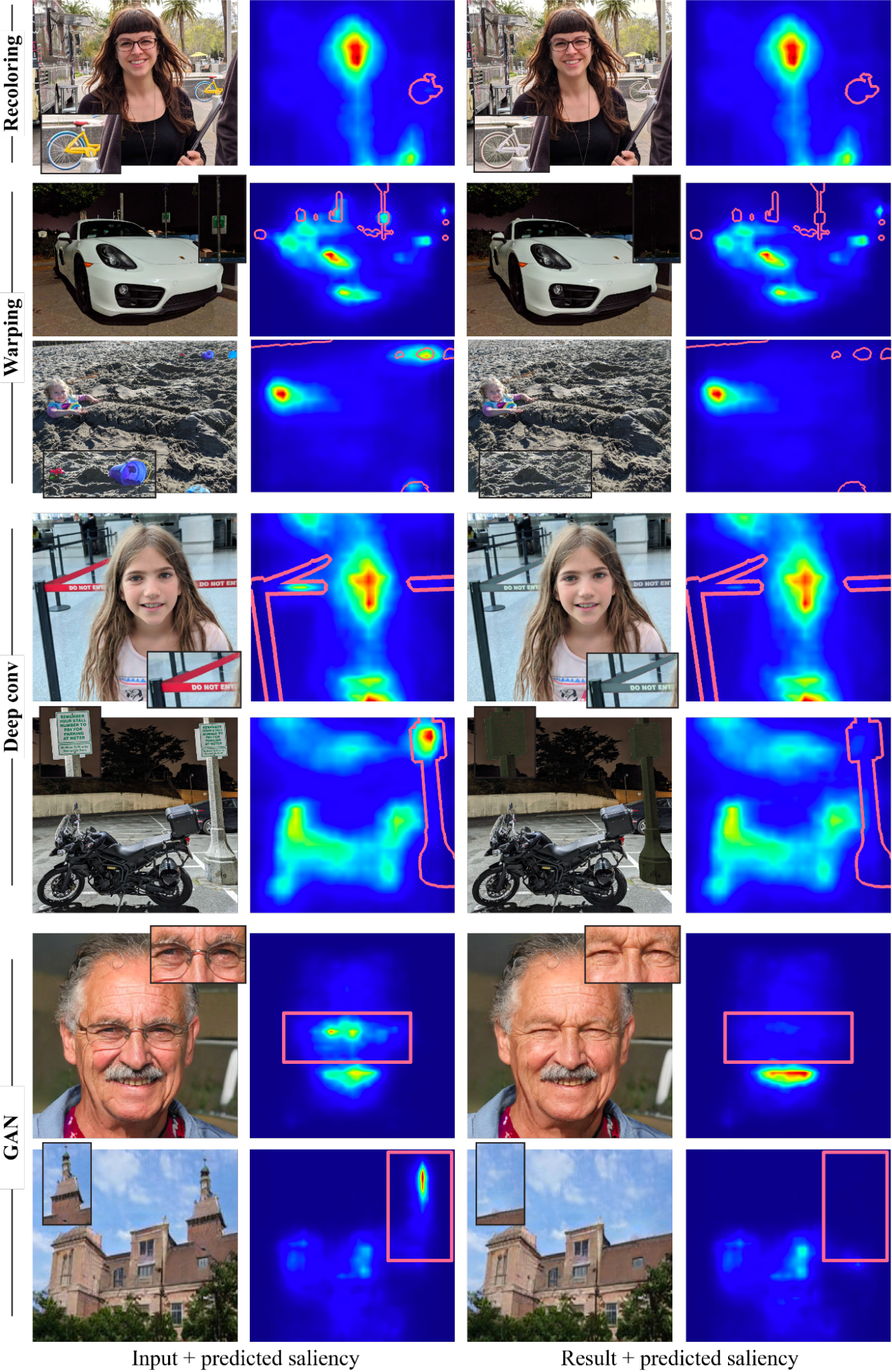

To explore what types of editing effects can be achieved and how these affect viewers’ attention, we developed an optimization framework for guiding visual attention in images using a differentiable, predictive saliency model. Our method employs a state-of-the-art deep saliency model. Given an input image and a binary mask representing the distractor regions, pixels within the mask will be edited under the guidance of the predictive saliency model such that the saliency within the masked region is reduced. To make sure the edited image is natural and realistic, we carefully choose four image editing operators: two standard image editing operations, namely recolorization and image warping (shift); and two learned operators (we do not define the editing operation explicitly), namely a multi-layer convolution filter, and a generative model (GAN).
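The optimization loop described above can be sketched on a toy example. Everything here is a stand-in and not the method from the paper: `toy_saliency` (squared deviation from the mean intensity) replaces the deep saliency model, a per-pixel intensity change replaces the four editing operators, and finite-difference gradients replace backpropagation through a differentiable model.

```python
def toy_saliency(image):
    """Stand-in saliency: squared deviation of each pixel from the global mean."""
    flat = [p for row in image for p in row]
    mean = sum(flat) / len(flat)
    return [[(p - mean) ** 2 for p in row] for row in image]


def masked_saliency(image, mask):
    """Total saliency inside the distractor mask (the quantity to minimize)."""
    sal = toy_saliency(image)
    return sum(s for srow, mrow in zip(sal, mask)
               for s, m in zip(srow, mrow) if m)


def reduce_distraction(image, mask, steps=200, lr=0.1, eps=1e-4):
    """Gradient descent on masked pixels only; unmasked pixels are never edited."""
    edited = [row[:] for row in image]
    coords = [(r, c) for r, row in enumerate(mask)
              for c, m in enumerate(row) if m]
    for _ in range(steps):
        base = masked_saliency(edited, mask)
        grads = []
        for r, c in coords:
            # Forward finite difference as a simple substitute for autodiff.
            edited[r][c] += eps
            grads.append((masked_saliency(edited, mask) - base) / eps)
            edited[r][c] -= eps
        for (r, c), g in zip(coords, grads):
            # Keep intensities in the valid [0, 1] range.
            edited[r][c] = min(1.0, max(0.0, edited[r][c] - lr * g))
    return edited


# Illustrative grayscale image: dark background with one bright distractor.
image = [[0.2] * 4 for _ in range(4)]
image[1][2] = 0.9
mask = [[0] * 4 for _ in range(4)]
mask[1][2] = 1

edited = reduce_distraction(image, mask)
print("masked saliency before:", masked_saliency(image, mask))
print("masked saliency after:", masked_saliency(edited, mask))
```

In this toy setting the bright pixel is pulled toward the background intensity, which is the simplest analogue of recoloring a distractor so that it no longer stands out.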

With those operators, our framework can produce a variety of powerful effects, with examples in the figure below, including recoloring, inpainting, camouflage, object editing or insertion, and facial attribute editing. Importantly, all these effects are driven solely by the single, pre-trained saliency model, without any additional supervision or training. Note that our goal is not to compete with dedicated methods for producing each effect, but rather to demonstrate how multiple editing operations can be guided by the knowledge embedded within deep saliency models.

Examples of reducing visual distractions, guided by the saliency model with several operators. The distractor region is marked on top of the saliency map (red border) in each example.

Enriching experiences with user-aware saliency modeling

Prior research assumes a single saliency model for the whole population. However, human attention varies between individuals: while the detection of salient cues is fairly consistent, their order, interpretation, and gaze distributions can differ substantially. This offers opportunities to create personalized user experiences for individuals or groups. In “Learning from Unique Perspectives: User-aware Saliency Modeling”, we introduce a user-aware saliency model, the first that can predict attention for one user, a group of users, and the general population, with a single model.

As shown in the figure below, core to the model is the combination of each participant’s visual preferences with a per-user attention map and adaptive user masks. This requires per-user attention annotations to be available in the training data, e.g., the OSIE mobile gaze dataset for natural images; FiWI and WebSaliency datasets for web pages. Instead of predicting a single saliency map representing attention of all users, this model predicts per-user attention maps to encode individuals’ attention patterns. Further, the model adopts a user mask (a binary vector with the size equal to the number of participants) to indicate the presence of participants in the current sample, which makes it possible to select a group of participants and combine their preferences into a single heatmap.

An overview of the user-aware saliency model framework. The example image is from the OSIE image set.
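The role of the user mask can be illustrated with a small sketch. In the actual model the per-user attention maps come from a neural network; here they are hard-coded 2x2 grids, and combining the selected maps by simple averaging is an assumption made for illustration (the function name `combine_user_maps` is hypothetical).

```python
def combine_user_maps(per_user_maps, user_mask):
    """Average the attention maps of the participants selected by a binary mask."""
    if len(per_user_maps) != len(user_mask):
        raise ValueError("need one mask entry per participant")
    selected = [m for m, keep in zip(per_user_maps, user_mask) if keep]
    if not selected:
        raise ValueError("user mask selects no participants")
    rows, cols = len(selected[0]), len(selected[0][0])
    return [[sum(m[r][c] for m in selected) / len(selected)
             for c in range(cols)]
            for r in range(rows)]


# Three hypothetical participants, each attending to a different pixel.
per_user_maps = [
    [[1.0, 0.0], [0.0, 0.0]],
    [[0.0, 1.0], [0.0, 0.0]],
    [[0.0, 0.0], [1.0, 0.0]],
]

single_user = combine_user_maps(per_user_maps, [1, 0, 0])
group = combine_user_maps(per_user_maps, [1, 1, 0])
population = combine_user_maps(per_user_maps, [1, 1, 1])

print("single user:", single_user)
print("group of two:", group)
print("everyone:", population)
```

The same set of per-user maps serves all three cases; only the mask changes, which is what lets a single model cover one user, a group, or the whole population.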

During inference, the user mask allows making predictions for any combination of participants. In the following figure, the first two rows are attention predictions for two different groups of participants (with three people in each group) on an image. A conventional attention prediction model will predict identical attention heatmaps for both groups. Our model can distinguish the two groups (e.g., the second group pays less attention to the face and more attention to the food than the first). Similarly, the last two rows are predictions on a webpage for two distinct participants, with our model showing different preferences (e.g., the second participant pays more attention to the left region than the first).

Predicted attention vs. ground truth (GT). EML-Net: predictions from a state-of-the-art model, which will have the same predictions for the two participants/groups. Ours: predictions from our proposed user-aware saliency model, which can predict the unique preference of each participant/group correctly. The first image is from the OSIE image set, and the second is from FiWI.

Progressive image decoding centered on salient features

Besides image editing, human attention models can also improve users’ browsing experience. One of the most frustrating experiences while browsing is waiting for web pages with images to load, especially over slow or unreliable network connections. One way to improve the user experience in such cases is progressive decoding of images, which decodes and displays increasingly higher-resolution image sections as data are downloaded, until the full-resolution image is ready. Progressive decoding usually proceeds in a sequential order (e.g., left to right, top to bottom). With a predictive attention model (1, 2), we can instead decode images based on saliency, making it possible to send the data necessary to display details of the most salient regions first. For example, in a portrait, bytes for the face can be prioritized over those for the out-of-focus background. Consequently, users perceive better image quality earlier and experience significantly reduced wait times. More details can be found in our open source blog posts (post 1, post 2). Thus, predictive attention models can help with image compression and faster loading of web pages with images, and can improve rendering for large images and streaming/VR applications.
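The idea of sending salient regions first can be sketched as a tile ordering problem. This is only an illustration of the ordering step: the tiling scheme, the use of mean saliency per tile as the priority, and the function name `tile_order_by_saliency` are assumptions, and a real codec would handle the bitstream layout very differently.

```python
def tile_order_by_saliency(saliency, tile_size):
    """Return tile origins (top, left), most salient tile first."""
    rows, cols = len(saliency), len(saliency[0])
    tiles = []
    for top in range(0, rows, tile_size):
        for left in range(0, cols, tile_size):
            block = [saliency[r][c]
                     for r in range(top, min(top + tile_size, rows))
                     for c in range(left, min(left + tile_size, cols))]
            tiles.append((sum(block) / len(block), top, left))
    # Stable sort: tiles with equal saliency keep the usual raster order.
    tiles.sort(key=lambda t: -t[0])
    return [(top, left) for _, top, left in tiles]


# Illustrative saliency map: the bottom-right quadrant (e.g., a face) dominates.
saliency = [
    [0.0, 0.0, 0.1, 0.1],
    [0.0, 0.0, 0.1, 0.1],
    [0.2, 0.2, 0.9, 0.9],
    [0.2, 0.2, 0.9, 0.9],
]

order = tile_order_by_saliency(saliency, tile_size=2)
print(order)
```

A sequential decoder would start at the top-left tile, whereas this ordering starts with the bottom-right tile where predicted attention is highest.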

Conclusion

We’ve shown how predictive models of human attention can enable delightful user experiences via applications such as image editing that can reduce clutter, distractions or artifacts in images or photos, and progressive image decoding that can greatly reduce the perceived waiting time while images finish rendering. Our user-aware saliency model can further personalize the above applications for individual users or groups, enabling richer and more distinctive experiences.

Another interesting direction for predictive attention models is whether they can help improve robustness of computer vision models in tasks such as object classification or detection. For example, in “Teacher-generated spatial-attention labels boost robustness and accuracy of contrastive models”, we show that a predictive human attention model can guide contrastive learning models to achieve better representations and improve the accuracy/robustness of classification tasks (on the ImageNet and ImageNet-C datasets). Further research in this direction could enable applications such as using radiologists’ attention on medical images to improve health screening or diagnosis, or using human attention in complex driving scenarios to guide autonomous driving systems.

Acknowledgements

This work involved collaborative efforts from a multidisciplinary team of software engineers, researchers, and cross-functional contributors. We’d like to thank all the co-authors of the papers/research, including Kfir Aberman, Gamaleldin F. Elsayed, Moritz Firsching, Shi Chen, Nachiappan Valliappan, Yushi Yao, Chang Ye, Yossi Gandelsman, Inbar Mosseri, David E. Jacobes, Yael Pritch, Shaolei Shen, and Xinyu Ye. We also want to thank team members Oscar Ramirez, Venky Ramachandran and Tim Fujita for their help. Finally, we thank Vidhya Navalpakkam for her technical leadership in initiating and overseeing this body of work.