Posted by Ann Yuan and Andrey Vakunov, Software Engineers at Google

Iris tracking enables a wide range of applications, such as hands-free interfaces for assistive technologies and understanding user behavior beyond clicks and gestures. Iris tracking is also a challenging computer vision problem. Eyes appear under variable light conditions, are often occluded by hair, and can be perceived as differently shaped depending on the head’s angle of rotation and the person’s expression. Existing solutions rely heavily on specialized hardware, often requiring a costly headset or a remote eye tracker system. These approaches are ill-suited for mobile devices with limited computing resources.

An example of eye re-coloring enabled.

In March we announced the release of a new package detecting facial landmarks in the browser. Today, we’re excited to add iris tracking to this package through the TensorFlow.js face landmarks detection model. This work is made possible by the MediaPipe Iris model. We have deprecated the original facemesh model, and future updates will be made to the face landmarks detection model.

Note that iris tracking does not infer the location at which people are looking, nor does it provide any form of identity recognition. In our model’s documentation and the accompanying Model Card, we detail the model’s intended uses, limitations and fairness attributes (aligned with Google’s AI Principles).

The MediaPipe iris model is able to track landmarks for the iris and pupil using a single RGB camera, in real-time, without the need for specialized hardware. The model also returns landmarks for the eyelids and eyebrow regions, enabling detection of slight eye movements such as blinking. Try the model out yourself right now in your browser.

Introducing @tensorflow-models/face-landmarks-detection

Above left are predictions from @tensorflow-models/facemesh@0.0.4, above right are predictions from @tensorflow-models/face-landmarks-detection@0.0.1. Iris landmarks are in red.

Users familiar with our existing facemesh model will be able to upgrade to the new faceLandmarksDetection model with only a few code changes, detailed below. faceLandmarksDetection offers three major improvements over facemesh:

- Iris keypoints detection

- Improved eyelid contour detection

- Improved detection for rotated faces

These improvements are highlighted in the GIF above, which demonstrates how the landmarks returned by faceLandmarksDetection and facemesh differ for the same image sequence.
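For readers migrating from facemesh, the main visible difference is that `estimateFaces` now takes a single configuration object (as in the usage example in this post) rather than positional arguments. The following is a minimal sketch of a compatibility wrapper, not part of either package. It assumes the older positional order was `(input, returnTensors, flipHorizontal)` and that the new configuration object accepts `returnTensors` and `flipHorizontal` fields alongside `input`; check both READMEs before relying on it. The name `asFacemeshStyle` is hypothetical.

```javascript
// Hypothetical helper: wraps a faceLandmarksDetection model so existing
// call sites written for facemesh's positional arguments keep working.
// Assumption: the config object accepts `returnTensors` and `flipHorizontal`.
function asFacemeshStyle(model) {
  return {
    estimateFaces(input, returnTensors = false, flipHorizontal = false) {
      // Forward the positional arguments as a single config object.
      return model.estimateFaces({ input, returnTensors, flipHorizontal });
    },
  };
}
```

With a wrapper like this, a call such as `legacy.estimateFaces(video)` is forwarded as `model.estimateFaces({ input: video, returnTensors: false, flipHorizontal: false })`. For new code, calling the configuration-object form directly is simpler.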

Installation

There are two ways to install the faceLandmarksDetection package:

- Through script tags:

<script src="https://cdn.jsdelivr.net/npm/@tensorflow/tfjs@2.6.0/dist/tf.js"></script>

<script src="https://cdn.jsdelivr.net/npm/@tensorflow-models/face-landmarks-detection"></script>

- Through NPM (via the yarn package manager):

$ yarn add @tensorflow-models/face-landmarks-detection@0.0.1

$ yarn add @tensorflow/tfjs@2.6.0

Usage

Once the package is installed, you only need to load the model weights and then pass in an image to start detecting facial landmarks:

// If you are using NPM, first require the model. If you are using script tags, you can skip this step because `faceLandmarksDetection` will already be available in the global scope.

const faceLandmarksDetection = require('@tensorflow-models/face-landmarks-detection');

// Load the faceLandmarksDetection model assets.

const model = await faceLandmarksDetection.load(

faceLandmarksDetection.SupportedPackages.mediapipeFacemesh);

// Pass in a video stream to the model to obtain an array of detected faces from the MediaPipe graph.

// For Node users, the `estimateFaces` API also accepts a `tf.Tensor3D`, or an ImageData object.

const video = document.querySelector("video");

const faces = await model.estimateFaces({ input: video });

The input to estimateFaces can be a video, a static image, a `tf.Tensor3D`, or an ImageData object for use in Node.js pipelines. faceLandmarksDetection then returns an array of prediction objects, one per face detected in the input. Each prediction includes information about that face (e.g. a confidence score and the locations of 478 landmarks within the face).

Here is a sample prediction object:

{

faceInViewConfidence: 1,

boundingBox: {

topLeft: [232.28, 145.26], // [x, y]

bottomRight: [449.75, 308.36],

},

mesh: [

[92.07, 119.49, -17.54], // [x, y, z]

[91.97, 102.52, -30.54],

...

],

// x,y,z positions of each facial landmark within the input space.

scaledMesh: [

[322.32, 297.58, -17.54],

[322.18, 263.95, -30.54]

],

// Semantic groupings of x,y,z positions.

annotations: {

silhouette: [

[326.19, 124.72, -3.82],

[351.06, 126.30, -3.00],

...

],

...

}

}

Refer to our README for more details about the API.
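To make the prediction object above more concrete, here is a minimal sketch of pulling iris positions out of `scaledMesh`. It assumes that the 478 landmarks are the 468 face mesh points followed by 10 iris points, 5 per eye, with each eye's iris center listed first and four rim points after it. That layout is an assumption made for illustration and is not stated in this post, so verify it against the README. The helper names `distance2d` and `summarizeIrises` are hypothetical.

```javascript
// Assumption (not stated in the post): scaledMesh holds 468 face mesh points
// followed by 10 iris points, 5 per eye, with each iris center listed first.
const NUM_MESH_KEYPOINTS = 468;
const NUM_IRIS_KEYPOINTS_PER_EYE = 5;

// Euclidean distance between two [x, y, z] landmarks, ignoring z.
function distance2d(a, b) {
  return Math.hypot(a[0] - b[0], a[1] - b[1]);
}

// Returns the center and approximate diameter (in input-space pixels) of each
// iris, or an empty array if the prediction carries no iris landmarks.
function summarizeIrises(prediction) {
  const points = prediction.scaledMesh;
  const irises = [];
  for (let start = NUM_MESH_KEYPOINTS;
       start + NUM_IRIS_KEYPOINTS_PER_EYE <= points.length;
       start += NUM_IRIS_KEYPOINTS_PER_EYE) {
    const [center, ...rim] = points.slice(start, start + NUM_IRIS_KEYPOINTS_PER_EYE);
    // Average distance from the center to the rim points, then doubled.
    const radius = rim.reduce((sum, p) => sum + distance2d(center, p), 0) / rim.length;
    irises.push({ center: [center[0], center[1]], diameter: 2 * radius });
  }
  return irises;
}
```

Given the `faces` array returned by `estimateFaces`, `faces.map(summarizeIrises)` would yield one list of iris summaries per face. The sketch deliberately does not label which entry is the left or right eye, since that ordering is also not confirmed here.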

Performance

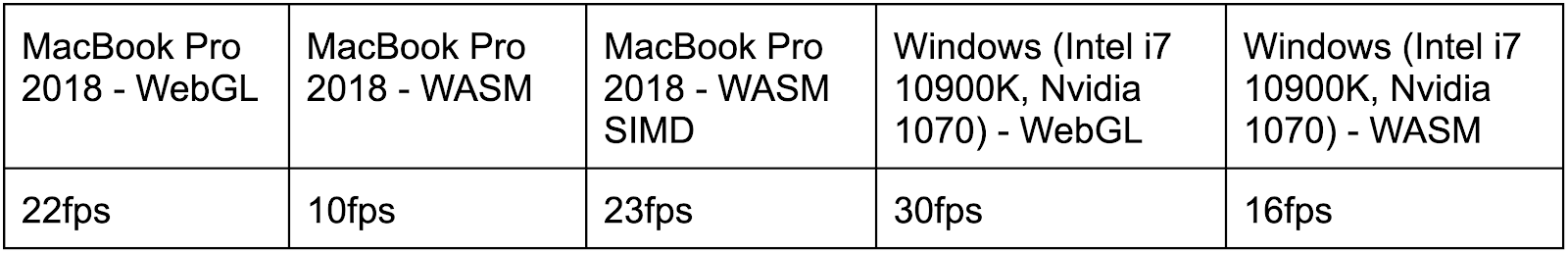

FaceLandmarksDetection is a lightweight package containing only ~3MB of weights, making it well suited for real-time inference on a variety of mobile devices. When testing, note that TensorFlow.js provides several backends to choose from, including WebGL and WebAssembly (WASM) with XNNPACK for devices with lower-end GPUs. The tables below show how the package performs across a few different devices and TensorFlow.js backends.

Desktop:

Mobile:

All benchmarks were collected in the Chrome browser. See our earlier blogpost for details on how to activate SIMD for the TF.js WebAssembly backend.
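When comparing backends on your own devices, it can help to try them in a preferred order and fall back if one is unavailable. The following is a minimal sketch under stated assumptions: `tf` is the TensorFlow.js namespace passed in by the caller, `pickBackend` is a hypothetical helper name, and the candidate list is whatever order you choose. It relies on `tf.setBackend` resolving to a boolean that indicates whether initialization succeeded.

```javascript
// Hypothetical helper: tries each backend name in order and resolves to the
// first one that TensorFlow.js reports as successfully initialized.
async function pickBackend(tf, candidates) {
  for (const name of candidates) {
    try {
      // tf.setBackend resolves to true when the backend initialized.
      if (await tf.setBackend(name)) {
        await tf.ready();
        return name;
      }
    } catch (err) {
      // The backend is not registered or failed to start: try the next one.
    }
  }
  throw new Error(`No backend could be initialized: ${candidates.join(', ')}`);
}
```

A call such as `await pickBackend(tf, ['webgl', 'wasm', 'cpu'])` would be made before loading the model. Note that the WASM backend is distributed separately (as `@tensorflow/tfjs-backend-wasm`) and must be loaded before it can be selected.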

Looking ahead

Both the TensorFlow.js and MediaPipe teams plan to add depth estimation capabilities to our face landmark detection solutions using the improved iris coordinates. We strongly believe in sharing code that enables reproducible research and rapid experimentation, and are looking forward to seeing how the wider community makes use of the MediaPipe iris model.

Try the demo!

Use this link to try our new package in your web browser. We look forward to seeing how you use it in your apps.

More information

- Learn more about the MediaPipe Iris model here: MediaPipe Iris

- Read about the model’s intended uses, limitations and fairness attributes: Model Card

- Read the original Google AI blogpost announcing MediaPipe Iris: Real-time Iris Tracking & Depth Estimation

- Read our paper on arXiv: Real-time Pupil Tracking from Monocular Video for Digital Puppetry