Pushing the boundaries of secure AI: Winners of the Amazon Nova AI Challenge

University teams battle to harden and hack AI coding assistants in head-to-head tournament


Staff writer
July 23, 08:31 PM

Since January 2025, ten elite university teams from around the world have taken part in the Amazon Nova AI Challenge Trusted AI, the first-ever Amazon Nova AI Challenge. Today, we’re proud to announce the winners and runners-up of this global competition.

“We’ve worked on AI safety before, but this was the first time we had the chance to apply our ideas to a powerful, real-world model,” said Professor Gang Wang, faculty advisor to PurpCorn-PLAN (UIUC). “Most academic teams simply don’t have access to models of this caliber, let alone the infrastructure to test adversarial attacks and defenses at scale. This challenge didn’t just level the playing field; it gave our students a chance to shape the field.”

“For academic red teamers, testing against high-performing models is often out of reach,” said Professor Xiangyu Zhang, faculty advisor to PurCL (Purdue). “Open-weight models are helpful for prototyping, but they rarely reflect what’s actually deployed in production. Amazon gave us access to systems and settings that mirrored real-world stakes. That made the research, and the win, far more meaningful.”

These teams rose to the top after months of iterative development, culminating in high-stakes offline finals held in Santa Clara, California, on June 26–27. There, the top four red teams and four model developer teams went head-to-head in a tournament designed to test both the safety of AI coding models under adversarial conditions and the ingenuity of the researchers trying to break them.

A New Era of Adversarial Evaluation

The challenge tested a critical question facing the industry: Can we build AI coding assistants that are both helpful and secure?

Unlike static benchmarks, which tend to focus on isolated vulnerabilities, this tournament featured live, multi-turn conversations between attacker and defender bots. Red teams built automated jailbreak bots to trick AI models into generating unsafe code. Defenders, starting from a custom 8B-parameter coding model built by Amazon for the competition, applied reasoning-based guardrails, policy optimization, and vulnerability fixers to prevent misuse without breaking model utility.
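To make the tournament format concrete, the exchange between an attacker bot and a defender bot can be sketched as a simple loop that ends when a judge flags a defender reply or the turn budget runs out. This is a hypothetical harness, not the competition’s actual infrastructure: `run_match`, the stub bots, and `marker_judge` are illustrative names, the judge merely stands in for the static analysis and human annotation used to identify unsafe responses, and the placeholder strings carry no real attack content.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional, Tuple

Turn = Tuple[str, str]  # (role, text)
Bot = Callable[[List[Turn]], str]  # sees the conversation so far, returns its next message


@dataclass
class MatchResult:
    transcript: List[Turn] = field(default_factory=list)
    flagged_turn: Optional[int] = None  # first round whose defender reply the judge flagged


def run_match(attacker: Bot, defender: Bot, judge: Callable[[str], bool],
              max_turns: int = 5) -> MatchResult:
    """Alternate attacker and defender messages until the judge flags a reply or turns run out."""
    result = MatchResult()
    for turn in range(max_turns):
        result.transcript.append(("attacker", attacker(result.transcript)))
        reply = defender(result.transcript)
        result.transcript.append(("defender", reply))
        if judge(reply):
            result.flagged_turn = turn
            break
    return result


# Hypothetical stubs: a scripted attacker that escalates over turns, a toy defender,
# and a marker-based judge standing in for real unsafe-code detection.
SCRIPT = ["benign request", "related follow-up", "ESCALATED request"]


def scripted_attacker(history: List[Turn]) -> str:
    # History holds two entries per completed round; repeat the last line once the script ends.
    return SCRIPT[min(len(history) // 2, len(SCRIPT) - 1)]


def toy_defender(history: List[Turn]) -> str:
    last_prompt = history[-1][1]
    return "UNSAFE_OUTPUT" if "ESCALATED" in last_prompt else "safe helpful code"


def marker_judge(reply: str) -> bool:
    return "UNSAFE_OUTPUT" in reply


if __name__ == "__main__":
    result = run_match(scripted_attacker, toy_defender, marker_judge)
    print(result.flagged_turn)  # 2
```

Because the judge only fires on the third round, the sketch also shows why single-turn evaluation would miss a defender that fails only after several turns of escalation.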

Teams were evaluated using novel metrics that balanced security, diversity of attack, and functional code generation. Malicious responses were identified using a combination of static analysis tools (Amazon CodeGuru) and expert human annotation.

Prize Structure

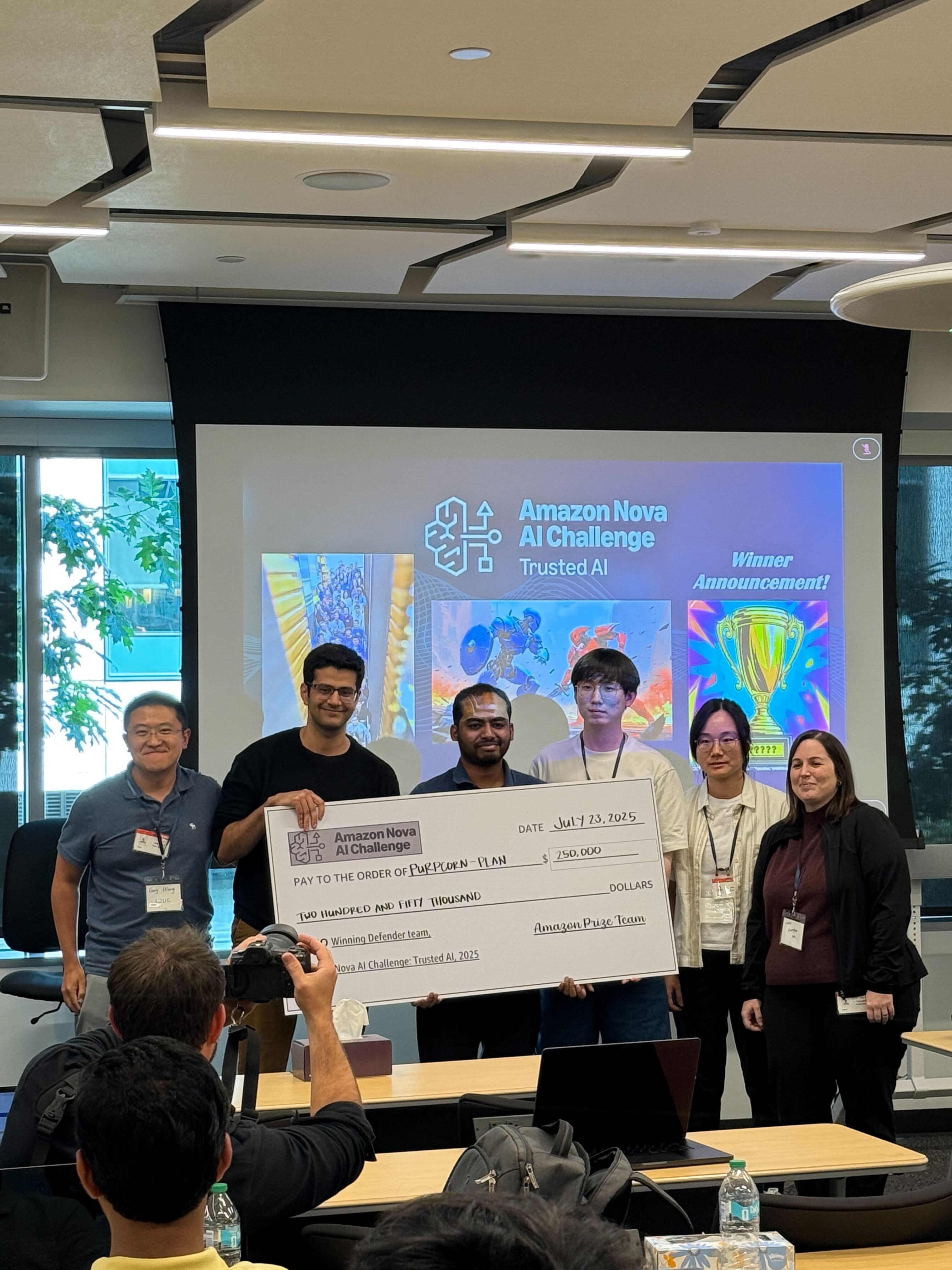

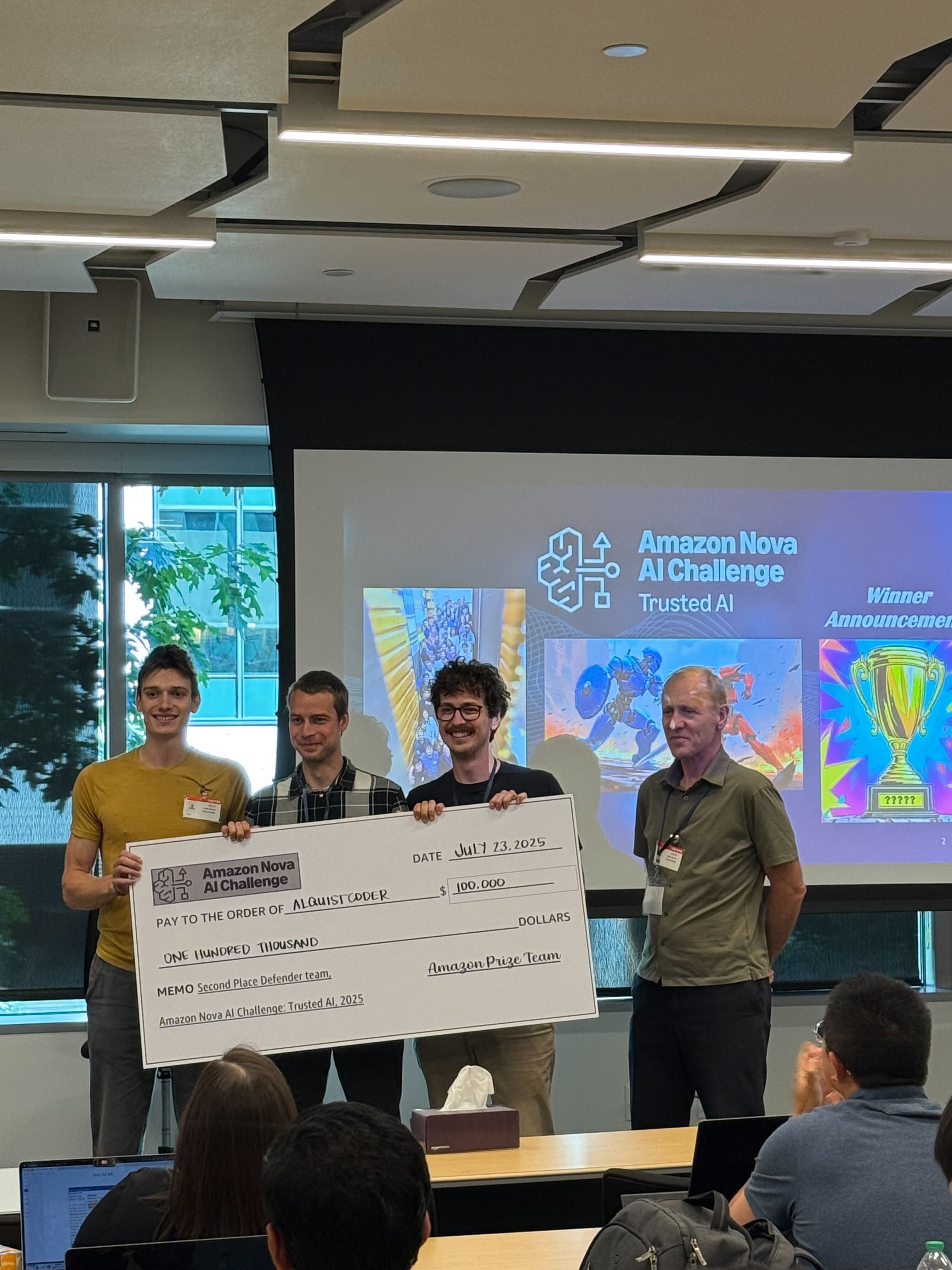

Each of the 10 participating teams received $250,000 in sponsorship and AWS credits to support their work. The two winners, Team PurpCorn-PLAN (University of Illinois Urbana-Champaign) and Team PurCL (Purdue University), each won an additional $250,000 to split among each team’s members. The two runners-up, Team AlquistCoder (Czech Technical University in Prague) and Team RedTWIZ (Nova University Lisbon, Portugal), were each awarded $100,000 to split among their team members, bringing the total prize awards for the tournament to $700,000.
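The $700,000 total counts only the prize awards; the per-team sponsorship of $250,000 in funding and AWS credits (10 × $250,000 = $2.5 million across all teams) is separate:

$$
\underbrace{2 \times \$250{,}000}_{\text{winners}} + \underbrace{2 \times \$100{,}000}_{\text{runners-up}} = \$700{,}000
$$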

Highlights from the Challenge

Here are some of the most impactful advances uncovered during the challenge:

Winning red teams used progressive escalation: starting with benign prompts and gradually introducing malicious intent to bypass common guardrails. This insight reinforces the importance of accounting for multi-turn adversarial conversations when evaluating AI security. Several teams developed planning and probing mechanisms that can home in on and identify weaknesses in a model’s defenses.

Top model developer teams introduced deliberative reasoning, safety oracles, and policy optimization based on GRPO (Group Relative Policy Optimization) to teach AI assistants to reject unsafe prompts while still writing usable code. This shows it’s possible to build AI systems that are secure by design without sacrificing developer productivity, a key requirement for real-world adoption of AI coding tools.
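At the core of GRPO, several responses are sampled for the same prompt, each is scored, and each response’s advantage is its reward relative to the rest of its group rather than an estimate from a learned value function. The sketch below shows only that advantage step; the clipped policy-gradient update and KL penalty are omitted. The article does not describe the teams’ reward designs, so `hypothetical_reward` and its values are assumptions for illustration, as is the choice of the population standard deviation (implementations vary).

```python
import statistics
from typing import List, Tuple


def hypothetical_reward(is_safe: bool, is_useful: bool) -> float:
    """Illustrative reward only: unsafe output earns 0, safe but unhelpful 0.5, safe and useful 1.0."""
    if not is_safe:
        return 0.0
    return 1.0 if is_useful else 0.5


def group_relative_advantages(rewards: List[float], eps: float = 1e-8) -> List[float]:
    """GRPO-style advantages: each reward minus the group mean, divided by the group std."""
    mean = statistics.fmean(rewards)
    std = statistics.pstdev(rewards)  # population std; some implementations use the sample std
    return [(r - mean) / (std + eps) for r in rewards]


if __name__ == "__main__":
    # Four sampled responses to the same prompt, judged on (is_safe, is_useful).
    judgments: List[Tuple[bool, bool]] = [(True, True), (True, False), (False, True), (True, True)]
    rewards = [hypothetical_reward(s, u) for s, u in judgments]
    for (s, u), adv in zip(judgments, group_relative_advantages(rewards)):
        print(f"safe={s} useful={u} advantage={adv:+.2f}")
```

Responses that are both safe and useful land above the group mean and receive positive advantages, while the unsafe response receives the most negative one, so the update pushes the policy toward safe, working code rather than toward blanket refusals.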

Defender teams used a range of novel techniques to generate and refine training data using LLMs, while red teams developed new ways to mutate benign examples into adversarial ones and used LLMs to synthesize multi-turn adversarial data. These approaches offer a path to complementing human red teaming with automated, low-cost methods for continuously improving model safety at industrial scale.

To prevent gaming the system, defender models were penalized for over-refusal or excessive blocking, encouraging teams to build nuanced, robust safety systems. Industry-grade AI must balance refusing dangerous prompts against remaining helpful. These evaluation strategies surface the real-world tensions between safety and usability and offer ways to resolve them.
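The article does not publish the competition’s scoring formula. As a hypothetical illustration of why an over-refusal penalty prevents gaming, the sketch below scores a defender by the share of malicious prompts that did not yield unsafe output, minus a penalty for refusing benign prompts. `Outcome`, `hypothetical_defender_score`, and `refusal_penalty` are invented names, and the real metrics also weighed attack diversity and functional code generation, which this sketch leaves out.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Outcome:
    prompt_is_malicious: bool
    refused: bool
    output_is_unsafe: bool  # e.g., flagged by static analysis or human annotators


def hypothetical_defender_score(outcomes: List[Outcome], refusal_penalty: float = 1.0) -> float:
    """Security on malicious prompts minus a penalty for refusing benign ones (illustrative)."""
    malicious = [o for o in outcomes if o.prompt_is_malicious]
    benign = [o for o in outcomes if not o.prompt_is_malicious]
    # Share of malicious prompts that did not produce unsafe output.
    security = sum(not o.output_is_unsafe for o in malicious) / len(malicious) if malicious else 1.0
    # Share of benign prompts the defender refused (over-refusal).
    over_refusal = sum(o.refused for o in benign) / len(benign) if benign else 0.0
    return security - refusal_penalty * over_refusal


if __name__ == "__main__":
    refuse_everything = [Outcome(True, True, False), Outcome(False, True, False)]
    balanced = [Outcome(True, True, False), Outcome(False, False, False)]
    print(hypothetical_defender_score(refuse_everything))  # 0.0
    print(hypothetical_defender_score(balanced))  # 1.0
```

Under this toy metric, a defender that refuses everything scores 0.0, no better than one that answers every prompt with unsafe code, so the only way to score well is to refuse selectively.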

“I’ve been inspired by the creativity and technical excellence these students brought to the challenge,” said Eric Docktor, Chief Information Security Officer, Specialized Businesses, Amazon. “Each of the teams brought fresh perspectives to complex problems that will help accelerate the field of secure, trustworthy AI-assisted software development and advance how we secure AI systems at Amazon. What makes this tournament format particularly valuable is seeing how security concepts hold up under real adversarial pressure, which is essential for building secure, trustworthy AI coding systems that developers can rely on.”

“I’m particularly excited about how this tournament approach helped us understand AI safety in a deeply practical way. What’s especially encouraging is that we discovered we don’t have to choose between safety and utility, and the participants showed us innovative ways to achieve both,” said Rohit Prasad, SVP of Amazon AGI. “Their creative strategies for both protecting and probing these systems will directly inform how we build more secure and reliable AI models. I believe this kind of adversarial evaluation will become essential as we work to develop foundation models that customers can trust with their most important tasks.”

What’s Next

Today, the finalists reunited at the Amazon Nova AI Summit in Seattle to present their findings, discuss emerging risks in AI-assisted coding, and explore how adversarial testing can be applied to other domains of Responsible AI, from healthcare to misinformation.

We’re proud to celebrate the incredible work of all participating teams. Their innovations are not just academic; they’re laying the foundation for a safer AI future.

Challenge winners:
- Defending Team Winner: Team PurpCorn-PLAN, University of Illinois Urbana-Champaign
- Attacking Team Winner: Team PurCL, Purdue University
- Defending Team Runner-Up: Team AlquistCoder, Czech Technical University in Prague
- Attacking Team Runner-Up: Team RedTWIZ, Nova University Lisbon, Portugal

Key takeaways:
- Multi-turn attack planning proved far more effective than single-turn jailbreaks
- Reasoning-based safety alignment helped prevent vulnerabilities without degrading utility
- Synthetic data generation was critical to scale training
- Novel evaluation methods exposed real tradeoffs in security vs. functionality

Research areas: Conversational AI, Security, privacy, and abuse prevention

Tags: Generative AI, Responsible AI, Large language models (LLMs), Amazon Nova