The International Conference on Machine Learning (ICML) 2021 is being hosted virtually from July 18th to July 24th. We’re excited to share all the work from SAIL that’s being presented, and you’ll find links to papers, videos, and blogs below. Feel free to reach out to the contact authors directly to learn more about the work that’s happening at Stanford!

List of Accepted Papers

Deep Reinforcement Learning amidst Continual Structured Non-Stationarity

Authors: Annie Xie, James Harrison, Chelsea Finn

Contact: anniexie@stanford.edu

Keywords: deep reinforcement learning, non-stationarity

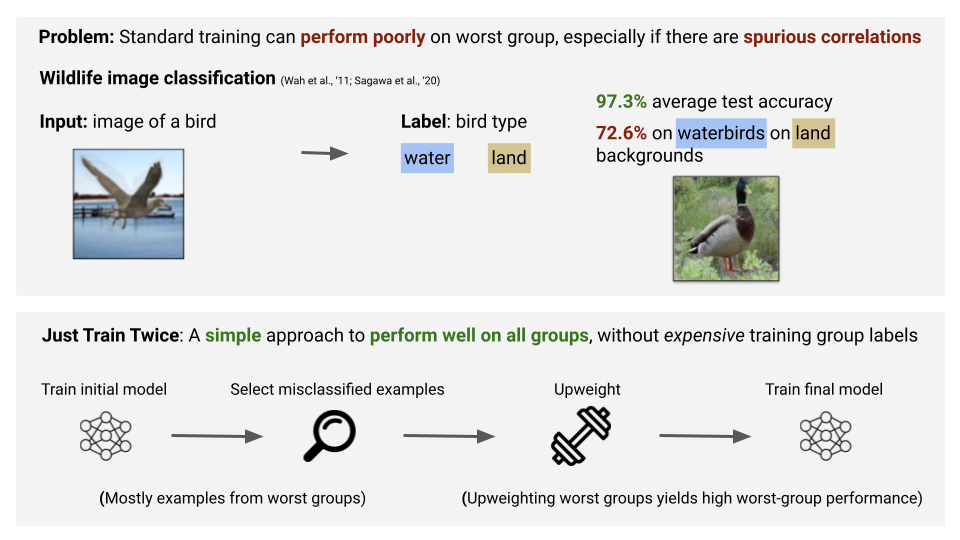

Just Train Twice: Improving Group Robustness without Training Group Information

Authors: Evan Zheran Liu*, Behzad Haghgoo*, Annie S. Chen*, Aditi Raghunathan, Pang Wei Koh, Shiori Sagawa, Percy Liang, Chelsea Finn

Contact: evanliu@cs.stanford.edu

Links: Paper | Video

Keywords: robustness, spurious correlations

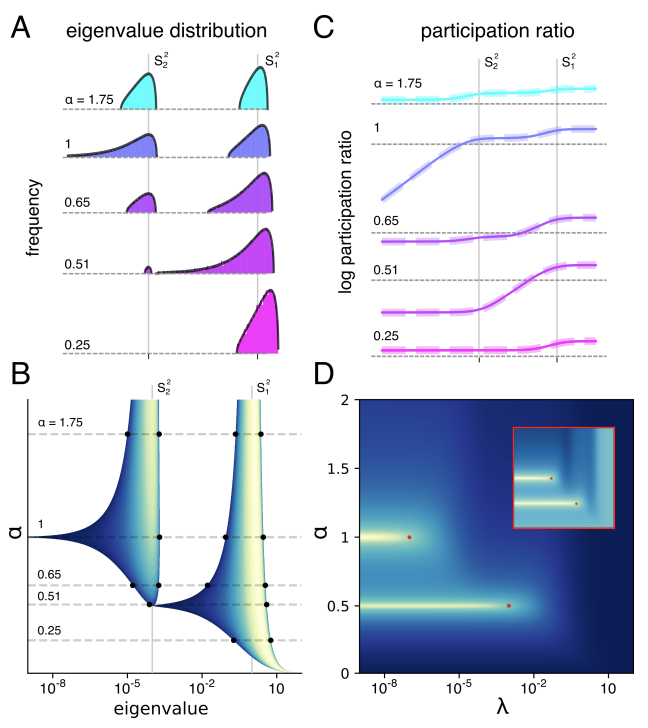

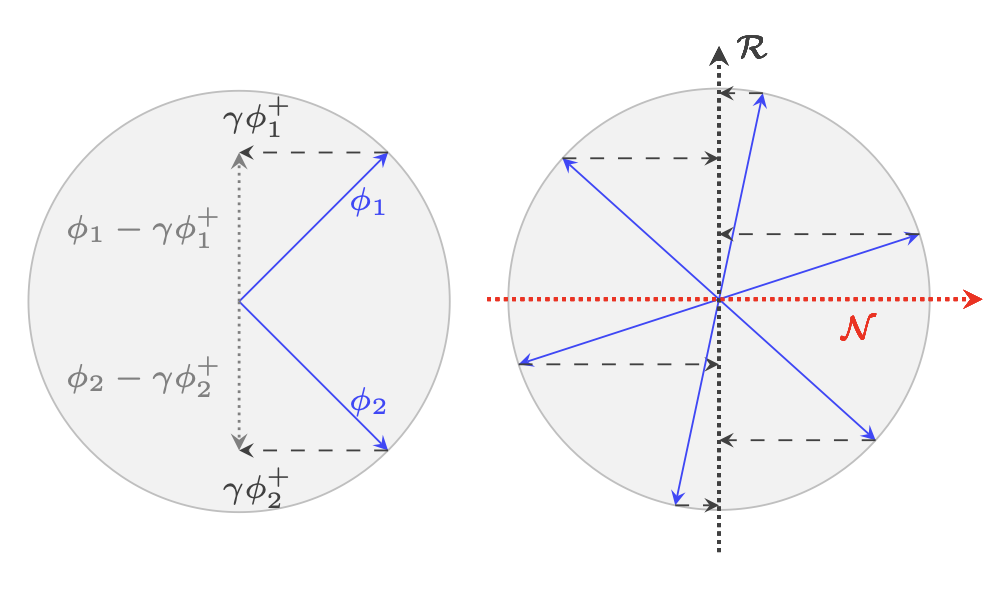

A theory of high dimensional regression with arbitrary correlations between input features and target functions: sample complexity, multiple descent curves and a hierarchy of phase transitions

Authors: Gabriel Mel, Surya Ganguli

Contact: sganguli@stanford.edu

Links: Paper

Keywords: high dimensional statistics, random matrix theory, regularization

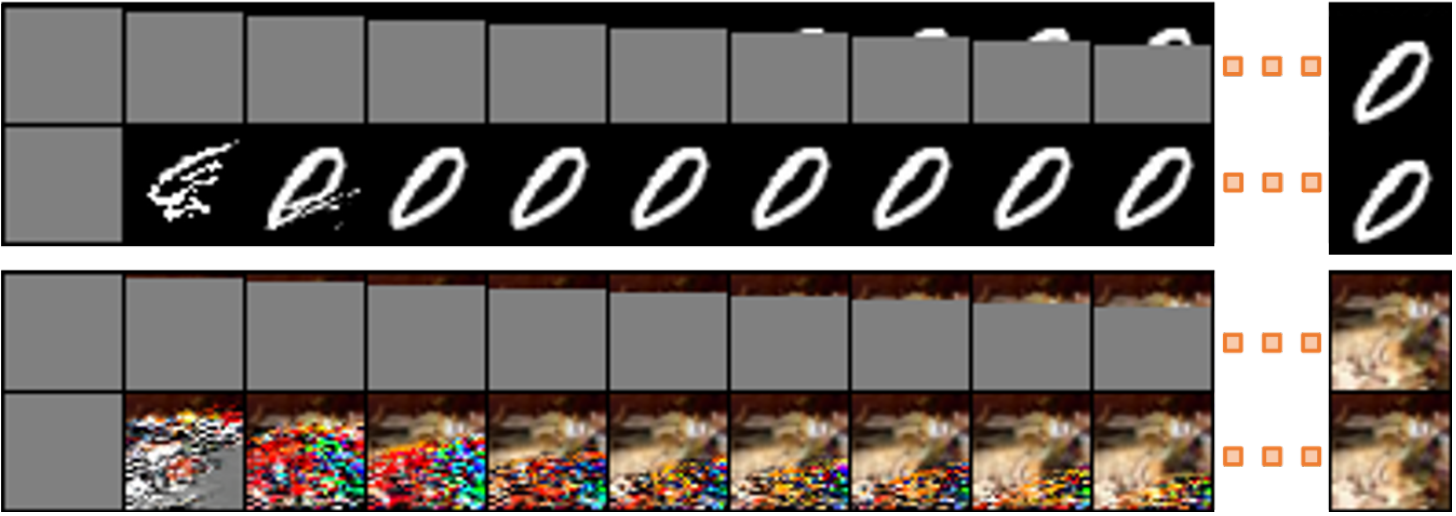

Accelerating Feedforward Computation via Parallel Nonlinear Equation Solving

Authors: Yang Song, Chenlin Meng, Renjie Liao, Stefano Ermon

Contact: songyang@stanford.edu

Links: Paper | Website

Keywords: parallel computing, autoregressive models, densenets, rnns

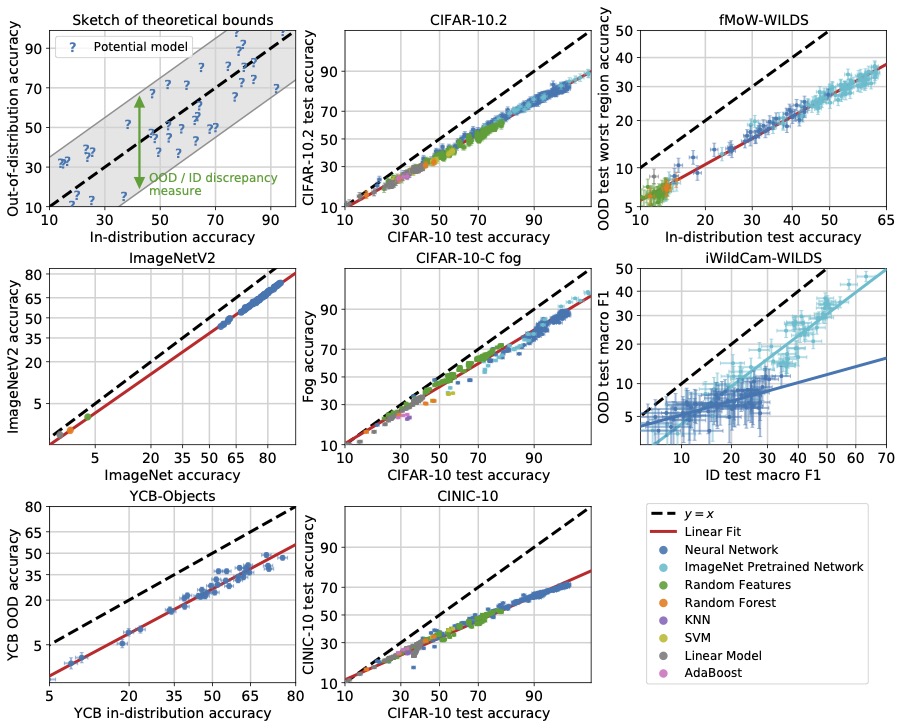

Accuracy on the Line: on the Strong Correlation Between Out-of-Distribution and In-Distribution Generalization

Authors: John Miller, Rohan Taori, Aditi Raghunathan, Shiori Sagawa, Pang Wei Koh, Vaishaal Shankar, Percy Liang, Yair Carmon, Ludwig Schmidt

Contact: rtaori@stanford.edu

Links: Paper

Keywords: out of distribution, generalization, robustness, distribution shift, machine learning

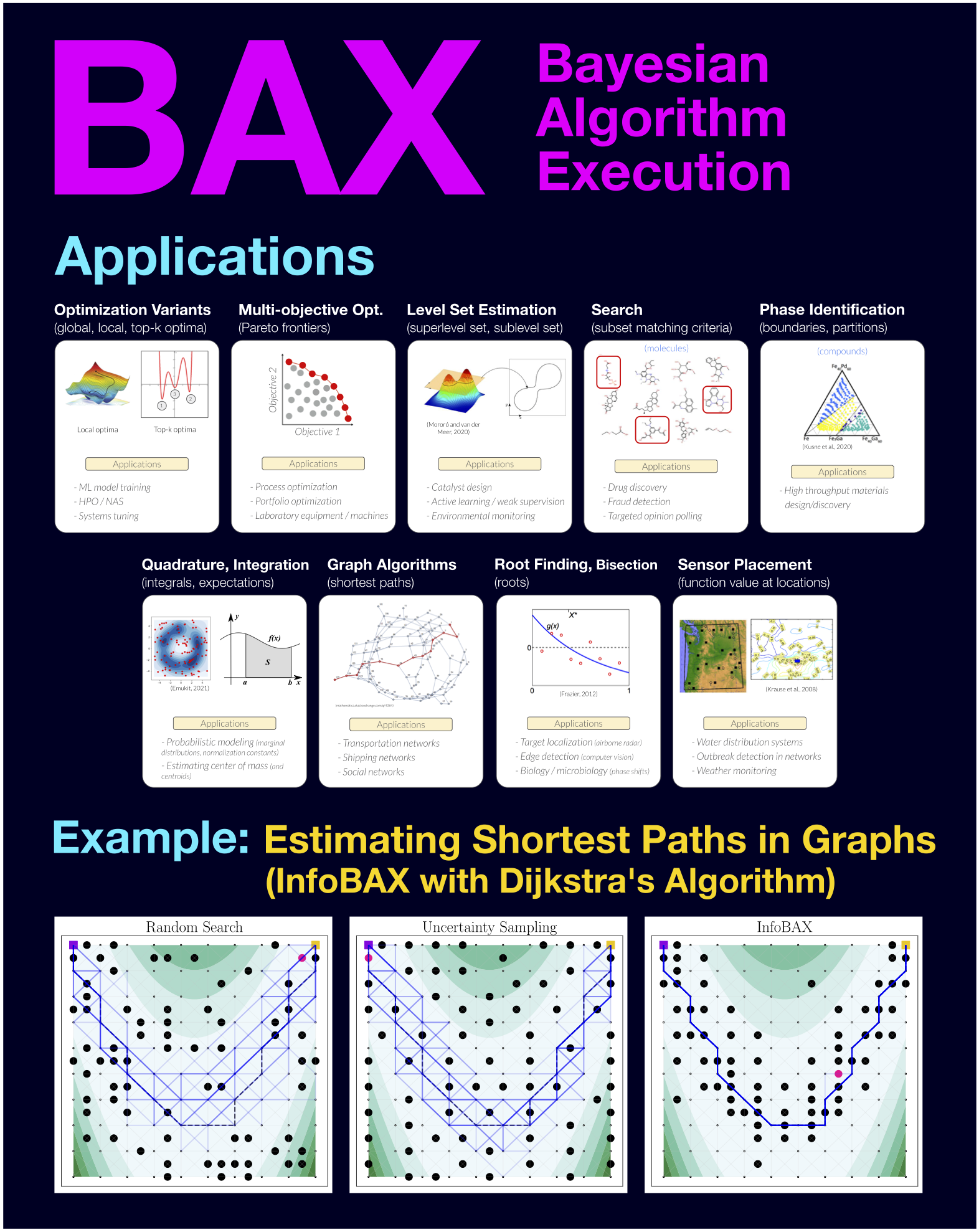

Bayesian Algorithm Execution: Estimating Computable Properties of Black-box Functions Using Mutual Information

Authors: Willie Neiswanger, Ke Alexander Wang, Stefano Ermon

Contact: neiswanger@cs.stanford.edu

Links: Paper | Blog Post | Video | Website

Keywords: bayesian optimization, experimental design, algorithm execution, information theory

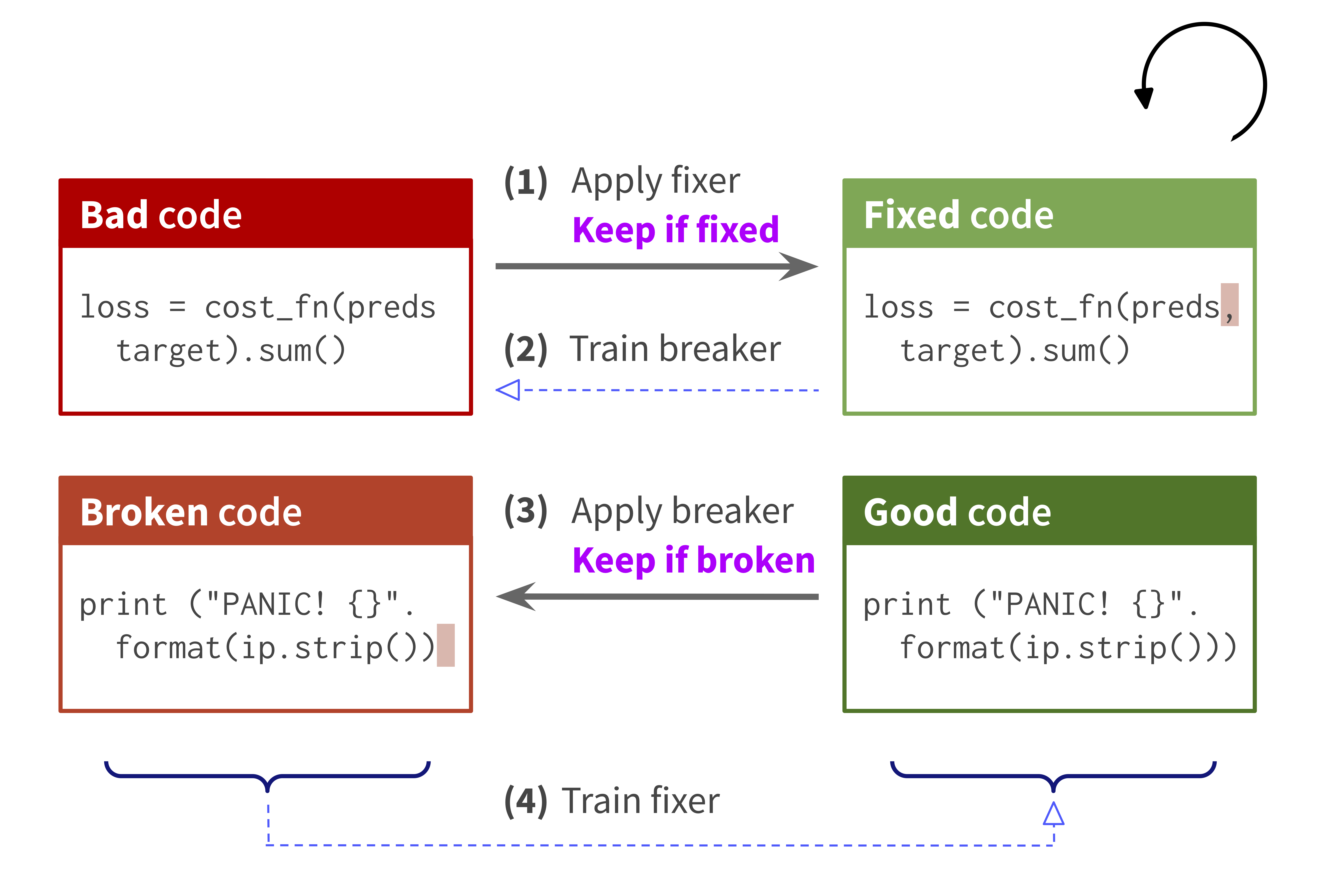

Break-It-Fix-It: Unsupervised Learning for Program Repair

Authors: Michihiro Yasunaga, Percy Liang

Contact: myasu@cs.stanford.edu

Links: Paper | Website

Keywords: program repair, unsupervised learning, translation, domain adaptation, self-supervised learning

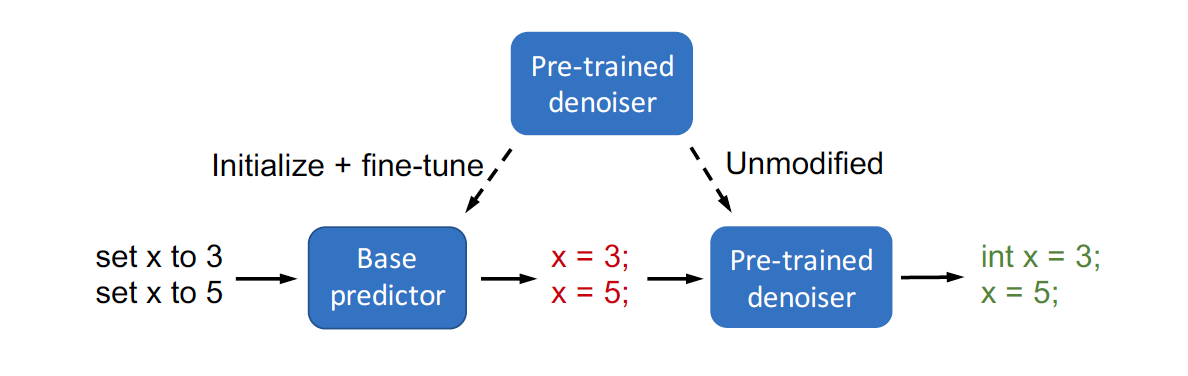

Composed Fine-Tuning: Freezing Pre-Trained Denoising Autoencoders for Improved Generalization

Authors: Sang Michael Xie, Tengyu Ma, Percy Liang

Contact: xie@cs.stanford.edu

Links: Paper | Website

Keywords: fine-tuning, adaptation, freezing, ood generalization, structured prediction, semi-supervised learning, unlabeled outputs

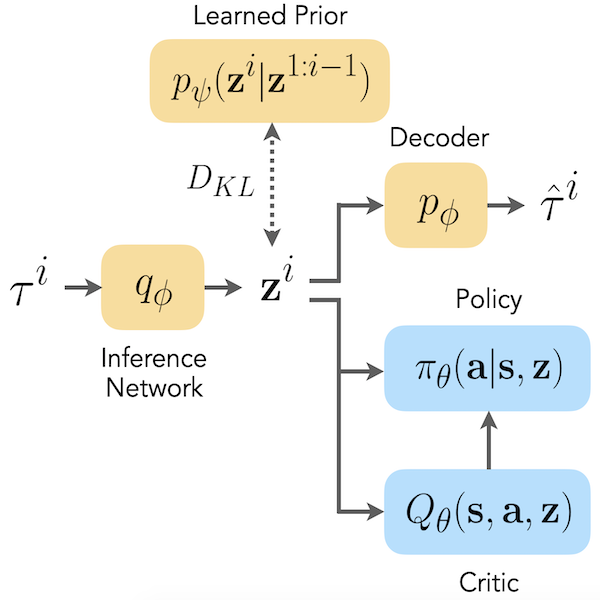

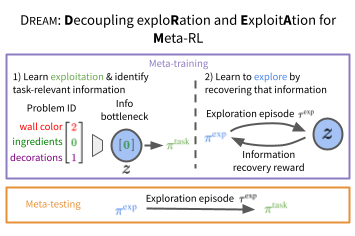

Decoupling Exploration and Exploitation for Meta-Reinforcement Learning without Sacrifices

Authors: Evan Zheran Liu, Aditi Raghunathan, Percy Liang, Chelsea Finn

Contact: evanliu@cs.stanford.edu

Links: Paper | Blog Post | Video | Website

Keywords: meta-reinforcement learning, exploration

Exponential Lower Bounds for Batch Reinforcement Learning: Batch RL can be Exponentially Harder than Online RL

Authors: Andrea Zanette

Contact: zanette@stanford.edu

Links: Paper | Video

Keywords: reinforcement learning, lower bounds, linear value functions, off-policy evaluation, policy learning

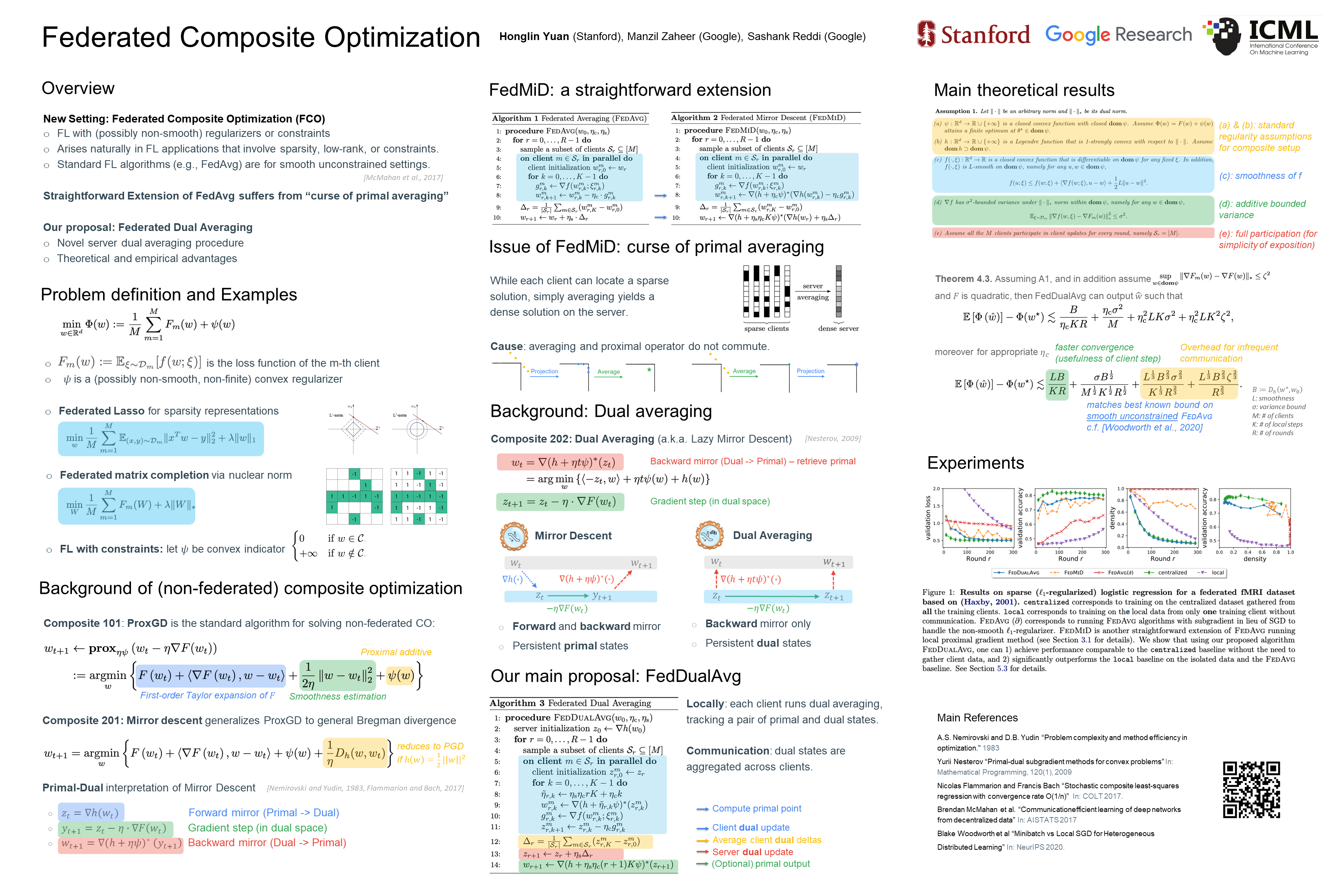

Federated Composite Optimization

Authors: Honglin Yuan, Manzil Zaheer, Sashank Reddi

Contact: yuanhl@cs.stanford.edu

Links: Paper | Video | Website

Keywords: federated learning, distributed optimization, convex optimization

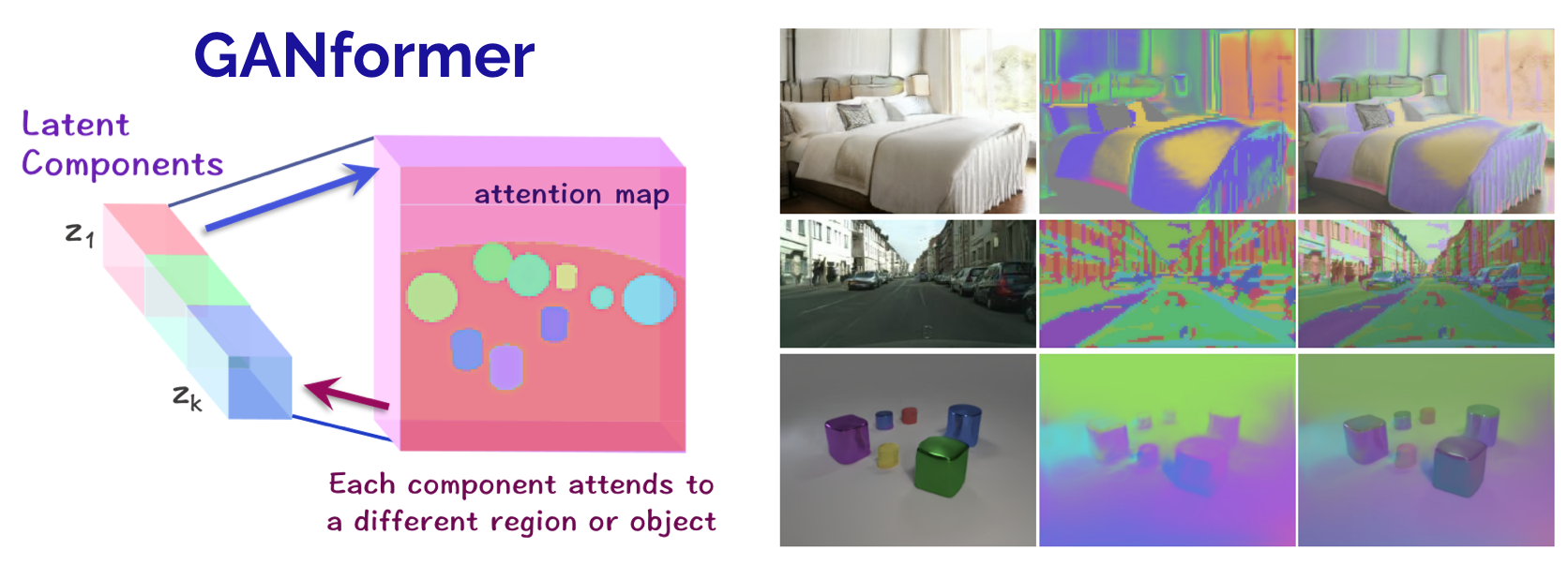

Generative Adversarial Transformers

Authors: Drew A. Hudson, C. Lawrence Zitnick

Contact: dorarad@stanford.edu

Links: Paper | Website

Keywords: gans, transformers, compositionality, attention, bottom-up, top-down, disentanglement, object-oriented, representation learning, scenes

Improving Generalization in Meta-learning via Task Augmentation

Authors: Huaxiu Yao, Longkai Huang, Linjun Zhang, Ying Wei, Li Tian, James Zou, Junzhou Huang, Zhenhui Li

Contact: huaxiu@cs.stanford.edu

Links: Paper

Keywords: meta-learning

Mandoline: Model Evaluation under Distribution Shift

Authors: Mayee Chen, Karan Goel, Nimit Sohoni, Fait Poms, Kayvon Fatahalian, Christopher Ré

Contact: mfchen@stanford.edu

Links: Paper

Keywords: evaluation, distribution shift, importance weighting

Memory-Efficient Pipeline-Parallel DNN Training

Authors: Deepak Narayanan

Contact: deepakn@stanford.edu

Links: Paper

Keywords: distributed training, pipeline model parallelism, large language model training

Offline Meta-Reinforcement Learning with Advantage Weighting

Authors: Eric Mitchell, Rafael Rafailov, Xue Bin Peng, Sergey Levine, Chelsea Finn

Contact: em7@stanford.edu

Links: Paper | Website

Keywords: meta-rl, offline rl, batch meta-learning

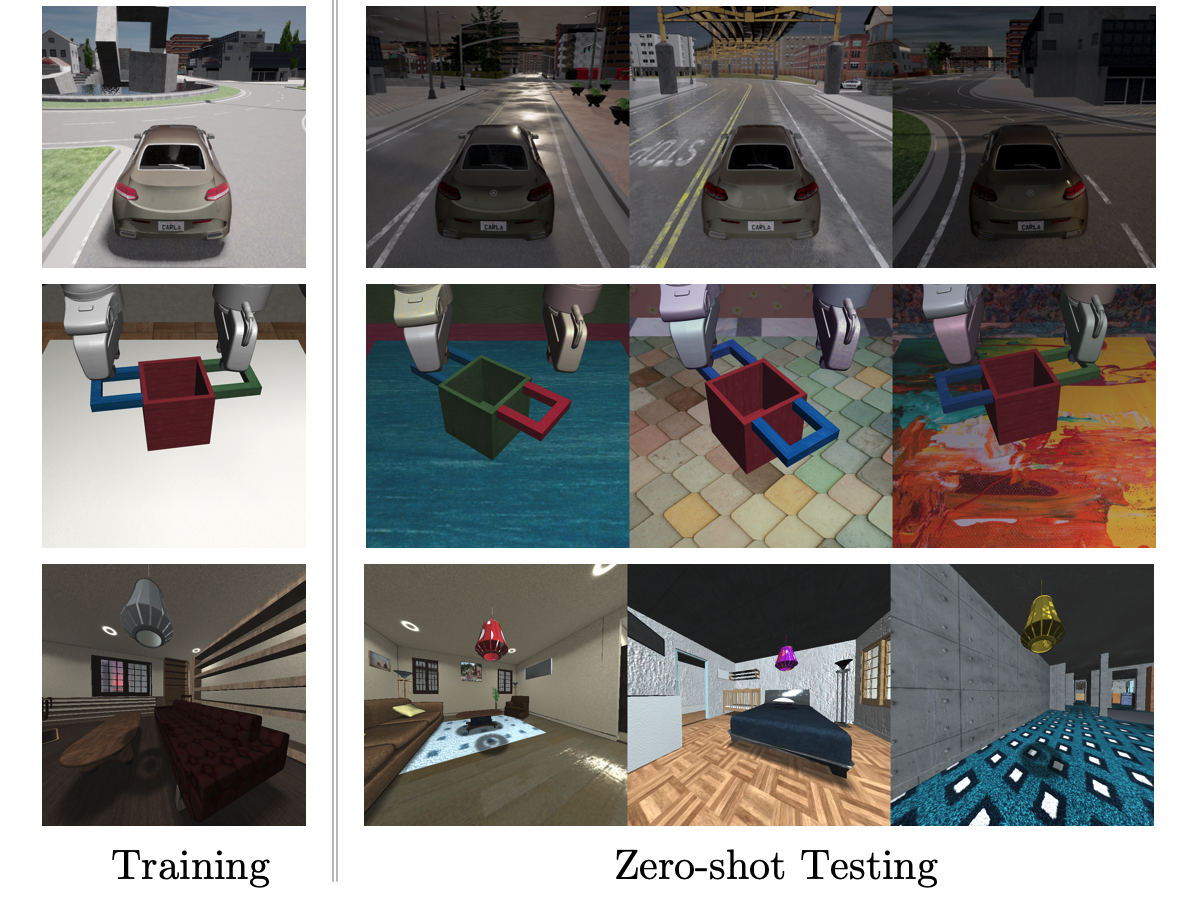

SECANT: Self-Expert Cloning for Zero-Shot Generalization of Visual Policies

Authors: Linxi Fan, Guanzhi Wang, De-An Huang, Zhiding Yu, Li Fei-Fei, Yuke Zhu, Anima Anandkumar

Contact: jimfan@cs.stanford.edu

Links: Paper | Website

Keywords: reinforcement learning, computer vision, sim-to-real, robotics, simulation

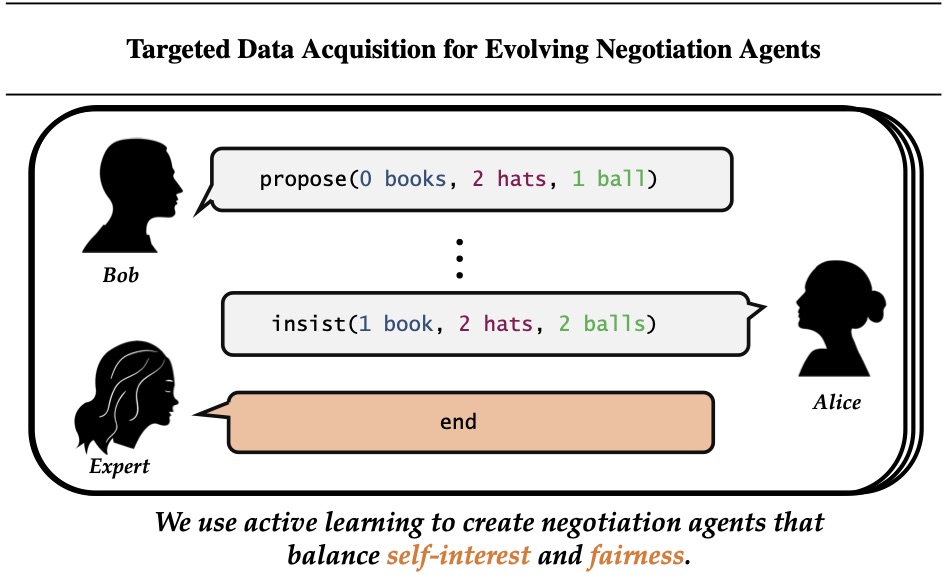

Targeted Data Acquisition for Evolving Negotiation Agents

Authors: Minae Kwon, Siddharth Karamcheti, Mariano-Florentino Cuéllar, Dorsa Sadigh

Contact: minae@cs.stanford.edu

Links: Paper | Video

Keywords: negotiation, targeted data acquisition, active learning

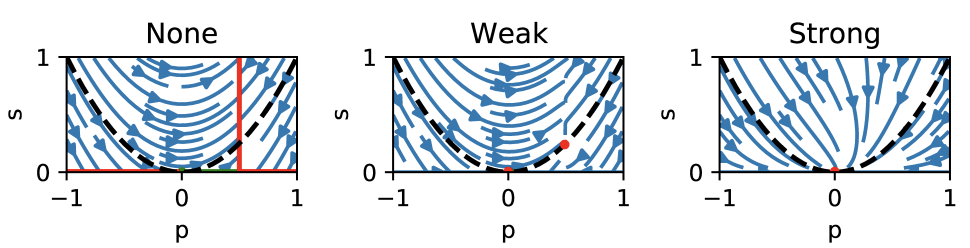

Understanding Self-Supervised Learning Dynamics without Contrastive Pairs

Authors: Yuandong Tian, Xinlei Chen, Surya Ganguli

Contact: sganguli@stanford.edu

Links: Paper

Keywords: self-supervised learning

WILDS: A Benchmark of in-the-Wild Distribution Shifts

Authors: Pang Wei Koh*, Shiori Sagawa*, Henrik Marklund, Sang Michael Xie, Marvin Zhang, Akshay Balsubramani, Weihua Hu, Michihiro Yasunaga, Richard Lanas Phillips, Irena Gao, Tony Lee, Etienne David, Ian Stavness, Wei Guo, Berton A. Earnshaw, Imran S. Haque, Sara Beery, Jure Leskovec, Anshul Kundaje, Emma Pierson, Sergey Levine, Chelsea Finn, Percy Liang

Contact: pangwei@cs.stanford.edu, ssagawa@cs.stanford.edu

Links: Paper | Website

Keywords: robustness, distribution shifts, benchmark

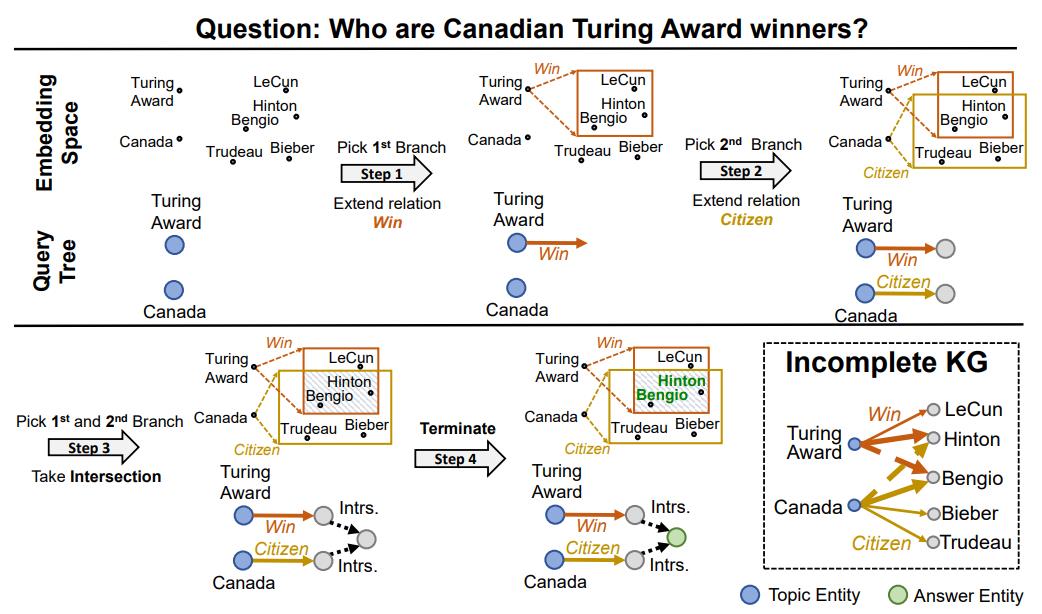

LEGO: Latent Execution-Guided Reasoning for Multi-Hop Question Answering on Knowledge Graphs

Authors: Hongyu Ren, Hanjun Dai, Bo Dai, Xinyun Chen, Michihiro Yasunaga, Haitian Sun, Dale Schuurmans, Jure Leskovec, Denny Zhou

Contact: hyren@cs.stanford.edu

Keywords: knowledge graphs, question answering, multi-hop reasoning

We look forward to seeing you at ICML 2021!