The Neural Information Processing Systems (NeurIPS) 2020 conference is being hosted virtually from December 6 to 12. We’re excited to share all the work from the Stanford AI Lab (SAIL) that’s being presented, and you’ll find links to papers, videos, and blog posts below. Feel free to reach out to the contact authors directly to learn more about the work that’s happening at Stanford!

List of Accepted Papers

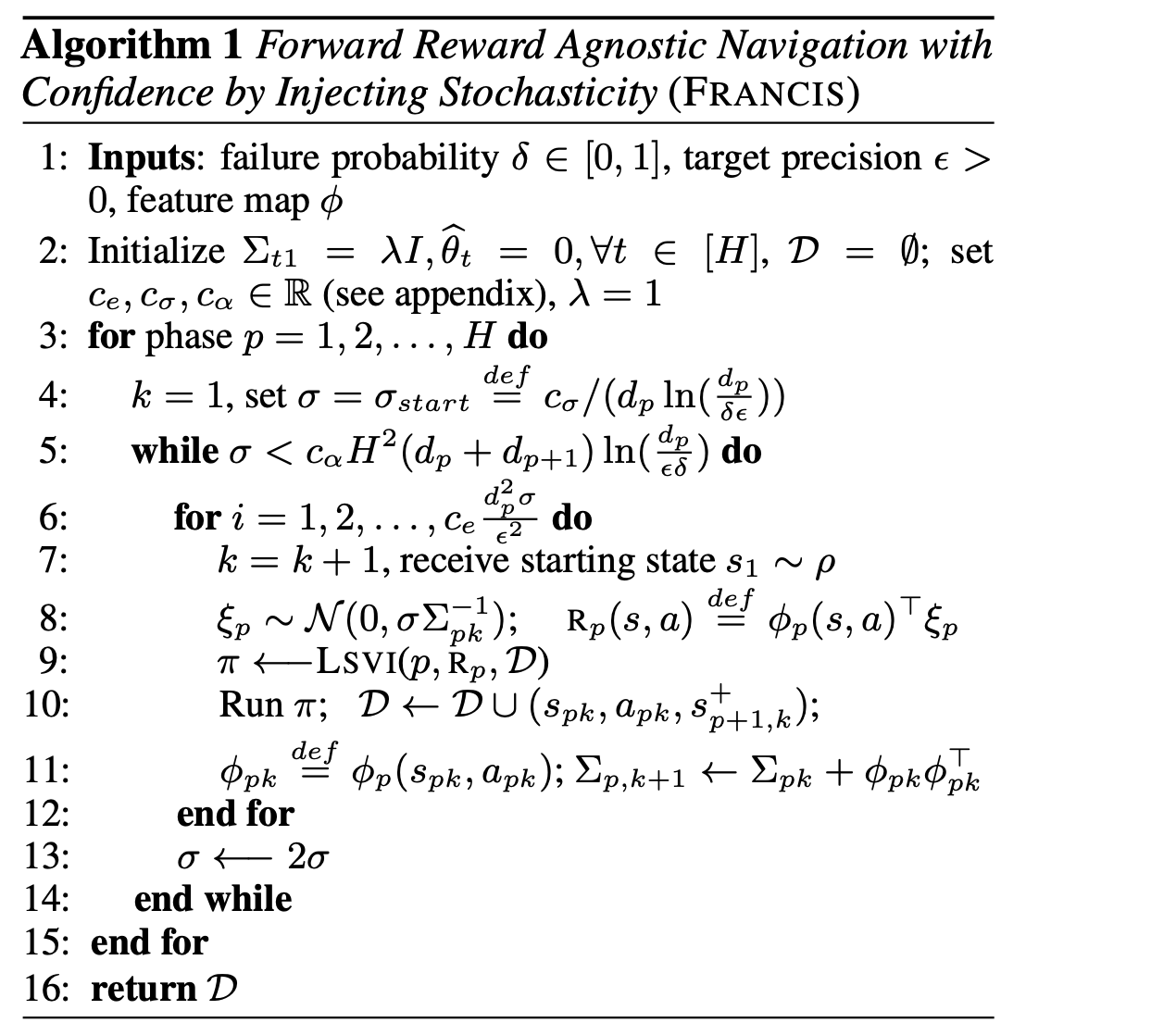

Provably Efficient Reward-Agnostic Navigation with Linear Value Iteration

Authors: Andrea Zanette, Alessandro Lazaric, Mykel Kochenderfer, Emma Brunskill

Contact: zanette@stanford.edu

Keywords: reinforcement learning, function approximation, exploration

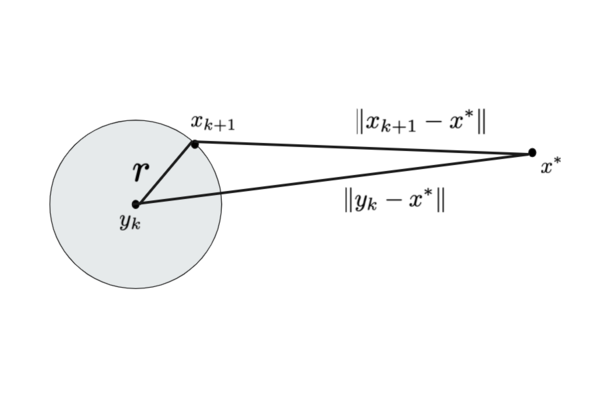

Acceleration with a Ball Optimization Oracle

Authors: Yair Carmon, Arun Jambulapati, Qijia Jiang, Yujia Jin, Yin Tat Lee, Aaron Sidford, Kevin Tian

Contact: kjtian@stanford.edu

Award nominations: Oral presentation

Links: Paper

Keywords: convex optimization, local search, trust region methods

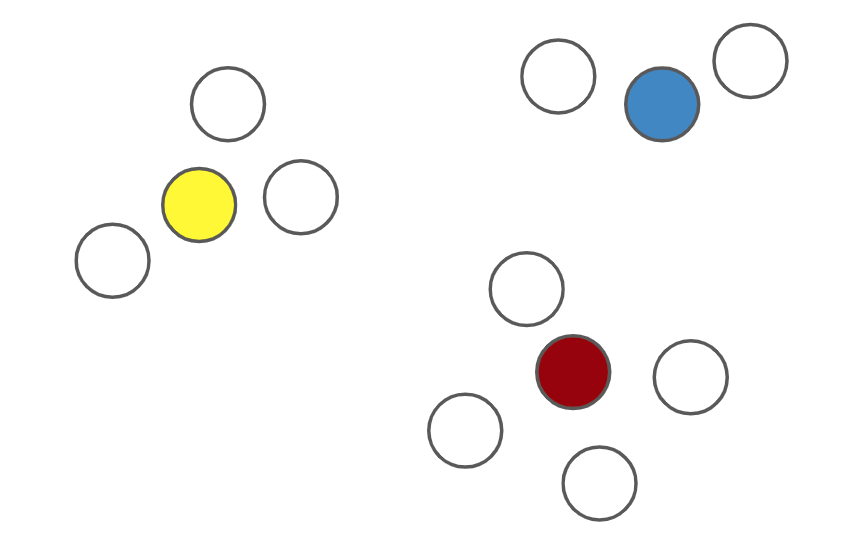

BanditPAM: Almost Linear Time k-Medoids Clustering via Multi-Armed Bandits

Authors: Mo Tiwari, Martin Jinye Zhang, James Mayclin, Sebastian Thrun, Chris Piech, Ilan Shomorony

Contact: Motiwari@stanford.edu

Links: Paper | Video

Keywords: clustering, k-means, k-medoids, multi-armed bandits

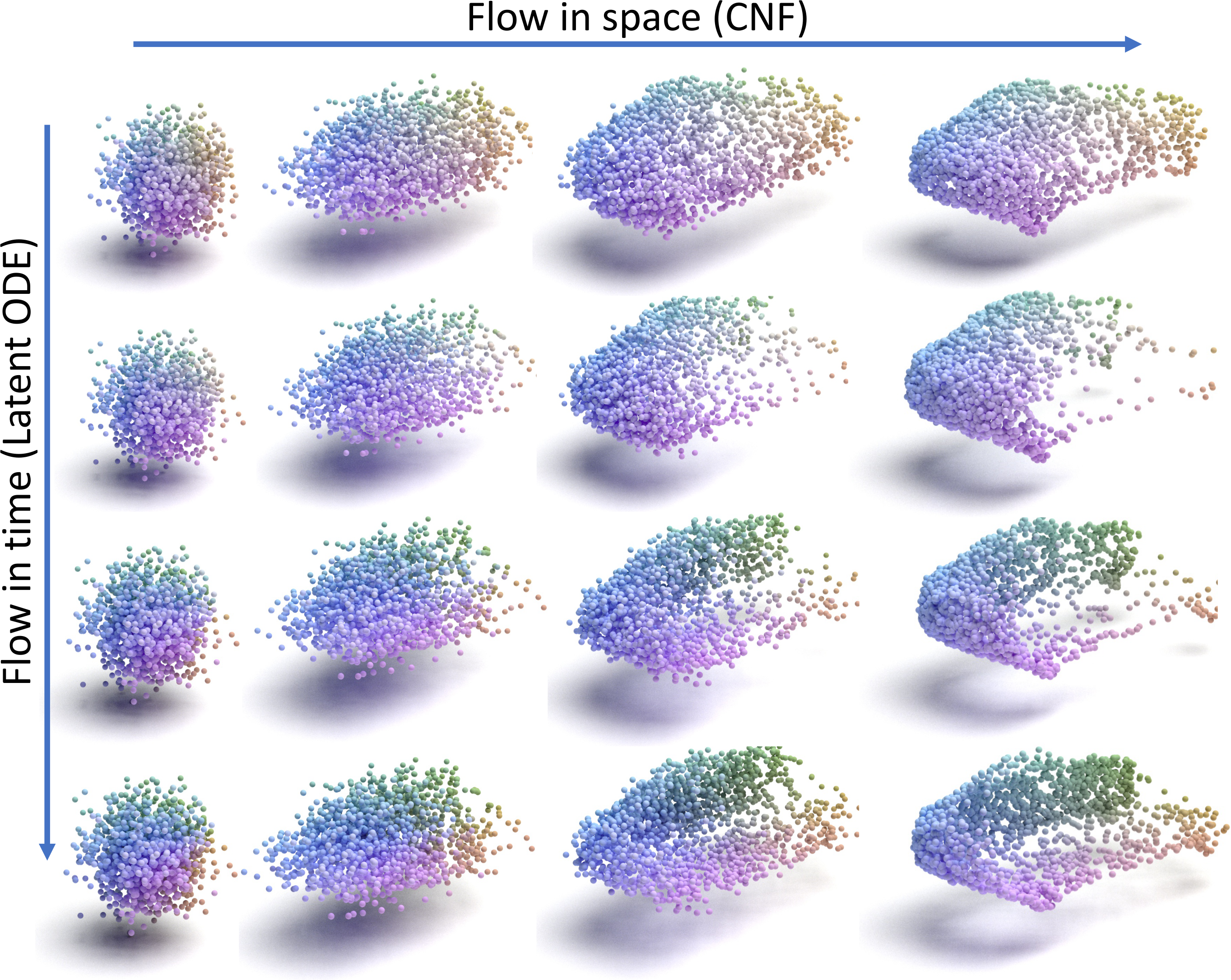

CaSPR: Learning Canonical Spatiotemporal Point Cloud Representations

Authors: Davis Rempe, Tolga Birdal, Yongheng Zhao, Zan Gojcic, Srinath Sridhar, Leonidas J. Guibas

Contact: drempe@stanford.edu

Links: Paper | Video | Website

Keywords: 3d vision, dynamic point clouds, representation learning

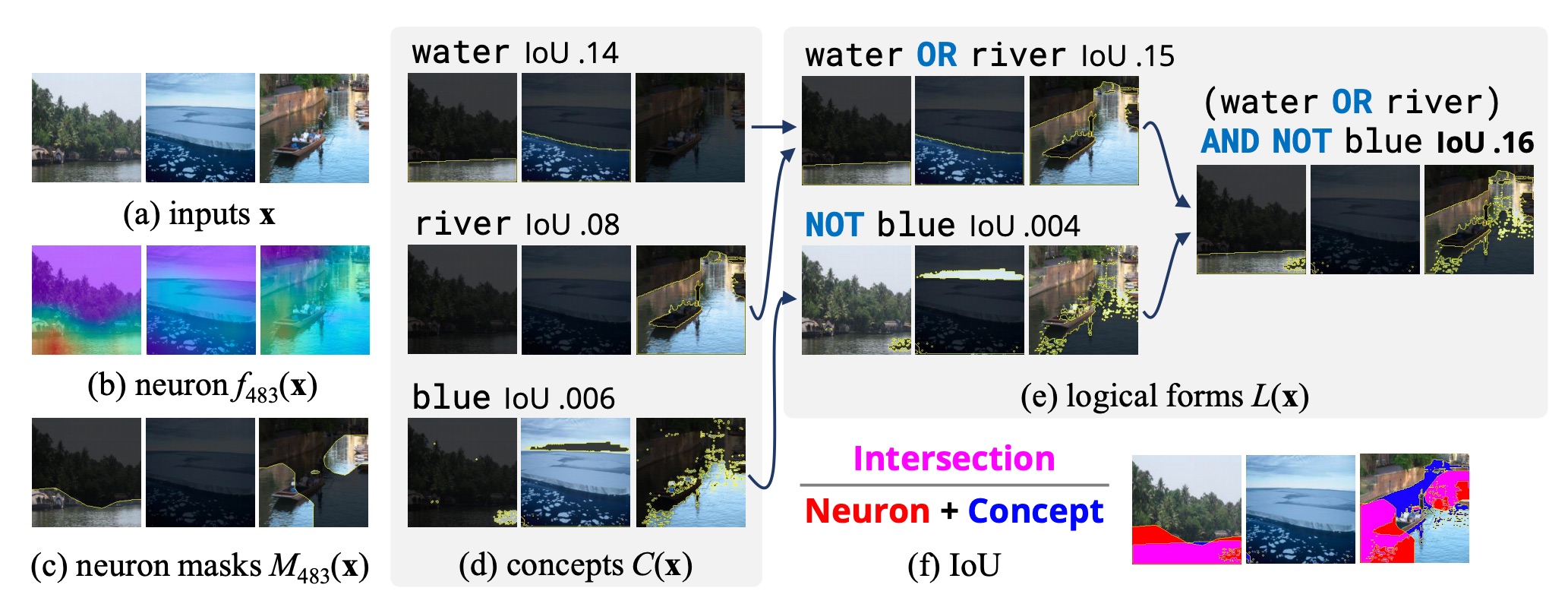

Compositional Explanations of Neurons

Authors: Jesse Mu, Jacob Andreas

Contact: muj@stanford.edu

Award nominations: Oral presentation

Links: Paper

Keywords: interpretability, explanation, deep learning, computer vision, natural language processing, adversarial examples

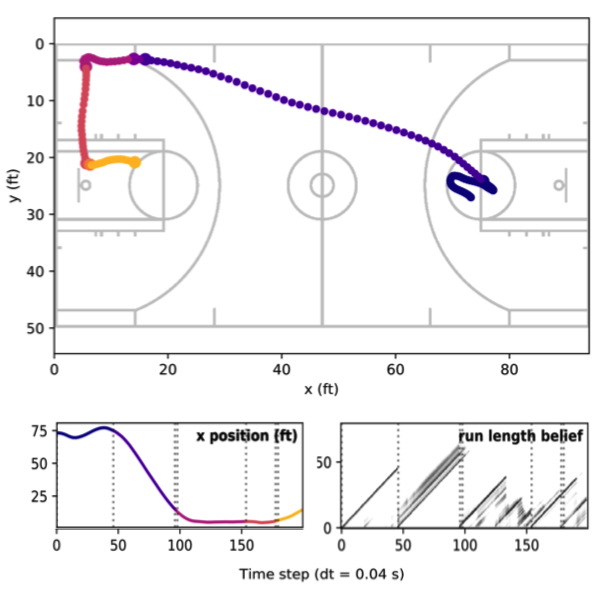

Continuous Meta-Learning without Tasks

Authors: James Harrison, Apoorva Sharma, Chelsea Finn, Marco Pavone

Contact: jharrison@stanford.edu

Links: Paper

Keywords: meta-learning, continuous learning, changepoint detection

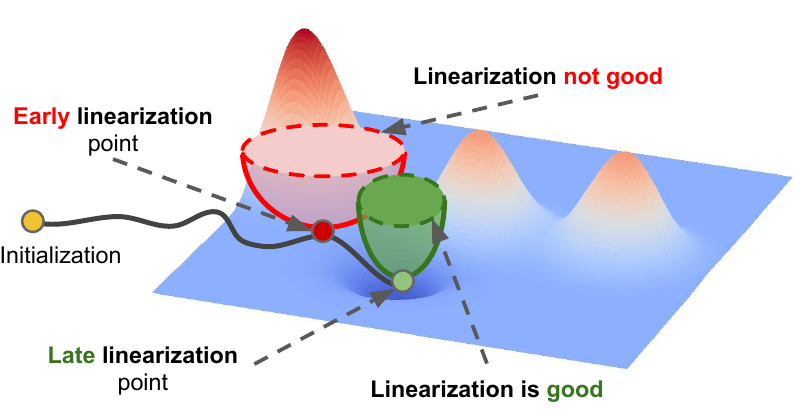

Deep learning versus kernel learning: an empirical study of loss landscape geometry and the time evolution of the Neural Tangent Kernel

Authors: Stanislav Fort, Gintare Karolina Dziugaite, Mansheej Paul, Sepideh Kharaghani, Daniel M. Roy, Surya Ganguli

Contact: sfort1@stanford.edu

Links: Paper

Keywords: loss landscape, neural tangent kernel, linearization, taylorization, basin, nonlinear advantage

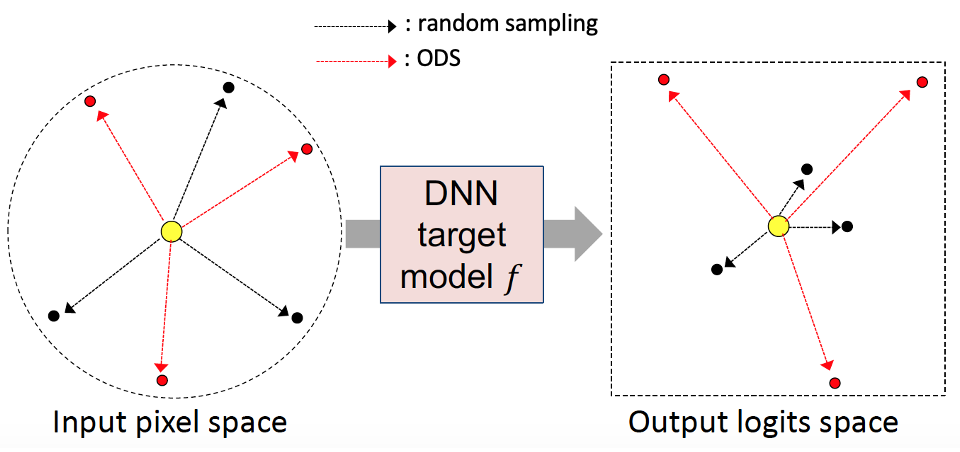

Diversity can be Transferred: Output Diversification for White- and Black-box Attacks

Authors: Yusuke Tashiro, Yang Song, Stefano Ermon

Contact: ytashiro@stanford.edu

Links: Paper | Website

Keywords: adversarial examples, deep learning, robustness

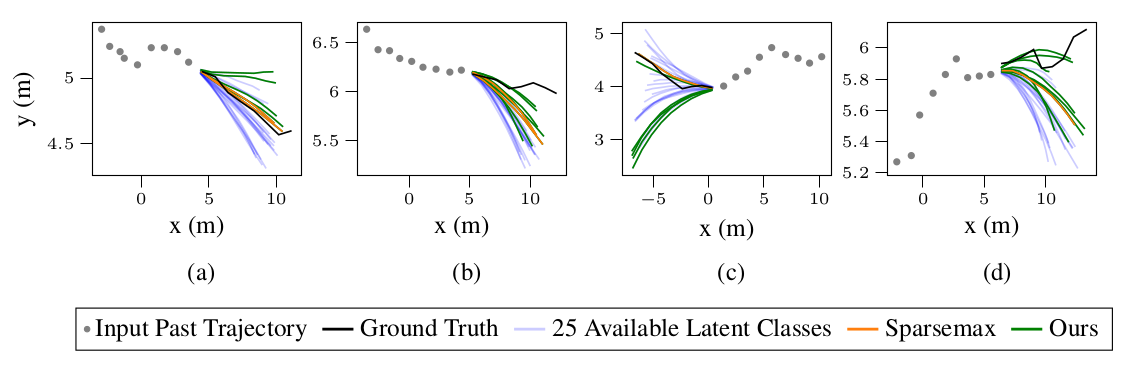

Evidential Sparsification of Multimodal Latent Spaces in Conditional Variational Autoencoders

Authors: Masha Itkina, Boris Ivanovic, Ransalu Senanayake, Mykel J. Kochenderfer, Marco Pavone

Contact: mitkina@stanford.edu

Links: Paper | Website

Keywords: sparse distributions, generative models, discrete latent spaces, behavior prediction, image generation

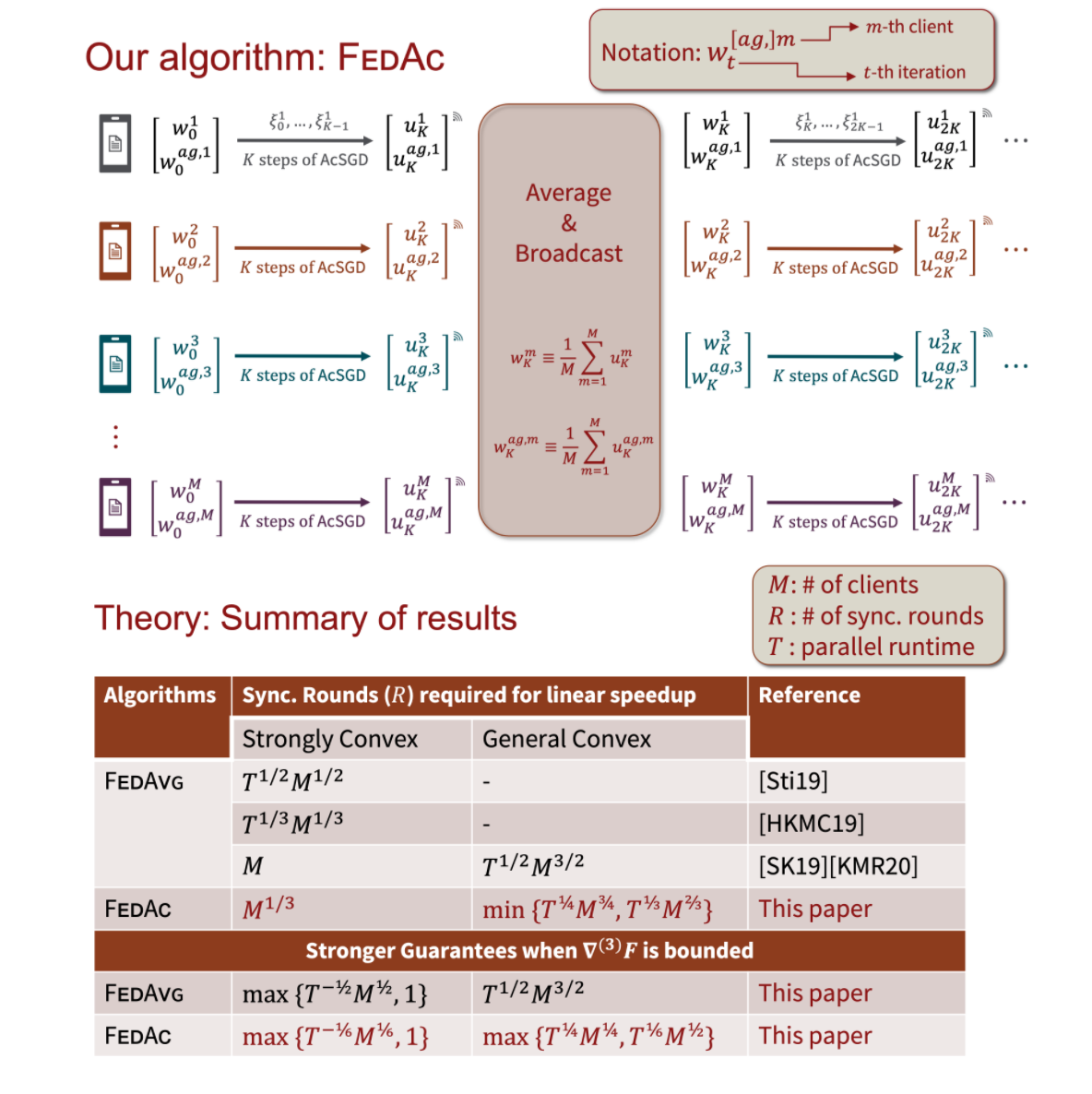

Federated Accelerated Stochastic Gradient Descent

Authors: Honglin Yuan, Tengyu Ma

Contact: yuanhl@stanford.edu

Award nominations: Best Paper Award at the Workshop on Federated Learning for User Privacy and Data Confidentiality in Conjunction with ICML 2020 (FL-ICML’20)

Links: Paper | Website

Keywords: federated learning, local sgd, acceleration, fedac

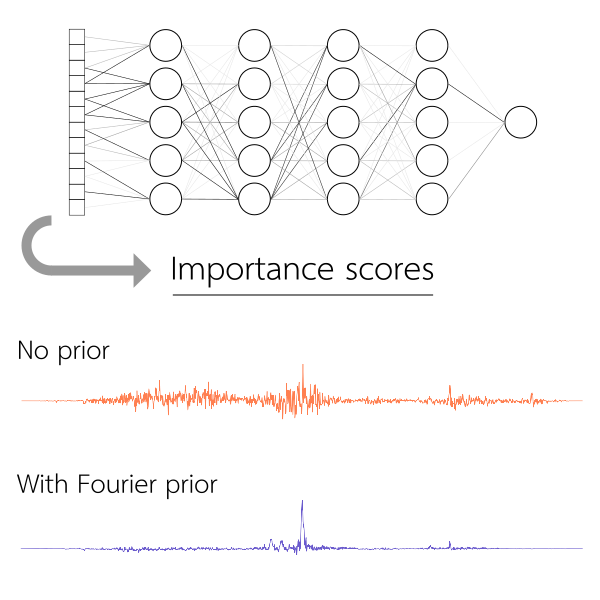

Fourier-transform-based attribution priors improve the interpretability and stability of deep learning models for genomics

Authors: Alex Michael Tseng, Avanti Shrikumar, Anshul Kundaje

Contact: amtseng@stanford.edu

Links: Paper | Website

Keywords: deep learning, interpretability, attribution prior, computational biology, genomics

From Trees to Continuous Embeddings and Back: Hyperbolic Hierarchical Clustering

Authors: Ines Chami, Albert Gu, Vaggos Chatziafratis, Christopher Ré

Contact: chami@stanford.edu

Links: Paper | Video | Website

Keywords: hierarchical clustering, hyperbolic embeddings

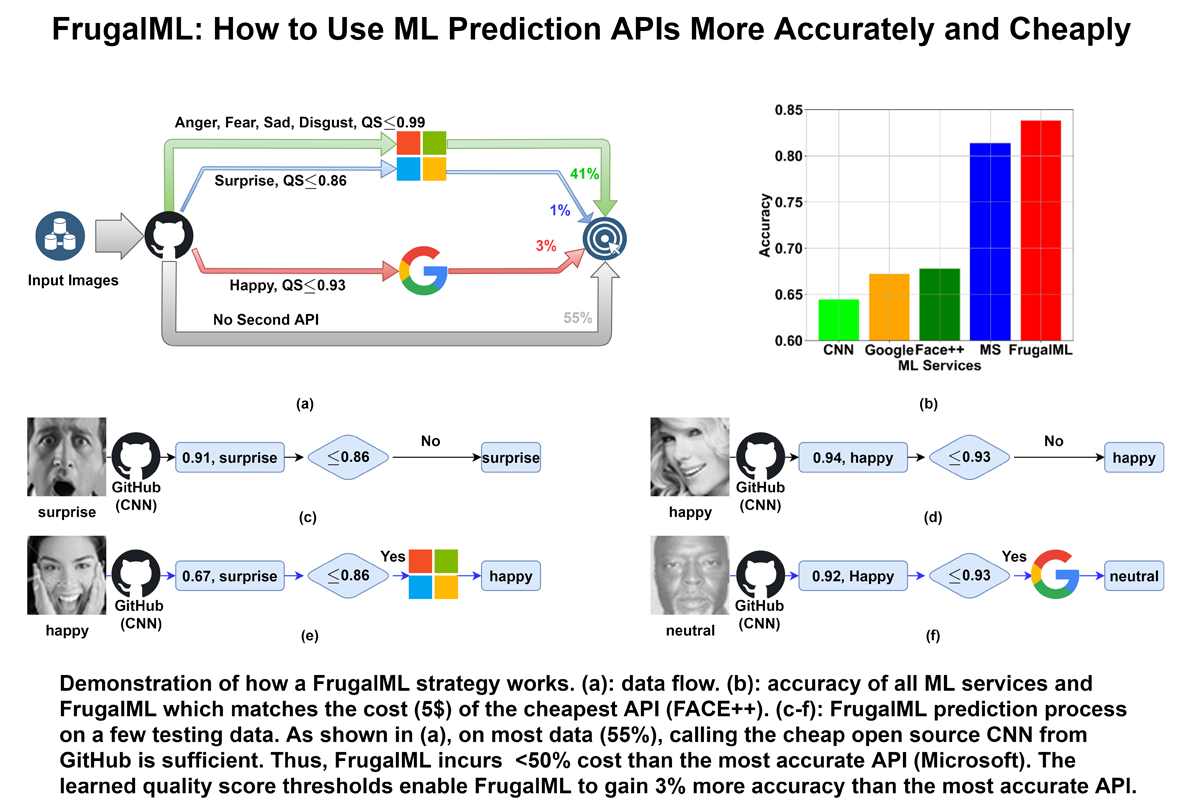

FrugalML: How to Use ML Prediction APIs More Accurately and Cheaply

Authors: Lingjiao Chen, Matei Zaharia, James Zou

Contact: lingjiao@stanford.edu

Links: Paper | Blog Post | Website

Keywords: machine learning as a service, ensemble learning, meta learning, systems for machine learning

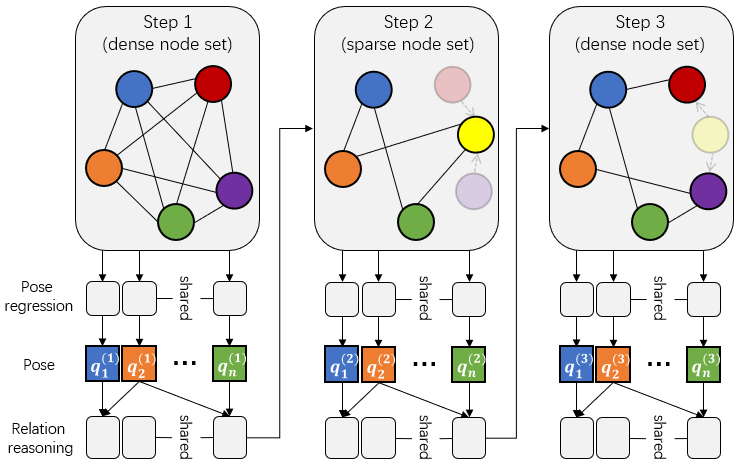

Generative 3D Part Assembly via Dynamic Graph Learning

Authors: Jialei Huang*, Guanqi Zhan*, Qingnan Fan, Kaichun Mo, Lin Shao, Baoquan Chen, Leonidas J. Guibas, Hao Dong

Contact: fqnchina@gmail.com, kaichun@cs.stanford.edu

Links: Paper | Website

Keywords: 3d part assembly, dynamic graph learning, graph neural network

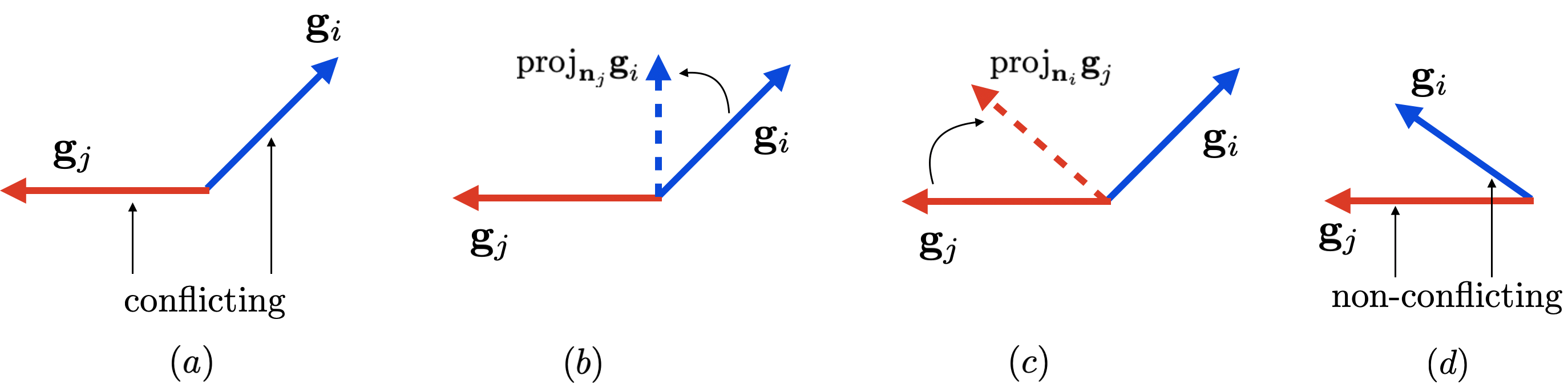

Gradient Surgery for Multi-Task Learning

Authors: Tianhe Yu, Saurabh Kumar, Abhishek Gupta, Sergey Levine, Karol Hausman, Chelsea Finn

Contact: tianheyu@cs.stanford.edu

Links: Paper | Website

Keywords: multi-task learning, deep reinforcement learning

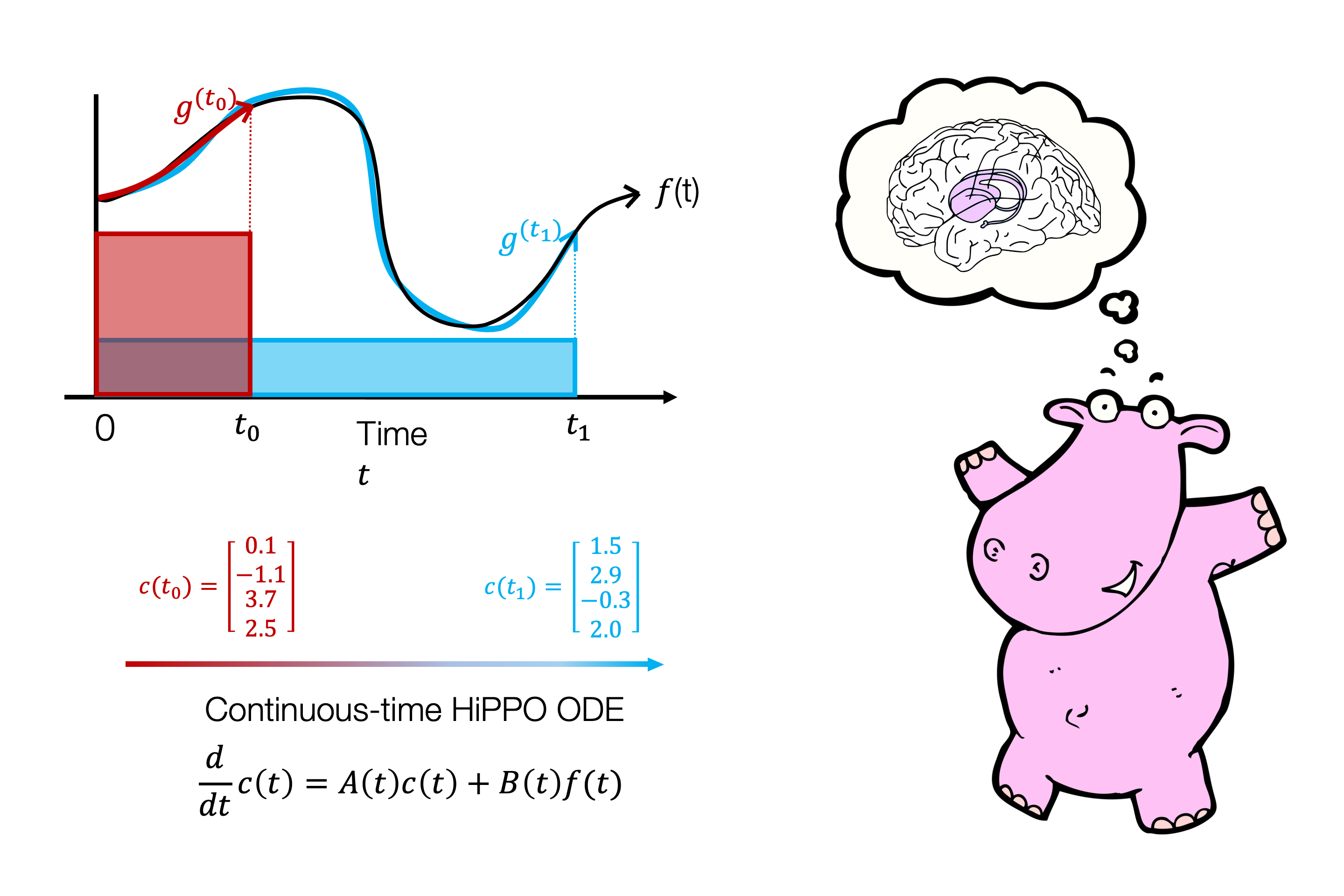

HiPPO: Recurrent Memory with Optimal Polynomial Projections

Authors: Albert Gu*, Tri Dao*, Stefano Ermon, Atri Rudra, Chris Ré

Contact: albertgu@stanford.edu, trid@stanford.edu

Links: Paper | Blog Post

Keywords: representation learning, time series, recurrent neural networks, lstm, orthogonal polynomials

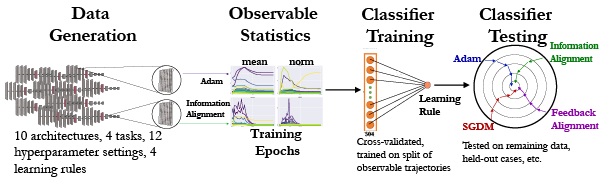

Identifying Learning Rules From Neural Network Observables

Authors: Aran Nayebi, Sanjana Srivastava, Surya Ganguli, Daniel L.K. Yamins

Contact: anayebi@stanford.edu

Award nominations: Spotlight Presentation

Links: Paper | Website

Keywords: computational neuroscience, learning rule, deep networks

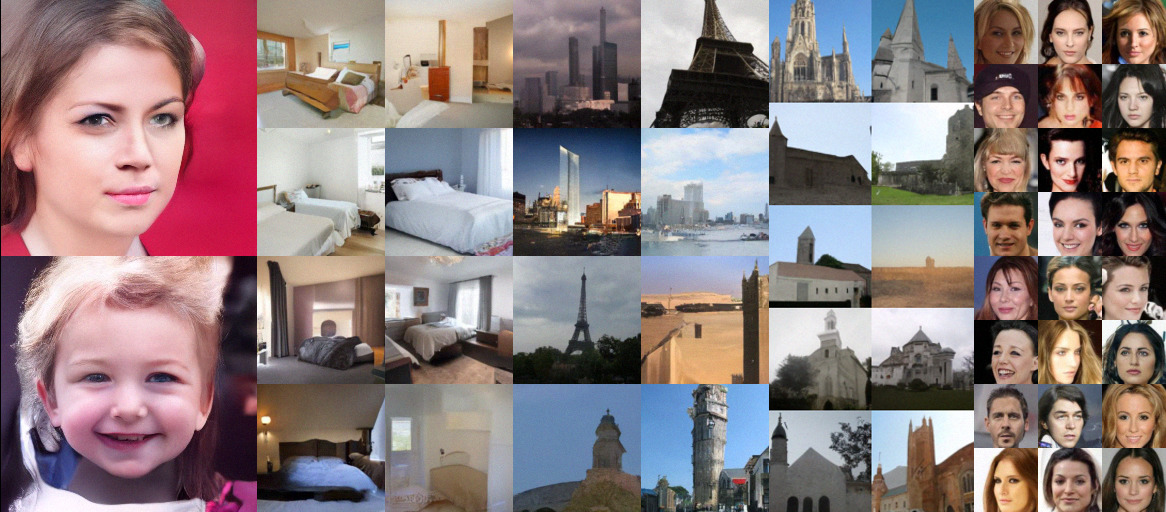

Improved Techniques for Training Score-Based Generative Models

Authors: Yang Song, Stefano Ermon

Contact: songyang@stanford.edu

Links: Paper

Keywords: score-based generative modeling, score matching, deep generative models

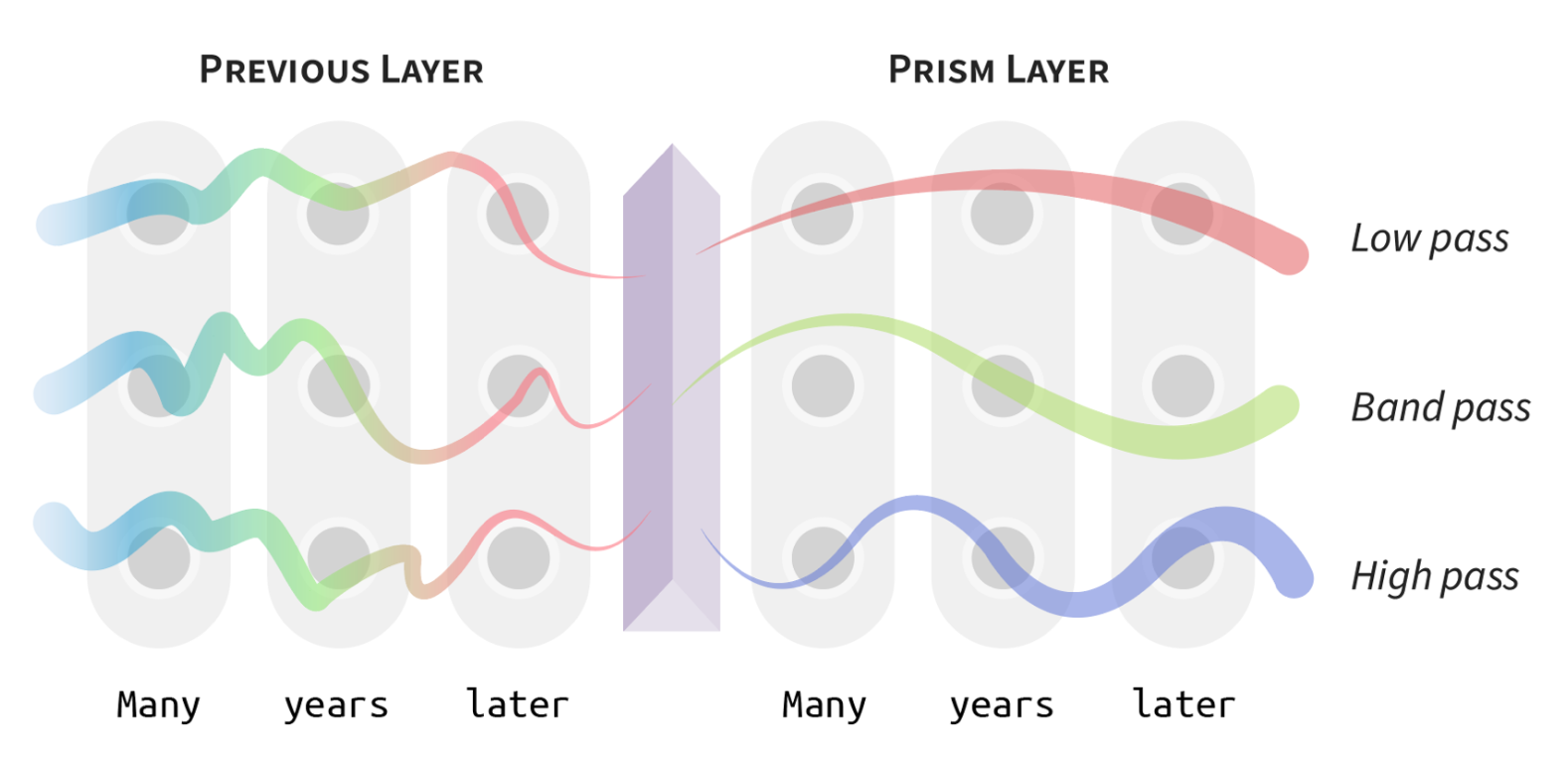

Language Through a Prism: A Spectral Approach for Multiscale Language Representations

Authors: Alex Tamkin, Dan Jurafsky, Noah Goodman

Contact: atamkin@stanford.edu

Links: Paper

Keywords: bert, signal processing, self-supervised learning, interpretability, multiscale

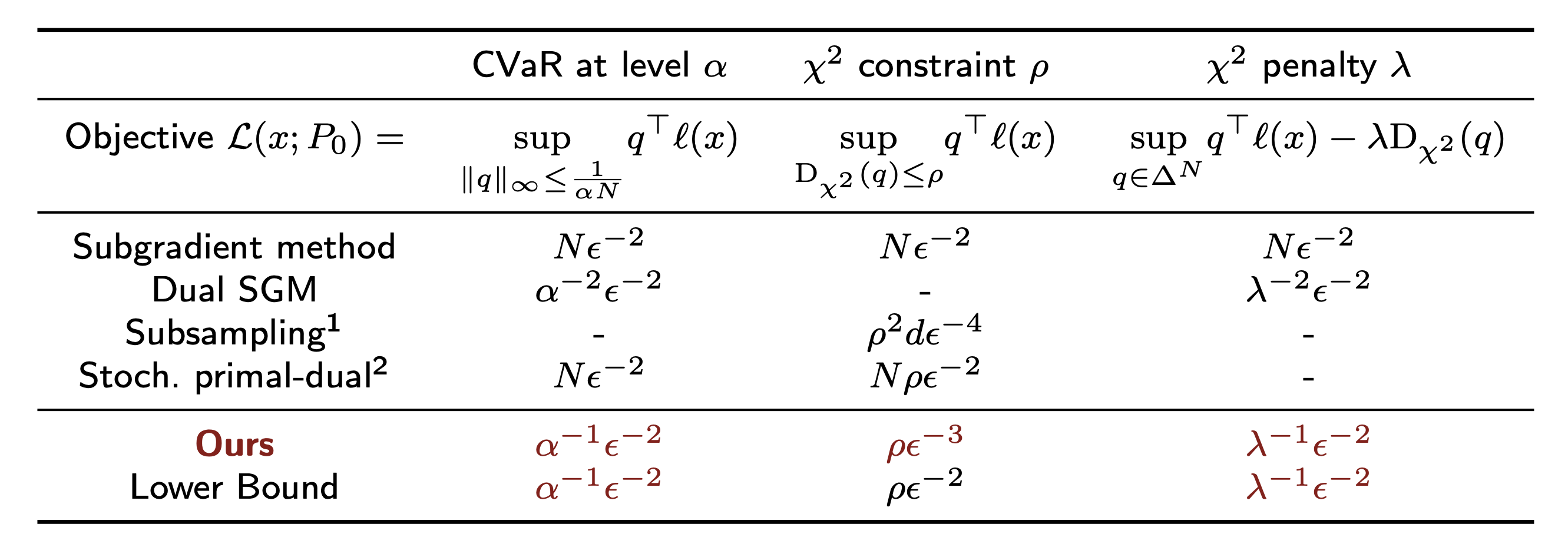

Large-Scale Methods for Distributionally Robust Optimization

Authors: Daniel Levy, Yair Carmon, John Duchi, Aaron Sidford

Contact: danilevy@stanford.edu

Links: Paper

Keywords: robustness, dro, optimization, large-scale, optimal

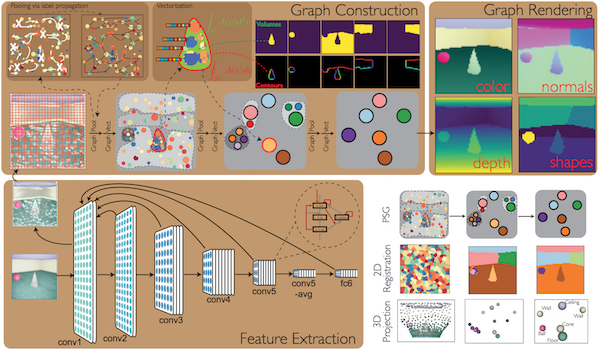

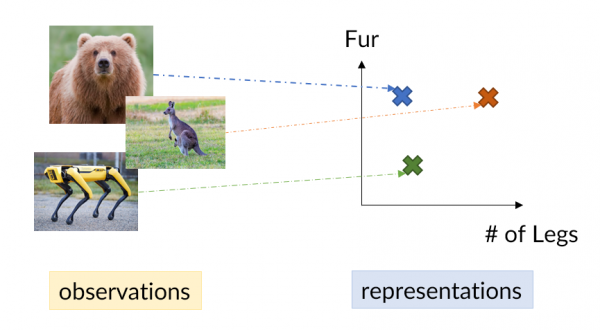

Learning Physical Graph Representations from Visual Scenes

Authors: Daniel Bear, Chaofei Fan, Damian Mrowca, Yunzhu Li, Seth Alter, Aran Nayebi, Jeremy Schwartz, Li Fei-Fei, Jiajun Wu, Josh Tenenbaum, Daniel L. Yamins

Contact: dbear@stanford.edu

Links: Paper | Blog Post | Website

Keywords: structure learning, graph learning, visual scene representations, unsupervised learning, unsupervised segmentation, object-centric representation, intuitive physics

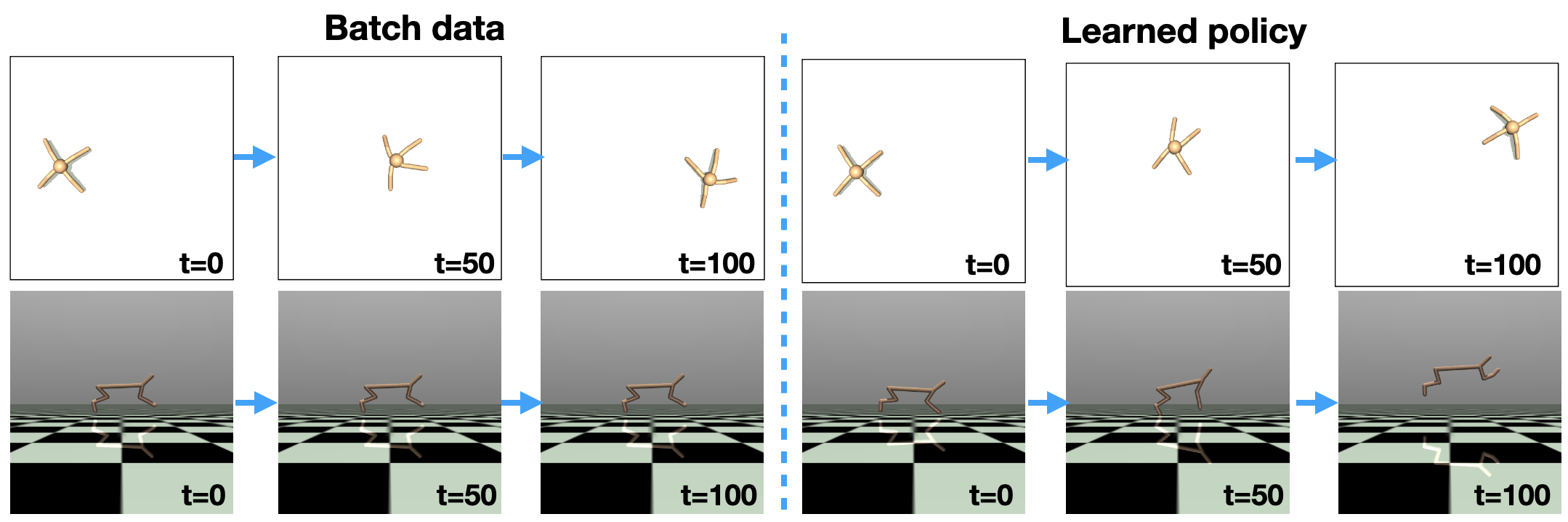

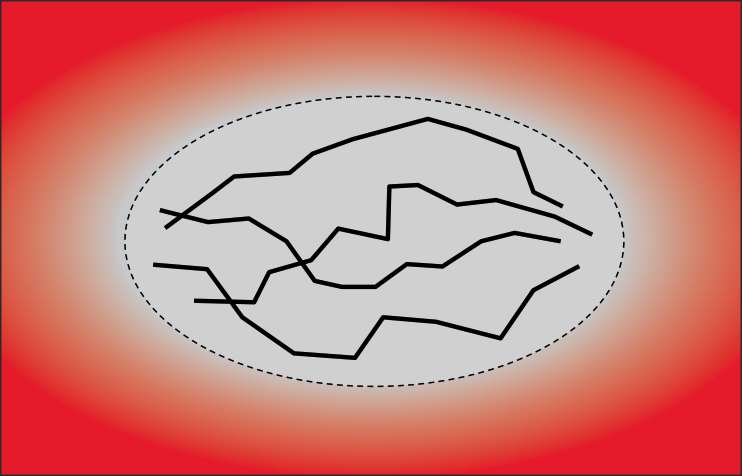

MOPO: Model-based Offline Policy Optimization

Authors: Tianhe Yu*, Garrett Thomas*, Lantao Yu, Stefano Ermon, James Zou, Sergey Levine, Chelsea Finn, Tengyu Ma

Contact: tianheyu@cs.stanford.edu, gwthomas@stanford.edu

Links: Paper | Website

Keywords: offline reinforcement learning, model-based reinforcement learning, batch reinforcement learning

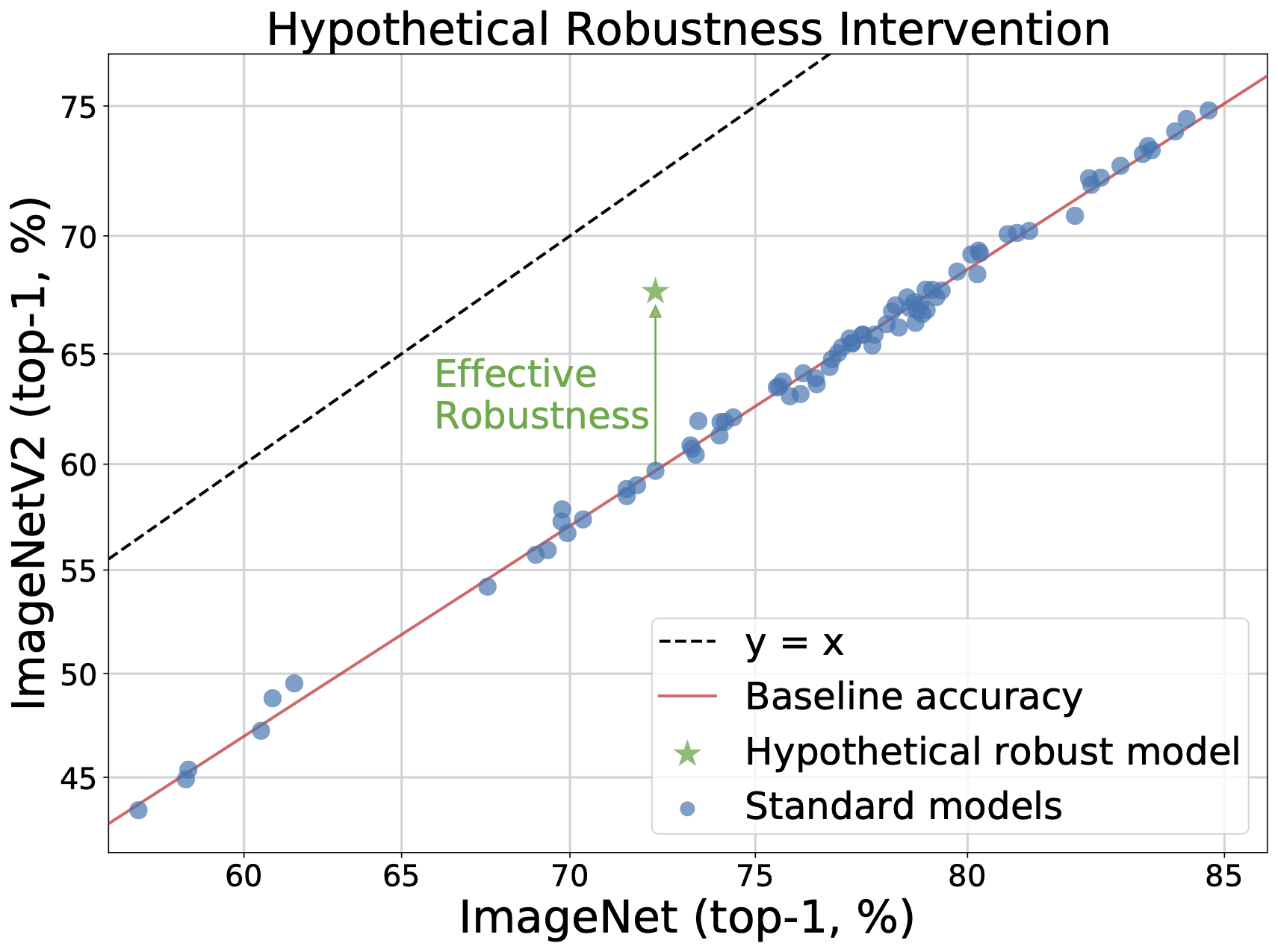

Measuring Robustness to Natural Distribution Shifts in Image Classification

Authors: Rohan Taori, Achal Dave, Vaishaal Shankar, Nicholas Carlini, Benjamin Recht, Ludwig Schmidt

Contact: rtaori@stanford.edu

Award nominations: Spotlight

Links: Paper | Website

Keywords: machine learning, robustness, image classification

Minibatch Stochastic Approximate Proximal Point Methods

Authors: Hilal Asi, Karan Chadha, Gary Cheng, John Duchi

Contact: chenggar@stanford.edu

Award nominations: Spotlight talk

Links: Paper

Keywords: stochastic optimization, sgd, aprox

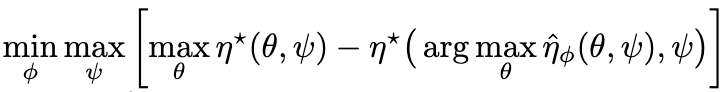

Model-based Adversarial Meta-Reinforcement Learning

Authors: Zichuan Lin, Garrett Thomas, Guangwen Yang, Tengyu Ma

Contact: lzcthu12@gmail.com, gwthomas@stanford.edu

Links: Paper

Keywords: model-based rl, meta-rl, minimax

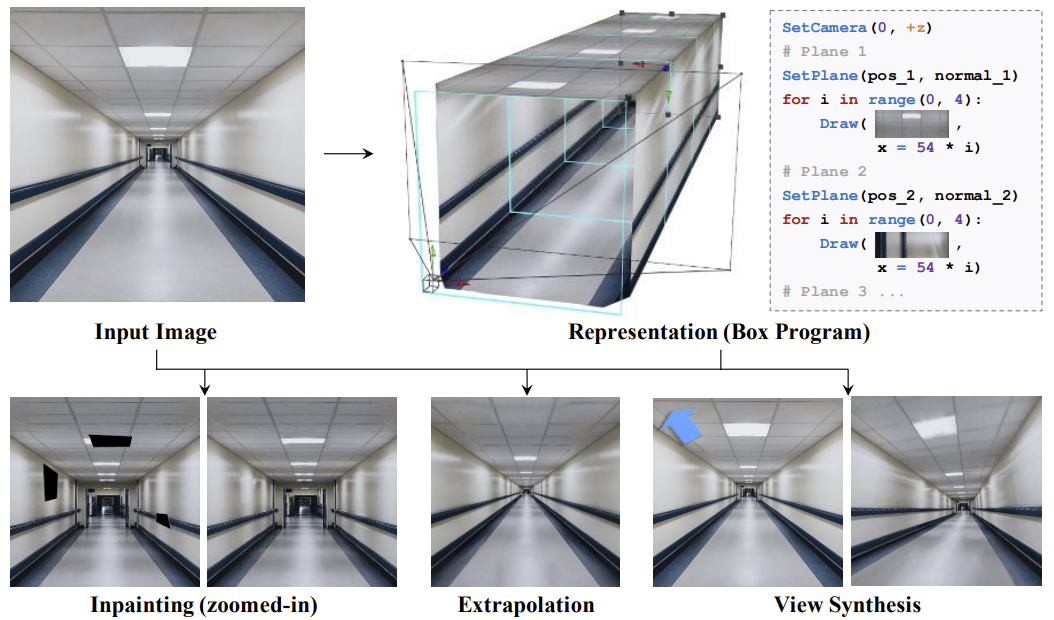

Multi-Plane Program Induction with 3D Box Priors

Authors: Yikai Li, Jiayuan Mao, Xiuming Zhang, William T. Freeman, Joshua B. Tenenbaum, Noah Snavely, Jiajun Wu

Contact: jiajunwu@cs.stanford.edu

Links: Paper | Video | Website

Keywords: visual program induction, 3d vision, image editing

Multi-label Contrastive Predictive Coding

Authors: Jiaming Song, Stefano Ermon

Contact: jiaming.tsong@gmail.com

Links: Paper

Keywords: representation learning, mutual information

Neuron Shapley: Discovering the Responsible Neurons

Authors: Amirata Ghorbani, James Zou

Contact: amiratag@stanford.edu

Links: Paper

Keywords: interpretability, deep learning, shapley value

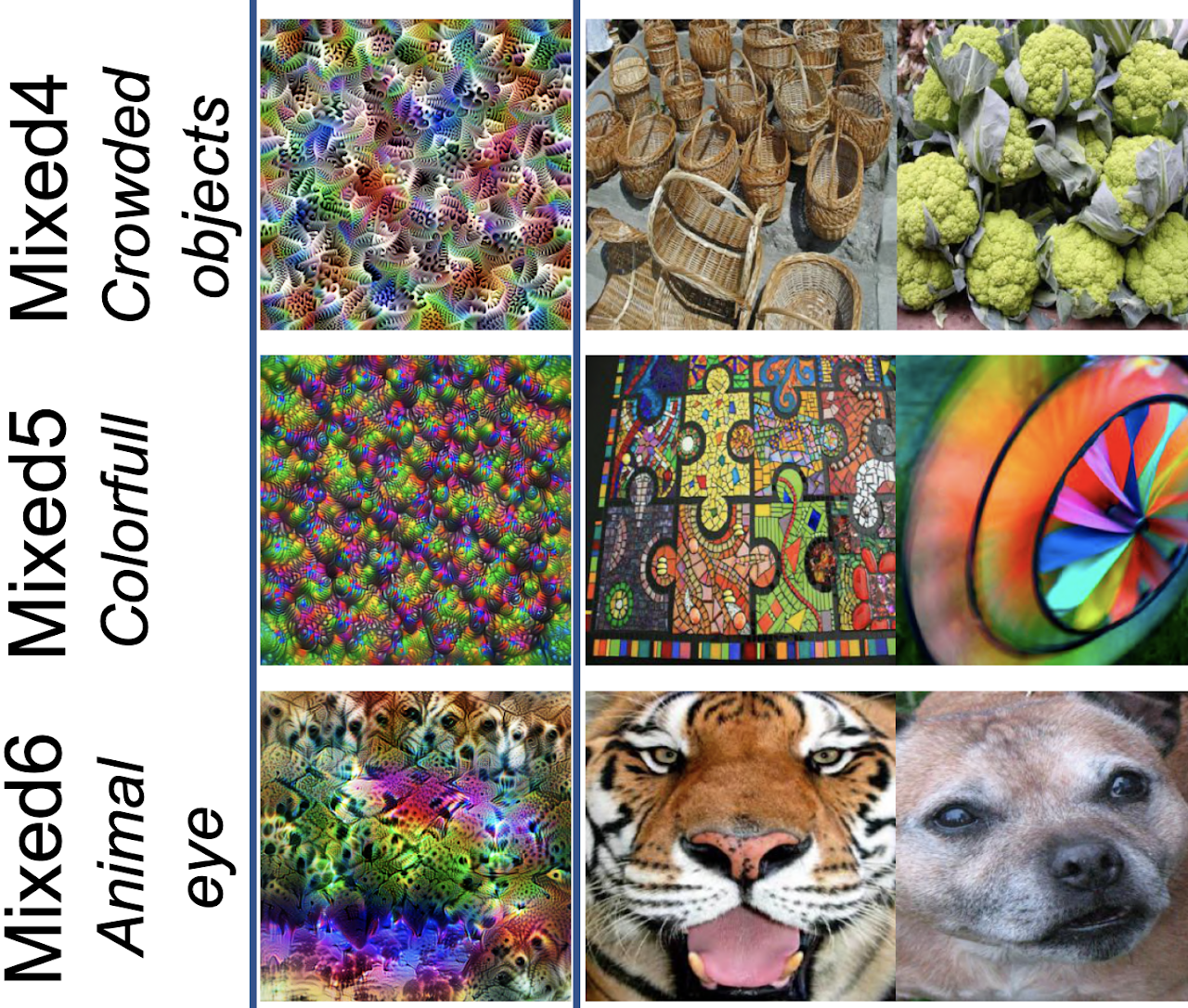

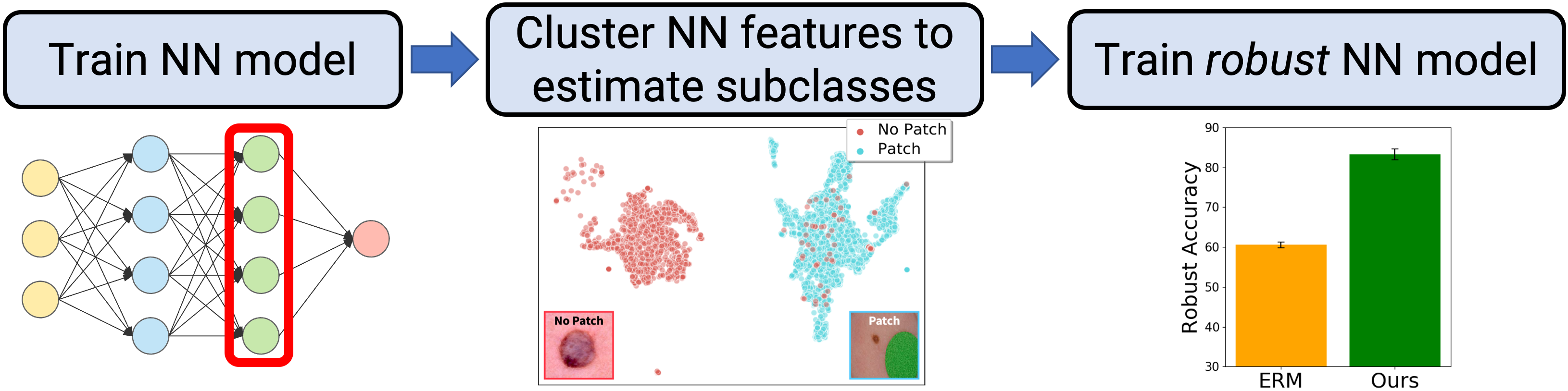

No Subclass Left Behind: Fine-Grained Robustness in Coarse-Grained Classification Problems

Authors: Nimit Sharad Sohoni, Jared Alexander Dunnmon, Geoffrey Angus, Albert Gu, Christopher Ré

Contact: nims@stanford.edu

Links: Paper | Blog Post | Video

Keywords: classification, robustness, clustering, neural feature representations

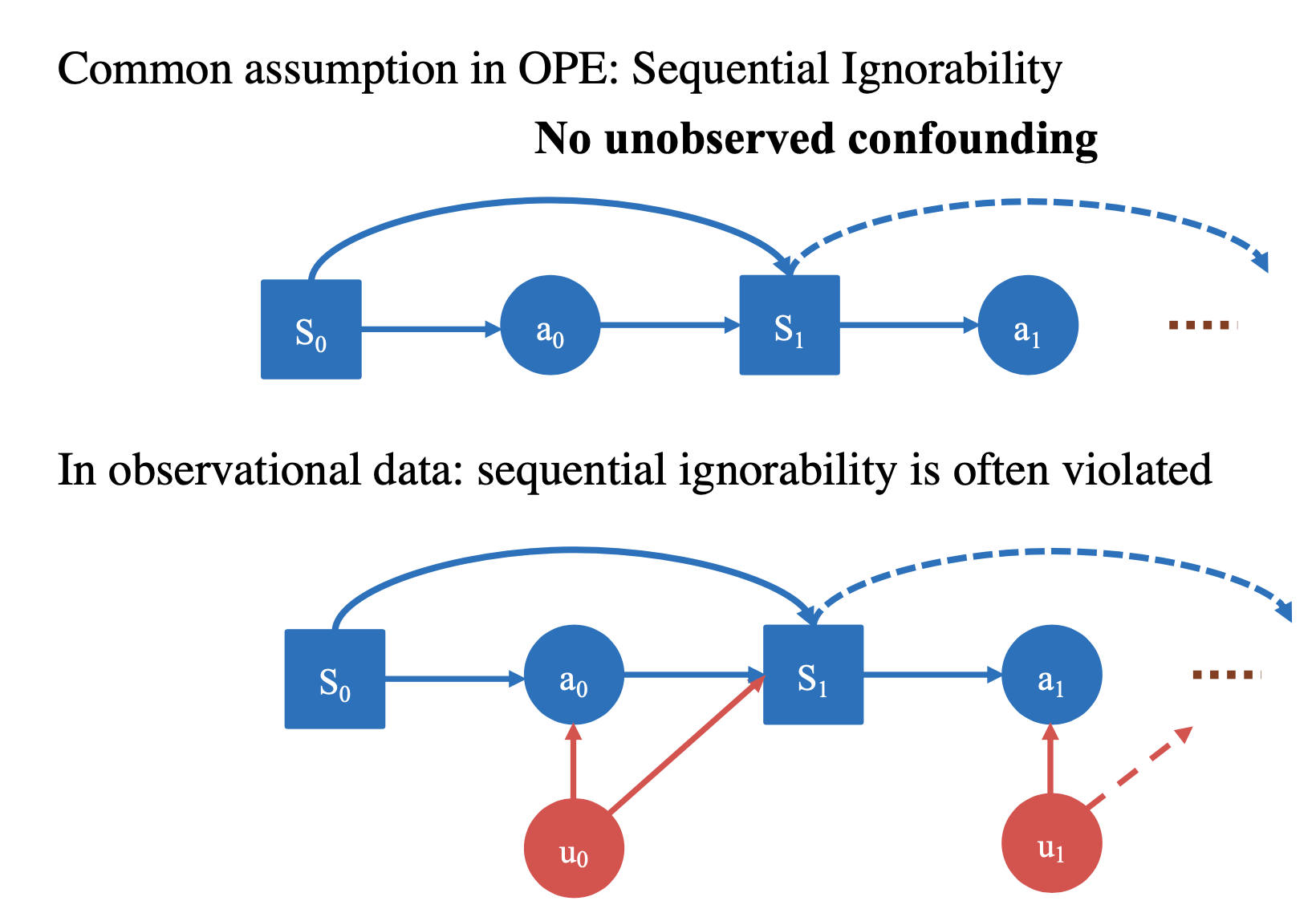

Off-policy Policy Evaluation For Sequential Decisions Under Unobserved Confounding

Authors: Hongseok Namkoong, Ramtin Keramati, Steve Yadlowsky, Emma Brunskill

Contact: keramati@stanford.edu

Links: Paper

Keywords: off-policy policy evaluation, unobserved confounding, reinforcement learning

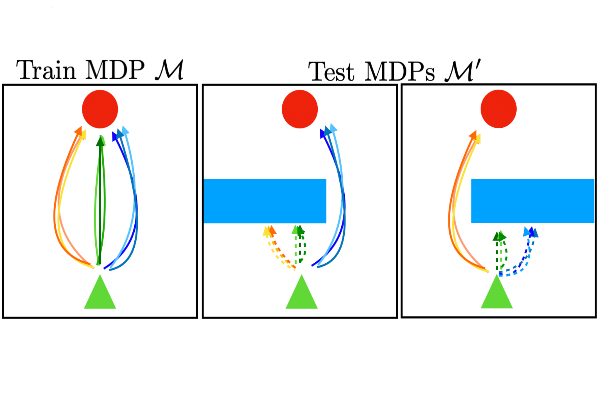

One Solution is Not All You Need: Few-Shot Extrapolation via Structured MaxEnt RL

Authors: Saurabh Kumar, Aviral Kumar, Sergey Levine, Chelsea Finn

Contact: szk@stanford.edu

Links: Paper

Keywords: robustness, diversity, reinforcement learning

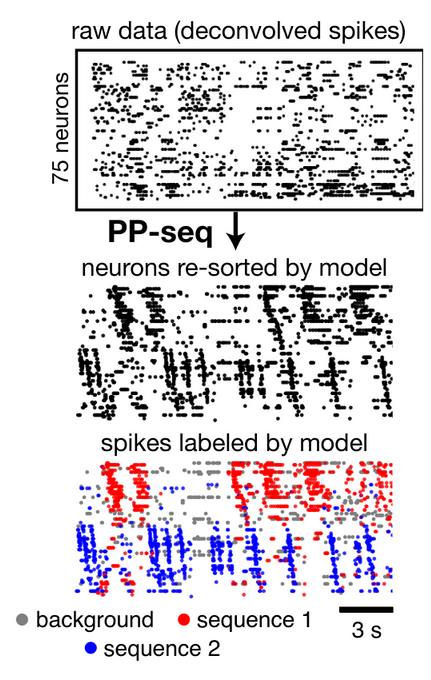

Point process models for sequence detection in high-dimensional neural spike trains

Authors: Alex H. Williams, Anthony Degleris, Yixin Wang, Scott W. Linderman

Contact: ahwillia@stanford.edu

Award nominations: Selected for Oral Presentation

Links: Paper | Website

Keywords: bayesian nonparametrics, unsupervised learning

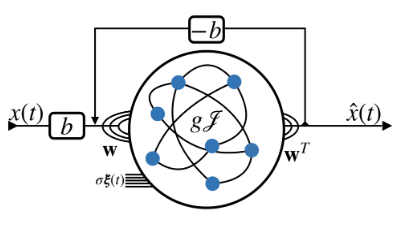

Predictive coding in balanced neural networks with noise, chaos and delays

Authors: Jonathan Kadmon, Jonathan Timcheck, Surya Ganguli

Contact: kadmonj@stanford.edu

Links: Paper

Keywords: neuroscience, predictive coding, chaos

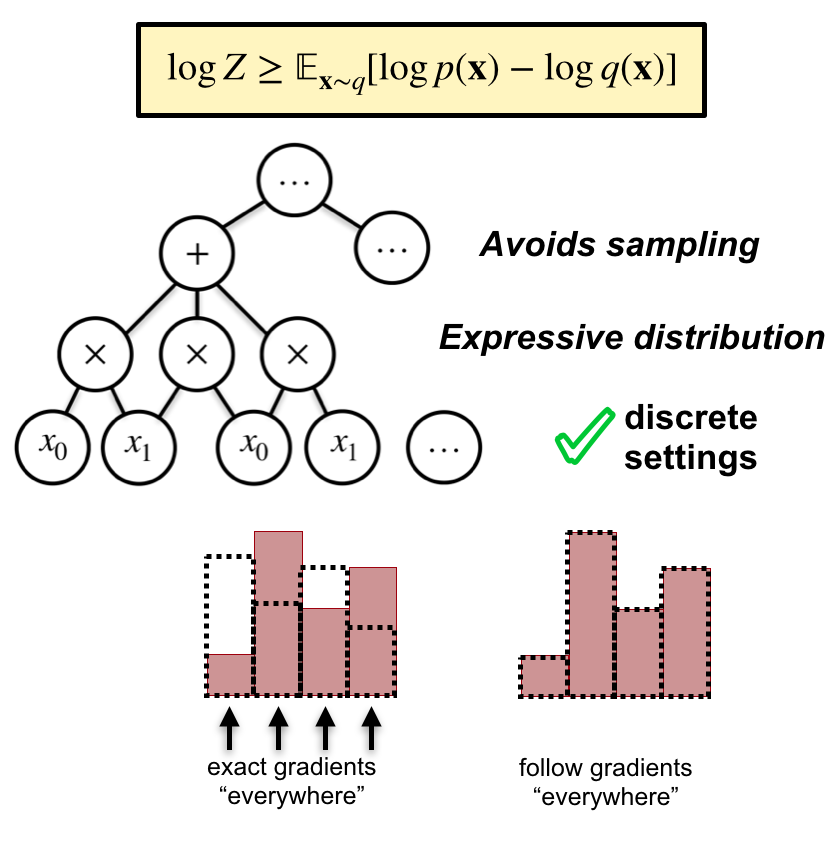

Probabilistic Circuits for Variational Inference in Discrete Graphical Models

Authors: Andy Shih, Stefano Ermon

Contact: andyshih@stanford.edu

Links: Paper

Keywords: variational inference, discrete, high-dimensions, sum product networks, probabilistic circuits, graphical models

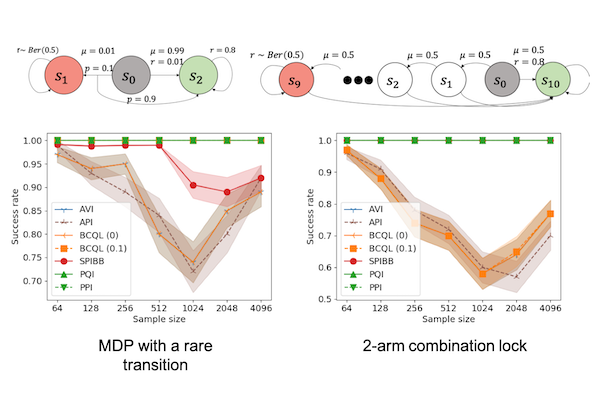

Provably Good Batch Off-Policy Reinforcement Learning Without Great Exploration

Authors: Yao Liu, Adith Swaminathan, Alekh Agarwal, Emma Brunskill

Contact: yaoliu@stanford.edu

Links: Paper

Keywords: reinforcement learning, off-policy, batch reinforcement learning

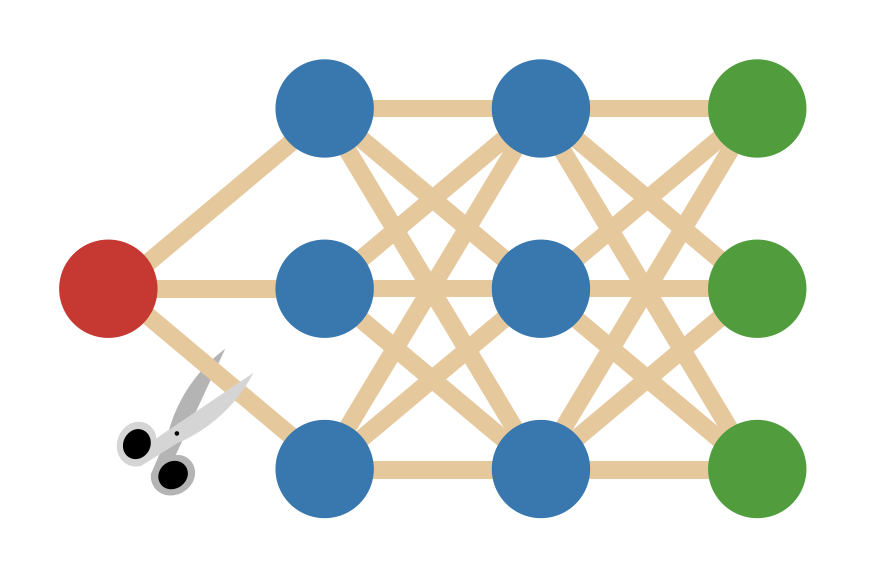

Pruning neural networks without any data by iteratively conserving synaptic flow

Authors: Hidenori Tanaka, Daniel Kunin, Daniel L. K. Yamins, Surya Ganguli

Contact: kunin@stanford.edu

Links: Paper | Video | Website

Keywords: network pruning, sparse initialization, lottery ticket

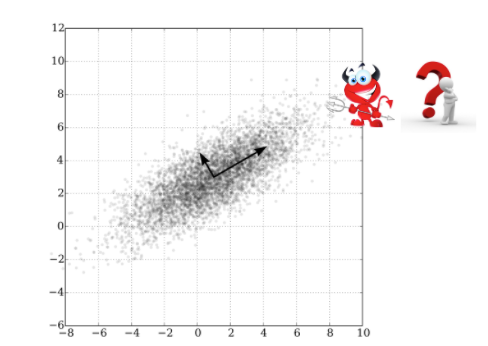

Robust Sub-Gaussian Principal Component Analysis and Width-Independent Schatten Packing

Authors: Arun Jambulapati, Jerry Li, Kevin Tian

Contact: kjtian@stanford.edu

Award nominations: Spotlight presentation

Links: Paper

Keywords: robust statistics, principal component analysis, positive semidefinite programming

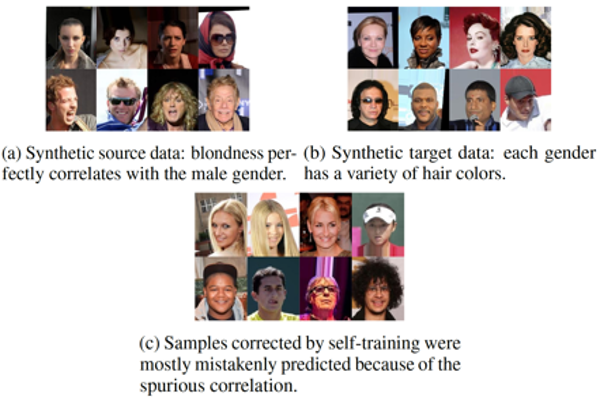

Self-training Avoids Using Spurious Features Under Domain Shift

Authors: Yining Chen*, Colin Wei*, Ananya Kumar, Tengyu Ma (*equal contribution)

Contact: cynnjjs@stanford.edu

Links: Paper

Keywords: self-training, pseudo-labeling, domain shift, robustness

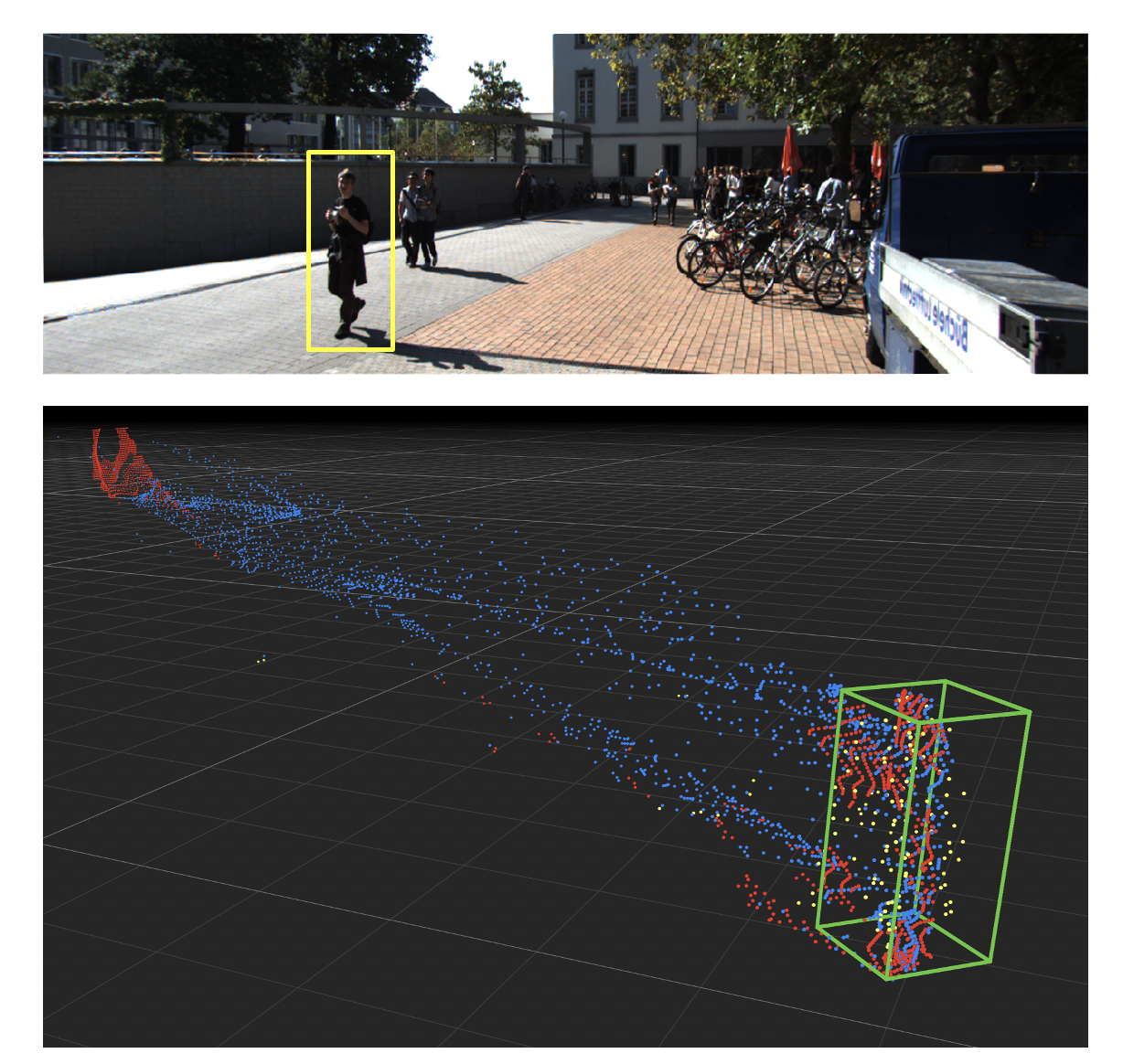

Wasserstein Distances for Stereo Disparity Estimation

Authors: Divyansh Garg, Yan Wang, Bharath Hariharan, Mark Campbell, Kilian Q. Weinberger, Wei-Lun Chao

Contact: divgarg@stanford.edu

Award nominations: Spotlight

Links: Paper | Video | Website

Keywords: depth estimation, disparity estimation, autonomous driving, 3d object detection, statistical learning

We look forward to seeing you at NeurIPS 2020!