NVIDIA GPUs will play a key role in interpreting data streaming in from the James Webb Space Telescope, with NASA preparing to release the first full-color images from the $10 billion scientific instrument next month.

The telescope’s iconic array of 18 interlocking hexagonal mirrors, which span a total of 21 feet 4 inches, will be able to peer far deeper into the universe, and deeper into the universe’s past, than any tool to date, unlocking discoveries for years to come.

GPU-powered deep learning will play a key role in several of the highest-profile efforts to process data from the revolutionary telescope positioned a million miles away from Earth, explains UC Santa Cruz Astronomy and Astrophysics Professor Brant Robertson.

“The JWST will really enable us to see the universe in a new way that we’ve never seen before,” said Robertson, who is playing a leading role in efforts to use AI to take advantage of the unprecedented opportunities JWST creates. “So it’s really exciting.”

High-Stakes Science

Late last year, Robertson was among the millions tensely following the run-up to the launch of the telescope, which was developed over the course of three decades and is loaded with instruments that define the leading edge of science.

The JWST’s Christmas Day launch went better than planned, allowing the telescope to slide into a Lagrange point (a kind of gravitational eddy in space that allows an object to “park” indefinitely) and extending the telescope’s usable life to more than 10 years.

“It’s working fantastically,” Robertson reports. “All of the signs are it’s going to be a tremendous facility for science.”

AI Powering New Discoveries

Robertson, who leads the computational astrophysics group at UC Santa Cruz, is among a new generation of scientists across a growing array of disciplines using AI to quickly classify the vast quantities of data streaming in from the latest generation of scientific instruments, often more than can be sifted in a human lifetime.

“What’s great about AI and machine learning is that you can train a model to actually make those decisions for you in a way that is less hands-on and more based on a set of metrics that you define,” Robertson said.

Working with Ryan Hausen, a Ph.D. student in UC Santa Cruz’s computer science department, Robertson helped create Morpheus, a deep learning framework that classifies astronomical objects, such as galaxies, on a pixel-by-pixel basis from the raw data streaming out of telescopes.

It quickly became a key tool for classifying images from the Hubble Space Telescope. Since then the team working on Morpheus has grown considerably, to roughly a half-dozen people at UC Santa Cruz.
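
To make the idea of pixel-by-pixel classification concrete, here is a minimal sketch in plain Python. It is not Morpheus’s actual code or API. It assumes a model has already produced a score per class for every pixel, and it shows one plausible way to turn those scores into a per-pixel label map and a single label for a detected object. The class names, the `classify_pixels` and `classify_object` helpers, the flux-weighted averaging and the toy data are all assumptions made for illustration.

```python
# Hypothetical class labels; the article does not list the categories Morpheus uses.
CLASSES = ["background", "disk", "spheroid"]


def classify_pixels(scores):
    """Return the index of the highest-scoring class for every pixel.

    scores[y][x] is a list holding one score per class for that pixel.
    """
    return [
        [max(range(len(px)), key=px.__getitem__) for px in row]
        for row in scores
    ]


def classify_object(scores, flux, mask):
    """Label one object by averaging per-pixel scores over its pixels.

    Each pixel inside the object's mask is weighted by its flux, so bright
    pixels count for more than faint ones (an assumed aggregation rule).
    """
    totals = [0.0] * len(CLASSES)
    weight = 0.0
    for y, row in enumerate(scores):
        for x, px in enumerate(row):
            if mask[y][x]:
                w = flux[y][x]
                weight += w
                for k, s in enumerate(px):
                    totals[k] += w * s
    if weight == 0:
        raise ValueError("mask selects no flux")
    mean = [t / weight for t in totals]
    return CLASSES[max(range(len(mean)), key=mean.__getitem__)]


# Toy 2x2 image: per-pixel class scores, pixel brightness, and an object mask.
scores = [
    [[0.9, 0.05, 0.05], [0.2, 0.7, 0.1]],
    [[0.1, 0.3, 0.6], [0.1, 0.6, 0.3]],
]
flux = [[0.1, 5.0], [1.0, 4.0]]
mask = [[False, True], [True, True]]

label_map = classify_pixels(scores)
object_label = classify_object(scores, flux, mask)
```

In this toy case the label map marks each pixel with its most likely class, while the object as a whole is labeled by what its brightest pixels suggest. A real pipeline would feed in model outputs for full telescope images rather than hand-written lists.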

Researchers are able to use NVIDIA GPUs to accelerate Morpheus across a variety of platforms, from an NVIDIA DGX Station desktop AI system to a small computing cluster equipped with several dozen NVIDIA V100 Tensor Core GPUs to sophisticated simulations that run on thousands of GPUs on the Summit supercomputer at Oak Ridge National Laboratory.

A Trio of High-Profile Projects

Now, with the first science data from the JWST due for release July 12, much more is coming.

“We’ll be applying that same framework to all of the major extragalactic JWST surveys that will be conducted in the first year,” Robertson said.

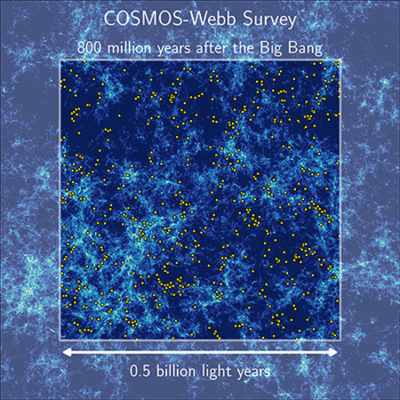

Robertson is among a team of nearly 50 researchers who will be mapping the earliest structure of the universe through the COSMOS-Webb program, the largest general observer program selected for JWST’s first year.

Over the course of more than 200 hours, the COSMOS-Webb program will survey half a million galaxies with multiband, high-resolution, near-infrared imaging and an unprecedented 32,000 galaxies in mid-infrared.

“The COSMOS-Webb project is the largest contiguous area survey that will be executed with JWST for the foreseeable future,” Robertson said.

Robertson also serves on the steering committee for the JWST Advanced Deep Extragalactic Survey, or JADES, to produce infrared imaging and spectroscopy of unprecedented depth. Robertson and his team will put Morpheus to work classifying the survey’s findings.

Robertson and his team are also involved with another survey, dubbed PRIMER, where they will bring AI and machine learning classification capabilities to the effort.

From Studying the Stars to Studying Ourselves

All these efforts promise to help humanity survey — and understand — far more of our universe than ever before. But perhaps the most surprising application Robertson has found for Morpheus is here at home.

“We’ve actually trained Morpheus to go back into satellite data and automatically count up how much sea ice is present in the North Atlantic over time,” Robertson said, adding it could help scientists better understand and model climate change.

As a result, a tool developed to help us better understand the history of our universe may soon help us better predict the future of our own small place in it.
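
The article does not describe how the sea ice counting is done, so the following is only a rough sketch of the general idea: once every pixel of a satellite image has a class label, the ice extent for a given date is the number of ice pixels multiplied by the ground area each pixel covers. The function names, the `ICE` label value, the 25 square kilometer pixel area and the sample frames are all hypothetical.

```python
ICE = 1  # hypothetical label value for "sea ice" in a per-pixel label map


def ice_area_km2(labels, ice_class, pixel_area_km2):
    """Count pixels labeled as ice and convert the count to an area."""
    n_ice = sum(row.count(ice_class) for row in labels)
    return n_ice * pixel_area_km2


def ice_time_series(frames, ice_class, pixel_area_km2):
    """Map each date to its ice area, ordered by date string."""
    return {
        date: ice_area_km2(labels, ice_class, pixel_area_km2)
        for date, labels in sorted(frames.items())
    }


# Toy label maps for two dates (1 = ice, 0 = open water), made up for illustration.
frames = {
    "2020-09": [[1, 0], [0, 0]],
    "2020-03": [[1, 1], [1, 0]],
}

series = ice_time_series(frames, ICE, pixel_area_km2=25.0)
```

With the made-up frames above, the series holds a larger ice area for the March frame than for the September one, which is the kind of trend over time that the quote describes tracking.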

FEATURED IMAGE CREDIT: NASA

The post Stunning Insights from James Webb Space Telescope Are Coming, Thanks to GPU-Powered Deep Learning appeared first on NVIDIA Blog.