Posted by Yonglong Tian, Student Researcher and Chen Sun, Staff Research Scientist, Google Research

Most people take for granted the ability to view an object from several different angles and still recognize that it’s the same object: a dog viewed from the front is still a dog when viewed from the side. While people do this naturally, computer scientists need to explicitly enable machines to learn view-invariant representations, with the goal of obtaining robust data representations that retain the information useful to downstream tasks.

Of course, manually annotated training data can be used to learn these representations. However, in many cases such annotations aren’t available, which has given rise to a series of self-supervised and cross-modal supervised approaches that do not require manual annotation. Currently, a popular paradigm for training with such data is contrastive multiview learning, where two views of the same scene (for example, different image channels, augmentations of the same image, or video and text pairs) tend to converge in representation space while two views of different scenes diverge. Despite the success of these approaches, one important question remains: “If one doesn’t have annotated labels readily available, how does one select the views to which the representations should be invariant?” In other words, how does one identify an object using information that resides in the pixels of the image itself, while still remaining accurate when that image is viewed from disparate viewpoints?

In “What makes for good views for contrastive learning”, we use theoretical and empirical analysis to better understand the importance of view selection, and argue that one should reduce the mutual information between views while keeping task-relevant information intact. To verify this hypothesis, we devise unsupervised and semi-supervised frameworks that learn effective views by aiming to reduce their mutual information. We also consider data augmentation as a way to reduce mutual information, and show that increasing data augmentation indeed leads to decreasing mutual information while improving downstream classification accuracy. To encourage further research in this space, we have open-sourced the code and pre-trained models.

The InfoMin Hypothesis

The goal of contrastive multiview learning is to learn a parametric encoder whose output representations can be used to discriminate between pairs of views with the same identity and pairs with different identities. The amount and type of information shared between the views determines how well the resulting model performs on downstream tasks. We hypothesize that the views that yield the best results should discard as much information in the input as possible except for the task-relevant information (e.g., object labels), which we call the InfoMin principle.
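One common way to instantiate such a discrimination objective is the InfoNCE loss, which this post also uses as a mutual information estimator. The following is a minimal NumPy sketch, not the released implementation: the function name `info_nce`, the `temperature` parameter, and the use of in-batch negatives are assumptions made for illustration. It returns both the loss and the corresponding lower bound, log(n) minus the loss, for a batch of n positive pairs.

```python
import numpy as np

def info_nce(z1, z2, temperature=0.1):
    """Return (loss, mi_lower_bound) for a batch of paired view embeddings.

    z1, z2: arrays of shape (n, d). Row i of z1 and row i of z2 come from
    the same image (a positive pair); all other rows act as negatives.
    NOTE: illustrative sketch; names and defaults are assumptions.
    """
    # L2-normalise so the dot product is a cosine similarity.
    z1 = z1 / np.linalg.norm(z1, axis=1, keepdims=True)
    z2 = z2 / np.linalg.norm(z2, axis=1, keepdims=True)
    logits = z1 @ z2.T / temperature  # (n, n) similarity scores
    # Numerically stable log-softmax over each row.
    logits = logits - logits.max(axis=1, keepdims=True)
    log_prob = logits - np.log(np.exp(logits).sum(axis=1, keepdims=True))
    # Cross-entropy where the correct "class" for row i is column i.
    loss = -np.mean(np.diag(log_prob))
    # Lower bound on the mutual information between the two views.
    bound = np.log(len(z1)) - loss
    return loss, bound
```

Because the loss is non-negative, the bound can never exceed log(n), so larger batches are needed to measure larger amounts of shared information.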

Consider the example below in which two patches of the same image represent the different “views”. The training objective is to identify that the two views belong to the same image. It is undesirable to have views that share too much information, for example, where low-level color and texture cues can be exploited as “shortcuts” (left), or to have views that share too little information to identify that they belong to the same image (right). Rather, views at the “sweet spot” share the information related to downstream tasks, such as patches corresponding to different parts of the panda for an object classification task (center).

A Unified View on Contrastive Learning

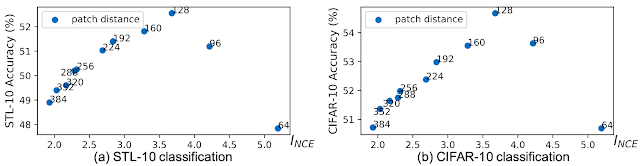

We design several sets of experiments to verify the InfoMin hypothesis, motivated by the fact that there are simple ways to control the mutual information shared between views without any supervision. For example, we can sample different patches from the same images, and reduce their mutual information simply by increasing the distance between the patches. Here, we estimate the mutual information using InfoNCE (INCE), which provides a quantitative lower bound on the mutual information. Indeed, we observe a reverse U-shape curve: as mutual information is reduced, the downstream task accuracy first increases and then begins to decrease.

Downstream classification accuracy on STL-10 (left) and CIFAR-10 (right) by applying linear classifiers on representations learned with contrastive learning. As in the previous illustration, the views are sampled as different patches from the same images. Increasing the Euclidean distance between patches leads to decreasing mutual information. A reverse U-shape curve between classification accuracy and INCE (patch distance) is observed.
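The patch-distance experiment can be illustrated with a small sampling routine. This is a hedged sketch rather than the authors' code: `sample_patch_pair` and its parameters are hypothetical, and the offset between patches is restricted to a horizontal or vertical shift so that the Euclidean distance between patch corners equals `distance` exactly.

```python
import numpy as np

def sample_patch_pair(image, patch_size, distance, rng):
    """Crop two square patches whose top-left corners are `distance` pixels apart.

    image: array of shape (H, W, C). The offset is axis-aligned (horizontal
    or vertical, chosen at random) to keep the sketch simple.
    NOTE: hypothetical helper for illustration only.
    """
    h, w = image.shape[:2]
    horizontal = rng.random() < 0.5
    dy, dx = (0, distance) if horizontal else (distance, 0)
    if patch_size + dy > h or patch_size + dx > w:
        raise ValueError("patch_size + distance does not fit in the image")
    # Top-left corner of the first patch, chosen so both patches fit.
    y = rng.integers(0, h - patch_size - dy + 1)
    x = rng.integers(0, w - patch_size - dx + 1)
    view1 = image[y:y + patch_size, x:x + patch_size]
    view2 = image[y + dy:y + dy + patch_size, x + dx:x + dx + patch_size]
    return view1, view2
```

Sweeping `distance` from zero (identical patches) upward gives a family of view pairs that share progressively less information, which is the axis along which the accuracy curve is traced.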

Furthermore, we demonstrate that several state-of-the-art contrastive learning methods (InstDis, MoCo, CMC, PIRL, SimCLR and CPC) can be unified through the perspective of view selection: despite the differences in architecture, objective and engineering details, all of these methods create two views that implicitly follow the InfoMin hypothesis, where the information shared between views is controlled by the strength of data augmentation. Motivated by this, we propose a new set of data augmentations, which outperforms the prior state of the art, SimCLR, by nearly 4% on the ImageNet linear readout benchmark. We also found that transferring our unsupervised pre-trained models to object detection and instance segmentation consistently outperforms ImageNet pre-training.

Learning to Generate Views

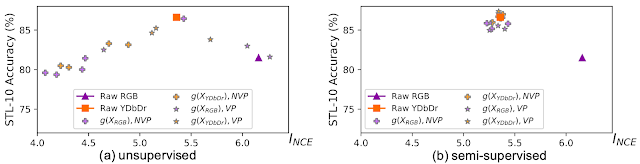

In our work, we design unsupervised and semi-supervised methods that synthesize novel views following the InfoMin hypothesis. We learn flow-based models that transfer natural color spaces into novel color spaces, from which we split the channels to get views. For the unsupervised setup, the view generators are optimized to minimize the InfoNCE bound between views. As shown in the results below, we observe a similar reverse U-shape trend while minimizing the InfoNCE bound.

View generators learned by unsupervised (left) and semi-supervised (right) objectives.

To reach the sweet spot without overly minimizing mutual information, we can use the semi-supervised setup and guide the view generator to retain label information. As expected, all learned views are now centered around the sweet spot, no matter what the input color space is.
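The channel-splitting step described in this section can be sketched as follows. This is only an illustration under stated assumptions: a fixed random orthogonal 3x3 matrix stands in for the learned flow-based model (both are invertible, but nothing here is trained), and splitting into one channel versus the remaining two is one possible choice of split. The helper names `random_orthogonal` and `split_views` are hypothetical.

```python
import numpy as np

def random_orthogonal(rng, n=3):
    """Draw a random n x n orthogonal matrix via QR decomposition."""
    q, _ = np.linalg.qr(rng.standard_normal((n, n)))
    return q

def split_views(image, transform):
    """Map an (H, W, 3) image to a new color space and split its channels.

    Returns a 1-channel view and a 2-channel view of the same image.
    NOTE: `transform` is a stand-in for a learned invertible view generator.
    """
    transformed = image @ transform.T  # apply the 3x3 map to every pixel
    return transformed[..., :1], transformed[..., 1:]
```

Because the transform is invertible, no information about the image is lost overall; the transform only changes how that information is distributed between the two views, which is the quantity a learned view generator would adjust.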

Code and Pretrained Models

To accelerate research in self-supervised contrastive learning, we are excited to share the code and pretrained models of InfoMin with the academic community. They can be found here.

Acknowledgements

The core team includes Yonglong Tian, Chen Sun, Ben Poole, Dilip Krishnan, Cordelia Schmid and Phillip Isola. We would like to thank Kevin Murphy for insightful discussion; Lucas Beyer for feedback on the manuscript; and the Google Cloud team for computation support.