Generative AI is the latest turn in the fast-changing digital landscape. One of the groundbreaking innovations making it possible goes by a relatively new name: the SuperNIC.

What Is a SuperNIC?

A SuperNIC is a new class of network accelerator designed to supercharge hyperscale AI workloads in Ethernet-based clouds. It provides lightning-fast network connectivity for GPU-to-GPU communication, reaching speeds of 400Gb/s using remote direct memory access (RDMA) over Converged Ethernet (RoCE) technology.

SuperNICs combine the following unique attributes:

- High-speed packet reordering to ensure that data packets are delivered and processed in the order they were originally transmitted, even when they arrive out of order. This maintains the sequential integrity of the data flow.

- Advanced congestion control using real-time telemetry data and network-aware algorithms to manage and prevent congestion in AI networks.

- Programmable compute on the input/output (I/O) path to enable customization and extensibility of network infrastructure in AI cloud data centers.

- Power-efficient, low-profile design to accommodate AI workloads within constrained power budgets.

- Full-stack AI optimization, including compute, networking, storage, system software, communication libraries and application frameworks.
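The packet-reordering attribute above can be illustrated with a classic reorder buffer: packets that arrive early are held back until every earlier sequence number has arrived, then released in order. The following is a minimal Python sketch under simplifying assumptions: each packet carries an integer sequence number, and the class name `ReorderBuffer` and its `receive` method are hypothetical. A production NIC must also bound its buffer and recover from lost packets; this sketch does neither, and it is not a description of how the BlueField-3 SuperNIC implements reordering.

```python
from typing import Dict, List


class ReorderBuffer:
    """Toy reorder buffer that releases packets strictly in sequence order.

    Hypothetical illustration: no buffer limit and no loss timeout.
    """

    def __init__(self, first_seq: int = 0) -> None:
        self.next_seq = first_seq            # next sequence number we may deliver
        self.pending: Dict[int, bytes] = {}  # early (out-of-order) packets held back

    def receive(self, seq: int, payload: bytes) -> List[bytes]:
        """Accept one packet and return any payloads now deliverable in order."""
        if seq < self.next_seq or seq in self.pending:
            return []  # duplicate or already delivered: drop it
        self.pending[seq] = payload
        delivered: List[bytes] = []
        # Drain the contiguous run of packets starting at next_seq.
        while self.next_seq in self.pending:
            delivered.append(self.pending.pop(self.next_seq))
            self.next_seq += 1
        return delivered


if __name__ == "__main__":
    rb = ReorderBuffer()
    arrivals = [(2, b"C"), (0, b"A"), (3, b"D"), (1, b"B")]  # out of order
    out: List[bytes] = []
    for seq, data in arrivals:
        out.extend(rb.receive(seq, data))
    print(out)  # [b'A', b'B', b'C', b'D']
```

The design choice here is a dictionary keyed by sequence number, which keeps the sketch short; hardware implementations typically use fixed-size structures instead.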

NVIDIA recently unveiled the world’s first SuperNIC tailored for AI computing, based on the BlueField-3 networking platform. It’s a part of the NVIDIA Spectrum-X platform, where it integrates seamlessly with the Spectrum-4 Ethernet switch system.

Together, the NVIDIA BlueField-3 SuperNIC and Spectrum-4 switch system form the foundation of an accelerated computing fabric specifically designed to optimize AI workloads. Spectrum-X delivers consistently high network efficiency, outperforming traditional Ethernet environments.

“In a world where AI is driving the next wave of technological innovation, the BlueField-3 SuperNIC is a vital cog in the machinery,” said Yael Shenhav, vice president of DPU and NIC products at NVIDIA. “SuperNICs ensure that your AI workloads are executed with efficiency and speed, making them foundational components for enabling the future of AI computing.”

The Evolving Landscape of AI and Networking

The AI field is undergoing a seismic shift, thanks to the advent of generative AI and large language models. These powerful technologies have unlocked new possibilities, enabling computers to handle tasks that were previously out of reach.

AI success relies heavily on GPU-accelerated computing to process mountains of data, train large AI models and enable real-time inference. This compute power has expanded what AI can do, but it has also put new demands on Ethernet cloud networks.

Traditional Ethernet, the technology that underpins internet infrastructure, was conceived to offer broad compatibility and connect loosely coupled applications. It wasn’t designed to handle the demanding computational needs of modern AI workloads, which involve tightly coupled parallel processing, rapid data transfers and unique communication patterns — all of which demand optimized network connectivity.

Traditional network interface cards (NICs) were designed for general-purpose computing, universal data transmission and interoperability. They were never designed to cope with the unique challenges posed by the computational intensity of AI workloads.

Standard NICs lack the requisite features and capabilities for efficient data transfer, low latency and the deterministic performance crucial for AI tasks. SuperNICs, on the other hand, are purpose-built for modern AI workloads.

SuperNIC Advantages in AI Computing Environments

Data processing units (DPUs) deliver a wealth of advanced features, offering high throughput, low-latency network connectivity and more. Since their introduction in 2020, DPUs have gained popularity in the realm of cloud computing, primarily due to their capacity to offload, accelerate and isolate data center infrastructure processing.

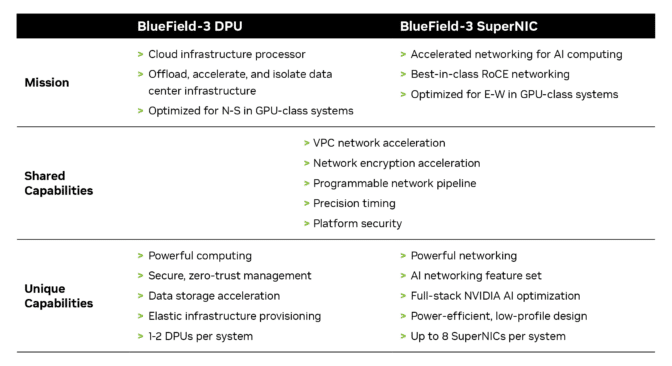

Although DPUs and SuperNICs share a range of features and capabilities, SuperNICs are uniquely optimized for accelerating networks for AI. The chart below shows how they compare:

Communication flows in distributed AI training and inference depend heavily on available network bandwidth. SuperNICs, distinguished by their low-profile design, scale more effectively than DPUs, delivering 400Gb/s of network bandwidth per GPU.

The 1:1 ratio between GPUs and SuperNICs within a system gives each GPU its own dedicated network link, which can significantly improve AI workload efficiency, leading to greater productivity and better outcomes for enterprises.

Because the sole purpose of SuperNICs is to accelerate networking for AI cloud computing, they achieve this goal using less computing power than a DPU, which requires substantial computational resources to offload applications from a host CPU.

The reduced computing requirements also translate to lower power consumption, which is especially crucial in systems containing up to eight SuperNICs.
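The per-GPU figure and the 1:1 ratio make aggregate system bandwidth easy to estimate. The back-of-the-envelope Python sketch below assumes every SuperNIC runs at its nominal 400Gb/s line rate and ignores protocol overhead, so it yields an upper bound rather than measured throughput; the function names are hypothetical.

```python
GBPS_PER_SUPERNIC = 400  # per-SuperNIC network bandwidth cited above, in Gb/s


def aggregate_bandwidth_gbps(num_gpus: int, nics_per_gpu: int = 1) -> int:
    """Nominal total network bandwidth (Gb/s) for a fixed GPU:SuperNIC ratio."""
    return num_gpus * nics_per_gpu * GBPS_PER_SUPERNIC


def gbps_to_gigabytes_per_s(gbps: float) -> float:
    """Convert gigabits per second to gigabytes per second (8 bits per byte)."""
    return gbps / 8


if __name__ == "__main__":
    total = aggregate_bandwidth_gbps(num_gpus=8)   # eight GPUs, eight SuperNICs
    print(total, "Gb/s")                           # 3200 Gb/s, i.e. 3.2 Tb/s
    print(gbps_to_gigabytes_per_s(total), "GB/s")  # 400.0 GB/s
```

For a system with eight SuperNICs, that is 8 × 400Gb/s = 3.2Tb/s of nominal network bandwidth, which is why per-NIC power draw matters at this scale.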

Additional distinguishing features of the SuperNIC include its dedicated AI networking capabilities. When tightly integrated with an AI-optimized NVIDIA Spectrum-4 switch, it offers adaptive routing, out-of-order packet handling and optimized congestion control. These advanced features are instrumental in accelerating Ethernet AI cloud environments.
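To make "congestion control" more concrete, here is a minimal sketch of additive-increase/multiplicative-decrease (AIMD), a textbook congestion-control technique in which a sender ramps its rate up slowly while the network is clear and cuts it sharply when congestion is signaled. This is a stand-in for illustration, not the algorithm used by the BlueField-3 SuperNIC or Spectrum-4: the `congested` flag is a hypothetical placeholder for whatever real-time telemetry signal the network provides, and all parameter values are assumptions.

```python
from dataclasses import dataclass

LINE_RATE_GBPS = 400.0  # per-port ceiling, taken from the 400Gb/s figure above


@dataclass
class AimdRateController:
    """Generic AIMD sender-rate controller (hypothetical parameters)."""

    rate_gbps: float = LINE_RATE_GBPS
    increase_step_gbps: float = 10.0  # additive increase per uncongested interval
    decrease_factor: float = 0.5      # multiplicative cut when congestion is signaled
    min_rate_gbps: float = 1.0        # floor so the flow never stalls completely

    def on_interval(self, congested: bool) -> float:
        """Update the sending rate from one interval's congestion signal."""
        if congested:
            self.rate_gbps = max(self.min_rate_gbps,
                                 self.rate_gbps * self.decrease_factor)
        else:
            self.rate_gbps = min(LINE_RATE_GBPS,
                                 self.rate_gbps + self.increase_step_gbps)
        return self.rate_gbps


if __name__ == "__main__":
    ctl = AimdRateController()
    signals = [False, True, False, False, True, False]
    print([ctl.on_interval(s) for s in signals])
    # [400.0, 200.0, 210.0, 220.0, 110.0, 120.0]
```

The asymmetry (slow increase, fast decrease) is what lets many senders sharing a link converge toward a fair share without persistently overflowing switch buffers.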

Revolutionizing AI Cloud Computing

The NVIDIA BlueField-3 SuperNIC offers several benefits that make it key for AI-ready infrastructure:

- Peak AI workload efficiency: The BlueField-3 SuperNIC is purpose-built for network-intensive, massively parallel computing, making it ideal for AI workloads. It ensures that AI tasks run efficiently — without bottlenecks.

- Consistent and predictable performance: In multi-tenant data centers where numerous tasks are processed simultaneously, the BlueField-3 SuperNIC ensures that each job and tenant’s performance is isolated, predictable and unaffected by other network activities.

- Secure multi-tenant cloud infrastructure: Security is a top priority, especially in data centers handling sensitive information. The BlueField-3 SuperNIC maintains high security levels, enabling multiple tenants to coexist while keeping data and processing isolated.

- Extensible network infrastructure: The BlueField-3 SuperNIC isn’t limited in scope — it’s highly flexible and adaptable to a myriad of other network infrastructure needs.

- Broad server manufacturer support: The BlueField-3 SuperNIC fits seamlessly into most enterprise-class servers without excessive power consumption in data centers.

Learn more about NVIDIA BlueField-3 SuperNICs, including how they integrate across NVIDIA’s data center platforms, in the whitepaper: Next-Generation Networking for the Next Wave of AI.