Interest in generative AI continues to grow as new models gain more capabilities. With the latest advancements, even enthusiasts without a developer background can start tapping into these models.

With popular applications like Langflow — a low-code, visual platform for designing custom AI workflows — AI enthusiasts can use simple, no-code user interfaces (UIs) to chain generative AI models. And with native integration for Ollama, users can now create local AI workflows and run them at no cost and with complete privacy, powered by NVIDIA GeForce RTX and RTX PRO GPUs.

Visual Workflows for Generative AI

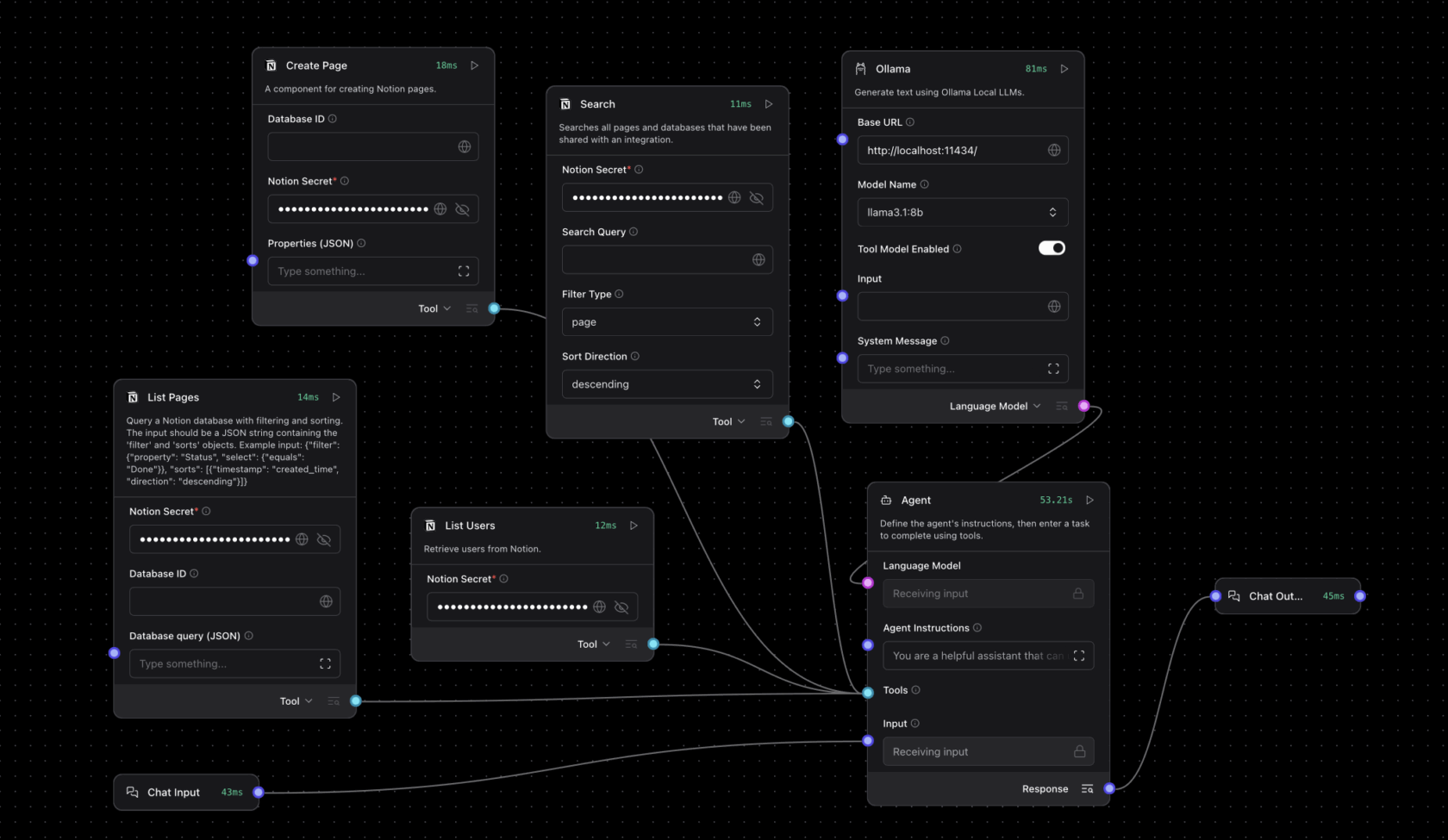

Langflow offers an easy-to-use, canvas-style interface where the components of a generative AI workflow, such as large language models (LLMs), tools, memory stores and control logic, can be connected through a simple drag-and-drop UI.

This allows complex AI workflows to be built and modified without manual scripting, easing the development of agents capable of decision-making and multistep actions. AI enthusiasts can iterate on these workflows without prior coding expertise.

Unlike apps limited to running a single-turn LLM query, Langflow can build advanced AI workflows that behave like intelligent collaborators, capable of analyzing files, retrieving knowledge, executing functions and responding contextually to dynamic inputs.

Langflow can run models from the cloud or locally, with full RTX GPU acceleration through Ollama. Running workflows locally provides multiple key benefits:

- Data privacy: Inputs, files and prompts remain confined to the device.

- Low costs and no API keys: Because cloud application programming interface (API) access is not required, there are no token restrictions, service subscriptions or usage costs associated with running the AI models.

- Performance: RTX GPUs enable low-latency, high-throughput inference, even with long context windows.

- Offline functionality: Local AI workflows are accessible without the internet.

Creating Local Agents With Langflow and Ollama

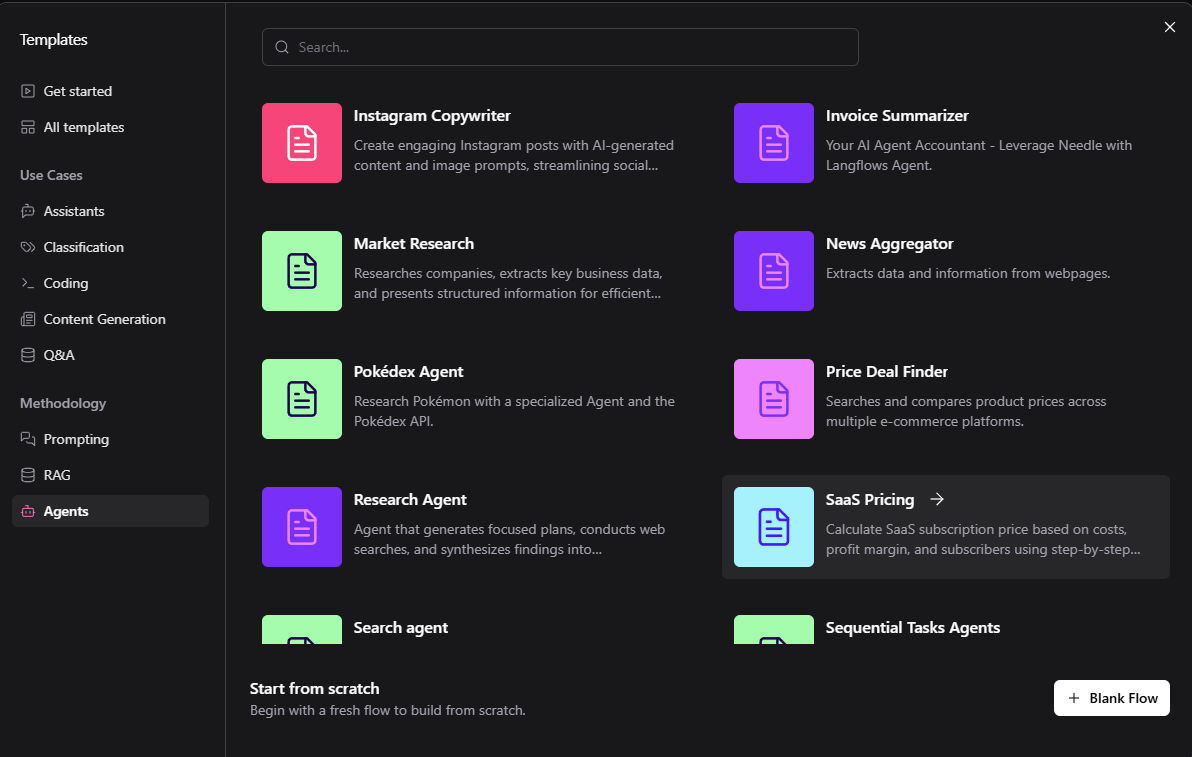

Getting started with Ollama within Langflow is simple. Built-in starters are available for use cases ranging from travel agents to purchase assistants. The default templates typically run in the cloud for testing, but they can be customized to run locally on RTX GPUs with Langflow.

To build a local workflow:

- Install the Langflow desktop app for Windows.

- Install and run Ollama, then launch the preferred model (Llama 3.1 8B or Qwen3 4B is recommended for a first workflow).

- Run Langflow and select a starter.

- Replace cloud endpoints with the local Ollama runtime. For agentic workflows, set the agent's language model to Custom, drag an Ollama node onto the canvas and connect the Ollama node's Language Model output to the agent node's custom model input.

Templates can be modified and expanded — such as by adding system commands, local file search or structured outputs — to meet advanced automation and assistant use cases.
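Structured outputs are useful when a later component needs fields rather than free text. As a hedged sketch: Ollama's chat API accepts a JSON schema in its `format` field to constrain the reply. The schema and field names below are hypothetical, loosely modeled on the travel-itinerary starter, and the parser only checks required keys rather than performing full schema validation.

```python
import json

# Hypothetical schema for one step of a travel-planning workflow; the field
# names are illustrative and not taken from any Langflow template.
ITINERARY_SCHEMA = {
    "type": "object",
    "properties": {
        "destination": {"type": "string"},
        "nights": {"type": "integer"},
        "dietary_restrictions": {"type": "array", "items": {"type": "string"}},
    },
    "required": ["destination", "nights", "dietary_restrictions"],
}


def build_structured_payload(model: str, prompt: str, schema: dict) -> dict:
    """Request body for Ollama's /api/chat that constrains the reply to `schema`."""
    return {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "format": schema,  # JSON schema passed through Ollama's `format` field
        "stream": False,
    }


def parse_structured_reply(response: dict, schema: dict) -> dict:
    """Decode the JSON reply and check only that the required keys are present."""
    data = json.loads(response["message"]["content"])
    missing = [key for key in schema.get("required", []) if key not in data]
    if missing:
        raise ValueError(f"reply is missing required fields: {missing}")
    return data
```

Checking the parsed reply before passing it downstream means a malformed answer fails loudly at one component instead of silently corrupting later steps.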

Watch this step-by-step walkthrough from the Langflow team:

Get Started

Below are two sample projects to start exploring.

Create a personal travel itinerary agent: Input all travel requirements — including desired restaurant reservations, travelers’ dietary restrictions and more — to automatically find and arrange accommodations, transport, food and entertainment.

Expand Notion’s capabilities: Notion, an AI workspace application for organizing projects, can be expanded with AI models that automatically input meeting notes, update the status of projects based on Slack chats or email, and send out project or meeting summaries.

RTX Remix Adds Model Context Protocol, Unlocking Agent Mods

RTX Remix — an open-source platform that allows modders to enhance materials with generative AI tools and create stunning RTX remasters that feature full ray tracing and neural rendering technologies — is adding support for Model Context Protocol (MCP) with Langflow.

Langflow nodes with MCP give users a direct interface for working with RTX Remix — enabling modders to build modding assistants capable of intelligently interacting with Remix documentation and mod functions.

To help modders get started, NVIDIA’s Langflow Remix template includes:

- A retrieval-augmented generation module with RTX Remix documentation.

- Real-time access to Remix documentation for Q&A-style support.

- An action module via MCP that supports direct function execution inside RTX Remix, including asset replacement, metadata updates and automated mod interactions.
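The retrieval-augmented generation module works by finding the documentation passages most relevant to a question and placing them in the model's prompt. The toy sketch below illustrates that retrieve-then-prompt step. It deliberately swaps in simple term-overlap scoring instead of the embedding search a real RAG pipeline would typically use, and it is not the template's actual implementation.

```python
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase word tokens; a simple stand-in for an embedding model."""
    return re.findall(r"[a-z0-9]+", text.lower())


def retrieve(query: str, chunks: list[str], k: int = 2) -> list[str]:
    """Return up to k chunks ranked by how often they mention the query's terms."""
    query_terms = set(tokenize(query))
    scored = []
    for index, chunk in enumerate(chunks):
        counts = Counter(tokenize(chunk))
        score = sum(counts[term] for term in query_terms)
        scored.append((score, index, chunk))
    # Highest score first; ties keep the original document order.
    scored.sort(key=lambda item: (-item[0], item[1]))
    # Chunks with no overlapping terms are dropped entirely.
    return [chunk for score, _, chunk in scored[:k] if score > 0]


def build_prompt(query: str, context: list[str]) -> str:
    """Ground the model's answer in the retrieved documentation snippets."""
    joined = "\n".join(f"- {chunk}" for chunk in context)
    return (
        "Answer using only the documentation below.\n"
        f"Documentation:\n{joined}\n\nQuestion: {query}"
    )
```

The resulting prompt is what would be sent to the local model, so answers stay tied to the documentation instead of the model's general knowledge.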

Modding assistant agents built with this template can determine whether a query is informational or action-oriented. Based on context, agents dynamically respond with guidance or take the requested action. For example, a user might prompt the agent: “Swap this low-resolution texture with a higher-resolution version.” In response, the agent would check the asset’s metadata, locate an appropriate replacement and update the project using MCP functions — without requiring manual interaction.
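The informational-versus-action decision can be pictured as a router in front of two paths. The sketch below is a simplified illustration under stated assumptions. A keyword heuristic stands in for the LLM's intent classification, and action requests are emitted as MCP `tools/call` messages, which MCP carries over JSON-RPC 2.0. The tool name `replace_texture` and its arguments are hypothetical and not documented RTX Remix MCP functions.

```python
import itertools

# Hypothetical keyword heuristic standing in for the LLM's intent classification.
ACTION_VERBS = {"swap", "replace", "update", "rename", "apply", "delete"}

# JSON-RPC 2.0 request ids must be unique within a session; a counter suffices here.
_request_ids = itertools.count(1)


def classify(query: str) -> str:
    """Label a query 'action' if it contains an action verb, else 'informational'."""
    words = (word.strip(".,!?\"'") for word in query.lower().split())
    return "action" if any(word in ACTION_VERBS for word in words) else "informational"


def mcp_tool_call(tool_name: str, arguments: dict) -> dict:
    """Build an MCP `tools/call` request as a JSON-RPC 2.0 message."""
    return {
        "jsonrpc": "2.0",
        "id": next(_request_ids),
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }


def route(query: str, asset_id: str) -> dict:
    """Either hand the query to the documentation path or emit a tool call."""
    if classify(query) == "action":
        # Hypothetical tool and argument names, for illustration only.
        arguments = {"asset_id": asset_id, "instruction": query}
        return {"kind": "action", "request": mcp_tool_call("replace_texture", arguments)}
    # Informational queries would go to the retrieval (RAG) path instead.
    return {"kind": "informational", "query": query}
```

In the real template the model itself makes this decision and discovers available tools from the MCP server, so the hard-coded verb list and tool name here only show the shape of the flow.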

Documentation and setup instructions for the Remix template are available in the RTX Remix developer guide.

Control RTX AI PCs With Project G-Assist in Langflow

NVIDIA Project G-Assist is an experimental, on-device AI assistant that runs locally on GeForce RTX PCs. It enables users to query system information (e.g., PC specs, CPU/GPU temperatures and utilization), adjust system settings and more, all through simple natural language prompts.

With the G-Assist component in Langflow, these capabilities can be built into custom agentic workflows. Users can prompt G-Assist to “get GPU temperatures” or “tune fan speeds” — and its response and actions will flow through their chain of components.

Beyond diagnostics and system control, G-Assist is extensible via its plug-in architecture, which allows users to add new commands tailored to their workflows. Community-built plug-ins can also be invoked directly from Langflow workflows.

To get started with the G-Assist component in Langflow, read the developer documentation.

Langflow is also a development tool for NVIDIA NeMo microservices, a modular platform for building and deploying AI workflows across on-premises or cloud Kubernetes environments.

With integrated support for Ollama and MCP, Langflow offers a practical no-code platform for building real-time AI workflows and agents that run fully offline and on device, all accelerated by NVIDIA GeForce RTX and RTX PRO GPUs.

Each week, the RTX AI Garage blog series features community-driven AI innovations and content for those looking to learn more about NVIDIA NIM microservices and AI Blueprints, as well as building AI agents, creative workflows, productivity apps and more on AI PCs and workstations.


See notice regarding software product information.