As global climate change accelerates, finding and securing clean energy is a crucial challenge for many researchers, organizations and governments.

The U.K.’s Atomic Energy Authority (UKAEA), through an evaluation project at the University of Manchester, has been testing the NVIDIA Omniverse simulation platform to accelerate the design and development of a full-scale fusion powerplant that could put clean power on the grid in the coming years.

Over the past several decades, scientists have experimented with ways to create fusion energy, which produces zero carbon and low radioactivity. Such technology could provide virtually limitless clean, safe and affordable energy to meet the world’s growing demand.

Fusion is the principle that energy can be released by combining atomic nuclei together. But fusion energy has not yet been successfully scaled for production due to high energy input needs and unpredictable behavior of the fusion reaction.

Fusion reaction powers the sun, where massive gravitational pressures allow fusion to happen naturally at temperatures around 27 million degrees Fahrenheit. But Earth doesn’t have the same gravitational pressure as the sun, and this means that temperatures to produce fusion need to be much higher — above 180 million degrees.

To replicate the power of the sun on Earth, researchers and engineers are using the latest advances in data science and extreme-scale computing to develop designs for fusion powerplants. With NVIDIA Omniverse, researchers could potentially build a fully functioning digital twin of a powerplant, helping ensure the most efficient designs are selected for construction.

Accelerating Design, Simulation and Real-Time Collaboration

Building a digital twin that accurately represents all powerplant components, the plasma, and the control and maintenance systems is a massive challenge — one that can benefit greatly from AI, exascale GPU computing, and physically accurate simulation software.

It starts with the design of a fusion powerplant, which requires a large number of parts and inputs from large teams of engineering, design and research experts throughout the process. “There are many different components, and we have to take into account lots of different areas of physics and engineering,” said Lee Margetts, UKAEA chair of digital engineering for nuclear fusion at the University of Manchester. “If we make a design change in one system, this has a knock-on effect on other systems.”

Experts from various domains are involved in the project. Each team member uses different computer-aided design applications or simulation tools, and an expert’s work in one domain depends on the data from others working in different domains.

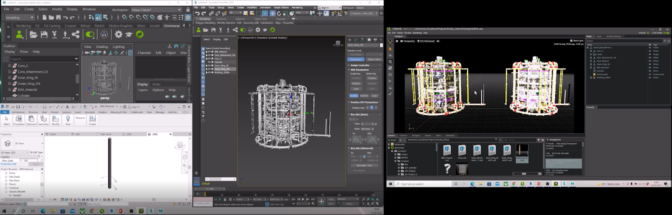

The UKAEA team is exploring Omniverse to help them work together in a real-time simulation environment, so they can see the design of the whole machine rather than only individual subcomponents.

Omniverse has been critical in keeping all these moving parts in sync. By enabling all tools and applications to connect, Omniverse allows the engineers working on the powerplant design to simultaneously collaborate from a single source of truth.

“We can see three different engineers, from three different locations, working on three different components of a powerplant in three different packages,” said Muhammad Omer, a researcher on the project.

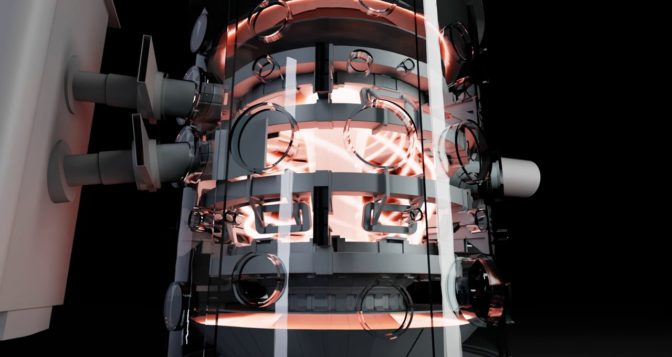

Omer explained that when experimenting in Omniverse, the team achieved photorealism in their powerplant designs using the platform’s core abilities to import full-fidelity 3D data. They could also visualize in real time with the RTX Renderer, which made it easy for them to compare different design options for components.

Simulation of fusion plasma is also a challenge. The teams developed Python-based Omniverse Extensions with Omniverse Kit to connect and ingest data from industry simulation software Monte Carlo Neutronics Code Geant4. This allows them to simulate neutron transport in the powerplant core, which is what carries energy out of the powerplant.

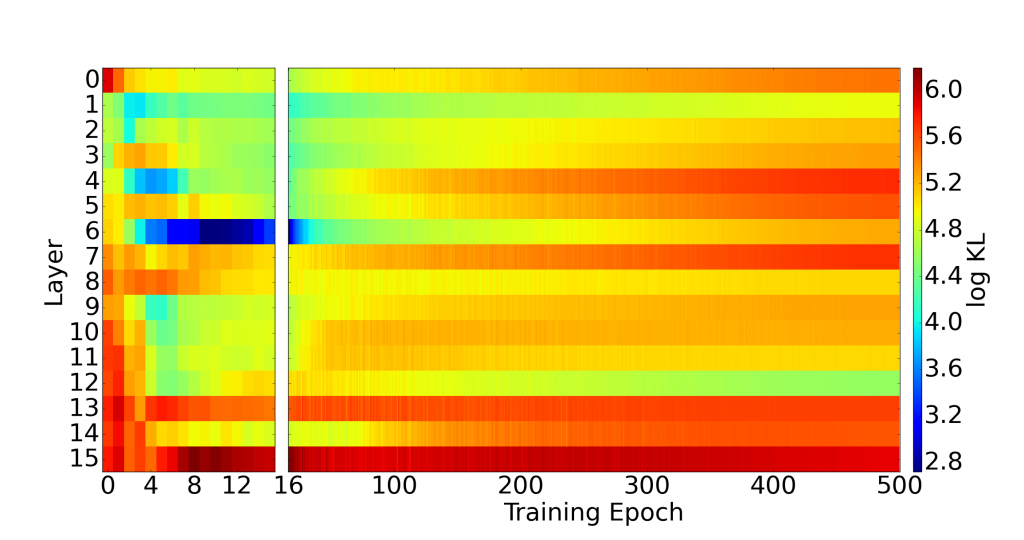

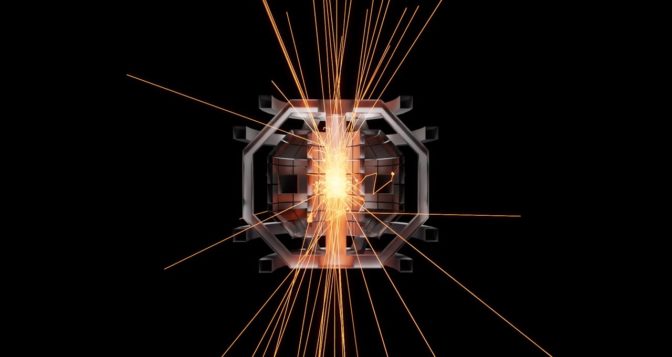

They also built Omniverse Extensions to view JOREK plasma simulation code, which simulates visible light emissions, giving the researchers insight into the plasma’s state. The scientists will begin to explore the NVIDIA Modulus AI-physics framework to use with their existing simulation data to develop AI surrogate models to accelerate the fusion plasma simulations.

Using AI to Optimize Designs and Enhance Digital Twins

In addition to helping design, operate and control the powerplant, Omniverse can help assist in the training of future AI-driven or AI-augmented robotic control and maintenance systems. These will be essential for maintaining plants in the radiation environment of the powerplant.

Using Omniverse Replicator, a software development kit for building custom synthetic data-generation tools and datasets, researchers can generate large quantities of physically accurate synthetic data of the powerplant and plasma behavior to train robotic systems. By learning in simulation, the robots can correctly handle tasks more accurately in the real world, improving predictive maintenance and reducing downtime.

In the future, sensor models could livestream observation data to the Omniverse digital twin, constantly keeping the virtual twin synchronized to the powerplant’s physical state. Researchers will be able to explore various hypothetical scenarios by first testing in the virtual twin before deploying changes to the physical powerplant.

Overall, Margetts and the team at UKAEA saw many unique opportunities and benefits in using Omniverse to build digital twins for fusion powerplants. Omniverse provides the possibility of a real-time platform that researchers can use to develop first-of-a-kind powerplant technology. The platform also allows engineers to seamlessly work together on powerplant designs. And teams can access integrated AI tools that will enable users to optimize future power plants.

“We’re delighted about what we’ve seen. We believe it’s got great potential as a platform for digital engineering,” said Margetts.

Watch the demo and learn more about NVIDIA Omniverse.

Featured image courtesy of Brigantium Engineering and Bentley Systems.

The post Scientists Building Digital Twins in NVIDIA Omniverse to Accelerate Clean Energy Research appeared first on NVIDIA Blog.

Kicking off the ISC 2022 conference in Hamburg, Germany, NVIDIA’s Rev Lebaredian (left), vice president for Omniverse and simulation technology, was joined by Michele Melchiorre, senior vice president for product system, technical planning, and tool shop at BMW Group.

Kicking off the ISC 2022 conference in Hamburg, Germany, NVIDIA’s Rev Lebaredian (left), vice president for Omniverse and simulation technology, was joined by Michele Melchiorre, senior vice president for product system, technical planning, and tool shop at BMW Group.