Posted by Jeff Dean, Chief Scientist, Google DeepMind & Google Research, Demis Hassabis, CEO, Google DeepMind, and James Manyika, SVP, Google Research, Technology & Society

This has been a year of incredible progress in the field of Artificial Intelligence (AI) research and its practical applications.

As ongoing research pushes AI even farther, we look back to our perspective published in January of this year, titled “Why we focus on AI (and to what end),” where we noted:

We are committed to leading and setting the standard in developing and shipping useful and beneficial applications, applying ethical principles grounded in human values, and evolving our approaches as we learn from research, experience, users, and the wider community.

We also believe that getting AI right — which to us involves innovating and delivering widely accessible benefits to people and society, while mitigating its risks — must be a collective effort involving us and others, including researchers, developers, users (individuals, businesses, and other organizations), governments, regulators, and citizens.

We are convinced that the AI-enabled innovations we are focused on developing and delivering boldly and responsibly are useful, compelling, and have the potential to assist and improve lives of people everywhere — this is what compels us.

In this Year-in-Review post we’ll go over some of Google Research’s and Google DeepMind’s efforts putting these paragraphs into practice safely throughout 2023.

Advances in products & technologies

This was the year generative AI captured the world’s attention, creating imagery, music, stories, and engaging conversation about everything imaginable, at a level of creativity and a speed almost implausible a few years ago.

In February, we first launched Bard, a tool that you can use to explore creative ideas and explain things simply. It can generate text, translate languages, write different kinds of creative content and more.

In May, we watched the results of months and years of our foundational and applied work announced on stage at Google I/O. Principally, this included PaLM 2, a large language model (LLM) that brought together compute-optimal scaling, an improved dataset mixture, and model architecture to excel at advanced reasoning tasks.

By fine-tuning and instruction-tuning PaLM 2 for different purposes, we were able to integrate it into numerous Google products and features, including:

- An update to Bard, which enabled multilingual capabilities. Since its initial launch, Bard is now available in more than 40 languages and over 230 countries and territories, and with extensions, Bard can find and show relevant information from Google tools used every day — like Gmail, Google Maps, YouTube, and more.

- Search Generative Experience (SGE), which uses LLMs to reimagine both how to organize information and how to help people navigate through it, creating a more fluid, conversational interaction model for our core Search product. This work extended the search engine experience from one primarily focused on information retrieval into something much more (capable of retrieval, synthesis, creative generation and continuation of previous searches) while continuing to serve as a connection point between users and the web content they seek.

- MusicLM, a text-to-music model powered by AudioLM and MuLAN, which can make music from text, humming, images, or video, as well as musical accompaniments to singing.

- Duet AI, our AI-powered collaborator that provides users with assistance when they use Google Workspace and Google Cloud. Duet AI in Google Workspace, for example, helps users write, create images, analyze spreadsheets, draft and summarize emails and chat messages, and summarize meetings. Duet AI in Google Cloud helps users code, deploy, scale, and monitor applications, as well as identify and accelerate resolution of cybersecurity threats.

- And many other developments.

In June, following last year’s release of our text-to-image generation model Imagen, we released Imagen Editor, which uses region masks and natural language prompts to interactively edit generative images, giving much more precise control over the model output.

Later in the year, we released Imagen 2, which improved outputs via a specialized image aesthetics model based on human preferences for qualities such as good lighting, framing, exposure, and sharpness.

In October, we launched a feature that helps people practice speaking and improve their language skills. The key technology that enabled this functionality was a novel deep learning model developed in collaboration with the Google Translate team, called Deep Aligner. This single new model has led to dramatic improvements in alignment quality across all tested language pairs, reducing average alignment error rate from 25% to 5% compared to alignment approaches based on Hidden Markov models (HMMs).

In November, in partnership with YouTube, we announced Lyria, our most advanced AI music generation model to date. We released two experiments designed to open a new playground for creativity, DreamTrack and music AI tools, in concert with YouTube’s Principles for partnering with the music industry on AI technology.

Then in December, we launched Gemini, our most capable and general AI model. Gemini was built to be multimodal from the ground up across text, audio, images, and video. Our initial family of Gemini models comes in three different sizes: Nano, Pro, and Ultra. Nano models are our smallest and most efficient models for powering on-device experiences in products like Pixel. The Pro model is highly capable and best for scaling across a wide range of tasks. The Ultra model is our largest and most capable model for highly complex tasks.

In a technical report about Gemini models, we showed that Gemini Ultra’s performance exceeds current state-of-the-art results on 30 of the 32 widely-used academic benchmarks used in LLM research and development. With a score of 90.04%, Gemini Ultra was the first model to outperform human experts on MMLU, and achieved a state-of-the-art score of 59.4% on the new MMMU benchmark.

Building on AlphaCode, the first AI system to perform at the level of the median competitor in competitive programming, we introduced AlphaCode 2 powered by a specialized version of Gemini. When evaluated on the same platform as the original AlphaCode, we found that AlphaCode 2 solved 1.7x more problems, and performed better than 85% of competition participants.

At the same time, Bard got its biggest upgrade with its use of the Gemini Pro model, making it far more capable at things like understanding, summarizing, reasoning, coding, and planning. In six out of eight benchmarks, Gemini Pro outperformed GPT-3.5, including in MMLU, one of the key standards for measuring large AI models, and GSM8K, which measures grade school math reasoning. Gemini Ultra will come to Bard early next year through Bard Advanced, a new cutting-edge AI experience.

Gemini Pro is also available on Vertex AI, Google Cloud’s end-to-end AI platform that empowers developers to build applications that can process information across text, code, images, and video. Gemini Pro was also made available in AI Studio in December.

To best illustrate some of Gemini’s capabilities, we produced a series of short videos explaining what Gemini can do.

ML/AI Research

In addition to our advances in products and technologies, we’ve also made a number of important advancements in the broader fields of machine learning and AI research.

At the heart of the most advanced ML models is the Transformer model architecture, developed by Google researchers in 2017. Originally developed for language, it has proven useful in domains as varied as computer vision, audio, genomics, protein folding, and more. This year, our work on scaling vision transformers demonstrated state-of-the-art results across a wide variety of vision tasks, and has also been useful in building more capable robots.

Expanding the versatility of models requires the ability to perform higher-level and multi-step reasoning. This year, we approached this target following several research tracks. For example, algorithmic prompting is a new method that teaches language models reasoning by demonstrating a sequence of algorithmic steps, which the model can then apply in new contexts. This approach improves accuracy on one middle-school mathematics benchmark from 25.9% to 61.1%.

By providing algorithmic prompts, we can teach a model the rules of arithmetic via in-context learning.
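To make the idea of an algorithmic prompt concrete, here is a minimal sketch that spells out grade-school addition digit by digit and assembles a few such worked traces into a prompt. The trace wording and the helper names `addition_trace` and `build_prompt` are illustrative assumptions; the post does not show the prompt format used in the research.

```python
def addition_trace(a: int, b: int) -> str:
    """Spell out addition of two non-negative integers one digit at a time."""
    xs, ys = str(a)[::-1], str(b)[::-1]  # least-significant digit first
    carry, digits = 0, []
    lines = [f"Problem: {a} + {b}"]
    for i in range(max(len(xs), len(ys))):
        x = int(xs[i]) if i < len(xs) else 0
        y = int(ys[i]) if i < len(ys) else 0
        total = x + y + carry
        lines.append(
            f"Step {i + 1}: {x} + {y} + carry {carry} = {total}; "
            f"write {total % 10}, carry {total // 10}"
        )
        digits.append(str(total % 10))
        carry = total // 10
    if carry:
        digits.append(str(carry))
        lines.append(f"Final carry: write {carry}")
    lines.append(f"Answer: {''.join(reversed(digits))}")
    return "\n".join(lines)


def build_prompt(demos, query):
    """Join worked traces (hypothetical format) and end with the unsolved query."""
    shots = [addition_trace(a, b) for a, b in demos]
    a, b = query
    return "\n\n".join(shots + [f"Problem: {a} + {b}"])


if __name__ == "__main__":
    print(build_prompt([(128, 367), (99, 1)], (4521, 879)))
```

The resulting text would be sent to a language model, which is expected to continue the final `Problem:` line by following the same explicit steps.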

In the domain of visual question answering, in a collaboration with UC Berkeley researchers, we showed how we could better answer complex visual questions (“Is the carriage to the right of the horse?”) by combining a visual model with a language model trained to answer visual questions by synthesizing a program to perform multi-step reasoning.

We are now using a general model that understands many aspects of the software development life cycle to automatically generate code review comments, respond to code review comments, make performance-improving suggestions for pieces of code (by learning from past such changes in other contexts), fix code in response to compilation errors, and more.

In a multi-year research collaboration with the Google Maps team, we were able to scale inverse reinforcement learning and apply it to the world-scale problem of improving route suggestions for over 1 billion users. Our work culminated in a 16–24% relative improvement in global route match rate, helping to ensure that routes are better aligned with user preferences.

We also continue to work on techniques to improve the inference performance of machine learning models. In work on computationally-friendly approaches to pruning connections in neural networks, we were able to devise an approximation algorithm to the computationally intractable best-subset selection problem that is able to prune 70% of the edges from an image classification model and still retain almost all of the accuracy of the original.

In work on accelerating on-device diffusion models, we were also able to apply a variety of optimizations to attention mechanisms, convolutional kernels, and fusion of operations to make it practical to run high quality image generation models on-device; for example, enabling “a photorealistic and high-resolution image of a cute puppy with surrounding flowers” to be generated in just 12 seconds on a smartphone.

Advances in capable language and multimodal models have also benefited our robotics research efforts. We combined separately trained language, vision, and robotic control models into PaLM-E, an embodied multi-modal model for robotics, and Robotic Transformer 2 (RT-2), a novel vision-language-action (VLA) model that learns from both web and robotics data, and translates this knowledge into generalized instructions for robotic control.

RT-2 architecture and training: We co-fine-tune a pre-trained vision-language model on robotics and web data. The resulting model takes in robot camera images and directly predicts actions for a robot to perform.

Furthermore, we showed how language can also be used to control the gait of quadrupedal robots and explored the use of language to help formulate more explicit reward functions to bridge the gap between human language and robotic actions. Then, in Barkour we benchmarked the agility limits of quadrupedal robots.

Algorithms & optimization

Designing efficient, robust, and scalable algorithms remains a high priority. This year, our work included: applied and scalable algorithms, market algorithms, system efficiency and optimization, and privacy.

We introduced AlphaDev, an AI system that uses reinforcement learning to discover enhanced computer science algorithms. AlphaDev uncovered a faster algorithm for sorting, a method for ordering data, which led to improvements in the LLVM libc++ sorting library that were up to 70% faster for shorter sequences and about 1.7% faster for sequences exceeding 250,000 elements.

We developed a novel model to predict the properties of large graphs, enabling estimation of performance for large programs. We released a new dataset, TPUGraphs, to accelerate open research in this area, and showed how we can use modern ML to improve ML efficiency.

The TPUGraphs dataset has 44 million graphs for ML program optimization.

We developed a new load balancing algorithm for distributing queries to a server, called Prequal, which minimizes a combination of requests-in-flight and estimated latency. Deployments across several systems have significantly reduced CPU usage, latency, and RAM usage. We also designed a new analysis framework for the classical caching problem with capacity reservations.

Heatmaps of normalized CPU usage transitioning to Prequal at 08:00.
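As a rough illustration of balancing on both signals mentioned for Prequal, the sketch below picks the replica with the lowest combined score of estimated latency and requests-in-flight. The linear scoring rule, the `rif_weight_ms` parameter, and the `Replica` type are assumptions made for this example; this is not the published Prequal selection rule.

```python
from dataclasses import dataclass


@dataclass
class Replica:
    name: str
    requests_in_flight: int
    est_latency_ms: float


def pick_replica(replicas, rif_weight_ms=5.0):
    """Return the replica with the lowest combined load score.

    Assumed score: estimated latency plus a fixed latency penalty
    (rif_weight_ms) for every request already in flight.
    """
    def score(r):
        return r.est_latency_ms + rif_weight_ms * r.requests_in_flight

    return min(replicas, key=score)


if __name__ == "__main__":
    pool = [
        Replica("a", requests_in_flight=10, est_latency_ms=20.0),
        Replica("b", requests_in_flight=2, est_latency_ms=35.0),
        Replica("c", requests_in_flight=0, est_latency_ms=60.0),
    ]
    print(pick_replica(pool).name)
```

Using both signals avoids sending traffic to a replica that looks fast but is already heavily loaded, or to an idle replica that is slow to respond.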

We improved state-of-the-art in clustering and graph algorithms by developing new techniques for computing minimum-cut, approximating correlation clustering, and massively parallel graph clustering. Additionally, we introduced TeraHAC, a novel hierarchical clustering algorithm for trillion-edge graphs, designed a text clustering algorithm for better scalability while maintaining quality, and designed the most efficient algorithm for approximating the Chamfer Distance, the standard similarity function for multi-embedding models, offering >50× speedups over highly-optimized exact algorithms and scaling to billions of points.
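For reference, one common (asymmetric) definition of the Chamfer Distance from a point set A to a point set B sums, over every point in A, the distance to its nearest point in B. The sketch below is the straightforward exact computation under that assumed definition, with Euclidean distance as an assumed metric; it takes time proportional to |A| × |B|, which is the kind of cost that approximation algorithms aim to avoid.

```python
import math


def chamfer_distance(A, B):
    """Exact Chamfer Distance from A to B (assumed definition):
    the sum over a in A of the Euclidean distance to a's nearest point in B.
    """
    return sum(min(math.dist(a, b) for b in B) for a in A)


if __name__ == "__main__":
    A = [(0.0, 0.0), (1.0, 0.0)]
    B = [(0.0, 1.0), (3.0, 0.0)]
    print(chamfer_distance(A, B))
```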

We continued optimizing Google’s large embedding models (LEMs), which power many of our core products and recommender systems. Some new techniques include Unified Embedding for battle-tested feature representations in web-scale ML systems and Sequential Attention, which uses attention mechanisms to discover high-quality sparse model architectures during training.

This year, we also continued our research in market algorithms to design computationally efficient marketplaces and causal inference. First, we remain committed to advancing the rapidly growing interest in ads automation for which our recent work explains the adoption of autobidding mechanisms and examines the effect of different auction formats on the incentives of advertisers. In the multi-channel setting, our findings shed light on how the choice between local and global optimizations affects the design of multi-channel auction systems and bidding systems.

Beyond auto-bidding systems, we also studied auction design in other complex settings, such as buy-many mechanisms, auctions for heterogeneous bidders, and contract designs, and developed robust online bidding algorithms. Motivated by the application of generative AI in collaborative creation (e.g., a joint ad for advertisers), we proposed a novel token auction model where LLMs bid for influence in the collaborative AI creation. Finally, we show how to mitigate personalization effects in experimental design, which, for example, may cause recommendations to drift over time.

The Chrome Privacy Sandbox, a multi-year collaboration between Google Research and Chrome, has publicly launched several APIs, including for Protected Audience, Topics, and Attribution Reporting. This is a major step in protecting user privacy while supporting the open and free web ecosystem. These efforts have been facilitated by fundamental research on re-identification risk, private streaming computation, optimization of privacy caps and budgets, hierarchical aggregation, and training models with label privacy.

Science and society

In the not too distant future, there is a very real possibility that AI applied to scientific problems can accelerate the rate of discovery in certain domains by 10× or 100×, or more, and lead to major advances in diverse areas including bioengineering, materials science, weather prediction, climate forecasting, neuroscience, genetic medicine, and healthcare.

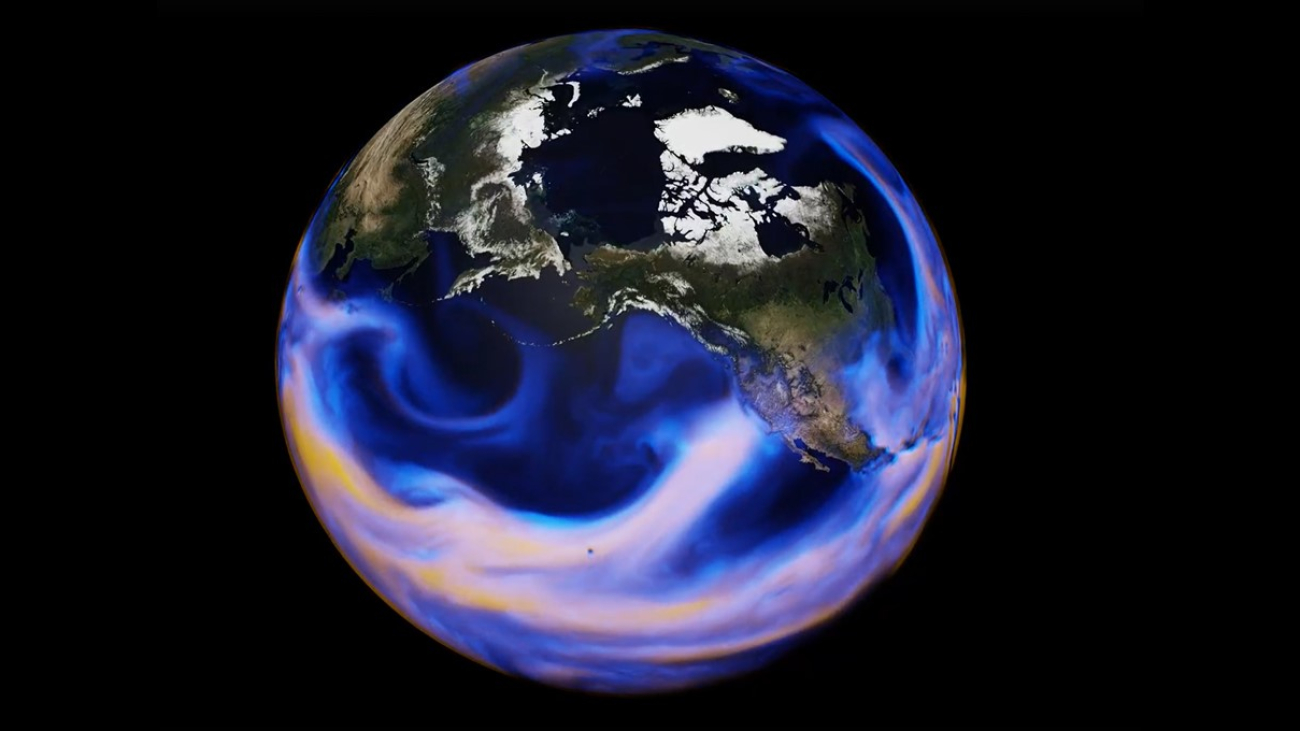

Sustainability and climate change

In Project Green Light, we partnered with 13 cities around the world to help improve traffic flow at intersections and reduce stop-and-go emissions. Early numbers from these partnerships indicate a potential for up to 30% reduction in stops and up to 10% reduction in emissions.

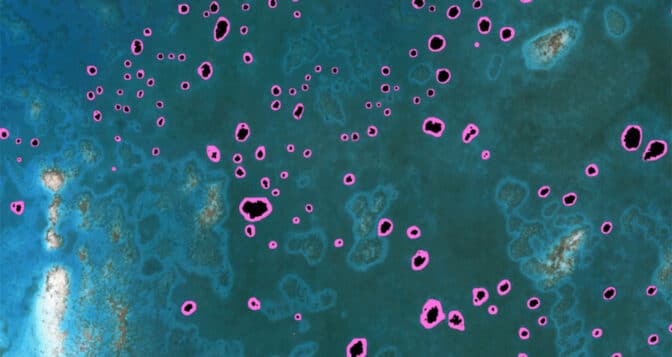

In our contrails work, we analyzed large-scale weather data, historical satellite images, and past flights. We trained an AI model to predict where contrails form and reroute airplanes accordingly. In partnership with American Airlines and Breakthrough Energy, we used this system to demonstrate contrail reduction by 54%.

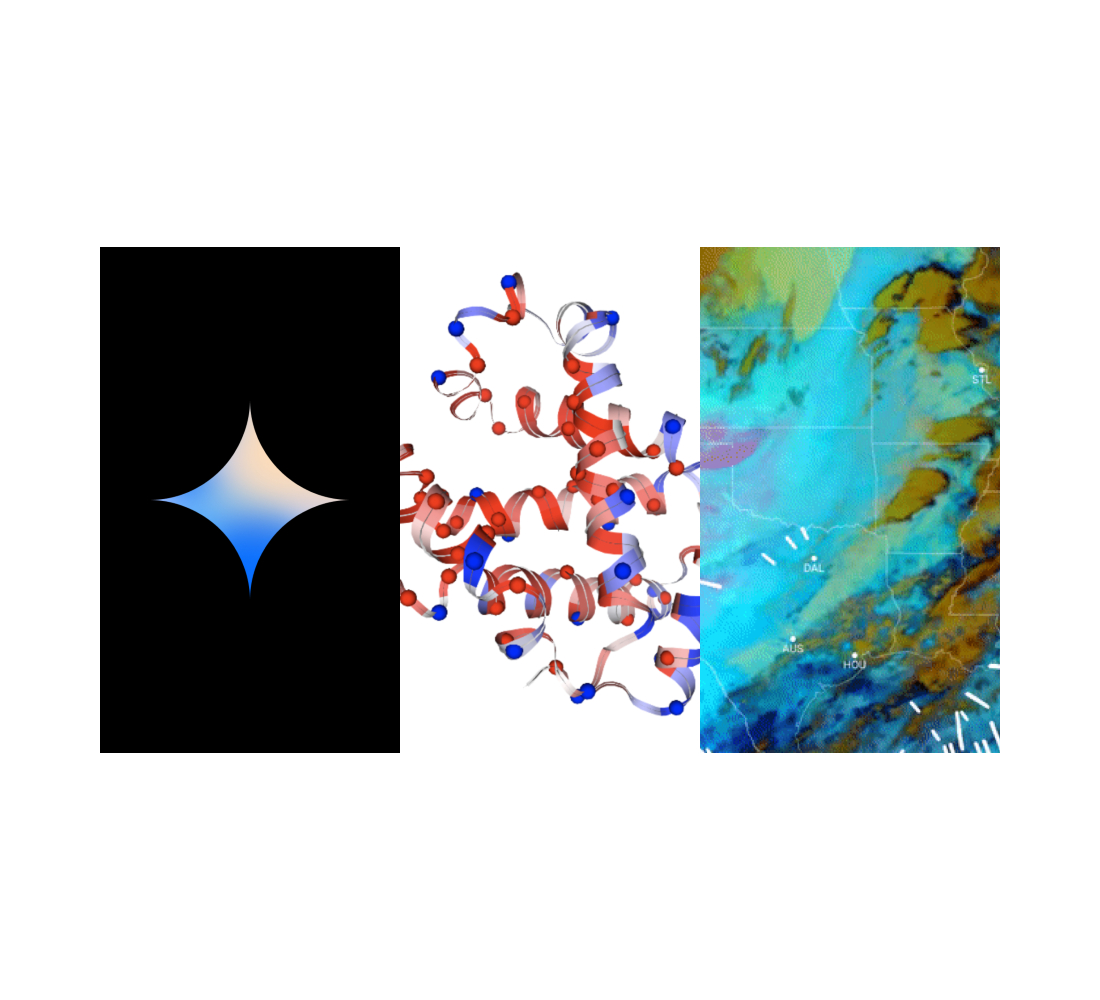

Contrails detected over the United States using AI and GOES-16 satellite imagery.

We are also developing novel technology-driven approaches to help communities with the effects of climate change. For example, we have expanded our flood forecasting coverage to 80 countries, which directly impacts more than 460 million people. We have initiated a number of research efforts to help mitigate the increasing danger of wildfires, including real-time tracking of wildfire boundaries using satellite imagery, and work that improves emergency evacuation plans for communities at risk from rapidly spreading wildfires. Our partnership with American Forests puts data from our Tree Canopy project to work in their Tree Equity Score platform, helping communities identify and address unequal access to trees.

Finally, we continued to develop better models for weather prediction at longer time horizons. Improving on MetNet and MetNet-2, in this year’s work on MetNet-3, we now outperform traditional numerical weather simulations up to twenty-four hours. In the area of medium-term, global weather forecasting, our work on GraphCast showed significantly better prediction accuracy for up to 10 days compared to HRES, the most accurate operational deterministic forecast, produced by the European Centre for Medium-Range Weather Forecasts (ECMWF). In collaboration with ECMWF, we released WeatherBench-2, a benchmark for evaluating the accuracy of weather forecasts in a common framework.

A selection of GraphCast’s predictions rolling across 10 days showing specific humidity at 700 hectopascals (about 3 km above surface), surface temperature, and surface wind speed.

Health and the life sciences

The potential of AI to dramatically improve processes in healthcare is significant. Our initial Med-PaLM model was the first model capable of achieving a passing score on the U.S. medical licensing exam. Our more recent Med-PaLM 2 model improved by a further 19%, achieving an expert-level accuracy of 86.5%. These Med-PaLM models are language-based, enable clinicians to ask questions and have a dialogue about complex medical conditions, and are available to healthcare organizations as part of MedLM through Google Cloud.

In the same way our general language models are evolving to handle multiple modalities, we have recently shown research on a multimodal version of Med-PaLM capable of interpreting medical images, textual data, and other modalities, describing a path for how we can realize the exciting potential of AI models to help advance real-world clinical care.

Med-PaLM M is a large multimodal generative model that flexibly encodes and interprets biomedical data including clinical language, imaging, and genomics with the same model weights.

We have also been working on how best to harness AI models in clinical workflows. We have shown that coupling deep learning with interpretability methods can yield new insights for clinicians. We have also shown that self-supervised learning, with careful consideration of privacy, safety, fairness and ethics, can reduce the amount of de-identified data needed to train clinically relevant medical imaging models by 3×–100×, reducing the barriers to adoption of models in real clinical settings. We also released an open source mobile data collection platform for people with chronic disease to provide tools to the community to build their own studies.

AI systems can also discover completely new signals and biomarkers in existing forms of medical data. In work on novel biomarkers discovered in retinal images, we demonstrated that a number of systemic biomarkers spanning several organ systems (e.g., kidney, blood, liver) can be predicted from external eye photos. In other work, we showed that combining retinal images and genomic information helps identify some underlying factors of aging.

In the genomics space, we worked with 119 scientists across 60 institutions to create a new map of the human genome, or pangenome. This more equitable pangenome better represents the genomic diversity of global populations. Building on our ground-breaking AlphaFold work, our work on AlphaMissense this year provides a catalog of predictions for 89% of all 71 million possible missense variants as either likely pathogenic or likely benign.

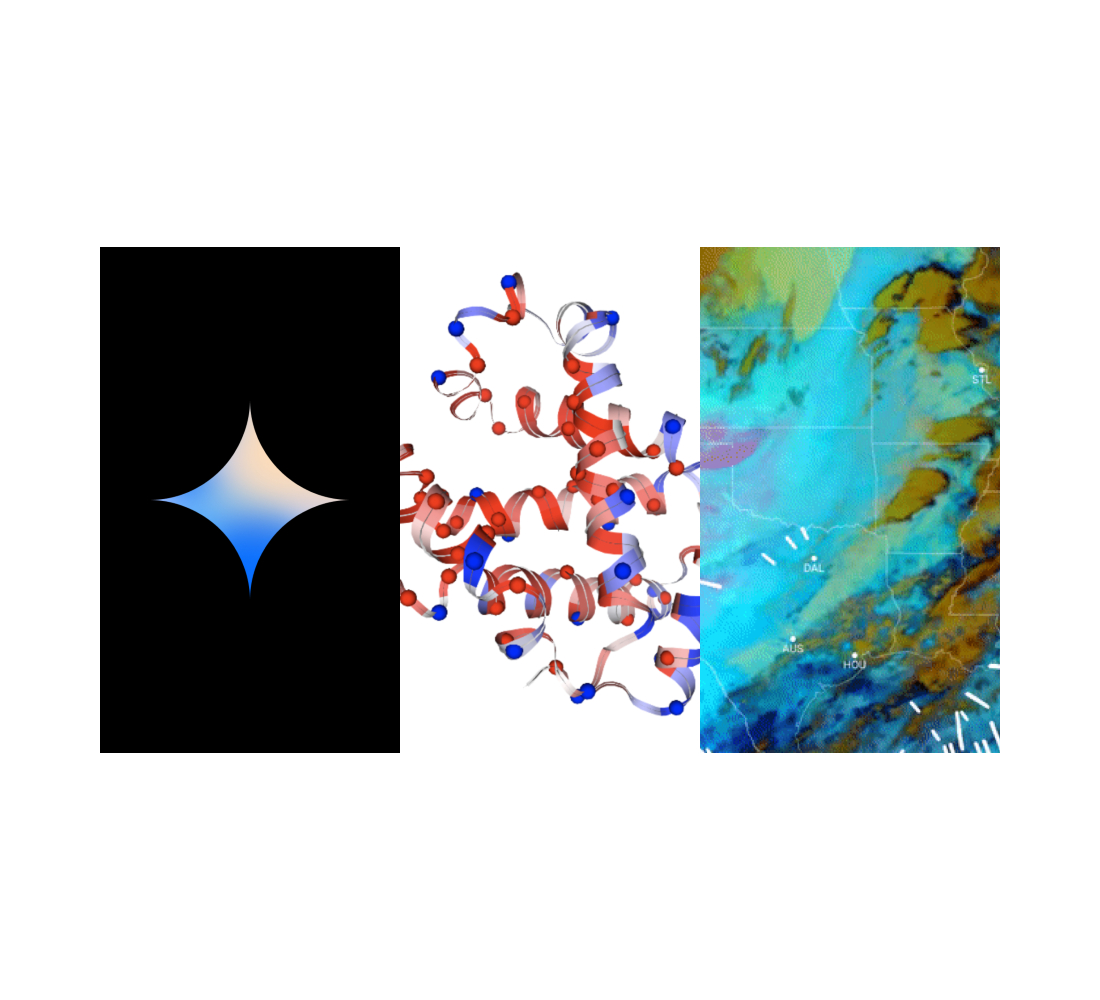

Examples of AlphaMissense predictions overlaid on AlphaFold predicted structures (red – predicted as pathogenic; blue – predicted as benign; grey – uncertain). Red dots represent known pathogenic missense variants, blue dots represent known benign variants. Left: HBB protein. Variants in this protein can cause sickle cell anaemia. Right: CFTR protein. Variants in this protein can cause cystic fibrosis.

We also shared an update on progress towards the next generation of AlphaFold. Our latest model can now generate predictions for nearly all molecules in the Protein Data Bank (PDB), frequently reaching atomic accuracy. This unlocks new understanding and significantly improves accuracy in multiple key biomolecule classes, including ligands (small molecules), proteins, nucleic acids (DNA and RNA), and those containing post-translational modifications (PTMs).

On the neuroscience front, we announced a new collaboration with Harvard, Princeton, the NIH, and others to map an entire mouse brain at synaptic resolution, beginning with a first phase that will focus on the hippocampal formation — the area of the brain responsible for memory formation, spatial navigation, and other important functions.

Quantum computing

Quantum computers have the potential to solve big, real-world problems across science and industry. But to realize that potential, they must be significantly larger than they are today, and they must reliably perform tasks that cannot be performed on classical computers.

This year, we took an important step towards the development of a large-scale, useful quantum computer. Our breakthrough is the first demonstration of quantum error correction, showing that it’s possible to reduce errors while also increasing the number of qubits. To enable real-world applications, these qubit building blocks must perform more reliably, lowering the error rate from ~1 in 10³ typically seen today, to ~1 in 10⁸.

Responsible AI research

Design for Responsibility

Generative AI is having a transformative impact in a wide range of fields including healthcare, education, security, energy, transportation, manufacturing, and entertainment. Given these advances, designing technologies consistent with our AI Principles remains a top priority. We also recently published case studies of emerging practices in society-centered AI. And in our annual AI Principles Progress Update, we offer details on how our Responsible AI research is integrated into products and risk management processes.

Proactive design for Responsible AI begins with identifying and documenting potential harms. For example, we recently introduced a three-layered context-based framework for comprehensively evaluating the social and ethical risks of AI systems. During model design, harms can be mitigated with the use of responsible datasets.

We are partnering with Howard University to build high quality African-American English (AAE) datasets to improve our products and make them work well for more people. Our research on globally inclusive cultural representation and our publication of the Monk Skin Tone scale furthers our commitments to equitable representation of all people. The insights we gain and techniques we develop not only help us improve our own models, they also power large-scale studies of representation in popular media to inform and inspire more inclusive content creation around the world.

With advances in generative image models, fair and inclusive representation of people remains a top priority. In the development pipeline, we are working to amplify underrepresented voices and to better integrate social context knowledge. We proactively address potential harms and bias using classifiers and filters, careful dataset analysis, and in-model mitigations such as fine-tuning, reasoning, few-shot prompting, data augmentation and controlled decoding. Our research showed that generative AI enables higher quality safety classifiers to be developed with far less data. We also released a powerful way to better tune models with less data, giving developers more control of responsibility challenges in generative AI.

We have developed new state-of-the-art explainability methods to identify the role of training data on model behaviors. By combining training data attribution methods with agile classifiers, we found that we can identify mislabelled training examples. This makes it possible to reduce the noise in training data, leading to significant improvements in model accuracy.

We initiated several efforts to improve safety and transparency about online content. For example, we introduced SynthID, a tool for watermarking and identifying AI-generated images. SynthID is imperceptible to the human eye, doesn’t compromise image quality, and allows the watermark to remain detectable, even after modifications like adding filters, changing colors, and saving with various lossy compression schemes.

We also launched About This Image to help people assess the credibility of images, showing information like an image’s history, how it’s used on other pages, and available metadata about an image. And we explored safety methods that have been developed in other fields, learning from established situations where there is low-risk tolerance.

SynthID generates an imperceptible digital watermark for AI-generated images.

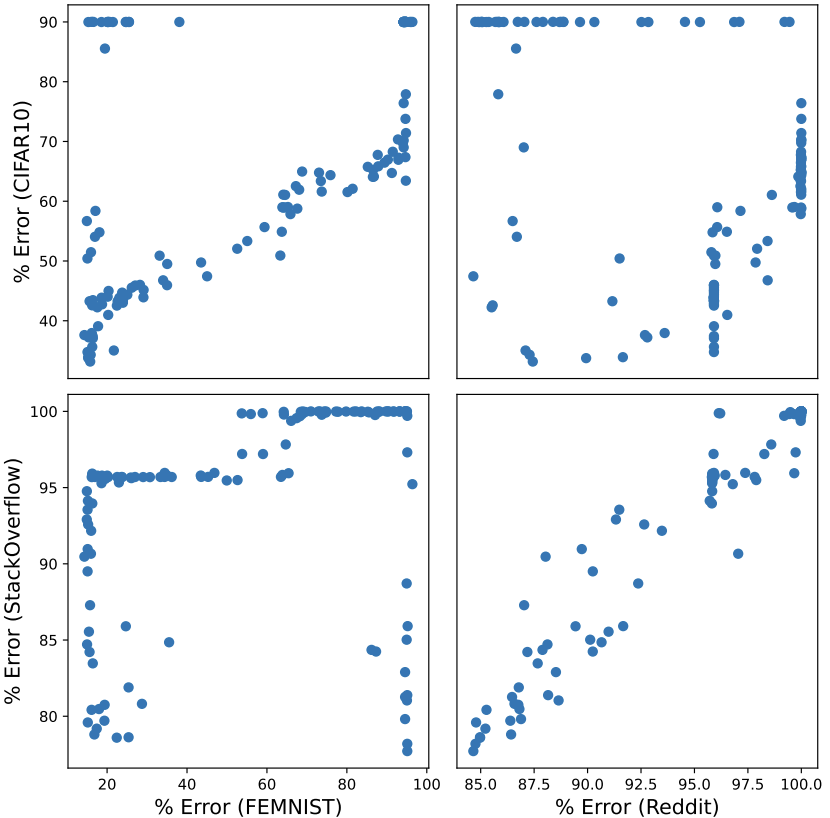

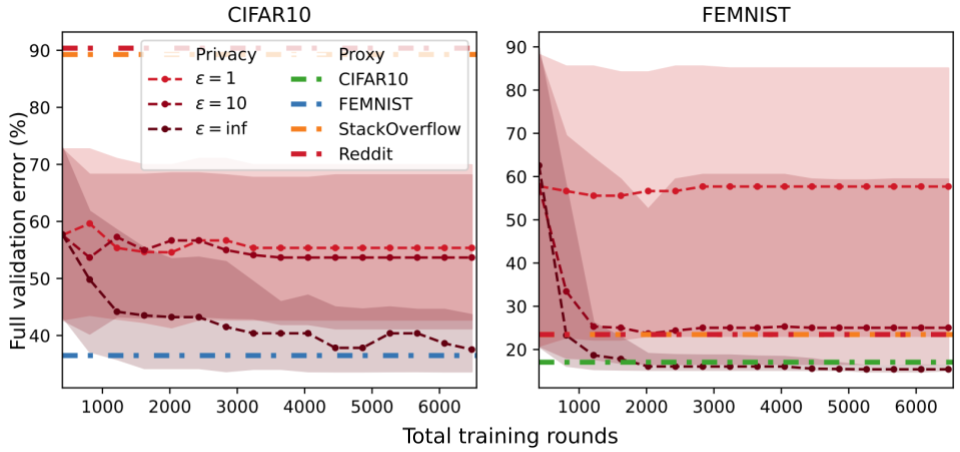

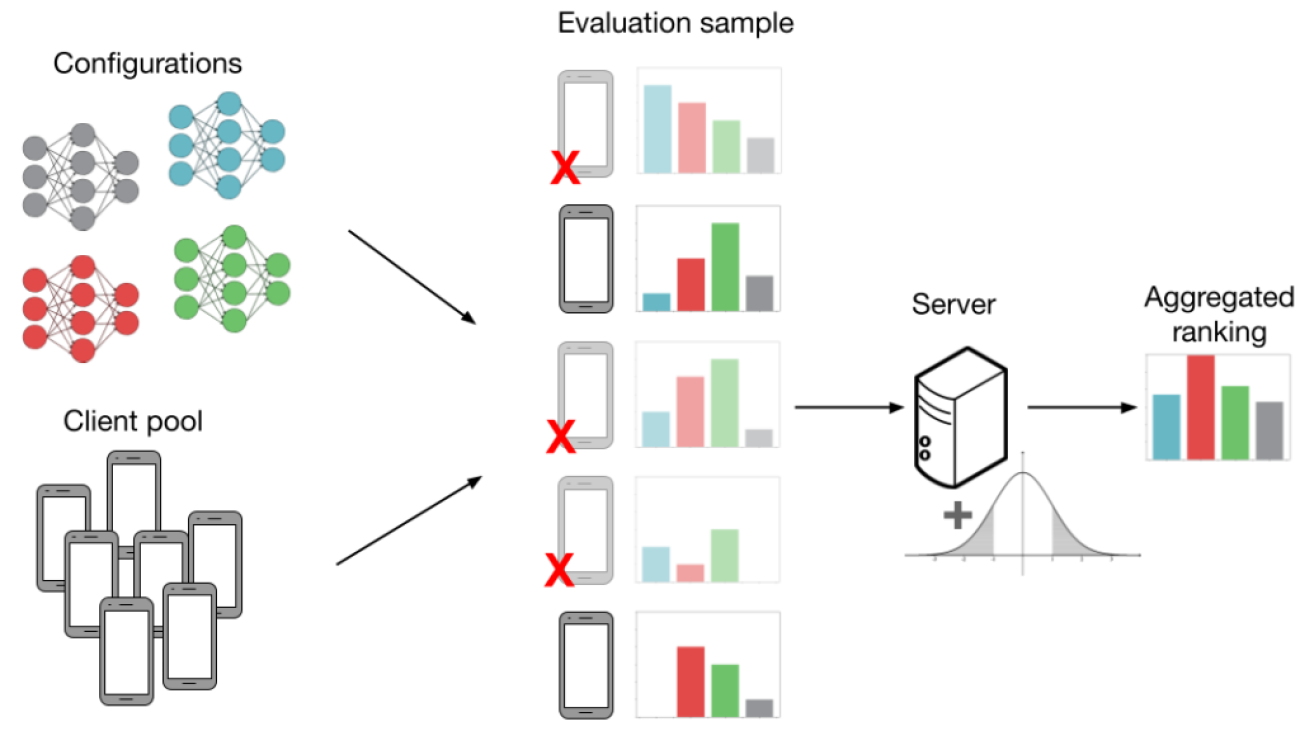

Privacy remains an essential aspect of our commitment to Responsible AI. We continued improving our state-of-the-art privacy preserving learning algorithm DP-FTRL, developed the DP-Alternating Minimization algorithm (DP-AM) to enable personalized recommendations with rigorous privacy protection, and defined a new general paradigm to reduce the privacy costs for many aggregation and learning tasks. We also proposed a scheme for auditing differentially private machine learning systems.

On the applications front we demonstrated that DP-SGD offers a practical solution in the large model fine-tuning regime and showed that images generated by DP diffusion models are useful for a range of downstream tasks. We proposed a new algorithm for DP training of large embedding models that provides efficient training on TPUs without compromising accuracy.
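For readers unfamiliar with DP-SGD, the core update can be sketched as follows: clip each example's gradient to a maximum norm, sum the clipped gradients, add Gaussian noise scaled to that norm, and then take an averaged gradient step. This is a simplified sketch with illustrative hyperparameters and hypothetical function names; it omits privacy accounting and is not the implementation used in the work described above.

```python
import math
import random


def clip(grad, max_norm):
    """Scale grad down so its L2 norm is at most max_norm."""
    norm = math.sqrt(sum(g * g for g in grad))
    scale = min(1.0, max_norm / norm) if norm > 0 else 1.0
    return [g * scale for g in grad]


def dp_sgd_step(params, per_example_grads, lr=0.1, max_norm=1.0,
                noise_multiplier=1.0, rng=None):
    """One DP-SGD update: clip per example, sum, add noise, average, step."""
    rng = rng or random.Random()
    n = len(per_example_grads)
    clipped = [clip(g, max_norm) for g in per_example_grads]
    summed = [sum(col) for col in zip(*clipped)]
    # Gaussian noise with standard deviation noise_multiplier * max_norm.
    noisy = [s + rng.gauss(0.0, noise_multiplier * max_norm) for s in summed]
    return [p - lr * g / n for p, g in zip(params, noisy)]


if __name__ == "__main__":
    grads = [[3.0, 4.0], [0.3, 0.4]]
    print(dp_sgd_step([0.0, 0.0], grads, rng=random.Random(0)))
```

Clipping bounds how much any single example can move the parameters, which is what allows the added noise to mask each individual's contribution.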

We also teamed up with a broad group of academic and industrial researchers to organize the first Machine Unlearning Challenge to address the scenario in which training images are forgotten to protect the privacy or rights of individuals. We shared a mechanism for extractable memorization, and participatory systems that give users more control over their sensitive data.

We continued to expand the world’s largest corpus of atypical speech recordings to >1M utterances in Project Euphonia, which enabled us to train a Universal Speech Model that improved recognition of atypical speech by 37% on real-world benchmarks.

We also built an audiobook recommendation system for students with reading disabilities such as dyslexia.

Adversarial testing

Our work in adversarial testing engaged community voices from historically marginalized communities. We partnered with groups such as the Equitable AI Research Round Table (EARR) to ensure we represent the diverse communities who use our models and engage with external users to identify potential harms in generative model outputs.

We established a dedicated Google AI Red Team focused on testing AI models and products for security, privacy, and abuse risks. We showed that attacks such as “poisoning” or adversarial examples can be applied to production models and surface additional risks such as memorization in both image and text generative models. We also demonstrated that defending against such attacks can be challenging, as merely applying defenses can cause other security and privacy leakages. We also introduced model evaluation for extreme risks, such as offensive cyber capabilities or strong manipulation skills.

Democratizing AI through tools and education

As we advance the state-of-the-art in ML and AI, we also want to ensure people can understand and apply AI to specific problems. We released MakerSuite (now Google AI Studio), a web-based tool that enables AI developers to quickly iterate and build lightweight AI-powered apps. To help AI engineers better understand and debug AI, we released LIT 1.0, a state-of-the-art, open-source debugger for machine learning models.

Colab, our tool that helps developers and students access powerful computing resources right in their web browser, reached over 10 million users. We’ve just added AI-powered code assistance to all users at no cost — making Colab an even more helpful and integrated experience in data and ML workflows.

One of the most used features is “Explain error”: whenever the user encounters an execution error in Colab, the code assistance model provides an explanation along with a potential fix.

To ensure AI produces accurate knowledge when put to use, we also recently introduced FunSearch, a new approach that generates verifiably true knowledge in mathematical sciences using evolutionary methods and large language models.

For AI engineers and product designers, we’re updating the People + AI Guidebook with generative AI best practices, and we continue to design AI Explorables, which cover topics such as how and why models sometimes make incorrect predictions confidently.

Community engagement

We continue to advance the fields of AI and computer science by publishing much of our work and participating in and organizing conferences. We have published more than 500 papers so far this year, and have strong presences at conferences like ICML (see the Google Research and Google DeepMind posts), ICLR (Google Research, Google DeepMind), NeurIPS (Google Research, Google DeepMind), ICCV, CVPR, ACL, CHI, and Interspeech. We are also working to support researchers around the world, participating in events like the Deep Learning Indaba, Khipu, supporting PhD Fellowships in Latin America, and more. We also worked with partners from 33 academic labs to pool data from 22 different robot types and create the Open X-Embodiment dataset and RT-X model to better advance responsible AI development.

Google has spearheaded an industry-wide effort to develop AI safety benchmarks under the MLCommons standards organization with participation from several major players in the generative AI space including OpenAI, Anthropic, Microsoft, Meta, Hugging Face, and more. Along with others in the industry we also co-founded the Frontier Model Forum (FMF), which is focused on ensuring safe and responsible development of frontier AI models. With our FMF partners and other philanthropic organizations, we launched a $10 million AI Safety Fund to advance research into the ongoing development of the tools for society to effectively test and evaluate the most capable AI models.

In close partnership with Google.org, we worked with the United Nations to build the UN Data Commons for the Sustainable Development Goals, a tool that tracks metrics across the 17 Sustainable Development Goals, and supported projects from NGOs, academic institutions, and social enterprises on using AI to accelerate progress on the SDGs.

The items highlighted in this post are a small fraction of the research work we have done throughout the last year. Find out more at the Google Research and Google DeepMind blogs, and our list of publications.

Future vision

As multimodal models become even more capable, they will empower people to make incredible progress in areas from science to education to entirely new areas of knowledge.

Progress continues apace, and as the year advances and our products and research advance with it, people will find more interesting and creative uses for AI.

Ending this Year-in-Review where we began, as we say in Why We Focus on AI (and to what end):

If pursued boldly and responsibly, we believe that AI can be a foundational technology that transforms the lives of people everywhere — this is what excites us!

This Year-in-Review is cross-posted on both the Google Research Blog and the Google DeepMind Blog.
