Introducing a new, unifying DNA sequence model that advances regulatory variant-effect prediction and promises to shed new light on genome function, now available via API.

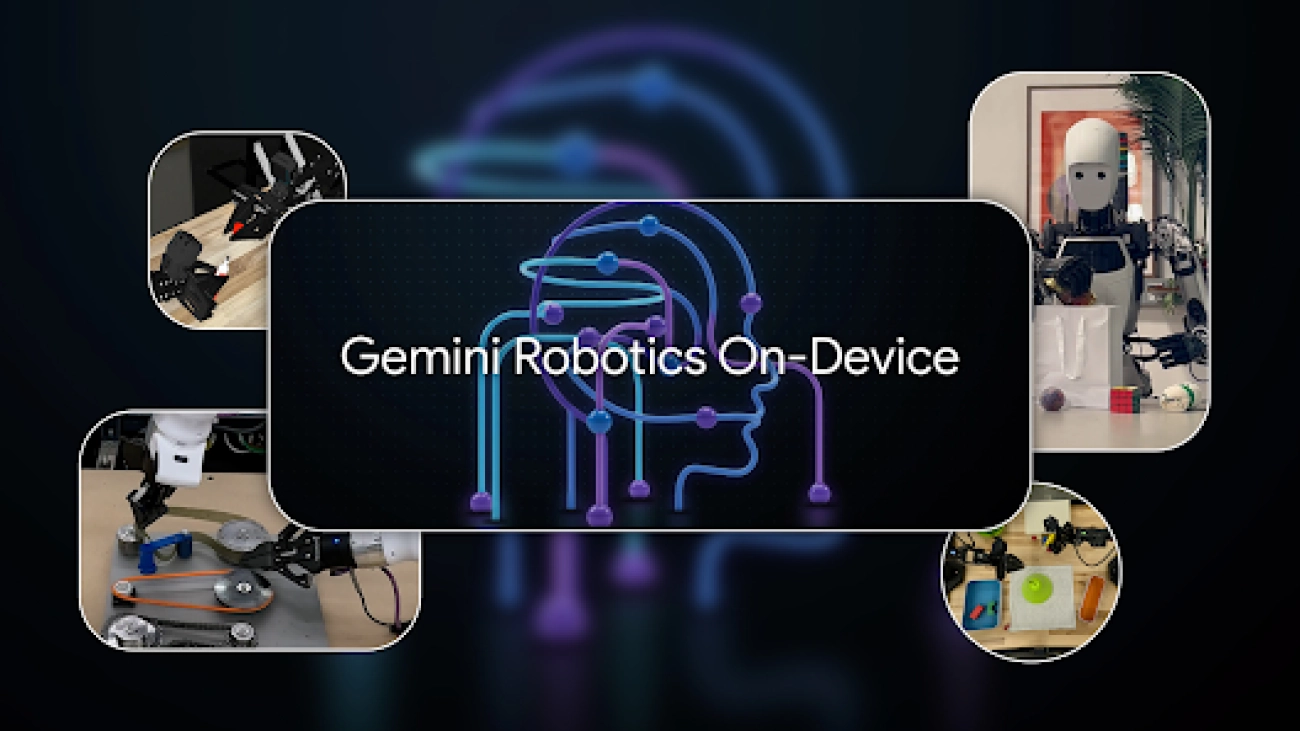

Gemini Robotics On-Device brings AI to local robotic devices

We’re introducing an efficient, on-device robotics model with general-purpose dexterity and fast task adaptation.

Gemini 2.5: Updates to our family of thinking models

Explore the latest Gemini 2.5 model updates with enhanced performance and accuracy: Gemini 2.5 Pro is now stable, Flash is generally available, and the new Flash-Lite is in preview.

We’re expanding our Gemini 2.5 family of models

Gemini 2.5 Flash and Pro are now generally available, and we’re introducing 2.5 Flash-Lite, our most cost-efficient and fastest 2.5 model yet.

Behind “ANCESTRA”: combining Veo with live-action filmmaking

We partnered with Darren Aronofsky, Eliza McNitt and a team of more than 200 people to make a film using Veo and live-action filmmaking.

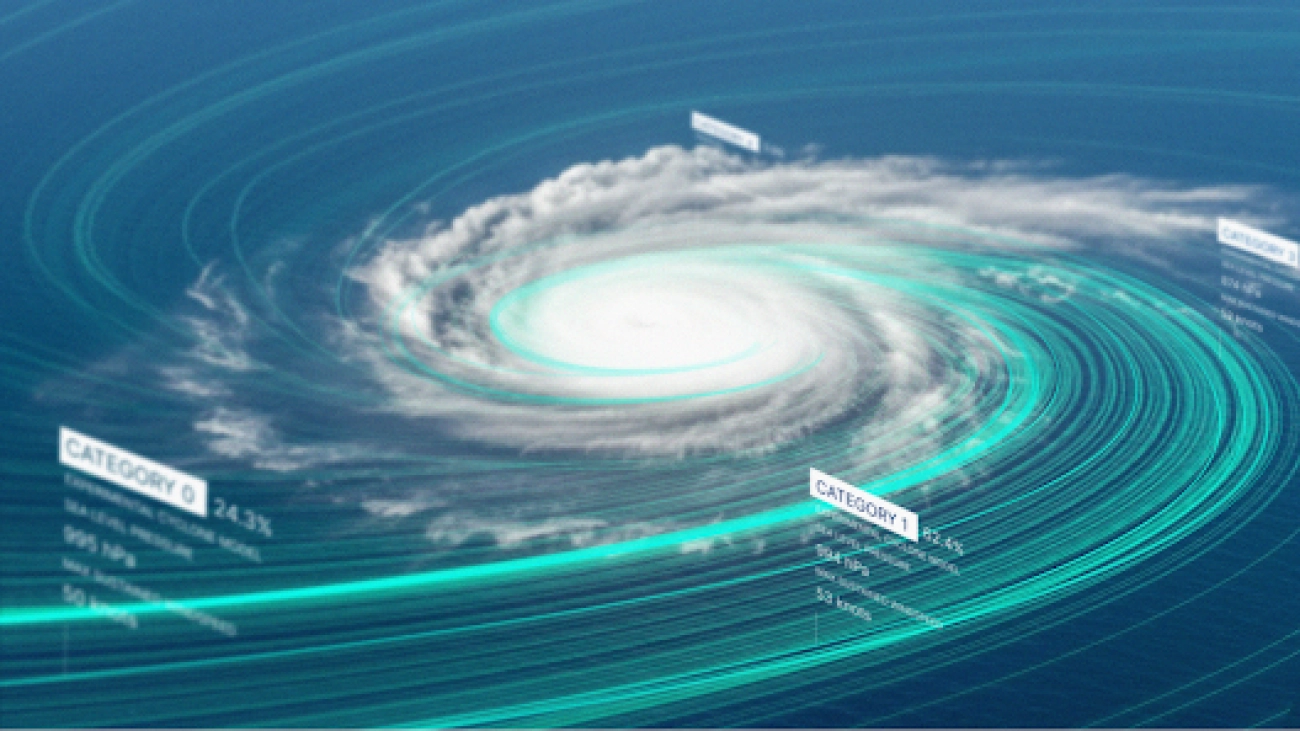

How we’re supporting better tropical cyclone prediction with AI

We’re launching Weather Lab, featuring our experimental cyclone predictions, and we’re partnering with the U.S. National Hurricane Center to support their forecasts and warnings this cyclone season.

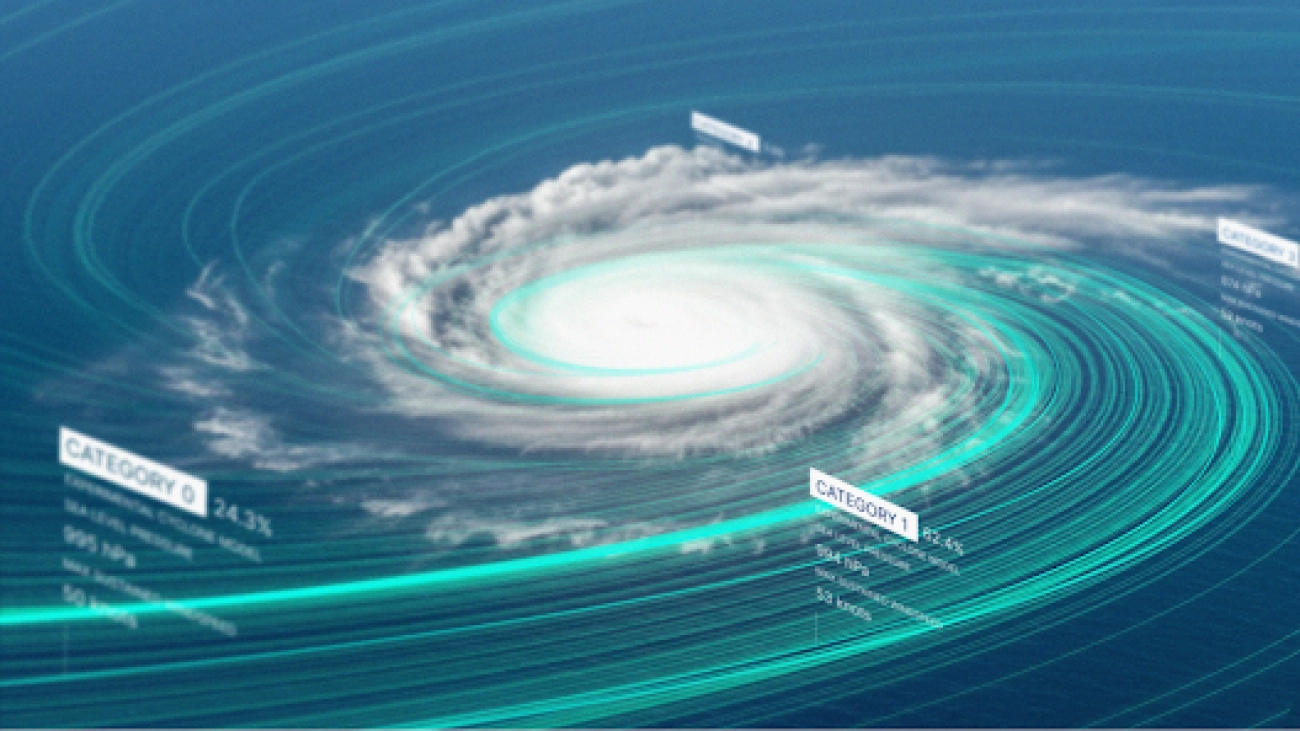

How we’re supporting better tropical cyclone prediction with AI

We’re launching Weather Lab, featuring our experimental cyclone predictions, and we’re partnering with the U.S. National Hurricane Center to support their forecasts and warnings this cyclone season.Read More

Advanced audio dialog and generation with Gemini 2.5

Gemini 2.5 has new capabilities in AI-powered audio dialog and generation.

SynthID Detector — a new portal to help identify AI-generated content

Learn about the new SynthID Detector portal we announced at I/O to help people understand how the content they see online was generated.