Today, customers across industries, including financial services, healthcare and life sciences, travel and hospitality, media and entertainment, telecommunications, software as a service (SaaS), and even proprietary model providers, are using large language models (LLMs) to build applications such as question answering (QnA) chatbots, search engines, and knowledge bases. These generative AI applications not only automate existing business processes but can also transform the experience of the customers who use them. With advances in LLMs such as Mixtral-8x7B Instruct, which is built on a mixture of experts (MoE) architecture, customers are continuously looking for ways to improve the performance and accuracy of their generative AI applications while being able to use a wider range of closed and open source models.

Several techniques are commonly used to improve the accuracy and performance of an LLM’s output, such as parameter-efficient fine-tuning (PEFT), reinforcement learning from human feedback (RLHF), and knowledge distillation. When building generative AI applications, however, you can use an alternative that dynamically incorporates external knowledge and lets you control the information used for generation without fine-tuning your existing foundation model. This is where Retrieval Augmented Generation (RAG) comes in: it offers generative AI applications an alternative to the more expensive and more involved fine-tuning approaches mentioned above. As your RAG applications grow more complex, you may encounter common challenges such as inaccurate retrieval, increasingly large and complex documents, and context overflow, all of which can significantly degrade the quality and reliability of generated answers.

This post discusses RAG patterns that improve response accuracy using LangChain, including the parent document retriever and the contextual compression technique, so that developers can improve their existing generative AI applications.

Solution overview

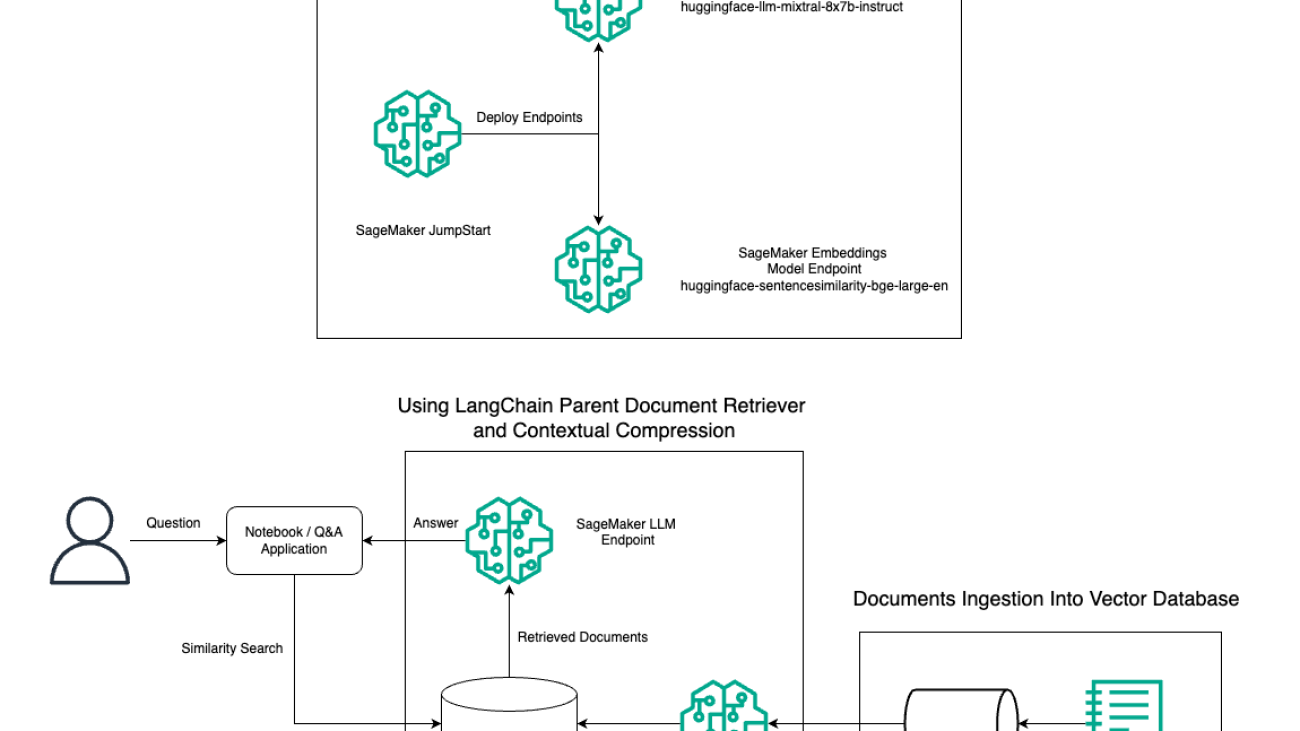

In this post, we demonstrate the use of Mixtral-8x7B Instruct text generation combined with the BGE Large En embedding model to efficiently construct a RAG QnA system on an Amazon SageMaker notebook using the parent document retriever tool and contextual compression technique. The following diagram illustrates the architecture of this solution.

You can deploy this solution with just a few clicks using Amazon SageMaker JumpStart, a fully managed platform that offers state-of-the-art foundation models for various use cases such as content writing, code generation, question answering, copywriting, summarization, classification, and information retrieval. It provides a collection of pre-trained models that you can deploy quickly and with ease, accelerating the development and deployment of machine learning (ML) applications. One of the key components of SageMaker JumpStart is the Model Hub, which offers a vast catalog of pre-trained models, such as Mixtral-8x7B, for a variety of tasks.

Mixtral-8x7B uses a sparse MoE architecture. Instead of a single feed-forward block, each layer contains several expert networks, and a learned router sends each token to only a few of them, so different experts can specialize in different kinds of input. In Mixtral-8x7B, each layer has eight experts and the router selects two of them per token, so only about 13 billion of the model’s roughly 47 billion parameters are used for any given token. This makes it practical to train and serve a model with more total parameters than a dense model with the same per-token compute.

One of the main advantages of the MoE architecture is its scalability. Because the workload is distributed across multiple experts, total model capacity can grow without a proportional increase in the compute spent per token, which lets MoE models often outperform dense models with a similar per-token compute budget. MoE models can also be more efficient during inference because only a subset of experts needs to be activated for each input token.
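To make the routing idea concrete, here is a minimal sketch of top-2 gating in plain Python. It assumes toy scalar "experts" and hand-picked router logits; in Mixtral, the router is a learned linear layer applied to each token in every layer and the experts are feed-forward blocks, so treat this as an illustration of sparse routing, not the model’s implementation. The function names are hypothetical.

```python
import math
from typing import Callable, List, Tuple


def softmax(xs: List[float]) -> List[float]:
    # Subtract the max before exponentiating for numerical stability
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def top_k_moe(
    x: float,
    router_logits: List[float],
    experts: List[Callable[[float], float]],
    k: int = 2,
) -> Tuple[float, List[int]]:
    """Send x to the k experts with the highest router logits and mix their outputs."""
    # Indices of the k largest logits, highest first
    chosen = sorted(range(len(router_logits)), key=lambda i: router_logits[i], reverse=True)[:k]
    # Softmax over the chosen logits only, so the k gate weights sum to 1
    weights = softmax([router_logits[i] for i in chosen])
    # Only the chosen experts are evaluated; the rest are never called
    output = sum(w * experts[i](x) for w, i in zip(weights, chosen))
    return output, chosen


# Eight toy experts; expert i simply multiplies its input by i + 1 (hypothetical)
experts = [lambda x, s=s: s * x for s in range(1, 9)]
router_logits = [0.1, 2.0, -1.0, 0.5, 3.0, 0.0, -0.5, 1.0]

out, used = top_k_moe(1.0, router_logits, experts, k=2)
print(used)           # [4, 1]
print(round(out, 4))  # 4.1932
```

Although all eight experts exist, only experts 4 and 1 run for this input, which is the source of the inference savings described above.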

For more information on Mixtral-8x7B Instruct on AWS, refer to Mixtral-8x7B is now available in Amazon SageMaker JumpStart. The Mixtral-8x7B model is released under the permissive Apache 2.0 license, which allows commercial use.

In this post, we discuss how you can use LangChain to create effective and more efficient RAG applications. LangChain is an open source Python library designed to build applications with LLMs. It provides a modular and flexible framework for combining LLMs with other components, such as knowledge bases, retrieval systems, and other AI tools, to create powerful and customizable applications.

We walk through constructing a RAG pipeline on SageMaker with Mixtral-8x7B. We run the notebook on an ml.t3.medium instance and deploy the Mixtral-8x7B Instruct and BGE Large En models through SageMaker JumpStart, which hosts each model behind a SageMaker real-time endpoint that the notebook calls. This setup allows for the exploration, experimentation, and optimization of advanced RAG techniques with LangChain. We also show how FAISS, an open source library for efficient vector similarity search, serves as the vector store that holds the document embeddings and retrieves the most relevant chunks for each query.

We perform a brief walkthrough of the SageMaker notebook. For more detailed and step-by-step instructions, refer to the Advanced RAG Patterns with Mixtral on SageMaker Jumpstart GitHub repo.

The need for advanced RAG patterns

Advanced RAG patterns are essential for improving how LLM applications process, understand, and generate human-like text over external knowledge. As documents grow in size and complexity, representing multiple facets of a document in a single embedding can cause a loss of specificity. Although it’s essential to capture the general essence of a document, it’s equally important to recognize and represent the varied sub-contexts within it, a challenge that comes up often with larger documents. Another challenge is that at ingestion time, you don’t know which specific queries your document store will need to answer. As a result, the information most relevant to a query can end up buried in a large amount of irrelevant text (context overflow). To mitigate these failures, you can extend the basic RAG architecture with advanced patterns, namely the parent document retriever and contextual compression, which reduce retrieval errors, improve answer quality, and help with complex questions.

With the techniques discussed in this post, you can address key challenges associated with external knowledge retrieval and integration, enabling your application to deliver more precise and contextually aware responses.

In the following sections, we explore how parent document retrievers and contextual compression can help you deal with some of the problems we’ve discussed.

Parent document retriever

In the previous section, we highlighted the challenges that RAG applications encounter with long documents. To address them, a parent document retriever treats incoming documents (or large chunks of them) as parent documents. These parents provide comprehensive context, but they aren’t embedded directly. Rather than compressing an entire document into a single embedding, the parent document retriever splits each parent into smaller child documents, each of which captures a distinct aspect or topic of the parent. Each child then gets its own embedding that captures its specific theme (see the following diagram). At query time, the retriever matches the query against the child embeddings and returns the parent documents those children belong to. This provides targeted yet broad-ranging search, giving LLMs a twofold advantage: the specificity of child embeddings yields precise and relevant retrieval, and passing the full parent documents to the LLM for response generation gives it richer, more complete context.
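The following is a minimal, dependency-free sketch of the parent/child idea. It substitutes a bag-of-words cosine similarity for a real embedding model such as BGE, splits on fixed word counts rather than with LangChain’s RecursiveCharacterTextSplitter, and uses hypothetical names and made-up text throughout. The point is the data flow: children are embedded and searched, but their parents are what gets returned.

```python
import math
import re
from collections import Counter
from typing import Dict, List, Tuple


def embed(text: str) -> Counter:
    # Stand-in for an embedding model: lowercase word counts
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def split_words(text: str, size: int) -> List[str]:
    # Fixed-size word windows (a simplification of a real text splitter)
    words = text.split()
    return [" ".join(words[i:i + size]) for i in range(0, len(words), size)]


def build_index(docs: List[str], parent_size: int, child_size: int):
    parents: Dict[str, str] = {}              # parent id -> full parent text
    children: List[Tuple[Counter, str]] = []  # (child embedding, parent id)
    for d, doc in enumerate(docs):
        for p, parent in enumerate(split_words(doc, parent_size)):
            pid = f"doc{d}-parent{p}"
            parents[pid] = parent
            # Only the small child chunks are embedded and searched
            for child in split_words(parent, child_size):
                children.append((embed(child), pid))
    return parents, children


def retrieve(query: str, parents: Dict[str, str], children, k: int = 4) -> List[str]:
    q = embed(query)
    top = sorted(children, key=lambda c: cosine(q, c[0]), reverse=True)[:k]
    # Map the best-matching children to their parents, dropping duplicates in rank order
    parent_ids = list(dict.fromkeys(pid for _, pid in top))
    return [parents[pid] for pid in parent_ids]


docs = [
    "AWS launched EC2 in 2006 with one instance size. Later it added monitoring, "
    "load balancing, and auto scaling. Amazon Business serves business customers. "
    "It offers selection, value, and convenience."
]
parents, children = build_index(docs, parent_size=18, child_size=6)
print(retrieve("When did EC2 launch?", parents, children, k=1))
```

The query matches only the first six-word child, yet the retriever returns the whole first parent, including the sentence about monitoring and load balancing that the matching child did not contain.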

Contextual compression

To address context overflow, you can use contextual compression to compress and filter the retrieved documents according to the query, so that only pertinent information is kept and processed. This works by combining a base retriever, which fetches an initial set of documents, with a document compressor, which refines them by paring down their content or dropping them entirely based on relevance, as illustrated in the following diagram. Because the contextual compression retriever passes only the essential parts of the retrieved information to the LLM, it reduces the amount of irrelevant text the model has to process, which can improve response quality, reduce the tokens sent to the final generation call, and streamline retrieval. In effect, it is a filter that tailors the retrieved information to the query at hand, which makes it a useful tool for developers optimizing their RAG applications for performance and user satisfaction.
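As an illustration of this two-stage design, the following sketch pairs a toy base retriever with a toy compressor that keeps only the sentences sharing a content word with the query and drops documents with no such sentence. The word-overlap test is an assumption standing in for the LLM call that LangChain’s LLMChainExtractor makes; the function names and documents are hypothetical.

```python
import re
from typing import List

STOPWORDS = {"a", "an", "and", "by", "did", "how", "in", "is", "of", "the", "to", "was"}


def terms(text: str) -> set:
    # Lowercase content words of a text, minus a few stopwords
    return {w for w in re.findall(r"[a-z0-9]+", text.lower()) if w not in STOPWORDS}


def base_retriever(query: str, corpus: List[str], k: int = 3) -> List[str]:
    # Stage 1: fetch whole documents (word overlap stands in for vector search)
    q = terms(query)
    return sorted(corpus, key=lambda doc: len(q & terms(doc)), reverse=True)[:k]


def extract_relevant(query: str, doc: str) -> str:
    # Stage 2: keep only the sentences that share a content word with the query
    q = terms(query)
    sentences = re.split(r"(?<=[.!?])\s+", doc.strip())
    return " ".join(s for s in sentences if q & terms(s))


def compression_retrieve(query: str, corpus: List[str], k: int = 3) -> List[str]:
    compressed = [extract_relevant(query, doc) for doc in base_retriever(query, corpus, k)]
    # A document with nothing relevant left is dropped entirely
    return [c for c in compressed if c]


# Made-up toy documents
corpus = [
    "During COVID-19, demand for deliveries rose. The office moved floors.",
    "EC2 launched in 2006. The logo was redesigned.",
    "Alexa is a voice assistant.",
]
print(compression_retrieve("How was Amazon impacted by COVID-19?", corpus))
# ['During COVID-19, demand for deliveries rose.']
```

The base retriever still returns three documents, but only one relevant sentence survives compression, which is the text that would reach the LLM prompt.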

Prerequisites

If you’re new to SageMaker, refer to the Amazon SageMaker Development Guide.

Before you get started with the solution, create an AWS account. When you create an AWS account, you begin with a single sign-in identity that has complete access to all the AWS services and resources in the account. This identity is called the AWS account root user.

Signing in to the AWS Management Console using the email address and password that you used to create the account gives you complete access to all the AWS resources in your account. We strongly recommend that you do not use the root user for everyday tasks, even the administrative ones.

Instead, adhere to the security best practices in AWS Identity and Access Management (IAM), and create an administrative user and group. Then securely lock away the root user credentials and use them to perform only a few account and service management tasks.

The Mixtral-8x7B model requires an ml.g5.48xlarge instance. SageMaker JumpStart provides a simplified way to access and deploy over 100 different open source and third-party foundation models. To launch an endpoint that hosts Mixtral-8x7B from SageMaker JumpStart, you may need to request a service quota increase for the ml.g5.48xlarge instance for endpoint usage. You can request service quota increases through the console, the AWS Command Line Interface (AWS CLI), or the API.
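If you prefer the API route, the following sketch uses the boto3 Service Quotas client (the list_service_quotas paginator and request_service_quota_increase) to look up the endpoint quota by name and request an increase. It assumes the quota is named "ml.g5.48xlarge for endpoint usage" (verify the exact name in the Service Quotas console for your Region) and that your credentials are allowed to call Service Quotas; the helper names are hypothetical.

```python
from typing import Iterable, Optional

# Assumed quota name; confirm the exact name in the Service Quotas console
QUOTA_NAME = "ml.g5.48xlarge for endpoint usage"


def find_quota_code(quotas: Iterable[dict], name: str) -> Optional[str]:
    """Return the QuotaCode of the quota whose QuotaName equals name, or None."""
    for quota in quotas:
        if quota.get("QuotaName") == name:
            return quota["QuotaCode"]
    return None


def request_endpoint_quota(desired_value: float = 1.0, region: Optional[str] = None) -> dict:
    """Request a new total value for the endpoint quota (needs AWS credentials)."""
    import boto3  # imported here so find_quota_code can be used without boto3

    client = boto3.client("service-quotas", region_name=region)
    paginator = client.get_paginator("list_service_quotas")
    quotas = (q for page in paginator.paginate(ServiceCode="sagemaker") for q in page["Quotas"])
    code = find_quota_code(quotas, QUOTA_NAME)
    if code is None:
        raise ValueError(f"No SageMaker quota named {QUOTA_NAME!r} in this Region")
    response = client.request_service_quota_increase(
        ServiceCode="sagemaker", QuotaCode=code, DesiredValue=desired_value
    )
    return response["RequestedQuota"]
```

From a session with AWS credentials, calling request_endpoint_quota(desired_value=1.0) files the request. DesiredValue is the new total value of the quota rather than an increment, and the returned dictionary includes the request’s status.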

Set up a SageMaker notebook instance and install dependencies

To get started, create a SageMaker notebook instance and install the required dependencies. Refer to the GitHub repo to ensure a successful setup. After you set up the notebook instance, you can deploy the model.

You can also run the notebook locally in your preferred integrated development environment (IDE). Make sure that you have JupyterLab installed and AWS credentials configured for the account where the endpoints will be deployed.

Deploy the model

Deploy the Mixtral-8x7B Instruct model on SageMaker JumpStart:

```python
# Import the JumpStartModel class from the SageMaker JumpStart library
from sagemaker.jumpstart.model import JumpStartModel

# Specify the model ID for the Hugging Face Mixtral-8x7B Instruct model
model_id = "huggingface-llm-mixtral-8x7b-instruct"

model = JumpStartModel(model_id=model_id)

# Deploy the model to a SageMaker real-time endpoint
llm_predictor = model.deploy()
```

Deploy the BGE Large En embedding model on SageMaker JumpStart:

```python
# Specify the model ID for the Hugging Face BGE Large En embedding model
model_id = "huggingface-sentencesimilarity-bge-large-en"

text_embedding_model = JumpStartModel(model_id=model_id)

embedding_predictor = text_embedding_model.deploy()
```

Set up LangChain

After importing all the necessary libraries and deploying the Mixtral-8x7B model and BGE Large En embeddings model, you can now set up LangChain. For step-by-step instructions, refer to the GitHub repo.
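LangChain talks to SageMaker endpoints through its SagemakerEndpoint and SagemakerEndpointEmbeddings classes, each of which takes a content handler that serializes requests and parses responses. The following sketch shows only that serialization logic, as plain functions, so it can be read and tested without AWS access. The payload shapes are assumptions: a Hugging Face TGI-style format for Mixtral ({"inputs": ..., "parameters": ...} in, [{"generated_text": ...}] out) and a {"text_inputs": [...]} in, {"embedding": [...]} out format for the JumpStart BGE endpoint. Check both against the GitHub repo before relying on them.

```python
import json
from typing import Dict, List


def llm_request(prompt: str, parameters: Dict) -> bytes:
    # Assumed TGI-style request body for the Mixtral endpoint
    return json.dumps({"inputs": prompt, "parameters": parameters}).encode("utf-8")


def llm_response(body: bytes) -> str:
    # Assumed TGI-style response body: [{"generated_text": "..."}]
    return json.loads(body.decode("utf-8"))[0]["generated_text"]


def embedding_request(texts: List[str]) -> bytes:
    # Assumed request body for the JumpStart BGE Large En endpoint
    return json.dumps({"text_inputs": texts}).encode("utf-8")


def embedding_response(body: bytes) -> List[List[float]]:
    # Assumed response body: {"embedding": [[...], ...]}, one vector per input text
    return json.loads(body.decode("utf-8"))["embedding"]


# Illustrative generation parameters (not tuned values from this post)
params = {"max_new_tokens": 512, "temperature": 0.1, "top_p": 0.9}
print(llm_request("<s>[INST] How has AWS evolved? [/INST]", params))
print(llm_response(b'[{"generated_text": "example answer"}]'))  # example answer
```

In a LangChain setup, these functions would sit inside the transform_input and transform_output methods of LLMContentHandler and EmbeddingsContentHandler subclasses (transform_output receives a stream, so it would read the body first). The handlers are then passed as content_handler to SagemakerEndpoint and SagemakerEndpointEmbeddings along with the endpoint names from llm_predictor.endpoint_name and embedding_predictor.endpoint_name.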

Data preparation

In this post, we use several years of Amazon’s Letters to Shareholders as a text corpus to perform QnA on. For more detailed steps to prepare the data, refer to the GitHub repo.
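Preparing a corpus like this usually means splitting the letters into overlapping chunks before embedding them. As a rough illustration of what the chunk_size and chunk_overlap settings used later in this post control, here is a plain character-window chunker. It is a simplified assumption, not LangChain’s RecursiveCharacterTextSplitter, which additionally tries to break on paragraph, line, and word boundaries; the function name is hypothetical.

```python
from typing import List


def chunk_text(text: str, chunk_size: int, chunk_overlap: int) -> List[str]:
    """Split text into chunk_size-character windows, each overlapping the previous one."""
    if not 0 <= chunk_overlap < chunk_size:
        raise ValueError("chunk_overlap must be non-negative and smaller than chunk_size")
    step = chunk_size - chunk_overlap
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        # Stop once a window reaches the end of the text
        if start + chunk_size >= len(text):
            break
    return chunks


print(chunk_text("abcdefghij", chunk_size=4, chunk_overlap=1))  # ['abcd', 'defg', 'ghij']
```

The overlap repeats a little text at each boundary so that a sentence cut by one window still appears intact in a neighboring chunk.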

Question answering

Once the data is prepared, you can use LangChain’s VectorStoreIndexWrapper (wrapper_store_faiss in the following code), which wraps the vector store and takes the LLM as input. This wrapper performs the following steps:

- Take the input question.

- Create a question embedding.

- Fetch relevant documents.

- Incorporate the documents and the question into a prompt.

- Invoke the model with the prompt and generate the answer in a readable manner.
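Those five steps can be sketched without any AWS or LangChain dependencies. In the following sketch, the word-count embedding, the toy corpus, and the echoing "LLM" are hypothetical stand-ins for BGE Large En, the FAISS index, and the Mixtral endpoint; only the control flow is meant to mirror what the wrapper does.

```python
import math
import re
from collections import Counter
from typing import Callable, List, Tuple


def embed(text: str) -> Counter:
    # Stand-in embedding: lowercase word counts
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def build_store(corpus: List[str]) -> List[Tuple[Counter, str]]:
    # Embed every document once, as a vector store would at ingestion time
    return [(embed(doc), doc) for doc in corpus]


def answer(question: str, store, llm: Callable[[str], str], k: int = 2) -> str:
    # Steps 1-2: take the input question and create its embedding
    q_vec = embed(question)
    # Step 3: fetch the k most similar documents
    ranked = sorted(store, key=lambda item: cosine(q_vec, item[0]), reverse=True)
    context = "\n".join(doc for _, doc in ranked[:k])
    # Step 4: combine the documents and the question into a prompt
    prompt = f"<s>[INST] Context:\n{context}\n\nQuestion: {question} [/INST]"
    # Step 5: invoke the model with the prompt
    return llm(prompt)


# Made-up corpus and an "LLM" that simply echoes its prompt
store = build_store(["EC2 launched in 2006.", "Alexa is a voice assistant.", "S3 stores objects."])
print(answer("When did EC2 launch?", store, llm=lambda prompt: prompt, k=1))
```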

Now that the vector store is in place, you can start asking questions:

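```python
from langchain.prompts import PromptTemplate

# Mixtral Instruct format: the instruction goes between [INST] and [/INST]
prompt_template = """<s>[INST]
{query}
[/INST]"""

PROMPT = PromptTemplate(
    template=prompt_template, input_variables=["query"]
)

query = "How has AWS evolved?"

answer = wrapper_store_faiss.query(question=PROMPT.format(query=query), llm=llm)

print(answer)
```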

AWS, or Amazon Web Services, has evolved significantly since its initial launch in 2006. It started as a feature-poor service, offering only one instance size, in one data center, in one region of the world, with Linux operating system instances only. There was no monitoring, load balancing, auto-scaling, or persistent storage at the time. However, AWS had a successful launch and has since grown into a multi-billion-dollar service.

Over the years, AWS has added numerous features and services, with over 3,300 new ones launched in 2022 alone. They have expanded their offerings to include Windows, monitoring, load balancing, auto-scaling, and persistent storage. AWS has also made significant investments in long-term inventions that have changed what's possible in technology infrastructure.

One example of this is their investment in chip development. AWS has also seen a robust new customer pipeline and active migrations, with many companies opting to move to AWS for the agility, innovation, cost-efficiency, and security benefits it offers. AWS has transformed how customers, from start-ups to multinational companies to public sector organizations, manage their technology infrastructure.

Regular retriever chain

In the preceding scenario, we explored the quick and straightforward way to get a context-aware answer to your question. Now let’s look at a more customizable option with the help of RetrievalQA, where you can customize how the documents fetched should be added to the prompt using the chain_type parameter. Also, in order to control how many relevant documents should be retrieved, you can change the k parameter in the following code to see different outputs. In many scenarios, you might want to know which source documents the LLM used to generate the answer. You can get those documents in the output using return_source_documents, which returns the documents that are added to the context of the LLM prompt. RetrievalQA also allows you to provide a custom prompt template that can be specific to the model.

```python
from langchain.chains import RetrievalQA
from langchain.prompts import PromptTemplate

prompt_template = """<s>[INST]
Use the following pieces of context to provide a concise answer to the question at the end. If you don't know the answer, just say that you don't know, don't try to make up an answer.

{context}

Question: {question}
[/INST]"""

PROMPT = PromptTemplate(
    template=prompt_template, input_variables=["context", "question"]
)

qa = RetrievalQA.from_chain_type(
    llm=llm,
    chain_type="stuff",
    # Retrieve the k=3 most similar chunks for each query
    retriever=vectorstore_faiss.as_retriever(
        search_type="similarity", search_kwargs={"k": 3}
    ),
    return_source_documents=True,
    chain_type_kwargs={"prompt": PROMPT}
)
```
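With chain_type="stuff", all k retrieved documents are inserted ("stuffed") into the {context} slot of a single prompt, and with return_source_documents=True the chain returns those same documents alongside the answer. The following sketch imitates that behavior in plain Python to make the data flow visible. The function name, the "\n\n" document separator, and the "result" and "source_documents" keys (chosen to mirror RetrievalQA’s output) are assumptions, and the documents and LLM are stubs.

```python
from typing import Callable, Dict, List

PROMPT_TEMPLATE = """<s>[INST]
Use the following pieces of context to provide a concise answer to the question at the end. If you don't know the answer, just say that you don't know, don't try to make up an answer.

{context}

Question: {question}
[/INST]"""


def stuff_and_answer(question: str, retrieved: List[str], llm: Callable[[str], str]) -> Dict[str, object]:
    # "Stuff": concatenate all retrieved documents into one context string
    context = "\n\n".join(retrieved)
    prompt = PROMPT_TEMPLATE.format(context=context, question=question)
    # Return the documents that were placed in the prompt alongside the answer
    return {"result": llm(prompt), "source_documents": retrieved}


# Placeholder texts standing in for the k=3 documents returned by the retriever
docs = ["Doc one text.", "Doc two text.", "Doc three text."]
out = stuff_and_answer("How did AWS evolve?", docs, llm=lambda p: f"{p.count('Doc')} documents in prompt")
print(out["result"])  # 3 documents in prompt
```

Stuffing is the simplest strategy, but it is bounded by the model’s context window. RetrievalQA also accepts chain types such as map_reduce and refine, which spread the documents over several LLM calls.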

Let’s ask a question:

```python
query = "How did AWS evolve?"

result = qa({"query": query})

print(result['result'])
```

AWS (Amazon Web Services) evolved from an initially unprofitable investment to an $85B annual revenue run rate business with strong profitability, offering a wide range of services and features, and becoming a significant part of Amazon's portfolio. Despite facing skepticism and short-term headwinds, AWS continued to innovate, attract new customers, and migrate active customers, offering benefits such as agility, innovation, cost-efficiency, and security. AWS also expanded its long-term investments, including chip development, to provide new capabilities and change what's possible for its customers.

Parent document retriever chain

Let’s look at a more advanced RAG option with the help of ParentDocumentRetriever. When working with document retrieval, you may encounter a trade-off between storing small chunks of a document, which yield more accurate embeddings, and storing larger documents, which preserve more context. The parent document retriever strikes that balance by embedding and searching small chunks while returning the larger parent documents they came from.

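We use a parent_splitter to divide the original documents into larger chunks called parent documents, and a child_splitter to split each parent document into smaller child documents. The ParentDocumentRetriever also needs a vector store for the child chunks and a document store for the parents:

```python
import faiss
from langchain.retrievers import ParentDocumentRetriever
from langchain.storage import InMemoryStore
from langchain_community.docstore.in_memory import InMemoryDocstore

# This text splitter is used to create the parent documents
parent_splitter = RecursiveCharacterTextSplitter(chunk_size=2000)

# This text splitter is used to create the child documents
# It should create documents smaller than the parent
child_splitter = RecursiveCharacterTextSplitter(chunk_size=400)

# An empty FAISS vector store to index the child chunks;
# the index dimension is taken from one embedding call
dim = len(sagemaker_embeddings.embed_query("hello world"))
vectorstore_faiss = FAISS(
    embedding_function=sagemaker_embeddings,
    index=faiss.IndexFlatL2(dim),
    docstore=InMemoryDocstore(),
    index_to_docstore_id={},
)

# The storage layer for the parent documents
store = InMemoryStore()

retriever = ParentDocumentRetriever(
    vectorstore=vectorstore_faiss,
    docstore=store,
    child_splitter=child_splitter,
    parent_splitter=parent_splitter,
)

# Split into parents and children, embed the children, and store the parents
retriever.add_documents(documents, ids=None)
```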

The child documents are then indexed in a vector store using embeddings. This enables efficient retrieval of relevant child documents based on similarity. To retrieve relevant information, the parent document retriever first fetches the child documents from the vector store. It then looks up the parent IDs for those child documents and returns the corresponding larger parent documents.

```python
qa = RetrievalQA.from_chain_type(
    llm=llm,
    chain_type="stuff",
    retriever=retriever,
    return_source_documents=True,
    chain_type_kwargs={"prompt": PROMPT}
)
```

Let’s ask a question:

```python
query = "How did AWS evolve?"

result = qa({"query": query})

print(result['result'])
```

AWS (Amazon Web Services) started with a feature-poor initial launch of the Elastic Compute Cloud (EC2) service in 2006, providing only one instance size, in one data center, in one region of the world, with Linux operating system instances only, and without many key features like monitoring, load balancing, auto-scaling, or persistent storage. However, AWS's success allowed them to quickly iterate and add the missing capabilities, eventually expanding to offer various flavors, sizes, and optimizations of compute, storage, and networking, as well as developing their own chips (Graviton) to push price and performance further. AWS's iterative innovation process required significant investments in financial and people resources over 20 years, often well in advance of when it would pay out, to meet customer needs and improve long-term customer experiences, loyalty, and returns for shareholders.

Contextual compression chain

Let’s look at another advanced RAG option called contextual compression. One challenge with retrieval is that you usually don’t know the specific queries your document storage system will face when you ingest data into it. This means that the information most relevant to a query may be buried in a document with a lot of irrelevant text. Passing that full document through your application can lead to more expensive LLM calls and poorer responses.

The contextual compression retriever addresses the challenge of retrieving relevant information from a document storage system, where the pertinent data may be buried within documents containing a lot of text. By compressing and filtering the retrieved documents based on the given query context, only the most relevant information is returned.

To use the contextual compression retriever, you’ll need:

- A base retriever – This is the initial retriever that fetches documents from the storage system based on the query

- A document compressor – This component takes the initially retrieved documents and shortens them by reducing the contents of individual documents or dropping irrelevant documents altogether, using the query context to determine relevance

Adding contextual compression with an LLM chain extractor

First, wrap your base retriever with a ContextualCompressionRetriever. You’ll add an LLMChainExtractor, which will iterate over the initially returned documents and extract from each only the content that is relevant to the query.

```python
from langchain.retrievers import ContextualCompressionRetriever
from langchain.retrievers.document_compressors import LLMChainExtractor

text_splitter = RecursiveCharacterTextSplitter(
    # Split into 1,000-character chunks with 100 characters of overlap
    chunk_size=1000,
    chunk_overlap=100,
)

docs = text_splitter.split_documents(documents)

retriever = FAISS.from_documents(
    docs,
    sagemaker_embeddings,
).as_retriever()

# The extractor asks the LLM to keep only the query-relevant parts of each document
compressor = LLMChainExtractor.from_llm(llm)

compression_retriever = ContextualCompressionRetriever(
    base_compressor=compressor, base_retriever=retriever
)

compressed_docs = compression_retriever.get_relevant_documents(
    "How was Amazon impacted by COVID-19?"
)
```

Initialize the chain using the ContextualCompressionRetriever with an LLMChainExtractor and pass the prompt in via the chain_type_kwargs argument.

```python
qa = RetrievalQA.from_chain_type(
    llm=llm,
    chain_type="stuff",
    retriever=compression_retriever,
    return_source_documents=True,
    chain_type_kwargs={"prompt": PROMPT}
)
```

Let’s ask a question:

```python
query = "How did AWS evolve?"

result = qa({"query": query})

print(result['result'])
```

AWS evolved by starting as a small project inside Amazon, requiring significant capital investment and facing skepticism from both inside and outside the company. However, AWS had a head start on potential competitors and believed in the value it could bring to customers and Amazon. AWS made a long-term commitment to continue investing, resulting in over 3,300 new features and services launched in 2022. AWS has transformed how customers manage their technology infrastructure and has become an $85B annual revenue run rate business with strong profitability. AWS has also continuously improved its offerings, such as enhancing EC2 with additional features and services after its initial launch.

Filter documents with an LLM chain filter

The LLMChainFilter is a slightly simpler but more robust compressor that uses an LLM chain to decide which of the initially retrieved documents to filter out and which ones to return, without manipulating the document contents:

```python
from langchain.retrievers.document_compressors import LLMChainFilter

# The filter asks the LLM whether each document is relevant and keeps or drops it whole
_filter = LLMChainFilter.from_llm(llm)

compression_retriever = ContextualCompressionRetriever(
    base_compressor=_filter, base_retriever=retriever
)

compressed_docs = compression_retriever.get_relevant_documents(
    "How was Amazon impacted by COVID-19?"
)

print(compressed_docs)
```
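The keep-or-drop behavior described above differs from the extractor in one respect: each retrieved document comes back whole or not at all. The following sketch imitates it with a hypothetical judge function; the word-overlap check is an assumption standing in for the yes/no relevance question that LLMChainFilter asks the LLM, and the documents are made up.

```python
import re
from typing import Callable, List


def terms(text: str) -> set:
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def overlap_judge(query: str, doc: str) -> bool:
    # Stand-in for an LLM answering "is this document relevant to the query?"
    stop = {"how", "was", "by", "the", "a", "of"}
    return bool((terms(query) - stop) & terms(doc))


def filter_documents(query: str, docs: List[str], judge: Callable[[str, str], bool]) -> List[str]:
    # Keep or drop each document as a whole; contents are never rewritten
    return [d for d in docs if judge(query, d)]


docs = [
    "During COVID-19, demand for deliveries rose. The office moved floors.",
    "EC2 launched in 2006.",
]
print(filter_documents("How was Amazon impacted by COVID-19?", docs, overlap_judge))
```

Unlike the extractor, the first document is returned unchanged, including its irrelevant second sentence, while the second document is dropped.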

Initialize the chain using the ContextualCompressionRetriever with an LLMChainFilter and pass the prompt in via the chain_type_kwargs argument.

```python
qa = RetrievalQA.from_chain_type(
    llm=llm,
    chain_type="stuff",
    retriever=compression_retriever,
    return_source_documents=True,
    chain_type_kwargs={"prompt": PROMPT}
)
```

Let’s ask a question:

```python
query = "How did AWS evolve?"

result = qa({"query": query})

print(result['result'])
```

AWS (Amazon Web Services) evolved by initially launching feature-poor but iterating quickly based on customer feedback to add necessary capabilities. This approach allowed AWS to launch EC2 in 2006 with limited features and then continuously add new functionalities, such as additional instance sizes, data centers, regions, operating system options, monitoring tools, load balancing, auto-scaling, and persistent storage. Over time, AWS transformed from a feature-poor service to a multi-billion-dollar business by focusing on customer needs, agility, innovation, cost-efficiency, and security. AWS now has an $85B annual revenue run rate and offers over 3,300 new features and services each year, catering to a wide range of customers from start-ups to multinational companies and public sector organizations.

Compare results

The following table compares results from different queries based on technique.

| Technique | Query 1: How did AWS evolve? | Query 2: Why is Amazon successful? | Comparison |
| --- | --- | --- | --- |
| Regular Retriever Chain Output | AWS (Amazon Web Services) evolved from an initially unprofitable investment to an $85B annual revenue run rate business with strong profitability, offering a wide range of services and features, and becoming a significant part of Amazon’s portfolio. Despite facing skepticism and short-term headwinds, AWS continued to innovate, attract new customers, and migrate active customers, offering benefits such as agility, innovation, cost-efficiency, and security. AWS also expanded its long-term investments, including chip development, to provide new capabilities and change what’s possible for its customers. | Amazon is successful due to its continuous innovation and expansion into new areas such as technology infrastructure services, digital reading devices, voice-driven personal assistants, and new business models like the third-party marketplace. Its ability to scale operations quickly, as seen in the rapid expansion of its fulfillment and transportation networks, also contributes to its success. Additionally, Amazon’s focus on optimization and efficiency gains in its processes has resulted in productivity improvements and cost reductions. The example of Amazon Business highlights the company’s capability to leverage its e-commerce and logistics strengths in different sectors. | Based on the responses from the regular retriever chain, we notice that although it provides long answers, it suffers from context overflow and fails to mention any significant details from the corpus with regard to the query provided. The regular retrieval chain is not able to capture the nuances with depth or contextual insight, potentially missing critical aspects of the document. |
| Parent Document Retriever Output | AWS (Amazon Web Services) started with a feature-poor initial launch of the Elastic Compute Cloud (EC2) service in 2006, providing only one instance size, in one data center, in one region of the world, with Linux operating system instances only, and without many key features like monitoring, load balancing, auto-scaling, or persistent storage. However, AWS’s success allowed them to quickly iterate and add the missing capabilities, eventually expanding to offer various flavors, sizes, and optimizations of compute, storage, and networking, as well as developing their own chips (Graviton) to push price and performance further. AWS’s iterative innovation process required significant investments in financial and people resources over 20 years, often well in advance of when it would pay out, to meet customer needs and improve long-term customer experiences, loyalty, and returns for shareholders. | Amazon is successful due to its ability to constantly innovate, adapt to changing market conditions, and meet customer needs in various market segments. This is evident in the success of Amazon Business, which has grown to drive roughly $35B in annualized gross sales by delivering selection, value, and convenience to business customers. Amazon’s investments in ecommerce and logistics capabilities have also enabled the creation of services like Buy with Prime, which helps merchants with direct-to-consumer websites drive conversion from views to purchases. | The parent document retriever delves deeper into the specifics of AWS’s growth strategy, including the iterative process of adding new features based on customer feedback and the detailed journey from a feature-poor initial launch to a dominant market position, while providing a context-rich response. Responses cover a wide range of aspects, from technical innovations and market strategy to organizational efficiency and customer focus, providing a holistic view of the factors contributing to success along with examples. This can be attributed to the parent document retriever’s targeted yet broad-ranging search capabilities. |
| LLM Chain Extractor: Contextual Compression Output | AWS evolved by starting as a small project inside Amazon, requiring significant capital investment and facing skepticism from both inside and outside the company. However, AWS had a head start on potential competitors and believed in the value it could bring to customers and Amazon. AWS made a long-term commitment to continue investing, resulting in over 3,300 new features and services launched in 2022. AWS has transformed how customers manage their technology infrastructure and has become an $85B annual revenue run rate business with strong profitability. AWS has also continuously improved its offerings, such as enhancing EC2 with additional features and services after its initial launch. | Based on the provided context, Amazon’s success can be attributed to its strategic expansion from a book-selling platform to a global marketplace with a vibrant third-party seller ecosystem, early investment in AWS, innovation in introducing the Kindle and Alexa, and substantial growth in annual revenue from 2019 to 2022. This growth led to the expansion of the fulfillment center footprint, creation of a last-mile transportation network, and building a new sortation center network, which were optimized for productivity and cost reductions. | The LLM chain extractor maintains a balance between covering key points comprehensively and avoiding unnecessary depth. It dynamically adjusts to the query’s context, so the output is directly relevant and comprehensive. |
| LLM Chain Filter: Contextual Compression Output | AWS (Amazon Web Services) evolved by initially launching feature-poor but iterating quickly based on customer feedback to add necessary capabilities. This approach allowed AWS to launch EC2 in 2006 with limited features and then continuously add new functionalities, such as additional instance sizes, data centers, regions, operating system options, monitoring tools, load balancing, auto-scaling, and persistent storage. Over time, AWS transformed from a feature-poor service to a multi-billion-dollar business by focusing on customer needs, agility, innovation, cost-efficiency, and security. AWS now has an $85B annual revenue run rate and offers over 3,300 new features and services each year, catering to a wide range of customers from start-ups to multinational companies and public sector organizations. | Amazon is successful due to its innovative business models, continuous technological advancements, and strategic organizational changes. The company has consistently disrupted traditional industries by introducing new ideas, such as an ecommerce platform for various products and services, a third-party marketplace, cloud infrastructure services (AWS), the Kindle e-reader, and the Alexa voice-driven personal assistant. Additionally, Amazon has made structural changes to improve its efficiency, such as reorganizing its US fulfillment network to decrease costs and delivery times, further contributing to its success. | Similar to the LLM chain extractor, the LLM chain filter makes sure that although the key points are covered, the output is efficient for customers looking for concise and contextual answers. |

Comparing these techniques shows that, for questions such as how AWS grew from a simple service into a complex, multi-billion-dollar business, or what explains Amazon’s strategic successes, the regular retriever chain lacks the precision of the more sophisticated techniques and returns less targeted information. Although the differences among the advanced techniques are small for these two queries, all of them produced more specific and informative answers than the regular retriever chain.

For customers in industries such as healthcare, telecommunications, and financial services who want to implement RAG in their applications, the regular retriever chain is less suited to their needs than the parent document retriever and contextual compression techniques, because it is weaker at providing precision, avoiding redundancy, and compressing information effectively. The advanced techniques distill large amounts of retrieved text into concentrated, relevant context, which can also help improve price-performance.
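The parent document retriever pattern can be sketched in a few lines: small child chunks are indexed for precise matching, but the retriever returns the larger parent documents those chunks came from, so the LLM still receives enough surrounding context. This is a toy illustration under stated assumptions, not LangChain's `ParentDocumentRetriever`. Word overlap stands in for the BGE embeddings and vector search, and the `child_size` value, the document ids, and the sample text are all hypothetical.

```python
import re
from typing import Dict, List, Set, Tuple


def words(text: str) -> Set[str]:
    """Lowercased alphanumeric words; a toy stand-in for embeddings."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def split_into_children(text: str, child_size: int) -> List[str]:
    """Split a parent document into child chunks of at most child_size words."""
    tokens = text.split()
    return [" ".join(tokens[i:i + child_size])
            for i in range(0, len(tokens), child_size)]


def build_child_index(parents: Dict[str, str],
                      child_size: int) -> List[Tuple[str, str]]:
    """Index the small child chunks, each tagged with its parent's id."""
    return [(parent_id, child)
            for parent_id, text in parents.items()
            for child in split_into_children(text, child_size)]


def retrieve_parents(query: str, parents: Dict[str, str],
                     index: List[Tuple[str, str]], k: int = 2) -> List[str]:
    """Match the query against child chunks, then return their unique parents."""
    q = words(query)
    scored = [(len(q & words(child)), parent_id) for parent_id, child in index]
    scored = [item for item in scored if item[0] > 0]  # ignore non-matching chunks
    scored.sort(key=lambda item: item[0], reverse=True)  # stable: ties keep index order
    results, seen = [], set()
    for _score, parent_id in scored[:k]:
        if parent_id not in seen:  # several children may share one parent
            seen.add(parent_id)
            results.append(parents[parent_id])
    return results


# Hypothetical parent documents and child size, for illustration only
parents = {
    "aws": "AWS launched EC2 in 2006 with few features. Customer feedback drove "
           "new instance sizes, regions, load balancing, and auto-scaling.",
    "devices": "Amazon introduced the Kindle e-reader and later the Alexa voice assistant.",
}
index = build_child_index(parents, child_size=6)

print(retrieve_parents("Which features did AWS add, such as auto-scaling?", parents, index))
print(retrieve_parents("Tell me about the Alexa voice assistant", parents, index))
```

For the first query, the highest-scoring chunk is the short "auto-scaling." fragment, yet the retriever returns the whole AWS parent document. A small child size favors retrieval precision, and returning the parent restores the context that small chunks lose.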

Clean up

When you're done running the notebook, delete the resources you created to avoid incurring charges for resources that are still running:

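# Delete resources
# llm_predictor and embedding_predictor are the SageMaker predictors created when
# the LLM and the embedding model were deployed earlier in the notebook.
llm_predictor.delete_model()        # remove the LLM's SageMaker model
llm_predictor.delete_endpoint()     # remove the LLM's endpoint (and, by default, its endpoint config)

embedding_predictor.delete_model()      # remove the embedding model
embedding_predictor.delete_endpoint()   # remove the embedding model's endpoint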

Conclusion

In this post, we presented a solution that allows you to implement the parent document retriever and contextual compression chain techniques to enhance the ability of LLMs to process and generate information. We tested out these advanced RAG techniques with the Mixtral-8x7B Instruct and BGE Large En models available with SageMaker JumpStart. We also explored using persistent storage for embeddings and document chunks and integration with enterprise data stores.

The techniques we applied refine the way LLMs access and incorporate external knowledge, and they can substantially improve the quality, relevance, and efficiency of the models' outputs. By combining retrieval from large text corpora with language generation, these advanced RAG techniques help LLMs produce more factual, coherent, and context-appropriate responses across a range of natural language processing tasks.

SageMaker JumpStart is at the center of this solution. With SageMaker JumpStart, you gain access to an extensive assortment of open and closed source models, streamlining the process of getting started with ML and enabling rapid experimentation and deployment. To get started deploying this solution, navigate to the notebook in the GitHub repo.

About the Authors

Niithiyn Vijeaswaran is a Solutions Architect at AWS. His area of focus is generative AI and AWS AI Accelerators. He holds a Bachelor’s degree in Computer Science and Bioinformatics. Niithiyn works closely with the Generative AI GTM team to enable AWS customers on multiple fronts and accelerate their adoption of generative AI. He’s an avid fan of the Dallas Mavericks and enjoys collecting sneakers.

Sebastian Bustillo is a Solutions Architect at AWS. He focuses on AI/ML technologies with a profound passion for generative AI and compute accelerators. At AWS, he helps customers unlock business value through generative AI. When he’s not at work, he enjoys brewing a perfect cup of specialty coffee and exploring the world with his wife.

Armando Diaz is a Solutions Architect at AWS. He focuses on generative AI, AI/ML, and Data Analytics. At AWS, Armando helps customers integrate cutting-edge generative AI capabilities into their systems, fostering innovation and competitive advantage. When he's not at work, he enjoys spending time with his wife and family, hiking, and traveling the world.

Dr. Farooq Sabir is a Senior Artificial Intelligence and Machine Learning Specialist Solutions Architect at AWS. He holds PhD and MS degrees in Electrical Engineering from the University of Texas at Austin and an MS in Computer Science from Georgia Institute of Technology. He has over 15 years of work experience and also likes to teach and mentor college students. At AWS, he helps customers formulate and solve their business problems in data science, machine learning, computer vision, artificial intelligence, numerical optimization, and related domains. Based in Dallas, Texas, he and his family love to travel and go on long road trips.

Marco Punio is a Solutions Architect focused on generative AI strategy, applied AI solutions and conducting research to help customers hyper-scale on AWS. Marco is a digital native cloud advisor with experience in the FinTech, Healthcare & Life Sciences, Software-as-a-service, and most recently, in Telecommunications industries. He is a qualified technologist with a passion for machine learning, artificial intelligence, and mergers & acquisitions. Marco is based in Seattle, WA and enjoys writing, reading, exercising, and building applications in his free time.

AJ Dhimine is a Solutions Architect at AWS. He specializes in generative AI, serverless computing, and data analytics. He is an active member and mentor in the Machine Learning Technical Field Community and has published several scientific papers on various AI/ML topics. He works with customers, ranging from start-ups to enterprises, to develop AWSome generative AI solutions. He is particularly passionate about using large language models for advanced data analytics and exploring practical applications that address real-world challenges. Outside of work, AJ enjoys traveling; he has visited 53 countries so far and aims to visit every country in the world.
