Discovering novel algorithms with AlphaTensor

In our paper, published today in Nature, we introduce AlphaTensor, the first artificial intelligence (AI) system for discovering novel, efficient, and provably correct algorithms for fundamental tasks such as matrix multiplication. This sheds light on a 50-year-old open question in mathematics about finding the fastest way to multiply two matrices. This paper is a stepping stone in DeepMind’s mission to advance science and unlock the most fundamental problems using AI. Our system, AlphaTensor, builds upon AlphaZero, an agent that has shown superhuman performance on board games, like chess, Go and shogi, and this work shows the journey of AlphaZero from playing games to tackling unsolved mathematical problems for the first time.

Redact sensitive data from streaming data in near-real time using Amazon Comprehend and Amazon Kinesis Data Firehose

Near-real-time delivery of data and insights enables businesses to rapidly respond to their customers’ needs. Real-time data can come from a variety of sources, including social media, IoT devices, infrastructure monitoring, call center monitoring, and more. Due to the breadth and depth of data being ingested from multiple sources, businesses look for solutions to protect their customers’ privacy and keep sensitive data from being accessed from end systems. You previously had to rely on personally identifiable information (PII) rules engines that could flag false positives or miss data, or you had to build and maintain custom machine learning (ML) models to identify PII in your streaming data. You also needed to implement and maintain the infrastructure necessary to support these engines or models.

To help streamline this process and reduce costs, you can use Amazon Comprehend, a natural language processing (NLP) service that uses ML to find insights and relationships like people, places, sentiments, and topics in unstructured text. You can now use Amazon Comprehend ML capabilities to detect and redact PII in customer emails, support tickets, product reviews, social media, and more. No ML experience is required. For example, you can analyze support tickets and knowledge articles to detect PII entities and redact the text before you index the documents. After that, documents are free of PII entities and users can consume the data. Redacting PII entities helps you protect your customer’s privacy and comply with local laws and regulations.

In this post, you learn how to implement Amazon Comprehend into your streaming architectures to redact PII entities in near-real time using Amazon Kinesis Data Firehose with AWS Lambda.

This post is focused on redacting data from select fields that are ingested into a streaming architecture using Kinesis Data Firehose, where you want to create, store, and maintain additional derivative copies of the data for consumption by end-users or downstream applications. If you’re using Amazon Kinesis Data Streams or have additional use cases outside of PII redaction, refer to Translate, redact and analyze streaming data using SQL functions with Amazon Kinesis Data Analytics, Amazon Translate, and Amazon Comprehend, where we show how you can use Amazon Kinesis Data Analytics Studio powered by Apache Zeppelin and Apache Flink to interactively analyze, translate, and redact text fields in streaming data.

Solution overview

The following figure shows an example architecture for performing PII redaction of streaming data in real time, using Amazon Simple Storage Service (Amazon S3), Kinesis Data Firehose data transformation, Amazon Comprehend, and AWS Lambda. Additionally, we use the AWS SDK for Python (Boto3) for the Lambda functions. As indicated in the diagram, the S3 raw bucket contains non-redacted data, and the S3 redacted bucket contains redacted data after using the Amazon Comprehend DetectPiiEntities API within a Lambda function.

Costs involved

In addition to Kinesis Data Firehose, Amazon S3, and Lambda costs, this solution will incur usage costs from Amazon Comprehend. The amount you pay is a factor of the total number of records that contain PII and the characters that are processed by the Lambda function. For more information, refer to Amazon Kinesis Data Firehose pricing, Amazon Comprehend Pricing, and AWS Lambda Pricing.

As an example, let’s assume you have 10,000 log records, and the key value you want to redact PII from is 500 characters. Out of the 10,000 log records, 50 are identified as containing PII. The cost details are as follows:

Contains PII Cost:

- Size of each key value = 500 characters (1 unit = 100 characters)

- Number of units (100 characters) per record (minimum is 3 units) = 5

- Total units = 10,000 (records) x 5 (units per record) x 1 (Amazon Comprehend requests per record) = 50,000

- Price per unit = $0.000002

- Total cost for identifying log records with PII using ContainsPiiEntities API = $0.1 [50,000 units x $0.000002]

Redact PII Cost:

- Total units containing PII = 50 (records) x 5 (units per record) x 1 (Amazon Comprehend requests per record) = 250

- Price per unit = $0.0001

- Total cost for identifying location of PII using DetectPiiEntities API = [number of units] x [cost per unit] = 250 x $0.0001 = $0.025

Total Cost for identification and redaction:

- Total cost: $0.1 (validation if field contains PII) + $0.025 (redact fields that contain PII) = $0.125
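The arithmetic above can be sketched as a small calculator. This is a minimal illustration, not an official pricing tool: the per-unit prices are the ones quoted in this example and may differ from current Amazon Comprehend pricing, the function names are hypothetical, and rounding partial units up to the next whole unit is an assumption.

```python
import math

UNIT_CHARS = 100                 # 1 unit = 100 characters
MIN_UNITS = 3                    # minimum units charged per request
CONTAINS_PII_PRICE = 0.000002    # USD per unit (ContainsPiiEntities), from the example above
DETECT_PII_PRICE = 0.0001        # USD per unit (DetectPiiEntities), from the example above


def units_per_record(chars):
    """Units billed for one request; rounding up is an assumption."""
    return max(MIN_UNITS, math.ceil(chars / UNIT_CHARS))


def estimate_cost(total_records, records_with_pii, chars_per_value):
    """Return (contains_cost, detect_cost, total_cost) in USD."""
    units = units_per_record(chars_per_value)
    # Every record is checked once with ContainsPiiEntities.
    contains_cost = total_records * units * CONTAINS_PII_PRICE
    # Only records flagged as containing PII are sent to DetectPiiEntities.
    detect_cost = records_with_pii * units * DETECT_PII_PRICE
    return contains_cost, detect_cost, contains_cost + detect_cost


contains, detect, total = estimate_cost(10_000, 50, 500)
print(f"ContainsPiiEntities: ${contains:.3f}")
print(f"DetectPiiEntities:   ${detect:.3f}")
print(f"Total:               ${total:.3f}")
```

With the inputs from the example (10,000 records, 50 containing PII, 500 characters each), the total comes to the same $0.125.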

Deploy the solution with AWS CloudFormation

For this post, we provide an AWS CloudFormation streaming data redaction template, which provides the full details of the implementation to enable repeatable deployments. Upon deployment, this template creates two S3 buckets: one to store the raw sample data ingested from the Amazon Kinesis Data Generator (KDG), and one to store the redacted data. Additionally, it creates a Kinesis Data Firehose delivery stream with DirectPUT as input, and a Lambda function that calls the Amazon Comprehend ContainsPiiEntities and DetectPiiEntities APIs to identify and redact PII data. The Lambda function relies on user input in the environment variables to determine what key values need to be inspected for PII.

The Lambda function in this solution limits payload sizes to 100 KB. If a payload is provided where the text is greater than 100 KB, the Lambda function will skip it.

To deploy the solution, complete the following steps:

- Launch the CloudFormation stack in US East (N. Virginia), us-east-1.

- Enter a stack name, and leave other parameters at their default.

- Select I acknowledge that AWS CloudFormation might create IAM resources with custom names.

- Choose Create stack.

Deploy resources manually

If you prefer to build the architecture manually instead of using AWS CloudFormation, complete the steps in this section.

Create the S3 buckets

Create your S3 buckets with the following steps:

- On the Amazon S3 console, choose Buckets in the navigation pane.

- Choose Create bucket.

- Create one bucket for your raw data and one for your redacted data.

- Note the names of the buckets you just created.

Create the Lambda function

To create and deploy the Lambda function, complete the following steps:

- On the Lambda console, choose Create function.

- Choose Author from scratch.

- For Function Name, enter AmazonComprehendPII-Redact.

- For Runtime, choose Python 3.9.

- For Architecture, select x86_64.

- For Execution role, select Create a new role with Lambda permissions.

- After you create the function, enter the following code:

- Choose Deploy.

- In the navigation pane, choose Configuration.

- Navigate to Environment variables.

- Choose Edit.

- For Key, enter keys.

- For Value, enter the key values you want to redact PII from, separated by a comma and space. For example, enter Tweet1,Tweet2 if you’re using the sample test data provided in the next section of this post.

- Choose Save.

- Navigate to General configuration.

- Choose Edit.

- Change the value of Timeout to 1 minute.

- Choose Save.

- Navigate to Permissions.

- Choose the role name under Execution Role. You’re redirected to the AWS Identity and Access Management (IAM) console.

- For Add permissions, choose Attach policies.

- Enter Comprehend into the search bar and choose the policy ComprehendFullAccess.

- Choose Attach policies.
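The function code referenced in the steps above is not reproduced in this text. As a rough guide, the following is a minimal sketch of what a Kinesis Data Firehose transformation function of this kind could look like, not the authors' implementation. It assumes each record is a JSON object, reads the field names from the keys environment variable, and replaces each detected span with its entity type in brackets. The helper names (redact_text, transform_records) and the bracketed replacement format are assumptions.

```python
import base64
import json
import os

MAX_TEXT_BYTES = 100 * 1024  # texts larger than 100 KB are skipped


def redact_text(text, comprehend, language_code="en"):
    """Redact PII spans in text using a Comprehend client (or a stand-in)."""
    # Cheap check first: only call DetectPiiEntities when PII is reported.
    labels = comprehend.contains_pii_entities(Text=text, LanguageCode=language_code)["Labels"]
    if not labels:
        return text
    entities = comprehend.detect_pii_entities(Text=text, LanguageCode=language_code)["Entities"]
    # Replace from the end of the string so earlier offsets stay valid.
    for entity in sorted(entities, key=lambda e: e["BeginOffset"], reverse=True):
        text = text[: entity["BeginOffset"]] + "[" + entity["Type"] + "]" + text[entity["EndOffset"]:]
    return text


def transform_records(records, keys, comprehend):
    """Apply redaction to the selected keys of each Firehose record."""
    output = []
    for record in records:
        payload = json.loads(base64.b64decode(record["data"]))
        for key in keys:
            value = payload.get(key)
            if isinstance(value, str) and len(value.encode("utf-8")) <= MAX_TEXT_BYTES:
                payload[key] = redact_text(value, comprehend)
        data = base64.b64encode((json.dumps(payload) + "\n").encode("utf-8")).decode("utf-8")
        # Firehose expects recordId, result, and base64-encoded data for each record.
        output.append({"recordId": record["recordId"], "result": "Ok", "data": data})
    return output


def lambda_handler(event, context):
    import boto3  # imported here so the helpers above can be exercised without AWS access

    keys = [k.strip() for k in os.environ.get("keys", "").split(",") if k.strip()]
    return {"records": transform_records(event["records"], keys, boto3.client("comprehend"))}
```

Passing the client into the helpers keeps them testable with a stub object, and stripping whitespace when parsing keys accepts both comma-separated and comma-and-space-separated values.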

Create the Firehose delivery stream

To create your Firehose delivery stream, complete the following steps:

- On the Kinesis Data Firehose console, choose Create delivery stream.

- For Source, select Direct PUT.

- For Destination, select Amazon S3.

- For Delivery stream name, enter ComprehendRealTimeBlog.

- Under Transform source records with AWS Lambda, select Enabled.

- For AWS Lambda function, enter the ARN for the function you created, or browse to the function AmazonComprehendPII-Redact.

- For Buffer Size, set the value to 1 MB.

- For Buffer Interval, leave it as 60 seconds.

- Under Destination Settings, select the S3 bucket you created for the redacted data.

- Under Backup Settings, select the S3 bucket that you created for the raw records.

- Under Permission, either create or update an IAM role, or choose an existing role with the proper permissions.

- Choose Create delivery stream.
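For readers who script their setup, the console steps above map roughly onto the Firehose CreateDeliveryStream API. The sketch below only builds the request arguments. The function name is hypothetical, all ARNs are placeholders you would supply, and treating the 1 MB and 60 second values as the Lambda transformation buffering hints is an assumption about which buffer the console fields refer to.

```python
def delivery_stream_kwargs(name, role_arn, redacted_bucket_arn, raw_bucket_arn, lambda_arn):
    """Build keyword arguments for firehose.create_delivery_stream (a sketch)."""
    return {
        "DeliveryStreamName": name,
        "DeliveryStreamType": "DirectPut",
        "ExtendedS3DestinationConfiguration": {
            "RoleARN": role_arn,
            "BucketARN": redacted_bucket_arn,  # transformed (redacted) records
            "ProcessingConfiguration": {
                "Enabled": True,
                "Processors": [
                    {
                        "Type": "Lambda",
                        "Parameters": [
                            {"ParameterName": "LambdaArn", "ParameterValue": lambda_arn},
                            # Assumed mapping of the console buffer settings.
                            {"ParameterName": "BufferSizeInMBs", "ParameterValue": "1"},
                            {"ParameterName": "BufferIntervalInSeconds", "ParameterValue": "60"},
                        ],
                    }
                ],
            },
            # Back up the untransformed source records to the raw bucket.
            "S3BackupMode": "Enabled",
            "S3BackupConfiguration": {"RoleARN": role_arn, "BucketARN": raw_bucket_arn},
        },
    }
```

With valid ARNs and permissions, the result could be passed as boto3.client("firehose").create_delivery_stream(**kwargs).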

Deploy the streaming data solution with the Kinesis Data Generator

You can use the Kinesis Data Generator (KDG) to ingest sample data to Kinesis Data Firehose and test the solution. To simplify this process, we provide a Lambda function and CloudFormation template to create an Amazon Cognito user and assign appropriate permissions to use the KDG.

- On the Amazon Kinesis Data Generator page, choose Create a Cognito User with CloudFormation. You’re redirected to the AWS CloudFormation console to create your stack.

- Provide a user name and password for the user with which you log in to the KDG.

- Leave the other settings at their defaults and create your stack.

- On the Outputs tab, choose the KDG UI link.

- Enter your user name and password to log in.

Send test records and validate redaction in Amazon S3

To test the solution, complete the following steps:

- Log in to the KDG URL you created in the previous step.

- Choose the Region where the AWS CloudFormation stack was deployed.

- For Stream/delivery stream, choose the delivery stream you created (if you used the template, it has the format accountnumber-awscomprehend-blog).

- Leave the other settings at their defaults.

- For the record template, you can create your own tests, or use the following template. If you’re using the provided sample data below for testing, you should have updated the environment variables in the AmazonComprehendPII-Redact Lambda function to Tweet1,Tweet2. If deployed via CloudFormation, update the environment variables to Tweet1,Tweet2 within the created Lambda function. The sample test data is below:

- Choose Send Data, and allow a few seconds for records to be sent to your stream.

- After a few seconds, stop the KDG generator and check your S3 buckets for the delivered files.

The following is an example of the raw data in the raw S3 bucket:

The following is an example of the redacted data in the redacted S3 bucket:

The sensitive information has been removed from the redacted messages, providing confidence that you can share this data with end systems.

Cleanup

When you’re finished experimenting with this solution, clean up your resources by using the AWS CloudFormation console to delete all the resources deployed in this example. If you followed the manual steps, you will need to manually delete the two buckets, the AmazonComprehendPII-Redact function, the ComprehendRealTimeBlog stream, the log group for the ComprehendRealTimeBlog stream, and any IAM roles that were created.

Conclusion

This post showed you how to integrate PII redaction into your near-real-time streaming architecture and reduce data processing time by performing redaction in flight. In this scenario, you provide the redacted data to your end-users and a data lake administrator secures the raw bucket for later use. You could also build additional processing with Amazon Comprehend to identify tone or sentiment, identify entities within the data, and classify each message.

We provided individual steps for each service as part of this post, and also included a CloudFormation template that allows you to provision the required resources in your account. This template should be used for proof of concept or testing scenarios only. Refer to the developer guides for Amazon Comprehend, Lambda, and Kinesis Data Firehose for any service limits.

To get started with PII identification and redaction, see Personally identifiable information (PII). With the example architecture in this post, you could integrate any of the Amazon Comprehend APIs with near-real-time data using Kinesis Data Firehose data transformation. To learn more about what you can build with your near-real-time data with Kinesis Data Firehose, refer to the Amazon Kinesis Data Firehose Developer Guide. This solution is available in all AWS Regions where Amazon Comprehend and Kinesis Data Firehose are available.

About the authors

Joe Morotti is a Solutions Architect at Amazon Web Services (AWS), helping Enterprise customers across the Midwest US. He has held a wide range of technical roles and enjoys showing customers the art of the possible. In his free time, he enjoys spending quality time with his family exploring new places and overanalyzing his sports team’s performance.

Sriharsh Adari is a Senior Solutions Architect at Amazon Web Services (AWS), where he helps customers work backwards from business outcomes to develop innovative solutions on AWS. Over the years, he has helped multiple customers on data platform transformations across industry verticals. His core areas of expertise include Technology Strategy, Data Analytics, and Data Science. In his spare time, he enjoys playing Tennis, binge-watching TV shows, and playing Tabla.

Large Motion Frame Interpolation

Frame interpolation is the process of synthesizing in-between images from a given set of images. The technique is often used for temporal up-sampling to increase the refresh rate of videos or to create slow motion effects. Nowadays, with digital cameras and smartphones, we often take several photos within a few seconds to capture the best picture. Interpolating between these “near-duplicate” photos can lead to engaging videos that reveal scene motion, often delivering an even more pleasing sense of the moment than the original photos.

Frame interpolation between consecutive video frames, which often have small motion, has been studied extensively. Unlike videos, however, the temporal spacing between near-duplicate photos can be several seconds, with commensurately large in-between motion, which is a major failing point of existing frame interpolation methods. Recent methods attempt to handle large motion by training on datasets with extreme motion, albeit with limited effectiveness on smaller motions.

In “FILM: Frame Interpolation for Large Motion”, published at ECCV 2022, we present a method to create high quality slow-motion videos from near-duplicate photos. FILM is a new neural network architecture that achieves state-of-the-art results in large motion, while also handling smaller motions well.

Figure: FILM interpolating between two near-duplicate photos to create a slow motion video.

FILM Model Overview

The FILM model takes two images as input and outputs a middle image. At inference time, we recursively invoke the model to output in-between images. FILM has three components: (1) A feature extractor that summarizes each input image with deep multi-scale (pyramid) features; (2) a bi-directional motion estimator that computes pixel-wise motion (i.e., flows) at each pyramid level; and (3) a fusion module that outputs the final interpolated image. We train FILM on regular video frame triplets, with the middle frame serving as the ground-truth for supervision.
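The recursive invocation at inference time can be sketched as follows. This is an illustration of the idea only: the midpoint argument stands in for the trained model (any callable that returns the middle frame of two inputs), and the function name is hypothetical.

```python
def interpolate_recursively(frame_a, frame_b, depth, midpoint):
    """Return [frame_a, ..., frame_b] with 2**depth - 1 in-between frames.

    midpoint(a, b) is a stand-in for the model: it returns the frame halfway
    between a and b.
    """
    if depth == 0:
        return [frame_a, frame_b]
    middle = midpoint(frame_a, frame_b)
    left = interpolate_recursively(frame_a, middle, depth - 1, midpoint)
    right = interpolate_recursively(middle, frame_b, depth - 1, midpoint)
    return left + right[1:]  # drop the duplicated middle frame


# Toy usage with numbers in place of images and averaging in place of the model.
frames = interpolate_recursively(0.0, 1.0, 2, lambda a, b: (a + b) / 2)
print(frames)
```

Each extra level of recursion roughly doubles the number of output frames, so a depth of d yields 2**d + 1 frames including the two inputs.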

Figure: A standard feature pyramid extraction on two input images. Features are processed at each level by a series of convolutions, which are then downsampled to half the spatial resolution and passed as input to the deeper level.

Scale-Agnostic Feature Extraction

Large motion is typically handled with hierarchical motion estimation using multi-resolution feature pyramids (shown above). However, this method struggles with small and fast-moving objects because they can disappear at the deepest pyramid levels. In addition, there are far fewer available pixels to derive supervision at the deepest level.

To overcome these limitations, we adopt a feature extractor that shares weights across scales to create a “scale-agnostic” feature pyramid. This feature extractor (1) allows the use of a shared motion estimator across pyramid levels (next section) by equating large motion at shallow levels with small motion at deeper levels, and (2) creates a compact network with fewer weights.

Specifically, given two input images, we first create an image pyramid by successively downsampling each image. Next, we use a shared U-Net convolutional encoder to extract a smaller feature pyramid from each image pyramid level (columns in the figure below). As the third and final step, we construct a scale-agnostic feature pyramid by horizontally concatenating features from different convolution layers that have the same spatial dimensions. Note that from the third level onwards, the feature stack is constructed with the same set of shared convolution weights (shown in the same color). This ensures that all features are similar, which allows us to continue to share weights in the subsequent motion estimator. The figure below depicts this process using four pyramid levels, but in practice, we use seven.

Bi-directional Flow Estimation

After feature extraction, FILM performs pyramid-based residual flow estimation to compute the flows from the yet-to-be-predicted middle image to the two inputs. The flow estimation is done once for each input, starting from the deepest level, using a stack of convolutions. We estimate the flow at a given level by adding a residual correction to the upsampled estimate from the next deeper level. This approach takes the following as its input: (1) the features from the first input at that level, and (2) the features of the second input after it is warped with the upsampled estimate. The same convolution weights are shared across all levels, except for the two finest levels.

Shared weights allow the interpretation of small motions at deeper levels to be the same as large motions at shallow levels, boosting the number of pixels available for large motion supervision. Additionally, shared weights not only enable the training of powerful models that may reach a higher peak signal-to-noise ratio (PSNR), but are also needed to enable models to fit into GPU memory for practical applications.

Figure: The impact of weight sharing on image quality. Left: no sharing. Right: sharing. For this ablation we used a smaller version of our model (called FILM-med in the paper) because the full model without weight sharing would diverge as the regularization benefit of weight sharing was lost.

Fusion and Frame Generation

Once the bi-directional flows are estimated, we warp the two feature pyramids into alignment. We obtain a concatenated feature pyramid by stacking, at each pyramid level, the two aligned feature maps, the bi-directional flows and the input images. Finally, a U-Net decoder synthesizes the interpolated output image from the aligned and stacked feature pyramid.

Loss Functions

During training, we supervise FILM by combining three losses. First, we use the absolute L1 difference between the predicted and ground-truth frames to capture the motion between input images. However, this produces blurry images when used alone. Second, we use perceptual loss to improve image fidelity. This minimizes the L1 difference between the ImageNet pre-trained VGG-19 features extracted from the predicted and ground truth frames. Third, we use Style loss to minimize the L2 difference between the Gram matrix of the ImageNet pre-trained VGG-19 features. The Style loss enables the network to produce sharp images and realistic inpaintings of large pre-occluded regions. Finally, the losses are combined with weights empirically selected such that each loss contributes equally to the total loss.
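The three terms can be sketched in plain Python on small lists. This is a toy illustration under stated assumptions, not the training code: the feature arguments stand in for VGG-19 activations (given as channels x positions), the Gram matrix normalization by the number of positions is an assumption, and the default weights are placeholders rather than the empirically selected values.

```python
def l1_loss(pred, target):
    """Mean absolute difference between two equally sized flat sequences."""
    return sum(abs(p - t) for p, t in zip(pred, target)) / len(pred)


def gram_matrix(features):
    """Gram matrix of a feature map given as channels x positions."""
    n = len(features[0])  # normalizing by the number of positions is an assumption
    return [[sum(a * b for a, b in zip(fi, fj)) / n for fj in features] for fi in features]


def perceptual_loss(pred_features, target_features):
    """L1 difference between feature maps (stand-ins for VGG-19 features)."""
    flat_pred = [v for channel in pred_features for v in channel]
    flat_target = [v for channel in target_features for v in channel]
    return l1_loss(flat_pred, flat_target)


def style_loss(pred_features, target_features):
    """Mean squared (L2) difference between the two Gram matrices."""
    g_pred, g_target = gram_matrix(pred_features), gram_matrix(target_features)
    diffs = [(a - b) ** 2 for rp, rt in zip(g_pred, g_target) for a, b in zip(rp, rt)]
    return sum(diffs) / len(diffs)


def combined_loss(pred, target, pred_features, target_features, weights=(1.0, 1.0, 1.0)):
    """Weighted sum of the L1, perceptual, and Style terms."""
    w_l1, w_vgg, w_style = weights
    return (
        w_l1 * l1_loss(pred, target)
        + w_vgg * perceptual_loss(pred_features, target_features)
        + w_style * style_loss(pred_features, target_features)
    )
```

Because the Gram matrix compares channel correlations rather than values at individual positions, the Style term rewards matching texture statistics even where exact pixel alignment is impossible, such as in newly revealed regions.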

As shown below, the combined loss greatly improves sharpness and image fidelity when compared to training FILM with L1 loss and VGG losses. The combined loss maintains the sharpness of the tree leaves.

Figure: FILM’s combined loss functions. L1 loss (left), L1 plus VGG loss (middle), and Style loss (right), showing significant sharpness improvements (green box).

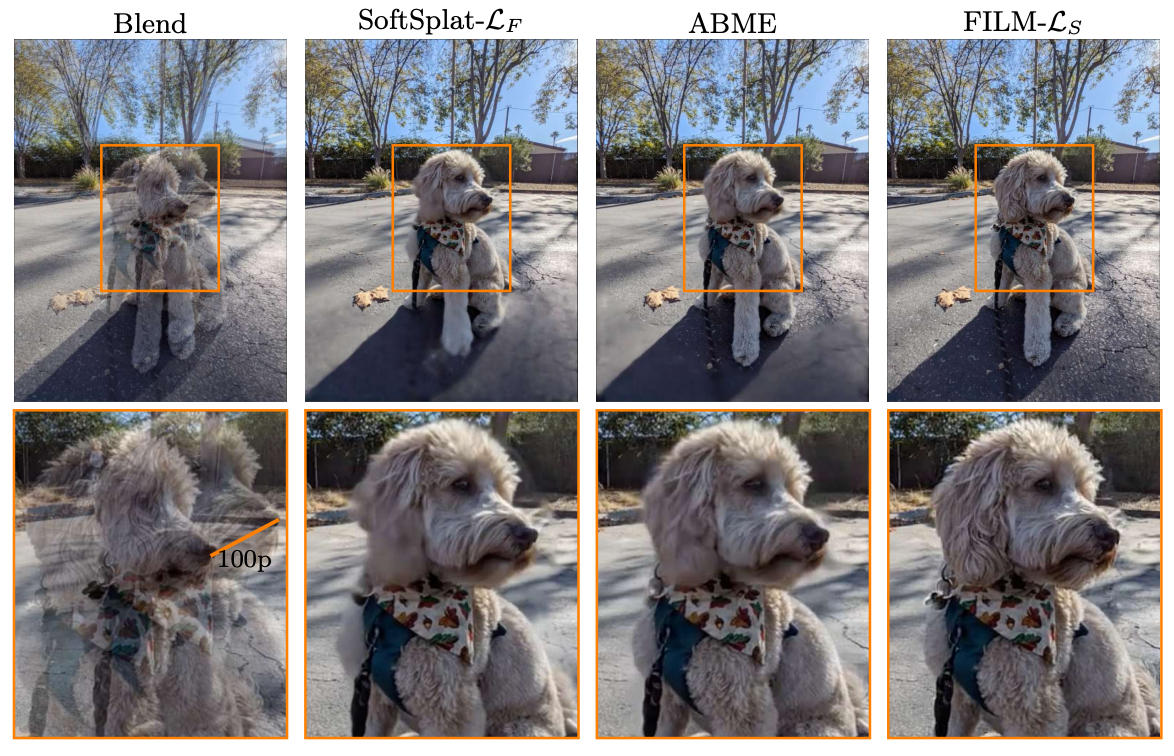

Image and Video Results

We evaluate FILM on an internal near-duplicate photos dataset that exhibits large scene motion. Additionally, we compare FILM to recent frame interpolation methods: SoftSplat and ABME. FILM performs favorably when interpolating across large motion. Even in the presence of motion as large as 100 pixels, FILM generates sharp images consistent with the inputs.

Figure: Frame interpolation with SoftSplat (left), ABME (middle) and FILM (right) showing favorable image quality and temporal consistency.

Conclusion

We introduce FILM, a large motion frame interpolation neural network. At its core, FILM adopts a scale-agnostic feature pyramid that shares weights across scales, which allows us to build a “scale-agnostic” bi-directional motion estimator that learns from frames with normal motion and generalizes well to frames with large motion. To handle wide disocclusions caused by large scene motion, we supervise FILM by matching the Gram matrix of ImageNet pre-trained VGG-19 features, which results in realistic inpainting and crisp images. FILM performs favorably on large motion, while also handling small and medium motions well, and generates temporally smooth high quality videos.

Try It Out Yourself

You can try out FILM on your photos using the source code, which is now publicly available.

Acknowledgements

We would like to thank Eric Tabellion, Deqing Sun, Caroline Pantofaru, and Brian Curless for their contributions. We thank Marc Comino Trinidad for his contributions on the scale-agnostic feature extractor, Orly Liba and Charles Herrmann for feedback on the text, Jamie Aspinall for the imagery in the paper, and Dominik Kaeser, Yael Pritch, Michael Nechyba, William T. Freeman, David Salesin, Catherine Wah, and Ira Kemelmacher-Shlizerman for support. Thanks to Tom Small for creating the animated diagram in this post.

Reduce cost and development time with Amazon SageMaker Pipelines local mode

Creating robust and reusable machine learning (ML) pipelines can be a complex and time-consuming process. Developers usually test their processing and training scripts locally, but the pipelines themselves are typically tested in the cloud. Creating and running a full pipeline during experimentation adds unwanted overhead and cost to the development lifecycle. In this post, we detail how you can use Amazon SageMaker Pipelines local mode to run ML pipelines locally to reduce both pipeline development and run time while reducing cost. After the pipeline has been fully tested locally, you can easily rerun it with Amazon SageMaker managed resources with just a few lines of code changes.

Overview of the ML lifecycle

One of the main drivers for new innovations and applications in ML is the availability and amount of data along with cheaper compute options. In several domains, ML has proven capable of solving problems previously unsolvable with classical big data and analytical techniques, and the demand for data science and ML practitioners is increasing steadily. From a very high level, the ML lifecycle consists of many different parts, but the building of an ML model usually consists of the following general steps:

- Data cleansing and preparation (feature engineering)

- Model training and tuning

- Model evaluation

- Model deployment (or batch transform)

In the data preparation step, data is loaded, massaged, and transformed into the type of inputs, or features, the ML model expects. Writing the scripts to transform the data is typically an iterative process, where fast feedback loops are important to speed up development. It’s normally not necessary to use the full dataset when testing feature engineering scripts, which is why you can use the local mode feature of SageMaker Processing. This allows you to run locally and update the code iteratively, using a smaller dataset. When the final code is ready, it’s submitted to the remote processing job, which uses the complete dataset and runs on SageMaker managed instances.

The development process is similar to the data preparation step for both model training and model evaluation steps. Data scientists use the local mode feature of SageMaker Training to iterate quickly with smaller datasets locally, before using all the data in a SageMaker managed cluster of ML-optimized instances. This speeds up the development process and eliminates the cost of running ML instances managed by SageMaker while experimenting.

As an organization’s ML maturity increases, you can use Amazon SageMaker Pipelines to create ML pipelines that stitch together these steps, creating more complex ML workflows that process, train, and evaluate ML models. SageMaker Pipelines is a fully managed service for automating the different steps of the ML workflow, including data loading, data transformation, model training and tuning, and model deployment. Until recently, you could develop and test your scripts locally but had to test your ML pipelines in the cloud. This made iterating on the flow and form of ML pipelines a slow and costly process. Now, with the added local mode feature of SageMaker Pipelines, you can iterate and test your ML pipelines similarly to how you test and iterate on your processing and training scripts. You can run and test your pipelines on your local machine, using a small subset of data to validate the pipeline syntax and functionalities.

SageMaker Pipelines

SageMaker Pipelines provides a fully automated way to run simple or complex ML workflows. With SageMaker Pipelines, you can create ML workflows with an easy-to-use Python SDK, and then visualize and manage your workflow using Amazon SageMaker Studio. Your data science teams can be more efficient and scale faster by storing and reusing the workflow steps you create in SageMaker Pipelines. You can also use pre-built templates that automate the infrastructure and repository creation to build, test, register, and deploy models within your ML environment. These templates are automatically available to your organization, and are provisioned using AWS Service Catalog products.

SageMaker Pipelines brings continuous integration and continuous deployment (CI/CD) practices to ML, such as maintaining parity between development and production environments, version control, on-demand testing, and end-to-end automation, which helps you scale ML throughout your organization. DevOps practitioners know that some of the main benefits of using CI/CD techniques include an increase in productivity via reusable components and an increase in quality through automated testing, which leads to faster ROI for your business objectives. These benefits are now available to MLOps practitioners by using SageMaker Pipelines to automate the training, testing, and deployment of ML models. With local mode, you can now iterate much more quickly while developing scripts for use in a pipeline. Note that local pipeline instances can’t be viewed or run within the Studio IDE; however, additional viewing options for local pipelines will be available soon.

The SageMaker SDK provides a general purpose local mode configuration that allows developers to run and test supported processors and estimators in their local environment. You can use local mode training with multiple AWS-supported framework images (TensorFlow, MXNet, Chainer, PyTorch, and Scikit-Learn) as well as images you supply yourself.

SageMaker Pipelines, which builds a Directed Acyclic Graph (DAG) of orchestrated workflow steps, supports many activities that are part of the ML lifecycle. In local mode, the following steps are supported:

- Processing job steps – A simplified, managed experience on SageMaker to run data processing workloads, such as feature engineering, data validation, model evaluation, and model interpretation

- Training job steps – An iterative process that teaches a model to make predictions by presenting examples from a training dataset

- Hyperparameter tuning jobs – An automated way to evaluate and select the hyperparameters that produce the most accurate model

- Conditional run steps – A step that provides a conditional run of branches in a pipeline

- Model step – Using CreateModel arguments, this step can create a model for use in transform steps or later deployment as an endpoint

- Transform job steps – A batch transform job that generates predictions from large datasets, and runs inference when a persistent endpoint isn’t needed

- Fail steps – A step that stops a pipeline run and marks the run as failed

Solution overview

Our solution demonstrates the essential steps to create and run SageMaker Pipelines in local mode, which means using local CPU, RAM, and disk resources to load and run the workflow steps. Your local environment could be running on a laptop, using popular IDEs like VSCode or PyCharm, or it could be hosted by SageMaker using classic notebook instances.

Local mode allows data scientists to stitch together steps, which can include processing, training, and evaluation jobs, and run the entire workflow locally. When you’re done testing locally, you can rerun the pipeline in a SageMaker managed environment by replacing the LocalPipelineSession object with PipelineSession, which brings consistency to the ML lifecycle.

For this notebook sample, we use a standard publicly available dataset, the UCI Machine Learning Abalone Dataset. The goal is to train an ML model to determine the age of an abalone snail from its physical measurements. At the core, this is a regression problem.

All of the code required to run this notebook sample is available on GitHub in the amazon-sagemaker-examples repository. In this notebook sample, each pipeline workflow step is created independently and then wired together to create the pipeline. We create the following steps:

- Processing step (feature engineering)

- Training step (model training)

- Processing step (model evaluation)

- Condition step (model accuracy)

- Create model step (model)

- Transform step (batch transform)

- Register model step (model package)

- Fail step (run failed)

The following diagram illustrates our pipeline.

Prerequisites

To follow along in this post, you need the following:

- An AWS account

- Valid AWS Identity and Access Management (IAM) credentials to access Amazon Simple Storage Service (Amazon S3), datasets, and more

- docker-compose installed in the environment you’re using (for instructions, refer to Install Docker Compose)

After these prerequisites are in place, you can run the sample notebook as described in the following sections.

Build your pipeline

In this notebook sample, we use SageMaker Script Mode for most of the ML processes, which means that we provide the actual Python code (scripts) to perform the activity and pass a reference to this code. Script Mode lets you customize what runs inside a SageMaker job with your own code while still taking advantage of SageMaker pre-built containers like XGBoost or Scikit-Learn. The custom code is written to a Python script file using cells that begin with the magic command %%writefile, like the following:

%%writefile code/evaluation.py

The primary enabler of local mode is the LocalPipelineSession object, which is instantiated from the Python SDK. The following code segments show how to create a SageMaker pipeline in local mode. Although you can configure a local data path for many of the local pipeline steps, Amazon S3 is the default location to store the data output by the transformation. The new LocalPipelineSession object is passed to the Python SDK in many of the SageMaker workflow API calls described in this post. Notice that you can use the local_pipeline_session variable to retrieve references to the S3 default bucket and the current Region name.

Before we create the individual pipeline steps, we set some parameters used by the pipeline. Some of these parameters are string literals, whereas others are created as special enumerated types provided by the SDK. The enumerated typing ensures that valid settings are provided to the pipeline, such as this one, which is passed to the ConditionLessThanOrEqualTo condition further down:

mse_threshold = ParameterFloat(name="MseThreshold", default_value=7.0)

To create a data processing step, which is used here to perform feature engineering, we use the SKLearnProcessor to load and transform the dataset. We pass the local_pipeline_session variable to the class constructor, which instructs the workflow step to run in local mode:

Next, we create our first actual pipeline step, a ProcessingStep object, as imported from the SageMaker SDK. The processor arguments are returned from a call to the SKLearnProcessor run() method. This workflow step is combined with other steps towards the end of the notebook to indicate the order of operation within the pipeline.

Next, we provide code to establish a training step by first instantiating a standard estimator using the SageMaker SDK. We pass the same local_pipeline_session variable to the estimator, named xgb_train, as the sagemaker_session argument. Because we want to train an XGBoost model, we must generate a valid image URI by specifying the following parameters, including the framework and several version parameters:

We can optionally call additional estimator methods, for example set_hyperparameters(), to provide hyperparameter settings for the training job. Now that we have an estimator configured, we’re ready to create the actual training step. Once again, we import the TrainingStep class from the SageMaker SDK library:

Next, we build another processing step to perform model evaluation. This is done by creating a ScriptProcessor instance and passing the local_pipeline_session object as a parameter:

To enable deployment of the trained model, either to a SageMaker real-time endpoint or to a batch transform, we need to create a Model object by passing the model artifacts, the proper image URI, and optionally our custom inference code. We then pass this Model object to a ModelStep, which is added to the local pipeline. See the following code:

Next, we create a batch transform step where we submit a set of feature vectors and perform inference. We first need to create a Transformer object and pass the local_pipeline_session parameter to it. Then we create a TransformStep, passing the required arguments, and add this to the pipeline definition:

Finally, we want to add a branch condition to the workflow so that we only run batch transform if the results of model evaluation meet our criteria. We can indicate this conditional by adding a ConditionStep with a particular condition type, like ConditionLessThanOrEqualTo. We then enumerate the steps for the two branches, essentially defining the if/else or true/false branches of the pipeline. The if_steps provided in the ConditionStep (step_create_model, step_transform) are run whenever the condition evaluates to True.

The following diagram illustrates this conditional branch and the associated if/else steps. Only one branch is run, based on the outcome of the model evaluation step as compared in the condition step.
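The branching logic can be sketched in plain Python, independent of the SDK. This is an illustration only: `select_branch` is a hypothetical helper, `step_fail` is an assumed name for the fail step, and the default threshold mirrors the `MseThreshold` parameter shown earlier.

```python
def select_branch(mse: float, mse_threshold: float = 7.0) -> list[str]:
    """Mimic a less-than-or-equal condition: pick the steps to run next."""
    if mse <= mse_threshold:
        # Condition is True: the "if" branch runs.
        return ["step_create_model", "step_transform"]
    # Condition is False: the "else" branch runs (assumed to be the fail step).
    return ["step_fail"]


print(select_branch(6.5))
print(select_branch(9.1))
```

An evaluation result exactly equal to the threshold still takes the "if" branch, because the comparison is less than or equal to.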

Now that we have all our steps defined, and the underlying class instances created, we can combine them into a pipeline. We provide some parameters, and crucially define the order of operation by simply listing the steps in the desired order. Note that the TransformStep isn’t shown here because it’s the target of the conditional step, and was provided as a step argument to the ConditionStep earlier.

To run the pipeline, you must call two methods: pipeline.upsert(), which uploads the pipeline to the underlying service, and pipeline.start(), which starts running the pipeline. You can use various other methods to interrogate the run status, list the pipeline steps, and more. Because we used the local mode pipeline session, these steps are all run locally on your processor. The cell output beneath the start method shows the output from the pipeline:

You should see a message at the bottom of the cell output similar to the following:

Pipeline execution d8c3e172-089e-4e7a-ad6d-6d76caf987b7 SUCCEEDED
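The upsert-then-start sequence described above can be sketched as follows. This is a hedged outline rather than the notebook's exact cell: `run_pipeline` and its argument names are hypothetical, and the steps, parameters, session, and role are assumed to have been created earlier. The import is deferred into the function so the sketch can be loaded without the SageMaker SDK installed.

```python
def run_pipeline(name, steps, parameters, session, role_arn):
    """Create or update a pipeline definition, then start a run.

    Assumes `steps` and `parameters` are lists of SageMaker workflow
    objects and `session` is a LocalPipelineSession or PipelineSession.
    """
    # Deferred import: requires the SageMaker Python SDK at call time.
    from sagemaker.workflow.pipeline import Pipeline

    pipeline = Pipeline(
        name=name,
        parameters=parameters,
        steps=steps,
        sagemaker_session=session,
    )
    pipeline.upsert(role_arn=role_arn)  # register or update the definition
    return pipeline.start()             # returns an execution handle
```

With a local session, the returned execution handle refers to a run on your own machine; with a managed session, it refers to a run on SageMaker.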

Revert to managed resources

After we’ve confirmed that the pipeline runs without errors and we’re satisfied with the flow and form of the pipeline, we can recreate the pipeline but with SageMaker managed resources and rerun it. The only change required is to use the PipelineSession object instead of LocalPipelineSession:

from sagemaker.workflow.pipeline_context import LocalPipelineSession

from sagemaker.workflow.pipeline_context import PipelineSession

local_pipeline_session = LocalPipelineSession()

pipeline_session = PipelineSession()

This informs the service to run each step referencing this session object on SageMaker managed resources. Given the small change, we illustrate only the required code changes in the following code cell, but the same change would need to be implemented on each cell using the local_pipeline_session object. The changes are, however, identical across all cells because we’re only substituting the local_pipeline_session object with the pipeline_session object.
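One way to avoid editing every cell by hand is to create the session in a single place and switch it with a flag. The sketch below is an assumption about how you might organize this, not the notebook's own code; `make_pipeline_session` is a hypothetical helper built on the two session classes imported above.

```python
def make_pipeline_session(local: bool):
    """Return a local or managed pipeline session based on a flag."""
    # Deferred import: requires the SageMaker Python SDK at call time.
    from sagemaker.workflow.pipeline_context import (
        LocalPipelineSession,
        PipelineSession,
    )

    # Both constructors accept no arguments and fall back to defaults.
    return LocalPipelineSession() if local else PipelineSession()
```

Every processor, estimator, model, transformer, and the pipeline itself would then receive the same returned object as its `sagemaker_session` argument.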

After the local session object has been replaced everywhere, we recreate the pipeline and run it with SageMaker managed resources:

Clean up

If you want to keep the Studio environment tidy, you can use the following methods to delete the SageMaker pipeline and the model. The full code can be found in the sample notebook.

Conclusion

Until recently, you could use the local mode feature of SageMaker Processing and SageMaker Training to iterate on your processing and training scripts locally, before running them on all the data with SageMaker managed resources. With the new local mode feature of SageMaker Pipelines, ML practitioners can now apply the same method when iterating on their ML pipelines, stitching the different ML workflows together. When the pipeline is ready for production, running it with SageMaker managed resources requires just a few lines of code changes. This shortens pipeline run time during development, which speeds up development cycles and reduces the cost of SageMaker managed resources.

To learn more, visit Amazon SageMaker Pipelines or Use SageMaker Pipelines to Run Your Jobs Locally.

About the authors

Paul Hargis has focused his efforts on machine learning at several companies, including AWS, Amazon, and Hortonworks. He enjoys building technology solutions and teaching people how to make the most of it. Prior to his role at AWS, he was lead architect for Amazon Exports and Expansions, helping amazon.com improve the experience for international shoppers. Paul likes to help customers expand their machine learning initiatives to solve real-world problems.

Niklas Palm is a Solutions Architect at AWS in Stockholm, Sweden, where he helps customers across the Nordics succeed in the cloud. He’s particularly passionate about serverless technologies along with IoT and machine learning. Outside of work, Niklas is an avid cross-country skier and snowboarder as well as a master egg boiler.

Kirit Thadaka is an ML Solutions Architect working in the SageMaker Service SA team. Prior to joining AWS, Kirit worked in early-stage AI startups followed by some time consulting in various roles in AI research, MLOps, and technical leadership.

Research Focus: Week of September 26, 2022

Welcome to Research Focus, a new series of blog posts that highlights notable publications, events, code/datasets, new hires and other milestones from across the research community at Microsoft.

Clifford neural layers for PDE modeling

Johannes Brandstetter, Rianne van den Berg, Max Welling, and Jayesh K. Gupta

Partial differential equations (PDEs) are widely used to describe simulation of physical processes as scalar and vector fields interacting and coevolving over time.

Recent research has focused on using neural surrogates to accelerate such simulations. However, current methods do not explicitly model relationships between fields and their correlated internal components, such as the scalar temperature field and the vector wind velocity field in weather simulations.

In this work, we view the time evolution of such correlated fields through the lens of multivector fields, which consist of scalar, vector, and higher-order components, such as bivectors. An example of a bivector is the cross-product of two vectors in 3-D, which is a plane segment spanned by these two vectors, and is often represented as a vector itself. Unlike a true vector, however, the cross-product picks up a sign flip under reflection, which is why it is a bivector rather than a vector.
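The sign behavior described above can be checked numerically. The following is a minimal sketch in plain Python, not code from the paper; the helper names `cross` and `mirror_x` are chosen here for illustration. It mirrors two vectors in the plane x = 0 and compares the cross-product of the mirrored inputs with the mirrored cross-product.

```python
def cross(a, b):
    """Cross-product of two 3-D vectors given as tuples."""
    return (
        a[1] * b[2] - a[2] * b[1],
        a[2] * b[0] - a[0] * b[2],
        a[0] * b[1] - a[1] * b[0],
    )


def mirror_x(v):
    """Reflect a vector in the plane x = 0."""
    return (-v[0], v[1], v[2])


a, b = (1, 2, 3), (4, 5, 6)

# Treat the cross-product as an ordinary vector and reflect it.
mirrored_output = mirror_x(cross(a, b))            # (3, 6, -3)

# Reflect the inputs first, then take the cross-product.
mirrored_inputs = cross(mirror_x(a), mirror_x(b))  # (-3, -6, 3)

print(mirrored_output, mirrored_inputs)
```

The two results differ by an overall sign: the cross-product does not transform like an ordinary vector under reflection.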

The algebraic properties of multivectors, such as multiplication and addition, can be described by Clifford algebras, leading to Clifford neural layers such as Clifford convolutions and Clifford Fourier transforms. These layers are universally applicable and will find direct use in the areas of fluid dynamics, weather forecasting, and the modeling of physical systems in general.

InAs-Al Hybrid Devices Passing the Topological Gap Protocol

While the promise of quantum is great, we are still in the early days of what is possible. Today’s quantum computers enable interesting research; however, innovators find themselves limited by the inadequate scale of these systems and are eager to do more. Microsoft is taking a more challenging, but ultimately more promising, approach to scaled quantum computing. We are engineering a full-stack quantum machine powered by topological qubits. We theorize that this type of qubit will be inherently more stable than qubits produced with existing methods without sacrificing size or speed. Earlier this year we had a major scientific breakthrough that cleared a significant hurdle: we discovered that we could produce the topological superconducting phase and its concomitant Majorana zero modes. In essence, we now have the building block for a topological qubit and quantum at scale. Learn more about this discovery from Dr. Chetan Nayak, Distinguished Engineer of Quantum at Microsoft, who just released a preprint to arXiv and met with leaders in the quantum computing industry.

DaVinci Image and Video Enhancement Toolkit

To help users enhance low quality videos in real time, on edge equipment and with limited computing power, researchers from Microsoft Research Asia launched a set of intelligent image/video enhancement tools called DaVinci. This toolkit aims to solve the pain points of existing video enhancement and restoration tools, give full play to the advantages of AI technology and lower the threshold for users to process video footage.

The targeted features of the DaVinci toolkit include low-level image and video enhancement tasks, real-time image and video filters, and visual quality enhancement such as super-resolution, denoising, and video frame interpolation. The backend technology of the DaVinci toolkit is supported by the industry-leading large-scale, low-level vision pre-training technology, supplemented by a large amount of data training. To maximize the robustness of the model, researchers use four million publicly available images and video data, with contents covering landscapes, buildings, people, and so on. To ensure an adequate amount of training data and rich data types, researchers synthesized data with various degradations, so that the entire model training could cover more actual user application scenarios. The DaVinci toolkit has been packaged and released on GitHub.

RetrieverTTS: Modeling decomposed factors for text-based speech insertion

Dacheng Yin, Chuanxin Tang, Yanqing Liu, Xiaoqiang Wang, Zhiyuan Zhao, Yucheng Zhao, Zhiwei Xiong, Sheng Zhao, Chong Luo

In the post-pandemic world, a large portion of meetings, conferences, and trainings have been moved online, leading to a sharp increase in demand for audio and video creation. However, making zero-mistake audio/video recordings is time consuming, with re-recordings required to fix even the smallest mistakes. Therefore, a simple technique for making partial corrections to recordings is urgently needed. Researchers from Microsoft Research Asia’s Intelligent Multimedia Group and from Azure Speech Team developed a text-based speech editing system to address this problem. The system supports cutting, copying, pasting operations, and inserting synthesized speech segments based on text transcriptions.

AI4Science expands with new research lab in Berlin

Deep learning is set to transform the natural sciences, dramatically improving our ability to model and predict natural phenomena over widely varying scales of space and time. In fact, this may be the dawn of a new paradigm of scientific discovery. To help make this new paradigm a reality, Microsoft Research recently established AI4Science, a new global organization that will bring together experts in machine learning, quantum physics, computational chemistry, molecular biology, fluid dynamics, software engineering, and other disciplines.

This month, the AI4Science organization expanded with a new presence in Berlin, to be led by Dr. Frank Noé. As a “bridge professor,” Noé specializes in work at the interfaces between the fields of mathematics and computer science as well as physics, biology, chemistry and pharmacy. For his pioneering work in developing innovative computational methods in biophysics, he has received awards and funding from the American Chemical Society and the European Research Council, among others.

In the video below, Noé discusses the new lab in Berlin, and potential for machine learning to advance the natural sciences, with Chris Bishop, Microsoft Technical Fellow and director, AI4Science.

The post Research Focus: Week of September 26, 2022 appeared first on Microsoft Research.

Searidge Technologies Offers a Safety Net for Airports

Planes taxiing for long periods due to ground traffic — or circling the airport while awaiting clearance to land — don’t just make travelers impatient. They burn fuel unnecessarily, harming the environment and adding to airlines’ costs.

Searidge Technologies, based in Ottawa, Canada, has created AI-powered software to help the aviation industry avoid such issues, increasing efficiency and enhancing safety for airports.

Its Digital Tower and Apron solutions, powered by NVIDIA GPUs, use vision AI to manage traffic control for airports and alert users of safety concerns in real time. Searidge enables airports to handle 15-30% more aircraft per hour and reduce the number of tarmac incidents.

The company’s tech is used across the world, including at London’s Heathrow Airport, Fort Lauderdale-Hollywood International Airport in Florida and Dubai International Airport, to name a few.

In June, Searidge’s Digital Apron and Tower Management System (DATMS) went operational at Hong Kong International Airport as part of an initial phase of the Airport Authority Hong Kong’s large-scale expansion plan, which will bring machine learning to a new, integrated airport operations center.

In addition, Searidge provides the Civil Aviation Department of Hong Kong’s air-traffic control systems with next-generation safety enhancements using its vision AI software.

The deployment in Hong Kong is the industry’s largest digital platform for tower and apron management — and the first collaboration between an airport and an air-navigation service provider for a single digital platform.

Searidge is a member of NVIDIA Metropolis, a partner program focused on bringing to market a new generation of vision AI applications that make the world’s most important spaces and operations safer and more efficient.

Digital Tools for Airports

The early 2000s saw massive growth and restructuring of airports — and with this came increased use of digital tools in the aviation industry.

Founded in 2006, Searidge has become one of the first to bring machine learning to video processing in the aviation space, according to Pat Urbanek, the company’s vice president of business development for Asia Pacific and the Middle East.

“Video processing software for air-traffic control didn’t exist before,” Urbanek said. “It’s taken a decade to become mainstream — but now, intelligent video and machine learning have been brought into airport operations, enabling new levels of automation in air-traffic control and airside operations to enhance safety and efficiency.”

DATMS’s underlying machine learning platform, called Aimee, enables traffic-lighting automation based on data from radars and 4K-resolution video cameras. Aimee is trained to detect aircraft and vehicles. And DATMS is programmed based on the complex roadway rules that determine how buses and other vehicles should operate on service roads across taxiways.

After analyzing video data, the AI-enabled system activates or deactivates airports’ traffic lights in real time, based on when it’s appropriate for passenger buses and other vehicles to move. The status of each traffic light and additional details can also be visualized on end-user screens in airport traffic control rooms.

“What size is an aircraft? Does it have enough space to turn on the runway? Is it going too fast? All of this information and more is sent out over the Searidge Platform and displayed on screen based on user preference,” said Marco Rueckert, vice president of technology at Searidge.

The same underlying technology is applied to provide enhanced safety alerts for aircraft departure and arrival. In real time, DATMS alerts air traffic controllers of safety-standard breaches — taking into consideration clearances for aircraft to enter a runway, takeoff or land.

Speedups With NVIDIA GPUs

Searidge uses NVIDIA GPUs to optimize inference throughput across its deployments at airports around the globe. To train its AI models, Searidge uses an NVIDIA DGX A100 system.

“The NVIDIA platform allowed us to really bring down the hardware footprint and costs from the customer’s perspective,” Rueckert said. “It provides the scalability factor, so we can easily add more cameras with increasing resolution, which ultimately helps us solve more problems and address more customer needs.”

The company is also exploring the integration of voice data — based on communication between pilots and air-traffic controllers — within its machine learning platform to further enhance airport operations.

Searidge’s Digital Tower and Apron solutions can be customized for the unique challenges that come with varying airport layouts and traffic patterns.

“Of course, having aircraft land on time and letting passengers make their connections increases business and efficiency, but our technology has an environmental impact as well,” Urbanek said. “It can prevent burning of huge amounts of fuel — in the air or at the gate — by providing enhanced efficiency and safety for taxiing, takeoff and landing.”

Watch the latest GTC keynote by NVIDIA founder and CEO Jensen Huang to discover how vision AI and other groundbreaking technologies are shaping the world:

Feature video courtesy of Dubai Airports.

The post Searidge Technologies Offers a Safety Net for Airports appeared first on NVIDIA Blog.

Amazon and USC name three new ML Fellows

PhD students will receive fellowship funding and be mentored by Amazon scientists.

Creator EposVox Shares Streaming Lessons, Successes This Week ‘In the NVIDIA Studio’

Editor’s note: This post is part of our weekly In the NVIDIA Studio series, which celebrates featured artists, offers creative tips and tricks, and demonstrates how NVIDIA Studio technology improves creative workflows. In the coming weeks, we’ll be deep diving on new GeForce RTX 40 Series GPU features, technologies and resources, and how they dramatically accelerate content creation.

TwitchCon — the world’s top gathering of live streamers — kicks off Friday with the new line of GeForce RTX 40 Series GPUs bringing incredible new technology — from AV1 to AI — to elevate live streams for aspiring and professional Twitch creators alike.

In addition, creator and educator EposVox is in the NVIDIA Studio to discuss his influences, inspiration and advice for getting the most out of live streams.

Plus, join the #From2Dto3D challenge this month by sharing a 2D piece of art next to a 3D rendition of it for a chance to be featured on the NVIDIA Studio social media channels. Be sure to tag #From2Dto3D to enter.

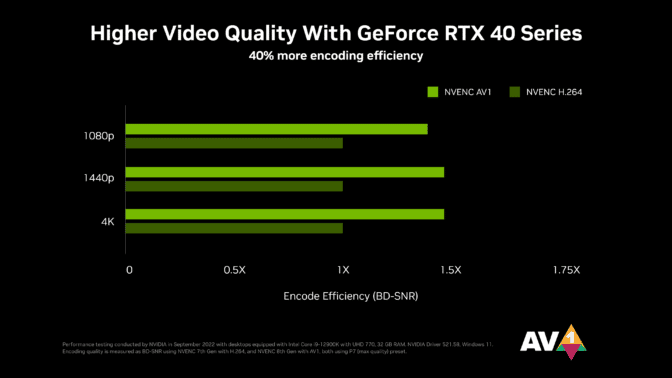

AV1 and Done

Releasing on Oct. 12, the new GeForce RTX 40 Series GPUs feature the eighth-generation NVIDIA video encoder, NVENC for short, now with support for AV1 encoding. For creators like EposVox, the new AV1 encoder will deliver 40% increased efficiency, unlocking higher resolutions and crisper image quality.

NVIDIA has collaborated with OBS Studio to add AV1 support to its next software release, expected later this month. In addition, Discord is enabling AV1 end to end for the first time later this year. GeForce RTX 40 Series owners will be able to stream with crisp, clear image quality at 1440p and even 4K resolution at 60 frames per second.

GeForce RTX 40 Series GPUs also feature dual encoders. This allows creators to capture up to 8K60. And when it’s time to cut a VOD of live streams, the dual encoders work in tandem, dividing work automatically, which slashes export times nearly in half. Blackmagic Design’s DaVinci Resolve, the popular Voukoder plugin for Adobe Premiere Pro, and Jianying — the top video editing app in China — are all enabling dual encoder through encode presets. Expect dual encoder availability for these apps in October.

The GeForce RTX 40 Series GPUs also give game streamers an unprecedented gen-to-gen frame-rate boost in PC games alongside the new NVIDIA DLSS 3 technology, which accelerates performance by up to 4x. This will unlock richer, more immersive ray-traced experiences to share via live streams, such as in Cyberpunk 2077 and Portal with RTX.

Virtual Live Streams Come to Life

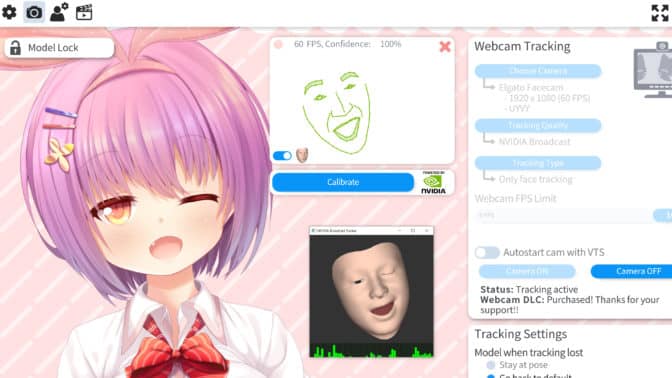

VTube Studio is a leading app for virtual streamers (VTubers) that makes it easy and fun to bring digital avatars to life on a live stream.

VTube Studio is adding support this month for the NVIDIA Broadcast AR SDK, allowing users to seamlessly control their avatars with AI by using a regular webcam and a GeForce RTX GPU.

Objectively Blissful Streaming

OBS doesn’t stand for objectively blissful streaming, but it should.

OBS Studio is free, open-source software for video recording and live streaming. It’s one of EposVox’s essential apps, as he said it “allows me to produce my content at a rapid pace that’s constantly evolving.”

The software now features native integration of the AI-powered NVIDIA Broadcast effects, including Virtual Background, Noise Removal and Room Echo Removal.

In addition to adding AV1 support for GeForce RTX 40 Series GPUs later this month, the recent OBS 28.0 release added support for high-efficiency video coding (HEVC or H.265), improving video compression rates by 15% across a wide range of NVIDIA GPUs. It also now includes support for high-dynamic range (HDR), offering a greater range of bright and dark colors, which brings stunning vibrance and dramatic improvements in visual quality.

Broadcast for All

The SDKs that power NVIDIA Broadcast are available to developers, enabling native AI feature support in devices from Logitech, Corsair and Elgato, as well as advanced workflows in OBS and Notch software.

Features released last month at NVIDIA GTC include new and updated AI-powered effects.

Virtual Background now includes temporal information, so random objects in the background will no longer create distractions by flashing in and out. This will be available in the next major version of OBS Studio.

Face Expression Estimation allows apps to accurately track facial expressions for face meshes, even with the simplest of webcams. It’s hugely beneficial to VTubers and can be found in the next version of VTube Studio.

Eye Contact allows podcasters to appear as if they’re looking directly at the camera — highly useful for when the user is reading a script or looking away to engage with viewers in the chat window.

It’s EposVox’s World, We’re All Just Living in It

Adam Taylor, who goes by the stage name EposVox or “The Stream Professor,” runs a YouTube channel focused on tech education for content creators and streamers.

He’s been making videos since before YouTube even existed.

“DailyMotion, Google Video, does anyone remember MetaCafe? X-Fire?” said EposVox.

He maintains a strong passion for educational content, which stemmed from his desire to learn video editing workflows as a young man, when he lacked the wealth of knowledge and resources available today.

“I immediately ran into constant walls of information that were kept behind closed doors when it came to deeper video topics, audio setups and more,” the artist said. “It was really frustrating — there was nothing and no one, aside from a decade or two of DOOM9 forums and outdated broadcast books, that had ever heard of a USB port to help guide me.”

While content creation and live streaming, especially with software like OBS Studio and XSplit, are EposVox’s primary focuses, he also aspires to make technology more fun and easy to use.

“The GPU acceleration in 3D and video apps, and now all the AI innovations that are coming to new generations, are incredible — I’m not sure I’d be able to create on the level that I do, nor at the speed I do, without NVIDIA GPUs.”

When searching for content inspiration, EposVox deploys a proactive approach — he’s all about asking questions. “Whether it’s trying to figure out how to do some overkill new setup for myself, breaking down neat effects I see elsewhere, or just asking which point in the process might cause friction for a viewer — I ask questions, figure out the best way to answer those questions, and deliver them to viewers,” he said.

EposVox stressed the importance of experimenting with multiple creative applications, noting that “every tool I can add to my tool chest enhances my creativity by giving me more options or ways to create, and more experiences with new processes for creating things.” This is especially true for the use of AI in his creative workflows, he added.

“What I love about AI art generation right now is the fact that I can just type any idea that comes to mind, in plain text language, and see it come to life,” he said. “I may not get exactly what I was expecting, I may have to continue refining my language and ideas to approach the representation I’m after — but knocking down the barrier between idea conception and seeing some form of that idea in front of me, I cannot overstate the impact that is created here.”

For an optimal live-streaming setup, EposVox recommends a PC equipped with a GeForce RTX GPU. His GeForce RTX 3090 desktop GPU, he said, can handle the rigors of the entire creative process and remain fast even when he’s constantly switching between computationally complex creative applications.

The artist said, “These days, I use GPU-accelerated NVENC encoding for capturing, exporting videos and live streaming.”

EposVox can’t wait for his GeForce RTX 4090 GPU upgrade, primarily to take advantage of the new dual encoders, noting, “I’ll probably end up saving a few hours a day since less time waiting on renders and uploads means I can move from project to project much quicker, rather than having to walk away and work on other things. I’ll be able to focus so much more.”

When asked for parting advice, EposVox didn’t hesitate: “If you commit to a creative vision for a project, but the entity you’re making it for— the company, agency, person or whomever — takes the project in a completely different direction, find some way to still bring your vision to life,” he said. “You’ll be so much better off — in terms of how you feel and the experience gained — if you can still bring that to life.”

For more tips on live streaming and video exports, check out EposVox’s YouTube channel.

And for step-by-step tutorials for all creative fields — created by industry-leading artists and community showcases — check out the NVIDIA Studio YouTube channel.

Finally, join the #From2Dto3D challenge by posting on Instagram, Twitter or Facebook.

Get updates directly in your inbox by subscribing to the NVIDIA Studio newsletter.

The post Creator EposVox Shares Streaming Lessons, Successes This Week ‘In the NVIDIA Studio’ appeared first on NVIDIA Blog.