Robots are quickly becoming part of our everyday lives, but they’re often only programmed to perform specific tasks well. While harnessing recent advances in AI could lead to robots that could help in many more ways, progress in building general-purpose robots is slower in part because of the time needed to collect real-world training data. Our latest paper introduces a self-improving AI agent for robotics, RoboCat, that learns to perform a variety of tasks across different arms, and then self-generates new training data to improve its technique.

Spatial LibriSpeech: An Augmented Dataset for Spatial Audio Learning

We present Spatial LibriSpeech, a spatial audio dataset with over 570 hours of 19-channel audio, first-order ambisonics, and optional distractor noise. Spatial LibriSpeech is designed for machine learning model training, and it includes labels for source position, speaking direction, room acoustics and geometry. Spatial LibriSpeech is generated by augmenting LibriSpeech samples with >220k simulated acoustic conditions across >8k synthetic rooms. To demonstrate the utility of our dataset, we train models on four fundamental spatial audio tasks, resulting in a median absolute error of 6.60° on…Apple Machine Learning Research

Stabilizing Transformer Training by Preventing Attention Entropy Collapse

*= Equal Contributors

Training stability is of great importance to Transformers. In this work, we investigate the training dynamics of Transformers by examining the evolution of the attention layers. In particular, we track the attention entropy for each attention head during the course of training, which is a proxy for model sharpness. We identify a common pattern across different architectures and tasks, where low attention entropy is accompanied by high training instability, which can take the form of oscillating loss or divergence. We denote the pathologically low attention entropy…Apple Machine Learning Research

Private Online Prediction from Experts: Separations and Faster Rates

*= Equal Contributors

Online prediction from experts is a fundamental problem in machine learning and several works have studied this problem under privacy constraints. We propose and analyze new algorithms for this problem that improve over the regret bounds of the best existing algorithms for non-adaptive adversaries. For approximate differential privacy, our algorithms achieve regret bounds of for the stochastic setting and for oblivious adversaries (where is the number of experts). For pure DP, our algorithms are the first to obtain sub-linear regret for oblivious adversaries in the…Apple Machine Learning Research

The Monge Gap: A Regularizer to Learn All Transport Maps

Optimal transport (OT) theory has been used in machine learning to study and characterize maps that can efficiently push forward one probability measure onto another.

Recent works have drawn inspiration from Brenier’s theorem, which states that when the ground cost is the squared-Euclidean distance, the “best” map to morph a continuous measure in into another must be the gradient of a convex function.

To exploit that result, , Makkuva et al. (2020); Korotin et al. (2020) consider maps , where is an input convex neural network (ICNN), as defined by Amos et al. 2017, and fit with SGD using…Apple Machine Learning Research

Onboard users to Amazon SageMaker Studio with Active Directory group-specific IAM roles

Amazon SageMaker Studio is a web-based integrated development environment (IDE) for machine learning (ML) that lets you build, train, debug, deploy, and monitor your ML models. For provisioning Studio in your AWS account and Region, you first need to create an Amazon SageMaker domain—a construct that encapsulates your ML environment. More concretely, a SageMaker domain consists of an associated Amazon Elastic File System (Amazon EFS) volume, a list of authorized users, and a variety of security, application, policy, and Amazon Virtual Private Cloud (Amazon VPC) configurations.

When creating your SageMaker domain, you can choose to use either AWS IAM Identity Center (successor to AWS Single Sign-On) or AWS Identity and Access Management (IAM) for user authentication methods. Both authentication methods have their own set of use cases; in this post, we focus on SageMaker domains with IAM Identity Center, or single sign-on (SSO) mode, as the authentication method.

With SSO mode, you set up an SSO user and group in IAM Identity Center and then grant access to either the SSO group or user from the Studio console. Currently, all SSO users in a domain inherit the domain’s execution role. This may not work for all organizations. For instance, administrators may want to set up IAM permissions for a Studio SSO user based on their Active Directory (AD) group membership. Furthermore, because administrators are required to manually grant SSO users access to Studio, the process may not scale when onboarding hundreds of users.

In this post, we provide prescriptive guidance for the solution to provision SSO users to Studio with least privilege permissions based on AD group membership. This guidance enables you to quickly scale for onboarding hundreds of users to Studio and achieve your security and compliance posture.

Solution overview

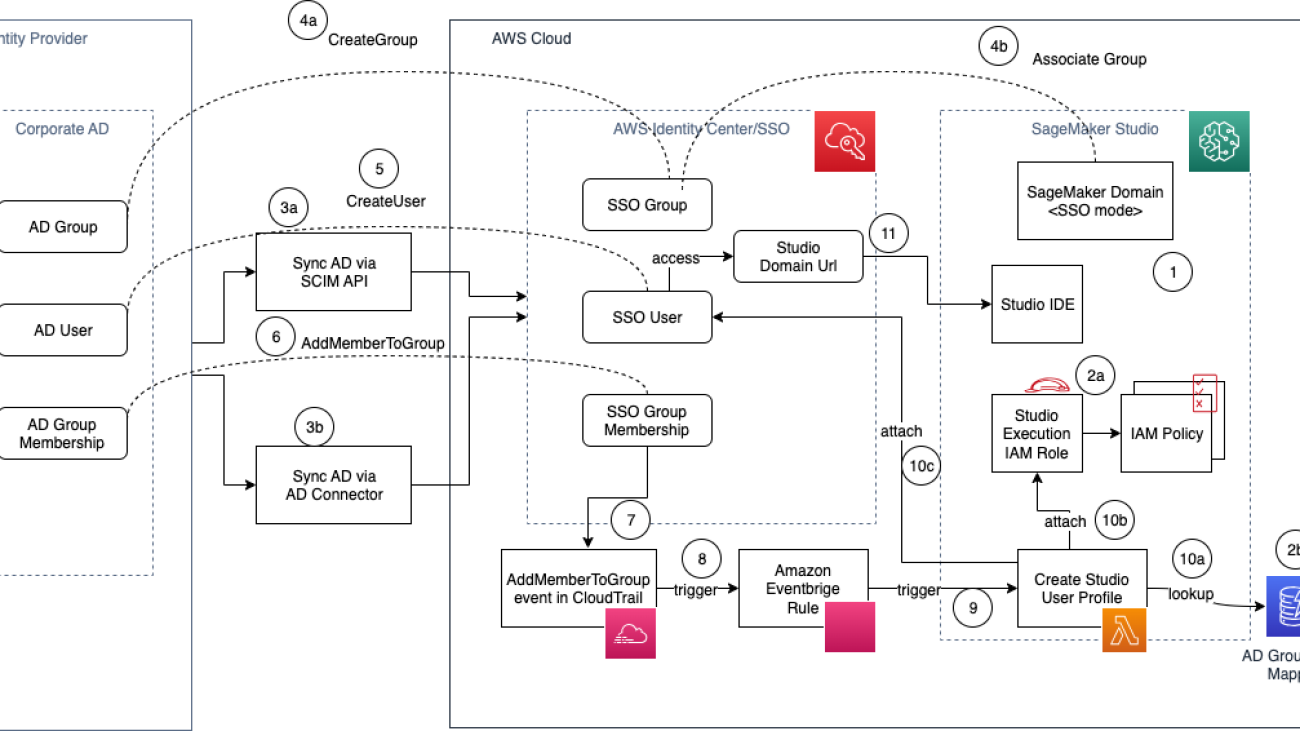

The following diagram illustrates the solution architecture.

The workflow to provision AD users in Studio includes the following steps:

1. Set up a Studio domain in SSO mode.

2. For each AD group:

   a. Set up your Studio execution role with appropriate fine-grained IAM policies.

   b. Record an entry in the AD group-role mapping Amazon DynamoDB table.

   Alternatively, you can adopt a naming standard for IAM role ARNs based on the AD group name and derive the IAM role ARN without needing to store the mapping in an external database.

3. Sync your AD users, groups, and memberships to IAM Identity Center:

   a. If you’re using an identity provider (IdP) that supports SCIM, use the SCIM API integration with IAM Identity Center.

   b. If you’re using self-managed AD, you can use AD Connector.

4. When the AD group is created in your corporate AD, complete the following steps:

   a. Create a corresponding SSO group in IAM Identity Center.

   b. Associate the SSO group to the Studio domain using the SageMaker console.

5. When an AD user is created in your corporate AD, a corresponding SSO user is created in IAM Identity Center.

6. When the AD user is assigned to an AD group, an IAM Identity Center API (CreateGroupMembership) is invoked, and SSO group membership is created.

7. The preceding event is logged in AWS CloudTrail with the name AddMemberToGroup.

8. An Amazon EventBridge rule listens to CloudTrail events and matches the AddMemberToGroup rule pattern.

9. The EventBridge rule triggers the target AWS Lambda function.

10. This Lambda function calls back IAM Identity Center APIs, gets the SSO user and group information, and performs the following steps to create the Studio user profile (CreateUserProfile) for the SSO user:

    a. Look up the DynamoDB table to fetch the IAM role corresponding to the AD group.

    b. Create a user profile with the SSO user and the IAM role obtained from the lookup table.

11. The SSO user is granted access to Studio.

12. The SSO user is redirected to the Studio IDE via the Studio domain URL.

Note that, as of writing, Step 4b (associate the SSO group to the Studio domain) needs to be performed manually by an admin using the SageMaker console at the SageMaker domain level.
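The workflow mentions that the IAM role ARN can be derived from the AD group name instead of being stored in a mapping table. The following Python sketch illustrates one way that might look. The `SageMakerStudio-` prefix, the sanitization rule, and the function name `derive_role_arn` are hypothetical choices made for illustration; the post does not prescribe a specific naming standard.

```python
import re

# Hypothetical naming convention: one Studio execution role per AD group,
# named "<prefix><sanitized group name>". Neither the prefix nor the
# sanitization rule comes from the post.
ROLE_PREFIX = "SageMakerStudio-"


def derive_role_arn(account_id: str, ad_group_name: str) -> str:
    """Build the execution role ARN for an AD group from its name alone."""
    # IAM role names allow letters, digits, and the characters +=,.@_-
    # so anything else (for example, a space) is replaced with a hyphen.
    safe_group = re.sub(r"[^A-Za-z0-9+=,.@_-]", "-", ad_group_name)
    role_name = f"{ROLE_PREFIX}{safe_group}"
    if len(role_name) > 64:  # IAM limits role names to 64 characters
        raise ValueError(f"role name too long: {role_name}")
    return f"arn:aws:iam::{account_id}:role/{role_name}"
```

With this convention, `derive_role_arn("111122223333", "Data Scientists")` yields `arn:aws:iam::111122223333:role/SageMakerStudio-Data-Scientists`. The trade-off is that renaming an AD group silently changes the role it maps to, which a table-driven mapping avoids.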

Set up a Lambda function to create the user profiles

The solution uses a Lambda function to create the Studio user profiles. We provide the following sample Lambda function that you can copy and modify to meet your needs for automating the creation of the Studio user profile. This function performs the following actions:

- Receive the CloudTrail AddMemberToGroup event from EventBridge.

- Retrieve the Studio DOMAIN_ID from the environment variable (you can alternatively hard-code the domain ID or use a DynamoDB table as well if you have multiple domains).

- Read from a dummy markup table to match AD users to execution roles. You can change this to fetch from the DynamoDB table if you’re using a table-driven approach. If you use DynamoDB, your Lambda function’s execution role needs permissions to read from the table as well.

- Retrieve the SSO user and AD group membership information from IAM Identity Center, based on the CloudTrail event data.

- Create a Studio user profile for the SSO user, with the SSO details and the matching execution role.
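The actions above can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the authors' sample function: the layout of the CloudTrail event (`detail.requestParameters` with `identityStoreId`, `groupId`, and `member.memberId`), the contents of `GROUP_ROLE_MAP`, and the helper names are all assumptions, so inspect a real event in your account before relying on the field names. The boto3 calls used (`describe_group`, `describe_user`, `create_user_profile`) are passed in as client objects so the logic can be exercised without AWS access.

```python
import os
import re

# Dummy mapping from AD group name to Studio execution role ARN.
# Group names, account ID, and role names are placeholders.
GROUP_ROLE_MAP = {
    "ml-engineers": "arn:aws:iam::111122223333:role/StudioMLEngineerRole",
    "data-scientists": "arn:aws:iam::111122223333:role/StudioDataScientistRole",
}


def profile_name_for(user_name: str) -> str:
    """Turn an SSO user name into a valid Studio user profile name.

    Profile names may contain only letters, digits, and hyphens (up to 63
    characters), so runs of other characters become a single hyphen.
    """
    return re.sub(r"[^A-Za-z0-9]+", "-", user_name)[:63].strip("-")


def create_profile_for_event(event, identitystore, sagemaker, domain_id):
    """Create a Studio user profile for the user named in the event."""
    # Assumed event layout: identifiers live under detail.requestParameters.
    params = event["detail"]["requestParameters"]
    store_id = params["identityStoreId"]

    group = identitystore.describe_group(
        IdentityStoreId=store_id, GroupId=params["groupId"]
    )
    user = identitystore.describe_user(
        IdentityStoreId=store_id, UserId=params["member"]["memberId"]
    )

    # Raises KeyError for groups without a mapped execution role.
    role_arn = GROUP_ROLE_MAP[group["DisplayName"]]

    response = sagemaker.create_user_profile(
        DomainId=domain_id,
        UserProfileName=profile_name_for(user["UserName"]),
        SingleSignOnUserIdentifier="UserName",
        SingleSignOnUserValue=user["UserName"],
        UserSettings={"ExecutionRole": role_arn},
    )
    return response["UserProfileArn"]


def lambda_handler(event, context):
    import boto3  # imported here so the helpers above can be tested offline

    arn = create_profile_for_event(
        event,
        boto3.client("identitystore"),
        boto3.client("sagemaker"),
        os.environ["DOMAIN_ID"],
    )
    return {"UserProfileArn": arn}
```

Keeping the AWS clients as parameters is a design choice for testability. To switch to the table-driven approach, replace the `GROUP_ROLE_MAP` lookup with a DynamoDB read.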

Note that by default, the Lambda execution role doesn’t have access to create user profiles or list SSO users. After you create the Lambda function, access the function’s execution role on IAM and attach the following policy as an inline policy after scoping down as needed based on your organization requirements.
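As a rough guide to what such an inline policy might contain, the following Python sketch builds a policy document covering the calls described in this post: reading SSO users and groups, creating user profiles, and passing the Studio execution roles to SageMaker. This is an assumption-based sketch, not the authors' policy. The statement IDs and the function name `build_inline_policy` are hypothetical, and the `"*"` resources should be scoped down for your organization.

```python
import json


def build_inline_policy(execution_role_arns):
    """Return an IAM policy document (as a dict) for the onboarding Lambda."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "ReadSsoUsersAndGroups",
                "Effect": "Allow",
                "Action": [
                    "identitystore:DescribeUser",
                    "identitystore:DescribeGroup",
                ],
                "Resource": "*",  # scope down where possible
            },
            {
                "Sid": "CreateStudioUserProfiles",
                "Effect": "Allow",
                "Action": "sagemaker:CreateUserProfile",
                "Resource": "*",  # scope down to your domain's ARNs
            },
            {
                # Needed so the function can attach an execution role
                # to the user profiles it creates.
                "Sid": "PassStudioExecutionRoles",
                "Effect": "Allow",
                "Action": "iam:PassRole",
                "Resource": list(execution_role_arns),
                "Condition": {
                    "StringEquals": {
                        "iam:PassedToService": "sagemaker.amazonaws.com"
                    }
                },
            },
        ],
    }


if __name__ == "__main__":
    roles = ["arn:aws:iam::111122223333:role/StudioMLEngineerRole"]
    print(json.dumps(build_inline_policy(roles), indent=2))
```

Running the script prints JSON that can be pasted into the IAM console's inline policy editor after you replace the placeholder role ARN.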

Set up the EventBridge rule for the CloudTrail event

EventBridge is a serverless event bus service that you can use to connect your applications with data from a variety of sources. In this solution, we create a rule-based trigger: EventBridge listens to events and matches against the provided pattern and triggers a Lambda function if the pattern match is successful. As explained in the solution overview, we listen to the AddMemberToGroup event. To set it up, complete the following steps:

- On the EventBridge console, choose Rules in the navigation pane.

- Choose Create rule.

- Provide a rule name, for example, AddUserToADGroup.

- Optionally, enter a description.

- Select default for the event bus.

- Under Rule type, choose Rule with an event pattern, then choose Next.

- On the Build event pattern page, choose Event source as AWS events or EventBridge partner events.

- Under Event pattern, choose the Custom patterns (JSON editor) tab and enter the following pattern:

- Choose Next.

- On the Select target(s) page, choose the AWS service for the target type, the Lambda function as the target, and the function you created earlier, then choose Next.

- Choose Next on the Configure tags page, then choose Create rule on the Review and create page.
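For the custom pattern step, a pattern along the following lines is one plausible shape, expressed here as a Python dictionary so it can be printed as JSON for the editor. The `source` and `eventSource` values are assumptions about how IAM Identity Center directory events appear in CloudTrail; compare them against a real event from your account before using them. The `matches` helper is a deliberately simplified stand-in for EventBridge's matching (exact values only, no prefix or numeric operators) and is included only to show how the pattern selects events.

```python
import json

# Assumed pattern for the AddMemberToGroup CloudTrail event.
EVENT_PATTERN = {
    "source": ["aws.sso-directory"],
    "detail-type": ["AWS API Call via CloudTrail"],
    "detail": {
        "eventSource": ["sso-directory.amazonaws.com"],
        "eventName": ["AddMemberToGroup"],
    },
}


def matches(pattern, event):
    """Simplified exact-value matching.

    Every key in the pattern must be present in the event. Nested dicts are
    matched recursively, and a list in the pattern is the set of allowed
    values for that field.
    """
    for key, expected in pattern.items():
        if key not in event:
            return False
        if isinstance(expected, dict):
            if not isinstance(event[key], dict):
                return False
            if not matches(expected, event[key]):
                return False
        elif event[key] not in expected:
            return False
    return True


if __name__ == "__main__":
    # Print the pattern as JSON for the EventBridge console editor.
    print(json.dumps(EVENT_PATTERN, indent=2))
```

Fields that the pattern does not mention, such as `requestParameters`, are ignored by the match, so the full event still reaches the Lambda function.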

After you’ve set up the Lambda function and the EventBridge rule, you can test the solution. To do so, open your IdP and add a user to one of the AD groups that has a Studio execution role mapped. After you add the user, check the Lambda function logs to inspect the event and confirm that the Studio user profile was provisioned automatically. Additionally, you can use the DescribeUserProfile API call to verify that the user is created with appropriate permissions.
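The DescribeUserProfile check could be scripted roughly as follows. This is a small sketch: the function name `profile_has_role` is hypothetical, and `sagemaker` is expected to be a boto3 SageMaker client (for example, `boto3.client("sagemaker")`).

```python
def profile_has_role(sagemaker, domain_id, profile_name, expected_role_arn):
    """Return True if the profile is in service with the expected role."""
    response = sagemaker.describe_user_profile(
        DomainId=domain_id, UserProfileName=profile_name
    )
    # UserSettings may be absent if the profile inherits domain defaults.
    role = response.get("UserSettings", {}).get("ExecutionRole")
    return response.get("Status") == "InService" and role == expected_role_arn
```

A `False` result right after provisioning may only mean that the profile is still in the `Pending` state, so retry before treating it as a failure.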

Supporting multiple Studio accounts

To support multiple Studio accounts with the preceding architecture, we recommend the following changes:

- Set up an AD group mapped to each Studio account level.

- Set up a group-level IAM role in each Studio account.

- Set up or derive the group to IAM role mapping.

- Set up a Lambda function that assumes a role in the target Studio account, based on the mapped IAM role ARN, and creates the user profile there.
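The cross-account step might be sketched like this. It is an illustration under assumptions: the function name, the session name, and the idea of a dedicated onboarding role in each Studio account are hypothetical, while `assume_role` and the credential keyword arguments are standard AWS STS and boto3 usage.

```python
def sagemaker_client_for_account(
    sts, role_arn, client_factory, session_name="studio-onboarding"
):
    """Assume a role in another account and return a SageMaker client for it.

    `sts` is an STS client and `client_factory` is a callable with the same
    signature as `boto3.client`.
    """
    credentials = sts.assume_role(
        RoleArn=role_arn, RoleSessionName=session_name
    )["Credentials"]
    return client_factory(
        "sagemaker",
        aws_access_key_id=credentials["AccessKeyId"],
        aws_secret_access_key=credentials["SecretAccessKey"],
        aws_session_token=credentials["SessionToken"],
    )
```

In a Lambda function this would be called as `sagemaker_client_for_account(boto3.client("sts"), role_arn, boto3.client)`, and the returned client used for the CreateUserProfile call in the target account. The assumed role needs a trust policy that allows the Lambda function's execution role to assume it.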

Deprovisioning users

When a user is removed from their AD group, you should remove their access from the Studio domain as well. With SSO, when a user is removed, the user is disabled in IAM Identity Center automatically if the AD to IAM Identity Center sync is in place, and their Studio application access is immediately revoked.

However, the user profile on Studio still persists. You can add a similar workflow with CloudTrail and a Lambda function to remove the user profile from Studio. The EventBridge trigger should now listen for the DeleteGroupMembership event. In the Lambda function, complete the following steps:

- Obtain the user profile name from the user and group ID.

- List all running apps for the user profile using the ListApps API call, filtering by the UserProfileNameEquals parameter. Make sure to check for the paginated response, to list all apps for the user.

- Delete all running apps for the user and wait until all apps are deleted. You can use the DescribeApp API to view the app’s status.

- When all apps are in a Deleted state (or Failed), delete the user profile.
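The deprovisioning steps above can be sketched as follows. This is a minimal illustration, not the authors' code: the function names, polling interval, and poll limit are assumptions, and the sketch reads each app's status from repeated ListApps calls rather than calling DescribeApp per app. `sm` is expected to be a boto3 SageMaker client.

```python
import time

# App states after which the user profile can be deleted.
TERMINAL_STATES = {"Deleted", "Failed"}


def list_all_apps(sm, domain_id, profile_name):
    """Return every app for the profile, following NextToken pagination."""
    apps, token = [], None
    while True:
        kwargs = {
            "DomainIdEquals": domain_id,
            "UserProfileNameEquals": profile_name,
        }
        if token:
            kwargs["NextToken"] = token
        page = sm.list_apps(**kwargs)
        apps.extend(page.get("Apps", []))
        token = page.get("NextToken")
        if not token:
            return apps


def remove_user_profile(
    sm, domain_id, profile_name, poll_seconds=15, max_polls=40, sleep=time.sleep
):
    """Delete the profile's apps, wait for them, then delete the profile.

    Returns True if the profile was deleted, False if apps were still
    active after max_polls checks.
    """
    for _ in range(max_polls):
        apps = list_all_apps(sm, domain_id, profile_name)
        active = [a for a in apps if a["Status"] not in TERMINAL_STATES]
        if not active:
            sm.delete_user_profile(
                DomainId=domain_id, UserProfileName=profile_name
            )
            return True
        for app in active:
            # Only in-service apps are deleted here; apps that are still
            # starting or already deleting are re-checked on the next poll.
            if app["Status"] == "InService":
                sm.delete_app(
                    DomainId=domain_id,
                    UserProfileName=profile_name,
                    AppType=app["AppType"],
                    AppName=app["AppName"],
                )
        sleep(poll_seconds)
    return False
```

Because app deletion can take several minutes, the polling budget should stay within the Lambda function's configured timeout. For longer waits, a retry through a queue or a state machine may be a better fit than a single invocation.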

With this solution in place, ML platform administrators can maintain group memberships in one central location and automate the Studio user profile management through EventBridge and Lambda functions.

The following code shows a sample CloudTrail event:

The following code shows a sample Studio user profile API request:

Conclusion

In this post, we discussed how administrators can scale Studio onboarding for hundreds of users based on their AD group membership. We demonstrated an end-to-end solution architecture that organizations can adopt to automate and scale their onboarding process to meet their agility, security, and compliance needs. If you’re looking for a scalable solution to automate your user onboarding, try this solution, and leave your feedback below! For more information about onboarding to Studio, see Onboard to Amazon SageMaker Domain.

About the authors

Ram Vittal is an ML Specialist Solutions Architect at AWS. He has over 20 years of experience architecting and building distributed, hybrid, and cloud applications. He is passionate about building secure and scalable AI/ML and big data solutions to help enterprise customers with their cloud adoption and optimization journey to improve their business outcomes. In his spare time, he rides his motorcycle and walks with his 2-year-old sheep-a-doodle!

Durga Sury is an ML Solutions Architect in the Amazon SageMaker Service SA team. She is passionate about making machine learning accessible to everyone. In her 4 years at AWS, she has helped set up AI/ML platforms for enterprise customers. When she isn’t working, she loves motorcycle rides, mystery novels, and hiking with her 5-year-old husky.

Google at CVPR 2023

This week marks the beginning of the premier annual Computer Vision and Pattern Recognition conference (CVPR 2023), held in-person in Vancouver, BC (with additional virtual content). As a leader in computer vision research and a Platinum Sponsor, Google Research will have a strong presence across CVPR 2023 with 90 papers being presented at the main conference and active involvement in over 40 conference workshops and tutorials.

If you are attending CVPR this year, please stop by our booth to chat with our researchers who are actively exploring the latest techniques for application to various areas of machine perception. Our researchers will also be available to talk about and demo several recent efforts, including on-device ML applications with MediaPipe, strategies for differential privacy, neural radiance field technologies and much more.

You can also learn more about our research being presented at CVPR 2023 in the list below (Google affiliations in bold).

Board and organizing committee

Senior area chairs include: Cordelia Schmid, Ming-Hsuan Yang

Area chairs include: Andre Araujo, Anurag Arnab, Rodrigo Benenson, Ayan Chakrabarti, Huiwen Chang, Alireza Fathi, Vittorio Ferrari, Golnaz Ghiasi, Boqing Gong, Yedid Hoshen, Varun Jampani, Lu Jiang, Da-Cheng Juan, Dahun Kim, Stephen Lombardi, Peyman Milanfar, Ben Mildenhall, Arsha Nagrani, Jordi Pont-Tuset, Paul Hongsuck Seo, Fei Sha, Saurabh Singh, Noah Snavely, Kihyuk Sohn, Chen Sun, Pratul P. Srinivasan, Deqing Sun, Andrea Tagliasacchi, Federico Tombari, Jasper Uijlings

Publicity Chair: Boqing Gong

Demonstration Chair: Jonathan T. Barron

Program Advisory Board includes: Cordelia Schmid, Richard Szeliski

Panels

Scientific Discovery and the Environment

Best Paper Award candidates

MobileNeRF: Exploiting the Polygon Rasterization Pipeline for Efficient Neural Field Rendering on Mobile Architectures

Zhiqin Chen, Thomas Funkhouser, Peter Hedman, Andrea Tagliasacchi

DynIBaR: Neural Dynamic Image-Based Rendering

Zhengqi Li, Qianqian Wang, Forrester Cole, Richard Tucker, Noah Snavely

DreamBooth: Fine Tuning Text-to-Image Diffusion Models for Subject-Driven Generation

Nataniel Ruiz*, Yuanzhen Li, Varun Jampani, Yael Pritch, Michael Rubinstein, Kfir Aberman

On Distillation of Guided Diffusion Models

Chenlin Meng, Robin Rombach, Ruiqi Gao, Diederik Kingma, Stefano Ermon, Jonathan Ho, Tim Salimans

Highlight papers

Connecting Vision and Language with Video Localized Narratives

Paul Voigtlaender, Soravit Changpinyo, Jordi Pont-Tuset, Radu Soricut, Vittorio Ferrari

MaskSketch: Unpaired Structure-Guided Masked Image Generation

Dina Bashkirova*, Jose Lezama, Kihyuk Sohn, Kate Saenko, Irfan Essa

SPARF: Neural Radiance Fields from Sparse and Noisy Poses

Prune Truong*, Marie-Julie Rakotosaona, Fabian Manhardt, Federico Tombari

MAGVIT: Masked Generative Video Transformer

Lijun Yu*, Yong Cheng, Kihyuk Sohn, Jose Lezama, Han Zhang, Huiwen Chang, Alexander Hauptmann, Ming-Hsuan Yang, Yuan Hao, Irfan Essa, Lu Jiang

Region-Aware Pretraining for Open-Vocabulary Object Detection with Vision Transformers

Dahun Kim, Anelia Angelova, Weicheng Kuo

I2MVFormer: Large Language Model Generated Multi-View Document Supervision for Zero-Shot Image Classification

Muhammad Ferjad Naeem, Gul Zain Khan, Yongqin Xian, Muhammad Zeshan Afzal, Didier Stricker, Luc Van Gool, Federico Tombari

Improving Robust Generalization by Direct PAC-Bayesian Bound Minimization

Zifan Wang*, Nan Ding, Tomer Levinboim, Xi Chen, Radu Soricut

Imagen Editor and EditBench: Advancing and Evaluating Text-Guided Image Inpainting (see blog post)

Su Wang, Chitwan Saharia, Ceslee Montgomery, Jordi Pont-Tuset, Shai Noy, Stefano Pellegrini, Yasumasa Onoe, Sarah Laszlo, David J. Fleet, Radu Soricut, Jason Baldridge, Mohammad Norouzi, Peter Anderson, William Chan

RUST: Latent Neural Scene Representations from Unposed Imagery

Mehdi S. M. Sajjadi, Aravindh Mahendran, Thomas Kipf, Etienne Pot, Daniel Duckworth, Mario Lučić, Klaus Greff

REVEAL: Retrieval-Augmented Visual-Language Pre-Training with Multi-Source Multimodal Knowledge Memory (see blog post)

Ziniu Hu*, Ahmet Iscen, Chen Sun, Zirui Wang, Kai-Wei Chang, Yizhou Sun, Cordelia Schmid, David Ross, Alireza Fathi

RobustNeRF: Ignoring Distractors with Robust Losses

Sara Sabour, Suhani Vora, Daniel Duckworth, Ivan Krasin, David J. Fleet, Andrea Tagliasacchi

Papers

AligNeRF: High-Fidelity Neural Radiance Fields via Alignment-Aware Training

Yifan Jiang*, Peter Hedman, Ben Mildenhall, Dejia Xu, Jonathan T. Barron, Zhangyang Wang, Tianfan Xue*

BlendFields: Few-Shot Example-Driven Facial Modeling

Kacper Kania, Stephan Garbin, Andrea Tagliasacchi, Virginia Estellers, Kwang Moo Yi, Tomasz Trzcinski, Julien Valentin, Marek Kowalski

Enhancing Deformable Local Features by Jointly Learning to Detect and Describe Keypoints

Guilherme Potje, Felipe Cadar, Andre Araujo, Renato Martins, Erickson Nascimento

How Can Objects Help Action Recognition?

Xingyi Zhou, Anurag Arnab, Chen Sun, Cordelia Schmid

Hybrid Neural Rendering for Large-Scale Scenes with Motion Blur

Peng Dai, Yinda Zhang, Xin Yu, Xiaoyang Lyu, Xiaojuan Qi

IFSeg: Image-Free Semantic Segmentation via Vision-Language Model

Sukmin Yun, Seong Park, Paul Hongsuck Seo, Jinwoo Shin

Learning from Unique Perspectives: User-Aware Saliency Modeling (see blog post)

Shi Chen*, Nachiappan Valliappan, Shaolei Shen, Xinyu Ye, Kai Kohlhoff, Junfeng He

MAGE: MAsked Generative Encoder to Unify Representation Learning and Image Synthesis

Tianhong Li*, Huiwen Chang, Shlok Kumar Mishra, Han Zhang, Dina Katabi, Dilip Krishnan

NeRF-Supervised Deep Stereo

Fabio Tosi, Alessio Tonioni, Daniele Gregorio, Matteo Poggi

Omnimatte3D: Associating Objects and their Effects in Unconstrained Monocular Video

Mohammed Suhail, Erika Lu, Zhengqi Li, Noah Snavely, Leon Sigal, Forrester Cole

OpenScene: 3D Scene Understanding with Open Vocabularies

Songyou Peng, Kyle Genova, Chiyu Jiang, Andrea Tagliasacchi, Marc Pollefeys, Thomas Funkhouser

PersonNeRF: Personalized Reconstruction from Photo Collections

Chung-Yi Weng, Pratul Srinivasan, Brian Curless, Ira Kemelmacher-Shlizerman

Prefix Conditioning Unifies Language and Label Supervision

Kuniaki Saito*, Kihyuk Sohn, Xiang Zhang, Chun-Liang Li, Chen-Yu Lee, Kate Saenko, Tomas Pfister

Rethinking Video ViTs: Sparse Video Tubes for Joint Image and Video Learning (see blog post)

AJ Piergiovanni, Weicheng Kuo, Anelia Angelova

Burstormer: Burst Image Restoration and Enhancement Transformer

Akshay Dudhane, Syed Waqas Zamir, Salman Khan, Fahad Shahbaz Khan, Ming-Hsuan Yang

Decentralized Learning with Multi-Headed Distillation

Andrey Zhmoginov, Mark Sandler, Nolan Miller, Gus Kristiansen, Max Vladymyrov

GINA-3D: Learning to Generate Implicit Neural Assets in the Wild

Bokui Shen, Xinchen Yan, Charles R. Qi, Mahyar Najibi, Boyang Deng, Leonidas Guibas, Yin Zhou, Dragomir Anguelov

Grad-PU: Arbitrary-Scale Point Cloud Upsampling via Gradient Descent with Learned Distance Functions

Yun He, Danhang Tang, Yinda Zhang, Xiangyang Xue, Yanwei Fu

Hi-LASSIE: High-Fidelity Articulated Shape and Skeleton Discovery from Sparse Image Ensemble

Chun-Han Yao*, Wei-Chih Hung, Yuanzhen Li, Michael Rubinstein, Ming-Hsuan Yang, Varun Jampani

Hyperbolic Contrastive Learning for Visual Representations beyond Objects

Songwei Ge, Shlok Mishra, Simon Kornblith, Chun-Liang Li, David Jacobs

Imagic: Text-Based Real Image Editing with Diffusion Models

Bahjat Kawar*, Shiran Zada, Oran Lang, Omer Tov, Huiwen Chang, Tali Dekel, Inbar Mosseri, Michal Irani

Incremental 3D Semantic Scene Graph Prediction from RGB Sequences

Shun-Cheng Wu, Keisuke Tateno, Nassir Navab, Federico Tombari

IPCC-TP: Utilizing Incremental Pearson Correlation Coefficient for Joint Multi-Agent Trajectory Prediction

Dekai Zhu, Guangyao Zhai, Yan Di, Fabian Manhardt, Hendrik Berkemeyer, Tuan Tran, Nassir Navab, Federico Tombari, Benjamin Busam

Learning to Generate Image Embeddings with User-Level Differential Privacy

Zheng Xu, Maxwell Collins, Yuxiao Wang, Liviu Panait, Sewoong Oh, Sean Augenstein, Ting Liu, Florian Schroff, H. Brendan McMahan

NoisyTwins: Class-Consistent and Diverse Image Generation Through StyleGANs

Harsh Rangwani, Lavish Bansal, Kartik Sharma, Tejan Karmali, Varun Jampani, Venkatesh Babu Radhakrishnan

NULL-Text Inversion for Editing Real Images Using Guided Diffusion Models

Ron Mokady*, Amir Hertz*, Kfir Aberman, Yael Pritch, Daniel Cohen-Or*

SCOOP: Self-Supervised Correspondence and Optimization-Based Scene Flow

Itai Lang*, Dror Aiger, Forrester Cole, Shai Avidan, Michael Rubinstein

Shape, Pose, and Appearance from a Single Image via Bootstrapped Radiance Field Inversion

Dario Pavllo*, David Joseph Tan, Marie-Julie Rakotosaona, Federico Tombari

TexPose: Neural Texture Learning for Self-Supervised 6D Object Pose Estimation

Hanzhi Chen, Fabian Manhardt, Nassir Navab, Benjamin Busam

TryOnDiffusion: A Tale of Two UNets

Luyang Zhu*, Dawei Yang, Tyler Zhu, Fitsum Reda, William Chan, Chitwan Saharia, Mohammad Norouzi, Ira Kemelmacher-Shlizerman

A New Path: Scaling Vision-and-Language Navigation with Synthetic Instructions and Imitation Learning

Aishwarya Kamath*, Peter Anderson, Su Wang, Jing Yu Koh*, Alexander Ku, Austin Waters, Yinfei Yang*, Jason Baldridge, Zarana Parekh

CLIPPO: Image-and-Language Understanding from Pixels Only

Michael Tschannen, Basil Mustafa, Neil Houlsby

Controllable Light Diffusion for Portraits

David Futschik, Kelvin Ritland, James Vecore, Sean Fanello, Sergio Orts-Escolano, Brian Curless, Daniel Sýkora, Rohit Pandey

CUF: Continuous Upsampling Filters

Cristina Vasconcelos, Cengiz Oztireli, Mark Matthews, Milad Hashemi, Kevin Swersky, Andrea Tagliasacchi

Improving Zero-Shot Generalization and Robustness of Multi-modal Models

Yunhao Ge*, Jie Ren, Andrew Gallagher, Yuxiao Wang, Ming-Hsuan Yang, Hartwig Adam, Laurent Itti, Balaji Lakshminarayanan, Jiaping Zhao

LOCATE: Localize and Transfer Object Parts for Weakly Supervised Affordance Grounding

Gen Li, Varun Jampani, Deqing Sun, Laura Sevilla-Lara

Nerflets: Local Radiance Fields for Efficient Structure-Aware 3D Scene Representation from 2D Supervision

Xiaoshuai Zhang, Abhijit Kundu, Thomas Funkhouser, Leonidas Guibas, Hao Su, Kyle Genova

Self-Supervised AutoFlow

Hsin-Ping Huang, Charles Herrmann, Junhwa Hur, Erika Lu, Kyle Sargent, Austin Stone, Ming-Hsuan Yang, Deqing Sun

Train-Once-for-All Personalization

Hong-You Chen*, Yandong Li, Yin Cui, Mingda Zhang, Wei-Lun Chao, Li Zhang

Vid2Seq: Large-Scale Pretraining of a Visual Language Model for Dense Video Captioning (see blog post)

Antoine Yang*, Arsha Nagrani, Paul Hongsuck Seo, Antoine Miech, Jordi Pont-Tuset, Ivan Laptev, Josef Sivic, Cordelia Schmid

VILA: Learning Image Aesthetics from User Comments with Vision-Language Pretraining

Junjie Ke, Keren Ye, Jiahui Yu, Yonghui Wu, Peyman Milanfar, Feng Yang

You Need Multiple Exiting: Dynamic Early Exiting for Accelerating Unified Vision Language Model

Shengkun Tang, Yaqing Wang, Zhenglun Kong, Tianchi Zhang, Yao Li, Caiwen Ding, Yanzhi Wang, Yi Liang, Dongkuan Xu

Accidental Light Probes

Hong-Xing Yu, Samir Agarwala, Charles Herrmann, Richard Szeliski, Noah Snavely, Jiajun Wu, Deqing Sun

FedDM: Iterative Distribution Matching for Communication-Efficient Federated Learning

Yuanhao Xiong, Ruochen Wang, Minhao Cheng, Felix Yu, Cho-Jui Hsieh

FlexiViT: One Model for All Patch Sizes

Lucas Beyer, Pavel Izmailov, Alexander Kolesnikov, Mathilde Caron, Simon Kornblith, Xiaohua Zhai, Matthias Minderer, Michael Tschannen, Ibrahim Alabdulmohsin, Filip Pavetic

Iterative Vision-and-Language Navigation

Jacob Krantz, Shurjo Banerjee, Wang Zhu, Jason Corso, Peter Anderson, Stefan Lee, Jesse Thomason

MoDi: Unconditional Motion Synthesis from Diverse Data

Sigal Raab, Inbal Leibovitch, Peizhuo Li, Kfir Aberman, Olga Sorkine-Hornung, Daniel Cohen-Or

Multimodal Prompting with Missing Modalities for Visual Recognition

Yi-Lun Lee, Yi-Hsuan Tsai, Wei-Chen Chiu, Chen-Yu Lee

Scene-Aware Egocentric 3D Human Pose Estimation

Jian Wang, Diogo Luvizon, Weipeng Xu, Lingjie Liu, Kripasindhu Sarkar, Christian Theobalt

ShapeClipper: Scalable 3D Shape Learning from Single-View Images via Geometric and CLIP-Based Consistency

Zixuan Huang, Varun Jampani, Ngoc Anh Thai, Yuanzhen Li, Stefan Stojanov, James M. Rehg

Improving Image Recognition by Retrieving from Web-Scale Image-Text Data

Ahmet Iscen, Alireza Fathi, Cordelia Schmid

JacobiNeRF: NeRF Shaping with Mutual Information Gradients

Xiaomeng Xu, Yanchao Yang, Kaichun Mo, Boxiao Pan, Li Yi, Leonidas Guibas

Learning Personalized High Quality Volumetric Head Avatars from Monocular RGB Videos

Ziqian Bai*, Feitong Tan, Zeng Huang, Kripasindhu Sarkar, Danhang Tang, Di Qiu, Abhimitra Meka, Ruofei Du, Mingsong Dou, Sergio Orts-Escolano, Rohit Pandey, Ping Tan, Thabo Beeler, Sean Fanello, Yinda Zhang

NeRF in the Palm of Your Hand: Corrective Augmentation for Robotics via Novel-View Synthesis

Allan Zhou, Mo Jin Kim, Lirui Wang, Pete Florence, Chelsea Finn

Pic2Word: Mapping Pictures to Words for Zero-Shot Composed Image Retrieval

Kuniaki Saito*, Kihyuk Sohn, Xiang Zhang, Chun-Liang Li, Chen-Yu Lee, Kate Saenko, Tomas Pfister

SCADE: NeRFs from Space Carving with Ambiguity-Aware Depth Estimates

Mikaela Uy, Ricardo Martin Brualla, Leonidas Guibas, Ke Li

Structured 3D Features for Reconstructing Controllable Avatars

Enric Corona, Mihai Zanfir, Thiemo Alldieck, Eduard Gabriel Bazavan, Andrei Zanfir, Cristian Sminchisescu

Token Turing Machines

Michael S. Ryoo, Keerthana Gopalakrishnan, Kumara Kahatapitiya, Ted Xiao, Kanishka Rao, Austin Stone, Yao Lu, Julian Ibarz, Anurag Arnab

TruFor: Leveraging All-Round Clues for Trustworthy Image Forgery Detection and Localization

Fabrizio Guillaro, Davide Cozzolino, Avneesh Sud, Nicholas Dufour, Luisa Verdoliva

Video Probabilistic Diffusion Models in Projected Latent Space

Sihyun Yu, Kihyuk Sohn, Subin Kim, Jinwoo Shin

Visual Prompt Tuning for Generative Transfer Learning

Kihyuk Sohn, Yuan Hao, Jose Lezama, Luisa Polania, Huiwen Chang, Han Zhang, Irfan Essa, Lu Jiang

Zero-Shot Referring Image Segmentation with Global-Local Context Features

Seonghoon Yu, Paul Hongsuck Seo, Jeany Son

AVFormer: Injecting Vision into Frozen Speech Models for Zero-Shot AV-ASR (see blog post)

Paul Hongsuck Seo, Arsha Nagrani, Cordelia Schmid

DC2: Dual-Camera Defocus Control by Learning to Refocus

Hadi Alzayer, Abdullah Abuolaim, Leung Chun Chan, Yang Yang, Ying Chen Lou, Jia-Bin Huang, Abhishek Kar

Edges to Shapes to Concepts: Adversarial Augmentation for Robust Vision

Aditay Tripathi*, Rishubh Singh, Anirban Chakraborty, Pradeep Shenoy

MetaCLUE: Towards Comprehensive Visual Metaphors Research

Arjun R. Akula, Brendan Driscoll, Pradyumna Narayana, Soravit Changpinyo, Zhiwei Jia, Suyash Damle, Garima Pruthi, Sugato Basu, Leonidas Guibas, William T. Freeman, Yuanzhen Li, Varun Jampani

Multi-Realism Image Compression with a Conditional Generator

Eirikur Agustsson, David Minnen, George Toderici, Fabian Mentzer

NeRDi: Single-View NeRF Synthesis with Language-Guided Diffusion as General Image Priors

Congyue Deng, Chiyu Jiang, Charles R. Qi, Xinchen Yan, Yin Zhou, Leonidas Guibas, Dragomir Anguelov

On Calibrating Semantic Segmentation Models: Analyses and an Algorithm

Dongdong Wang, Boqing Gong, Liqiang Wang

Persistent Nature: A Generative Model of Unbounded 3D Worlds

Lucy Chai, Richard Tucker, Zhengqi Li, Phillip Isola, Noah Snavely

Rethinking Domain Generalization for Face Anti-spoofing: Separability and Alignment

Yiyou Sun*, Yaojie Liu, Xiaoming Liu, Yixuan Li, Wen-Sheng Chu

SINE: Semantic-Driven Image-Based NeRF Editing with Prior-Guided Editing Field

Chong Bao, Yinda Zhang, Bangbang Yang, Tianxing Fan, Zesong Yang, Hujun Bao, Guofeng Zhang, Zhaopeng Cui

Sequential Training of GANs Against GAN-Classifiers Reveals Correlated “Knowledge Gaps” Present Among Independently Trained GAN Instances

Arkanath Pathak, Nicholas Dufour

SparsePose: Sparse-View Camera Pose Regression and Refinement

Samarth Sinha, Jason Zhang, Andrea Tagliasacchi, Igor Gilitschenski, David Lindell

Teacher-Generated Spatial-Attention Labels Boost Robustness and Accuracy of Contrastive Models

Yushi Yao, Chang Ye, Gamaleldin F. Elsayed, Junfeng He

Workshops

Computer Vision for Mixed Reality

Speakers include: Ira Kemelmacher-Shlizerman

Workshop on Autonomous Driving (WAD)

Speakers include: Chelsea Finn

Multimodal Content Moderation (MMCM)

Organizers include: Chris Bregler

Speakers include: Mevan Babakar

Medical Computer Vision (MCV)

Speakers include: Shekoofeh Azizi

VAND: Visual Anomaly and Novelty Detection

Speakers include: Yedid Hoshen, Jie Ren

Structural and Compositional Learning on 3D Data

Organizers include: Leonidas Guibas

Speakers include: Andrea Tagliasacchi, Fei Xia, Amir Hertz

Fine-Grained Visual Categorization (FGVC10)

Organizers include: Kimberly Wilber, Sara Beery

Panelists include: Hartwig Adam

XRNeRF: Advances in NeRF for the Metaverse

Organizers include: Jonathan T. Barron

Speakers include: Ben Poole

OmniLabel: Infinite Label Spaces for Semantic Understanding via Natural Language

Organizers include: Golnaz Ghiasi, Long Zhao

Speakers include: Vittorio Ferrari

Large Scale Holistic Video Understanding

Organizers include: David Ross

Speakers include: Cordelia Schmid

New Frontiers for Zero-Shot Image Captioning Evaluation (NICE)

Speakers include: Cordelia Schmid

Computational Cameras and Displays (CCD)

Organizers include: Ulugbek Kamilov

Speakers include: Mauricio Delbracio

Gaze Estimation and Prediction in the Wild (GAZE)

Organizers include: Thabo Beele

Speakers include: Erroll Wood

Face and Gesture Analysis for Health Informatics (FGAHI)

Speakers include: Daniel McDuff

Computer Vision for Animal Behavior Tracking and Modeling (CV4Animals)

Organizers include: Sara Beery

Speakers include: Arsha Nagrani

3D Vision and Robotics

Speakers include: Pete Florence

End-to-End Autonomous Driving: Perception, Prediction, Planning and Simulation (E2EAD)

Organizers include: Anurag Arnab

End-to-End Autonomous Driving: Emerging Tasks and Challenges

Speakers include: Sergey Levine

Multi-Modal Learning and Applications (MULA)

Speakers include: Aleksander Hołyński

Synthetic Data for Autonomous Systems (SDAS)

Speakers include: Lukas Hoyer

Vision Datasets Understanding

Organizers include: José Lezama

Speakers include: Vijay Janapa Reddi

Precognition: Seeing Through the Future

Organizers include: Utsav Prabhu

New Trends in Image Restoration and Enhancement (NTIRE)

Organizers include: Ming-Hsuan Yang

Generative Models for Computer Vision

Speakers include: Ben Mildenhall, Andrea Tagliasacchi

Adversarial Machine Learning on Computer Vision: Art of Robustness

Organizers include: Xinyun Chen

Speakers include: Deqing Sun

Media Forensics

Speakers include: Nicholas Carlini

Tracking and Its Many Guises: Tracking Any Object in Open-World

Organizers include: Paul Voigtlaender

3D Scene Understanding for Vision, Graphics, and Robotics

Speakers include: Andy Zeng

Computer Vision for Physiological Measurement (CVPM)

Organizers include: Daniel McDuff

Affective Behaviour Analysis In-the-Wild

Organizers include: Stefanos Zafeiriou

Ethical Considerations in Creative Applications of Computer Vision (EC3V)

Organizers include: Rida Qadri, Mohammad Havaei, Fernando Diaz, Emily Denton, Sarah Laszlo, Negar Rostamzadeh, Pamela Peter-Agbia, Eva Kozanecka

VizWiz Grand Challenge: Describing Images and Videos Taken by Blind People

Speakers include: Haoran Qi

Efficient Deep Learning for Computer Vision (see blog post)

Organizers include: Andrew Howard, Chas Leichner

Speakers include: Andrew Howard

Visual Copy Detection

Organizers include: Priya Goyal

Learning 3D with Multi-View Supervision (3DMV)

Speakers include: Ben Poole

Image Matching: Local Features and Beyond

Organizers include: Eduard Trulls

Vision for All Seasons: Adverse Weather and Lighting Conditions (V4AS)

Organizers include: Lukas Hoyer

Transformers for Vision (T4V)

Speakers include: Cordelia Schmid, Huiwen Chang

Scholars vs Big Models — How Can Academics Adapt?

Organizers include: Sara Beery

Speakers include: Jonathan T. Barron, Cordelia Schmid

ScanNet Indoor Scene Understanding Challenge

Speakers include: Tom Funkhouser

Computer Vision for Microscopy Image Analysis

Speakers include: Po-Hsuan Cameron Chen

Embedded Vision

Speakers include: Rahul Sukthankar

Sight and Sound

Organizers include: Arsha Nagrani, William Freeman

AI for Content Creation

Organizers include: Deqing Sun, Huiwen Chang, Lu Jiang

Speakers include: Ben Mildenhall, Tim Salimans, Yuanzhen Li

Computer Vision in the Wild

Organizers include: Xiuye Gu, Neil Houlsby

Speakers include: Boqing Gong, Anelia Angelova

Visual Pre-Training for Robotics

Organizers include: Mathilde Caron

Omnidirectional Computer Vision

Organizers include: Yi-Hsuan Tsai

Tutorials

All Things ViTs: Understanding and Interpreting Attention in Vision

Hila Chefer, Sayak Paul

Recent Advances in Anomaly Detection

Guansong Pang, Joey Tianyi Zhou, Radu Tudor Ionescu, Yu Tian, Kihyuk Sohn

Contactless Healthcare Using Cameras and Wireless Sensors

Wenjin Wang, Xuyu Wang, Jun Luo, Daniel McDuff

Object Localization for Free: Going Beyond Self-Supervised Learning

Oriane Simeoni, Weidi Xie, Thomas Kipf, Patrick Pérez

Prompting in Vision

Kaiyang Zhou, Ziwei Liu, Phillip Isola, Hyojin Bahng, Ludwig Schmidt, Sarah Pratt, Denny Zhou

* Work done while at Google

Improving Subseasonal Forecasting with Machine Learning

This content was previously published by Nature Portfolio and Springer Nature Communities on Nature Portfolio Earth and Environment Community.

Improving our ability to forecast the weather and climate is of interest to all sectors of the economy and to government agencies from the local to the national level. Weather forecasts zero to ten days ahead and climate forecasts seasons to decades ahead are currently used operationally in decision-making, and the accuracy and reliability of these forecasts have improved consistently in recent decades (Troccoli, 2010). However, many critical applications – including water allocation, wildfire management, and drought and flood mitigation – require subseasonal forecasts with lead times in between these two extremes (Merryfield et al., 2020; White et al., 2017).

While short-term forecasting accuracy is largely sustained by physics-based dynamical models, these deterministic methods have limited subseasonal accuracy due to chaos (Lorenz, 1963). Indeed, subseasonal forecasting has long been considered a “predictability desert” due to its complex dependence on both local weather and global climate variables (Vitart et al., 2012). Recent studies, however, have highlighted important sources of predictability on subseasonal timescales, and the focus of several recent large-scale research efforts has been to advance the subseasonal capabilities of operational physics-based models (Vitart et al., 2017; Pegion et al., 2019; Lang et al., 2020). Our team has undertaken a parallel effort to demonstrate the value of machine learning methods in improving subseasonal forecasting.

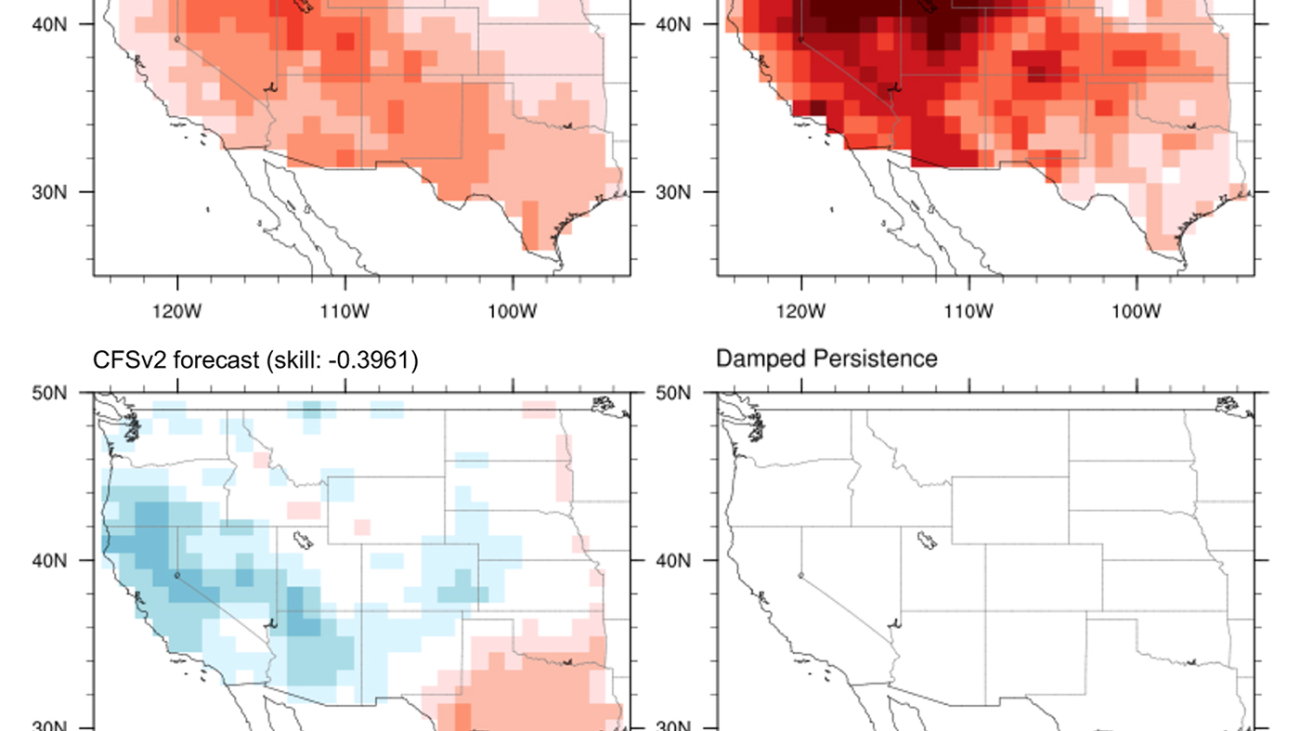

The Subseasonal Climate Forecast Rodeo

To improve the accuracy of subseasonal forecasts, the U.S. Bureau of Reclamation (USBR) and the National Oceanic and Atmospheric Administration (NOAA) launched the Subseasonal Climate Forecast Rodeo, a yearlong real-time forecasting challenge in which participants aimed to skillfully predict temperature and precipitation in the western U.S. two-to-four weeks and four-to-six weeks in advance. Our team developed a machine learning approach to the Rodeo and a SubseasonalRodeo dataset for training and evaluating subseasonal forecasting systems.

Our final Rodeo solution was an ensemble of two nonlinear regression models. The first integrates a diverse collection of meteorological measurements and dynamic model forecasts and prunes irrelevant predictors using a customized multitask model selection procedure. The second uses only historical measurements of the target variable (temperature or precipitation) and introduces multitask nearest neighbor features into a weighted local linear regression. Each model alone outperforms the debiased operational U.S. Climate Forecasting System version 2 (CFSv2), and, over 2011-2018, an ensemble of our regression models and debiased CFSv2 improves debiased CFSv2 skill by 40%-50% for temperature and 129%-169% for precipitation. See our write-up Improving Subseasonal Forecasting in the Western U.S. with Machine Learning for more details. While this work demonstrated the promise of machine learning models for subseasonal forecasting, it also highlighted the complementary strengths of physics- and learning-based approaches and the opportunity to combine those strengths to improve forecasting skill.

Adaptive Bias Correction (ABC)

To harness the complementary strengths of physics- and learning-based models, we next developed a hybrid dynamical-learning framework for improved subseasonal forecasting. In particular, we learn to adaptively correct the biases of dynamical models and apply our novel adaptive bias correction (ABC) to improve the skill of subseasonal temperature and precipitation forecasts.

ABC is an ensemble of three new low-cost, high-accuracy machine learning models: Dynamical++, Climatology++, and Persistence++. Each model trains only on past temperature, precipitation, and forecast data and outputs corrections for future forecasts tailored to the site, target date, and dynamical model. Dynamical++ and Climatology++ learn site- and date-specific offsets for dynamical and climatological forecasts by minimizing forecasting error over adaptively-selected training periods. Persistence++ additionally accounts for recent weather trends by combining lagged observations, dynamical forecasts, and climatology to minimize historical forecasting error for each site.
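As a rough illustration of the offset-learning idea behind Dynamical++ and Climatology++, the sketch below learns one additive correction per site by averaging recent forecast errors, since the mean error is the offset that minimizes squared error over the training window. The function names, the fixed `window` argument, and the toy data are assumptions made for this example. It is not the subseasonal_toolkit implementation, which selects its training periods adaptively and also tailors corrections to the target date.

```python
from statistics import mean

def learn_site_offsets(past_forecasts, past_observations, window):
    """Return one additive offset per site.

    past_forecasts and past_observations are lists of rows (one row per
    date, oldest first), each row holding one value per site. Only the
    last `window` dates are used for training.
    """
    recent_forecasts = past_forecasts[-window:]
    recent_observations = past_observations[-window:]
    num_sites = len(recent_forecasts[0])
    # The mean of (observation - forecast) minimizes squared error
    # among all constant offsets for a site.
    return [
        mean(obs[s] - fc[s] for fc, obs in zip(recent_forecasts, recent_observations))
        for s in range(num_sites)
    ]

def correct_forecast(new_forecast, offsets):
    """Apply the learned per-site offsets to a new forecast row."""
    return [value + offset for value, offset in zip(new_forecast, offsets)]

# Toy data (invented): 4 past dates, 2 sites. The model runs 1.0 too cold
# at site 0 and 2.0 too warm at site 1.
observations = [[10.0, 20.0], [11.0, 21.0], [12.0, 22.0], [13.0, 23.0]]
forecasts = [[o[0] - 1.0, o[1] + 2.0] for o in observations]

offsets = learn_site_offsets(forecasts, observations, window=3)
corrected = correct_forecast([13.0, 26.0], offsets)
```

In this toy setting the learned offsets simply undo the constant bias at each site. A real correction would be refit for every target date and dynamical model.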

ABC can be applied operationally as a computationally inexpensive enhancement to any dynamical model forecast, and we use this property to substantially reduce the forecasting errors of eight operational dynamical models, including the state-of-the-art ECMWF model.

A practical implication of these improvements for downstream decision-makers is an expanded geographic range for actionable skill, defined here as spatial skill above a given sufficiency threshold. For example, we vary the weeks 5-6 sufficiency threshold from 0 to 0.6 and find that ABC consistently boosts the number of locales with actionable skill over both raw and operationally-debiased CFSv2 and ECMWF.
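The threshold sweep described above can be sketched in a few lines. This is a minimal illustration that assumes a per-location skill value is already available for each forecasting system. The skill numbers are invented toy values, not results from the paper, and the helper names are hypothetical.

```python
def count_actionable(skill_by_site, threshold):
    """Number of locations whose skill is strictly above the threshold."""
    return sum(1 for skill in skill_by_site if skill > threshold)

def actionable_curve(skill_by_site, thresholds):
    """Map each sufficiency threshold to its count of actionable locations."""
    return {t: count_actionable(skill_by_site, t) for t in thresholds}

# Toy per-location skill values (invented for illustration only).
baseline_skill = [-0.10, 0.05, 0.25, 0.35, 0.50]
corrected_skill = [0.05, 0.25, 0.30, 0.45, 0.65]

thresholds = [0.0, 0.2, 0.4, 0.6]
baseline_curve = actionable_curve(baseline_skill, thresholds)
corrected_curve = actionable_curve(corrected_skill, thresholds)
```

Comparing the two curves threshold by threshold shows whether a correction expands the set of locations with usable forecasts across the whole range of sufficiency levels, rather than at a single cutoff.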

We couple these performance improvements with a practical workflow for explaining ABC skill gains using Cohort Shapley (Mase et al., 2019) and identifying higher-skill windows of opportunity (Mariotti et al., 2020) based on relevant climate variables.

To facilitate future deployment and development, we also release our model and workflow code through the subseasonal_toolkit Python package.

The SubseasonalClimateUSA dataset

To train and evaluate our contiguous US models, we developed a SubseasonalClimateUSA dataset housing a diverse collection of ground-truth measurements and model forecasts relevant to subseasonal timescales. The SubseasonalClimateUSA dataset is updated regularly and publicly accessible via the subseasonal_data package. In SubseasonalClimateUSA: A Dataset for Subseasonal Forecasting and Benchmarking, we used this dataset to benchmark ABC against operational dynamical models and seven state-of-the-art deep learning and machine learning methods from the literature. For each subseasonal forecasting task, ABC and its component models provided the best performance.

Online learning with optimism and delay

To provide more flexible and adaptive model ensembling in the operational setting of real-time climate and weather forecasting, we developed three new optimistic online learning algorithms — AdaHedgeD, DORM, and DORM+ — that require no parameter tuning and have optimal regret guarantees under delayed feedback.

Our open-source Python implementation, available via the PoolD library, provides simple strategies for combining the forecasts of different subseasonal forecasting models, adapting the weights of each model based on real-time performance. See our write-up Online Learning with Optimism and Delay for more details.
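To make the delayed-feedback setting concrete, here is a minimal sketch of the classical Hedge (exponential weights) rule in which each round's losses only become available `delay` rounds later. It is deliberately simpler than AdaHedgeD, DORM, and DORM+: it uses a fixed, hand-picked learning rate and no optimistic hints, and it does not use the PoolD API. All function names and the toy losses are assumptions made for this example.

```python
import math

def hedge_weights(cumulative_losses, learning_rate):
    """Exponential weights over models, normalized to sum to 1."""
    # Subtracting the minimum loss keeps the exponentials numerically stable.
    min_loss = min(cumulative_losses)
    unnormalized = [
        math.exp(-learning_rate * (loss - min_loss)) for loss in cumulative_losses
    ]
    total = sum(unnormalized)
    return [u / total for u in unnormalized]

def run_delayed_hedge(loss_rounds, delay, learning_rate=1.0):
    """Return the weights played at each round.

    loss_rounds[t][i] is the loss of model i at round t. With delay d, the
    losses of round t are only available from round t + d + 1 onward.
    """
    num_models = len(loss_rounds[0])
    cumulative = [0.0] * num_models
    played = []
    for t in range(len(loss_rounds)):
        revealed = t - delay - 1  # most recent round whose feedback has arrived
        if revealed >= 0:
            cumulative = [c + l for c, l in zip(cumulative, loss_rounds[revealed])]
        played.append(hedge_weights(cumulative, learning_rate))
    return played

# Toy example: model 0 is consistently better than model 1.
losses = [[0.1, 0.9]] * 5
weights = run_delayed_hedge(losses, delay=2)
```

With a delay of two rounds, the first three sets of weights are uniform because no feedback has arrived yet. After that, weight shifts steadily toward the better model.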

Looking forward

We’re excited to continue exploring machine learning applied to subseasonal forecasting on a global scale, and we hope that our open-source packages will facilitate future subseasonal development and benchmarking. If you have ideas for model or dataset development, please contribute to our open-source Python code or contact us!

The post Improving Subseasonal Forecasting with Machine Learning appeared first on Microsoft Research.

Paper on graph database schemata wins best-industry-paper award

SIGMOD paper by Amazon researchers and collaborators presents flexible data definition language that enables rapid development of complex graph databases.

SambaSafety automates custom R workload, improving driver safety with Amazon SageMaker and AWS Step Functions

SambaSafety’s mission is to promote safer communities by reducing risk through data insights. Since 1998, SambaSafety has been the leading North American provider of cloud-based mobility risk management software for organizations with commercial and non-commercial drivers. SambaSafety serves more than 15,000 global employers and insurance carriers with driver risk and compliance monitoring, online training and deep risk analytics, as well as risk pricing solutions. Through the collection, correlation and analysis of driver record, telematics, corporate and other sensor data, SambaSafety not only helps employers better enforce safety policies and reduce claims, but also helps insurers make informed underwriting decisions and background screeners perform accurate, efficient pre-hire checks.

Not all drivers present the same risk profile. The more time spent behind the wheel, the higher your risk profile. SambaSafety’s team of data scientists has developed complex and proprietary modeling solutions designed to accurately quantify this risk profile. However, they sought support to deploy this solution for batch and real-time inference in a consistent and reliable manner.

In this post, we discuss how SambaSafety used AWS machine learning (ML) and continuous integration and continuous delivery (CI/CD) tools to deploy their existing data science application for batch inference. SambaSafety worked with AWS Advanced Consulting Partner Firemind to deliver a solution that used AWS CodeStar, AWS Step Functions, and Amazon SageMaker for this workload. With AWS CI/CD and AI/ML products, SambaSafety’s data science team didn’t have to change their existing development workflow to take advantage of continuous model training and inference.

Customer use case

SambaSafety’s data science team had long been using the power of data to inform their business. They had several skilled engineers and scientists building insightful models that improved the quality of risk analysis on their platform. The challenges faced by this team were not related to data science. SambaSafety’s data science team needed help connecting their existing data science workflow to a continuous delivery solution.

SambaSafety’s data science team maintained several script-like artifacts as part of their development workflow. These scripts performed several tasks, including data preprocessing, feature engineering, model creation, model tuning, and model comparison and validation. The scripts were all run manually whenever new training data arrived in their environment. Additionally, the scripts didn’t perform any model versioning or hosting for inference. SambaSafety’s data science team had developed manual workarounds to promote new models to production, but this process became time-consuming and labor-intensive.

To free up SambaSafety’s highly skilled data science team to innovate on new ML workloads, SambaSafety needed to automate the manual tasks associated with maintaining existing models. Furthermore, the solution needed to replicate the manual workflow used by SambaSafety’s data science team, and make decisions about proceeding based on the outcomes of these scripts. Finally, the solution had to integrate with their existing code base. The SambaSafety data science team used a code repository solution external to AWS; the final pipeline had to be intelligent enough to trigger based on updates to their code base, which was written primarily in R.

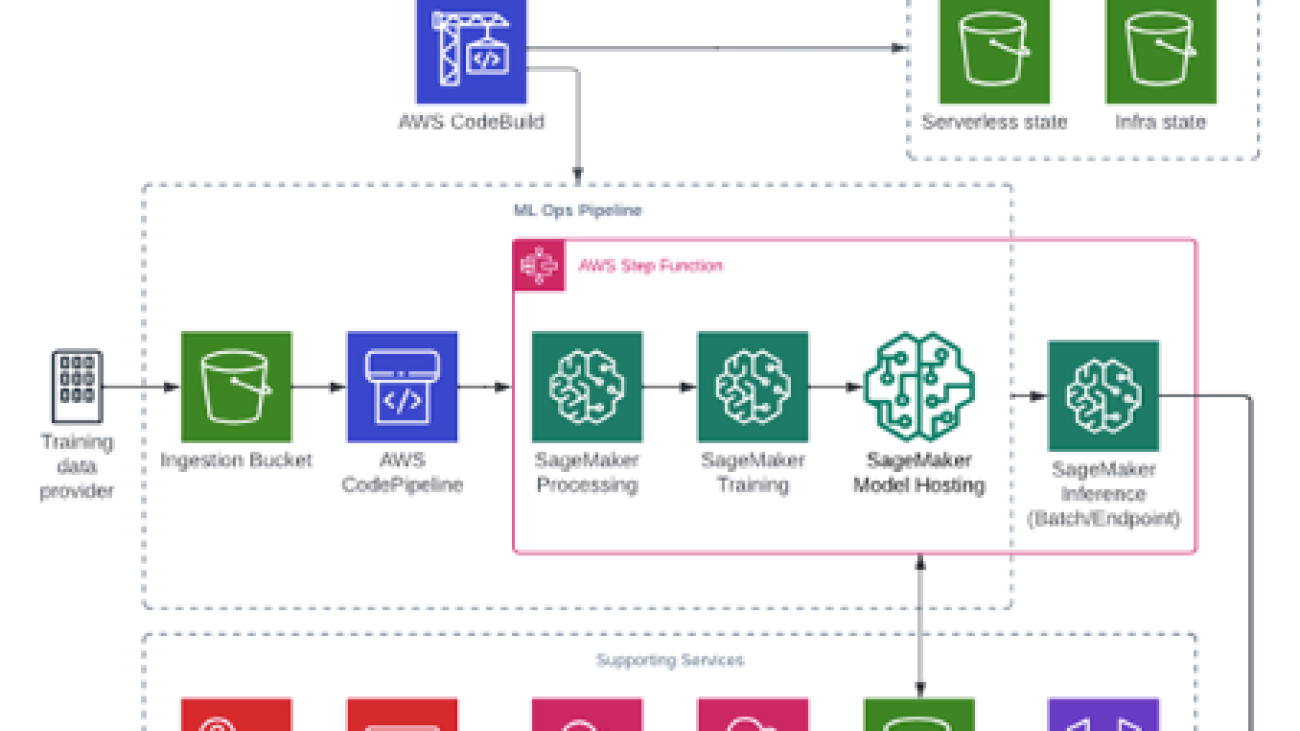

Solution overview

The following diagram illustrates the solution architecture, which was informed by one of the many open-source architectures maintained by SambaSafety’s delivery partner Firemind.

The solution delivered by Firemind for SambaSafety’s data science team was built around two ML pipelines. The first ML pipeline trains a model using SambaSafety’s custom data preprocessing, training, and testing scripts. The resulting model artifact is deployed for batch and real-time inference to model endpoints managed by SageMaker. The second ML pipeline facilitates the inference request to the hosted model. In this way, the pipeline for training is decoupled from the pipeline for inference.

One of the complexities in this project was replicating the manual steps taken by the SambaSafety data scientists. The team at Firemind used Step Functions and SageMaker Processing to complete this task. Step Functions allows you to run discrete tasks in AWS using AWS Lambda functions, Amazon Elastic Kubernetes Service (Amazon EKS) workers, or in this case SageMaker. SageMaker Processing allows you to define jobs that run on managed ML instances within the SageMaker ecosystem. Each Step Functions run maintains its own logs, run history, and details on the success or failure of the job.

The team used Step Functions and SageMaker, together with Lambda, to handle the automation of training, tuning, deployment, and inference workloads. The only remaining piece was the continuous integration of code changes to this deployment pipeline. Firemind implemented a CodeStar project that maintained a connection to SambaSafety’s existing code repository. When the industrious data science team at SambaSafety posts an update to a specific branch of their code base, CodeStar picks up the changes and triggers the automation.
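The orchestration described above can be pictured as a small state machine. The following is a hedged sketch, not Firemind’s actual pipeline: it builds an Amazon States Language definition as a Python dictionary, chaining two SageMaker Processing jobs that run R scripts, a Lambda function that compares models, and a choice on the outcome. Every state name, script path, ARN, image URI, instance setting, the `run_id` input field, and the `approved` field returned by the Lambda function is a hypothetical placeholder.

```python
import json

def processing_task(script_name, next_state, image_uri, role_arn):
    """One SageMaker Processing job that runs an R script and waits for it."""
    return {
        "Type": "Task",
        # The .sync suffix makes Step Functions wait until the job finishes.
        "Resource": "arn:aws:states:::sagemaker:createProcessingJob.sync",
        "Parameters": {
            # Assumes the execution input carries a unique run_id.
            "ProcessingJobName.$": f"States.Format('{script_name}-{{}}', $.run_id)",
            "RoleArn": role_arn,
            "AppSpecification": {
                "ImageUri": image_uri,
                "ContainerEntrypoint": ["Rscript", f"/opt/ml/code/{script_name}.R"],
            },
            "ProcessingResources": {
                "ClusterConfig": {
                    "InstanceCount": 1,
                    "InstanceType": "ml.m5.xlarge",
                    "VolumeSizeInGB": 30,
                }
            },
        },
        "ResultPath": None,  # discard job output, pass the input through
        "Next": next_state,
    }

def build_training_definition(image_uri, role_arn, compare_lambda_arn):
    """Chain the scripts and branch on the model comparison result."""
    return {
        "Comment": "Sketch: run R scripts in order, then decide whether to promote.",
        "StartAt": "Preprocess",
        "States": {
            "Preprocess": processing_task("preprocess", "Train", image_uri, role_arn),
            "Train": processing_task("train", "CompareModels", image_uri, role_arn),
            "CompareModels": {
                "Type": "Task",
                "Resource": "arn:aws:states:::lambda:invoke",
                "Parameters": {"FunctionName": compare_lambda_arn, "Payload.$": "$"},
                # Assumes the function returns {"approved": true or false}.
                "ResultSelector": {"approved.$": "$.Payload.approved"},
                "ResultPath": "$.comparison",
                "Next": "PromoteOrKeep",
            },
            "PromoteOrKeep": {
                "Type": "Choice",
                "Choices": [
                    {
                        "Variable": "$.comparison.approved",
                        "BooleanEquals": True,
                        "Next": "PromoteModel",
                    }
                ],
                "Default": "KeepCurrentModel",
            },
            # Placeholder terminal states; a real pipeline would deploy here.
            "PromoteModel": {"Type": "Succeed"},
            "KeepCurrentModel": {"Type": "Succeed"},
        },
    }

definition = build_training_definition(
    image_uri="<account>.dkr.ecr.<region>.amazonaws.com/<r-scripts-image>:latest",
    role_arn="arn:aws:iam::<account>:role/<processing-role>",
    compare_lambda_arn="arn:aws:lambda:<region>:<account>:function:<compare-models>",
)
definition_json = json.dumps(definition, indent=2)
```

The resulting JSON string is the kind of document Step Functions accepts as a state machine definition. Keeping the decision in a Choice state mirrors the requirement that the pipeline decide whether to proceed based on the outcome of each script.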

Conclusion

SambaSafety’s new serverless MLOps pipeline had a significant impact on their capability to deliver. The integration of data science and software development enables their teams to work together seamlessly. Their automated model deployment solution reduced time to delivery by up to 70%.

SambaSafety also had the following to say:

“By automating our data science models and integrating them into their software development lifecycle, we have been able to achieve a new level of efficiency and accuracy in our services. This has enabled us to stay ahead of the competition and deliver innovative solutions to clients. Our clients will greatly benefit from this with the faster turnaround times and improved accuracy of our solutions.”

SambaSafety brought this problem to their AWS account team. The AWS account and solutions architecture teams then identified this solution by sourcing from our robust partner network. Connect with your AWS account team to identify similar transformative opportunities for your business.

About the Authors

Dan Ferguson is an AI/ML Specialist Solutions Architect (SA) on the Private Equity Solutions Architecture team at Amazon Web Services. Dan helps Private Equity backed portfolio companies leverage AI/ML technologies to achieve their business objectives.

Khalil Adib is a Data Scientist at Firemind, driving the innovation Firemind can provide to their customers around the magical worlds of AI and ML. Khalil tinkers with the latest and greatest tech and models, ensuring that Firemind are always at the bleeding edge.

Jason Mathew is a Cloud Engineer at Firemind, leading the delivery of projects for customers end-to-end from writing pipelines with IaC, building out data engineering with Python, and pushing the boundaries of ML. Jason is also the key contributor to Firemind’s open source projects.
