Generative AI systems are already being used to write books, create graphic designs, and assist medical practitioners, and they are becoming increasingly capable. Ensuring these systems are developed and deployed responsibly requires carefully evaluating the potential ethical and social risks they may pose.

In our paper, we propose a three-layered framework for evaluating the social and ethical risks of AI systems. This framework includes evaluations of AI system capability, human interaction, and systemic impacts.

We also map the current state of safety evaluations and find three main gaps: context, specific risks, and multimodality. To help close these gaps, we call for repurposing existing evaluation methods for generative AI and for implementing a comprehensive approach to evaluation, as in our case study on misinformation. This approach integrates findings such as how likely the AI system is to provide factually incorrect information with insights on how people use that system, and in what context. Multi-layered evaluations can draw conclusions beyond model capability and indicate whether harm (in this case, misinformation) actually occurs and spreads.

To make any technology work as intended, both social and technical challenges must be solved. So to better assess AI system safety, these different layers of context must be taken into account. Here, we build upon earlier research identifying the potential risks of large-scale language models, such as privacy leaks, job automation, and misinformation, and introduce a way of comprehensively evaluating these risks going forward.

Measurement-induced entanglement phase transitions in a quantum circuit

Quantum mechanics allows many phenomena that are classically impossible: a quantum particle can exist in a superposition of two states simultaneously or be entangled with another particle, such that anything you do to one seems to instantaneously also affect the other, regardless of the space between them. But perhaps no aspect of quantum theory is as striking as the act of measurement. In classical mechanics, a measurement need not affect the system being studied. But a measurement on a quantum system can profoundly influence its behavior. For example, when a quantum bit of information, called a qubit, that is in a superposition of both “0” and “1” is measured, its state will suddenly collapse to one of the two classically allowed states: it will be either “0” or “1,” but not both. This transition from the quantum to classical worlds seems to be facilitated by the act of measurement. How exactly it occurs is one of the fundamental unanswered questions in physics.
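To make the collapse described above concrete, here is a minimal sketch of a single-qubit measurement in the computational basis. It is a classical toy model, not code from the experiment: the function names `measure_qubit` and `estimate_p0` are illustrative, and the only physics assumed is the standard Born rule (outcome probabilities equal the squared magnitudes of the amplitudes).

```python
import math
import random


def measure_qubit(alpha, beta, rng):
    """Measure the state alpha|0> + beta|1> in the computational basis.

    Returns (outcome, post_measurement_state). The outcome is drawn with the
    Born-rule probabilities, and the state collapses to |0> or |1>.
    """
    # Normalize defensively so the probabilities sum to one.
    norm = math.sqrt(abs(alpha) ** 2 + abs(beta) ** 2)
    alpha, beta = alpha / norm, beta / norm
    p0 = abs(alpha) ** 2
    if rng.random() < p0:
        return 0, (1.0, 0.0)  # collapsed to |0>
    return 1, (0.0, 1.0)      # collapsed to |1>


def estimate_p0(alpha, beta, shots=10_000, seed=0):
    """Estimate the probability of reading 0 by repeating the measurement."""
    rng = random.Random(seed)
    zeros = sum(1 for _ in range(shots) if measure_qubit(alpha, beta, rng)[0] == 0)
    return zeros / shots
```

For an equal superposition (both amplitudes equal to 1/√2), each individual run returns a definite 0 or 1, and only the statistics over many runs reveal the 50/50 split.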

In a large system comprising many qubits, the effect of measurements can cause new phases of quantum information to emerge. Similar to how changing parameters such as temperature and pressure can cause a phase transition in water from liquid to solid, tuning the strength of measurements can induce a phase transition in the entanglement of qubits.

Today in “Measurement-induced entanglement and teleportation on a noisy quantum processor”, published in Nature, we describe experimental observations of measurement-induced effects in a system of 70 qubits on our Sycamore quantum processor. This is, by far, the largest system in which such a phase transition has been observed. Additionally, we detected “quantum teleportation” — when a quantum state is transferred from one set of qubits to another, detectable even if the details of that state are unknown — which emerged from measurements of a random circuit. We achieved this breakthrough by implementing a few clever “tricks” to more readily see the signatures of measurement-induced effects in the system.

Background: Measurement-induced entanglement

Consider a system of qubits that start out independent and unentangled with one another. If they interact with one another, they will become entangled. You can imagine this as a web, where the strands represent the entanglement between qubits. As time progresses, this web grows larger and more intricate, connecting increasingly disparate points together.

A full measurement of the system completely destroys this web, since every entangled superposition of qubits collapses when it’s measured. But what happens when we make a measurement on only a few of the qubits? Or if we wait a long time between measurements? During the intervening time, entanglement continues to grow. The web’s strands may not extend as vastly as before, but there are still patterns in the web.
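The smallest possible "web" is a single strand between two qubits. The sketch below, a toy calculation rather than anything from the experiment, computes the entanglement entropy of a two-qubit pure state and shows that measuring one qubit of a Bell pair removes the strand. The helper names are illustrative; the entropy is obtained from the eigenvalues of the one-qubit reduced density matrix, which for two qubits follow directly from the determinant of the amplitude matrix.

```python
import math


def entanglement_entropy(c):
    """Entanglement entropy (in bits) between the two qubits of a pure state.

    c[i][j] is the amplitude of |i j>; the state does not need to be normalized,
    but it must not be the zero vector.
    """
    norm = sum(abs(c[i][j]) ** 2 for i in range(2) for j in range(2))
    det = c[0][0] * c[1][1] - c[0][1] * c[1][0]
    # Determinant of the normalized reduced density matrix of the first qubit.
    d = abs(det) ** 2 / norm ** 2
    disc = math.sqrt(max(0.0, 1.0 - 4.0 * d))
    eigenvalues = [(1.0 + disc) / 2.0, (1.0 - disc) / 2.0]
    return -sum(p * math.log2(p) for p in eigenvalues if p > 1e-12)


def measure_second_qubit(c, outcome):
    """Project onto the second qubit being `outcome` (result left unnormalized)."""
    return [[c[i][j] if j == outcome else 0.0 for j in range(2)] for i in range(2)]


amp = 1 / math.sqrt(2)
bell_pair = [[amp, 0.0], [0.0, amp]]  # (|00> + |11>) / sqrt(2)
entropy_before = entanglement_entropy(bell_pair)
entropy_after = entanglement_entropy(measure_second_qubit(bell_pair, 0))
```

Here `entropy_before` is one bit (maximal entanglement for two qubits) and `entropy_after` is zero, which is the two-qubit version of a measurement destroying the web.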

There is a balancing point between the strength of interactions and measurements, which compete to affect the intricacy of the web. When interactions are strong and measurements are weak, entanglement remains robust and the web’s strands extend farther, but when measurements begin to dominate, the entanglement web is destroyed. We call the crossover between these two extremes the measurement-induced phase transition.

In our quantum processor, we observe this measurement-induced phase transition by varying the relative strengths between interactions and measurement. We induce interactions by performing entangling operations on pairs of qubits. But to actually see this web of entanglement in an experiment is notoriously challenging. First, we can never actually look at the strands connecting the qubits — we can only infer their existence by seeing statistical correlations between the measurement outcomes of the qubits. So, we need to repeat the same experiment many times to infer the pattern of the web. But there’s another complication: the web pattern is different for each possible measurement outcome. Simply averaging all of the experiments together without regard for their measurement outcomes would wash out the webs’ patterns. To address this, some previous experiments used “post-selection,” where only data with a particular measurement outcome is used and the rest is thrown away. This, however, causes an exponentially decaying bottleneck in the amount of “usable” data you can acquire. In addition, there are also practical challenges related to the difficulty of mid-circuit measurements with superconducting qubits and the presence of noise in the system.

How we did it

To address these challenges, we introduced three novel tricks to the experiment that enabled us to observe measurement-induced dynamics in a system of up to 70 qubits.

Trick 1: Space and time are interchangeable

As counterintuitive as it may seem, interchanging the roles of space and time dramatically reduces the technical challenges of the experiment. Before this “space-time duality” transformation, we would have had to interleave measurements with other entangling operations, frequently checking the state of selected qubits. Instead, after the transformation, we can postpone all measurements until after all other operations, which greatly simplifies the experiment. As implemented here, this transformation turns the original 1-spatial-dimensional circuit we were interested in studying into a 2-dimensional one. Additionally, since all measurements are now at the end of the circuit, the relative strength of measurements and entangling interactions is tuned by varying the number of entangling operations performed in the circuit.

Trick 2: Overcoming the post-selection bottleneck

Since each combination of measurement outcomes on all of the qubits results in a unique web pattern of entanglement, researchers often use post-selection to examine the details of a particular web. However, because this method is very inefficient, we developed a new “decoding” protocol that compares each instance of the real “web” of entanglement to the same instance in a classical simulation. This avoids post-selection and is sensitive to features that are common to all of the webs. This common feature manifests itself as a combined classical–quantum “order parameter”, akin to the cross-entropy benchmark used for random circuit sampling in our beyond-classical demonstration.

This order parameter is calculated by selecting one of the qubits in the system as the “probe” qubit, measuring it, and then using the measurement record of the nearby qubits to classically “decode” what the state of the probe qubit should be. By cross-correlating the measured state of the probe with this “decoded” prediction, we can obtain the entanglement between the probe qubit and the rest of the (unmeasured) qubits. This serves as an order parameter, which is a proxy for determining the entanglement characteristics of the entire web.
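As a rough illustration of the cross-correlation step, the sketch below averages the product of the measured probe outcome and the classically decoded prediction over many runs. This is a deliberately simplified proxy, not the estimator used in the paper: it assumes both quantities are encoded as +1 or -1, and the function name is hypothetical.

```python
def cross_correlation(probe_outcomes, decoded_predictions):
    """Average agreement between measured probe values and decoded predictions.

    Both inputs are sequences of +1 / -1 values, one pair per experimental run.
    A value near 1 means the decoder predicts the probe well; a value near 0
    means the prediction carries no information about the probe.
    """
    if len(probe_outcomes) != len(decoded_predictions) or not probe_outcomes:
        raise ValueError("inputs must be non-empty and of equal length")
    products = (p * d for p, d in zip(probe_outcomes, decoded_predictions))
    return sum(products) / len(probe_outcomes)
```

Because every run contributes to the average regardless of its measurement record, no data has to be discarded, which is the point of avoiding post-selection.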

Trick 3: Using noise to our advantage

A key feature of the so-called “disentangling phase” — where measurements dominate and entanglement is less widespread — is its insensitivity to noise. We can therefore look at how the probe qubit is affected by noise in the system and use that to differentiate between the two phases. In the disentangling phase, the probe will be sensitive only to local noise that occurs within a particular area near the probe. On the other hand, in the entangling phase, any noise in the system can affect the probe qubit. In this way, we are turning something that is normally seen as a nuisance in experiments into a unique probe of the system.

What we saw

We first studied how the order parameter was affected by noise in each of the two phases. Since each of the qubits is noisy, adding more qubits to the system adds more noise. Remarkably, we indeed found that in the disentangling phase the order parameter is unaffected by adding more qubits to the system. This is because, in this phase, the strands of the web are very short, so the probe qubit is only sensitive to the noise of its nearest qubits. In contrast, we found that in the entangling phase, where the strands of the entanglement web stretch longer, the order parameter is very sensitive to the size of the system, or equivalently, the amount of noise in the system. The transition between these two sharply contrasting behaviors indicates a transition in the entanglement character of the system as the “strength” of measurement is increased.

In our experiment, we also demonstrated a novel form of quantum teleportation that arises in the entangling phase. Typically, a specific set of operations is necessary to implement quantum teleportation, but here, the teleportation emerges from the randomness of the non-unitary dynamics. When all qubits, except the probe and another system of far away qubits, are measured, the remaining two systems are strongly entangled with each other. Without measurement, these two systems of qubits would be too far apart to know of each other’s existence. With measurements, however, entanglement can be generated faster than the limits typically imposed by locality and causality. This “measurement-induced entanglement” between the qubits (that must also be aided with a classical communications channel) is what allows for quantum teleportation to occur.

Conclusion

Our experiments demonstrate the effect of measurements on a quantum circuit. We show that by tuning the strength of measurements, we can induce transitions to new phases of quantum entanglement within the system and even generate an emergent form of quantum teleportation. This work could potentially have relevance to quantum computing schemes, where entanglement and measurements both play a role.

Acknowledgements

This work was done while Jesse Hoke was interning at Google from Stanford University. We would like to thank Katie McCormick, our Quantum Science Communicator, for helping to write this blog post.

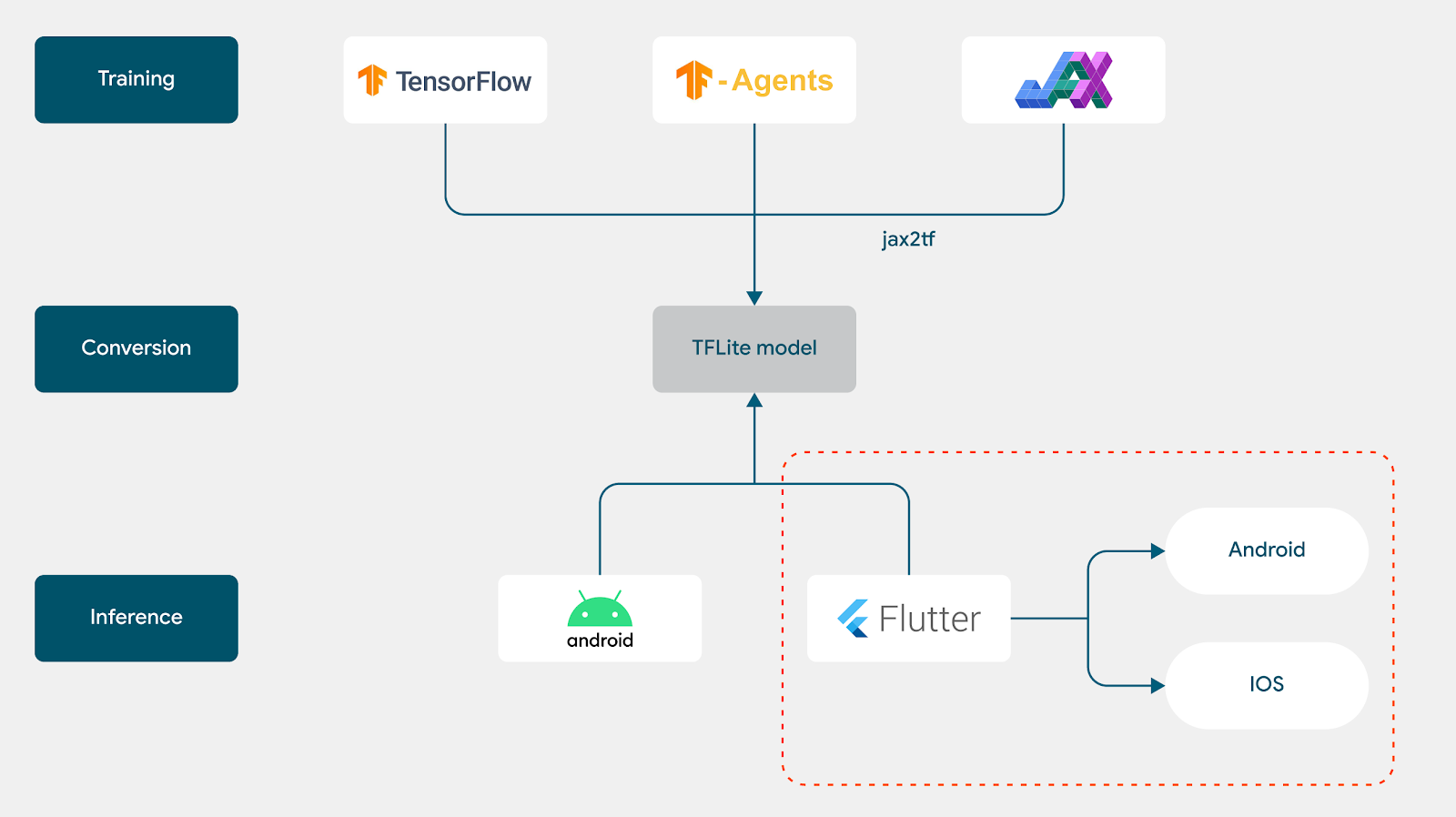

Building a board game with the TFLite plugin for Flutter

Posted by Wei Wei, Developer Advocate

In our previous blog posts Building a board game app with TensorFlow: a new TensorFlow Lite reference app and Building a reinforcement learning agent with JAX, and deploying it on Android with TensorFlow Lite, we demonstrated how to train a reinforcement learning (RL) agent with TensorFlow, TensorFlow Agents and JAX respectively, and then deploy the converted TFLite model in an Android app using TensorFlow Lite, to play a simple board game ‘Plane Strike’.

Since we already have the model trained with TensorFlow and converted to TFLite, we can just load the model with the TFLite interpreter:

void _loadModel() async {

Then we pass in the user board state and help the game agent identify the most promising position to strike next (please refer to our previous blog posts if you need a refresher on the game rules) by running TFLite inference:

int predict(List<List<double>> boardState) {

That’s it! With some additional Flutter frontend code to render the game boards and track game progress, we can immediately run the game on both Android and iOS (currently the plugin only supports these two mobile platforms). You can find the complete code on GitHub.

- Convert the TFAgents-trained model to TFLite and run it with the plugin

- Leverage the RL technique we have used and build a new agent for the tic tac toe game in the Flutter Casual Games Toolkit. You will need to create a new RL environment and train the model from scratch before deployment, but the core concept and technique are pretty much the same.

This concludes this mini-series of blogs on leveraging TensorFlow/JAX to build games for Android and Flutter. And we very much look forward to all the exciting things you build with our tooling, so be sure to share them with @googledevs, @TensorFlow, and your developer communities!

Optimize pet profiles for Purina’s Petfinder application using Amazon Rekognition Custom Labels and AWS Step Functions

Purina US, a subsidiary of Nestle, has a long history of enabling people to more easily adopt pets through Petfinder, a digital marketplace of over 11,000 animal shelters and rescue groups across the US, Canada, and Mexico. As the leading pet adoption platform, Petfinder has helped millions of pets find their forever homes.

Purina consistently seeks ways to make the Petfinder platform even better for shelters, rescue groups, and pet adopters. One challenge they faced was adequately reflecting the specific breed of animals up for adoption. Because many shelter animals are mixed breed, identifying breeds and attributes correctly in the pet profile required manual effort, which was time consuming. Purina used artificial intelligence (AI) and machine learning (ML) to automate animal breed detection at scale.

This post details how Purina used Amazon Rekognition Custom Labels, AWS Step Functions, and other AWS Services to create an ML model that detects the pet breed from an uploaded image and then uses the prediction to auto-populate the pet attributes. The solution focuses on the fundamental principles of developing an AI/ML application workflow of data preparation, model training, model evaluation, and model monitoring.

Solution overview

Predicting animal breeds from an image needs custom ML models. Developing a custom model to analyze images is a significant undertaking that requires time, expertise, and resources, often taking months to complete. Additionally, it often requires thousands or tens of thousands of hand-labeled images to provide the model with enough data to accurately make decisions. Setting up a workflow for auditing or reviewing model predictions to validate adherence to your requirements can further add to the overall complexity.

With Rekognition Custom Labels, which is built on the existing capabilities of Amazon Rekognition, you can identify the objects and scenes in images that are specific to your business needs. It is already trained on tens of millions of images across many categories. Instead of thousands of images, you can upload a small set of training images (typically a few hundred images or fewer per category) that are specific to your use case.

The solution uses the following services:

- Amazon API Gateway is a fully managed service that makes it easy for developers to publish, maintain, monitor, and secure APIs at any scale.

- The AWS Cloud Development Kit (AWS CDK) is an open-source software development framework for defining cloud infrastructure as code with modern programming languages and deploying it through AWS CloudFormation.

- AWS CodeBuild is a fully managed continuous integration service in the cloud. CodeBuild compiles source code, runs tests, and produces packages that are ready to deploy.

- Amazon DynamoDB is a fast and flexible nonrelational database service for any scale.

- AWS Lambda is an event-driven compute service that lets you run code for virtually any type of application or backend service without provisioning or managing servers.

- Amazon Rekognition offers pre-trained and customizable computer vision (CV) capabilities to extract information and insights from your images and videos. With Amazon Rekognition Custom Labels, you can identify the objects and scenes in images that are specific to your business needs.

- AWS Step Functions is a fully managed service that makes it easier to coordinate the components of distributed applications and microservices using visual workflows.

- AWS Systems Manager is a secure end-to-end management solution for resources on AWS and in multicloud and hybrid environments. Parameter Store, a capability of Systems Manager, provides secure, hierarchical storage for configuration data management and secrets management.

Purina’s solution is deployed as an API Gateway HTTP endpoint, which routes the requests to obtain pet attributes. It uses Rekognition Custom Labels to predict the pet breed. The ML model is trained from pet profiles pulled from Purina’s database, assuming the primary breed label is the true label. DynamoDB is used to store the pet attributes. Lambda is used to process the pet attributes request by orchestrating between API Gateway, Amazon Rekognition, and DynamoDB.

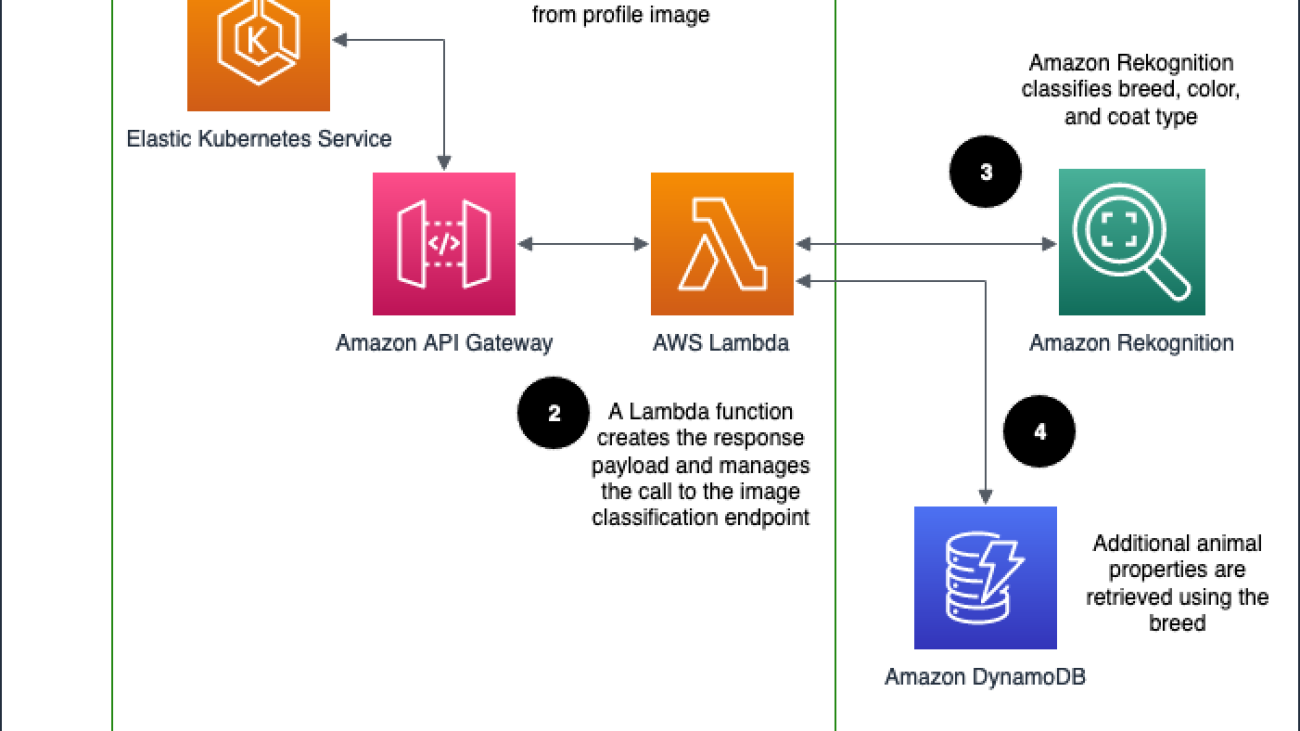

The architecture is implemented as follows:

- The Petfinder application routes the request to obtain the pet attributes via API Gateway.

- API Gateway calls the Lambda function to obtain the pet attributes.

- The Lambda function calls the Rekognition Custom Label inference endpoint to predict the pet breed.

- The Lambda function uses the predicted pet breed information to perform a pet attributes lookup in the DynamoDB table. It collects the pet attributes and sends them back to the Petfinder application.
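The request flow in the steps above can be sketched roughly as follows. This is not Purina's code: the event fields (`bucket`, `key`, `max_results`), the DynamoDB key name `breed`, and the function name are assumptions made for illustration. The clients are passed in as arguments so that the logic can be exercised without AWS credentials; in a real Lambda function they would be a boto3 Rekognition client (whose `detect_custom_labels` call takes the parameters shown) and a boto3 DynamoDB `Table` resource.

```python
def get_pet_attributes(event, rekognition, table, model_arn, min_confidence=50.0):
    """Predict the breed for an image in S3 and look up its attributes.

    `event` is assumed to carry the S3 location of the image (hypothetical
    field names). `rekognition` and `table` are injected clients.
    """
    response = rekognition.detect_custom_labels(
        ProjectVersionArn=model_arn,
        Image={"S3Object": {"Bucket": event["bucket"], "Name": event["key"]}},
        MaxResults=event.get("max_results", 1),
        MinConfidence=min_confidence,
    )
    # Most confident breed first.
    labels = sorted(
        response["CustomLabels"], key=lambda label: label["Confidence"], reverse=True
    )
    results = []
    for label in labels:
        # Attribute lookup keyed by breed name (the key schema is an assumption).
        item = table.get_item(Key={"breed": label["Name"]}).get("Item", {})
        results.append(
            {"breed": label["Name"], "confidence": label["Confidence"], "attributes": item}
        )
    return results
```

Injecting the clients keeps the handler logic testable with simple stand-ins, while the production entry point would only need to construct the real clients and read the model ARN from configuration.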

The following diagram illustrates the solution workflow.

The Petfinder team at Purina wants an automated solution that they can deploy with minimal maintenance. To deliver this, we use Step Functions to create a state machine that trains the models with the latest data, checks their performance on a benchmark set, and redeploys the models if they have improved. The model retraining is triggered by the number of breed corrections made by users submitting profile information.

Model training

Developing a custom model to analyze images is a significant undertaking that requires time, expertise, and resources. Additionally, it often requires thousands or tens of thousands of hand-labeled images to provide the model with enough data to accurately make decisions. This data can take months to gather, and labeling it for use in machine learning requires substantial effort. A technique called transfer learning helps produce higher-quality models by borrowing the parameters of a pre-trained model, and allows models to be trained with fewer images.

Our challenge is that our data is not perfectly labeled: humans who enter the profile data can and do make mistakes. However, we found that for large enough data samples, the mislabeled images accounted for a sufficiently small fraction that model accuracy dropped by no more than 2%.

ML workflow and state machine

The Step Functions state machine was developed to automate the retraining of the Amazon Rekognition model. Feedback is gathered during profile entry: each time a breed that has been inferred from an image is modified by the user to a different breed, the correction is recorded. The state machine is triggered once a configurable threshold of corrections and additional data is reached.
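A minimal sketch of this trigger logic might look like the following. The class name, the two thresholds, and the reading that both a correction count and an amount of new data must be reached are assumptions for illustration; the post does not describe how the counters are actually stored or evaluated.

```python
class CorrectionLog:
    """Counts breed corrections and new profiles since the last retraining."""

    def __init__(self, correction_threshold, new_data_threshold=0):
        self.correction_threshold = correction_threshold
        self.new_data_threshold = new_data_threshold
        self.corrections = 0
        self.new_profiles = 0

    def record_profile(self, predicted_breed, submitted_breed):
        """Record one submitted profile; count it as a correction if the user
        changed the inferred breed to a different one."""
        self.new_profiles += 1
        if submitted_breed != predicted_breed:
            self.corrections += 1

    def should_retrain(self):
        """True once both configurable thresholds have been reached."""
        return (
            self.corrections >= self.correction_threshold
            and self.new_profiles >= self.new_data_threshold
        )
```

When `should_retrain()` returns true, the caller would start the state machine and reset the counters.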

The state machine runs through several steps to create a solution:

- Create train and test manifest files containing the list of Amazon Simple Storage Service (Amazon S3) image paths and their labels for use by Amazon Rekognition.

- Create an Amazon Rekognition dataset using the manifest files.

- Train an Amazon Rekognition model version after the dataset is created.

- Start the model version when training is complete.

- Evaluate the model and produce performance metrics.

- If performance metrics are satisfactory, update the model version in Parameter Store.

- Wait for the new model version to propagate in the Lambda functions (20 minutes), then stop the previous model.
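The steps above could be expressed as a Step Functions definition along these lines. This is a sketch under stated assumptions, not the deployed workflow: the state names, the Lambda function names appended to the ARN prefix, the `$.accuracy` output field, and the behavior when the model is not good enough are all hypothetical. Only the Amazon States Language structure (Task, Choice, Wait, and Succeed states) and the 20-minute wait come from the service and the post respectively.

```python
import json

WAIT_SECONDS = 20 * 60  # 20 minutes for the new version to propagate


def task(resource, next_state):
    """A Task state that invokes `resource` and then moves to `next_state`."""
    return {"Type": "Task", "Resource": resource, "Next": next_state}


def build_definition(lambda_arn_prefix, min_accuracy):
    """Build an Amazon States Language definition for the retraining workflow."""

    def arn(name):
        return f"{lambda_arn_prefix}:{name}"  # hypothetical function names

    return {
        "Comment": "Retrain, evaluate, and promote a Rekognition Custom Labels model",
        "StartAt": "CreateManifests",
        "States": {
            "CreateManifests": task(arn("create-manifests"), "CreateDataset"),
            "CreateDataset": task(arn("create-dataset"), "TrainModelVersion"),
            "TrainModelVersion": task(arn("train-model"), "StartModelVersion"),
            "StartModelVersion": task(arn("start-model"), "EvaluateModel"),
            "EvaluateModel": task(arn("evaluate-model"), "IsModelGoodEnough"),
            "IsModelGoodEnough": {
                "Type": "Choice",
                "Choices": [
                    {
                        "Variable": "$.accuracy",
                        "NumericGreaterThanEquals": min_accuracy,
                        "Next": "UpdateParameterStore",
                    }
                ],
                "Default": "KeepPreviousModel",
            },
            "UpdateParameterStore": task(arn("update-parameter"), "WaitForPropagation"),
            "WaitForPropagation": {
                "Type": "Wait",
                "Seconds": WAIT_SECONDS,
                "Next": "StopPreviousModel",
            },
            "StopPreviousModel": {
                "Type": "Task",
                "Resource": arn("stop-previous-model"),
                "End": True,
            },
            "KeepPreviousModel": {"Type": "Succeed"},
        },
    }


definition = build_definition("arn:aws:lambda:us-east-1:123456789012:function", 0.9)
definition_json = json.dumps(definition, indent=2)
```

Training and starting a model version are long-running operations, so a production workflow would typically poll for completion rather than rely on a single task per step; that detail is omitted here for brevity.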

Model evaluation

We use a random 20% holdout set taken from our data sample to validate our model. Because the breeds we detect are configurable, we don’t use a fixed dataset for validation during training, but we do use a manually labeled evaluation set for integration testing. The overlap of the manually labeled set and the model’s detectable breeds is used to compute metrics. If the model’s breed detection accuracy is above a specified threshold, we promote the model to be used in the endpoint.
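The evaluation logic described here can be sketched in a few functions. The function names, the fixed random seed, and the representation of examples as `(image, breed)` pairs are assumptions for illustration; the accuracy threshold is whatever value the team configures.

```python
import random


def split_holdout(records, holdout_fraction=0.2, seed=42):
    """Randomly split records into (train, holdout) lists."""
    shuffled = list(records)
    random.Random(seed).shuffle(shuffled)
    n_holdout = int(round(len(shuffled) * holdout_fraction))
    return shuffled[n_holdout:], shuffled[:n_holdout]


def accuracy_on_overlap(labeled_examples, predict, detectable_breeds):
    """Accuracy over the manually labeled examples whose breed the model can detect.

    Returns None when no labeled example falls within the detectable breeds.
    """
    scored = [(image, breed) for image, breed in labeled_examples
              if breed in detectable_breeds]
    if not scored:
        return None
    correct = sum(1 for image, breed in scored if predict(image) == breed)
    return correct / len(scored)


def should_promote(accuracy, threshold):
    """Promote the model only if accuracy was computed and meets the threshold."""
    return accuracy is not None and accuracy >= threshold
```

Restricting the metric to the overlap matters because the set of detectable breeds is configurable: examples of breeds the current model was never trained on would otherwise count as errors.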

The following are a few screenshots of the pet prediction workflow from Rekognition Custom Labels.

Deployment with the AWS CDK

The Step Functions state machine and associated infrastructure (including Lambda functions, CodeBuild projects, and Systems Manager parameters) are deployed with the AWS CDK using Python. The AWS CDK code synthesizes a CloudFormation template, which it uses to deploy all infrastructure for the solution.

Integration with the Petfinder application

The Petfinder application accesses the image classification endpoint through the API Gateway endpoint using a POST request containing a JSON payload with fields for the Amazon S3 path to the image and the number of results to be returned.
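As a sketch of what such a request might look like from the client side, the snippet below builds (but does not send) a POST request with a JSON body. The field names `s3_path` and `num_results`, the endpoint URL, and the function name are placeholders; the post does not document the actual payload schema.

```python
import json
import urllib.request


def build_breed_request(endpoint_url, s3_path, num_results):
    """Build a POST request asking for the top `num_results` breed predictions."""
    payload = {"s3_path": s3_path, "num_results": num_results}  # hypothetical fields
    return urllib.request.Request(
        endpoint_url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


request = build_breed_request(
    "https://example.invalid/breed",          # placeholder endpoint
    "s3://example-bucket/pets/12345.jpg",     # placeholder image path
    3,
)
```

Passing `request` to `urllib.request.urlopen` would send it; an API Gateway endpoint would usually also require authentication headers, which are left out here.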

KPIs to be impacted

To justify the added cost of running the image inference endpoint, we ran experiments to determine the value that the endpoint adds for Petfinder. The use of the endpoint offers two main types of improvement:

- Reduced effort for pet shelters who are creating the pet profiles

- More complete pet profiles, which are expected to improve search relevance

Metrics for measuring effort and profile completeness include the number of auto-filled fields that are corrected, total number of fields filled, and time to upload a pet profile. Improvements to search relevance are indirectly inferred from measuring key performance indicators related to adoption rates. According to Purina, after the solution went live, the average time for creating a pet profile on the Petfinder application was reduced from 7 minutes to 4 minutes. That is a huge improvement and time savings because in 2022, 4 million pet profiles were uploaded.
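To put those figures in perspective, here is a back-of-the-envelope calculation. It assumes, as a simplification, that every one of the 4 million profiles uploaded in 2022 would have seen the average saving of 3 minutes; the function name is illustrative.

```python
def total_time_saved_hours(minutes_before, minutes_after, profiles):
    """Total time saved, in hours, if every profile saves the average amount."""
    saved_minutes = (minutes_before - minutes_after) * profiles
    return saved_minutes / 60


# 7 minutes down to 4 minutes, across 4 million profiles.
hours_saved = total_time_saved_hours(7, 4, 4_000_000)
```

Under that assumption, the saving is 12 million minutes, or 200,000 hours of shelter staff time per year at 2022 volumes.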

Security

The data that flows through the architecture diagram is encrypted in transit and at rest, in accordance with the AWS Well-Architected best practices. During all AWS engagements, a security expert reviews the solution to ensure a secure implementation is provided.

Conclusion

With their solution based on Rekognition Custom Labels, the Petfinder team is able to accelerate the creation of pet profiles for pet shelters, reducing administrative burden on shelter personnel. The deployment based on the AWS CDK deploys a Step Functions workflow to automate the training and deployment process. To start using Rekognition Custom Labels, refer to Getting Started with Amazon Rekognition Custom Labels. You can also check out some Step Functions examples and get started with the AWS CDK.

About the Authors

Mason Cahill is a Senior DevOps Consultant with AWS Professional Services. He enjoys helping organizations achieve their business goals, and is passionate about building and delivering automated solutions on the AWS Cloud. Outside of work, he loves spending time with his family, hiking, and playing soccer.

Matthew Chasse is a Data Science consultant at Amazon Web Services, where he helps customers build scalable machine learning solutions. Matthew has a Mathematics PhD and enjoys rock climbing and music in his free time.

Rushikesh Jagtap is a Solutions Architect with 5+ years of experience in AWS Analytics services. He is passionate about helping customers to build scalable and modern data analytics solutions to gain insights from the data. Outside of work, he loves watching Formula1, playing badminton, and racing Go Karts.

Tayo Olajide is a seasoned Cloud Data Engineering generalist with over a decade of experience in architecting and implementing data solutions in cloud environments. With a passion for transforming raw data into valuable insights, Tayo has played a pivotal role in designing and optimizing data pipelines for various industries, including finance, healthcare, and auto industries. As a thought leader in the field, Tayo believes that the power of data lies in its ability to drive informed decision-making and is committed to helping businesses leverage the full potential of their data in the cloud era. When he’s not crafting data pipelines, you can find Tayo exploring the latest trends in technology, hiking in the great outdoors, or tinkering with gadgetry and software.

Understanding the user: How the Enterprise System Usability Scale aligns with user reality

This position research paper was presented at the 26th ACM Conference on Computer-Supported Cooperative Work and Social Computing (CSCW 2023), a premier venue for research on the design and use of technologies that affect groups, organizations, and communities.

In the business world, measuring success is as critical as selecting the right goals, and metrics act as a guiding compass, shaping organizational objectives. They are instrumental as businesses strategize to develop products that are likely to succeed in specific markets or among certain user groups.

However, businesses often overlook whether these metrics accurately reflect users’ experiences and behaviors. Do they truly reflect the consumers’ journey and provide a reliable evaluation of the products’ place in the market? Put differently, do these metrics truly capture a product’s effectiveness and value, or are they superficial, overlooking deeper insights that could lead a business toward lasting success?

Challenges in enterprise usability metrics research

In our paper, “A Call to Revisit Classic Measurements for UX Evaluation,” presented at the UX Outcomes Workshop at CSCW 2023, we explore these questions about usability metrics (which evaluate the simplicity and effectiveness of a product, service, or system for its users) and their applicability to enterprise products. These metrics are vital when measuring a product’s health in the market and predicting adoption rates, user engagement, and, by extension, revenue generation. Current usability metrics in the enterprise space often fail to align with the actual user’s reality when using technical enterprise products such as business analytics, data engineering, and data science software. Oftentimes, they lack methodological rigor, calling into question their generalizability and validity.

One example is the System Usability Scale (SUS), the most widely used usability metric. In the context of enterprise products, at least two questions used in SUS do not resonate with users’ actual experiences: “I think I would like to use the system frequently” and “I think I need the support of a technical person to be able to use this product.” Because users of enterprise products are consumers, not necessarily customers, they often do not get to choose which product to use. In some cases, they are IT professionals with no one to turn to for technical assistance. This misalignment highlights the need to refine how we measure usability for enterprise products.

Another concern is the lack of rigorous validation for metrics that reflect a product’s performance. For instance, UMUX-Lite is a popular metric for its simplicity and strong correlation with SUS. However, its scoring methodology requires that researchers use an equation consisting of a regression weight and constant to align the average scores with SUS scores. This lacks a solid theoretical foundation, which raises questions about UMUX-Lite’s ability to generalize to different contexts and respondent samples.
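For readers unfamiliar with the two scoring schemes, the sketch below shows the standard SUS calculation and the commonly cited regression-adjusted UMUX-Lite calculation. The weight 0.65 and constant 22.9 are the values usually quoted from the literature on UMUX-Lite rather than from this post, and the function names are illustrative.

```python
def sus_score(responses):
    """Standard SUS score (0-100) from ten responses on a 1-5 scale.

    Odd-numbered items contribute (response - 1); even-numbered items
    contribute (5 - response); the total is multiplied by 2.5.
    """
    if len(responses) != 10 or any(not 1 <= r <= 5 for r in responses):
        raise ValueError("SUS expects ten responses between 1 and 5")
    total = 0
    for index, response in enumerate(responses):
        # index 0 is item 1 (odd-numbered), index 1 is item 2 (even-numbered), ...
        total += (response - 1) if index % 2 == 0 else (5 - response)
    return total * 2.5


def umux_lite_raw(item1, item2):
    """Unadjusted UMUX-Lite score (0-100) from two responses on a 1-7 scale."""
    if not (1 <= item1 <= 7 and 1 <= item2 <= 7):
        raise ValueError("UMUX-Lite expects responses between 1 and 7")
    return (item1 + item2 - 2) * (100 / 12)


def umux_lite_adjusted(item1, item2, weight=0.65, constant=22.9):
    """Regression-adjusted UMUX-Lite score intended to line up with SUS."""
    return weight * umux_lite_raw(item1, item2) + constant
```

With these values, the adjusted score can only range from 22.9 to 87.9, which illustrates the concern: the weight and constant were fitted to particular samples, so there is no guarantee they transfer to other products or respondents.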

The lack of standardization underscores the need for metrics that are grounded in the user’s reality for the types of products being assessed and based on theoretical and empirical evidence, ensuring that they are generalizable to diverse contexts. This approach will pave the way for more reliable insights into product usability, fostering informed decisions crucial for enhancing the user experience and driving product success.

ESUS: A reality-driven approach to usability metrics

Recognizing this need, we endeavored to create a new usability metric that accurately reflects the experience of enterprise product users, built on solid theory and supported by empirical evidence. Our research combines qualitative and quantitative approaches to devise a tailored usability metric for enterprise products, named the Enterprise System Usability Scale (ESUS).

ESUS offers a number of benefits over the SUS and UMUX-Lite. It is more concise than the SUS, containing only half the questions and streamlining the evaluation process. It also eliminates the need for practitioners to use a sample-specific weight and constant, as required by UMUX-Lite, providing a more reliable measure of product usability. Moreover, ESUS demonstrates convergent validity, correlating with other usability metrics, such as SUS. Most importantly, through its conciseness and specificity, it was designed with enterprise product users in mind, providing relevant and actionable insights.

In Table 1 below, we offer ESUS as a step towards more accurate, reliable, and user-focused metrics for enterprise products, which are instrumental in driving well-informed decisions in improving product usability and customer satisfaction.

| ESUS Items | 1 | 2 | 3 | 4 | 5 |
|---|---|---|---|---|---|
| How useful is [this product] to you? | Not at all useful | Slightly useful | Somewhat useful | Mostly useful | Very useful |
| How easy or hard was [this product] to use for you? | Very hard | Hard | Neutral | Easy | Very easy |
| How confident were you when using [this product]? | Not at all confident | Slightly confident | Somewhat confident | Mostly confident | Very confident |
| How well do the functions work together or do not work together in [this product]? | Does not work together at all | Does not work well together | Neutral | Works well together | Works very well together |
| How easy or hard was it to get started with [this product]? | Very hard | Hard | Neutral | Easy | Very easy |
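
As a rough illustration of how responses to the five items above might be recorded and summarized, consider the sketch below. The post does not state a scoring rule, so the unweighted mean and the 0-100 rescaling are assumptions made purely for illustration, as are the item keys and function names.

```python
from typing import Dict

# Hypothetical short keys for the five ESUS items, in table order.
ESUS_ITEMS = (
    "usefulness",
    "ease_of_use",
    "confidence",
    "integration",
    "getting_started",
)


def esus_mean(response: Dict[str, int]) -> float:
    """Unweighted mean of the five 1-5 responses (assumed scoring rule)."""
    missing = [key for key in ESUS_ITEMS if key not in response]
    if missing:
        raise ValueError(f"missing items: {missing}")
    values = [response[key] for key in ESUS_ITEMS]
    if any(not 1 <= value <= 5 for value in values):
        raise ValueError("responses must be on a 1-5 scale")
    return sum(values) / len(values)


def rescale_0_100(score: float) -> float:
    """Map a 1-5 mean onto 0-100 (assumed, for comparison with other scales)."""
    return (score - 1) / 4 * 100


if __name__ == "__main__":
    answers = {
        "usefulness": 4,
        "ease_of_use": 5,
        "confidence": 3,
        "integration": 4,
        "getting_started": 4,
    }
    print(rescale_0_100(esus_mean(answers)))
```

Because this assumed rule needs no sample-specific weight or constant, the same calculation applies unchanged to any respondent sample.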

Looking ahead: Advancing precision in understanding the user

Moving forward, our focus is on rigorously testing and enhancing ESUS. We aim to examine its consistency over time and its effectiveness with small sample sizes. Our goal is to ensure our metrics are as robust and adaptable as the rapidly evolving enterprise product environment requires. We’re committed to continuous improvement, striving for metrics that are not just accurate but also relevant and reliable, offering actionable insights for an ever-improving user experience.

The post Understanding the user: How the Enterprise System Usability Scale aligns with user reality appeared first on Microsoft Research.

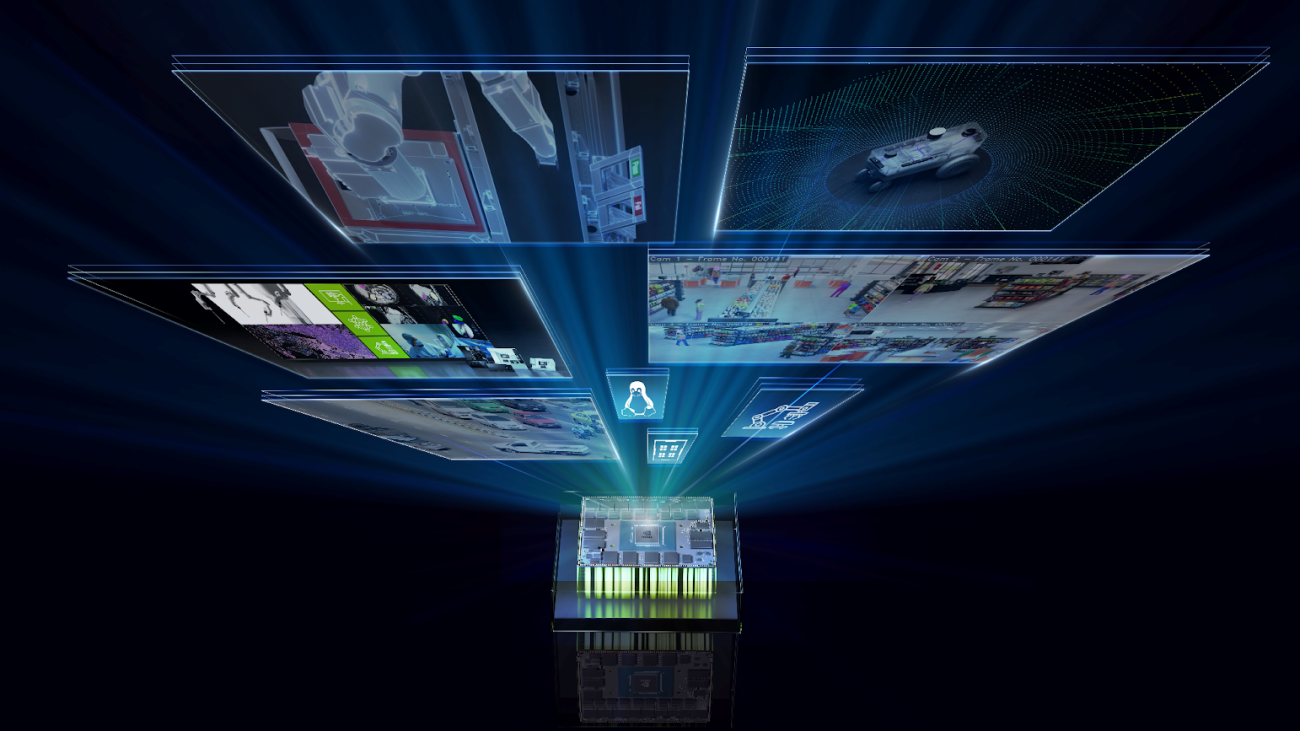

NVIDIA Expands Robotics Platform to Meet the Rise of Generative AI

Powerful generative AI models and cloud-native APIs and microservices are coming to the edge.

Generative AI is bringing the power of transformer models and large language models to virtually every industry. That reach now includes areas that touch edge, robotics and logistics systems: defect detection, real-time asset tracking, autonomous planning and navigation, human-robot interactions and more.

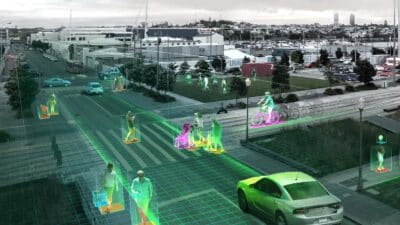

NVIDIA today announced major expansions to two frameworks on the NVIDIA Jetson platform for edge AI and robotics: the NVIDIA Isaac ROS robotics framework has entered general availability, and the NVIDIA Metropolis expansion on Jetson is coming next.

To accelerate AI application development and deployments at the edge, NVIDIA has also created a Jetson Generative AI Lab for developers to use with the latest open-source generative AI models.

More than 1.2 million developers and over 10,000 customers have chosen NVIDIA AI and the Jetson platform, including Amazon Web Services, Cisco, John Deere, Medtronic, PepsiCo and Siemens.

With the rapidly evolving AI landscape addressing increasingly complicated scenarios, developers are being challenged by longer development cycles to build AI applications for the edge. Reprogramming robots and AI systems on the fly to meet changing environments, manufacturing lines and automation needs of customers is time-consuming and requires expert skills.

Generative AI offers zero-shot learning, the ability of a model to recognize things it has not specifically seen during training, along with a natural language interface to simplify the development, deployment and management of AI at the edge.

Transforming the AI Landscape

Generative AI dramatically improves ease of use by understanding human language prompts to make model changes. Those AI models are more flexible in detecting, segmenting, tracking, searching and even reprogramming, and they can outperform traditional convolutional neural network-based models.

Generative AI is expected to add $10.5 billion in revenue for manufacturing operations worldwide by 2033, according to ABI Research.

“Generative AI will significantly accelerate deployments of AI at the edge with better generalization, ease of use and higher accuracy than previously possible,” said Deepu Talla, vice president of embedded and edge computing at NVIDIA. “This largest-ever software expansion of our Metropolis and Isaac frameworks on Jetson, combined with the power of transformer models and generative AI, addresses this need.”

Developing With Generative AI at the Edge

The Jetson Generative AI Lab provides developers access to optimized tools and tutorials for deploying open-source LLMs, diffusion models to generate stunning interactive images, vision language models (VLMs) and vision transformers (ViTs) that combine vision AI and natural language processing to provide comprehensive understanding of the scene.

Developers can also use the NVIDIA TAO Toolkit to create efficient and accurate AI models for edge applications. TAO provides a low-code interface to fine-tune and optimize vision AI models, including ViT and vision foundational models. They can also customize and fine-tune foundational models like NVIDIA NV-DINOv2 or public models like OpenCLIP to create highly accurate vision AI models with very little data. TAO additionally now includes VisualChangeNet, a new transformer-based model for defect inspection.

Harnessing New Metropolis and Isaac Frameworks

NVIDIA Metropolis makes it easier and more cost-effective for enterprises to embrace world-class, vision AI-enabled solutions that address critical operational efficiency and safety problems. The platform brings a collection of powerful application programming interfaces and microservices for developers to quickly develop complex vision-based applications.

More than 1,000 companies, including BMW Group, PepsiCo, Kroger, Tyson Foods, Infosys and Siemens, are using NVIDIA Metropolis developer tools to solve Internet of Things, sensor processing and operational challenges with vision AI, and the rate of adoption is quickening. The tools have now been downloaded over 1 million times by those looking to build vision AI applications.

To help developers quickly build and deploy scalable vision AI applications, an expanded set of Metropolis APIs and microservices on NVIDIA Jetson will be available by year’s end.

Hundreds of customers use the NVIDIA Isaac platform to develop high-performance robotics solutions across diverse domains, including agriculture, warehouse automation, last-mile delivery and service robotics, among others.

At ROSCon 2023, NVIDIA announced major improvements to perception and simulation capabilities with new releases of Isaac ROS and Isaac Sim software. Built on the widely adopted open-source Robot Operating System (ROS), Isaac ROS brings perception to automation, giving eyes and ears to the things that move. By harnessing the power of GPU-accelerated GEMs, including visual odometry, depth perception, 3D scene reconstruction, localization and planning, robotics developers gain the tools needed to swiftly engineer robotic solutions tailored for a diverse range of applications.

Isaac ROS has reached production-ready status with the latest Isaac ROS 2.0 release, enabling developers to create and bring high-performance robotics solutions to market with Jetson.

“ROS continues to grow and evolve to provide open-source software for the whole robotics community,” said Geoff Biggs, CTO of the Open Source Robotics Foundation. “NVIDIA’s new prebuilt ROS 2 packages, launched with this release, will accelerate that growth by making ROS 2 readily available to the vast NVIDIA Jetson developer community.”

Delivering New Reference AI Workflows

Developing a production-ready AI solution entails optimizing the development and training of AI models tailored to specific use cases, implementing robust security features on the platform, orchestrating the application, managing fleets, establishing seamless edge-to-cloud communication and more.

NVIDIA announced a curated collection of AI reference workflows based on Metropolis and Isaac frameworks that enable developers to quickly adopt the entire workflow or selectively integrate individual components, resulting in substantial reductions in both development time and cost. The three distinct AI workflows include: Network Video Recording, Automatic Optical Inspection and Autonomous Mobile Robot.

“NVIDIA Jetson, with its broad and diverse user base and partner ecosystem, has helped drive a revolution in robotics and AI at the edge,” said Jim McGregor, principal analyst at Tirias Research. “As application requirements become increasingly complex, we need a foundational shift to platforms that simplify and accelerate the creation of edge deployments. This significant software expansion by NVIDIA gives developers access to new multi-sensor models and generative AI capabilities.”

More Coming on the Horizon

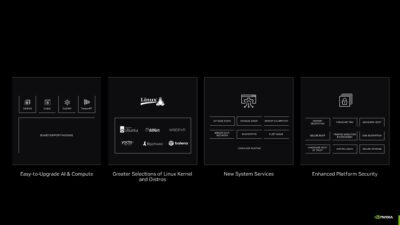

NVIDIA announced a collection of system services, which are fundamental capabilities that every developer requires when building edge AI solutions. These services will simplify integration into workflows and spare developers the arduous task of building them from the ground up.

The new NVIDIA JetPack 6, expected to be available by year’s end, will empower AI developers to stay at the cutting edge of computing without the need for a full Jetson Linux upgrade, substantially expediting development timelines and liberating them from Jetson Linux dependencies. JetPack 6 will also build on collaborative efforts with Linux distribution partners to expand the range of Linux-based distribution choices, including Canonical’s Optimized and Certified Ubuntu, Wind River Linux, Concurrent Real-Time’s RedHawk Linux and various Yocto-based distributions.

Partner Ecosystem Benefits From Platform Expansion

The Jetson partner ecosystem provides a wide range of support, from hardware, AI software and application design services to sensors, connectivity and developer tools. These NVIDIA Partner Network innovators play a vital role in providing the building blocks and sub-systems for many products sold on the market.

The latest release allows Jetson partners to accelerate their time to market and expand their customer base by adopting AI with increased performance and capabilities.

Independent software vendor partners will also be able to expand their offerings for Jetson.

Join us Tuesday, Nov. 7, at 9 a.m. PT for the Bringing Generative AI to Life with NVIDIA Jetson webinar, where technical experts will dive deeper into the news announced here, including accelerated APIs and quantization methods for deploying LLMs and VLMs on Jetson, optimizing vision transformers with TensorRT, and more.

Sign up for NVIDIA Metropolis early access here.

Making Machines Mindful: NYU Professor Talks Responsible AI

Artificial intelligence is now a household term. Responsible AI is hot on its heels.

Julia Stoyanovich, associate professor of computer science and engineering at NYU and director of the university’s Center for Responsible AI, wants to make the terms “AI” and “responsible AI” synonymous.

In the latest episode of the NVIDIA AI Podcast, host Noah Kravitz spoke with Stoyanovich about responsible AI, her advocacy efforts and how people can help.

Stoyanovich started her work at the Center for Responsible AI with basic research. She soon realized that what was needed were better guardrails, not just more algorithms.

As AI’s potential has grown, along with the ethical concerns surrounding its use, Stoyanovich clarifies that the “responsibility” lies with people, not AI.

“The responsibility refers to people taking responsibility for the decisions that we make individually and collectively about whether to build an AI system and how to build, test, deploy and keep it in check,” she said.

AI ethics is a related concern, used to refer to “the embedding of moral values and principles into the design, development and use of the AI,” she added.

Lawmakers have taken notice. For example, New York recently implemented a law that makes job candidate screening more transparent.

According to Stoyanovich, “the law is not perfect,” but “we can only learn how to regulate something if we try regulating” and converse openly with the “people at the table being impacted.”

Stoyanovich wants two things: for people to recognize that AI can’t predict human choices, and for AI systems to be transparent and accountable, carrying a “nutritional label.”

That process should include considerations on who is using AI tools, how they’re used to make decisions and who is subjected to those decisions, she said.

Stoyanovich urges people to “start demanding actions and explanations to understand” how AI is used at local, state and federal levels.

“We need to teach ourselves to help others learn about what AI is and why we should care,” she said. “So please get involved in how we govern ourselves, because we live in a democracy. We have to step up.”

You Might Also Like

Jules Anh Tuan Nguyen Explains How AI Lets Amputee Control Prosthetic Hand, Video Games

A postdoctoral researcher at the University of Minnesota discusses his efforts to allow amputees to control their prosthetic limb — right down to the finger motions — with their minds.

Overjet’s Wardah Inam on Bringing AI to Dentistry

Overjet, a member of NVIDIA Inception, is moving fast to bring AI to dentists’ offices. Dr. Wardah Inam, CEO of the company, discusses using AI to improve patient care.

Immunai CTO and Co-Founder Luis Voloch on Using Deep Learning to Develop New Drugs

Luis Voloch talks about tackling the challenges of the immune system with a machine learning and data science mindset.

Subscribe to the AI Podcast: Now Available on Amazon Music

The AI Podcast is now available through Amazon Music.

In addition, get the AI Podcast through iTunes, Google Podcasts, Google Play, Castbox, DoggCatcher, Overcast, PlayerFM, Pocket Casts, Podbay, PodBean, PodCruncher, PodKicker, Soundcloud, Spotify, Stitcher and TuneIn.

Make the AI Podcast better. Have a few minutes to spare? Fill out this listener survey.

Into the Omniverse: Marmoset Brings Breakthroughs in Rendering, Extends OpenUSD Support to Enhance 3D Art Production

Editor’s note: This post is part of Into the Omniverse, a series focused on how artists and developers from startups to enterprises can transform their workflows using the latest advances in OpenUSD and NVIDIA Omniverse.

Real-time rendering, animation and texture baking are essential workflows for 3D art production. Using the Marmoset Toolbag software, 3D artists can enhance their creative workflows and build complex 3D models without disruptions to productivity.

The latest release of Marmoset Toolbag, version 4.06, brings increased support for Universal Scene Description, aka OpenUSD, enabling seamless compatibility with NVIDIA Omniverse, a development platform for connecting and building OpenUSD-based tools and applications.

3D creators and technical artists using Marmoset can now enjoy improved interoperability, accelerated rendering, real-time visualization and efficient performance — redefining the possibilities of their creative workflows.

Enhancing Cross-Platform Creativity With OpenUSD

Creators are taking their workflows to the next level with OpenUSD.

Berlin-based Armin Halač works as a principal animator at Wooga, a mobile games development studio known for projects like June’s Journey and Ghost Detective. The nature of his job means Halač is no stranger to 3D workflows — he gets hands-on with animation and character rigging.

For texturing and producing high-quality renders, Marmoset is Halač’s go-to tool, providing a user-friendly interface and powerful features to simplify his workflow. Recently, Halač used Marmoset to create the captivating cover image for his book, A Complete Guide to Character Rigging for Games Using Blender.

Using the added support for USD, Halač can seamlessly send 3D assets from Blender to Marmoset, creating new possibilities for collaboration and improved visuals.

The cover image of Halač’s book.

Nkoro Anselem Ire, a.k.a. askNK, a popular YouTube creator and a media and visual arts professor at a couple of universities, is also seeing workflow benefits from increased USD support.

As a 3D content creator, he uses Marmoset Toolbag for the majority of his PBR workflow — from texture baking and lighting to animation and rendering. Now, with USD, askNK is enjoying newfound levels of creative flexibility as the framework allows him to “collaborate with individuals or team members a lot easier because they can now pick up and drop off processes while working on the same file.”

Halač and askNK recently joined an NVIDIA-hosted livestream where community members and the Omniverse team explored the benefits of a Marmoset- and Omniverse-boosted workflow.

Daniel Bauer is another creator experiencing the benefits of Marmoset, OpenUSD and Omniverse. A SolidWorks mechanical engineer with over 10 years of experience, Bauer works frequently in CAD software environments, where it’s typical to assign different materials to various scene components. The variance can often lead to shading errors and incorrect geometry representation, but using USD, Bauer can avoid errors by easily importing versions of his scene from Blender to Marmoset Toolbag to Omniverse USD Composer.

A Kuka Scara robot simulation with 10 parallel small grippers for sorting and handling pens.

Additionally, 3D artists Gianluca Squillace and Pasquale Scionti are harnessing the collaborative power of Omniverse, Marmoset and OpenUSD to transform their workflows from a convoluted series of exports and imports to a streamlined, real-time, interconnected process.

Squillace crafted a captivating 3D character with Pixologic ZBrush, Autodesk Maya, Adobe Substance 3D Painter and Marmoset Toolbag — aggregating the data from the various tools in Omniverse. With USD, he seamlessly integrated his animations and made real-time adjustments without the need for constant file exports.

Simultaneously, Scionti constructed a stunning glacial environment using Autodesk 3ds Max, Adobe Substance 3D Painter, Quixel and Unreal Engine, uniting the various pieces from his tools in Omniverse. His work showcased the potential of Omniverse to foster real-time collaboration as he was able to seamlessly integrate Squillace’s character into his snowy world.

Advancing Interoperability and Real-Time Rendering

Marmoset Toolbag 4.06 provides significant improvements to interoperability and image fidelity for artists working across platforms and applications. This is achieved through updates to Marmoset’s OpenUSD support, allowing for seamless compatibility and connection with the Omniverse ecosystem.

The improved USD import and export capabilities enhance interoperability with popular content creation apps and creative toolkits like Autodesk Maya and Autodesk 3ds Max, SideFX Houdini and Unreal Engine.

Marmoset Toolbag 4.06 also brings further updates, including:

- RTX-accelerated rendering and baking: Toolbag’s ray-traced renderer and texture baker are accelerated by NVIDIA RTX GPUs, providing up to a 2x improvement in render times and a 4x improvement in bake times.

- Real-time denoising with OptiX: With NVIDIA RTX devices, creators can enjoy a smooth and interactive ray-tracing experience, enabling real-time navigation of the active viewport without visual artifacts or performance disruptions.

- High DPI performance with DLSS image upscaling: The viewport now renders at a reduced resolution and uses AI-based technology to upscale images, improving performance while minimizing image-quality reductions.

Download Toolbag 4.06 directly from Marmoset to explore USD support and RTX-accelerated production tools. New users are eligible for a full-featured, 30-day free trial license.

Get Plugged Into the Omniverse

Learn from industry experts on how OpenUSD is enabling custom 3D pipelines, easing 3D tool development and delivering interoperability between 3D applications in sessions from SIGGRAPH 2023, now available on demand.

Anyone can build their own Omniverse extension or Connector to enhance their 3D workflows and tools. Explore the Omniverse ecosystem’s growing catalog of connections, extensions, foundation applications and third-party tools.

For more resources on OpenUSD, explore the Alliance for OpenUSD forum or visit the AOUSD website.

Share your Marmoset Toolbag and Omniverse work as part of the latest community challenge, #SeasonalArtChallenge. Use the hashtag to submit a spooky or festive scene for a chance to be featured on the @NVIDIAStudio and @NVIDIAOmniverse social channels.

Get started with NVIDIA Omniverse by downloading the standard license free, or learn how Omniverse Enterprise can connect your team.

Developers can check out these Omniverse resources to begin building on the platform.

Stay up to date on the platform by subscribing to the newsletter and following NVIDIA Omniverse on Instagram, LinkedIn, Medium, Threads and Twitter.

For more, check out our forums, Discord server, Twitch and YouTube channels.

Featured image courtesy of Armin Halač, Christian Nauck and Masuquddin Ahmed.

How to build a secure foundation for American leadership in AI

Google shares the report: Building a Secure Foundation for American Leadership in AI.

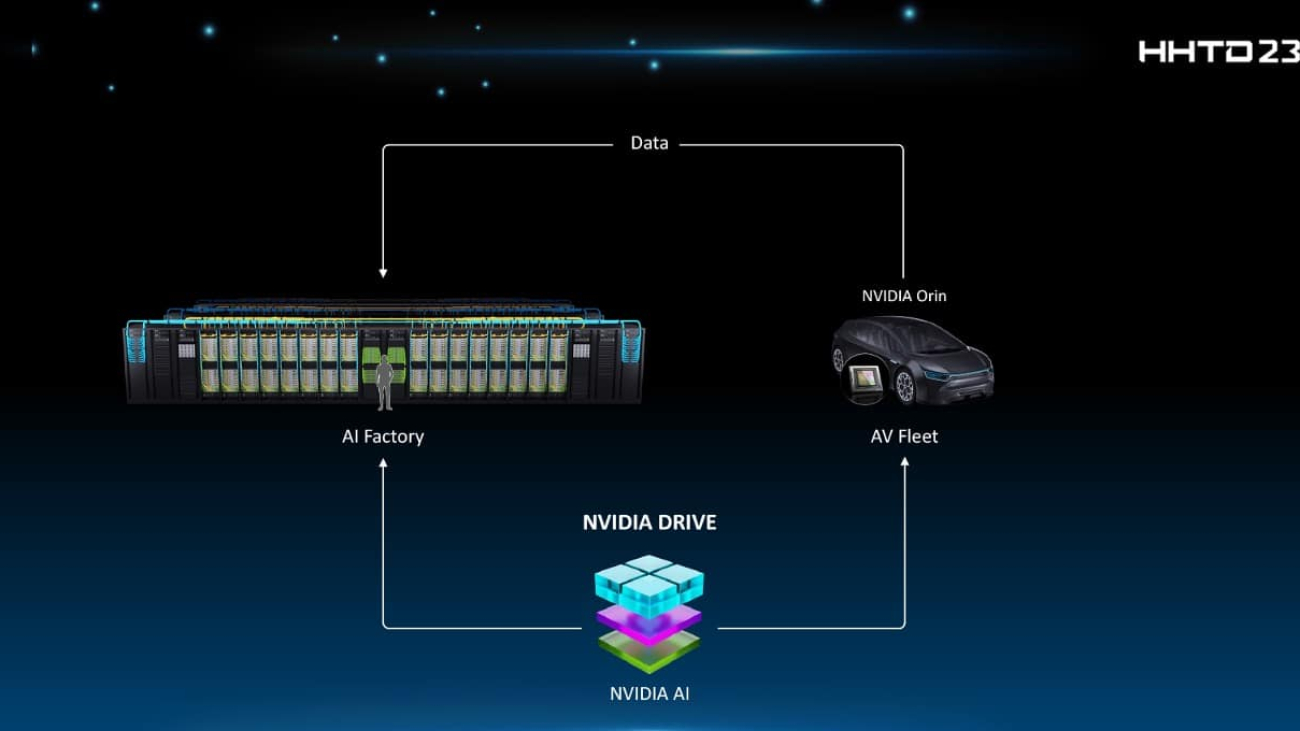

Foxconn and NVIDIA Amp Up Electric Vehicle Innovation

NVIDIA founder and CEO Jensen Huang joined Hon Hai (Foxconn) Chairman and CEO Young Liu to unveil the latest in their ongoing partnership to develop the next wave of intelligent electric vehicle (EV) platforms for the global automotive market.

This latest move, announced today at the fourth annual Hon Hai Tech Day in Taiwan, will help Foxconn realize its EV vision with a range of NVIDIA DRIVE solutions — including NVIDIA DRIVE Orin today and its successor, DRIVE Thor, down the road.

In addition, Foxconn will be a contract manufacturer of highly automated and autonomous, AI-rich EVs featuring the upcoming NVIDIA DRIVE Hyperion 9 platform, which includes DRIVE Thor and a state-of-the-art sensor architecture.

Next-Gen EVs With Extraordinary Performance

The computational requirements for highly automated and fully self-driving vehicles are enormous. NVIDIA offers the most advanced, highest-performing AI car computers for the transportation industry, with DRIVE Orin selected for use by more than 25 global automakers.

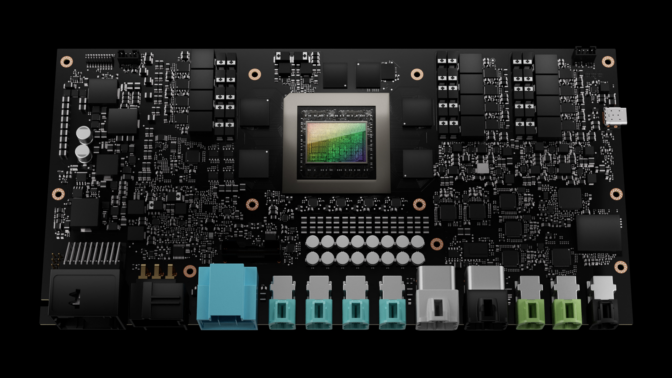

Already a tier-one manufacturer of DRIVE Orin-powered electronic control units (ECUs), Foxconn will also manufacture ECUs featuring DRIVE Thor, once available.

The upcoming DRIVE Thor superchip harnesses advanced AI capabilities first deployed in NVIDIA Grace CPUs and Hopper and Ada Lovelace architecture-based GPUs — and is expected to deliver a staggering 2,000 teraflops of high-performance compute to enable functionally safe and secure intelligent driving.

Heightened Senses

Unveiled at GTC last year, DRIVE Hyperion 9 is the latest evolution of NVIDIA’s modular development platform and reference architecture for automated and autonomous vehicles. Set to be powered by DRIVE Thor, it will integrate a qualified sensor architecture for level 3 urban and level 4 highway driving scenarios.

With a diverse and redundant array of high-resolution camera, radar, lidar and ultrasonic sensors, DRIVE Hyperion can process an extraordinary amount of safety-critical data to enable vehicles to deftly navigate their surroundings.

Another advantage of DRIVE Hyperion is its compatibility across generations, as it retains the same compute form factor and NVIDIA DriveWorks application programming interfaces, enabling a seamless transition from DRIVE Orin to DRIVE Thor and beyond.

Plus, DRIVE Hyperion can help speed development times and lower costs for electronics manufacturers like Foxconn, since the sensors available on the platform have cleared NVIDIA’s rigorous qualification processes.

The shift to software-defined vehicles with a centralized electronic architecture will drive the need for high-performance, energy-efficient computing solutions such as DRIVE Thor. By coupling it with the DRIVE Hyperion sensor architecture, Foxconn and its automotive customers will be better equipped to realize a new era of safe and intelligent EVs.

Since its inception, Hon Hai Tech Day has served as a launch pad for Foxconn to showcase its latest endeavors in contract design and manufacturing services and new technologies. These accomplishments span the EV sector and extend to the broader consumer electronics industry.

Catch more on Liu and Huang’s fireside chat at Hon Hai Tech Day.