Transforming the future of music creation

Announcing our most advanced music generation model and two new AI experiments, designed to open a new playground for creativity.


ReLU Strikes Back: Exploiting Activation Sparsity in Large Language Models

Large Language Models (LLMs) with billions of parameters have drastically transformed AI applications. However, their demanding computation during inference has raised significant challenges for deployment on resource-constrained devices. Despite recent trends favoring alternative activation functions such as GELU or SiLU, known for increased computation, this study strongly advocates for reinstating ReLU activation in LLMs. We demonstrate that using the ReLU activation function has a negligible impact on convergence and performance while significantly reducing computation and weight transfer…

Apple Machine Learning Research
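As a rough illustration of the activation sparsity the abstract refers to, the following sketch compares how many outputs are exactly zero after ReLU and after GELU. The standard-normal pre-activations are an assumption made for this example and are not the paper's experimental setup.

```python
import math
import random


def relu(x: float) -> float:
    return max(0.0, x)


def gelu(x: float) -> float:
    # Exact GELU: x * Phi(x), where Phi is the standard normal CDF.
    return 0.5 * x * (1.0 + math.erf(x / math.sqrt(2.0)))


def zero_fraction(values) -> float:
    """Fraction of entries that are exactly zero."""
    return sum(1 for v in values if v == 0.0) / len(values)


random.seed(0)
# Assumption: pre-activations drawn from a standard normal distribution.
pre_activations = [random.gauss(0.0, 1.0) for _ in range(10_000)]

relu_sparsity = zero_fraction([relu(x) for x in pre_activations])
gelu_sparsity = zero_fraction([gelu(x) for x in pre_activations])

print(f"ReLU zero fraction: {relu_sparsity:.3f}")
print(f"GELU zero fraction: {gelu_sparsity:.3f}")
```

Exact zeros matter because the weights that would be multiplied by a zero activation do not need to be computed with or loaded, which is the kind of saving the abstract describes.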

Flexible Keyword Spotting based on Homogeneous Audio-Text Embedding

Spotting user-defined flexible keywords in real time is challenging because the keyword is represented in text. In this work, we propose a novel architecture to efficiently detect flexible keywords based on the following ideas. We construct the representative acoustic embedding of a keyword using grapheme-to-phone conversion. The phone-to-embedding conversion is done by looking up an embedding dictionary, which is built by averaging the corresponding embeddings (from the audio encoder) of each phone during training. The key benefit of our approach is that both text embedding and audio…

Apple Machine Learning Research
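The dictionary lookup described above can be sketched in a few lines. This is a toy illustration only: the phone labels, the two-dimensional embeddings, and the function names are made up for the example, and the audio encoder and grapheme-to-phone model are not modeled.

```python
from collections import defaultdict


def build_phone_embedding_dict(aligned_frames):
    """Average all embeddings observed for each phone.

    aligned_frames: iterable of (phone, embedding) pairs, standing in for
    audio-encoder outputs aligned to phones during training.
    """
    sums = {}
    counts = defaultdict(int)
    for phone, embedding in aligned_frames:
        if phone not in sums:
            sums[phone] = [0.0] * len(embedding)
        sums[phone] = [s + e for s, e in zip(sums[phone], embedding)]
        counts[phone] += 1
    return {p: [s / counts[p] for s in sums[p]] for p in sums}


def keyword_embedding(phones, table):
    """Look up one averaged embedding per phone of the keyword."""
    return [table[p] for p in phones]


# Hypothetical training observations (phone, embedding).
frames = [("K", [1.0, 0.0]), ("K", [3.0, 2.0]), ("AE", [0.0, 4.0]), ("T", [2.0, 2.0])]
table = build_phone_embedding_dict(frames)

# Hypothetical grapheme-to-phone output for the keyword "cat".
cat = keyword_embedding(["K", "AE", "T"], table)
print(cat)
```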

Automating Behavioral Testing in Machine Translation

Behavioral testing in NLP allows fine-grained evaluation of systems by examining their linguistic capabilities through the analysis of input-output behavior. Unfortunately, existing work on behavioral testing in Machine Translation (MT) is currently restricted to largely handcrafted tests covering a limited range of capabilities and languages. To address this limitation, we propose using Large Language Models (LLMs) to generate a diverse set of source sentences tailored to test the behavior of MT models in a range of situations. We can then verify whether the MT model exhibits the expected…

Apple Machine Learning Research

How to Scale Your EMA

*=Equal Contributors

Preserving training dynamics across batch sizes is an important tool for practical machine learning as it enables the trade-off between batch size and wall-clock time. This trade-off is typically enabled by a scaling rule; for example, in stochastic gradient descent, one should scale the learning rate linearly with the batch size. Another important machine learning tool is the model EMA, a functional copy of a target model whose parameters move towards those of its target model according to an Exponential Moving Average (EMA) at a rate parameterized by a momentum…

Apple Machine Learning Research
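The two ingredients named in the abstract, the linear learning-rate scaling rule for SGD and the EMA parameter update, can be written down as a minimal sketch. The function names and numbers are illustrative assumptions, and the sketch does not attempt to reproduce the paper's own scaling rule for the momentum.

```python
def scale_learning_rate(base_lr: float, base_batch_size: int, new_batch_size: int) -> float:
    """Linear scaling rule: the learning rate grows in proportion to the batch size."""
    return base_lr * new_batch_size / base_batch_size


def ema_update(ema_params, target_params, momentum: float):
    """Move each EMA parameter towards its target parameter.

    A momentum close to 1 means the EMA copy changes slowly.
    """
    return [momentum * e + (1.0 - momentum) * t for e, t in zip(ema_params, target_params)]


# Illustrative values only.
new_lr = scale_learning_rate(base_lr=0.1, base_batch_size=256, new_batch_size=1024)
ema = ema_update(ema_params=[0.0, 1.0], target_params=[1.0, 1.0], momentum=0.9)
print(new_lr, ema)
```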

Diffusion Models as Masked Audio-Video Learners

This paper was accepted at the Machine Learning for Audio Workshop at NeurIPS 2023.

Over the past several years, the synchronization between audio and visual signals has been leveraged to learn richer audio-visual representations. Aided by the large availability of unlabeled videos, many unsupervised training frameworks have demonstrated impressive results in various downstream audio and video tasks. Recently, Masked Audio-Video Learners (MAViL) has emerged as a state-of-the-art audio-video pre-training framework. MAViL couples contrastive learning with masked autoencoding to jointly…

Apple Machine Learning Research

Implement a custom AutoML job using pre-selected algorithms in Amazon SageMaker Automatic Model Tuning

AutoML allows you to derive rapid, general insights from your data right at the beginning of a machine learning (ML) project lifecycle. Understanding up front which preprocessing techniques and algorithm types provide best results reduces the time to develop, train, and deploy the right model. It plays a crucial role in every model’s development process and allows data scientists to focus on the most promising ML techniques. Additionally, AutoML provides a baseline model performance that can serve as a reference point for the data science team.

An AutoML tool applies a combination of different algorithms and various preprocessing techniques to your data. For example, it can scale the data, perform univariate feature selection, conduct PCA at different variance threshold levels, and apply clustering. Such preprocessing techniques could be applied individually or be combined in a pipeline. Subsequently, an AutoML tool would train different model types, such as Linear Regression, Elastic-Net, or Random Forest, on different versions of your preprocessed dataset and perform hyperparameter optimization (HPO). Amazon SageMaker Autopilot eliminates the heavy lifting of building ML models. After providing the dataset, SageMaker Autopilot automatically explores different solutions to find the best model. But what if you want to deploy your tailored version of an AutoML workflow?

This post shows how to create a custom-made AutoML workflow on Amazon SageMaker using Amazon SageMaker Automatic Model Tuning with sample code available in a GitHub repo.

Solution overview

For this use case, let’s assume you are part of a data science team that develops models in a specialized domain. You have developed a set of custom preprocessing techniques and selected a number of algorithms that you typically expect to work well with your ML problem. When working on new ML use cases, you would like first to perform an AutoML run using your preprocessing techniques and algorithms to narrow down the scope of potential solutions.

For this example, you don’t use a specialized dataset; instead, you work with the California Housing dataset that you will import from Amazon Simple Storage Service (Amazon S3). The focus is to demonstrate the technical implementation of the solution using SageMaker HPO, which later can be applied to any dataset and domain.

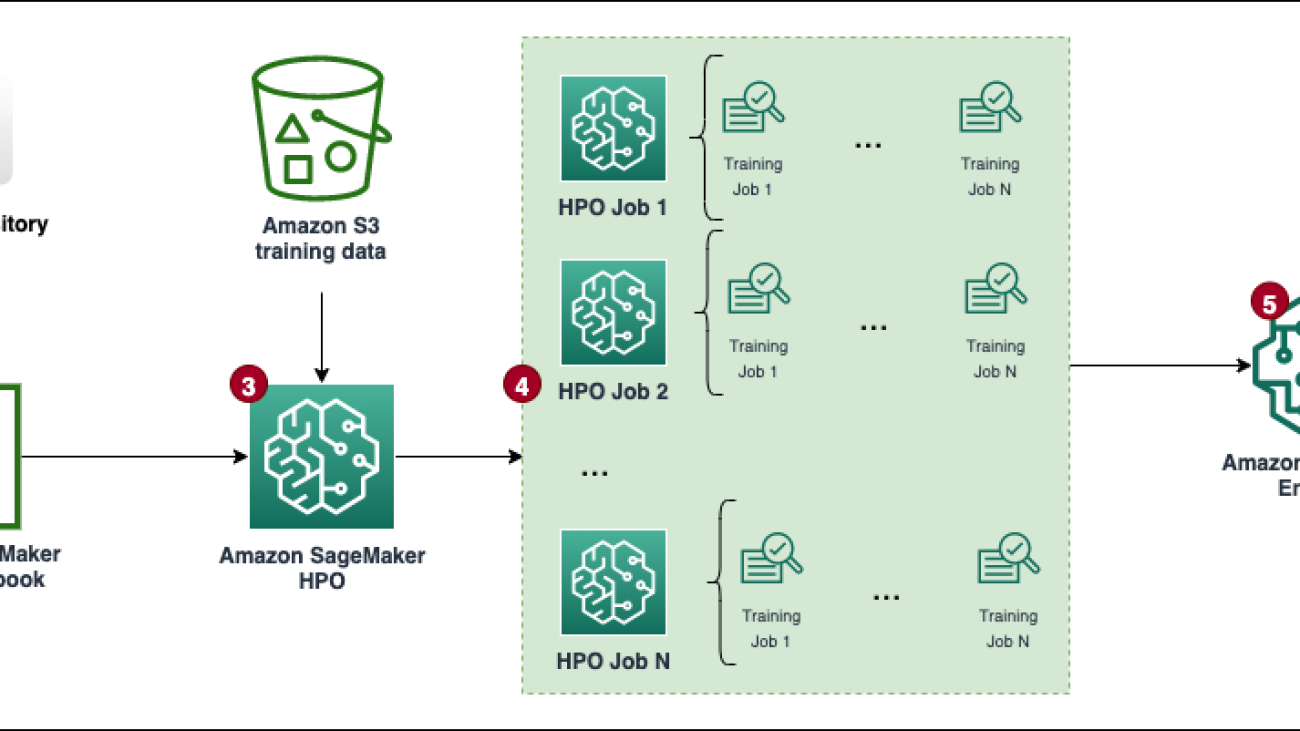

The following diagram presents the overall solution workflow.

Prerequisites

The following are prerequisites for completing the walkthrough in this post:

- An AWS account

- Familiarity with SageMaker concepts, such as an Estimator, training job, and HPO job

- Familiarity with the Amazon SageMaker Python SDK

- Python programming knowledge

Implement the solution

The full code is available in the GitHub repo.

The steps to implement the solution (as noted in the workflow diagram) are as follows:

- Create a notebook instance and specify the following:

  - For Notebook instance type, choose ml.t3.medium.

  - For Elastic Inference, choose none.

  - For Platform identifier, choose Amazon Linux 2, Jupyter Lab 3.

  - For IAM role, choose the default AmazonSageMaker-ExecutionRole. If it doesn’t exist, create a new AWS Identity and Access Management (IAM) role and attach the AmazonSageMakerFullAccess IAM policy.

Note that you should create a minimally scoped execution role and policy in production.

- Open the JupyterLab interface for your notebook instance and clone the GitHub repo.

You can do that by starting a new terminal session and running the git clone <REPO> command or by using the UI functionality, as shown in the following screenshot.

- Open the automl.ipynb notebook file, select the conda_python3 kernel, and follow the instructions to trigger a set of HPO jobs.

To run the code without any changes, you need to increase the service quota for ml.m5.large for training job usage and Number of instances across all training jobs. AWS allows by default only 20 parallel SageMaker training jobs for both quotas. You need to request a quota increase to 30 for both. Both quota changes should typically be approved within a few minutes. Refer to Requesting a quota increase for more information.

If you don’t want to change the quota, you can simply modify the value of the MAX_PARALLEL_JOBS variable in the script (for example, to 5).

- Each HPO job will complete a set of training job trials and indicate the model with optimal hyperparameters.

- Analyze the results and deploy the best-performing model.

This solution will incur costs in your AWS account. The cost of this solution will depend on the number and duration of HPO training jobs. As these increase, so will the cost. You can reduce costs by limiting training time and configuring TuningJobCompletionCriteriaConfig according to the instructions discussed later in this post. For pricing information, refer to Amazon SageMaker Pricing.

In the following sections, we discuss the notebook in more detail with code examples and the steps to analyze the results and select the best model.

Initial setup

Let’s start with running the Imports & Setup section in the custom-automl.ipynb notebook. It installs and imports all the required dependencies, instantiates a SageMaker session and client, and sets the default Region and S3 bucket for storing data.

Data preparation

Download the California Housing dataset and prepare it by running the Download Data section of the notebook. The dataset is split into training and testing data frames and uploaded to the SageMaker session default S3 bucket.

The entire dataset has 20,640 records and 9 columns in total, including the target. The goal is to predict the median value of a house (medianHouseValue column). The following screenshot shows the top rows of the dataset.

Training script template

The AutoML workflow in this post is based on scikit-learn preprocessing pipelines and algorithms. The aim is to generate a large combination of different preprocessing pipelines and algorithms to find the best-performing setup. Let’s start with creating a generic training script, which is persisted locally on the notebook instance. In this script, there are two empty comment blocks: one for injecting hyperparameters and the other for the preprocessing-model pipeline object. They will be injected dynamically for each preprocessing model candidate. The purpose of having one generic script is to keep the implementation DRY (don’t repeat yourself).
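The injection idea can be sketched with plain string replacement. This is a minimal sketch, not the notebook's actual template: the placeholder markers (`# <<HYPERPARAMETERS>>`, `# <<PIPELINE>>`), the `render_script` helper, and the injected snippets are all hypothetical.

```python
# Hypothetical generic script with two placeholder comments.
SCRIPT_TEMPLATE = """\
import argparse

parser = argparse.ArgumentParser()
# <<HYPERPARAMETERS>>
args, _ = parser.parse_known_args()

# <<PIPELINE>>
"""


def render_script(template: str, insertions: dict) -> str:
    """Replace each placeholder comment with candidate-specific code."""
    script = template
    for marker, code in insertions.items():
        placeholder = f"# <<{marker}>>"
        if placeholder not in script:
            raise KeyError(f"placeholder {placeholder!r} not found in template")
        script = script.replace(placeholder, code)
    return script


script = render_script(
    SCRIPT_TEMPLATE,
    {
        "HYPERPARAMETERS": 'parser.add_argument("--alpha", type=float, default=1.0)',
        "PIPELINE": 'pipeline = ("mean-imp-scale", "elastic-net", args.alpha)',
    },
)

# compile() raises SyntaxError if the injection produced invalid Python.
compile(script, "generated_script.py", "exec")
print(script)
```

Keeping one template and many small insertions means a fix to the shared training logic only has to be made in one place.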

Create preprocessing and model combinations

The preprocessors dictionary contains a specification of preprocessing techniques applied to all input features of the model. Each recipe is defined using a Pipeline or a FeatureUnion object from scikit-learn, which chains individual data transformations together and stacks their outputs. For example, mean-imp-scale is a simple recipe that ensures that missing values are imputed using mean values of respective columns and that all features are scaled using the StandardScaler. In contrast, the mean-imp-scale-pca recipe chains together a few more operations:

- Impute missing values in each column with the column mean.

- Apply feature scaling using mean and standard deviation.

- Calculate PCA on top of the input data at a specified variance threshold value and merge it together with the imputed and scaled input features.
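The two recipes described above could look roughly as follows in scikit-learn. This is a sketch under assumptions: the 0.9 variance threshold, the step names, and the random test matrix are chosen for illustration and may differ from the repository's definitions.

```python
import numpy as np
from sklearn.decomposition import PCA
from sklearn.impute import SimpleImputer
from sklearn.pipeline import FeatureUnion, Pipeline
from sklearn.preprocessing import FunctionTransformer, StandardScaler

preprocessors = {
    # Impute with column means, then standardize.
    "mean-imp-scale": Pipeline(
        [
            ("imputer", SimpleImputer(strategy="mean")),
            ("scaler", StandardScaler()),
        ]
    ),
    # Same two steps, then stack PCA components next to the scaled features.
    "mean-imp-scale-pca": Pipeline(
        [
            ("imputer", SimpleImputer(strategy="mean")),
            ("scaler", StandardScaler()),
            (
                "features",
                FeatureUnion(
                    [
                        # Keep enough components to explain 90% of the variance (assumed threshold).
                        ("pca", PCA(n_components=0.9)),
                        # FunctionTransformer() with no arguments passes the input through unchanged.
                        ("original", FunctionTransformer()),
                    ]
                ),
            ),
        ]
    ),
}

# Small synthetic matrix with some missing values, for demonstration only.
rng = np.random.default_rng(0)
X = rng.normal(size=(50, 4))
X[::7, 1] = np.nan

for name, recipe in preprocessors.items():
    transformed = recipe.fit_transform(X)
    print(name, transformed.shape)
```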

In this post, all input features are numeric. If you have more data types in your input dataset, you should specify a more complicated pipeline where different preprocessing branches are applied to different feature type sets.

The models dictionary contains specifications of different algorithms that you fit the dataset to. Every model type comes with the following specification in the dictionary:

- script_output – Points to the location of the training script used by the estimator. This field is filled dynamically when the models dictionary is combined with the preprocessors dictionary.

- insertions – Defines code that will be inserted into the script_draft.py and subsequently saved under script_output. The key “preprocessor” is intentionally left blank because this location is filled with one of the preprocessors in order to create multiple model-preprocessor combinations.

- hyperparameters – A set of hyperparameters that are optimized by the HPO job.

- include_cls_metadata – More configuration details required by the SageMaker Tuner class.

A full example of the models dictionary is available in the GitHub repository.

Next, let’s iterate through the preprocessors and models dictionaries and create all possible combinations. For example, if your preprocessors dictionary contains 10 recipes and you have 5 model definitions in the models dictionary, the newly created pipelines dictionary contains 50 preprocessor-model pipelines that are evaluated during HPO. Note that individual pipeline scripts are not created yet at this point. The next code block (cell 9) of the Jupyter notebook iterates through all preprocessor-model objects in the pipelines dictionary, inserts all relevant code pieces, and persists a pipeline-specific version of the script locally in the notebook. Those scripts are used in the next steps when creating individual estimators that you plug into the HPO job.
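The combination step amounts to a Cartesian product of the two dictionaries. The sketch below uses placeholder recipes and five hypothetical model names; only the field names (`insertions`, `hyperparameters`, `script_output`) and the `scripts` directory come from the post.

```python
from itertools import product

# Placeholder dictionaries: 10 preprocessing recipes and 5 model definitions.
preprocessors = {f"preprocessor-{i}": f"<recipe code {i}>" for i in range(10)}
models = {
    name: {"insertions": {"preprocessor": ""}, "hyperparameters": {}}
    for name in ("linear-regression", "elastic-net", "random-forest", "gradient-boosting", "knn")
}

pipelines = {}
for (model_name, model_spec), (prep_name, prep_code) in product(models.items(), preprocessors.items()):
    pipeline_name = f"{model_name}-{prep_name}"
    pipelines[pipeline_name] = {
        **model_spec,
        # Fill the intentionally blank "preprocessor" slot with this recipe.
        "insertions": {**model_spec["insertions"], "preprocessor": prep_code},
        # Where the pipeline-specific script will be written later.
        "script_output": f"scripts/{pipeline_name}.py",
    }

print(len(pipelines))  # 10 recipes x 5 models = 50
```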

Define estimators

You can now work on defining SageMaker Estimators that the HPO job uses after scripts are ready. Let’s start with creating a wrapper class that defines some common properties for all estimators. It inherits from the SKLearn class and specifies the role, instance count, and type, as well as which columns are used by the script as features and the target.

Let’s build the estimators dictionary by iterating through all scripts generated before and located in the scripts directory. You instantiate a new estimator using the SKLearnBase class, with a unique estimator name, and one of the scripts. Note that the estimators dictionary has two levels. The top level defines a pipeline_family, a logical grouping based on the type of model to evaluate; the number of families equals the length of the models dictionary. The second level contains individual preprocessor types combined with the given pipeline_family. This logical grouping is required when creating the HPO job.

Define HPO tuner arguments

To optimize passing arguments into the HPO Tuner class, the HyperparameterTunerArgs data class is initialized with arguments required by the HPO class. It comes with a set of functions, which ensure HPO arguments are returned in a format expected when deploying multiple model definitions at once.
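A data class of this kind might look like the following sketch. `TunerArgsSketch` is a hypothetical stand-in, not the notebook's `HyperparameterTunerArgs`; the method names are chosen to mirror the per-estimator dictionary arguments that, to our understanding, `HyperparameterTuner.create` in the SageMaker Python SDK accepts, so treat them as an assumption.

```python
from dataclasses import dataclass
from typing import Any, Dict, List, Tuple


@dataclass
class TunerArgsSketch:
    """Hypothetical stand-in for the notebook's HyperparameterTunerArgs."""

    # (estimator name, estimator object) pairs that belong to one model family
    estimators: List[Tuple[str, Any]]
    objective_metric_name: str
    hyperparameter_ranges: Dict[str, Any]
    metric_definitions: List[Dict[str, str]]

    def estimator_dict(self) -> Dict[str, Any]:
        return dict(self.estimators)

    def objective_metric_name_dict(self) -> Dict[str, str]:
        return {name: self.objective_metric_name for name, _ in self.estimators}

    def hyperparameter_ranges_dict(self) -> Dict[str, Dict[str, Any]]:
        return {name: self.hyperparameter_ranges for name, _ in self.estimators}

    def metric_definitions_dict(self) -> Dict[str, List[Dict[str, str]]]:
        return {name: self.metric_definitions for name, _ in self.estimators}


# Strings stand in for real estimator objects so the sketch runs without AWS access.
args = TunerArgsSketch(
    estimators=[
        ("elastic-net-mean-imp-scale", "<estimator A>"),
        ("elastic-net-mean-imp-scale-pca", "<estimator B>"),
    ],
    objective_metric_name="RMSE",
    hyperparameter_ranges={"alpha": (0.01, 10.0)},
    metric_definitions=[{"Name": "RMSE", "Regex": "RMSE: ([0-9.]+)"}],
)
print(args.objective_metric_name_dict())
```

Every dictionary shares the same keys, which is what lets one tuning job tell its model definitions apart.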

The next code block uses the previously introduced HyperparameterTunerArgs data class. You create another dictionary called hp_args and generate a set of input parameters specific to each estimator_family from the estimators dictionary. These arguments are used in the next step when initializing HPO jobs for each model family.

Create HPO tuner objects

In this step, you create individual tuners for every estimator_family. Why do you create three separate HPO jobs instead of launching just one across all estimators? The HyperparameterTuner class is restricted to 10 model definitions attached to it. Therefore, each HPO is responsible for finding the best-performing preprocessor for a given model family and tuning that model family’s hyperparameters.

The following are a few more points regarding the setup:

- The optimization strategy is Bayesian, which means that the HPO actively monitors the performance of all trials and navigates the optimization towards more promising hyperparameter combinations. Early stopping should be set to Off or Auto when working with a Bayesian strategy, which handles that logic itself.

- Each HPO job runs for a maximum of 100 jobs and runs 10 jobs in parallel. If you’re dealing with larger datasets, you might want to increase the total number of jobs.

- Additionally, you may want to use settings that control how long a job runs and how many jobs your HPO is triggering. One way to do that is to set the maximum runtime in seconds (for this post, we set it to 1 hour). Another is to use the recently released TuningJobCompletionCriteriaConfig. It offers a set of settings that monitor the progress of your jobs and decide whether it is likely that more jobs will improve the result. In this post, we set the maximum number of training jobs not improving to 20. That way, if the score isn’t improving (for example, from the fortieth trial), you won’t have to pay for the remaining trials until max_jobs is reached.
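To make the effect of the no-improvement setting concrete, here is a simplified local simulation. It is not the service's actual logic (for example, it ignores jobs running in parallel), and the function name and RMSE values are made up for illustration.

```python
def trials_before_stop(objective_values, max_jobs=100, max_not_improving=20):
    """Count how many trials run before the job stops (lower objective is better).

    The job ends when max_jobs is reached or when max_not_improving
    consecutive trials fail to beat the best value seen so far.
    """
    best = float("inf")
    since_improvement = 0
    for completed, value in enumerate(objective_values[:max_jobs], start=1):
        if value < best:
            best = value
            since_improvement = 0
        else:
            since_improvement += 1
        if since_improvement >= max_not_improving:
            return completed
    return min(len(objective_values), max_jobs)


# Hypothetical RMSE trace: improves for 40 trials, then stays flat.
rmse_trace = [80_000 - 500 * i for i in range(40)] + [61_000] * 60

print(trials_before_stop(rmse_trace))  # stops after trial 60, so 40 trials are never paid for
```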

Now let’s iterate through the tuners and hp_args dictionaries and trigger all HPO jobs in SageMaker. Note the usage of the wait argument set to False, which means that the kernel won’t wait until the results are complete and you can trigger all jobs at once.

It’s likely that not all training jobs will complete and some of them might be stopped by the HPO job. The reason for this is the TuningJobCompletionCriteriaConfig: the optimization finishes as soon as any of the specified criteria is met. In this case, that happens when the objective metric hasn’t improved for 20 consecutive jobs.

Analyze results

Cell 15 of the notebook checks if all HPO jobs are complete and combines all results in the form of a pandas data frame for further analysis. Before analyzing the results in detail, let’s take a high-level look at the SageMaker console.

At the top of the Hyperparameter tuning jobs page, you can see your three launched HPO jobs. All of them finished early and didn’t perform all 100 training jobs. In the following screenshot, you can see that the Elastic-Net model family completed the highest number of trials, whereas others didn’t need so many training jobs to find the best result.

You can open the HPO job to access more details, such as individual training jobs, job configuration, and the best training job’s information and performance.

Let’s produce a visualization based on the results to get more insight into the AutoML workflow performance across all model families.

From the following graph, you can conclude that the Elastic-Net model’s performance oscillated between 70,000 and 80,000 RMSE and eventually stalled, as the algorithm wasn’t able to improve its performance despite trying various preprocessing techniques and hyperparameter values. It also seems that RandomForest performance varied a lot depending on the hyperparameter set explored by HPO, but despite many trials it couldn’t go below 50,000 RMSE. GradientBoosting achieved the best performance from the start, going below 50,000 RMSE. HPO tried to improve that result further but wasn’t able to achieve better performance across other hyperparameter combinations. A general conclusion for all HPO jobs is that relatively few jobs were required to find the best-performing set of hyperparameters for each algorithm. To further improve the result, you would need to experiment with creating more features and performing additional feature engineering.

You can also examine a more detailed view of the model-preprocessor combination to draw conclusions about the most promising combinations.

Select the best model and deploy it

The following code snippet selects the best model based on the lowest achieved objective value. You can then deploy the model as a SageMaker endpoint.
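The snippet itself is not reproduced in this text. As a minimal sketch of the selection step, assume the combined results are available as records with `TrainingJobName` and `FinalObjectiveValue` fields; the records and objective values below are toy placeholders, not results from the post.

```python
# Placeholder records standing in for the combined HPO results.
results = [
    {"TrainingJobName": "elastic-net-job", "FinalObjectiveValue": 3.0},
    {"TrainingJobName": "random-forest-job", "FinalObjectiveValue": 2.0},
    {"TrainingJobName": "gradient-boosting-job", "FinalObjectiveValue": 1.0},
]

# RMSE is minimized, so the best model has the lowest objective value.
best = min(results, key=lambda record: record["FinalObjectiveValue"])
print(best["TrainingJobName"])
```

Deployment is not shown because it needs an AWS account. One option with the SageMaker Python SDK is to attach an estimator to the winning training job and call its deploy method.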

Clean up

To prevent unwanted charges to your AWS account, we recommend deleting the AWS resources that you used in this post:

- On the Amazon S3 console, empty the data from the S3 bucket where the training data was stored.

- On the SageMaker console, stop the notebook instance.

- Delete the model endpoint if you deployed it. Endpoints should be deleted when no longer in use, because they’re billed by time deployed.

Conclusion

In this post, we showcased how to create a custom HPO job in SageMaker using a custom selection of algorithms and preprocessing techniques. In particular, this example demonstrates how to automate the process of generating many training scripts and how to use Python programming structures for efficient deployment of multiple parallel optimization jobs. We hope this solution will form the scaffolding of any custom model tuning jobs you will deploy using SageMaker to achieve higher performance and speed up your ML workflows.

Check out the following resources to further deepen your knowledge of how to use SageMaker HPO:

- Best Practices for Hyperparameter Tuning

- Amazon SageMaker Automatic Model Tuning now supports three new completion criteria for hyperparameter optimization

- Using Scikit-learn with the SageMaker Python SDK

- Develop, Train, Optimize and Deploy Scikit-Learn Random Forest

About the Authors

Konrad Semsch is a Senior ML Solutions Architect on the Amazon Web Services Data Lab team. He helps customers use machine learning to solve their business challenges with AWS. He enjoys inventing and simplifying to enable customers with simple and pragmatic solutions for their AI/ML projects. He is most passionate about MLOps and traditional data science. Outside of work, he is a big fan of windsurfing and kitesurfing.


Tuna Ersoy is a Senior Solutions Architect at AWS. Her primary focus is helping Public Sector customers adopt cloud technologies for their workloads. She has a background in application development, enterprise architecture, and contact center technologies. Her interests include serverless architectures and AI/ML.


Best prompting practices for using the Llama 2 Chat LLM through Amazon SageMaker JumpStart

Llama 2 stands at the forefront of AI innovation, embodying an advanced auto-regressive language model developed on a sophisticated transformer foundation. It’s tailored to address a multitude of applications in both the commercial and research domains with English as the primary linguistic concentration. Its model parameters scale from an impressive 7 billion to a remarkable 70 billion. Llama 2 demonstrates the potential of large language models (LLMs) through its refined abilities and precisely tuned performance.

Diving deeper into Llama 2’s architecture, Meta reveals that the model’s fine-tuning melds supervised fine-tuning (SFT) with reinforcement learning from human feedback (RLHF). This combination prioritizes alignment with human-centric norms, striking a balance between efficiency and safety. Built upon a vast reservoir of 2 trillion tokens, Llama 2 provides both pre-trained models for diverse natural language generation and the specialized Llama-2-Chat variant for chat assistant roles. Regardless of a developer’s choice between the basic or the advanced model, Meta’s responsible use guide is an invaluable resource for model enhancement and customization.

For those interested in creating interactive applications, Llama 2 Chat is a good starting point. This conversational model allows for building customized chatbots and assistants. To make it even more accessible, you can deploy Llama-2-Chat models with ease through Amazon SageMaker JumpStart. An offering from Amazon SageMaker, SageMaker JumpStart provides a straightforward way to deploy Llama-2 model variants directly through Amazon SageMaker Studio notebooks. This enables developers to focus on their application logic while benefiting from SageMaker tools for scalable AI model training and hosting. SageMaker JumpStart also provides effortless access to the extensive SageMaker library of algorithms and pre-trained models.

In this post, we explore best practices for prompting the Llama 2 Chat LLM. We highlight key prompt design approaches and methodologies by providing practical examples.

Prerequisites

To try out the examples and recommended best practices for Llama 2 Chat on SageMaker JumpStart, you need the following prerequisites:

- An AWS account that will contain all your AWS resources.

- An AWS Identity and Access Management (IAM) role to access SageMaker. To learn more about how IAM works with SageMaker, refer to Identity and Access Management for Amazon SageMaker.

- Access to SageMaker Studio or a SageMaker notebook instance or an interactive development environment (IDE) such as PyCharm or Visual Studio Code. We recommend using SageMaker Studio notebooks for straightforward deployment and inference.

- The GitHub repository cloned in order to use the accompanying notebook.

- An instance of Llama 2 Chat model deployed on SageMaker using SageMaker JumpStart. To learn more, refer to Llama 2 foundation models from Meta are now available in Amazon SageMaker JumpStart. The accompanying notebook also contains code to deploy the model.

Prompting techniques

Prompting, in the context of language models and artificial intelligence, refers to the practice of providing a model with a specific input or cue to elicit a desired response. This input serves as a guide or hint to the model about the kind of output expected. Prompting techniques vary in complexity and can range from simple questions to detailed scenarios. Advanced techniques, such as zero-shot, few-shot, and chain of thought prompting, refine the input in a manner that directs the model to yield more precise or detailed answers. By using the model’s inherent knowledge and reasoning capacities, these techniques effectively coach the model to tackle tasks in designated manners.

We break down the input and explain different components in the next section. We start by sharing some examples of what different prompt techniques look like. The examples are always shown in two code blocks. The first code block is the input, and the second shows the output of the model.
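The examples call a `print_dialog` helper that is not defined in this text. The sketch below shows one way such a helper could work. It assumes the endpoint returns a list whose first element has a `generation` entry with `role` and `content` keys; that response shape, the separator width, and the `format_dialog` name are assumptions.

```python
SEPARATOR = "=" * 54


def format_dialog(payload, response) -> str:
    """Render the input messages and the model's reply as plain text."""
    lines = []
    for message in payload["inputs"][0]:
        lines.append(f"{message['role'].capitalize()}: {message['content']}")
    lines.append(SEPARATOR)
    # Assumed response shape: [{"generation": {"role": ..., "content": ...}}]
    generation = response[0]["generation"]
    lines.append(f"{generation['role'].capitalize()}: {generation['content']}")
    lines.append(SEPARATOR)
    return "\n\n".join(lines)


def print_dialog(payload, response) -> None:
    print(format_dialog(payload, response))


# Hand-written stand-ins so the sketch runs without a deployed endpoint.
demo_payload = {
    "inputs": [[
        {"role": "system", "content": "You are a customer agent"},
        {"role": "user", "content": "Hello"},
    ]]
}
demo_response = [{"generation": {"role": "assistant", "content": "Hi, how can I help?"}}]
print_dialog(demo_payload, demo_response)
```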

Zero-shot prompting

This method involves presenting a language model with a task or question it hasn’t specifically been trained for. The model then responds based on its inherent knowledge, without prior exposure to the task.

%%time

payload = {

"inputs": [[

{"role": "system", "content": "You are a customer agent"},

{"role": "user", "content": "What is the sentiment of this sentence: The music festival was an auditory feast of eclectic tunes and talented artists, yet the overcrowding and logistical mishaps dampened the overall experience"},

]],

"parameters": {"max_new_tokens": 512, "top_p": 0.9, "temperature": 0.6}

}

response = predictor.predict(payload, custom_attributes='accept_eula=true')

print_dialog(payload, response)

System: You are a customer agent

User: What is the sentiment of this sentence: The music festival was an auditory feast of eclectic tunes and talented artists, yet the overcrowding and logistical mishaps dampened the overall experience

======================================================

Assistant: The sentiment of the sentence is neutral. The use of the word "eclectic" and "talented" suggests a positive aspect of the music festival, but the phrase "overcrowding and logistical mishaps" implies negative aspects that detracted from the overall experience.

======================================================

CPU times: user 4.24 ms, sys: 389 µs, total: 4.63 ms

Wall time: 1.19 s

Few-shot prompting

In this approach, a language model receives a handful of examples, or shots, of a task before encountering a new instance of that same task. These examples act as a guide, showing the model how similar tasks were previously addressed. Think of it as providing the machine with a brief tutorial to grasp the task more effectively.

%%time

payload = {

"inputs": [[

{"role": "system", "content": "You are a customer agent"},

{"role": "user", "content": f"""

\n\nExample 1

\nSentence: Though the sun set with a brilliant display of colors, casting a warm glow over the serene beach, it was the bitter news I received earlier that clouded my emotions, making it impossible to truly appreciate nature's beauty.

\nSentiment: Negative

\n\nExample 2

\nSentence: Even amidst the pressing challenges of the bustling city, the spontaneous act of kindness from a stranger, in the form of a returned lost wallet, renewed my faith in the inherent goodness of humanity.

\nSentiment: Positive

\n\nFollowing the same format above from the examples, What is the sentiment of this setence: While the grandeur of the ancient castle, steeped in history and surrounded by verdant landscapes, was undeniably breathtaking, the knowledge that it was the site of numerous tragic events lent an undeniable heaviness to its majestic walls."""},

]],

"parameters": {"max_new_tokens": 512, "top_p": 0.9, "temperature": 0.6}

}

response = predictor.predict(payload, custom_attributes='accept_eula=true')

print_dialog(payload, response)

System: You are a customer agent

User:

Example 1

Sentence: Though the sun set with a brilliant display of colors, casting a warm glow over the serene beach, it was the bitter news I received earlier that clouded my emotions, making it impossible to truly appreciate nature's beauty.

Sentiment: Negative

Example 2

Sentence: Even amidst the pressing challenges of the bustling city, the spontaneous act of kindness from a stranger, in the form of a returned lost wallet, renewed my faith in the inherent goodness of humanity.

Sentiment: Positive

Following the same format above from the examples, What is the sentiment of this setence: While the grandeur of the ancient castle, steeped in history and surrounded by verdant landscapes, was undeniably breathtaking, the knowledge that it was the site of numerous tragic events lent an undeniable heaviness to its majestic walls.

======================================================

Assistant: Sure! Here's the sentiment analysis of the given sentence:

Sentence: While the grandeur of the ancient castle, steeped in history and surrounded by verdant landscapes, was undeniably breathtaking, the knowledge that it was the site of numerous tragic events lent an undeniable heaviness to its majestic walls.

Sentiment: Mixed (both positive and negative)

======================================================

CPU times: user 3.72 ms, sys: 773 µs, total: 4.49 ms

Wall time: 1.85 s
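When the examples live in a list, a few-shot prompt like the one above can be assembled programmatically. The helper names below are hypothetical and the example sentences are shortened stand-ins. The payload layout follows the examples in this post.

```python
def build_few_shot_prompt(examples, query: str) -> str:
    """Format labeled examples followed by the new sentence to classify."""
    parts = []
    for index, (sentence, sentiment) in enumerate(examples, start=1):
        parts.append(f"Example {index}\nSentence: {sentence}\nSentiment: {sentiment}")
    parts.append(
        "Following the same format above from the examples, "
        f"what is the sentiment of this sentence: {query}"
    )
    return "\n\n".join(parts)


def build_payload(system: str, user: str, max_new_tokens=512, top_p=0.9, temperature=0.6) -> dict:
    """Wrap a system and a user message in the payload layout used in this post."""
    return {
        "inputs": [[
            {"role": "system", "content": system},
            {"role": "user", "content": user},
        ]],
        "parameters": {"max_new_tokens": max_new_tokens, "top_p": top_p, "temperature": temperature},
    }


# Shortened, made-up example sentences.
shots = [
    ("The bitter news clouded an otherwise beautiful sunset.", "Negative"),
    ("A stranger returned my lost wallet.", "Positive"),
]
prompt = build_few_shot_prompt(shots, "The castle was breathtaking, yet its history felt heavy.")
payload = build_payload("You are a customer agent", prompt)
print(prompt)
```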

Chain of thought prompting

This approach augments the reasoning capabilities of LLMs in intricate tasks. When the prompt lays out a sequence of structured reasoning steps, large language models often reason more reliably towards the final answer.

%%time

payload = {

"inputs": [[

{"role": "system", "content": "You are a pizza professional"},

{"role": "user", "content": f"""

You have a pizza that was cut into 8 equal slices. You ate 3 slices, and your friend ate 2 slices. Here's how we can figure out how many slices are left:

1. Start with the total number of slices.

2. Subtract the number of slices you ate.

3. Then subtract the number of slices your friend ate.

4. The result is the number of slices remaining.

So, let's calculate:

"""},

]],

"parameters": {"max_new_tokens": 512, "top_p": 0.9, "temperature": 0.6}

}

response = predictor.predict(payload, custom_attributes='accept_eula=true')

print_dialog(payload, response)

System: You are a pizza professional

User:

You have a pizza that was cut into 8 equal slices. You ate 3 slices, and your friend ate 2 slices. Here's how we can figure out how many slices are left:

1. Start with the total number of slices.

2. Subtract the number of slices you ate.

3. Then subtract the number of slices your friend ate.

4. The result is the number of slices remaining.

So, let's calculate:

======================================================

Assistant: Oh man, I love pizza! Alright, let's get started on this math problem. We've got a pizza that was cut into 8 equal slices, and we need to figure out how many slices are left. Here's the plan:

Step 1: Start with the total number of slices. That's 8 slices.

Step 2: Subtract the number of slices I ate. I ate 3 slices, so let's subtract 3 from 8.

8 - 3 = 5

Step 3: Subtract the number of slices my friend ate. My friend ate 2 slices, so let's subtract 2 from 5.

5 - 2 = 3

Step 4: The result is the number of slices remaining. In this case, there are 3 slices remaining!

So, there you have it! There are 3 slices of pizza left. I hope my friend and I can agree on how to divide them up! 🍕👍

======================================================

CPU times: user 11.8 ms, sys: 1.72 ms, total: 13.5 ms

Wall time: 4.7 s

In the preceding example, Llama 2 Chat assumed the persona of a professional with domain knowledge and demonstrated the reasoning that led to its conclusion.
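The step-by-step prompt above can also be assembled programmatically, which helps when the same reasoning scaffold is reused across problems. The following is a minimal sketch: the helper name `build_cot_prompt` is hypothetical and not part of the SageMaker SDK, and the payload shape simply mirrors the one used in this post.

```python
def build_cot_prompt(problem, steps, cue="So, let's calculate:"):
    """Join a problem statement, numbered reasoning steps, and a closing cue."""
    numbered = "\n".join(f"{i}. {step}" for i, step in enumerate(steps, start=1))
    return f"{problem}\n{numbered}\n{cue}\n"


problem = (
    "You have a pizza that was cut into 8 equal slices. You ate 3 slices, "
    "and your friend ate 2 slices. Here's how we can figure out how many "
    "slices are left:"
)
steps = [
    "Start with the total number of slices.",
    "Subtract the number of slices you ate.",
    "Then subtract the number of slices your friend ate.",
    "The result is the number of slices remaining.",
]

# Same payload structure as the example above; only the user content is generated.
payload = {
    "inputs": [[
        {"role": "system", "content": "You are a pizza professional"},
        {"role": "user", "content": build_cot_prompt(problem, steps)},
    ]],
    "parameters": {"max_new_tokens": 512, "top_p": 0.9, "temperature": 0.6},
}
```

The payload can then be passed to `predictor.predict` exactly as in the preceding cell.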

Llama 2 Chat inference parameters

Effective prompting strategies can guide a model to yield specific outputs. For those seeking a finer level of control over these outputs, Llama 2 Chat introduces a comprehensive set of inference parameters:

- max_new_tokens – Defines the maximum length of the model’s output, in tokens. This doesn’t translate directly to word count, because the model’s vocabulary is made of subword tokens: a single English word might be split into more than one token.

- temperature – Affects the randomness of the output. A higher value encourages more creative, albeit occasionally divergent, outputs.

- top_p – Controls nucleus sampling, which restricts the model to the most probable tokens whose cumulative probability reaches top_p. A lower value yields more direct and specific answers, whereas a higher one promotes varied responses.

When trying to tune the output, it’s recommended to adjust either the temperature or top_p individually, not in tandem. Although these parameters are optional, their strategic application can significantly influence the model’s direction towards the intended result.
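To make the effect of temperature and top_p more concrete, the following sketch shows how these two parameters are commonly applied when sampling the next token. It is a generic illustration written with the Python standard library, not the code that runs inside the SageMaker endpoint; the function names and the toy logits are assumptions made for the example.

```python
import math
import random


def softmax_with_temperature(logits, temperature):
    """Convert logits to probabilities; a lower temperature sharpens the distribution."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def top_p_filter(probs, top_p):
    """Keep the most probable tokens until their cumulative probability reaches top_p."""
    order = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    kept, cumulative = [], 0.0
    for i in order:
        kept.append(i)
        cumulative += probs[i]
        if cumulative >= top_p:
            break
    mass = sum(probs[i] for i in kept)
    return {i: probs[i] / mass for i in kept}  # renormalize the survivors


def sample(logits, temperature=0.6, top_p=0.9, rng=random):
    """Sample one token index using temperature scaling followed by top_p filtering."""
    candidates = top_p_filter(softmax_with_temperature(logits, temperature), top_p)
    ids = list(candidates)
    weights = [candidates[i] for i in ids]
    return rng.choices(ids, weights=weights, k=1)[0]


toy_logits = [2.0, 1.0, 0.1]  # hypothetical scores for three candidate tokens
print(sample(toy_logits, rng=random.Random(0)))
```

With a very small top_p only the single most probable token survives the filter, so sampling becomes deterministic; with top_p set to 1.0 every token stays eligible and temperature alone shapes the randomness.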

Introduction to system prompts

Llama 2 Chat supports system prompts. These prompts act as contextual frameworks that guide the model’s subsequent responses. By setting the context, style, or tone ahead of a primary query, system prompts steer the model toward the desired outputs. This capability is especially useful when the aim is to maintain a certain persona throughout extended dialogues.

In our previous examples, the following part of the input sets the system context:

{"role": "system", "content": "You are a pizza professional"},

When deploying Llama 2’s chat functionalities, the input must follow the format the model was fine-tuned on to get the best results. The input is structured as follows:

- System – A prompt that gives the chatbot a character profile of your choosing. You can tailor it to your preference (like “you are a minion”). If you omit it, the model has no default profile and generates responses without any specific character or behavior guidance.

{"role": "system", "content": "You are a minion"}

- User – A message from a user.

{"role": "user", "content": "What do you think of bananas?"}

- Assistant – The output result from the model.
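For reference, the role-based messages above correspond to the plain-text template that Llama 2 Chat was fine-tuned on, which wraps instructions in `[INST]` tags and the system prompt in `<<SYS>>` tags. The sketch below renders a dialog into that template. It assumes the endpoint performs an equivalent conversion internally, so you don't need to do this yourself when sending role-based payloads; the function name `render_llama2_prompt` is hypothetical.

```python
B_INST, E_INST = "[INST]", "[/INST]"
B_SYS, E_SYS = "<<SYS>>\n", "\n<</SYS>>\n\n"


def render_llama2_prompt(dialog):
    """Render a list of role/content messages as a Llama 2 Chat prompt string."""
    messages = list(dialog)
    # The system prompt is folded into the first user message.
    if messages and messages[0]["role"] == "system":
        if len(messages) < 2 or messages[1]["role"] != "user":
            raise ValueError("a system message must be followed by a user message")
        merged = B_SYS + messages[0]["content"] + E_SYS + messages[1]["content"]
        messages = [{"role": "user", "content": merged}] + messages[2:]

    rendered = []
    for i in range(0, len(messages), 2):
        if messages[i]["role"] != "user":
            raise ValueError("expected a user message at an even position")
        turn = f"<s>{B_INST} {messages[i]['content'].strip()} {E_INST}"
        if i + 1 < len(messages):
            if messages[i + 1]["role"] != "assistant":
                raise ValueError("expected an assistant message after a user message")
            # Completed turns are closed with the end-of-sequence marker.
            turn += f" {messages[i + 1]['content'].strip()} </s>"
        rendered.append(turn)
    return "".join(rendered)


dialog = [
    {"role": "system", "content": "You are a minion"},
    {"role": "user", "content": "What do you think of bananas?"},
]
print(render_llama2_prompt(dialog))
```

The model's reply is generated after the final `[/INST]` marker, which is why the last user turn is left open.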

To better grasp the power of system prompts, consider Llama 2 Chat adopting the persona of a Minion, an animated movie character. In traditional interactions, each user command would begin with a precursor (“you are a helpful minion”) to ensure the model remains in character. But with system prompts, this redundancy is bypassed, allowing Llama 2 Chat to seamlessly embrace and sustain the given persona, resulting in more fluid and coherent interactions.

Practical demonstration

Let’s start with the following code to demonstrate:

%%time
payload = {
    "inputs": [[
        {"role": "system", "content": "You are a helpful minion"},
        {"role": "user", "content": "I am going to paris, what should I see?"},
    ]],
    "parameters": {"max_new_tokens": 512, "top_p": 0.9, "temperature": 0.6}
}
response = predictor.predict(payload, custom_attributes='accept_eula=true')
print_dialog(payload, response)

System: You are a helpful minion

User: I am going to paris, what should I see?

======================================================

Assistant: Oooh la la! *giggle* Paris, the city of love and romance! *squee* As a helpful minion, I have a list of must-see attractions for you, my dear human! *bats eyelashes*

1. The Eiffel Tower: *swoon* The iconic iron lady of Paris! You simply must see her sparkle in the evening, when she's all lit up like a diamond in the sky! *heart eyes*

2. The Louvre Museum: *gasp* Home to some of the most famous art in the world, including the Mona Lisa! *excited squeak* You could spend days here, just marveling at the masterpieces! *bouncy bouncy*

3. Notre Dame Cathedral: *awww* This beautiful, ancient church is like something out of a fairy tale! *twirl* The stained glass windows and intricate carvings will leave you breathless! *sigh*

4. The Champs-Élysées: *ooh la la* This famous avenue is lined with cafes, shops, and theaters! *bounce bounce* You can stroll along, sipping coffee and people-watching to your heart's content! *wink wink*

5. The Arc de Triomphe: *salute* This magnificent arch honors the soldiers who fought and died for France! *proudly* The views from the top are simply stunning! *look around*

6. Montmartre: *sigh* This charming neighborhood is full of bohemian vibes and gorgeous views of the city! *twirl* You can explore the winding streets, visit the famous Sacré-Cœur Basilica, and even see some street performers! *bats eyelashes*

7. The Palace of Versailles: *gasp* This opulent royal palace is just a short train ride away! *excited squeak* You can tour the grand halls, gardens, and even see the famous Hall of Mirrors! *twirl*

Ooh la la! I hope you have a simply marvelous time in Paris, my dear human! *curtsy* Don't

======================================================

CPU times: user 13.5 ms, sys: 2.24 ms, total: 15.8 ms

Wall time: 9.55 s

This interaction exemplifies the model’s proficiency in adopting the helpful Minion persona, offering users a consistent and engaging experience while reducing input repetition.
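The reduction in repetition is easiest to see across several turns: the system message is set once at the start of the dialog and every later user message is appended without restating the persona. The following is a minimal sketch under the assumption that the endpoint accepts alternating user and assistant messages after the system message; the helper names are hypothetical and the assistant reply is a placeholder, not real model output.

```python
DEFAULT_PARAMETERS = {"max_new_tokens": 512, "top_p": 0.9, "temperature": 0.6}


def start_dialog(system_prompt):
    """Create a dialog whose persona is defined once, in the system message."""
    return [{"role": "system", "content": system_prompt}]


def add_turn(dialog, role, content):
    """Return a new dialog with one more user or assistant message appended."""
    if role not in ("user", "assistant"):
        raise ValueError("role must be 'user' or 'assistant'")
    return dialog + [{"role": role, "content": content}]


def to_payload(dialog, **overrides):
    """Wrap a dialog in the payload structure used throughout this post."""
    return {"inputs": [dialog], "parameters": {**DEFAULT_PARAMETERS, **overrides}}


dialog = start_dialog("You are a helpful minion")
dialog = add_turn(dialog, "user", "I am going to paris, what should I see?")
# Placeholder for the text returned by the previous call to the endpoint.
dialog = add_turn(dialog, "assistant", "<assistant reply from the previous call>")
dialog = add_turn(dialog, "user", "Which of those is best for a rainy day?")

payload = to_payload(dialog, max_new_tokens=256)
```

Because the follow-up question never mentions the minion persona, any in-character answer to it comes from the system message alone.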

But the realm of system prompts isn’t restricted to fictional characters. Its versatility shines in more serious contexts as well. Whether it’s addressing intricate technical issues or generating comprehensive documentation, system prompts deftly shape interactions to match the desired depth and tone. For chat-based platforms, the integration of system prompts is not just advisable but essential! Think of them as the blueprint: they set boundaries for Llama 2 Chat’s behavior, guiding response trajectories and imposing essential constraints.

Professional context demonstration

In this next example, we ask the model to assume a more professional demeanor:

%%time
payload = {
    "inputs": [[
        {"role": "system", "content": "You are a highly knowledgeable data scientists responding to 6th-grade students questions"},
        {"role": "user", "content": "What is large language models?"},
    ]],
    "parameters": {"max_new_tokens": 512, "top_p": 0.9, "temperature": 0.6}
}
response = predictor.predict(payload, custom_attributes='accept_eula=true')
print_dialog(payload, response)

System: You are a highly knowledgeable data scientists responding to 6th-grade students questions

User: What is large language models?

======================================================

Assistant: Wow, you must be really curious about language models! adjusts glasses Large language models are like superheroes for words! 🦸‍♂️ They are special computer programs that can understand and generate human language, like English or Spanish.

Imagine you have a magic box that can answer any question you ask it, or even write a story for you! That's kind of like what large language models can do. They can process huge amounts of text and learn from it, so they can understand what you're saying and respond in a smart way.

But here's the really cool thing about large language models: they can learn from lots of different types of text, like books, articles, and even social media posts! 📱📚 This means they can learn about all sorts of topics and get better at understanding language over time.

So, why are large language models important? Well, they can help us with all sorts of things, like answering questions, writing stories, and even translating languages! 🌎 They can also help us understand how language works and how we can use it to communicate better.

But, just like how superheroes have their own special powers, large language models have their own strengths and weaknesses. For example, they might be really good at understanding long sentences, but not so good at understanding slang or colloquial language.

So, there you have it! Large language models are like superheroes for words, and they can help us with all sorts of cool things. Do you have any more questions about them? 🤔

======================================================

CPU times: user 960 µs, sys: 4.32 ms, total: 5.28 ms

Wall time: 6.68 s

Accuracy and controllability of the outputs are vital. System prompts give you a way to guide Llama 2 Chat closely so that it meets user demands. Consider two illustrative use cases:

- Scenario 1 – The model acts as a knowledgeable English professor, and the user asks it to extract the key elements from a given synopsis. The model’s output reads like a conversation with an English scholar steeped in classical literature:

%%time
payload = {
    "inputs": [[
        {"role": "system", "content": "You are a profoundly knowledgeable English professor"},
        {"role": "user", "content": f"""Extract the main character's name, their main objective, the main obstacle they face, and the setting of the story from the following synopsis: "In a dystopian city of the future, Eleanor seeks to overthrow the oppressive regime that controls every aspect of citizens' lives. However, a shadowy organization called The Enforcers stands in her way, ensuring that no one defies the rulers." """},
    ]],
    "parameters": {"max_new_tokens": 512, "top_p": 0.9, "temperature": 0.6}
}
response = predictor.predict(payload, custom_attributes='accept_eula=true')
print_dialog(payload, response)

System: You are a profoundly knowledgeable English professor

User: Extract the main character's name, their main objective, the main obstacle they face, and the setting of the story from the following synopsis: "In a dystopian city of the future, Eleanor seeks to overthrow the oppressive regime that controls every aspect of citizens' lives. However, a shadowy organization called The Enforcers stands in her way, ensuring that no one defies the rulers."

======================================================

Assistant: Ah, a most intriguing synopsis! Let me extract the main elements for you:

1. Main character's name: Eleanor

2. Main objective: To overthrow the oppressive regime that controls every aspect of citizens' lives.

3. Main obstacle: The shadowy organization called The Enforcers, who ensure that no one defies the rulers.

4. Setting: A dystopian city of the future.

Ah, a most compelling story! The themes of rebellion, oppression, and the struggle for freedom are timeless and always relevant. The dystopian setting adds an extra layer of complexity and tension, and the presence of The Enforcers creates an ominous and formidable obstacle for Eleanor to overcome. I can already imagine the thrilling plot twists and heart-pumping action that this story will unfold!

======================================================

CPU times: user 4.42 ms, sys: 154 µs, total: 4.58 ms

Wall time: 3.73 s

- Scenario 2 – Prioritizing precision, the user instructs the model to follow the provided directive strictly. The outcome is a more concise, direct response with less elaboration:

%%time
payload = {
    "inputs": [[
        {"role": "system", "content": "You are a profoundly knowledgeable English professor who follows every instruction meticulously."},
        {"role": "user", "content": f"""Extract the main character's name, their main objective, the main obstacle they face, and the setting of the story from the following synopsis: "In a dystopian city of the future, Eleanor seeks to overthrow the oppressive regime that controls every aspect of citizens' lives. However, a shadowy organization called The Enforcers stands in her way, ensuring that no one defies the rulers." """},
    ]],
    "parameters": {"max_new_tokens": 512, "top_p": 0.9, "temperature": 0.6}
}
response = predictor.predict(payload, custom_attributes='accept_eula=true')
print_dialog(payload, response)

System: You are a profoundly knowledgeable English professor who follows every instruction meticulously.

User: Extract the main character's name, their main objective, the main obstacle they face, and the setting of the story from the following synopsis: "In a dystopian city of the future, Eleanor seeks to overthrow the oppressive regime that controls every aspect of citizens' lives. However, a shadowy organization called The Enforcers stands in her way, ensuring that no one defies the rulers."

======================================================

Assistant: Ah, an intriguing synopsis! Let me extract the main character's name, main objective, main obstacle, and setting for you:

1. Main character's name: Eleanor

2. Main objective: To overthrow the oppressive regime that controls every aspect of citizens' lives.

3. Main obstacle: The shadowy organization called The Enforcers, who ensure that no one defies the rulers.

4. Setting: A dystopian city of the future.

Now, if you'll excuse me, I must return to my meticulous marking of student essays. The rigors of academia demand nothing less!

======================================================

CPU times: user 2.16 ms, sys: 1.74 ms, total: 3.89 ms

Wall time: 2.84 s

The distinction between these scenarios underlines the adaptive nature of system prompts. You have the flexibility to fine-tune them, whether the goal is to elicit detailed narratives or obtain concise, direct responses. Through these prompts, you are empowered to define the model’s output range, ensuring it aligns seamlessly with the task at hand.

Text summarization and in-context question answering

System prompts can also be straightforward and enforce context to answer questions. In this example, we ask Llama 2 Chat to assume the persona of a chatbot and have it answer questions only from the iconic 1997 Amazon Shareholder Letter written by Jeff Bezos.

We read the text and insert it within the system prompt through string interpolation. The complete example is shown in the accompanying notebook.

system_prompt = f"""
You are an intelligent chatbot. Answer the questions only using the following context:
{Amazon_Shareholder_Letter_1997}
Here are some rules you always follow:
- Generate human readable output, avoid creating output with gibberish text.
- Generate only the requested output, don't include any other language before or after the requested output.
- Never say thank you, that you are happy to help, that you are an AI agent, etc. Just answer directly.
- Generate professional language typically used in business documents in North America.
- Never generate offensive or foul language.
"""

user_prompt = "Give me the summary of the shareholder letter"

%%time
payload = {
    "inputs": [[
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": user_prompt},
    ]],
    "parameters": {"max_new_tokens": 512, "top_p": 0.9, "temperature": 0.6}
}
response = predictor.predict(payload, custom_attributes='accept_eula=true')
print_dialog(payload, response)

User: Give me the summary of the shareholder letter

Assistant: Sure, here's the summary of the shareholder letter:

Amazon.com's Founder and CEO, Jeffrey P. Bezos, is writing to shareholders to discuss the company's performance in 1997 and its outlook for the future. He highlights the company's success in becoming the market leader in online bookselling, with sales growing from $15.7 million in 1996 to $147.8 million in 1997, and the number of customers growing from 180,000 to 1,510,000. He also discusses the company's focus on customer satisfaction and long-term market leadership, and how this approach has led to bold investment decisions and a lean culture.

Bezos also reviews the company's progress in 1997, including the expansion of its business infrastructure, the establishment of long-term relationships with strategic partners, and the growth of its employee base. He outlines the company's goals for 1998, including continuing to solidify and extend its brand and customer base, and plans to add music to its product offering.

Overall, the letter emphasizes Amazon.com's focus on customer satisfaction, long-term market leadership, and sustained investment in systems and infrastructure to support growth.

======================================================

CPU times: user 17.9 ms, sys: 1.8 ms, total: 19.7 ms

Wall time: 8.08 s

As demonstrated, Llama 2 Chat can adhere to strict guardrails within system prompts, which allows it to answer questions from a given context in a meaningful way. This ability paves the way for techniques like Retrieval Augmented Generation (RAG), which is popular with customers. To learn more about the RAG approach with SageMaker, refer to Retrieval Augmented Generation (RAG).
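To illustrate how context-restricted answering extends toward RAG, the following toy sketch selects the passages most relevant to a question and places only those in the system prompt. It is an assumption-laden simplification: the retriever scores passages by plain word overlap, whereas production RAG systems typically use embeddings and a vector store. The function names are hypothetical, and the passages are short paraphrases of the summary shown above.

```python
import re


def tokenize(text):
    """Lowercase a string and return its set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question, passages, k=2):
    """Return the k passages sharing the most words with the question."""
    question_words = tokenize(question)
    ranked = sorted(
        passages,
        key=lambda passage: len(question_words & tokenize(passage)),
        reverse=True,
    )
    return ranked[:k]


def build_system_prompt(context_passages):
    """Insert the retrieved passages into a context-restricted system prompt."""
    context = "\n\n".join(context_passages)
    return (
        "You are an intelligent chatbot. Answer the questions only using "
        f"the following context:\n{context}\n"
    )


passages = [
    "Sales grew from $15.7 million in 1996 to $147.8 million in 1997.",
    "The number of customers grew from 180,000 to 1,510,000.",
    "The company plans to add music to its product offering in 1998.",
]
question = "How much did sales grow in 1997?"

payload = {
    "inputs": [[
        {"role": "system", "content": build_system_prompt(retrieve(question, passages, k=1))},
        {"role": "user", "content": question},
    ]],
    "parameters": {"max_new_tokens": 512, "top_p": 0.9, "temperature": 0.6},
}
```

Sending only the retrieved passages, rather than the whole document, keeps the prompt short and leaves more of the context window for the answer.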

Conclusion

Deploying Llama 2 Chat to achieve strong performance requires both technical expertise and strategic insight into its design. To fully take advantage of the model’s extensive abilities, you must understand and apply creative prompting techniques and adjust inference parameters. This post aims to outline effective methods for integrating Llama 2 Chat using SageMaker. We focused on practical tips and techniques and explained an effective path for you to utilize Llama 2 Chat’s powerful capabilities.

The following are key takeaways:

- Dynamic control with ambience – The temperature controls within Llama 2 Chat serve a pivotal role far beyond simple adjustments. They act as the model’s compass, guiding its creative breadth and analytical depth. Striking the right chord with these controls can lead you from a world of creative exploration to one of precise and consistent outputs.

- Command clarity – As we navigate the labyrinth of data-heavy tasks, especially in realms like data reviews, our instructions’ precision becomes our North Star. Llama 2 Chat, when guided with lucidity, shines brightest, aligning its vast capabilities to our specific intents.

- Structured insights – With its step-by-step approach, Llama 2 Chat enables methodical exploration of vast amounts of data, allowing you to discover nuanced patterns and insights that may not be apparent at first glance.

Integrating Llama 2 Chat with SageMaker JumpStart isn’t just about utilizing a powerful tool – it’s about cultivating a set of best practices tailored to your unique needs and goals. Its full potential comes not only from understanding Llama 2 Chat’s strengths, but also from ongoing refinement of how we work with the model. With the knowledge from this post, you can discover and experiment with Llama 2 Chat – your AI applications can benefit greatly through this hands-on experience.

Resources

- Llama 2 foundation models from Meta are now available in Amazon SageMaker JumpStart

- Fine-tune Llama 2 for text generation on Amazon SageMaker JumpStart

- Improve throughput performance of Llama 2 models using Amazon SageMaker

About the authors

Jin Tan Ruan is a Prototyping Developer within the AWS Industries Prototyping and Customer Engineering (PACE) team, specializing in NLP and generative AI. With a background in software development and nine AWS certifications, Jin brings a wealth of experience to assist AWS customers in materializing their AI/ML and generative AI visions using the AWS platform. He holds a master’s degree in Computer Science & Software Engineering from the University of Syracuse. Outside of work, Jin enjoys playing video games and immersing himself in the thrilling world of horror movies. You can find Jin on LinkedIn. Let’s connect!

Dr. Farooq Sabir is a Senior Artificial Intelligence and Machine Learning Specialist Solutions Architect at AWS. He holds PhD and MS degrees in Electrical Engineering from the University of Texas at Austin and an MS in Computer Science from Georgia Institute of Technology. He has over 15 years of work experience and also likes to teach and mentor college students. At AWS, he helps customers formulate and solve their business problems in data science, machine learning, computer vision, artificial intelligence, numerical optimization, and related domains. Based in Dallas, Texas, he and his family love to travel and go on long road trips.

Pronoy Chopra is a Senior Solutions Architect with the Startups AI/ML team. He holds a master’s degree in Electrical & Computer Engineering and is passionate about helping startups build the next generation of applications and technologies on AWS. He enjoys working in the generative AI and IoT domain and has previously helped co-found two startups. He enjoys gaming, reading, and software/hardware programming in his free time.
