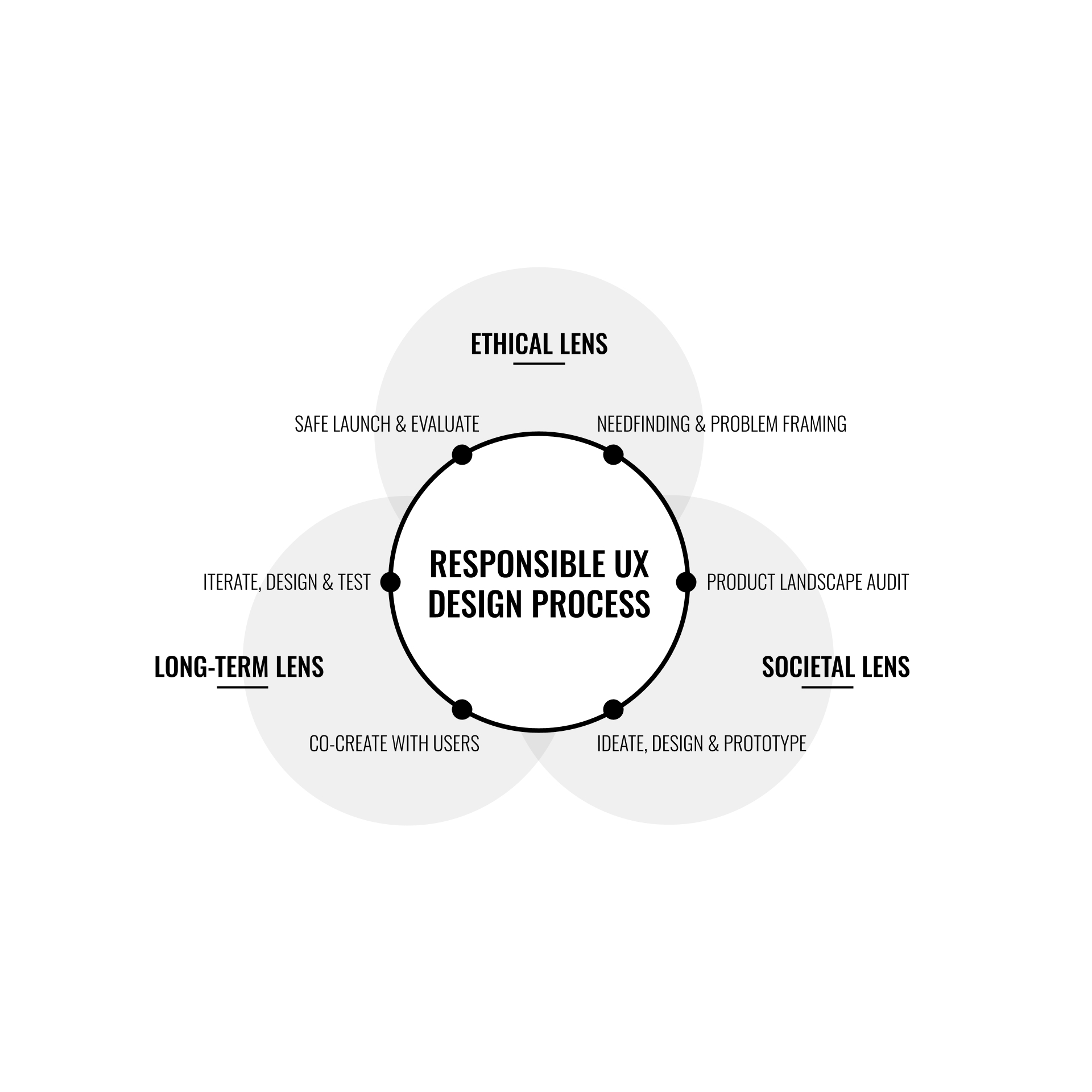

Google’s Responsible AI User Experience (Responsible AI UX) team is a product-minded team embedded within Google Research. This unique positioning requires us to apply responsible AI development practices to our user-centered user experience (UX) design process. In this post, we describe the importance of UX design and responsible AI in product development, and share a few examples of how our team’s capabilities and cross-functional collaborations have led to responsible development across Google.

First, the UX part. We are a multi-disciplinary team of product design experts: designers, engineers, researchers, and strategists who manage the user-centered UX design process from early-phase ideation and problem framing to later-phase user-interface (UI) design, prototyping and refinement. We believe that effective product development occurs when there is clear alignment between significant unmet user needs and a product’s primary value proposition, and that this alignment is reliably achieved via a thorough user-centered UX design process.

Second, the responsible AI part. Recognizing generative AI’s (GenAI) potential to significantly impact society, we embrace our role as the primary user advocate and continue to evolve our UX design process to meet the unique challenges AI poses, maximizing the benefits and minimizing the risks. As we navigate each stage of an AI-powered product design process, we place a heightened emphasis on the ethical, societal, and long-term impact of our decisions. We contribute to the ongoing development of comprehensive safety and inclusivity protocols. These protocols define design and deployment guardrails that help mitigate GenAI risks around key issues like content curation, security, privacy, model capabilities, model access, equitability, and fairness.

Responsibility in product design is also reflected in the user and societal problems we choose to address and the programs we resource. Thus, we encourage the prioritization of user problems with significant scale and severity to help maximize the positive impact of GenAI technology.


Communication across teams and disciplines is essential to responsible product design. Good product development depends on the seamless flow of information and insight from user research teams to product design and engineering teams, and vice versa. One of our team’s core objectives is to ensure that deep user insight is applied in practice to AI-powered product design decisions at Google. We do this by bridging the communication gap between the vast technological expertise of our engineers and the user and societal expertise of our academics, research scientists, and user-centered design research experts. We’ve built a multidisciplinary team with expertise in these areas, deepening our empathy for the communication needs of our audience and enabling us to better interface between our user and society experts and our technical experts. We create frameworks, guidebooks, prototypes, cheatsheets, and multimedia tools to help bring insights to life for the right people at the right time.


Facilitating responsible GenAI prototyping and development

During collaborations between Responsible AI UX, the People + AI Research (PAIR) initiative, and Labs, we identified that prototyping affords a creative opportunity to engage with large language models (LLMs), and is often the first step in GenAI product development. To address the need to introduce LLMs into the prototyping process, we explored a range of different prompting designs. Then, we went out into the field, employing various external, first-person UX design research methodologies to draw out insight and gain empathy for the user’s perspective. Through user/designer co-creation sessions, iteration, and prototyping, we were able to bring internal stakeholders, product managers, engineers, writers, sales, and marketing teams along to ensure that the user point of view was well understood and to reinforce alignment across teams.

The result of this work was MakerSuite, a generative AI platform launched at Google I/O 2023 that enables people, even those without any ML experience, to prototype creatively using LLMs. The team’s first-hand experience with users and understanding of the challenges they face allowed us to incorporate our AI Principles into the MakerSuite product design. Product features like safety filters, for example, enable users to manage outcomes, leading to easier and more responsible product development with MakerSuite.

Because of our close collaboration with product teams, we were able to adapt text-only prototyping to support multimodal interaction with Google AI Studio, an evolution of MakerSuite. Now, Google AI Studio enables developers and non-developers alike to seamlessly leverage Google’s latest Gemini model to merge multiple modality inputs, like text and image, in product explorations. Facilitating product development in this way gives us the opportunity to better use AI to identify the appropriateness of outcomes, and unlocks opportunities for developers and non-developers to play with AI sandboxes. Together with our partners, we continue to actively push this effort in the products we support.

Google AI Studio enables developers and non-developers to leverage Google Cloud infrastructure and merge multiple modality inputs in their product explorations.

Equitable speech recognition

Multiple external studies, as well as Google’s own research, have found that current speech recognition technology understands Black speakers less accurately, on average, than White speakers. As multimodal AI tools begin to rely more heavily on speech prompts, this problem will grow and continue to alienate users. To address this problem, the Responsible AI UX team is partnering with world-renowned linguists and scientists at Howard University, a prominent HBCU, to build a high-quality African-American English dataset that will improve the design of our speech technology products and make them more accessible. Called Project Elevate Black Voices, this effort will allow Howard University to share the dataset with those looking to improve speech technology while establishing a framework for responsible data collection, ensuring the data benefits Black communities. Howard University will retain the ownership and licensing of the dataset and serve as stewards for its responsible use. At Google, we’re providing funding support and collaborating closely with our partners at Howard University to ensure the success of this program.

Equitable computer vision

The Gender Shades project highlighted that computer vision systems struggled to detect people with darker skin tones, and performed particularly poorly for women with darker skin tones. This is largely because the datasets used to train these models were not inclusive of a wide range of skin tones. To address this limitation, the Responsible AI UX team has been partnering with sociologist Dr. Ellis Monk to release the Monk Skin Tone Scale (MST), a skin tone scale designed to be more inclusive of the spectrum of skin tones around the world. It provides a tool to assess the inclusivity of datasets and model performance across an inclusive range of skin tones, resulting in features and products that work better for everyone.

We have integrated MST into a range of Google products, such as Search, Google Photos, and others. We also open sourced MST, published our research, described our annotation practices, and shared an example dataset to encourage others to easily integrate it into their products. The Responsible AI UX team continues to collaborate with Dr. Monk, utilizing the MST across multiple product applications and continuing to do international research to ensure that it is globally inclusive.
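To make the idea of assessing datasets and models with a skin tone scale more concrete, here is a minimal sketch in Python. It is an illustration only, not the tooling our team uses: the function name `audit_by_tone` and the record format (a pair of an annotated tone and whether the model's prediction was correct) are assumptions made for this example. The only detail taken from the scale itself is that MST defines 10 tones.

```python
from collections import defaultdict

# The Monk Skin Tone Scale defines 10 tones, numbered here 1 through 10.
MST_TONES = range(1, 11)


def audit_by_tone(records):
    """Summarize representation and accuracy for each MST tone.

    `records` is a hypothetical input format: an iterable of
    (mst_tone, is_correct) pairs, where `mst_tone` is the annotated tone
    for an example and `is_correct` says whether the model got it right.
    """
    totals = defaultdict(int)
    correct = defaultdict(int)
    for tone, is_correct in records:
        if tone not in MST_TONES:
            raise ValueError(f"tone must be between 1 and 10, got {tone!r}")
        totals[tone] += 1
        correct[tone] += int(bool(is_correct))

    n = sum(totals.values())
    report = {}
    for tone in MST_TONES:
        count = totals[tone]
        report[tone] = {
            # Fraction of the dataset annotated with this tone.
            "share": count / n if n else 0.0,
            # Accuracy is undefined (None) when a tone has no examples.
            "accuracy": correct[tone] / count if count else None,
        }
    return report


if __name__ == "__main__":
    sample = [(1, True), (1, False), (10, True), (10, True)]
    for tone, stats in audit_by_tone(sample).items():
        print(tone, stats)
```

A tone with a very small share, or with accuracy well below the others, is a signal to collect more data or investigate the model for that part of the scale. Reporting `None` rather than zero for missing tones keeps "no data" distinct from "always wrong".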

Consulting & guidance

As teams across Google continue to develop products that leverage the capabilities of GenAI models, our team recognizes that the challenges they face are varied and that market competition is significant. To support teams, we develop actionable assets to facilitate a more streamlined and responsible product design process that considers available resources. We act as a product-focused design consultancy, identifying ways to scale services, share expertise, and apply our design principles more broadly. Our goal is to help all product teams at Google connect significant unmet user needs with technology benefits via great responsible product design.

One way we have been doing this is with the creation of the People + AI Guidebook, an evolving summative resource of many of the responsible design lessons we’ve learned and recommendations we’ve made for internal and external stakeholders. With its forthcoming, rolling updates focusing specifically on how to best design and consider user needs with GenAI, we hope that our internal teams, external stakeholders, and larger community will have useful and actionable guidance at the most critical milestones in the product development journey.

The People + AI Guidebook has six chapters, designed to cover different aspects of the product life cycle.

If you are interested in reading more about Responsible AI UX and how we are specifically thinking about designing responsibly with Generative AI, please check out this Q&A piece.

Acknowledgements

Shout out to the Responsible AI UX team members: Aaron Donsbach, Alejandra Molina, Courtney Heldreth, Diana Akrong, Ellis Monk, Femi Olanubi, Hope Neveux, Kafayat Abdul, Key Lee, Mahima Pushkarna, Sally Limb, Sarah Post, Sures Kumar Thoddu Srinivasan, Tesh Goyal, Ursula Lauriston, and Zion Mengesha. Special thanks to Michelle Cohn for her contributions to this work.
