Interest in new AI applications reached a fever pitch last year as business leaders began exploring AI pilot programs. This year, they’re focused on strategically implementing these programs to create new value and sharpen their competitive advantage.

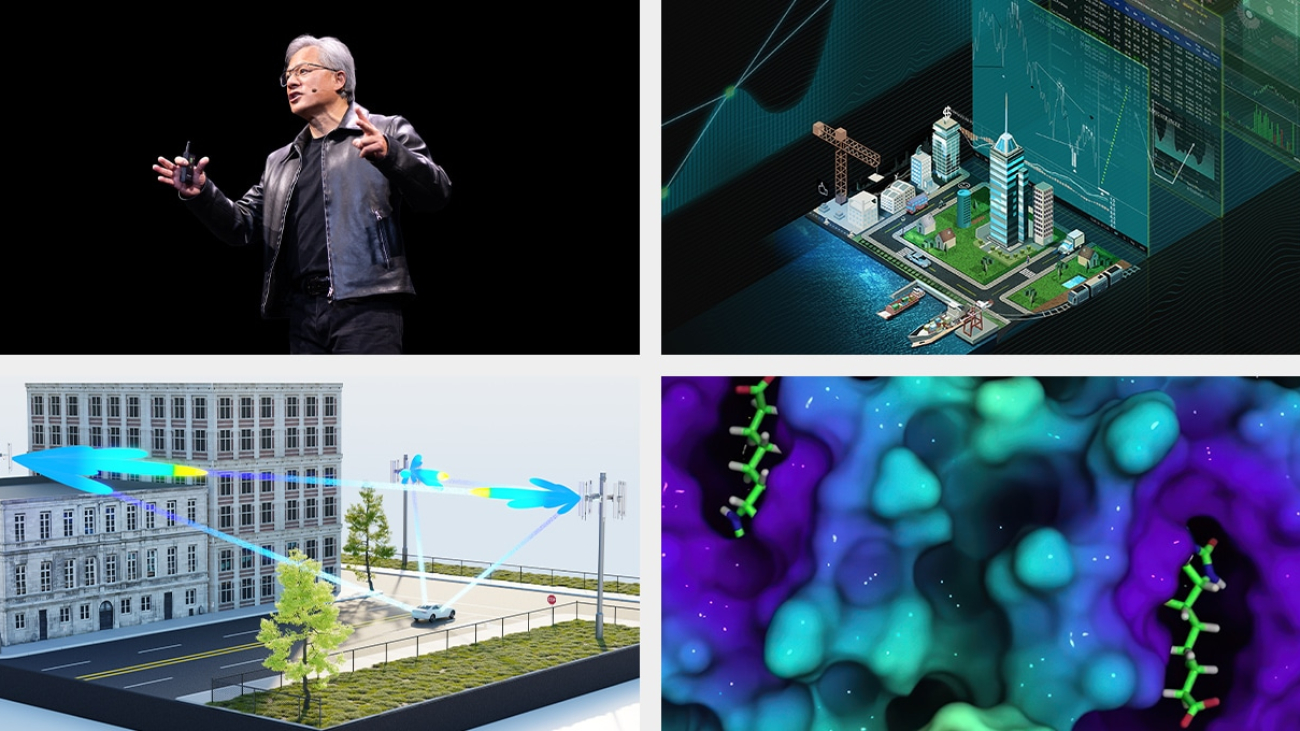

GTC, NVIDIA’s conference on AI and accelerated computing, set for March 18-21 at the San Jose Convention Center, will feature leaders across a broad swath of industries discussing how they’re charting the path to AI-driven innovation.

Execs from Bentley Systems, Lowe’s, Siemens and Verizon are among those sharing their companies’ AI journeys.

Don’t miss NVIDIA founder and CEO Jensen Huang’s GTC keynote on Monday, March 18, at 1 p.m. PT.

AI Takes Center Stage in Enterprise Technology Priorities

Nearly three-quarters of C-suite executives plan to increase their company’s tech investments this year, according to a BCG survey, and 89% rank AI and generative AI among their top three priorities. More than half expect AI to deliver cost savings, primarily through productivity gains, improved customer service and IT efficiencies.

However, challenges to driving value with AI remain, including reskilling workers, prioritizing the right AI use cases and developing a strategy to implement responsible AI.

Join us in person or online to learn how industry leaders are overcoming these challenges to thrive with AI.

Here’s a preview of top industry sessions:

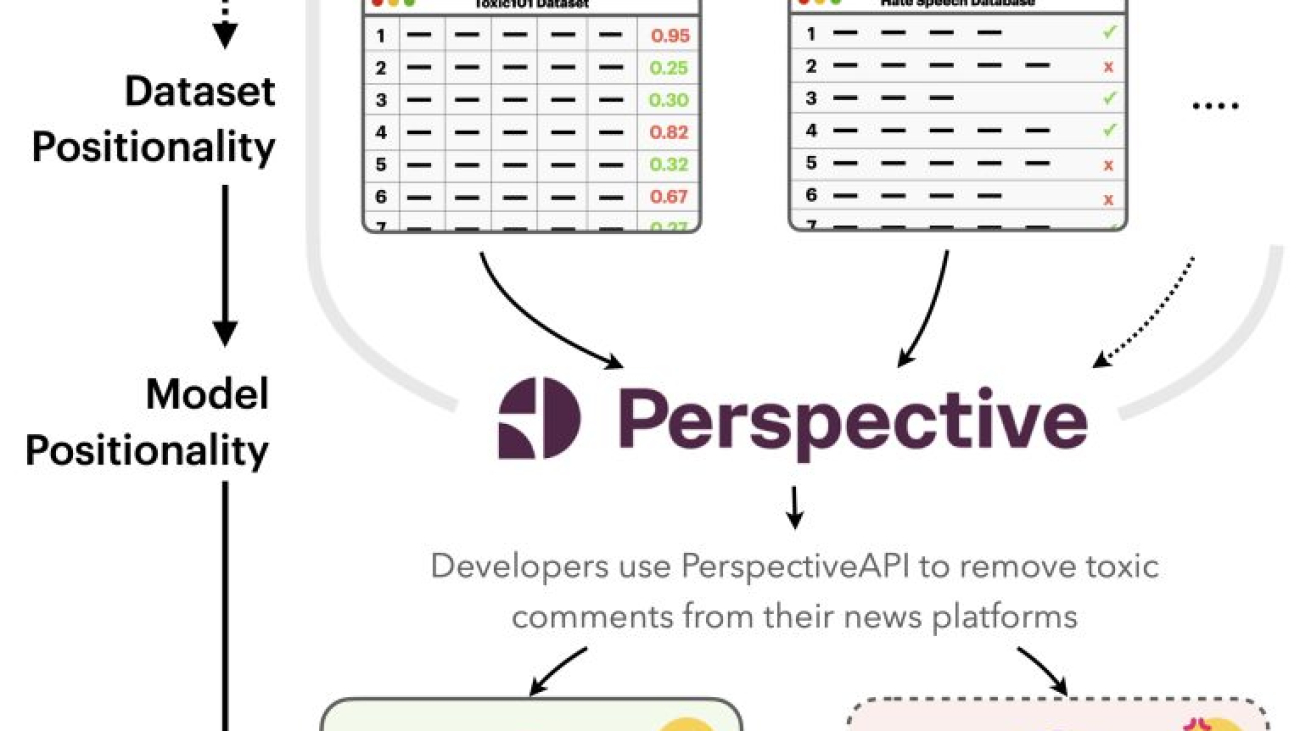

Financial Services

Navigating the Opportunity for Generative AI in Financial Services, featuring speakers from NVIDIA, Mastercard, Capital One and Goldman Sachs.

Enterprise AI in Banking: How One Leader Is Investing in “AI First,” featuring Alexandra V. Mousavizadeh, CEO of Evident, and Chintan Mehta, chief information officer and head of digital technology and innovation at Wells Fargo.

How PayPal Reduced Cloud Costs by up to 70% With Spark RAPIDS, featuring Illay Chen, software engineer at PayPal.

Public Sector

Generative AI Adoption and Operational Challenges in Government, featuring speakers from Microsoft, NVIDIA and the U.S. Army.

How to Apply Generative AI to Improve Cybersecurity, featuring Bartley Richardson, director of cybersecurity engineering at NVIDIA.

Healthcare

Healthcare Is Adopting Generative AI, Becoming One of the Largest Tech Industries, featuring Kimberly Powell, vice president of healthcare and life sciences at NVIDIA.

The Role of Generative AI in Modern Medicine, featuring speakers from ARK Investment Management, NVIDIA, Microsoft and Scripps Research.

How Artificial Intelligence Is Powering the Future of Biomedicine, featuring Priscilla Chan, cofounder and co-CEO of the Chan Zuckerberg Initiative, and Mona Flores, global head of medical AI at NVIDIA.

Retail and Consumer Packaged Goods

Augmented Marketing in Beauty With Generative AI, featuring Asmita Dubey, chief digital and marketing officer at L’Oréal.

AI and the Radical Transformation of Marketing, featuring Stephan Pretorius, chief technology officer at WPP.

How Lowe’s Is Driving Innovation and Agility With AI, featuring Azita Martin, vice president of artificial intelligence for retail and consumer packaged goods at NVIDIA, and Seemantini Godbole, executive vice president and chief digital and information officer at Lowe’s.

Telecommunications

Special Address: Three Ways Artificial Intelligence Is Transforming Telecommunications, featuring Ronnie Vasishta, senior vice president of telecom at NVIDIA.

Generative AI as an Innovative Accelerator in Telcos, featuring Asif Hasan, cofounder of Quantiphi; Lilach Ilan, global head of business development, telco operations at NVIDIA; and Chris Halton, vice president of product strategy and innovation at Verizon.

How Telcos Are Enabling National AI Infrastructure and Platforms, featuring speakers from Indosat, NVIDIA, Singtel and Telconet.

Manufacturing

Accelerating Aerodynamics Analysis at Mercedes-Benz, featuring Liam McManus, technical product manager at Siemens; Erich Jehle-Graf of Mercedes-Benz; and Ian Pegler, global business development, computer-aided design at NVIDIA.

Omniverse-Based Fab Digital Twin Platform for Semiconductor Industry, featuring Seokjin Youn, corporate vice president and head of the management information systems team at Samsung Electronics.

Digitalizing Global Manufacturing Supply Chains With Digital Twins, Powered by OpenUSD, featuring Kirk Fleischhauer, senior vice president at Foxconn.

Automotive

Applying AI & LLMs to Transform the Luxury Automotive Experience, featuring Chrissie Kemp, chief data and digital product officer at JLR (Jaguar Land Rover).

Accelerating Automotive Workflows With Large Language Models, featuring Bryan Goodman, director of artificial intelligence at Ford Motor Co.

How LLMs and Generative AI Will Enhance the Way We Experience Self-Driving Cars, featuring Alex Kendall, cofounder and CEO of Wayve.

Robotics

Robotics and the Role of AI: Past, Present and Future, featuring Marc Raibert, executive director at The AI Institute, and Dieter Fox, senior director of robotics research at NVIDIA.

Breathing Life into Disney’s Robotic Characters With Deep Reinforcement Learning, featuring Moritz Bächer, associate lab director of robotics at Disney Research.

Media and Entertainment

Unlocking Creative Potential: The Synergy of AI and Human Creativity, featuring Andrea Gagliano, senior director of data science, AI/ML at Getty Images.

Beyond the Screen: Unraveling the Impact of AI in the Film Industry, featuring Nikola Todorovic, cofounder and CEO at Wonder Dynamics; Chris Jacquemin, head of digital strategy at WME; and Sanja Fidler, vice president of AI research at NVIDIA.

Revolutionizing Fan Engagement: Unleashing the Power of AI in Software-Defined Production, featuring Lewis Smithingham, senior vice president of innovation and creative solutions at Media.Monks.

Energy

Panel: Building a Lower-Carbon Future With HPC and AI in Energy, featuring speakers from NVIDIA, Shell, ExxonMobil, Schlumberger and Petrobras.

The Increasing Complexity of the Electric Grid Demands Edge Computing, featuring Marissa Hummon, chief technology officer at Utilidata.

Browse a curated list of GTC sessions for business leaders of every technical level and area of interest.