See the CHANGELOG for the latest features and fixes.

You’ve likely experienced the challenge of taking notes during a meeting while trying to pay attention to the conversation. You’ve probably also experienced the need to quickly fact-check something that’s been said, or look up information to answer a question that’s just been asked in the call. Or maybe you have a team member who always joins meetings late and expects you to send them a quick summary over chat to catch them up.

Then there are the times that others are talking in a language that’s not your first language, and you’d love to have a live translation of what people are saying to make sure you understand correctly.

And after the call is over, you usually want to capture a summary for your records, or to send to the participants, with a list of all the action items, owners, and due dates.

All of this, and more, is now possible with our newest sample solution, Live Meeting Assistant (LMA).

Check out the following demo to see how it works.

In this post, we show you how to use LMA with Amazon Transcribe, Amazon Bedrock, and Knowledge Bases for Amazon Bedrock.

Solution overview

The LMA sample solution captures speaker audio and metadata from your browser-based meeting app (as of this writing, Zoom and Chime are supported), or audio only from any other browser-based meeting app, softphone, or audio source. It uses Amazon Transcribe for speech to text, Knowledge Bases for Amazon Bedrock for contextual queries against your company’s documents and knowledge sources, and Amazon Bedrock models for customizable transcription insights and summaries.

Everything you need is provided as open source in our GitHub repo. It’s straightforward to deploy in your AWS account. When you’re done, you’ll wonder how you ever managed without it!

The following are some of the things LMA can do:

- Live transcription with speaker attribution – LMA is powered by Amazon Transcribe ASR models for low-latency, high-accuracy speech to text. You can teach it brand names and domain-specific terminology if needed, using custom vocabulary and custom language model features in Amazon Transcribe.

- Live translation – It uses Amazon Translate to optionally show each segment of the conversation translated into your language of choice, from a selection of 75 languages.

- Context-aware meeting assistant – It uses Knowledge Bases for Amazon Bedrock to provide answers from your trusted sources, using the live transcript as context for fact-checking and follow-up questions. To activate the assistant, just say “Okay, Assistant,” choose the ASK ASSISTANT! button, or enter your own question in the UI.

- On-demand summaries of the meeting – With the click of a button on the UI, you can generate a summary, which is useful when someone joins late and needs to get caught up. The summaries are generated from the transcript by Amazon Bedrock. LMA also provides options for identifying the current meeting topic, and for generating a list of action items with owners and due dates. You can also create your own custom prompts and corresponding options.

- Automated summary and insights – When the meeting has ended, LMA automatically runs a set of large language model (LLM) prompts on Amazon Bedrock to summarize the meeting transcript and extract insights. You can customize these prompts as well.

- Meeting recording – The audio is (optionally) stored for you, so you can replay important sections of the meeting later.

- Inventory list of meetings – LMA keeps track of all your meetings in a searchable list.

- Browser extension captures audio and meeting metadata from popular meeting apps – The browser extension captures meeting metadata (the meeting title and names of active speakers) and audio from you (your microphone) and others (from the meeting browser tab). As of this writing, LMA supports Chrome for the browser extension, and Zoom and Chime for meeting apps (with Teams and WebEx coming soon). Standalone meeting apps don’t work with LMA; instead, launch your meetings in the browser.

You are responsible for complying with legal, corporate, and ethical restrictions that apply to recording meetings and calls. Do not use this solution to stream, record, or transcribe calls if otherwise prohibited.

Prerequisites

You need to have an AWS account and an AWS Identity and Access Management (IAM) role and user with permissions to create and manage the necessary resources and components for this application. If you don’t have an AWS account, see How do I create and activate a new Amazon Web Services account?

You also need an existing knowledge base in Amazon Bedrock. If you haven’t set one up yet, see Create a knowledge base. Populate your knowledge base with content to power LMA’s context-aware meeting assistant.

Finally, LMA uses Amazon Bedrock LLMs for its meeting summarization features. Before proceeding, if you have not previously done so, you must request access to the following Amazon Bedrock models:

- Titan Embeddings G1 – Text

- Anthropic: All Claude models

Deploy the solution using AWS CloudFormation

We’ve provided pre-built AWS CloudFormation templates that deploy everything you need in your AWS account.

If you’re a developer and you want to build, deploy, or publish the solution from code, refer to the Developer README.

Complete the following steps to launch the CloudFormation stack:

- Log in to the AWS Management Console.

- Choose Launch Stack for your desired AWS Region to open the AWS CloudFormation console and create a new stack.

| Region | Launch Stack |
|---|---|
| US East (N. Virginia) | |
| US West (Oregon) | |

- For Stack name, use the default value, LMA.

- For Admin Email Address, use a valid email address—your temporary password is emailed to this address during the deployment.

- For Authorized Account Email Domain, use the domain name part of your corporate email address to allow users with email addresses in the same domain to create their own new UI accounts, or leave blank to prevent users from directly creating their own accounts. You can enter multiple domains as a comma-separated list.

- For MeetingAssistService, choose BEDROCK_KNOWLEDGE_BASE (the only available option as of this writing).

- For Meeting Assist Bedrock Knowledge Base Id (existing), enter your existing knowledge base ID (for example, JSXXXXX3D8). You can copy it from the Amazon Bedrock console.

- For all other parameters, use the default values.

You can customize these settings later by updating the stack parameters, for example to add your own AWS Lambda functions, use custom vocabularies and language models to improve accuracy, or enable personally identifiable information (PII) redaction.

- Select the acknowledgement check boxes, then choose Create stack.
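To make the Authorized Account Email Domain behavior concrete, here is a minimal sketch of the check it implies: a user can sign up only when the domain part of their email address matches one entry in the comma-separated list, and a blank value allows no self sign-up. The function name is hypothetical, and this is not LMA's actual Amazon Cognito configuration or code. It also assumes exact domain matches (subdomains are not accepted).

```python
def is_signup_allowed(email: str, authorized_domains: str) -> bool:
    """Return True if the email's domain appears in a comma-separated domain list.

    Hypothetical helper that mirrors the documented parameter behavior;
    it is not LMA's implementation.
    """
    # Normalize the configured list: split on commas, trim spaces, lowercase.
    domains = {d.strip().lower() for d in authorized_domains.split(",") if d.strip()}
    if not domains:
        # A blank parameter means users cannot create their own accounts.
        return False
    # Everything after the last "@" is treated as the domain part.
    _, sep, domain = email.rpartition("@")
    if not sep:
        return False
    return domain.lower() in domains


print(is_signup_allowed("jane@example.com", "example.com, example.org"))  # True
print(is_signup_allowed("jane@other.net", "example.com, example.org"))    # False
print(is_signup_allowed("jane@example.com", ""))                          # False
```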

The main CloudFormation stack uses nested stacks to create the following resources in your AWS account:

- Amazon Simple Storage Service (Amazon S3) buckets to hold build artifacts and call recordings

- An AWS Fargate task, behind an Application Load Balancer, that runs a WebSocket server to consume stereo audio streams, relay them to Amazon Transcribe, publish transcription segments to Amazon Kinesis Data Streams, and create and store stereo call recordings

- A Kinesis data stream to relay call events and transcription segments to the enrichment processing function

- LMA resources, including the QnABot on AWS solution stack, which interacts with Amazon OpenSearch Service and Amazon Bedrock

- The AWS AppSync API, which provides a GraphQL endpoint to support queries and real-time updates

- Website components, including an S3 bucket, Amazon CloudFront distribution, and Amazon Cognito user pool

- A downloadable preconfigured browser extension application for Chrome browsers

- Other supporting resources, including IAM roles and policies (using least privilege best practices), Amazon Virtual Private Cloud (Amazon VPC) resources, Amazon EventBridge event rules, and Amazon CloudWatch log groups.

The stacks take about 35–40 minutes to deploy. The main stack status shows CREATE_COMPLETE when everything is deployed.

Set your password

After you deploy the stack, open the LMA web user interface and set your password by completing the following steps:

- Open the email you received, at the email address you provided, with the subject “Welcome to Live Meeting Assistant!”

- Open your web browser to the URL shown in the email. You’re directed to the login page.

- The email contains a generated temporary password that you use to log in and create your own password. Your user name is your email address.

- Set a new password.

Your new password must have a length of at least eight characters, and contain uppercase and lowercase characters, plus numbers and special characters.

- Follow the directions to verify your email address, or choose Skip to do it later.

You’re now logged in to LMA.
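If you want to check a candidate password against the rules listed above before you submit it, the following sketch encodes them: at least eight characters, with uppercase, lowercase, numeric, and special characters. It's a hypothetical helper, not part of LMA. Amazon Cognito enforces the real policy, and its list of accepted special characters may differ from the simple "not a letter, digit, or space" test assumed here.

```python
def meets_lma_password_rules(password: str) -> bool:
    """Check the password rules described in this post (hypothetical helper)."""
    checks = [
        len(password) >= 8,                    # minimum length
        any(c.isupper() for c in password),    # at least one uppercase letter
        any(c.islower() for c in password),    # at least one lowercase letter
        any(c.isdigit() for c in password),    # at least one number
        # Assumption: any non-alphanumeric, non-space character counts as special.
        any(not c.isalnum() and not c.isspace() for c in password),
    ]
    return all(checks)


print(meets_lma_password_rules("Passw0rd!"))   # True
print(meets_lma_password_rules("Password12"))  # False (no special character)
```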

You also received a similar email with the subject “QnABot Signup Verification Code.” This email contains a generated temporary password that you use to log in and create your own password in the QnABot designer. You use QnABot designer only if you want to customize LMA options and prompts. Your username for QnABot is Admin. You can set your permanent QnABot Admin password now, or keep this email safe in case you want to customize things later.

Download and install the Chrome browser extension

For the best meeting streaming experience, install the LMA browser plugin (currently available for Chrome):

- Choose Download Chrome Extension to download the browser extension .zip file (lma-chrome-extension.zip).

- Choose (right-click) and expand the .zip file (lma-chrome-extension.zip) to create a local folder named lma-chrome-extension.

- Open Chrome and enter the link chrome://extensions into the address bar.

- Enable Developer mode.

- Choose Load unpacked, navigate to the lma-chrome-extension folder (which you unzipped from the download), and choose Select. This loads your extension.

- Pin the new LMA extension to the browser toolbar for easy access; you will use it often to stream your meetings!

Start using LMA

LMA provides two streaming options:

- Chrome browser extension – Use this to stream audio and speaker metadata from your meeting browser app. It currently works with Zoom and Chime, but we hope to add more meeting apps.

- LMA Stream Audio tab – Use this to stream audio from your microphone and any Chrome browser-based meeting app, softphone, or audio application.

We show you how to use both options in the following sections.

Use the Chrome browser extension to stream a Zoom call

Complete the following steps to use the browser extension:

- Open the LMA extension and log in with your LMA credentials.

- Join or start a Zoom meeting in your web browser (do not use the separate Zoom client).

If you already have the Zoom meeting page loaded, reload it.

The LMA extension automatically detects that Zoom is running in the browser tab, and populates your name and the meeting name.

- Tell others on the call that you are about to start recording the call using LMA and obtain their permission. Do not proceed if participants object.

- Choose Start Listening.

- Read and accept the disclaimer, and choose Allow to share the browser tab.

The LMA extension automatically detects and displays the active speaker on the call. If you are alone in the meeting, invite some friends to join, and observe that the names they used to join the call are displayed in the extension when they speak, and are attributed to their words in the LMA transcript.

- Choose Open in LMA to see your live transcript in a new tab.

- Choose your preferred transcript language, and interact with the meeting assistant using the wake phrase “OK Assistant!” or the Meeting Assist Bot pane.

The ASK ASSISTANT button asks the meeting assistant service (Amazon Bedrock knowledge base) to suggest a good response based on the transcript of the recent interactions in the meeting. Your mileage may vary, so experiment!

- When you are done, choose Stop Streaming to end the meeting in LMA.

Within a few seconds, the automated end-of-meeting summaries appear, and the audio recording becomes available. You can continue to use the bot after the call has ended.
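The wake phrase used in the steps above is spotted in the live transcript. This post doesn't describe LMA's actual matching logic, so the following is only a minimal, hypothetical sketch of how a transcript segment could be checked for variants such as "OK Assistant" and "Okay, Assistant", with the text after the phrase extracted as the question. The pattern and function name are illustrative assumptions.

```python
import re
from typing import Optional

# Hypothetical pattern: "OK" or "Okay", a comma and/or spaces, then "Assistant",
# followed by optional punctuation and whitespace.
WAKE_PHRASE = re.compile(r"\b(?:ok|okay)[,\s]+assistant\b[!.,?]*\s*", re.IGNORECASE)


def extract_assistant_request(segment_text: str) -> Optional[str]:
    """Return the text that follows the wake phrase, or None if it isn't present.

    An empty string means the phrase was spoken with no question after it; an
    assistant could then fall back to the recent transcript as its context.
    """
    match = WAKE_PHRASE.search(segment_text)
    if match is None:
        return None
    return segment_text[match.end():].strip()


print(extract_assistant_request("OK Assistant, what is our refund policy?"))
# what is our refund policy?
```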

Use the LMA UI Stream Audio tab to stream from your microphone and any browser-based audio application

The browser extension is the most convenient way to stream metadata and audio from supported meeting web apps. However, you can also use LMA to stream just the audio from any browser-based softphone, meeting app, or other audio source playing in your Chrome browser, using the convenient Stream Audio tab that is built into the LMA UI.

- Open any audio source in a browser tab.

For example, this could be a softphone (such as Google Voice), another meeting app, or for demo purposes, you can simply play a local audio recording or a YouTube video in your browser to emulate another meeting participant. If you just want to try it, open the following YouTube video in a new tab.

- In the LMA App UI, choose Stream Audio (no extension) to open the Stream Audio tab.

- For Meeting ID, enter a meeting ID.

- For Name, enter a name for yourself (applied to audio from your microphone).

- For Participant Name(s), enter the names of the participants (applied to the incoming audio source).

- Choose Start Streaming.

- Choose the browser tab you opened earlier, and choose Allow to share.

- Choose the LMA UI tab again to view your new meeting ID listed, showing the meeting as In Progress.

- Choose the meeting ID to open the details page, and watch the transcript of the incoming audio, attributed to the participant names that you entered. If you speak, you’ll see the transcription of your own voice.

Use the Stream Audio feature to stream from any softphone app, meeting app, or any other streaming audio playing in the browser, along with your own audio captured from your selected microphone. Always obtain permission from others before recording them using LMA, or any other recording application.

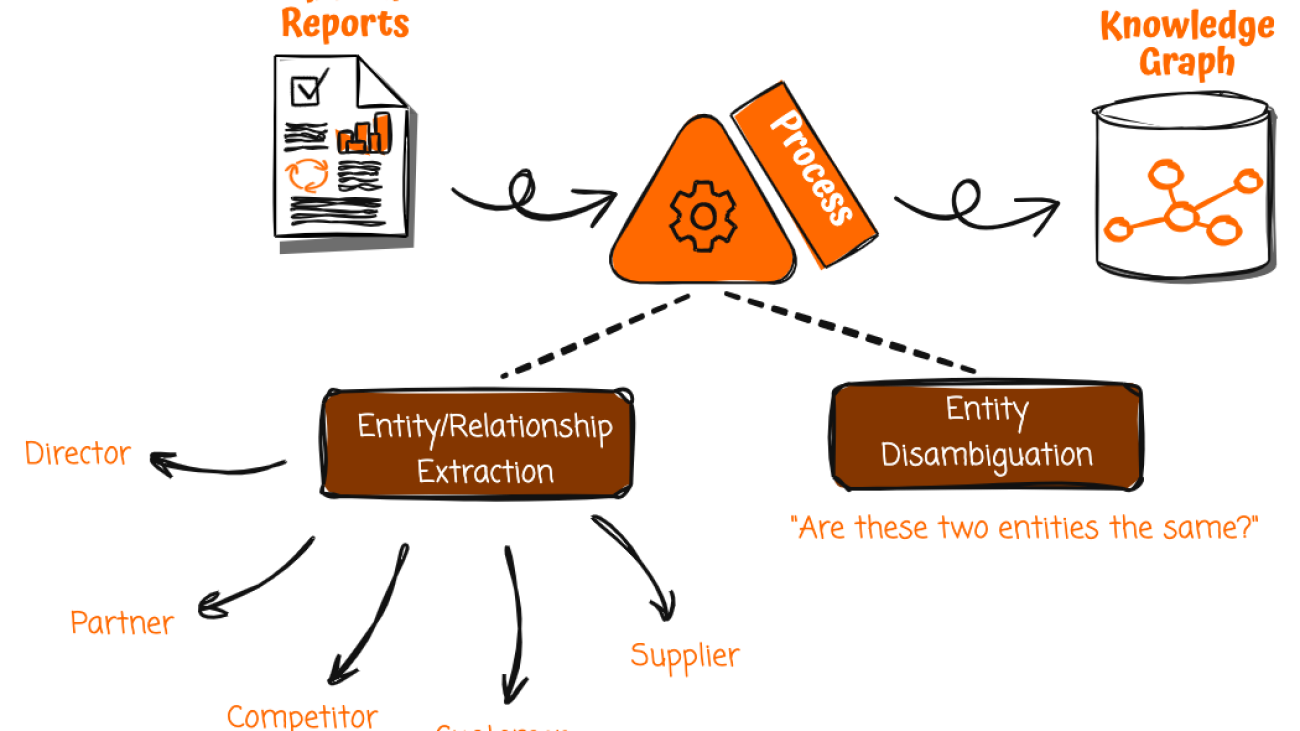

Processing flow overview

How did LMA transcribe and analyze your meeting? Let’s look at how it works. The following diagram shows the main architectural components and how they fit together at a high level.

The LMA user joins a meeting in their browser, enables the LMA browser extension, and authenticates using their LMA credentials. If the meeting app (for example, Zoom.us) is supported by the LMA extension, the user’s name, meeting name, and active speaker names are automatically detected by the extension. If the meeting app is not supported by the extension, then the LMA user can manually enter their name and the meeting topic—active speakers’ names will not be detected.

After getting permission from other participants, the LMA user chooses Start Listening on the LMA extension pane. A secure WebSocket connection is established to the preconfigured LMA stack WebSocket URL, and the user’s authentication token is validated. The LMA browser extension sends a START message to the WebSocket containing the meeting metadata (name, topic, and so on), and starts streaming two-channel audio from the user’s microphone and the incoming audio channel containing the voices of the other meeting participants. The extension monitors the meeting app to detect active speaker changes during the call, and sends that metadata to the WebSocket, enabling LMA to label speech segments with the speaker’s name.
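Because active speaker changes arrive as separate metadata events, each transcribed segment from the participants' channel has to be matched to whoever was speaking when it started (audio from the microphone channel belongs to the LMA user). One way to do that is a binary search over timestamped speaker-change events, sketched below. The event shape, the seconds-since-start timestamps, and the default name are assumptions for illustration; LMA's actual message format isn't described in this post.

```python
import bisect
from dataclasses import dataclass
from typing import List


@dataclass
class SpeakerChange:
    """Hypothetical speaker-change event; time is seconds since the meeting started."""
    time: float
    speaker: str


class SpeakerTimeline:
    """Tracks active-speaker changes and answers "who was speaking at time t?"."""

    def __init__(self, default_speaker: str = "Other participant") -> None:
        self._times: List[float] = []
        self._speakers: List[str] = []
        self._default = default_speaker  # used before any change event arrives

    def add(self, change: SpeakerChange) -> None:
        # Keep events sorted by time even if they arrive slightly out of order.
        index = bisect.bisect_right(self._times, change.time)
        self._times.insert(index, change.time)
        self._speakers.insert(index, change.speaker)

    def speaker_at(self, time: float) -> str:
        # The active speaker is the one from the last change at or before `time`.
        index = bisect.bisect_right(self._times, time) - 1
        return self._speakers[index] if index >= 0 else self._default


timeline = SpeakerTimeline()
timeline.add(SpeakerChange(0.0, "Ana"))
timeline.add(SpeakerChange(12.5, "Raj"))
print(timeline.speaker_at(3.2))   # Ana
print(timeline.speaker_at(12.5))  # Raj
```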

The WebSocket server running in Fargate consumes the real-time two-channel audio fragments from the incoming WebSocket stream. The audio is streamed to Amazon Transcribe, and the transcription results are written in real time to Kinesis Data Streams.

Each meeting processing session runs until the user chooses Stop Listening in the LMA extension pane, or ends the meeting and closes the tab. At the end of the call, the WebSocket server creates a stereo recording file in Amazon S3 (if recording was enabled when the stack was deployed).
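A stereo recording keeps each audio source on its own channel: the user's microphone on one, and the meeting tab's audio on the other. As a minimal sketch, assuming both sources are 16-bit little-endian mono PCM at the same sample rate (this post doesn't specify LMA's audio encoding), the two channels can be interleaved frame by frame and written to a WAV file with Python's standard wave module. The channel assignment and the 16 kHz default are also assumptions.

```python
import array
import io
import sys
import wave


def _pcm16_samples(data: bytes) -> array.array:
    """Decode little-endian 16-bit PCM bytes into signed samples."""
    samples = array.array("h", data)
    if sys.byteorder == "big":
        samples.byteswap()  # WAV PCM data is little-endian
    return samples


def interleave_pcm16(mic: bytes, meeting: bytes) -> bytes:
    """Interleave two mono buffers into one stereo buffer (L=mic, R=meeting).

    The shorter buffer is padded with silence so both channels line up.
    """
    left, right = _pcm16_samples(mic), _pcm16_samples(meeting)
    frames = max(len(left), len(right))
    left.extend([0] * (frames - len(left)))
    right.extend([0] * (frames - len(right)))
    stereo = array.array("h", [0]) * (2 * frames)
    stereo[0::2] = left   # even positions: channel 0
    stereo[1::2] = right  # odd positions: channel 1
    if sys.byteorder == "big":
        stereo.byteswap()
    return stereo.tobytes()


def to_wav_bytes(stereo_pcm: bytes, sample_rate: int = 16000) -> bytes:
    """Wrap interleaved 16-bit stereo PCM in a WAV container, in memory."""
    buffer = io.BytesIO()
    with wave.open(buffer, "wb") as wav:
        wav.setnchannels(2)
        wav.setsampwidth(2)  # 2 bytes = 16-bit samples
        wav.setframerate(sample_rate)
        wav.writeframes(stereo_pcm)
    return buffer.getvalue()
```

The resulting bytes could then be uploaded to Amazon S3 as the recording object; that upload is omitted here.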

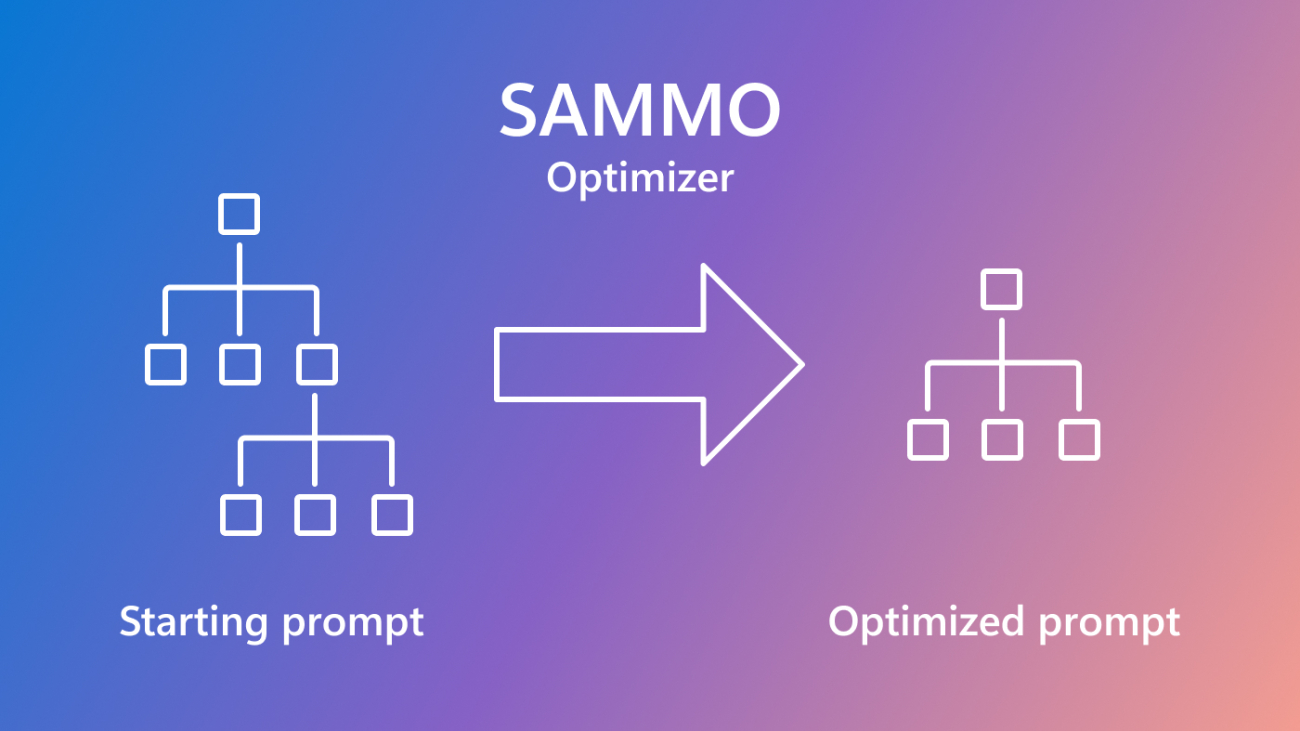

A Lambda function called the Call Event Processor, fed by Kinesis Data Streams, processes and optionally enriches meeting metadata and transcription segments. The Call Event Processor integrates with the meeting assist services, which are powered by Amazon Lex, Knowledge Bases for Amazon Bedrock, and Amazon Bedrock LLMs, using the open source QnABot on AWS solution for answers based on FAQs and as an orchestrator that routes requests to the appropriate AI service. The Call Event Processor also invokes the Transcript Summarization Lambda function when the call ends, to generate a summary of the call from the full transcript.
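The Transcript Summarization Lambda function works from the full transcript. LMA's own prompts are configurable and aren't reproduced in this post, so the following hypothetical sketch shows only the general shape of that step: render the speaker-attributed segments as plain text and substitute them into a prompt template. The template wording, the {transcript} placeholder, and the segment field names are illustrative assumptions.

```python
from typing import Iterable, Mapping

# Hypothetical template; LMA's real prompts are configurable and may differ.
SUMMARY_PROMPT = (
    "Summarize the following meeting transcript, then list each action item "
    "with its owner and due date, if mentioned.\n\n"
    "<transcript>\n{transcript}\n</transcript>"
)


def format_transcript(segments: Iterable[Mapping[str, str]]) -> str:
    """Render segments as 'Speaker: text' lines, skipping empty text."""
    lines = []
    for segment in segments:
        text = segment.get("text", "").strip()
        if text:
            lines.append(f"{segment.get('speaker', 'Unknown')}: {text}")
    return "\n".join(lines)


def build_summary_prompt(segments: Iterable[Mapping[str, str]]) -> str:
    """Fill the template with the formatted transcript."""
    return SUMMARY_PROMPT.format(transcript=format_transcript(segments))
```

The resulting string would then be sent to an Amazon Bedrock model; that call is left out because the request body depends on the model you choose.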

The Call Event Processor function interfaces with AWS AppSync to persist changes (mutations) in Amazon DynamoDB and send real-time updates to the LMA user’s logged-in web clients (conveniently opened by choosing the Open in LMA option in the browser extension).

The LMA web UI assets are hosted on Amazon S3 and served via CloudFront. Authentication is provided by Amazon Cognito.

When the user is authenticated, the web application establishes a secure GraphQL connection to the AWS AppSync API, and subscribes to receive real-time events such as new calls and call status changes for the meetings list page, and new or updated transcription segments and computed analytics for the meeting details page. When translation is enabled, the web application also interacts securely with Amazon Translate to translate the meeting transcription into the selected language.
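Because the web client receives both new and updated transcription segments, it has to merge each event into what it already displays. Amazon Transcribe streaming emits partial results for a segment and later a final result, so a late partial must not overwrite a final one. The following is a minimal sketch of that merge, keyed on a segment ID; the field names are hypothetical and don't reflect LMA's actual GraphQL schema.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Segment:
    segment_id: str    # hypothetical field names
    start_time: float  # seconds since the meeting started
    text: str
    is_partial: bool


class TranscriptView:
    """Keeps the latest version of each segment, ordered by start time."""

    def __init__(self) -> None:
        self._segments: Dict[str, Segment] = {}

    def apply(self, update: Segment) -> None:
        current = self._segments.get(update.segment_id)
        # Ignore a stale partial result that arrives after the final one.
        if current is not None and not current.is_partial and update.is_partial:
            return
        self._segments[update.segment_id] = update

    def lines(self) -> List[str]:
        ordered = sorted(self._segments.values(), key=lambda s: s.start_time)
        return [s.text for s in ordered]
```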

The entire processing flow, from ingested speech to live webpage updates, is event driven, and the end-to-end latency is short—typically just a few seconds.

Monitoring and troubleshooting

AWS CloudFormation reports deployment failures and causes on the relevant stack’s Events tab. See Troubleshooting CloudFormation for help with common deployment problems. Look out for deployment failures caused by limit exceeded errors; the LMA stacks create resources that are subject to default account and Region service quotas, such as elastic IP addresses and NAT gateways. When troubleshooting CloudFormation stack failures, always navigate into any failed nested stacks to find the first nested resource failure reported—this is almost always the root cause.
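To speed up the search for the first failure, you can scan a stack's events programmatically. The following sketch takes a list of event dictionaries shaped like the StackEvents entries returned by the AWS CloudFormation DescribeStackEvents API (for example, from boto3's describe_stack_events) and returns the earliest failed resource. It's written as a pure function so it runs without AWS credentials; fetching the events and descending into a failed nested stack are left out, and the sample events are made up.

```python
from datetime import datetime
from typing import Any, Dict, List, Optional

Event = Dict[str, Any]


def first_failure(events: List[Event]) -> Optional[Event]:
    """Return the earliest *_FAILED event, or None if nothing failed.

    Expects the StackEvents keys Timestamp, LogicalResourceId, ResourceType,
    ResourceStatus, and ResourceStatusReason.
    """
    failures = [e for e in events if e.get("ResourceStatus", "").endswith("_FAILED")]
    if not failures:
        return None
    # DescribeStackEvents lists newest events first, so pick the minimum time.
    return min(failures, key=lambda e: e["Timestamp"])


if __name__ == "__main__":
    sample = [  # made-up events for illustration
        {"Timestamp": datetime(2024, 1, 1, 12, 4), "LogicalResourceId": "AISTACK",
         "ResourceStatus": "CREATE_FAILED", "ResourceStatusReason": "Resource creation cancelled"},
        {"Timestamp": datetime(2024, 1, 1, 12, 3), "LogicalResourceId": "VPCSTACK",
         "ResourceStatus": "CREATE_FAILED", "ResourceStatusReason": "example reason"},
    ]
    print(first_failure(sample)["LogicalResourceId"])  # VPCSTACK
```

If the failed resource's ResourceType is AWS::CloudFormation::Stack, its PhysicalResourceId identifies the nested stack whose events you'd inspect next.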

Amazon Transcribe has a default limit of 25 concurrent transcription streams, which limits LMA to 25 concurrent meetings per AWS account and Region. Request an increase for the number of concurrent HTTP/2 streams for streaming transcription if you have many users and need to handle a larger number of concurrent meetings in your account.
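To judge whether you need that quota increase, you can estimate average concurrency with Little's law: average concurrent meetings equal the meeting arrival rate multiplied by the average meeting duration. The sketch below does that arithmetic, assuming one transcription stream per meeting as described above. The peak factor of 2 is an arbitrary assumption; meetings tend to cluster at the top of the hour, so size it from your own calendar data.

```python
DEFAULT_TRANSCRIBE_STREAMS = 25  # default concurrent-stream quota cited in this post


def average_concurrent_meetings(meetings_per_day: float, business_hours: float,
                                avg_minutes: float) -> float:
    """Little's law: L = arrival rate (meetings/hour) x average duration (hours)."""
    arrivals_per_hour = meetings_per_day / business_hours
    return arrivals_per_hour * (avg_minutes / 60)


def needs_quota_increase(meetings_per_day: float, business_hours: float,
                         avg_minutes: float, peak_factor: float = 2.0,
                         quota: int = DEFAULT_TRANSCRIBE_STREAMS) -> bool:
    """Compare an assumed peak (average x peak_factor) with the stream quota."""
    peak = average_concurrent_meetings(meetings_per_day, business_hours, avg_minutes) * peak_factor
    return peak > quota


# 200 meetings over an 8-hour day, 45 minutes each: 25/hour x 0.75 h = 18.75 on average.
print(average_concurrent_meetings(200, 8, 45))  # 18.75
print(needs_quota_increase(200, 8, 45))         # True (assumed peak 37.5 > 25)
```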

LMA provides runtime monitoring and logs for each component using CloudWatch:

- WebSocket processing and transcribing Fargate task – On the Amazon Elastic Container Service (Amazon ECS) console, navigate to the Clusters page and open the LMA-WEBSOCKETSTACK-xxxx-TranscribingCluster cluster. Choose the Tasks tab and open the task page. Choose Logs and View in CloudWatch to inspect the WebSocket transcriber task logs.

- Call Event Processor Lambda function – On the Lambda console, open the LMA-AISTACK-CallEventProcessor function. Choose the Monitor tab to see function metrics. Choose View logs in CloudWatch to inspect function logs.

- AWS AppSync API – On the AWS AppSync console, open the CallAnalytics-LMA API. Choose Monitoring in the navigation pane to see API metrics. Choose View logs in CloudWatch to inspect AWS AppSync API logs.

For QnABot on AWS for Meeting Assist, refer to the Meeting Assist README, and the QnABot solution implementation guide for additional information.

Cost assessment

LMA provides a WebSocket server using Fargate (2 vCPU) and VPC networking resources costing about $0.10/hour (approximately $72/month). For more details, see AWS Fargate Pricing.

LMA’s meeting assistant is powered by QnABot and Knowledge Bases for Amazon Bedrock. You create your own knowledge base, which you can use for LMA and potentially other use cases. For more details, see Amazon Bedrock Pricing. Additional AWS services used by the QnABot solution cost about $0.77/hour. For more details, refer to the list of QnABot on AWS solution costs.

The remaining solution costs are based on usage.

The usage costs add up to about $0.17 for a 5-minute call, although this can vary based on options selected (such as translation), number of LLM summarizations, and total usage because usage affects Free Tier eligibility and volume tiered pricing for many services. For more information about the services that incur usage costs, see the following:

- AWS AppSync pricing

- Amazon Bedrock pricing

- Amazon Cognito Pricing

- Amazon DynamoDB pricing

- AWS Lambda Pricing

- Amazon S3 pricing

- Amazon Transcribe Pricing

- Amazon Translate pricing

- QnABot on AWS costs

To explore LMA costs for yourself, use AWS Cost Explorer or choose Bill Details on the AWS Billing Dashboard to see your month-to-date spend by service.
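The approximate figures in this section can be combined into a rough monthly estimate. This sketch uses the rates quoted above ($0.10/hour for the Fargate WebSocket server and VPC networking, $0.77/hour for the QnABot services, and about $0.17 per 5-minute call in usage charges) and the 720-hour month implied by the $72/month figure. It assumes usage charges scale linearly with meeting minutes, which is only approximately true because of Free Tier and volume tiers. It also excludes your knowledge base costs, so treat the output as a ballpark figure.

```python
HOURS_PER_MONTH = 720            # 30 days x 24 hours, matching the ~$72/month figure
FARGATE_AND_VPC_PER_HOUR = 0.10  # WebSocket server (2 vCPU) and VPC networking
QNABOT_PER_HOUR = 0.77           # additional services used by QnABot
USAGE_PER_5_MIN_CALL = 0.17      # approximate; varies with options and pricing tiers


def estimate_monthly_cost(meeting_minutes_per_month: float) -> float:
    """Rough monthly cost in USD: always-on resources plus usage-based charges."""
    fixed = (FARGATE_AND_VPC_PER_HOUR + QNABOT_PER_HOUR) * HOURS_PER_MONTH
    # Assumption: usage costs scale linearly with meeting minutes.
    usage = USAGE_PER_5_MIN_CALL / 5 * meeting_minutes_per_month
    return round(fixed + usage, 2)


print(estimate_monthly_cost(0))     # 626.4 (idle stack)
print(estimate_monthly_cost(3000))  # 728.4 (50 hours of meetings)
```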

Customize your deployment

Use the following CloudFormation template parameters when creating or updating your stack to customize your LMA deployment:

- To use your own S3 bucket for meeting recordings, use Call Audio Recordings Bucket Name and Audio File Prefix.

- To redact PII from the transcriptions, set Enable Content Redaction for Transcripts to true, and adjust Transcription PII Redaction Entity Types as needed. For more information, see Redacting or identifying PII in a real-time stream.

- To improve transcription accuracy for technical and domain-specific acronyms and jargon, set Transcription Custom Vocabulary Name to the name of a custom vocabulary that you already created in Amazon Transcribe or set Transcription Custom Language Model Name to the name of a previously created custom language model. For more information, see Improving Transcription Accuracy.

- To transcribe meetings in a supported language other than US English, choose the desired value for Language for Transcription.

- To customize transcript processing, optionally set Lambda Hook Function ARN for Custom Transcript Segment Processing to the ARN of your own Lambda function. For more information, see Using a Lambda function to optionally provide custom logic for transcript processing.

- To customize the meeting assist capabilities based on the QnABot on AWS solution, Amazon Lex, Amazon Bedrock, and Knowledge Bases for Amazon Bedrock integration, see the Meeting Assist README.

- To customize transcript summarization by configuring LMA to call your own Lambda function, see Transcript Summarization LAMBDA option.

- To customize transcript summarization by modifying the default prompts or adding new ones, see Transcript Summarization.

- To change the retention period, set Record Expiration In Days to the desired value. All call data is permanently deleted from the LMA DynamoDB storage after this period. Changes to this setting apply only to new calls received after the update.
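As an illustration of the Lambda hook option listed above, the following sketch masks internal project code names in each transcript segment. The real event and response contract is defined in the LMA documentation referenced above and isn't reproduced in this post. This sketch therefore assumes a hypothetical event that carries the segment text in a Transcript field and expects the (possibly modified) segment back. The code names are also made up; check the actual contract before adapting it.

```python
import re
from typing import Any, Dict

# Hypothetical terms to mask in transcripts.
CODE_NAMES = ("Falcon", "Nightjar")
_PATTERN = re.compile(
    r"\b(?:" + "|".join(map(re.escape, CODE_NAMES)) + r")\b", re.IGNORECASE
)


def lambda_handler(event: Dict[str, Any], context: Any) -> Dict[str, Any]:
    """Return a copy of the segment with code names masked (assumed contract)."""
    segment = dict(event)  # shallow copy so the input event isn't mutated
    text = segment.get("Transcript")  # hypothetical field name
    if isinstance(text, str):
        segment["Transcript"] = _PATTERN.sub("[code name]", text)
    return segment
```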

LMA is an open source project. You can fork the LMA GitHub repository, enhance the code, and send us pull requests so we can incorporate and share your improvements!

Update an existing LMA stack

You can update your existing LMA stack to the latest release. For more details, see Update an existing stack.

Clean up

Congratulations! You have completed all the steps for setting up your Live Meeting Assistant sample solution using AWS services.

When you’re finished experimenting with this sample solution, clean up your resources by using the AWS CloudFormation console to delete the LMA stacks that you deployed. This deletes resources that were created by deploying the solution. The recording S3 buckets, DynamoDB table, and CloudWatch log groups are retained after the stack is deleted to avoid deleting your data.

Live Call Analytics: Companion solution

Our companion solution, Live Call Analytics and Agent Assist (LCA), offers real-time transcription and analytics for contact centers (phone calls) rather than meetings. There are many similarities—in fact, LMA was built using an architecture and many components derived from LCA.

Conclusion

The Live Meeting Assistant sample solution offers a flexible, feature-rich, and customizable approach to provide live meeting assistance to improve your productivity during and after meetings. It uses Amazon AI/ML services like Amazon Transcribe, Amazon Lex, Knowledge Bases for Amazon Bedrock, and Amazon Bedrock LLMs to transcribe and extract real-time insights from your meeting audio.

The sample LMA application is provided as open source—use it as a starting point for your own solution, and help us make it better by contributing back fixes and features via GitHub pull requests. Browse to the LMA GitHub repository to explore the code, choose Watch to be notified of new releases, and check the README for the latest documentation updates.

For expert assistance, AWS Professional Services and other AWS Partners are here to help.

We’d love to hear from you. Let us know what you think in the comments section, or use the issues forum in the LMA GitHub repository.

About the authors

Bob Strahan is a Principal Solutions Architect in the AWS Language AI Services team.

Chris Lott is a Principal Solutions Architect in the AWS AI Language Services team. He has 20 years of enterprise software development experience. Chris lives in Sacramento, California and enjoys gardening, aerospace, and traveling the world.

Babu Srinivasan is a Sr. Specialist SA – Language AI services in the World Wide Specialist organization at AWS, with over 24 years of experience in IT and the last 6 years focused on the AWS Cloud. He is passionate about AI/ML. Outside of work, he enjoys woodworking and entertains friends and family (sometimes strangers) with sleight of hand card magic.

Kishore Dhamodaran is a Senior Solutions Architect at AWS.

Gillian Armstrong is a Builder Solutions Architect. She is excited about how the Cloud is opening up opportunities for more people to use technology to solve problems, and especially excited about how cognitive technologies, like conversational AI, are allowing us to interact with computers in more human ways.

Rachna Chadha is a Principal Solutions Architect AI/ML in Strategic Accounts at AWS. Rachna is an optimist who believes that the ethical and responsible use of AI can improve society in the future and bring economic and social prosperity. In her spare time, Rachna likes spending time with her family, hiking, and listening to music.

Marc Karp is an ML Architect with the Amazon SageMaker Service team. He focuses on helping customers design, deploy, and manage ML workloads at scale. In his spare time, he enjoys traveling and exploring new places.

Maninder (Mani) Kaur is the AI/ML Specialist lead for Strategic ISVs at AWS. With her customer-first approach, Mani helps strategic customers shape their AI/ML strategy, fuel innovation, and accelerate their AI/ML journey. Mani is a firm believer of ethical and responsible AI, and strives to ensure that her customers’ AI solutions align with these principles.

Gene Ting is a Principal Solutions Architect at AWS. He is focused on helping enterprise customers build and operate workloads securely on AWS. In his free time, Gene enjoys teaching kids technology and sports, as well as following the latest on cybersecurity.

Alan Tan is a Senior Product Manager with SageMaker, leading efforts on large model inference. He’s passionate about applying machine learning to the area of analytics. Outside of work, he enjoys the outdoors.

Xan Huang is a Senior Solutions Architect with AWS and is based in Singapore. He works with major financial institutions to design and build secure, scalable, and highly available solutions in the cloud. Outside of work, Xan spends most of his free time with his family and getting bossed around by his 3-year-old daughter. You can find Xan on
