Developing next-gen AI agents, exploring new modalities, and pioneering foundational learning

Google DeepMind at ICLR 2024

Conformal Prediction via Regression-as-Classification

Conformal prediction (CP) for regression can be challenging, especially when the output distribution is heteroscedastic, multimodal, or skewed. Some of the issues can be addressed by estimating a distribution over the output, but in reality, such approaches can be sensitive to estimation error and yield unstable intervals. Here, we circumvent the challenges by converting regression to a classification problem and then use CP for classification to obtain CP sets for regression. To preserve the ordering of the continuous-output space, we design a new loss function and make necessary…

Apple Machine Learning Research
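To make the regression-as-classification idea concrete, here is a minimal sketch of standard split conformal prediction applied to a binned target. It is not the paper's method: the bin edges, the classifier probabilities, and the nonconformity score `1 - p(true bin)` are all illustrative assumptions, and the paper's ordering-aware loss function is not shown.

```python
import math
from typing import List, Sequence, Tuple


def bin_index(y: float, edges: Sequence[float]) -> int:
    """Map a continuous target to the index of the bin [edges[i], edges[i+1])."""
    for i in range(len(edges) - 1):
        if edges[i] <= y < edges[i + 1]:
            return i
    # Targets outside the grid are assigned to the nearest end bin.
    return len(edges) - 2 if y >= edges[-1] else 0


def conformal_quantile(scores: Sequence[float], alpha: float) -> float:
    """Finite-sample quantile used by split conformal prediction."""
    n = len(scores)
    k = math.ceil((n + 1) * (1 - alpha))
    if k > n:
        return float("inf")  # too few calibration points: every bin is kept
    return sorted(scores)[k - 1]


def calibrate(probs: Sequence[Sequence[float]], ys: Sequence[float],
              edges: Sequence[float], alpha: float) -> float:
    """Score each calibration point by 1 - p(true bin) and take the quantile."""
    scores = [1.0 - p[bin_index(y, edges)] for p, y in zip(probs, ys)]
    return conformal_quantile(scores, alpha)


def prediction_set(p: Sequence[float], edges: Sequence[float],
                   qhat: float) -> List[Tuple[float, float]]:
    """Return every bin (as an interval) whose score is within the threshold."""
    return [(edges[i], edges[i + 1]) for i, pi in enumerate(p) if 1.0 - pi <= qhat]


# Hypothetical classifier outputs over three bins on a small calibration set.
EDGES = [0, 1, 2, 3]
CAL_PROBS = [[0.7, 0.2, 0.1], [0.1, 0.8, 0.1], [0.2, 0.3, 0.5]]
CAL_YS = [0.5, 1.5, 2.5]
QHAT = calibrate(CAL_PROBS, CAL_YS, EDGES, alpha=0.5)
NEW_SET = prediction_set([0.75, 0.2, 0.05], EDGES, QHAT)
```

Because the result is a set of bins rather than a single interval, it can cover several separate regions of the output space when the predicted distribution is multimodal.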

AWS Inferentia and AWS Trainium deliver lowest cost to deploy Llama 3 models in Amazon SageMaker JumpStart

Today, we’re excited to announce the availability of Meta Llama 3 inference on AWS Trainium and AWS Inferentia based instances in Amazon SageMaker JumpStart. The Meta Llama 3 models are a collection of pre-trained and fine-tuned generative text models. Amazon Elastic Compute Cloud (Amazon EC2) Trn1 and Inf2 instances, powered by AWS Trainium and AWS Inferentia2, provide the most cost-effective way to deploy Llama 3 models on AWS. They offer up to 50% lower cost to deploy than comparable Amazon EC2 instances. They not only reduce the time and expense involved in training and deploying large language models (LLMs), but also provide developers with easier access to high-performance accelerators to meet the scalability and efficiency needs of real-time applications, such as chatbots and AI assistants.

In this post, we demonstrate how easy it is to deploy Llama 3 on AWS Trainium and AWS Inferentia based instances in SageMaker JumpStart.

Meta Llama 3 model on SageMaker Studio

SageMaker JumpStart provides access to publicly available and proprietary foundation models (FMs). Foundation models are onboarded and maintained from third-party and proprietary providers. As such, they are released under different licenses as designated by the model source. Be sure to review the license for any FM that you use. You are responsible for reviewing and complying with applicable license terms and making sure they are acceptable for your use case before downloading or using the content.

You can access the Meta Llama 3 FMs through SageMaker JumpStart on the Amazon SageMaker Studio console and the SageMaker Python SDK. In this section, we go over how to discover the models in SageMaker Studio.

SageMaker Studio is an integrated development environment (IDE) that provides a single web-based visual interface where you can access purpose-built tools to perform all machine learning (ML) development steps, from preparing data to building, training, and deploying your ML models. For more details on how to get started and set up SageMaker Studio, refer to Get Started with SageMaker Studio.

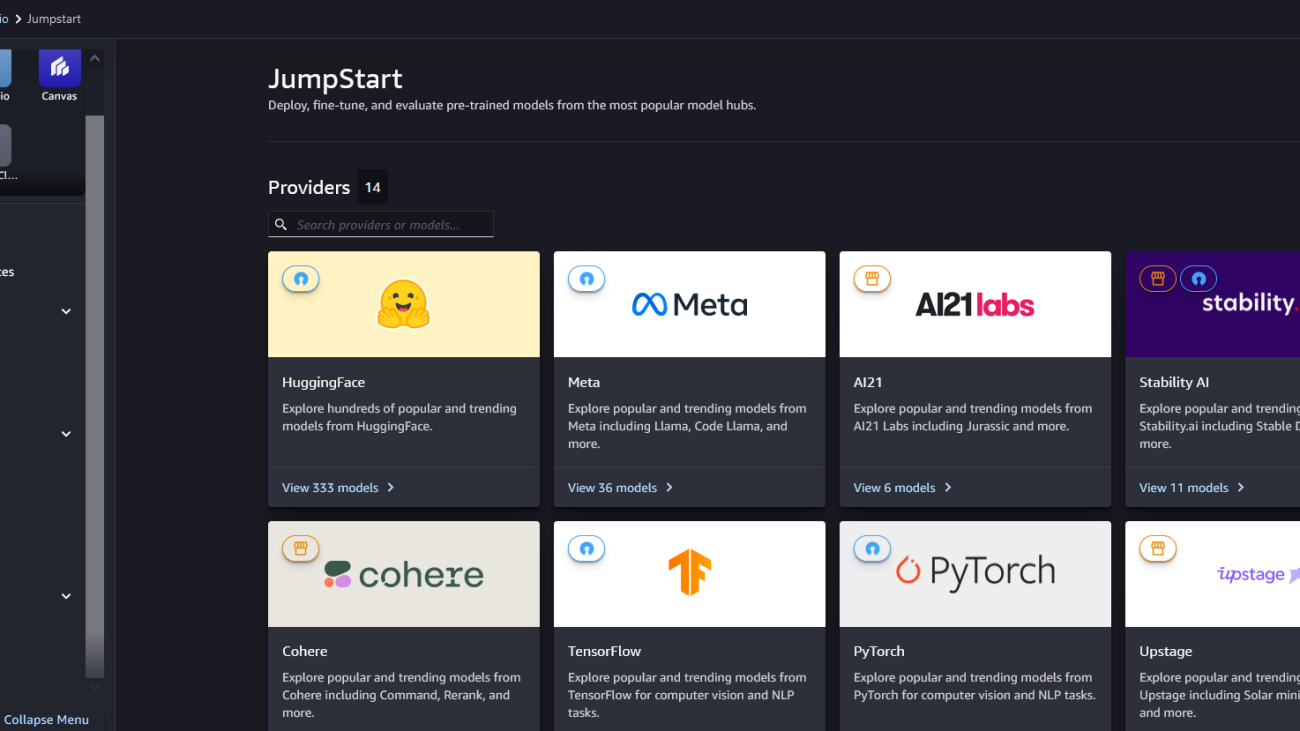

On the SageMaker Studio console, you can access SageMaker JumpStart by choosing JumpStart in the navigation pane. If you’re using SageMaker Studio Classic, refer to Open and use JumpStart in Studio Classic to navigate to the SageMaker JumpStart models.

From the SageMaker JumpStart landing page, you can search for “Meta” in the search box.

Choose the Meta model card to list all the models from Meta on SageMaker JumpStart.

You can also find relevant model variants by searching for “neuron.” If you don’t see Meta Llama 3 models, update your SageMaker Studio version by shutting down and restarting SageMaker Studio.

No-code deployment of the Llama 3 Neuron model on SageMaker JumpStart

You can choose the model card to view details about the model, such as the license, data used to train, and how to use it. You can also find two buttons, Deploy and Preview notebooks, which help you deploy the model.

When you choose Deploy, the page shown in the following screenshot appears. The top section of the page shows the end-user license agreement (EULA) and acceptable use policy for you to acknowledge.

After you acknowledge the policies, provide your endpoint settings and choose Deploy to deploy the endpoint of the model.

Alternatively, you can deploy through the example notebook by choosing Open Notebook. The example notebook provides end-to-end guidance on how to deploy the model for inference and clean up resources.

Meta Llama 3 deployment on AWS Trainium and AWS Inferentia using the SageMaker JumpStart SDK

In SageMaker JumpStart, we have pre-compiled the Meta Llama 3 model for a variety of configurations to avoid runtime compilation during deployment and fine-tuning. The Neuron Compiler FAQ has more details about the compilation process.

There are two ways to deploy Meta Llama 3 on AWS Inferentia and Trainium based instances using the SageMaker JumpStart SDK. You can deploy the model with two lines of code for simplicity, or focus on having more control of the deployment configurations. The following code snippet shows the simpler mode of deployment:
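A minimal sketch of the simpler mode, using `JumpStartModel` from the SageMaker Python SDK. The model ID for the 8B base model is an assumption inferred from the naming pattern of the other supported IDs, and `deploy_default` is a hypothetical helper name. Running it requires AWS credentials and creates a billable endpoint.

```python
# Assumed ID for the 8B base model, inferred from the other supported model IDs.
MODEL_ID = "meta-textgenerationneuron-llama-3-8b"


def deploy_default(model_id: str = MODEL_ID):
    """Deploy a JumpStart Neuron model with its pre-selected configuration."""
    # Imported lazily so the sketch can be loaded without the SDK installed.
    from sagemaker.jumpstart.model import JumpStartModel

    model = JumpStartModel(model_id=model_id)
    # accept_eula=True states that you have read and accepted the model's EULA.
    return model.deploy(accept_eula=True)


# Usage (needs AWS credentials and a SageMaker execution role):
# predictor = deploy_default()
```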

To perform inference on these models, you need to specify the argument accept_eula as True as part of the model.deploy() call. This means you have read and accepted the EULA of the model. The EULA can be found in the model card description or from https://ai.meta.com/resources/models-and-libraries/llama-downloads/.

The default instance type for Meta Llama-3-8B is ml.inf2.24xlarge. The other supported model IDs for deployment are the following:

meta-textgenerationneuron-llama-3-70b

meta-textgenerationneuron-llama-3-8b-instruct

meta-textgenerationneuron-llama-3-70b-instruct

SageMaker JumpStart has pre-selected configurations that can help get you started, which are listed in the following table. For more information about optimizing these configurations further, refer to advanced deployment configurations.

| Instance type | OPTION_N_POSITIONS | OPTION_MAX_ROLLING_BATCH_SIZE | OPTION_TENSOR_PARALLEL_DEGREE | OPTION_DTYPE |
|---|---|---|---|---|
| Llama-3 8B and Llama-3 8B Instruct | | | | |
| ml.inf2.8xlarge | 8192 | 1 | 2 | bf16 |
| ml.inf2.24xlarge (Default) | 8192 | 1 | 12 | bf16 |
| ml.inf2.24xlarge | 8192 | 12 | 12 | bf16 |
| ml.inf2.48xlarge | 8192 | 1 | 24 | bf16 |
| ml.inf2.48xlarge | 8192 | 12 | 24 | bf16 |
| Llama-3 70B and Llama-3 70B Instruct | | | | |
| ml.trn1.32xlarge | 8192 | 1 | 32 | bf16 |
| ml.trn1.32xlarge (Default) | 8192 | 4 | 32 | bf16 |

The following code shows how you can customize deployment configurations such as sequence length, tensor parallel degree, and maximum rolling batch size:
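A sketch of a customized deployment, assuming the settings are passed as environment variables through the `env` argument of `JumpStartModel`. The variable names come from the table above; the helper names `neuron_env` and `deploy_custom`, the 8B model ID, and the chosen values (one of the ml.inf2.24xlarge rows) are illustrative assumptions.

```python
from typing import Dict, Optional


def neuron_env(n_positions: int = 8192,
               tensor_parallel_degree: int = 12,
               max_rolling_batch_size: int = 12,
               dtype: str = "bf16") -> Dict[str, str]:
    """Build the container environment; values are passed as strings."""
    return {
        "OPTION_N_POSITIONS": str(n_positions),  # maximum sequence length
        "OPTION_TENSOR_PARALLEL_DEGREE": str(tensor_parallel_degree),
        "OPTION_MAX_ROLLING_BATCH_SIZE": str(max_rolling_batch_size),
        "OPTION_DTYPE": dtype,
    }


def deploy_custom(model_id: str = "meta-textgenerationneuron-llama-3-8b",
                  instance_type: str = "ml.inf2.24xlarge",
                  env: Optional[Dict[str, str]] = None):
    """Deploy with explicit settings instead of the pre-selected defaults."""
    # Imported lazily so the sketch can be loaded without the SDK installed.
    from sagemaker.jumpstart.model import JumpStartModel

    model = JumpStartModel(
        model_id=model_id,
        env=env if env is not None else neuron_env(),
        instance_type=instance_type,
    )
    return model.deploy(accept_eula=True)
```

Whether a given combination is supported depends on which configurations were pre-compiled, so starting from a row of the table is the safer choice.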

Now that you have deployed the Meta Llama 3 neuron model, you can run inference from it by invoking the endpoint:
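A sketch of an invocation, assuming `predictor` is the object returned by `model.deploy()` and that the endpoint accepts a JSON payload with an `inputs` prompt and a `parameters` dictionary. The specific keys and values shown (`max_new_tokens`, `top_p`, `temperature`) are assumptions based on the usual text-generation payload convention, and `build_payload` and `generate` are hypothetical helper names.

```python
from typing import Any, Dict


def build_payload(prompt: str,
                  max_new_tokens: int = 64,
                  top_p: float = 0.9,
                  temperature: float = 0.6) -> Dict[str, Any]:
    """Assemble a text-generation request body (assumed schema)."""
    return {
        "inputs": prompt,
        "parameters": {
            "max_new_tokens": max_new_tokens,  # cap on generated tokens
            "top_p": top_p,                    # nucleus sampling threshold
            "temperature": temperature,        # sampling temperature
        },
    }


def generate(predictor, prompt: str) -> Any:
    """Send the payload to a deployed endpoint and return its raw response."""
    return predictor.predict(build_payload(prompt))


# Usage, with a predictor from a previous deployment:
# print(generate(predictor, "I believe the meaning of life is"))
```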

For more information on the parameters in the payload, refer to Detailed parameters.

Refer to Fine-tune and deploy Llama 2 models cost-effectively in Amazon SageMaker JumpStart with AWS Inferentia and AWS Trainium for details on how to pass the parameters to control text generation.

Clean up

After you have completed your training job and don’t want to use the existing resources anymore, you can delete the resources using the following code:
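A minimal cleanup sketch, assuming `predictor` is the object returned by `model.deploy()`. The helper name `cleanup` is hypothetical; `delete_model()` and `delete_endpoint()` are methods of the SageMaker SDK predictor.

```python
def cleanup(predictor) -> None:
    """Delete the model and the endpoint so they stop incurring charges."""
    predictor.delete_model()     # remove the SageMaker model resource
    predictor.delete_endpoint()  # remove the endpoint (and its configuration by default)


# Usage, with a predictor from a previous deployment:
# cleanup(predictor)
```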

Conclusion

The deployment of Meta Llama 3 models on AWS Inferentia and AWS Trainium using SageMaker JumpStart demonstrates the lowest cost for deploying large-scale generative AI models like Llama 3 on AWS. These models, including variants like Meta-Llama-3-8B, Meta-Llama-3-8B-Instruct, Meta-Llama-3-70B, and Meta-Llama-3-70B-Instruct, use AWS Neuron for inference on AWS Trainium and Inferentia. AWS Trainium and Inferentia offer up to 50% lower cost to deploy than comparable EC2 instances.

In this post, we demonstrated how to deploy Meta Llama 3 models on AWS Trainium and AWS Inferentia using SageMaker JumpStart. The ability to deploy these models through the SageMaker JumpStart console and Python SDK offers flexibility and ease of use. We are excited to see how you use these models to build interesting generative AI applications.

To start using SageMaker JumpStart, refer to Getting started with Amazon SageMaker JumpStart. For more examples of deploying models on AWS Trainium and AWS Inferentia, see the GitHub repo. For more information on deploying Meta Llama 3 models on GPU-based instances, see Meta Llama 3 models are now available in Amazon SageMaker JumpStart.

About the Authors

Xin Huang is a Senior Applied Scientist

Rachna Chadha is a Principal Solutions Architect – AI/ML

Qing Lan is a Senior SDE – ML System

Pinak Panigrahi is a Senior Solutions Architect Annapurna ML

Christopher Whitten is a Software Development Engineer

Kamran Khan is a Head of BD/GTM Annapurna ML

Ashish Khetan is a Senior Applied Scientist

Pradeep Cruz is a Senior SDM

Research Focus: Week of April 29, 2024

Welcome to Research Focus, a series of blog posts that highlights notable publications, events, code/datasets, new hires and other milestones from across the research community at Microsoft.

NEW RESEARCH

Can Large Language Models Transform Natural Language Intent into Formal Method Postconditions?

Informal natural language that describes code functionality, such as code comments or function documentation, may contain substantial information about a program’s intent. However, there is no guarantee that a program’s implementation aligns with its natural language documentation. In the case of a conflict, leveraging information in code-adjacent natural language has the potential to enhance fault localization, debugging, and code trustworthiness. However, this information is often underutilized, due to the inherent ambiguity of natural language which makes natural language intent challenging to check programmatically. The “emergent abilities” of large language models (LLMs) have the potential to facilitate the translation of natural language intent to programmatically checkable assertions. However, due to a lack of benchmarks and evaluation metrics, it is unclear if LLMs can correctly translate informal natural language specifications into formal specifications that match programmer intent—and whether such translation could be useful in practice.

In a new paper: Can Large Language Models Transform Natural Language Intent into Formal Method Postconditions?, researchers from Microsoft describe nl2postcond, the problem of leveraging LLMs to transform informal natural language into formal method postconditions, expressed as program assertions. The paper, to be presented at the upcoming ACM International Conference on the Foundations of Software Engineering, introduces and validates metrics to measure and compare different nl2postcond approaches, using the correctness and discriminative power of generated postconditions. The researchers show that nl2postcond via LLMs has the potential to be helpful in practice by demonstrating that LLM-generated specifications can be used to discover historical bugs in real-world projects.
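To illustrate what a postcondition expressed as a program assertion looks like, and what correctness and discriminative power mean informally, here is a small sketch. The function names, the seeded bug, and the two checks are hypothetical simplifications and not the paper's benchmark or metrics.

```python
from typing import Callable, List, Sequence

# Natural language intent (hypothetical docstring):
# "Return the elements of xs sorted in ascending order."


def reference_sort(xs: List[int]) -> List[int]:
    return sorted(xs)


def buggy_sort(xs: List[int]) -> List[int]:
    # Seeded bug: duplicates are silently dropped.
    return sorted(set(xs))


def strong_postcondition(xs: List[int], result: List[int]) -> bool:
    """Result is ordered AND is a permutation of the input."""
    ordered = all(a <= b for a, b in zip(result, result[1:]))
    return ordered and sorted(xs) == sorted(result)


def weak_postcondition(xs: List[int], result: List[int]) -> bool:
    """Result is ordered (says nothing about which elements it contains)."""
    return all(a <= b for a, b in zip(result, result[1:]))


def holds_on(impl: Callable[[List[int]], List[int]],
             post: Callable[[List[int], List[int]], bool],
             inputs: Sequence[List[int]]) -> bool:
    """True if the postcondition holds for every test input."""
    return all(post(list(xs), impl(list(xs))) for xs in inputs)


INPUTS = [[3, 1, 2], [2, 2, 1], [], [5]]

# Correctness: the postcondition accepts the reference implementation.
STRONG_IS_CORRECT = holds_on(reference_sort, strong_postcondition, INPUTS)
# Discriminative power: the postcondition rejects the buggy implementation.
STRONG_CATCHES_BUG = not holds_on(buggy_sort, strong_postcondition, INPUTS)
WEAK_CATCHES_BUG = not holds_on(buggy_sort, weak_postcondition, INPUTS)
```

Both postconditions are correct for the reference implementation, but only the stronger one distinguishes it from the buggy variant, which is why correctness alone is not enough to judge a generated postcondition.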

NEW RESEARCH

Semantically Aligned Question and Code Generation for Automated Insight Generation

People who work with data, like engineers, analysts, and data scientists, often must manually look through data to find valuable insights or write complex scripts to automate exploration of the data. Automated insight generation provides these workers the opportunity to immediately glean insights about their data and identify valuable starting places for writing their exploration scripts. Unfortunately, automated insights produced by LLMs can sometimes generate code that does not correctly correspond (or align) to the insight. In a recent paper: Semantically Aligned Question and Code Generation for Automated Insight Generation, researchers from Microsoft leverage the semantic knowledge of LLMs to generate targeted and insightful questions about data and the corresponding code to answer those questions. Through an empirical study on data from Open-WikiTable, they then show that embeddings can be effectively used for filtering out semantically unaligned pairs of question and code. The research also shows that generating questions and code together yields more interesting and diverse insights about data.
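The filtering step can be sketched as a similarity threshold over embeddings of each question and its code. The bag-of-words `embed` function below is a toy stand-in for a learned embedding model, and the threshold and example pairs are arbitrary assumptions; none of this reproduces the paper's setup.

```python
import math
import re
from collections import Counter
from typing import List, Tuple


def embed(text: str) -> Counter:
    """Toy embedding: counts of lowercase alphabetic tokens."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def filter_aligned(pairs: List[Tuple[str, str]],
                   threshold: float = 0.3) -> List[Tuple[str, str]]:
    """Keep (question, code) pairs whose embeddings are similar enough."""
    return [(q, c) for q, c in pairs if cosine(embed(q), embed(c)) >= threshold]


QUESTION = "What is the average population by country?"
PAIRS = [
    (QUESTION, "df.groupby('country')['population'].mean()"),  # aligned
    (QUESTION, "df['year'].max()"),                            # unaligned
]
KEPT = filter_aligned(PAIRS)
```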

NEW RESEARCH

Explaining CLIP’s performance disparities on data from blind/low vision users

AI-based applications hold the potential to assist people who are blind or low vision (BLV) with everyday visual tasks. However, human assistance is often required, due to the wide variety of assistance needed and varying quality of images available. Recent advances in large multi-modal models (LMMs) could potentially address these challenges, enabling a new era of automated visual assistance. Yet, little work has been done to evaluate how well LMMs perform on data from BLV users.

In a recent paper: Explaining CLIP’s performance disparities on data from blind/low vision users, researchers from Microsoft and the World Bank address this issue by assessing CLIP, a widely used LMM with potential to underpin many assistive technologies. Testing 25 CLIP variants in a zero-shot classification task, their results show that disability objects, like guide canes and Braille displays, are recognized significantly less accurately than common objects, like TV remote controls and coffee mugs, in some cases by up to 28 percentage points.
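For readers unfamiliar with zero-shot classification in CLIP-style models, the sketch below shows the general mechanism: an image embedding is compared against the text embedding of each class prompt, and the most similar class wins. The three-dimensional vectors are made-up placeholders, not real CLIP embeddings.

```python
import math
from typing import Dict, Sequence


def cosine(u: Sequence[float], v: Sequence[float]) -> float:
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def zero_shot_classify(image_emb: Sequence[float],
                       class_text_embs: Dict[str, Sequence[float]]) -> str:
    """Return the class whose text embedding is closest to the image embedding."""
    return max(class_text_embs,
               key=lambda name: cosine(image_emb, class_text_embs[name]))


# Placeholder embeddings of prompts such as "a photo of a guide cane".
TEXT_EMBS = {
    "guide cane": [1.0, 0.0, 0.0],
    "coffee mug": [0.0, 1.0, 0.0],
}
IMAGE_EMB = [0.2, 0.9, 0.1]  # placeholder embedding of a query image
PREDICTION = zero_shot_classify(IMAGE_EMB, TEXT_EMBS)
```

No labeled examples of the target classes are used here, so accuracy depends on how well each concept was represented in the model's pretraining data.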

The researchers perform an analysis of the captions in three large-scale datasets that are commonly used to train models like CLIP and show that BLV-related content (such as guide canes) is rarely mentioned. This is a potential reason for the large performance gaps. The researchers show that a few-shot learning approach with as little as five example images of a disability object can improve its ability to recognize that object, holding the potential to mitigate CLIP’s performance disparities for BLV users. They then discuss other possible mitigations.

NEW RESEARCH

Closed-Form Bounds for DP-SGD against Record-level Inference

Privacy of training data is a central consideration when deploying machine learning (ML) models. Models trained with guarantees of differential privacy (DP) provably resist a wide range of attacks. Although it is possible to derive bounds, or safe limits, for specific privacy threats solely from DP guarantees, meaningful bounds require impractically small privacy budgets, which results in a large loss in utility.

In a recent paper: Closed-Form Bounds for DP-SGD against Record-level Inference, researchers from Microsoft present a new approach to quantify the privacy of ML models against membership inference (inferring whether a data record is in the training data) and attribute inference (reconstructing partial information about a record) without the indirection through DP. They focus on the popular DP-SGD algorithm, which they model as an information theoretic channel whose inputs are the secrets that an attacker wants to infer (e.g., membership of a data record) and whose outputs are the intermediate model parameters produced by iterative optimization. They obtain closed-form bounds for membership inference that match state-of-the-art techniques but are orders of magnitude faster to compute. They also present the first algorithm to produce data-dependent bounds against attribute inference. Compared to bounds computed indirectly through numerical DP budget accountants, these bounds provide a tighter characterization of the privacy risk of deploying an ML model trained on a specific dataset. This research provides a direct, interpretable, and practical way to evaluate the privacy of trained models against inference threats without sacrificing utility.
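As background, the sketch below shows one step of the standard DP-SGD algorithm whose intermediate parameters the analysis treats as channel outputs: each per-example gradient is clipped, Gaussian noise is added to the sum, and the average drives the update. It does not implement the paper's closed-form bounds, and the function and parameter names are illustrative assumptions.

```python
import math
import random
from typing import List, Sequence


def clip(grad: Sequence[float], max_norm: float) -> List[float]:
    """Scale a gradient down so its L2 norm is at most max_norm."""
    norm = math.sqrt(sum(g * g for g in grad))
    scale = min(1.0, max_norm / norm) if norm > 0 else 1.0
    return [g * scale for g in grad]


def dp_sgd_step(params: Sequence[float],
                per_example_grads: Sequence[Sequence[float]],
                lr: float,
                max_norm: float,
                noise_multiplier: float,
                rng: random.Random) -> List[float]:
    """One DP-SGD update: clip per example, add Gaussian noise, average."""
    clipped = [clip(g, max_norm) for g in per_example_grads]
    batch_size = len(clipped)
    new_params = []
    for i, p in enumerate(params):
        total = sum(g[i] for g in clipped)
        # Noise standard deviation is noise_multiplier * max_norm per coordinate.
        noisy = total + rng.gauss(0.0, noise_multiplier * max_norm)
        new_params.append(p - lr * noisy / batch_size)
    return new_params


# With the noise switched off, the step reduces to SGD on clipped gradients.
NO_NOISE = dp_sgd_step(
    params=[0.0, 0.0],
    per_example_grads=[[3.0, 4.0], [0.3, 0.4]],
    lr=1.0,
    max_norm=1.0,
    noise_multiplier=0.0,
    rng=random.Random(0),
)
```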

Microsoft Research in the news

TIME100 Most Influential People in Health

TIME | May 2, 2024

Microsoft Research president Peter Lee is included as an innovator on the 2024 TIME100 Health list, TIME’s inaugural list of 100 individuals who most influenced global health this year.

Sanctuary AI Announces Microsoft Collaboration to Accelerate AI Development for General Purpose Robots

Sanctuary AI | May 1, 2024

Sanctuary AI and Microsoft are collaborating on the development of AI models for general purpose humanoid robots. Sanctuary AI will leverage Microsoft’s Azure cloud resources for their AI workloads.

Tiny but mighty: The Phi-3 small language models with big potential

Microsoft Source | April 23, 2024

LLMs create exciting opportunities for AI to boost productivity and creativity. But they require significant computing resources. Phi-3 models, which perform better than models twice their size, are now publicly available from Microsoft.

AI Is Unearthing New Drug Candidates, But It Still Needs Human Oversight

Drug Discovery Online | April 11, 2024

Drug Discovery Online published a contributed article from Junaid Bajwa discussing how recent advancements in AI offer the potential to streamline and optimize drug development in unprecedented ways.

How AI is helping create sustainable farms of the future

The Grocer | April 16, 2024

Ranveer Chandra authored an essay on how AI is helping create sustainable farms of the future for UK-based trade outlet, The Grocer.

The Future of AI and Mental Health

Psychiatry Online | April 16, 2024

Psychiatric News published an article featuring Q&A with Jina Suh, highlighting the important considerations for the use of AI technologies among psychiatrists and mental health professionals.

MatterGen’s Breakthroughs: How AI Shapes the Future of Materials Science

Turing Post | April 19, 2024

Turing Post covered MatterGen in an interview with Tian Xie. Learn more about this impactful generative model for inorganic materials design.

Machine Learning Street Talk interview with Chris Bishop

Machine Learning Street Talk | April 10, 2024

Chris Bishop joined Dr. Tim Scarfe for a wide-ranging interview on advances in deep learning and AI for science.

The post Research Focus: Week of April 29, 2024 appeared first on Microsoft Research.