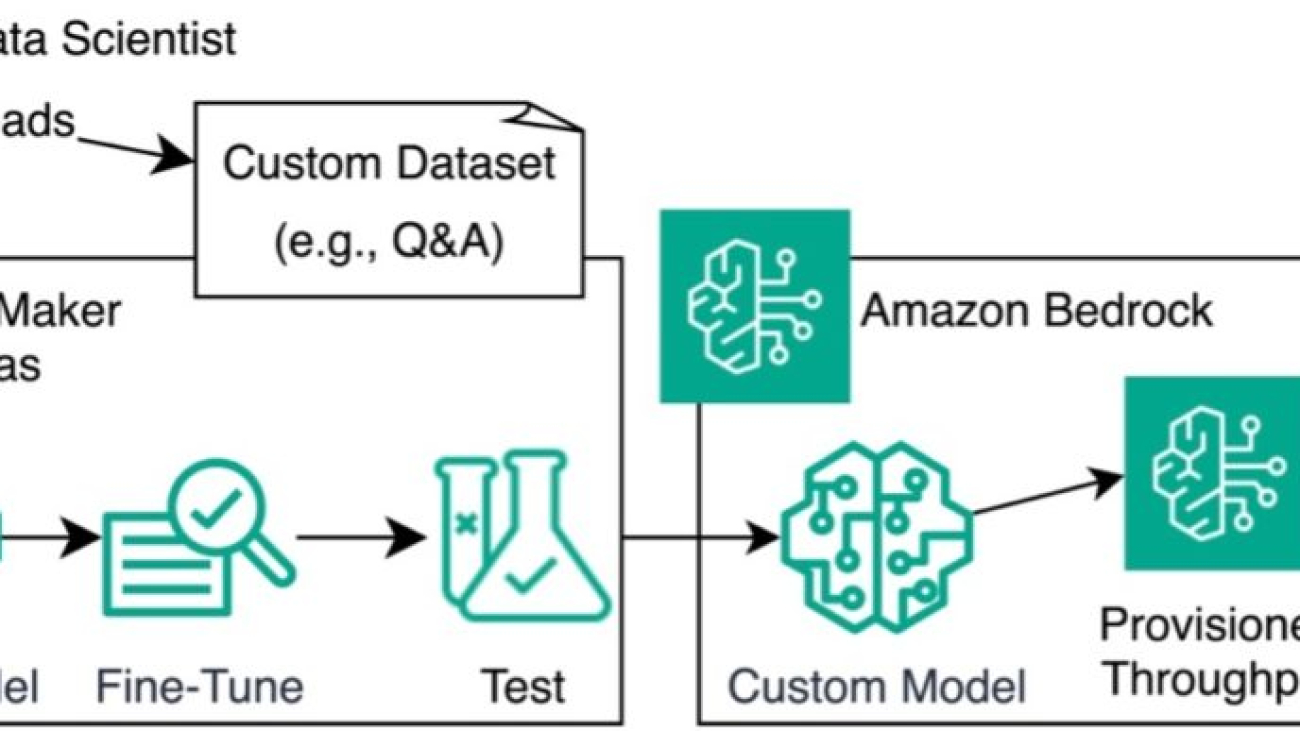

Large language models (LLMs) are making a significant impact in the realm of artificial intelligence (AI). Their impressive generative abilities have led to widespread adoption across various sectors and use cases, including content generation, sentiment analysis, chatbot development, and virtual assistant technology. Llama 2 by Meta is an example of an LLM offered by AWS. Llama 2 is an auto-regressive language model that uses an optimized transformer architecture and is intended for commercial and research use in English. It comes in a range of parameter sizes (7 billion, 13 billion, and 70 billion) as well as pre-trained and fine-tuned variations. To learn more about Llama 2 on AWS, refer to Llama 2 foundation models from Meta are now available in Amazon SageMaker JumpStart.

Many practitioners fine-tune or pre-train these Llama 2 models with their own text data to improve accuracy for their specific use case. However, in some cases, a challenge arises for practitioners: the high cost of fine-tuning and training. As organizations strive to push the boundaries of what LLMs can achieve, the demand for cost-effective training solutions has never been more pressing. In this post, we explore how you can use the Neuron distributed training library to fine-tune, continuously pre-train, and reduce the cost of training LLMs such as Llama 2 with AWS Trainium instances on Amazon SageMaker.

AWS Trainium instances for training workloads

SageMaker ml.trn1 and ml.trn1n instances, powered by Trainium accelerators, are purpose-built for high-performance deep learning training and offer up to 50% cost-to-train savings over comparable training-optimized Amazon Elastic Compute Cloud (Amazon EC2) instances. This post implements a solution with the ml.trn1.32xlarge Trainium instance type, typically used for training large-scale models. However, there are also comparable ml.trn1n instances that offer twice as much networking throughput (1,600 Gbps) via Amazon Elastic Fabric Adapter (EFAv2). SageMaker Training supports ml.trn1 and ml.trn1n instances in the US East (N. Virginia) and US West (Oregon) AWS Regions, and most recently announced general availability in the US East (Ohio) Region. These instances are available in the listed Regions with On-Demand, Reserved, and Spot Instances, or additionally as part of a Savings Plan.

For more information on Trainium Accelerator chips, refer to Achieve high performance with lowest cost for generative AI inference using AWS Inferentia2 and AWS Trainium on Amazon SageMaker. Additionally, check out AWS Trainium Customers to learn more about customer testimonials, or see Amazon EC2 Trn1 Instances for High-Performance Model Training are Now Available to dive into the accelerator highlights and specifications.

Using the Neuron Distributed library with SageMaker

SageMaker is a fully managed service that provides developers, data scientists, and practitioners the ability to build, train, and deploy machine learning (ML) models at scale. SageMaker Training includes features that improve and simplify the ML training experience, including managed infrastructure and images for deep learning, automatic model tuning with hyperparameter optimization, and a pay-for-what-you-use billing structure. The Neuron Distributed library is part of the AWS Neuron SDK, which is used to run deep learning workloads on AWS Inferentia and AWS Trainium based instances. This section highlights the advantages of using SageMaker for distributed training with the Neuron Distributed library: specifically, the managed infrastructure and the time-to-train and cost-to-train benefits of its associated resiliency and recovery features.

In high performance computing (HPC) clusters, such as those used for deep learning model training, hardware resiliency issues can be a potential obstacle. Although hardware failures while training on a single instance may be rare, issues resulting in stalled training become more prevalent as a cluster grows to tens or hundreds of instances. Regular checkpointing helps mitigate wasted compute time, but engineering teams managing their own infrastructure must still closely monitor their workloads and be prepared to remediate a failure at all hours to minimize training downtime. The managed infrastructure of SageMaker Training includes several resiliency features that make this monitoring and recovery process streamlined:

- Cluster health checks – Before a training job starts, SageMaker runs health checks and verifies communication on the provisioned instances. It then replaces any faulty instances, if necessary, to make sure the training script starts running on a healthy cluster of instances. Health checks are currently enabled for the TRN1 instance family as well as P* and G* GPU-based instance types.

- Automatic checkpointing – Checkpoints from a local path (/opt/ml/checkpoints by default) are automatically copied to an Amazon Simple Storage Service (Amazon S3) location specified by the user. When training is restarted, SageMaker automatically copies the previously saved checkpoints from the S3 location back to the local checkpoint directory to make sure the training script can load and resume the last saved checkpoint.

- Monitoring and tracking training – In the case of a node failure, it’s important to have visibility into where the failure occurs. Using PyTorch Neuron gives data scientists the ability to track training progress in TensorBoard. This allows you to monitor the training loss and determine when the model has converged and the training job should be stopped.

- Built-in retries and cluster repair – You can configure SageMaker to automatically retry training jobs that fail with a SageMaker internal server error (ISE). As part of retrying a job, SageMaker replaces any instances that encountered unrecoverable errors with fresh instances, reboots all healthy instances, and starts the job again. This results in faster restarts and workload completion. Cluster update is currently enabled for the TRN1 instance family as well as P and G GPU-based instance types. Practitioners can add their own application-level retry mechanism around the client code that submits the job, to handle other types of launch errors, such as exceeding your account quota.
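The client-side retry mechanism mentioned in the last bullet can be sketched as a small wrapper around the code that submits the job. This is a minimal sketch and not part of the SageMaker SDK: `submit_with_retries`, `TransientLaunchError`, and the backoff values are hypothetical names and numbers chosen for illustration, and `submit_job` stands in for whatever client call you use to launch the job.

```python
import time


class TransientLaunchError(Exception):
    """Hypothetical error type for launch failures worth retrying (e.g. quota limits)."""


def submit_with_retries(submit_job, max_attempts=3, base_delay_s=1.0, sleep=time.sleep):
    """Call submit_job() until it succeeds or max_attempts is reached.

    Waits base_delay_s * 2**(attempt - 1) seconds between attempts.
    """
    for attempt in range(1, max_attempts + 1):
        try:
            return submit_job()
        except TransientLaunchError:
            if attempt == max_attempts:
                raise  # give up and surface the last error
            sleep(base_delay_s * 2 ** (attempt - 1))


# Usage with a stand-in job that fails twice before succeeding.
attempts = []


def flaky_submit():
    attempts.append(1)
    if len(attempts) < 3:
        raise TransientLaunchError("quota exceeded")
    return "job-submitted"


delays = []  # record the requested delays instead of actually sleeping
result = submit_with_retries(flaky_submit, max_attempts=5, sleep=delays.append)
```

In a real client you would map the specific exceptions raised by your submission code to the retryable error type and leave the default `time.sleep` in place.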

For customers working with large clusters of hundreds of instances for a training job, the resiliency and recovery features of SageMaker Training can reduce total time for a model to converge by up to 20% via fewer failures and faster recovery. This also reduces the need for engineering teams to monitor and react to failures at all hours. Although SageMaker training jobs are suitable for general-purpose training use cases with customizable configurations and integration with the broader AWS ecosystem, Amazon SageMaker HyperPod is specifically optimized for efficient and resilient training of foundation models at scale. For more information on SageMaker HyperPod use cases, refer to the SageMaker HyperPod developer guide.

In this post, we use the Neuron Distributed library to continuously pre-train a Llama 2 model using tensor and pipeline parallelism using SageMaker training jobs. To learn more about the resiliency and recovery features of SageMaker Training, refer to Training large language models on Amazon SageMaker: Best practices.

Solution overview

In this solution, we use an ml.t3.medium instance type on a SageMaker Jupyter notebook to process the provided cells. We continuously pre-train our llama2-70b model using the trn1.32xlarge Trainium instance. First, let’s familiarize ourselves with the techniques that the Neuron distributed training library uses to distribute the training job created in our solution.

The techniques used to convert the pre-trained weights in the convert_pretrained_weights.ipynb notebook into a .pt (PyTorch) weights file are called pipeline parallelism and tensor parallelism:

- Pipeline parallelism splits the layers of a deep neural network into sequential stages and splits each batch into microbatches, so that each stage worker processes one microbatch at a time.

- Tensor parallelism splits the tensors of a neural network across multiple devices. This technique makes it possible to train models with large tensors that can’t fit into the memory of a single device.
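The two techniques can be illustrated with a toy example in plain Python. This is a conceptual sketch only: the helper names are hypothetical, the "devices" and "stages" are ordinary function calls run one after another, and it does not use the Neuron Distributed API.

```python
def matmul(x, w):
    """Multiply a vector x (length n) by a matrix w (n rows, m columns)."""
    return [sum(x[i] * w[i][j] for i in range(len(x))) for j in range(len(w[0]))]


def split_columns(w, num_shards):
    """Tensor parallelism: give each device a contiguous slice of w's columns."""
    cols = len(w[0])
    assert cols % num_shards == 0, "columns must divide evenly across shards"
    width = cols // num_shards
    return [[row[s * width:(s + 1) * width] for row in w] for s in range(num_shards)]


def tensor_parallel_matmul(x, w, num_shards):
    """Each shard computes its slice of the output; the slices are concatenated."""
    out = []
    for shard in split_columns(w, num_shards):
        out.extend(matmul(x, shard))
    return out


def pipeline_forward(batch, stages, num_microbatches):
    """Pipeline parallelism: split the batch into microbatches and pass each
    one through the stages in order (run sequentially here for clarity)."""
    assert len(batch) % num_microbatches == 0
    size = len(batch) // num_microbatches
    microbatches = [batch[i:i + size] for i in range(0, len(batch), size)]
    outputs = []
    for mb in microbatches:
        for stage in stages:
            mb = [stage(sample) for sample in mb]
        outputs.extend(mb)
    return outputs


x = [1.0, 2.0]
w = [[1.0, 2.0, 3.0, 4.0],
     [5.0, 6.0, 7.0, 8.0]]
sharded = tensor_parallel_matmul(x, w, num_shards=2)
full = matmul(x, w)  # the sharded result matches the unsharded one

stages = [lambda v: v + 1, lambda v: v * 2]  # two toy "layer groups"
piped = pipeline_forward([0, 1, 2, 3], stages, num_microbatches=2)
```

In a real pipeline the stages run on different devices and work on different microbatches at the same time, which is where the speedup comes from; the sequential loop here only shows how the work is divided.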

After we convert our pre-trained weights with the preceding techniques in our first notebook, we follow two separate notebooks in the same sagemaker-trainium-examples folder. The second notebook is Training_llama2_70b.ipynb, which walks through the continuous pre-training process, starting from the checkpoint of converted model weights saved in the first notebook. When this step is complete, we can run the Convert_Nxd_to_hf.ipynb notebook, which takes the weights trained with the NeuronX library and converts them into a Hugging Face compatible format to serve inference.

Prerequisites

You need to complete some prerequisites before you can run the first notebook.

First, make sure you have created a Hugging Face access token so you can download the Hugging Face tokenizer to be used later. After you have the access token, you need to make a few quota increase requests for SageMaker. You need to request a minimum of 8 Trn1 instances ranging to a maximum of 32 Trn1 instances (depending on time-to-train and cost-to-train trade-offs for your use case).

On the Service Quotas console, request the following SageMaker quotas:

- Trainium instances (ml.trn1.32xlarge) for training job usage: 8–32

- ml.trn1.32xlarge for training warm pool usage: 8–32

- Maximum number of instances per training job: 8–32

It may take up to 24 hours for the quota increase to get approved. However, after submitting the quota increase, you can go to the sagemaker-trainium-examples GitHub repo and locate the convert_pretrained_weights.ipynb file. This is the file that you use to begin the continual pre-training process.

Now that you’re ready to begin the process to continuously pre-train the llama2-70b model, you can convert the pre-trained weights in the next section to prep the model and create the checkpoint.

Getting started

Complete the following steps:

- Install all the required packages and libraries: SageMaker, Boto3, transformers, and datasets.

These packages make sure that you can set up your environment to access your pre-trained Llama 2 model, download your tokenizer, and get your pre-training dataset.

!pip install -U sagemaker boto3 --quiet

!pip install transformers datasets[s3] --quiet

- After the packages are installed, retrieve your Hugging Face access token, and download and define your tokenizer.

The tokenizer meta-llama/Llama-2-70b-hf is a specialized tokenizer that breaks down text into smaller units for natural language processing. This tokenized data will later be uploaded into Amazon S3 to allow for running your training job.

from huggingface_hub.hf_api import HfFolder

# Update the access token to download the tokenizer

access_token = "hf_insert-key-here"

HfFolder.save_token(access_token)

from transformers import AutoTokenizer

tokenizer_name = "meta-llama/Llama-2-70b-hf"

tokenizer = AutoTokenizer.from_pretrained(tokenizer_name)

block_size = 4096

- After running the preceding cells, download the wikicorpus dataset using the Hugging Face datasets library.

- Tokenize the dataset with the llama-2 tokenizer that you just initialized.

By tokenizing the data, you prepare to continue pre-training your Llama 2 model on the trilingual (Catalan, English, Spanish) text in the wikicorpus dataset, so that the model can learn the patterns and relationships in that data.
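The tokenization cell itself is not shown in this post. A common way to prepare tokenized text for causal language model pre-training is to concatenate the token IDs and cut them into fixed-length blocks of block_size tokens. The following sketch illustrates that idea in plain Python; `group_into_blocks` is a hypothetical helper, and the notebook may implement this step differently.

```python
def group_into_blocks(token_id_lists, block_size):
    """Concatenate tokenized documents and split them into equal-length blocks.

    Trailing tokens that do not fill a whole block are dropped.
    """
    flat = [tok for doc in token_id_lists for tok in doc]
    usable = (len(flat) // block_size) * block_size
    return [flat[i:i + block_size] for i in range(0, usable, block_size)]


# Toy example with block_size=4 instead of 4096.
docs = [[1, 2, 3], [4, 5, 6, 7, 8], [9, 10]]
blocks = group_into_blocks(docs, block_size=4)
```

Every block then has exactly block_size tokens, which matches the fixed input sequence length used by the training job.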

After the data is tokenized, run the following cell to store the training dataset in Amazon S3:

# save training dataset to s3

training_input_path = f's3://{sess.default_bucket()}/neuronx_distributed/data'

print(f"uploading training dataset to: {training_input_path}")

train_dataset.save_to_disk(training_input_path)

print(f"uploaded data to: {training_input_path}")

The cell above makes sure that you define the training_input_path and have uploaded the data to your S3 bucket. You’re now ready to begin the training job process.

Run the training job

For the training job, we use trn1.32xlarge instances, each of which has 32 Neuron cores. We use tensor parallelism and pipeline parallelism, which allow you to shard the model across Neuron cores for training.

The following code is the configuration for pretraining llama2-70b with trn1:

#Number of processes per node

PROCESSES_PER_NODE = 32

# Number of instances within the cluster, change this if you want to tweak the instance_count parameter

WORLD_SIZE = 32

# Global batch size

GBS = 512

# Input sequence length

SEQ_LEN = 4096

# Pipeline parallel degree

PP_DEGREE = 8

# Tensor parallel degree

TP_DEGREE = 8

# Data parallel size

DP = ((PROCESSES_PER_NODE * WORLD_SIZE / TP_DEGREE / PP_DEGREE))

# Batch size per model replica

BS = ((GBS / DP))

# Number of microbatches for pipeline execution. Setting same as BS so each microbatch contains a single data sample

NUM_MICROBATCHES = BS

# Number of total steps for which to train model. This number should be adjusted to the step number when the loss function is approaching convergence.

MAX_STEPS = 1500

# Timeout in seconds for training. After this amount of time Amazon SageMaker terminates the job regardless of its current status.

MAX_RUN = 2 * (24 * 60 * 60)
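With the values above, the cluster has 32 × 32 = 1,024 worker processes and each model replica spans 8 × 8 = 64 of them, so the data parallel size is 16 and the batch size per replica is 512 / 16 = 32. The following sketch repeats that arithmetic with integer division and checks that the values divide evenly; `derive_batch_layout` is a hypothetical helper, not part of the notebook. The configuration above uses `/`, which produces floats, which is why the values are later wrapped in int().

```python
def derive_batch_layout(processes_per_node, world_size, tp_degree, pp_degree, gbs):
    """Return (data-parallel size, batch size per replica) as integers.

    Raises ValueError if the values do not divide evenly.
    """
    total_workers = processes_per_node * world_size
    model_parallel = tp_degree * pp_degree
    if total_workers % model_parallel:
        raise ValueError("workers must be a multiple of TP_DEGREE * PP_DEGREE")
    dp = total_workers // model_parallel
    if gbs % dp:
        raise ValueError("GBS must be a multiple of the data-parallel size")
    return dp, gbs // dp


dp, bs = derive_batch_layout(32, 32, tp_degree=8, pp_degree=8, gbs=512)
print(dp, bs)  # 16 32
```

If you change WORLD_SIZE to fit a smaller quota, a check like this shows quickly whether the global batch size still divides evenly across replicas.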

Now you can define the hyperparameters for training. Note that adjusting these parameters based on hardware capabilities, dataset characteristics, and convergence requirements can significantly impact training performance and efficiency.

The following is the code for the hyperparameters:

hyperparameters = {}

hyperparameters["train_batch_size"] = int(BS)

hyperparameters["use_meta_device_init"] = 1

hyperparameters["training_dir"] = "/opt/ml/input/data/train" # path where sagemaker uploads the training data

hyperparameters["training_config"] = "config.json" # config file containing llama 70b configuration , change this for tweaking the number of parameters.

hyperparameters["max_steps"] = MAX_STEPS

hyperparameters["seq_len"] = SEQ_LEN

hyperparameters["pipeline_parallel_size"] = PP_DEGREE

hyperparameters["tensor_parallel_size"] = TP_DEGREE

hyperparameters["num_microbatches"] = int(NUM_MICROBATCHES)

hyperparameters["lr"] = 0.00015

hyperparameters["min_lr"] = 1e-05

hyperparameters["beta1"] = 0.9

hyperparameters["beta2"] = 0.95

hyperparameters["weight_decay"] = 0.1

hyperparameters["warmup_steps"] = 2000

hyperparameters["constant_steps"] = 0

hyperparameters["use_zero1_optimizer"] = 1

hyperparameters["tb_dir"] = "/opt/ml/checkpoints/tensorboard" # The tensorboard logs will be stored here and eventually pushed to S3.
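SageMaker passes the entries of the hyperparameters dictionary to the entry point script as command-line arguments. The following is a minimal sketch of how a training script could read a few of them with argparse. It is an illustration only: the actual argument handling in run_llama_nxd.py is not shown in this post, and `parse_args` and `to_argv` are hypothetical helpers.

```python
import argparse


def parse_args(argv):
    """Parse a subset of the hyperparameters; defaults here are illustrative."""
    parser = argparse.ArgumentParser()
    parser.add_argument("--train_batch_size", type=int, default=1)
    parser.add_argument("--seq_len", type=int, default=4096)
    parser.add_argument("--tensor_parallel_size", type=int, default=8)
    parser.add_argument("--pipeline_parallel_size", type=int, default=8)
    parser.add_argument("--lr", type=float, default=0.00015)
    parser.add_argument("--training_dir", type=str, default="/opt/ml/input/data/train")
    # parse_known_args ignores hyperparameters this sketch does not declare
    args, _unknown = parser.parse_known_args(argv)
    return args


def to_argv(hyperparameters):
    """Flatten a hyperparameter dict into ['--key', 'value', ...]."""
    argv = []
    for key, value in hyperparameters.items():
        argv += [f"--{key}", str(value)]
    return argv


args = parse_args(to_argv({"train_batch_size": 32, "lr": 0.00015, "max_steps": 1500}))
```

Because every value arrives as a string on the command line, the script is responsible for converting each one back to the intended type, as the `type=` arguments do here.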

Now you specify the Docker image that will be used to train the model on Trainium:

docker_image = f"763104351884.dkr.ecr.{region_name}.amazonaws.com/pytorch-training-neuronx:1.13.1-neuronx-py310-sdk2.18.0-ubuntu20.04"

The image we defined is designed for PyTorch training with Neuron optimizations. This image is configured to work with PyTorch, using Neuron SDK version 2.18.0 for enhanced performance and efficiency on Trn1 instances equipped with AWS Trainium chips. This image is also compatible with Python 3.10, indicated by the py310, and is based on Ubuntu 20.04.
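The way the tag encodes these components can be made explicit with a short parsing sketch. `parse_neuron_image_tag` is a hypothetical helper written for this tag layout only, and us-west-2 is used as an example value for region_name.

```python
def parse_neuron_image_tag(image_uri):
    """Split '<repo>:<framework>-<device>-py<ver>-sdk<ver>-<os>' into its parts."""
    repository, tag = image_uri.rsplit(":", 1)
    framework_version, device, python, sdk, os_name = tag.split("-")
    return {
        "repository": repository,
        "framework_version": framework_version,
        "device": device,
        "python": python,
        "sdk": sdk[len("sdk"):],  # drop the literal 'sdk' prefix
        "os": os_name,
    }


region_name = "us-west-2"  # example Region; use the Region of your session
docker_image = (
    f"763104351884.dkr.ecr.{region_name}.amazonaws.com/"
    "pytorch-training-neuronx:1.13.1-neuronx-py310-sdk2.18.0-ubuntu20.04"
)
info = parse_neuron_image_tag(docker_image)
```

Here `info["framework_version"]` is the PyTorch version, `info["sdk"]` is the Neuron SDK version, and `info["python"]` and `info["os"]` correspond to Python 3.10 and Ubuntu 20.04.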

Prior to starting your training job, you need to configure it by defining all necessary variables. You do so by defining the training job name, checkpoint directory, and cache directory:

import time

# Define Training Job Name

job_name = f'llama-neuron-{time.strftime("%Y-%m-%d-%H-%M-%S", time.localtime())}'

# Define checkpoint directory that contains the weights and other relevant data for the trained model

checkpoint_s3_uri = "s3://" + sagemaker_session_bucket + "/neuron_llama_experiment"

checkpoint_dir = '/opt/ml/checkpoints'

# Define neuron cache directory

cache_dir = "/opt/ml/checkpoints/neuron_cache"

The parameters enable you to do the following:

- The training job name allows you to identify and track individual training jobs based on timestamps

- The checkpoint directory specifies the S3 URI where the checkpoint data, weights, and other information are stored for the trained model

- The cache directory helps optimize the training process by storing compiled artifacts under the checkpoint directory so that they can be reused, reducing redundant work and improving efficiency

- The environment variables make sure that the training job is optimally configured and settings are tailored to enable efficient and effective training using features like RDMA, optimized memory allocation, fused operations, and Neuron-specific device optimizations
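The env dictionary that is passed to the estimator is not shown in this post. The following sketch lists the kind of variables the last bullet describes. The variable names come from libfabric, glibc, and the Neuron SDK, but the selection and the values are assumptions made for illustration; verify them against the notebook and the Neuron documentation before use.

```python
# Illustrative environment for a Trainium training job (values are assumptions).
example_env = {
    "FI_PROVIDER": "efa",                # use the EFA libfabric provider
    "FI_EFA_USE_DEVICE_RDMA": "1",       # enable RDMA over EFA
    "MALLOC_ARENA_MAX": "64",            # cap glibc malloc arenas to limit host memory growth
    "NEURON_FUSE_SOFTMAX": "1",          # enable the fused softmax operation
    "NEURON_RT_ASYNC_EXEC_MAX_INFLIGHT_REQUESTS": "3",  # Neuron runtime asynchronous execution depth
}

# The environment of a training job is a string-to-string map, so numbers are written as strings.
all_strings = all(
    isinstance(k, str) and isinstance(v, str) for k, v in example_env.items()
)
```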

After you have defined your training job and configured all directories and environment variables for an optimal training pipeline, you now set up your PyTorch estimator to begin the training job on SageMaker. A SageMaker estimator is a high-level interface that handles the end-to-end SageMaker training and deployment tasks.

The entry_point is specified as the Python script run_llama_nxd.py. We use the instance_type ml.trn1.32xlarge, the instance count is 32 (which was previously defined as a global variable in the configuration code), and input_mode is set to FastFile. Fast File mode in SageMaker streams data from Amazon S3 on demand, which optimizes data loading performance by fetching data as needed, reducing overall resource consumption. For more information on input, refer to Access Training Data.

from sagemaker.pytorch import PyTorch

# Handle end-to-end Amazon SageMaker training and deployment tasks.

pt_estimator = PyTorch(

entry_point='run_llama_nxd.py',

source_dir='./scripts',

instance_type="ml.trn1.32xlarge",

image_uri=docker_image,

instance_count=WORLD_SIZE,

max_run=MAX_RUN,

hyperparameters=hyperparameters,

role=role,

base_job_name=job_name,

environment=env,

input_mode="FastFile",

disable_output_compression=True,

keep_alive_period_in_seconds=600, # this is added to enable warm pool capability

checkpoint_s3_uri=checkpoint_s3_uri,

checkpoint_local_path=checkpoint_dir,

distribution={"torch_distributed": {"enabled": True}} # enable torchrun

)

Finally, you can start the training job with the SageMaker fit() method, which trains the model based on the defined hyperparameters:

# Start training job

pt_estimator.fit({"train": training_input_path})

You have successfully started the process to continuously pre-train a llama2-70b model by converting pre-trained weights with tokenized data using SageMaker training on Trainium instances.

Continuous pre-training

After following the prerequisites, completing the provided notebook, and converting the pre-trained weights as a checkpoint, you can now begin the continual pre-training process, using the checkpoint as a point of reference to pre-train the llama2-70b model. The techniques used to convert the pre-trained weights in the convert_pretrained_weights.ipynb notebook into a .pt (PyTorch) weights file are called pipeline parallelism and tensor parallelism.

To begin the continuous pre-training process, follow the Training_llama2_70b.ipynb file in the sagemaker-trainium-examples repo.

Given the large size of the llama2-70b model, you need to convert the pre-trained weights into a more efficient and usable format (.pt). You can do so by defining the hyperparameters in your configuration to store converted weights and checkpoints. The following are the hyperparameters:

# Use the sagemaker s3 checkpoints mechanism since we need read/write access to the paths.

hyperparameters["output_dir"] = "/opt/ml/checkpoints/llama70b_weights"

hyperparameters["checkpoint-dir"] = '/opt/ml/checkpoints'

hyperparameters["n_layers"] = 80

hyperparameters["convert_from_full_model"] = ""

If you look at the hyperparameters, the output_dir is used as a reference for pre-training. If you are at this cell, you should have already followed the Training_llama2_70b.ipynb notebook and gone through the process of setting up your SageMaker client and Docker image, and preparing the pre-trained weights for pre-training. You’re now ready to perform the continuous pre-training process on the llama2-70b model.

We use the following parameters to take the pre-trained weights stored in output_dir in the convert_pretrained_weights.ipynb file to be reused continuously for pre-training:

hyperparameters["checkpoint_dir"] = "/opt/ml/checkpoints/checkpts"

hyperparameters["checkpoint_freq"] = 10

hyperparameters["num_kept_checkpoint"] = 1

hyperparameters["use_zero1_optimizer"] = 1

hyperparameters["save_load_xser"] = 0

hyperparameters["pretrained_weight_dir"] = "/opt/ml/checkpoints/llama70b_weights"

After these hyperparameters are implemented, you can run the rest of the notebook cells to complete the continuous pre-training process. After the SageMaker estimator has completed the training job, you can locate the new checkpoint in the S3 checkpoint directory containing the weights. You can now locate the convert_Nxd_to_hf.ipynb file to get the checkpoint ready for inferencing.
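To see how checkpoint_freq and num_kept_checkpoint interact, the following sketch simulates which checkpoints remain in the checkpoint directory at the end of a run. It assumes that checkpoint_freq is measured in training steps, that num_kept_checkpoint (at least 1) is the number of most recent checkpoints retained, and that checkpoints are named stepN; `checkpoints_on_disk` is a hypothetical helper, and the training script may behave differently.

```python
def checkpoints_on_disk(max_steps, checkpoint_freq, num_kept_checkpoint):
    """Simulate saving every checkpoint_freq steps and keeping only the newest ones."""
    kept = []
    for step in range(1, max_steps + 1):
        if step % checkpoint_freq == 0:
            kept.append(f"step{step}")
            kept = kept[-num_kept_checkpoint:]  # drop the oldest checkpoints
    return kept


print(checkpoints_on_disk(max_steps=35, checkpoint_freq=10, num_kept_checkpoint=1))  # ['step30']
```

Under these assumptions, keeping a single checkpoint minimizes storage in the S3 checkpoint location, at the cost of having only the latest state to resume from.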

Convert the Neuron Distributed checkpoint for inferencing

Checkpoints play a vital role in the context of distributed training with the NeuronX library because the library has checkpoint compatibility with Hugging Face Transformers. You can get the training job output ready for inferencing by taking the output that is saved as a NeuronX distributed checkpoint and converting the weights into .pt weights files.

To convert the checkpoints to Hugging Face format using NeuronX, you first need to save the S3 nxd_checkpoint_path directory:

# S3 checkpoint directory that contains the weights and other relevant data from the continuous pre-trained model

checkpoint_s3_uri = "<pre-training-checkpoint-s3-uri>"

nxd_checkpoint_path = f"s3://{checkpoint_s3_uri}/neuronx_llama_experiment/checkpts/step10/model/"

# Checkpoint is saved as part of Notebook 2
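Whether the placeholder above holds a bucket name or a full S3 URI determines whether the resulting path starts with a single s3:// prefix. The following sketch shows one way to build the path so that it is well formed in both cases. `join_s3_uri` is a hypothetical helper and my-bucket is a placeholder bucket name.

```python
def join_s3_uri(base, *parts):
    """Join path segments onto an S3 location, adding 's3://' only if it is missing."""
    base = base if base.startswith("s3://") else f"s3://{base}"
    segments = [base.rstrip("/")] + [p.strip("/") for p in parts]
    return "/".join(segments) + "/"


# Both a bare bucket name and a full URI produce the same path.
a = join_s3_uri("my-bucket", "neuronx_llama_experiment/checkpts", "step10", "model")
b = join_s3_uri("s3://my-bucket/", "neuronx_llama_experiment/checkpts", "step10", "model")
```

Make sure the prefix and step directory match the location where your training job actually wrote its checkpoints.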

After you save the checkpoint in the nxd_checkpoint_path directory, you can save your hyperparameters and configure your SageMaker estimator, which makes sure the pre-training process can begin. You can now run the fit() function within the estimator to convert the pre-trained weights into a checkpoint for inferencing with the following cell:

# Start SageMaker job

estimator.fit({"checkpoint": nxd_checkpoint_path})

Summary

You have successfully performed continuous pre-training on a llama2-70b model by converting your pre-trained weights and checkpoint to be used to serve inference using the Neuron SDK and Trainium instances. By following the solution in this post, you should now know how to configure a pipeline for continuous pre-training of an LLM using SageMaker and Trainium accelerator chips.

For more information on how to use Trainium for your workloads, refer to the Neuron SDK documentation or reach out directly to the team. We value customer feedback and are always looking to engage with ML practitioners and builders. Feel free to leave comments or questions in the comments section.

About the authors

Marco Punio is a Solutions Architect focused on generative AI strategy, applied AI solutions and conducting research to help customers hyperscale on AWS. He is a qualified technologist with a passion for machine learning, artificial intelligence, and mergers & acquisitions. Marco is based in Seattle, WA and enjoys writing, reading, exercising, and building applications in his free time.

Armando Diaz is a Solutions Architect at AWS. He focuses on generative AI, AI/ML, and Data Analytics. At AWS, Armando helps customers integrating cutting-edge generative AI capabilities into their systems, fostering innovation and competitive advantage. When he’s not at work, he enjoys spending time with his wife and family, hiking, and traveling the world.

Arun Kumar Lokanatha is a Senior ML Solutions Architect with the Amazon SageMaker Service team. He focuses on helping customers build, train, and migrate ML production workloads to SageMaker at scale. He specializes in deep learning, especially in the area of NLP and CV. Outside of work, he enjoys running and hiking.

Robert Van Dusen is a Senior Product Manager with Amazon SageMaker. He leads frameworks, compilers, and optimization techniques for deep learning training.

Niithiyn Vijeaswaran is a Solutions Architect at AWS. His area of focus is generative AI and AWS AI Accelerators. He holds a Bachelor’s degree in Computer Science and Bioinformatics. Niithiyn works closely with the Generative AI GTM team to enable AWS customers on multiple fronts and accelerate their adoption of generative AI. He’s an avid fan of the Dallas Mavericks and enjoys collecting sneakers.

Rohit Talluri is a Generative AI GTM Specialist (Tech BD) at Amazon Web Services (AWS). He is partnering with top generative AI model builders, strategic customers, key AI/ML partners, and AWS Service Teams to enable the next generation of artificial intelligence, machine learning, and accelerated computing on AWS. He was previously an Enterprise Solutions Architect, and the Global Solutions Lead for AWS Mergers & Acquisitions Advisory.

Sebastian Bustillo is a Solutions Architect at AWS. He focuses on AI/ML technologies with a profound passion for generative AI and compute accelerators. At AWS, he helps customers unlock business value through generative AI. When he’s not at work, he enjoys brewing a perfect cup of specialty coffee and exploring the world with his wife.
