[TEASER ENDS]

GRETCHEN HUIZINGA: You’re listening to Collaborators, a Microsoft Research Podcast showcasing the range of expertise that goes into transforming mind-blowing ideas into world-changing technologies. I’m Dr. Gretchen Huizinga.

[MUSIC FADES]

On today’s episode, I’m talking to Dr. Brendan Lucier, a senior principal researcher in the economics and computation group at Microsoft Research, and Dr. Mert Demirer, an assistant professor of applied economics at the MIT Sloan School of Management. Brendan and Mert are exploring the economic impact of job automation and generative AI as part of Microsoft’s AI, Cognition, and the Economy, or AICE, research initiative. And since they’re part of the AICE Accelerator Pilot collaborations, let’s get to know our collaborators. Brendan, let’s start with you and your “business address,” if you will. Your research lives at the intersection of microeconomic theory and theoretical computer science. So tell us what people—shall we call them theorists?—who live there do and why they do it!

BRENDAN LUCIER: Thank you so much for having me. Yeah, so this is a very interdisciplinary area of research that really gets at, sort of, this intersection of computation and economics. And what it does is it combines the ideas from algorithm design and computational complexity that we think of when we’re building algorithmic systems with, sort of, the microeconomic theory of how humans will use those systems and how individuals make decisions, right. How their goals inform their actions and how they interact with each other. And where this really comes into play is in the digital economy and platforms that we, sort of, see online that we work with on an everyday basis, right. So we’re increasingly interacting with algorithms as part of our day-to-day life. So we use them to search for information; we use them to find rides and find jobs and have recommendations on what products we purchase. And as we do these things online, you know, some of the algorithms that go into this, like, help them grow into these huge-scale, you know, internet-sized global platforms. But fundamentally, these are still markets, right. So even though there’s a lot of algorithms and a lot of computational ideas that go into these, really what they’re doing is connecting human users to the goods and the services and to each other over the course of what they need to do in their day-to-day life, right. And so this is where this microeconomic view really comes into play. So what we know is that when people are interacting with these platforms to get at what they want, they’re going to be strategic about this, right. So people are always going to use tools in the ways that, sort of, work best for them, right, even if that’s not what the designer has in mind. And so when we’re designing algorithms, in a big way, we’re not necessarily designing solutions; we’re designing the rules of a game that people are going to end up playing with the platform or with each other.

HUIZINGA: Wow.

LUCIER: And so a big part of, sort of, what we do in this area is that if we’re trying to understand the impact of, like, a technology change or a new platform that we’re going to design, we need to understand what it is that the users want and how they’re going to respond to that change when they interact with it.

HUIZINGA: Right.

LUCIER: When we think about, sort of, microeconomic theory, a lot of this is, you know, ideas from game theory, ideas about how it is that humans make decisions, either on their own or in interaction with each other, right.

HUIZINGA: Yeah.

LUCIER: So when I’m in the marketplace, maybe I’m thinking not only about what’s best for me, but, sort of, I’m anticipating maybe what other people are going to be doing, as well. And I really need to be thinking about how the algorithms that make up the fundamentals of those marketplaces are going to influence the way people are thinking about not only what they’re doing but what other people are doing.

HUIZINGA: Yeah, this is so fascinating because even as you started to list the things that we use algorithms for—and we don’t even think about it—we look for a ride, a job, a date. All of these things that are part of our lives have become algorithmic!

LUCIER: Absolutely. And it’s fascinating that, you know, someone might launch a new algorithm, a new advance to these platforms, that looks on paper like it’s going to be a great improvement, assuming that people keep behaving the way they were behaving before. But of course, people will naturally respond, and so there’s always this moving target of trying to anticipate what it is that people actually are really trying to do and how they will adapt.

HUIZINGA: We’re going to get into that so deep in a few minutes. But first, Mert, you are an assistant professor of applied economics at MIT’s famous Sloan School of Management, and your homepage tells us your research interests include industrial organization and econometrics. So unpack those interests for our listeners and tell us what you spend most of your time doing at the Sloan School.

MERT DEMIRER: Thank you so much for having me. My name is Mert Demirer. I am an assistant professor at MIT Sloan, and I spend most of my time doing research and teaching MBAs. I’m an economist, and I do research in a field called industrial organization. The overarching theme of my research is firms and firm productivity. So in my research, I ask questions like, what makes firms more productive? What are the determinants of firm growth, or how do industries evolve over time? What I typically do is collect data from firms, use an econometric model or sometimes a model of the industry or the firm, and then answer questions like these. More recently, my research has focused on new emerging technologies: how firms use these emerging technologies and what the productivity effects of these new technologies are. More specifically, I did research on cloud computing, which is a really important technology …

HUIZINGA: Yeah …

DEMIRER: … transforming firms and industries. And more recently, my research focuses on AI, both, like, the adoption of AI and the productivity impact of AI.

HUIZINGA: Right, right. You know, even as you say it, I’m thinking, what’s available data? What’s good data? And how much data do you need to make an informed analysis or decision?

DEMIRER: So finding good data is a challenge in this research. In general, there are official data sources like the census or the Census of Manufactures, which have been commonly used in productivity research. That data is very comprehensive and very useful. But of course, if you want to get into the details of new technologies and granular firm analysis, that’s not enough. So what I have been trying to do more recently is to find industry partners that have lots of good data on other firms.

HUIZINGA: Gotcha.

DEMIRER: So these are typically the main data sources I use.

HUIZINGA: You know, this episode is part of a little series within a series we’re doing on AI, Cognition, and the Economy, and we started out with Abi Sellen from the Cambridge, UK, lab, who gave us an overview of the big ideas behind the initiative. And you’re going to give us some discussion today on AI and, specifically, the economy. But before we get into your current collaboration, let’s take a minute to “geolocate” ourselves in the world of economics and how your work fits into the larger AICE research framework. So, Brendan, why don’t you situate us with the “micro” view and its importance to this initiative, and then Mert can zoom out and talk about the “macro” view and why we need him, too.

LUCIER: Yeah, sure. Yeah, I just, I just love this AICE program and the way that it puts all this emphasis on how human users are interacting with AI systems and tools, and this is really, like, a focal point of a lot of this, sort of, micro view, also. So, like, from this econ starting point of microeconomics, one place I think of is imagining how users would want to integrate AI tools into their day-to-day, right—both into their workflow as part of their jobs and into, sort of, what they’re doing in their personal lives. And when we think about how new tools like AI tech, sort of, come into those workflows, an even earlier question is, how is it that users are organizing what they do into individual tasks and, like, why are they doing them that way in the first place, right? So when we want to think about, you know, how it is that AI might come in and help them with pain points that they’re dealing with, we, sort of, need to understand, like, what it is they’re trying to accomplish and what are the goals that they have in mind. And this is super important when we’re trying to build effective tools because we need to understand how they’ll change their behavior or adjust to incorporate this new technology, and that’s the view we’re trying to zoom into.

HUIZINGA: Yeah. Mert, tell us a little bit more about the macro view and why that’s important in this initiative, as well.

DEMIRER: The macro view is very complementary to the micro view. It takes a more holistic approach and analyzes the economy as a whole rather than focusing on individual components. So instead of focusing on one component and analyzing the productivity effect of AI on a particular, like, occupation or sector, you analyze the whole economy and you model the interactions between these components. And this holistic view is really essential if you want to understand AI because it’s going to allow you to make long-term projections, and it’s going to help you understand how AI is going to affect the entire economy. To make things more concrete—and going back to what Brendan said—suppose you analyze a particular task, or you figure out how AI solves the pain point and increases productivity by, like, x amount. The impact on that occupation or, let’s say, the industry won’t be limited to that industry, right? Wages are going to change in this industry, but it’s also going to affect other industries, potentially moving labor from one industry that is affected significantly by AI to other industries, and maybe new firms are going to emerge, some firms are going to exit, and so on. So this holistic view essentially models all of these components in one system and also tries to understand the interactions between them. And as I said, this is really helpful because, first of all, it helps you make long-term projections about how AI is going to impact the economy. And second, it lets you go beyond the first-order impact. You can look at what’s going on and measure the first-order impact, but if you want to get at the second- or third-order impact, then you need a framework or a bigger model. And those second- or third-order effects are typically the unintended or hidden effects.

HUIZINGA: Right.

DEMIRER: And that’s why this, like, more holistic approach is useful, particularly for AI.

HUIZINGA: Yeah, I got to just say right now, I feel like I wanted to sit down with you guys for, like, a couple hours—not with a microphone—but just talking because this is so fascinating. And Abi Sellen mentioned this term “line of sight” into future projections, which was sort of an AICE overview goal. Interestingly, Mert, when you mentioned the term productivity, is that the metric? Is productivity the metric that we’re looking to in terms of this economic impact? It seems to be a buzzword, that we need to be “more productive.” Is that, kind of, a framework for your thinking?

DEMIRER: I think it is an important component. It’s an important component of how we should analyze and think about AI because, again, when you zoom into the micro view of how AI is going to affect my day-to-day work, it is very natural to think about it in terms of productivity—oh, I saved, like, half an hour yesterday by using AI. And, OK, that’s the productivity, right. That’s very visible. It’s something you can see, something you can easily measure. But that’s only one component. So you need to understand how that productivity effect is going to change other things.

HUIZINGA: Right!

DEMIRER: Like how I’m going to spend the additional time, whether I’m going to spend that time on leisure or do something else.

HUIZINGA: Right.

DEMIRER: In that sense, I think productivity is an important component, and maybe it is the starting point for analyzing these technologies. But we definitely need to go beyond the productivity effect and understand how these potential productivity effects are going to affect the other parts of the economy and how the agents—like firms and people—are going to react to that potential productivity increase.

HUIZINGA: Yeah, yeah, in a couple questions I’ll ask Brendan specifically about that. But in the meantime, let’s talk about how you two got together on this project. I’m always interested in that story. This question is also known as “how I met your mother.” And the meetup stories are often quite fun and sometimes surprising. In fact, last week, one person told his side of the story, and the other guy said, hey, I didn’t even know that! [LAUGHS] So, Brendan, tell us your side of who called who and how it went down, and then Mert can add his perspective.

LUCIER: Great. So, yeah, so I’ve known Mert for quite some time! Mert joined our lab as a—the Microsoft Research New England lab—as an intern some years ago and then as a postdoc in, sort of, 2020, 2021. And so over that time, we got to know each other quite well, and I knew a lot about the macroeconomic work that Mert was doing. And so then, fast-forward to more recently, you know, this particular project initially started as discussions between myself and my colleague Nicole Immorlica at Microsoft Research and John Horton, who’s an economist at MIT who was visiting us as a visiting researcher, and we were discussing how the structure of different jobs and how those jobs break down into tasks might have an impact on how they might be affected by AI. And then very early on in that conversation, we, sort of, realized that, you know, this was really a … not just, like, a microeconomic question; it’s not just a market design question. The, sort of, the macroeconomic forces were super important. And then immediately, we knew, OK, like, Mert’s top of our list; we need, [LAUGHTER] we need, you know, to get Mert in here and talking to us about it. And so we reached out to him.

HUIZINGA: Mert, how did you come to be involved in this from your perspective?

DEMIRER: As Brendan mentioned, I spent quite a bit of time at Microsoft Research, both as an intern and as a postdoc, and Microsoft Research is a very fun place to be as an economist and a really productive place to be as an economist because it’s very interdisciplinary. It is a lot different from a typical academic department and especially an economics academic department. So my time at Microsoft Research has already led to a bunch of papers and collaborations. And then when Brendan emailed me with the research question, I thought it was a no-brainer. It’s an interesting research question, and it’s part of Microsoft Research. So I said, yeah, let’s do it!

HUIZINGA: Brendan, let’s get into this current project on the economic impact of automation and generative AI. Such a timely and fascinating line of inquiry. Part of your research involves looking at a lot of current occupational data. So from the vantage point of microeconomic theory and your work, tell us what you’re looking at, how you’re looking at it, and what it can tell us about the AI future.

LUCIER: Fantastic. Yeah, so in some sense, the idea of this project and the thing that we’re hoping to do is, sort of, get a handle on the long-term economic impact of generative AI. But it’s fundamentally a super-hard problem, right? For a lot of reasons. And one of those reasons is that some of the effects could be quite far in the future, right. These are things where the effects themselves, but especially the data we might look at to measure them, could be years or decades away. And so, fundamentally, what we’re doing here is a prediction problem. And when we try to look into the future this way, one way we do that is to get as much information as we can about where we are right now, right. And so we were lucky to have a ton of information about the current state of the economy and the labor market and some short-term indicators on how generative AI seems to be affecting things right now, in this moment. And then the idea is to layer some theory models on top of that to try to extrapolate forward, right, in terms of what might be happening, to sort of get a glimpse of this future point. So in terms of the data we’re looking at right now, there’s this absolutely fantastic dataset that comes from the Department of Labor. It’s the O*NET database. This is the Occupational Information Network—publicly available online—and what it does is basically break down all occupations across the United States and give a ton of information about them, including—and, sort of, importantly for us—a very detailed breakdown of the individual tasks that make up the day-to-day of those occupations, right. So, for example, if you’re curious to know what a wind energy engineer does day-to-day, you could just go online and look it up, and it basically gives you the entire breakdown. Which is fantastic. I mean, I love, sort of, browsing it. It’s an interesting thing to do with an afternoon. [LAUGHTER] But from our perspective, the fact that we have these tasks—and it actually gives really detailed information about what they are—lets us do a lot of analysis on things like how AI tools and generative AI might help with different tasks. There’s a lot of analysis that we and a lot of other papers coming out over the last year have done looking at which tasks we think generative AI can have a big influence on and which ones less so in the present moment, right. And there’s been work by, you know, OpenAI and LinkedIn and other groups really leaning into that. We can take that one step further and also look at the structure between tasks, right. So we can see not only what fraction of the time I spend is on things that can be influenced by generative AI but also how those things relate to my actual daily goals. Like, when I look at the tasks I have to do, do I have flexibility in when and where I do them, or are things in a very rigid structure? Are there groups of interrelated tasks that all happen to be really exposed to generative AI? And what does that say about how workers might reorganize their work as they integrate AI tools and how that might change the nature of what it is they’re actually trying to do on a day-to-day basis?

HUIZINGA: Right.

LUCIER: So just to give an example, one of the earliest examples we looked at as we started digging into the data and testing this out was radiology. And so radiology is—you know, these are medical doctors who specialize in using medical imaging technology—and it happens to be an interesting example for this type of work because there are lots of tasks that make up the job and they have a lot of structure to them. And it turns out when you look at those tasks, there’s, interestingly, a big group of tasks that are all prerequisites for an important core part of the job, …

HUIZINGA: Right …

LUCIER: … which is, sort of, recommending a plan of which tests to, sort of, perform, right. So these are things like analyzing medical history and analyzing procedure requests, summarizing information, forming reports. And these are all things that we, sort of, expect that generative AI can be quite effective at, sort of, assisting with, right. And so the fact that these are all, sort of, grouped together and feed into something that’s a core part of the job really is suggestive that there’s an opportunity here to delegate some of those, sort of, prerequisite tasks out to, sort of, AI tools so that the radiologist can then focus on the important part, which is the actual recommendations that they can make.

HUIZINGA: Right.

LUCIER: And so the takeaway here is that it matters, like, how these tasks are related to each other, right. Sort of, the structure of, you know, what it is that I’m doing and when I’m doing them, right. So this situation would perhaps be very different if, as I was doing these tasks where AI is very helpful, I was going back and forth doing consulting with patients or something like this, where in that, sort of, scenario, I might imagine that, yeah, like an AI tool can help me, like, on a task-by-task basis but maybe I’m less likely to try to, like, organize all those together and automate them away.
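One way to picture this point about task structure is the minimal Python sketch below. It is an illustration only, not the researchers' method: it assumes a job can be written as an ordered list of tasks, each labeled as exposed or not exposed to generative AI (the task names loosely paraphrase the radiology example, and the exposure labels are assumptions), and it groups consecutive exposed tasks into runs. A long run suggests a bundle that could be handed off together; runs broken up by patient consultations suggest task-by-task assistance instead.

```python
# Hypothetical sketch: split an ordered task sequence into maximal runs of
# consecutive AI-exposed tasks. Task names and exposure labels are
# illustrative assumptions, not O*NET data or the project's actual model.
from itertools import groupby

AI_EXPOSED = {
    "analyze medical history",
    "analyze procedure requests",
    "summarize information",
    "form report",
}


def exposed_runs(sequence, exposed=AI_EXPOSED):
    """Return lists of consecutive tasks that are all in `exposed`."""
    return [
        list(group)
        for is_exposed, group in groupby(sequence, key=lambda t: t in exposed)
        if is_exposed
    ]


# Exposed prerequisites grouped together before the core task.
grouped = [
    "analyze medical history",
    "analyze procedure requests",
    "summarize information",
    "form report",
    "recommend test plan",
]

# The same tasks, interleaved with (non-exposed) patient consultations.
interleaved = [
    "analyze medical history",
    "consult with patient",
    "analyze procedure requests",
    "consult with patient",
    "summarize information",
    "consult with patient",
    "form report",
    "recommend test plan",
]

print([len(run) for run in exposed_runs(grouped)])      # [4]
print([len(run) for run in exposed_runs(interleaved)])  # [1, 1, 1, 1]
```

The same four exposed tasks appear in both sequences; only their arrangement differs, which is the distinction the run lengths are meant to capture.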

HUIZINGA: Right. Yeah, let me focus a little bit more on this idea of you in the lab with all this data, kind of, parsing out and teasing out the tasks and seeing which ones are targets for AI, which ones are threatened by AI, which ones would be wonderful with AI. Do you have buy-in from these exemplar-type occupations that they say, yes, we would like you to do this to help us? I mean, is there any of that collaboration going on with these kinds of occupations at the task level?

LUCIER: So the answer is not yet. [LAUGHTER] But this is definitely an important part of the workflow. So I would say that, you know, ultimately, the goal here is that as we’re looking for these patterns across individual exemplar occupations, what we’re looking for is relationships between tasks that extrapolate out, right, across lots of different industries. So, you know, it’s one thing to be able to say a lot of very deep things about how AI might influence a particular job or a particular industry. But in some sense, the goal here is to see patterns of tasks that are repeated across lots of different occupations, across lots of different sectors, that say, sort of, these are the types of patterns that are really amenable to AI being integrated well into the workforce, whereas these are scenarios where it’s much more of an augmenting story as opposed to an automating story. But I think one of the things that’s really interesting about generative AI as a technology here, as opposed to other types of automation technology, is that while there are lots of aspects of a person’s job that can be affected by generative AI, there’s this relationship between the types of work that I might use an AI for versus the types of things that are, sort of, the core feature of what I’m doing on a day-to-day basis.

HUIZINGA: Right. Gotcha …

LUCIER: And so, at least in the short term, it actually looks quite helpful to say that, you know, there are certain aspects of my work, like going out and summarizing a bunch of heavy data reports, that I’m very happy to have an AI, sort of, do for me. So then I can carry those results forward into, sort of, the other half of my day.

HUIZINGA: Yeah. And that’s to Mert’s point: look how much time I just saved! Or I got a half hour back! We’ll get to that in a second. But I really now am eager, Mert, to have you explain your side of this. Brendan just gave us a wonderful task-centric view of AI’s impact on specific jobs. I want you to zoom out and talk about the holistic, as you mentioned before, or macroeconomic view in this collaboration. How are you looking at the impact of AI beyond job tasks, and what role does your work play in helping us understand how these advances in AI might affect job markets and the economy writ large?

DEMIRER: One thing Brendan mentioned a few minutes ago is that this is a prediction task. We need to predict what the effect of AI will be, how AI is going to affect the economy, especially in the long run. And this is a prediction problem for which we cannot use machine learning or AI. Otherwise, it would have been a very easy problem to solve.

HUIZINGA: Right … [LAUGHS]

DEMIRER: So what you need instead is a model or framework that will take as inputs, for example, the productivity gains Brendan talked about, or other microfoundations, and then generate predictions for the entire economy. To do that, in my research I develop and use models of industries and firms. These models essentially incorporate a bunch of economic agents. This could be labor; this could be firms; this could be [a] policymaker who is trying to regulate the industry. You write down the incentives of these different agents in the economy, then you write down the model, you solve the model with the available data, and the model gives you predictions. Once you have a model like this, you can ask what the effect of a change in the economic environment would be on, like, wages, on productivity, on industry concentration, let’s say. So this is what I do in my research. I briefly mentioned my research on cloud computing, and I think this is a very good example. When you think about cloud computing, everyone always thinks about how it helps you scale very rapidly, which is true, and which is the actual firm-level effect of cloud computing. But then the question is how that is going to affect the entire industry: whether the industry is going to be more concentrated or less concentrated, whether it’s going to grow faster, which industries are going to grow faster, and so on. So essentially, in my research, I develop models like this to answer these high-level questions. And when it comes to AI, we have very detailed micro-level studies, like the exposure measures Brendan already mentioned, and the micro framework we developed is a task view of AI. What you do is, essentially, take the output of that micro model, feed it into a bigger economy-level model, and develop a higher-level prediction. So, for example, you can apply this task-based model to many different occupations. You can get a number for every occupation: for occupation A, the productivity gain will be 5 percent; for occupation B, it’s going to be 10 percent; and so on. You can aggregate them at the industry level to get some industry-level numbers, you feed those numbers into a general equilibrium model, you solve the model, and then you answer questions like, what will be the effect of AI on wages on average? Or what will be the effect of AI on total output in the economy? So my research is more about answering these bigger industry-level or economy-level questions.
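The aggregation step described here can be sketched in a few lines of Python, under loudly stated assumptions: each industry's gain is taken to be an employment-weighted average of its occupations' gains, and the industries, employment shares, and gains are all invented for illustration (the 5 and 10 percent figures echo the example above). This is not the collaboration's actual model, and the general equilibrium step that would consume these numbers is not shown.

```python
# Hypothetical illustration: aggregate occupation-level productivity gains
# (outputs of a task-based micro model) into industry-level numbers that an
# economy-wide model could take as inputs. All figures below are invented.
from collections import defaultdict

# (industry, occupation, employment share within industry, productivity gain)
occupation_gains = [
    ("healthcare", "occupation A", 0.6, 0.05),
    ("healthcare", "occupation B", 0.4, 0.10),
    ("finance", "occupation A", 0.3, 0.05),
    ("finance", "occupation B", 0.7, 0.10),
]


def industry_gains(rows):
    """Employment-weighted average productivity gain per industry."""
    weighted = defaultdict(float)
    weights = defaultdict(float)
    for industry, _occupation, share, gain in rows:
        weighted[industry] += share * gain
        weights[industry] += share
    return {ind: weighted[ind] / weights[ind] for ind in weighted}


if __name__ == "__main__":
    for industry, gain in industry_gains(occupation_gains).items():
        print(f"{industry}: {gain:.1%}")
```

With these made-up inputs, healthcare comes out at 7.0 percent and finance at 8.5 percent. Weighting by employment share is only one possible choice; an economy-wide model might instead weight occupations by their share of the wage bill or of costs.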

HUIZINGA: Well, Brendan, one of our biggest fears about AI is that it’s going to “steal our jobs.” I just made air quotes on a podcast again. But this isn’t our first disruptive technology rodeo, to use a phrase. That said, it’s the first of its kind. What sets AI apart from disruptive technologies of the past, and how can looking at the history of technological revolutions help us manage our expectations, both good and bad?

LUCIER: Fantastic. Such an important question. Yeah, like there’s been, you know, just so much discussion and “negativity versus optimism” debates in the world in the public sphere …

HUIZINGA: Hope versus hype …

LUCIER: … and in the academic sphere … yeah, exactly. Hope versus hype. But as you say, yeah, it’s not our first rodeo. And we have a lot of historical examples of these disruptive, so-called general-purpose technologies that have swept through the economy and made a lot of changes, things like electricity and the computer and robotics. Going back further, the steam engine and the industrial revolution. You know, these things are revolutions in the sense that they rearrange work, right. They’re not just changing how we do things. They change what it is that we even do, the very nature of the work that’s being done. And going back to this point of automation versus augmentation, what that looks like can vary quite a bit from revolution to revolution, right. Sometimes it looks like fully automating away certain types of work. But in other cases, it’s a matter of augmenting workers who are still doing, in some sense, what they were doing before but with a new technology that substantially helps them, either by taking part of their job and making it redundant so they can focus on something that’s more core or just by letting them do what they were doing before much, much faster.

HUIZINGA: Right.

LUCIER: And either way, you know, this can have a huge impact on the economy and especially, sort of, the labor market. But that impact can be ambiguous, right. So, you know, if I make a huge segment of workers twice as productive, then companies have a choice. They can keep all the workers and have twice the output, or they can get the same output with half as many workers, or something in between. And which one of those things happens depends not so much on the technology as on the broader economic forces, right: the supply and demand and how things come together in equilibrium. That is why this macroeconomic viewpoint is so important for actually making predictions about how companies might respond to the changes that come with the new technology. Now, where GenAI is, sort of, interesting as an example is in the types of work it impacts, right. Generative AI is particularly notable in that it directly impacts high-skill, knowledge- and information-based work, right[1]. And it cuts across so many different industries. Think of all the different types of occupations that involve, you know, summarizing data or writing a report or writing emails. There are so many different types of occupations where this might not be the majority of what they do, but it’s a substantial fraction of what they do. And so in many cases, this technology—as we were saying before—has the potential to automate, or at least heavily assist with, parts of the job while, in some cases, leaving another part of the job, which is a core function, to the worker. And so these are the places where we really expect this human-AI collaboration view to be especially impactful and important, right: where lots of different workers in lots of different occupations are going to be making choices about which parts of their work they might delegate to, sort of, AI agents and which parts they really want to keep their own hands on.

HUIZINGA: Right, right. Brendan, talk a little more in detail about this idea of low-skill work and high-skill work, maybe physical labor and robotics kind of replacements versus knowledge worker and mental work replacements, and maybe shade it a little bit with the idea of inequalities and how that’s going to play out. I mean, I imagine this project, this collaboration, is looking at some of those issues, as well?

LUCIER: Absolutely. So, yeah, when we think about what types of work get affected by some new technology, and especially automation technology, a lot of the time in the past, the sorts of work that have been automated are what we’d call low-skill or, at least, more physical types of labor being replaced by, you know, robotics. We think about the automation of manufacturing and how that displaced large groups of workers who were working manually in factories. And so when a new technology comes through and really disrupts work, there’s this transition period where, even if at the end of the day the economy eventually reaches a new equilibrium that is generally more productive or better overall, there’s a big question of who’s winning and who’s losing, both in the long term but especially in that short term, …

HUIZINGA: Yeah!

LUCIER: … sort of intermediate, you know, potentially very chaotic and disruptive period. And so very often in these stories of automation historically, it’s largely marginalized low-skill workers who are really getting affected by that transition period. AI—and generative AI in particular—is, sort of, interesting in the potential to be really hitting different types of workers, right.

HUIZINGA: Right.

LUCIER: Really this sort of, you know, middle sort of white-collar, information-work class. And so, you know, really a big part of this project and trying to, sort of, get this glimpse into the future is getting, sort of, this—again, as you said—line of sight on which industries we expect to be, sort of, most impacted by this, and is it as we might expect, sort of, those types of work that are most directly affected, or are there second- or third-order effects that might do things that are unanticipated?

HUIZINGA: Right, and we’ll talk about that in a second. So, Mert, along those same lines, it’s interesting to note how new technologies often start out simply by imitating old technologies. Early movies were stage plays on film. Email was a regular letter sent over a computer. [LAUGHS] Video killed the radio star … But eventually, we realized that these new technologies can do more than we thought. And so when we talked before, you said something really interesting. You said, “If a technology only saves time, it’s boring technology.” What do you mean by that? And if you mean what I think you mean, how does the evolution—not revolution but evolution—of previous technologies serve as a lens for the affordances that we may yet get from AI?

DEMIRER: Let me say first, technology that saves time is still very useful technology! [LAUGHTER] Who wouldn’t want a technology that will save time?

HUIZINGA: Sure …

DEMIRER: But it is less interesting for us, like, to study and maybe it’s, like, less interesting in terms of, like, the broader implications. And so why is that? Because if a technology saves time, then, OK, so I am going to have maybe more time, and the question is, like, how I’m going to spend that time. Maybe I’m going to have more leisure or maybe I’m going to have to produce more. It’s, like, relatively straightforward to analyze and quantify. So however, like, the really impactful technologies could allow us to accomplish new tasks that were previously impossible, and they should open up new opportunities for creativity. And I think here, this knowledge-worker impact of AI is particularly important because I think as a technology, the more it affects knowledge workers, the more likely it’s going to allow us to achieve new things; it’s going to allow us to create more things. So I think in that sense, I think generative AI has a huge potential in terms of making us accomplish new things. And to give you an example from my personal experience, so I’m a knowledge worker, so I do research, I teach, and generative AI is going to help my work, as well. So it’s already affecting … so it’s already saving me time. It’s making me more productive. So suppose that generative AI just, like, makes me 50 percent more productive, let’s say, like five years from now, and that’s it. That’s the only effect. So what’s going to happen to my job? Either I’m going to maybe, like, take more time off or maybe I’m going to write more of the same kind of papers I am writing in economics. But … so imagine, like, generative AI is helping me write a different kind of paper. How is that possible? So I have a PhD in econ, and if I try really hard, maybe I can do another PhD. But that’s it. Like, I can specialize in only one or, like, two topics. But imagine generative AI as an, like, agent or collaborator having a PhD in, like, hundreds of different fields, and then you can, like, collaborate and, like, communicate and get information through generative AI on really different fields. That will allow me to do different kinds of research, like more interdisciplinary kinds of research. In that sense, I think the really … the most important part of generative AI is going to be this … what it will allow us to achieve new things, like what creative new things we are going to do. And I can give you a simple example. Like, we were talking about previous technologies. Let’s think of the internet. So what was the first application of the internet? It’s sending an email. It saves you time. Instead of writing things on a paper and, like, mailing it, you just, like, send it immediately, and it’s a clear time-saving technology. But what are the major implications of the internet, like, today? It’s not email. It is like e-commerce, or it is like social media. It allows us to access an infinite number of products beyond a few stores in our neighborhood, or it allows us to communicate or connect with people all around the world …

HUIZINGA: Yeah …

DEMIRER: … instead of, again, like limiting ourselves to our, like, social circle. So in that sense, I think we are currently in the “email phase” of AI, …

HUIZINGA: Right …

DEMIRER: … and we are going to … like, I think AI is going to unlock so many other new capabilities and opportunities, and that is the most exciting part.

HUIZINGA: Clearly, one of the drivers behind the whole AICE research initiative is the question of what could possibly go wrong if we got everything right, and I want to anchor this question on the common premise that if we get AI right, it will free us from drudgery—we’ve kind of alluded to that—and free us to spend our time on more meaningful or “human”—more air quotes there—pursuits. So, Brendan, have you and your team given any thought to this idea of unintended consequences and what such a society might actually look like? What will we do when AI purportedly gives us back our time? And will we really apply ourselves to making the world better? Or will we end up like those floating people in the movie WALL-E?

LUCIER: [LAUGHS] I love that framing, and I love that movie, so this is great. Yeah. And I think this is one of these questions about, sort of, the possible futures that I think is super important to be tackling. In the past, people, sort of, haven’t stopped working; they’ve shifted to doing different types of work. And as you’re saying, there’s this ideal future in which what’s happening is that people are shifting to doing more meaningful work, right, and the AI is, sort of, taking over parts of the, sort of, the drudgery, you know. These, sort of, annoying tasks that, sort of, I need to do as just, sort of, side effects of my job. I would say that where the economic theory comes in and predicts something that’s slightly different is that I would say that the economic theory predicts that people will do more valuable work in the sense that people will tend to be shifted in equilibrium towards doing things that complement what it is that the AI can do or doing things that the AI systems can’t do as well. And, you know, this is really important in the sense that, like, we’re building these partnerships with these AI systems, right. There’s this human-AI collaboration where human people are doing the things that they’re best at and the AI systems are doing the things that they’re best at. And while we’d love to imagine that, like, that more valuable work will ultimately be more meaningful work in that it’s, sort of, fundamentally more human work, that doesn’t necessarily have to be the case. You know, we can imagine scenarios in which I personally enjoy … there are certain, you know, types of routine work that I happen to personally enjoy and find meaningful. But even in that world, if we get this right and, sort of, the, you know, the economy comes to equilibrium at a place where people are being more productive, they’re doing more valuable work, and we can effectively distribute those gains to everybody, there’s a world in which, you know, this has the potential to be the rising tide that lifts all boats.

HUIZINGA: Right.

LUCIER: And so that what we end up with is, you know, we get this extra time, but through this different sort of indirect path of the increased standard of living that comes with an improved economy, right. And so that’s the sort of situation where that source of free time I think really has the potential to be somewhere where we can use it for meaningful pursuits, right. But there are a lot of steps to take to, sort of, get there, and this is why it’s, I think, super important to get this line of sight on what could possibly be happening in terms of these disruptions.

HUIZINGA: Right. Brendan, something you said reminded me that I’ve been watching a show called Dark Matter, and the premise is that there’s many possible lives we could live, all determined by the choices we make. And you two are looking at possible futures in labor markets and the economy and trying to make models for them. So how do existing hypotheses inform where AI is currently headed, and how might your research help predict them into a more optimal direction?

LUCIER: Yeah, that’s a really big question. Again, you know, as we’ve said a few times already, there’s this goal here of getting this heads-up on which segments of the economy can be most impacted. And we can envision these better futures as the economy stabilizes, and maybe we can even envision pathways towards getting there by trying to address, sort of, the potential effects of inequality and the distribution of those gains across people. But even in a world where we get all those things right, that transition is necessarily going to be disruptive, right.

HUIZINGA: Right.

LUCIER: And so even if we think that things are going to work out well in the long term, in the short term, there’s certainly going to be things that we would hope to invest in to, sort of, improve for everyone. And so even in a world where we believe, sort of, the technology is out there and we really think that people are going to be using it in the ways that make most sense to them, as we get hints about where these impacts can be largest, I think that an important value there is that it lets us anticipate opportunities for responsible stewardship, right. So if we can see where there’s going to be impact, I think we can get a hint as to where we should be focusing our efforts, and that might look like getting ahead of demand for certain use cases or anticipating extra need for, you know, responsible AI guardrails, or even just, like, understanding, you know, [how] labor market impacts can help us inform policy interventions, right. And I think that this is one of the things that gets me really excited about doing this work at Microsoft specifically. Because of how much Microsoft has been investing in responsible AI, and, sort of, the fundamentals that underlie those guardrails and those possible actions means that we, sort of, in this company, we have the ability to actually act on those opportunities, right. And so I think it’s important to really, sort of, try to shine as much light as possible on where we think those will be most effective.

HUIZINGA: Yeah. Mert, I usually ask my guests on Collaborators where their research is on the spectrum from “lab to life,” but this isn’t that kind of research. We might think of it more in terms of “lab for life” research, where your findings could actually help shape the direction of the product research in this field. So that said, where are you on the timeline of this project, and do you have any learnings yet that you could share with us?

DEMIRER: I think the first thing I learned about this project is it is difficult to study AI! [LAUGHTER] So we are still in, like, the early stages of the project. So we developed this framework we talked about earlier in the podcast, and now what we are doing is we are applying that framework to a few particular occupations. And the challenge we had is these occupations, when you just describe them, it’s like very simple, but when you go to this, like, task view, it’s actually very complex, the number of tasks. Sometimes we see in the data, like, 20, 30 tasks they do, and the relationship between those tasks. So it turned out to be more difficult than I expected. So what we are currently doing is we are applying the framework to a few specific tasks which help us understand how the model works and whether the model needs any adjustment. And then the goal is once we understand the model on a few specific cases, we’ll scale that up. And then we are going to develop these big predictions on the economy. So we are currently not there yet, but we are hoping to get there pretty soon.

HUIZINGA: And just to, kind of, follow up on that, what would you say your successful outcome of this research would be? What’s your artifact that you would deliver from this project as collaboration?

DEMIRER: So ultimately, our goal is to develop predictions that will inform the trajectory the AI is taking, that’s going to inform, like, the policy. That’s our goal, and if we generate that output, and especially if it informs policy of how firms or different agents of the economy adopt AI, I think that will be the ideal output for this project.

HUIZINGA: Yeah. And what you’ve just differentiated is that there are different end users of your research. Some of them might be governmental. Some of them might be corporate. Some of them might even be individuals or even just layers of management that try to understand how this is working and how they’re working. So wow. Well, I usually close each episode with some future casting. But that basically is what we’ve been talking about this whole episode. So I want to end instead by asking each of you to give some advice to researchers who might be just getting started in AI research, whether that’s the fields that develop the technology itself or the fields that help define its uses and the guardrails we put around it. So what is important for us to pay attention to right now, and what words of wisdom could you offer to aspiring researchers? I’ll give you each the last word. Mert, why don’t you go first?

DEMIRER: My first advice would be to use AI yourself as much as possible. Because the great thing about AI is that everyone can access this technology even though it’s at a very early stage, so there’s a huge opportunity. So I think if you want to study AI, like, you should use it as much as possible. That personally allows me to understand the technology better and also develop research questions. And the second advice would be to stay up to date with what’s happening. This is a very rapidly evolving technology. There is a new product, new use case, new model every day, and it’s hard to keep up. And it is actually important to distinguish between questions that won’t be relevant two months from now versus questions that are going to be important five years from now. And that requires understanding how the technology is evolving. So I personally find it useful to stay up to date with what’s going on.

HUIZINGA: Brendan, what would you add to that?

LUCIER: So definitely fully agree with all of that. And so I guess I would just add something extra for people who are more on the design side, which is that when we build, you know, these systems, these AI tools and guardrails, we oftentimes will have some anticipated, you know, usage or ideas in our head of how this is going to land, and then there’ll always be this moment where it, sort of, meets the real users, you know, the humans who are going to use those things in, you know, possibly unanticipated ways. And, you know, this can be oftentimes a very frustrating moment, but this can be a feature, not a bug, very often, right. So the combined insight and effort of all the users of a product can be this, like, amazing strong force. And so, you know, this is something where we can try to fight against it or we can really try to, sort of, harness it and work with it, and this is why it’s really critical when we’re building especially, sort of, user-facing AI systems, that we design them from the ground up to be, sort of, collaborating, you know, with our users and guiding towards, sort of, good outcomes in the long term, you know, as people jointly, sort of, decide how best to use these products and guide towards, sort of, good usage patterns.

[MUSIC]

HUIZINGA: Hmmm. Well, Brendan and Mert, as I said before, this is timely and important research. It’s a wonderful contribution to the AICE research initiative, and I’m thrilled that you came on the podcast today to talk about it. Thanks for joining us.

LUCIER: Thank you so much.

DEMIRER: Thank you so much.

[MUSIC FADES]

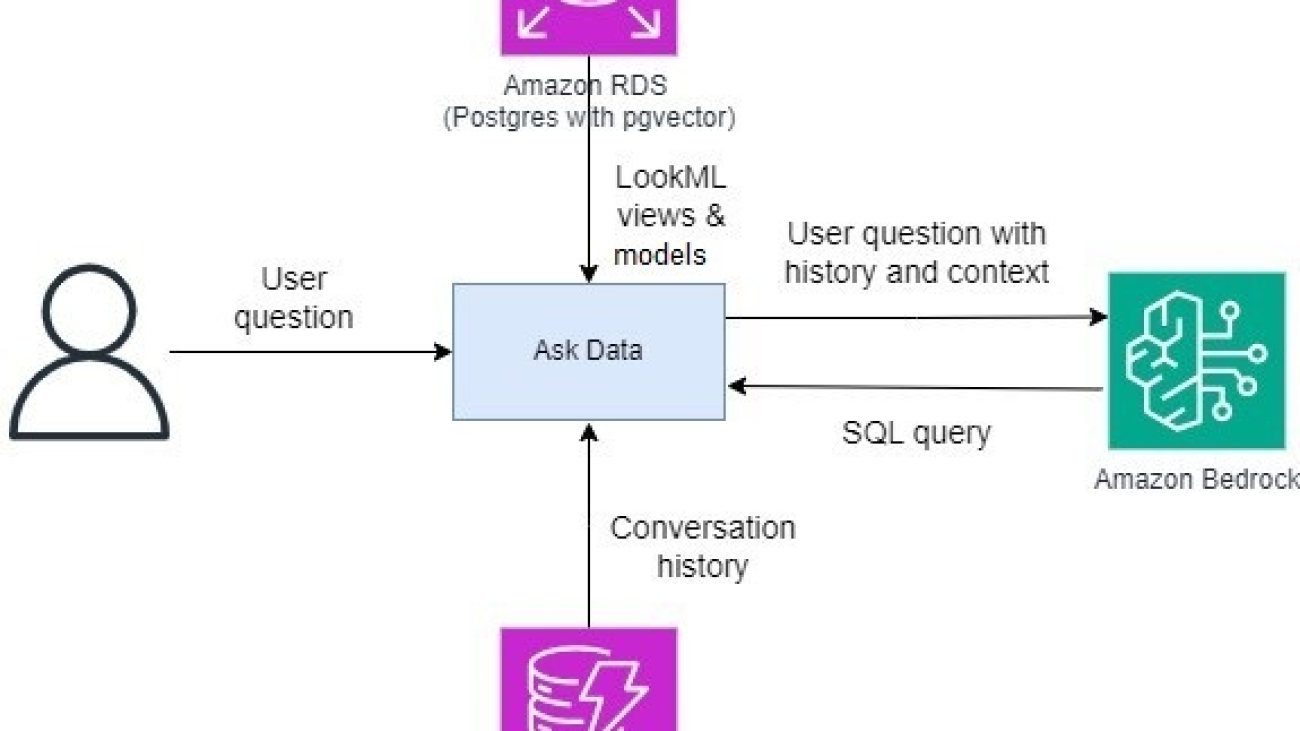

Apurva Gawad is a Senior Data Engineer at Twilio specializing in building scalable systems for data ingestion and empowering business teams to derive valuable insights from data. She has a keen interest in AI exploration, blending technical expertise with a passion for innovation. Outside of work, she enjoys traveling to new places, always seeking fresh experiences and perspectives.

Aishwarya Gupta is a Senior Data Engineer at Twilio focused on building data systems to empower business teams to derive insights. She enjoys traveling and exploring new places, foods, and cultures.

Oliver Cody is a Senior Data Engineering Manager at Twilio with over 28 years of professional experience, leading multidisciplinary teams across EMEA, NAMER, and India. His experience spans all things data across various domains and sectors. He has focused on developing innovative data solutions, significantly optimizing performance and reducing costs.

Amit Arora is an AI and ML specialist architect at Amazon Web Services, helping enterprise customers use cloud-based machine learning services to rapidly scale their innovations. He is also an adjunct lecturer in the MS data science and analytics program at Georgetown University in Washington D.C.

Johnny Chivers is a Senior Solutions Architect working within the Strategic Accounts team at AWS. With over 10 years of experience helping customers adopt new technologies, he guides them through architecting end-to-end solutions spanning infrastructure, big data, and AI.

Wei Teh is a Machine Learning Solutions Architect at AWS. He is passionate about helping customers achieve their business objectives using cutting-edge machine learning solutions. Outside of work, he enjoys outdoor activities like camping, fishing, and hiking with his family.

Pallavi Nargund is a Principal Solutions Architect at AWS. In her role as a cloud technology enabler, she works with customers to understand their goals and challenges, and give prescriptive guidance to achieve their objective with AWS offerings. She is passionate about women in technology and is a core member of Women in AI/ML at Amazon. She speaks at internal and external conferences such as AWS re:Invent, AWS Summits, and webinars. Outside of work she enjoys volunteering, gardening, cycling and hiking.

Qingwei Li is a Machine Learning Specialist at Amazon Web Services. He received his Ph.D. in Operations Research after he broke his advisor’s research grant account and failed to deliver the Nobel Prize he promised. Currently he helps customers in the financial service and insurance industry build machine learning solutions on AWS. In his spare time, he likes reading and teaching.

Mani Khanuja is a Tech Lead – Generative AI Specialists, author of the book Applied Machine Learning and High Performance Computing on AWS, and a member of the Board of Directors of the Women in Manufacturing Education Foundation. She leads machine learning projects in various domains such as computer vision, natural language processing, and generative AI. She speaks at internal and external conferences such as AWS re:Invent, Women in Manufacturing West, YouTube webinars, and GHC 23. In her free time, she likes to go for long runs along the beach.

Jayson Sizer McIntosh is a Senior Solutions Architect at Amazon Web Services (AWS) in the World Wide Public Sector (WWPS) based in Ottawa (Canada) where he primarily works with public sector customers as an IT generalist with a focus on Dev(Sec)Ops/CICD. Bringing his experience implementing cloud solutions in high compliance environments, he is passionate about helping customers successfully deliver modern cloud-based services to their users.

Nicolas Bernier is an AI/ML Solutions Architect, part of the Canadian Public Sector team at AWS. He is currently conducting research in Federated Learning and holds five AWS certifications, including the ML Specialty Certification. Nicolas is passionate about helping customers deepen their knowledge of AWS by working with them to translate their business challenges into technical solutions.

Pooja Ayre is a seasoned IT professional with over 9 years of experience in product development, having worn multiple hats throughout her career. For the past two years, she has been with AWS as a Solutions Architect, specializing in AI/ML. Pooja is passionate about technology and dedicated to finding innovative solutions that help customers overcome their roadblocks and achieve their business goals through the strategic use of technology. Her deep expertise and commitment to excellence make her a trusted advisor in the IT industry.
