Using deep learning to solve fundamental problems in computational quantum chemistry and explore how matter interacts with lightRead More

FermiNet: Quantum physics and chemistry from first principles

Using deep learning to solve fundamental problems in computational quantum chemistry and explore how matter interacts with lightRead More

FermiNet: Quantum physics and chemistry from first principles

Using deep learning to solve fundamental problems in computational quantum chemistry and explore how matter interacts with lightRead More

FermiNet: Quantum physics and chemistry from first principles

Using deep learning to solve fundamental problems in computational quantum chemistry and explore how matter interacts with lightRead More

Fine tune a generative AI application for Amazon Bedrock using Amazon SageMaker Pipeline decorators

Building a deployment pipeline for generative artificial intelligence (AI) applications at scale is a formidable challenge because of the complexities and unique requirements of these systems. Generative AI models are constantly evolving, with new versions and updates released frequently. This makes managing and deploying these updates across a large-scale deployment pipeline while providing consistency and minimizing downtime a significant undertaking. Generative AI applications require continuous ingestion, preprocessing, and formatting of vast amounts of data from various sources. Constructing robust data pipelines that can handle this workload reliably and efficiently at scale is a considerable challenge. Monitoring the performance, bias, and ethical implications of generative AI models in production environments is a crucial task.

Achieving this at scale necessitates significant investments in resources, expertise, and cross-functional collaboration between multiple personas such as data scientists or machine learning (ML) developers who focus on developing ML models and machine learning operations (MLOps) engineers who focus on the unique aspects of AI/ML projects and help improve delivery time, reduce defects, and make data science more productive. In this post, we show you how to convert Python code that fine-tunes a generative AI model in Amazon Bedrock from local files to a reusable workflow using Amazon SageMaker Pipelines decorators. You can use Amazon SageMaker Model Building Pipelines to collaborate between multiple AI/ML teams.

SageMaker Pipelines

You can use SageMaker Pipelines to define and orchestrate the various steps involved in the ML lifecycle, such as data preprocessing, model training, evaluation, and deployment. This streamlines the process and provides consistency across different stages of the pipeline. SageMaker Pipelines can handle model versioning and lineage tracking. It automatically keeps track of model artifacts, hyperparameters, and metadata, helping you to reproduce and audit model versions.

The SageMaker Pipelines decorator feature helps convert local ML code written as a Python program into one or more pipeline steps. Because Amazon Bedrock can be accessed as an API, developers who don’t know Amazon SageMaker can implement an Amazon Bedrock application or fine-tune Amazon Bedrock by writing a regular Python program.

You can write your ML function as you would for any ML project. After being tested locally or as a training job, a data scientist or practitioner who is an expert on SageMaker can convert the function to a SageMaker pipeline step by adding a @step decorator.

Solution overview

SageMaker Model Building Pipelines is a tool for building ML pipelines that takes advantage of direct SageMaker integration. Because of this integration, you can create a pipeline for orchestration using a tool that handles much of the step creation and management for you.

As you move from pilot and test phases to deploying generative AI models at scale, you will need to apply DevOps practices to ML workloads. SageMaker Pipelines is integrated with SageMaker, so you don’t need to interact with any other AWS services. You also don’t need to manage any resources because SageMaker Pipelines is a fully managed service, which means that it creates and manages resources for you. Amazon SageMaker Studio offers an environment to manage the end-to-end SageMaker Pipelines experience. The solution in this post shows how you can take Python code that was written to preprocess, fine-tune, and test a large language model (LLM) using Amazon Bedrock APIs and convert it into a SageMaker pipeline to improve ML operational efficiency.

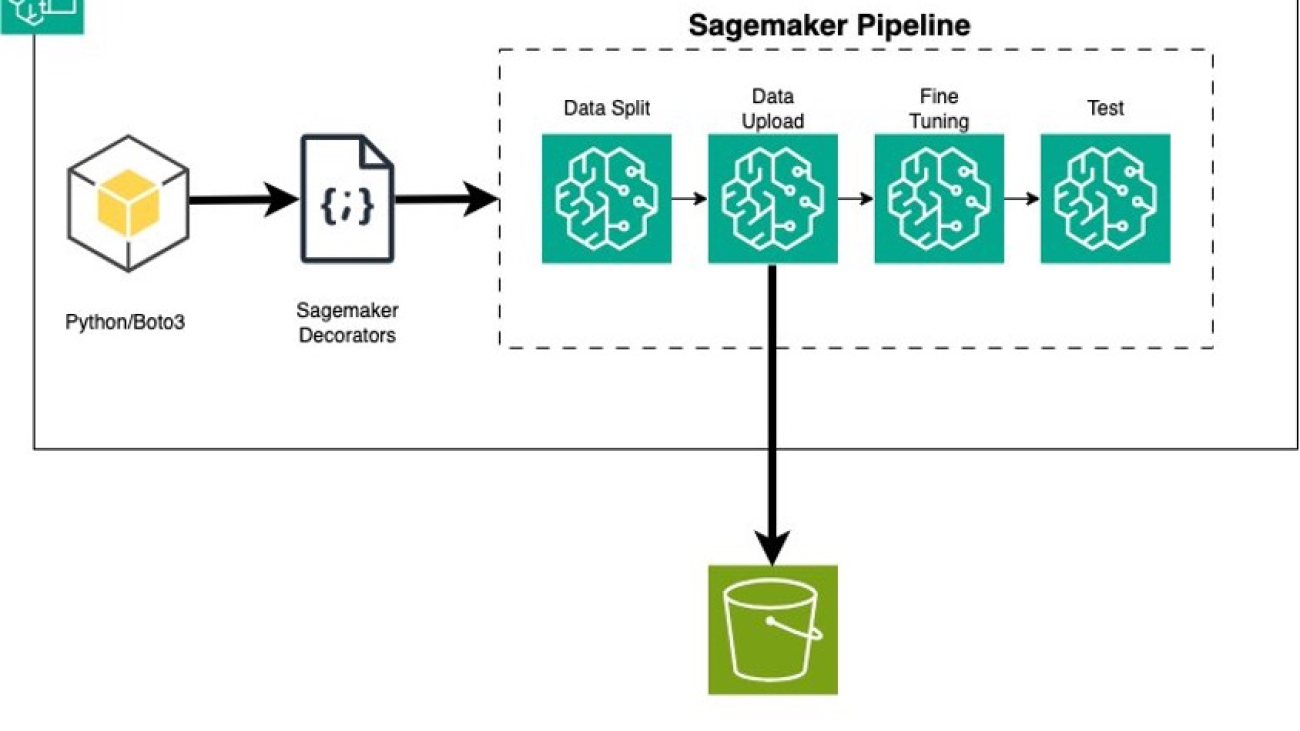

The solution has three main steps:

- Write Python code to preprocess, train, and test an LLM in Amazon Bedrock.

- Add

@stepdecorated functions to convert the Python code to a SageMaker pipeline. - Create and run the SageMaker pipeline.

The following diagram illustrates the solution workflow.

Prerequisites

If you just want to view the notebook code, you can view the notebook on GitHub.

If you’re new to AWS, you first need to create and set up an AWS account. Then you will set up SageMaker Studio in your AWS account. Create a JupyterLab space within SageMaker Studio to run the JupyterLab application.

When you’re in the SageMaker Studio JupyterLab space, complete the following steps:

- On the File menu, choose New and Terminal to open a new terminal.

- In the terminal, enter the following code:

- You will see the folder caller

amazon-sagemaker-examplesin the SageMaker Studio File Explorer pane. - Open the folder

amazon-sagemaker-examples/sagemaker-pipelines/step-decorator/bedrock-examples. - Open the notebook

fine_tune_bedrock_step_decorator.ipynb.

This notebook contains all the code for this post, and you can run it from beginning to end.

Explanation of the notebook code

The notebook uses the default Amazon Simple Storage Service (Amazon S3) bucket for the user. The default S3 bucket follows the naming pattern s3://sagemaker-{Region}-{your-account-id}. If it doesn’t already exist, it will be automatically created.

It uses the SageMaker Studio default AWS Identity and Access Management (IAM) role for the user. If your SageMaker Studio user role doesn’t have administrator access, you need to add the necessary permissions to the role.

For more information, refer to the following:

- CreateTrainingJob API: Execution Role Permissions

- Identity-based policy examples for Amazon Bedrock

- Create a service role for model customization

- IAM Access Management

It creates a SageMaker session and gets the default S3 bucket and IAM role:

Use Python to preprocess, train, and test an LLM in Amazon Bedrock

To begin, we need to download data and prepare an LLM in Amazon Bedrock. We use Python to do this.

Load data

We use the CNN/DailyMail dataset from Hugging Face to fine-tune the model. The CNN/DailyMail dataset is an English-language dataset containing over 300,000 unique news articles as written by journalists at CNN and the Daily Mail. The raw dataset includes the articles and their summaries for training, validation, and test. Before we can use the dataset, it must be formatted to include the prompt. See the following code:

Split data

Split the dataset into training, validation, and testing. For this post, we restrict the size of each row to 3,000 words and select 100 rows for training, 10 for validation, and 5 for testing. You can follow the notebook in GitHub for more details.

Upload data to Amazon S3

Next, we convert the data to JSONL format and upload the training, validation, and test files to Amazon S3:

Train the model

Now that the training data is uploaded in Amazon S3, it’s time to fine-tune an Amazon Bedrock model using the CNN/DailyMail dataset. We fine-tune the Amazon Titan Text Lite model provided by Amazon Bedrock for a summarization use case. We define the hyperparameters for fine-tuning and launch the training job:

Create Provisioned Throughput

Throughput refers to the number and rate of inputs and outputs that a model processes and returns. You can purchase Provisioned Throughput to provision dedicated resources instead of on-demand throughput, which could have performance fluctuations. For customized models, you must purchase Provisioned Throughput to be able to use it. See Provisioned Throughput for Amazon Bedrock for more information.

Test the model

Now it’s time to invoke and test the model. We use the Amazon Bedrock runtime prompt from the test dataset along with the ID of the Provisioned Throughput that was set up in the previous step and inference parameters such as maxTokenCount, stopSequence, temperature, and top:

Decorate functions with @step that converts Python functions into a SageMaker pipeline steps

The @step decorator is a feature that converts your local ML code into one or more pipeline steps. You can write your ML function as you would for any ML project and then create a pipeline by converting Python functions into pipeline steps using the @step decorator, creating dependencies between those functions to create a pipeline graph or directed acyclic graph (DAG), and passing the leaf nodes of that graph as a list of steps to the pipeline. To create a step using the @step decorator, annotate the function with @step. When this function is invoked, it receives the DelayedReturn output of the previous pipeline step as input. An instance holds the information about all the previous steps defined in the function that form the SageMaker pipeline DAG.

In the notebook, we already added the @step decorator at the beginning of each function definition in the cell where the function was defined, as shown in the following code. The function’s code will come from the fine-tuning Python program that we’re trying to convert here into a SageMaker pipeline.

Create and run the SageMaker pipeline

To bring it all together, we connect the defined pipeline @step functions into a multi-step pipeline. Then we submit and run the pipeline:

After the pipeline has run, you can list the steps of the pipeline to retrieve the entire dataset of results:

You can track the lineage of a SageMaker ML pipeline in SageMaker Studio. Lineage tracking in SageMaker Studio is centered around a DAG. The DAG represents the steps in a pipeline. From the DAG, you can track the lineage from any step to any other step. The following diagram displays the steps of the Amazon Bedrock fine-tuning pipeline. For more information, refer to View a Pipeline Execution.

By choosing a step on the Select step dropdown menu, you can focus on a specific part of the graph. You can view detailed logs of each step of the pipeline in Amazon CloudWatch Logs.

Clean up

To clean up and avoid incurring charges, follow the detailed cleanup instructions in the GitHub repo to delete the following:

- The Amazon Bedrock Provisioned Throughput

- The customer model

- The Sagemaker pipeline

- The Amazon S3 object storing the fine-tuned dataset

Conclusion

MLOps focuses on streamlining, automating, and monitoring ML models throughout their lifecycle. Building a robust MLOps pipeline demands cross-functional collaboration. Data scientists, ML engineers, IT staff, and DevOps teams must work together to operationalize models from research to deployment and maintenance. SageMaker Pipelines allows you to create and manage ML workflows while offering storage and reuse capabilities for workflow steps.

In this post, we walked you through an example that uses SageMaker step decorators to convert a Python program for creating a custom Amazon Bedrock model into a SageMaker pipeline. With SageMaker Pipelines, you get the benefits of an automated workflow that can be configured to run on a schedule based on the requirements for retraining the model. You can also use SageMaker Pipelines to add useful features such as lineage tracking and the ability to manage and visualize your entire workflow from within the SageMaker Studio environment.

AWS provides managed ML solutions such as Amazon Bedrock and SageMaker to help you deploy and serve existing off-the-shelf foundation models or create and run your own custom models.

See the following resources for more information about the topics discussed in this post:

- JumpStart Foundation Models

- SageMaker Pipelines Overview

- Create and Manage SageMaker Pipelines

- Using generative AI on AWS for diverse content types

About the Authors

Neel Sendas is a Principal Technical Account Manager at Amazon Web Services. Neel works with enterprise customers to design, deploy, and scale cloud applications to achieve their business goals. He has worked on various ML use cases, ranging from anomaly detection to predictive product quality for manufacturing and logistics optimization. When he isn’t helping customers, he dabbles in golf and salsa dancing.

Neel Sendas is a Principal Technical Account Manager at Amazon Web Services. Neel works with enterprise customers to design, deploy, and scale cloud applications to achieve their business goals. He has worked on various ML use cases, ranging from anomaly detection to predictive product quality for manufacturing and logistics optimization. When he isn’t helping customers, he dabbles in golf and salsa dancing.

Ashish Rawat is a Senior AI/ML Specialist Solutions Architect at Amazon Web Services, based in Atlanta, Georgia. Ashish has extensive experience in Enterprise IT architecture and software development including AI/ML and generative AI. He is instrumental in guiding customers to solve complex business challenges and create competitive advantage using AWS AI/ML services.

Ashish Rawat is a Senior AI/ML Specialist Solutions Architect at Amazon Web Services, based in Atlanta, Georgia. Ashish has extensive experience in Enterprise IT architecture and software development including AI/ML and generative AI. He is instrumental in guiding customers to solve complex business challenges and create competitive advantage using AWS AI/ML services.

Straight Out of Gamescom and Into Xbox PC Games, GeForce NOW Newly Supports Automatic Xbox Sign-In

Straight out of Gamescom, NVIDIA introduced GeForce NOW support for Xbox automatic sign-in, as well as Black Myth: Wukong from Game Science and a demo for the PC launch of FINAL FANTASY XVI from Square Enix — all available in the cloud today.

There are more triple-A games coming to the cloud this GFN Thursday: Civilization VI, Civilization V and Civilization: Beyond Earth — some of the first games from publisher 2K — are available today for members to stream with GeForce quality.

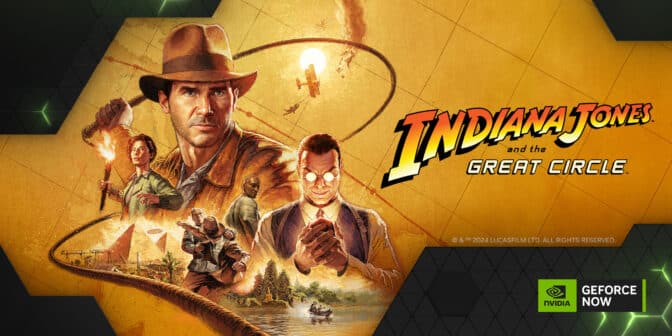

And members can look forward to playing the highly anticipated Indiana Jones and the Great Circle from Bethesda when it joins the cloud later this year.

Plus, GeForce NOW has added a data center in Warsaw, Poland, expanding low-latency, high-performance cloud gaming to members in the region.

It’s an action-packed GFN Thursday, with 25 new titles joining the cloud this week.

Instant Play, Every Day

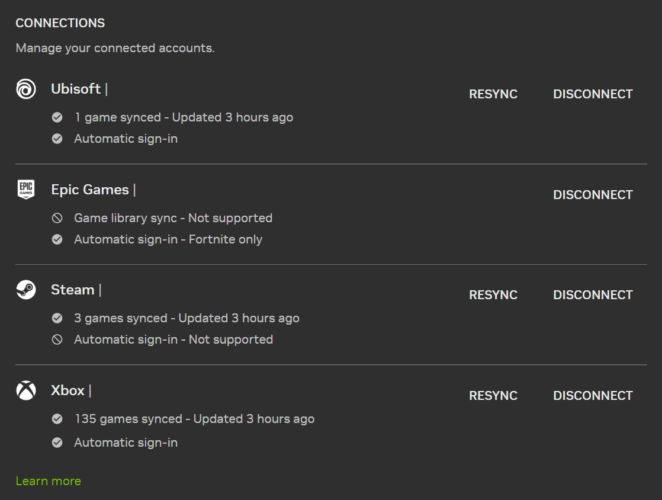

GeForce NOW is streamlining gaming convenience with Xbox account integration. Starting today, members can link their Xbox profile directly to the cloud service. After initial setup, members will be logged in automatically across all devices for future cloud gaming sessions, enabling them to dive straight into their favorite PC games.

The new feature builds on existing support for Epic Games and Ubisoft automatic sign-in — and complements Xbox game sync, which adds supported PC Game Pass and Microsoft Store titles to members’ cloud libraries. Gamers can enjoy a cohesive experience accessing over 140 PC Game Pass titles across devices without the need for repeated logins.

Go Bananas

Black Myth: Wukong, the highly anticipated action role-playing game (RPG) based on Chinese mythology, is now available to stream from the cloud.

Embark on the Monkey King’s epic journey in the action RPG inspired by Chinese mythology, wielding magical abilities and battling fierce monsters and gods across the breathtaking landscapes of ancient China.

GeForce NOW Ultimate members can experience the game’s stunning visuals and fluid combat — enhanced by NVIDIA RTX technologies such as full ray tracing and DLSS 3 — at up to 4K resolution and 120 frames per second, bringing the mystical world of Black Myth: Wukong to life.

Fantasy Becomes Reality

The latest mainline numbered entry in Square Enix’s renowned RPG series, FINAL FANTASY XVI will join the cloud when it launches on PC later this month. Members can try a demo of the highly anticipated game today.

Take a journey through the epic, dark-fantasy world of Valisthea, a land dominated by colossal Mothercrystals and divided among six powerful nations teetering on the brink of conflict. Follow the story of Clive Rosfield, a young knight on a quest for vengeance after a tragic betrayal. Dive into the high-octane action with real-time combat for fast-paced, dynamic battles that emphasize strategy and skill.

The demo offers a taste of the game’s stunning visuals, intricate storyline and innovative combat options. With GeForce NOW, gamers can experience the breathtaking world of Valisthea and stream it at up to 4K and 120 frames per second with an Ultimate membership.

Everybody Wants to Rule the World

Becoming history’s greatest leader has never been easier — the Sid Meier’s Civilization franchise from 2K is now available on GeForce NOW.

Since 1991, the award-winning Civilization series of turn-based strategy games has challenged players to build an empire to stand the test of time. Players assume the role of a famous historical leader, making crucial economic, political and military decisions to pursue prosperity and secure a path to victory.

Members can lead, expand and conquer from the cloud in the latest entries from the franchise, including Sid Meier’s Civilization VI, Civilization V, Civilization IV and Civilization: Beyond Earth. Manage a budding nation with support for ultrawide resolutions, and build empires on the go using low-powered devices like Chromebooks, Macs and more.

Adventure Calls, Dr. Jones

Uncover one of history’s greatest mysteries in Indiana Jones and the Great Circle. Members can stream the cinematic action-adventure game from the award-winning producers Bethesda Softworks, Lucasfilm and MachineGames at GeForce NOW Ultimate quality from the cloud when the title launches later this year.

In 1937, sinister forces are scouring the globe for the secret to an ancient power connected to the Great Circle, and only Indiana Jones can stop them. Become the legendary archaeologist and venture from the hallowed halls of the Vatican and the sunken temples of Sukhothai to the pyramids of Egypt and snowy Himalayan peaks.

Ultimate members can stream the game at up to 4K resolution and 120 fps, even on low-powered devices — as well as experience the adventure with support for full ray tracing, accelerated and enhanced by NVIDIA DLSS 3.5 with Ray Reconstruction.

Let’s Play Today

In the newest season of Skull and Bones, gear up to face imminent dangers on scorched seas — from the formidable Li Tian Ning and Commander Zhang, to a ferocious dragon that descends from the heavens. Join the battle in season 3 to earn exclusive new rewards through time-limited events such as Mooncake Regatta and Requiem of the Lost. Discover new quality-of-life improvements including a new third-person camera while at sea, new endgame features and an expanded Black Market.

Members can look for the following games available to stream in the cloud this week:

- Black Myth: Wukong (New release on Steam and Epic Games Store, Aug. 19)

- Final Fantasy XVI Demo (New release on Steam, Aug. 19)

- GIGANTIC: RAMPAGE EDITION (Available on Epic Games Store, free Aug. 22)

- Skull & Bones (New release on Steam, Aug. 22)

- Alan Wake’s American Nightmare (Xbox, available on Microsoft Store)

- Commandos 3 – HD Remaster (Xbox, available on Microsoft Store)

- Desperados III (Xbox, available on Microsoft Store)

- The Dungeon Of Naheulbeuk: The Amulet Of Chaos (Xbox, available on Microsoft Store)

- The Flame in the Flood (Xbox, available on Microsoft Store)

- FTL: Faster Than Light (Xbox, available on Microsoft Store)

- Genesis Noir (Xbox, available on PC Game Pass)

- House Flipper (Xbox, available on PC Game Pass)

- Medieval Dynasty (Xbox, available on PC Game Pass)

- My Time At Portia (Xbox, available on PC Game Pass)

- Night in the Wood (Xbox, available on Microsoft Store )

- Offworld Trading Company (Xbox, available on PC Game Pass)

- Orwell: Keeping an Eye On You (Xbox, available on Microsoft Store)

- Project Winter (Xbox, available on Microsoft Store)

- Shadow Tactics: Blades of the Shogun (Xbox, available on Microsoft Store)

- Sid Meier’s Civilization VI (Steam, Epic Games Store and Xbox, available on the Microsoft store)

- Sid Meier’s Civilization V (Steam)

- Sid Meier’s Civilization IV (Steam)

- Sid Meier’s Civilization: Beyond Earth (Steam)

- Spirit of the North (Xbox, available on PC Game Pass)

- Wreckfest (Xbox, available on PC Game Pass)

What are you planning to play this weekend? Let us know on X or in the comments below.

What’s Your Story: Lex Story

In the Microsoft Research Podcast series What’s Your Story, Johannes Gehrke explores the who behind the technical and scientific advancements helping to reshape the world. A systems expert whose 10 years with Microsoft spans research and product, Gehrke talks to members of the company’s research community about what motivates their work and how they got where they are today.

In this episode, Gehrke is joined by Lex Story, a model maker and fabricator whose craftsmanship has helped bring research to life through prototyping. He’s contributed to projects such as Jacdac, a hardware-software platform for connecting and coding electronics, and the biological monitoring and intelligence platform Premonition. Story shares how his father’s encouragement helped stoke a curiosity that has informed his pursuit of the sciences and art; how his experience with the Marine Corps intensified his desire for higher education; and how his heritage and a sabbatical in which he attended culinary school might inspire his next career move …

Learn about the projects Story has contributed to:

Subscribe to the Microsoft Research Podcast:

Transcript

[TEASER] [MUSIC PLAYS UNDER DIALOGUE]LEX STORY: Research is about iteration. It’s about failing and failing fast so that you can learn from it. You know, we spin on a dime. Sometimes, we go, whoa, we went the wrong direction. But we learn from it, and it just makes us better.

JOHANNES GEHRKE: Microsoft Research works at the cutting edge. But how much do we know about the people behind the science and technology that we create? This is What’s Your Story, and I’m Johannes Gehrke. In my 10 years with Microsoft, across product and research, I’ve been continuously excited and inspired by the people I work with, and I’m curious about how they became the talented and passionate people they are today. So I sat down with some of them. Now, I’m sharing their stories with you. In this podcast series, you’ll hear from them about how they grew up, the critical choices that shaped their lives, and their advice to others looking to carve a similar path.

[MUSIC FADES]In this episode, I’m talking with model maker and fabricator Lex Story. His creativity and technical expertise in computer-aided industrial design and machining are on display in prototypes and hardware across Microsoft—from Jacdac, a hardware-software platform for connecting and coding electronics, to the biological monitoring and intelligence platform Microsoft Premonition. But he didn’t start out in research. Encouraged by his father, he pursued opportunities to travel, grow, and learn. This led to service in the Marine Corps; work in video game development and jewelry design; and a sabbatical to attend culinary school. He has no plans of slowing down. Here’s my conversation with Lex, beginning with hardware development at Microsoft Research and his time growing up in San Bernardino, California.

GEHRKE: Welcome, Lex.

LEX STORY: Oh, thank you.

GEHRKE: Really great to have you here. Can you tell us a little bit about what you’re doing here at MSR (Microsoft Research) …

STORY: OK.

GEHRKE: … and how did you actually end up here?

STORY: Well, um, within MSR, I actually work in the hardware prototype, hardware development. I find solutions for the researchers, especially in the areas of developing hardware through various fabrication and industrial-like methods. I’m a model maker. My background is as an industrial designer and a product designer. So when I attended school initially, it was to pursue a science; it was [to] pursue chemistry.

GEHRKE: And you grew up in California?

STORY: I grew up in California. I was born in Inglewood, California, and I grew up in San Bernardino, California. Nothing really too exciting happening in San Bernardino, which is why I was compelled to find other avenues, especially to go seek out travel. To do things that I knew that I would be able to look back and say, yes, you’ve definitely done something that was beyond what was expected of you having grown up in San Bernardino.

GEHRKE: And you even had that drive during your high school, or …

STORY: Yeah, high school just didn’t feel like … I think it was the environment that I was growing up in; it didn’t feel as if they really wanted to foster exceptional growth. And I had a father who was … had multiple degrees, and he had a lot of adversity, and he had a lot of challenges. He was an African American. He was a World War II veteran. But he had attained degrees, graduate degrees, in various disciplines, and that included chemical engineering, mechanical engineering, and electrical engineering.

GEHRKE: Wow. All three of them?

STORY: Yes. And so he was … had instilled into us that, you know, education is a vehicle, and if you want to leave this small town, this is how you do it you. But you need to be a vessel. You need to absorb as much as you can from a vast array of disciplines. And not only was he a man of science; he was also an artist. So he always fostered that in us. He said, you know, explore, gain new skills, and the collection of those skills will make you greater overall. He’s not into this idea of being such a specialist. He says lasers are great, but lasers can be blind to what’s happening around them. He says you need to be a spotlight. And he says then you have a great effect on a large—vast, vast array of things instead of just being focused on one thing.

GEHRKE: So you grew up in this environment where the idea was to, sort of, take a holistic view and not, like, a myopic view …

STORY: Yes, yes, yes.

GEHRKE: And so what is the impact of that on you?

STORY: Well, as soon as I went into [LAUGHS] the Marine Corps, I said, now I can attain my education. And …

GEHRKE: So right after school, you went to the …

STORY: I went directly into the Marine Corps right after high school graduation.

GEHRKE: And you told me many times, this is not the Army, right?

STORY: No, it’s the Marine Corps. It’s a big differentiation between … they’re both in military service. However, the Marine Corps is very proud of its traditions, and they instill that in us during boot camp, your indoctrination. It is drilled upon you that you are not just an arm of the military might. You are a professional. You are representative. You will basically become a reflection of all these other Marines who came before you, and you will serve as a point of the young Marines who come after you. So that was drilled into us from day one of boot camp. It was … but it was very grueling. You know, that was the one aspect, and there was a physical aspect. And the Marine Corps boot camp is the longest of all the boot camps. It was, for me, it was 12 weeks of intensive, you know, training. So, you know, the indoctrination is very deep.

GEHRKE: And then so it’s your high school, and you, sort of, have this holistic thinking that you want to bring in.

STORY: Yes.

GEHRKE: And then you go to the Marines.

STORY: I go to the Marines. And the funny thing is that I finished my enlistment, and after my enlistment, I enroll in college, and I say, OK, great; that part of my … phase of life is over. However, I’m still active reserve, and the Desert Shield comes up. So I’m called back, and I said, OK, well, I can come back. I served in a role as an NBC instructor. “NBC” stands for nuclear, biological, chemical warfare. And one of the other roles that I had in the Marine Corps, I was also a nuke tech. That means I knew how to deploy artillery-delivered nuclear-capable warheads. So I had this very technical background mixed in with, like, this military, kind of, decorum. And so I served in Desert Shield, and then eventually that evolved into Operation Desert Storm, and once that was over, I was finally able to go back and actually finish my schooling.

GEHRKE: Mm-hmm. So you studied for a couple of years and then you served?

STORY: Oh, yes, yes.

GEHRKE: OK. OK.

STORY: I had done a four-year enlistment, and you have a period of years after your enlistment where you can be recalled, and it would take very little time for you to get wrapped up for training again to be operational.

GEHRKE: Well, that must be a big disruption right in the middle of your studies, and …

STORY: It was a disruption that …

GEHRKE: And thank you for your service.

STORY: [LAUGHS] Thank you. I appreciate that. It was a disruption, but it was a welcome disruption because, um, it was a job that I knew that I could do well. So I was willing to do it. And when I was ready for college again, it made me a little hungrier for it.

GEHRKE: And you’re already a little bit more mature than the average college student …

STORY: Oh, yes.

GEHRKE: … when you entered, and then now you’re coming back from your, sort of, second time.

STORY: I think it was very important for me to actually have that military experience because [through] that military experience, I had matured. And by the time I was attending college, I wasn’t approaching it as somebody who was in, you know, their teenage years and it’s still formative; you’re still trying to determine who you are as a person. The military had definitely shown me, you know, who I was as a person, and I actually had a few, you know, instances where I actually saw some very horrible things. If anything, being in a war zone during war time, it made me a pacifist, and I have … it increased my empathy. So if anything, there was a benefit from it. I saw some very horrible things, and I saw some amazing things come from human beings on both ends of the spectrum.

GEHRKE: And it’s probably something that’s influenced the rest of your life also in terms of where you went as your career, right?

STORY: Yes.

GEHRKE: So what were you studying, and then what were your next steps?

STORY: Well, I was studying chemistry.

GEHRKE: OK, so not only chemistry and mechanical engineering …

STORY: And then I went away, off to Desert Storm, and when I came back, I decided I didn’t want to study chemistry anymore. I was very interested in industrial design and graphic design, and as I was attending, at ArtCenter College of Design in Pasadena, California, there was this new discipline starting up, but it was only for graduates. It was a graduate program, and it was called computer-aided industrial design. And I said, wait a minute, what am I doing? This is something that I definitely want to do. So it was, like, right at the beginning of computer-generated imagery, and I had known about CAD in a very, very rudimentary form. My father, luckily, had introduced me to computers, so as I was growing up a child in the ’70s and the ’80s, we had computers in our home because my dad was actually building them. So his background and expertise—he was working for RCA; he was working for Northrop Grumman. So I was very familiar with those.

GEHRKE: You built PCs at home, or what, what … ?

STORY: Oh, he built PCs. I learned to program. So I …

GEHRKE: What was your first programming language?

STORY: Oh, it was BASIC …

GEHRKE: BASIC. OK, yup.

STORY: … of course. It was the only thing I could check out in the library that I could get up and running on. So I was surrounded with technology. While most kids went away, summer camp, I spent my summer in the garage with my father. He had metalworking equipment. I understood how to operate metal lathes. I learned how to weld. I learned how to rebuild internal combustion engines. So my childhood was very different from what most children had experienced during their summer break. And also at that time, he was working as a … in chemistry. So his job then, I would go with him and visit his job and watch him work in a lab environment. So it was very, very unique. But also the benefit of that is that being in a lab environment was connected to other sciences. So I got to see other departments. I got to see the geology department. I got to see … there was disease control in the same department that he was in. So I was exposed to all these things. So I was always very hungry and interested, and I was very familiar with sciences. So looking at going back into art school, I said, oh, I’m going to be an industrial designer, and I dabble in art. And I said, wait a minute. I can use technology, and I can create things, and I can guide machines. And that’s the CAM part, computer-aided machining. So I was very interested in that. And then having all of this computer-generated imagery knowledge, I did one of the most knuckleheaded things I could think of, and I went into video game development.

GEHRKE: Why is it knuckleheaded? I mean, it’s probably just the start of big video games.

STORY: Well, I mean … it wasn’t, it wasn’t a science anymore. It was just pursuit of art. And while I was working in video game development, it was fun. I mean, no doubt about it. And that’s how I eventually came to Microsoft, is the company I was working for was bought, purchased by Microsoft.

GEHRKE: But why is it only an art? I’m so curious about this because even computer games, right, there’s probably a lot of science about A/B testing, science of the infrastructure …

STORY: Because I was creating things strictly for the aesthetics.

GEHRKE: I see.

STORY: And I had the struggle in the back of my mind. It’s, like, why don’t we try to create things so that they’re believable, and there’s a break you have to make, and you have to say, is this entertaining? Because in the end, it’s entertainment. And I’ve always had a problem with that.

GEHRKE: It’s about storytelling though, right?

STORY: Yes, it is about storytelling. And that was one of the things that was always told to us: you’re storytellers. But eventually, it wasn’t practical, and I wanted to be impactful, and I couldn’t be impactful doing that. I could entertain you. Yeah, that’s great. It can add some levity to your life. But I was hungry for other things, so I took other jobs eventually. I thought I was going to have a full career with it, and I decided, no, this is not the time to do it.

GEHRKE: That’s a big decision though, right?

STORY: Oh, yeah. Yeah.

GEHRKE: Because, you know, you had a good job at a game company, and then you decided to …

STORY: But there was no, there was no real problem solving for me.

GEHRKE: I see. Mm-hmm.

STORY: And there was opportunity where there was a company, and they were using CAD, and they were running wax printers, and it was a jewel company. And I said, I can do jewelry.

GEHRKE: So what is a wax printer? Explain that.

STORY: Well, here’s … the idea is you can do investment casting.

GEHRKE: Yeah.

STORY: So if you’re creating all your jewelry with CAD, then you can be a jewelry designer and you can have something practical. The reason I took those jobs is because I wanted to learn more about metallurgy and metal casting. And I did that for a bit. And then, eventually, I—because of my computer-generated imagery background—I was able to find a gig with HoloLens. And so as I was working with HoloLens, I kept hearing about research, and they were like, oh yeah, look at this technology research created, and I go, where’s this research department? So I had entertained all these thoughts that maybe I should go and see if I can seek these guys out. And I did find them eventually. My previous manager, Patrick Therien, he brought me in, and I had an interview with him, and he asked me some really poignant questions. And he was a mechanical engineer by background. And I said, I really want to work here, and I need to show you that I can do the work. And he says, you don’t need to prove to me that you can do the work; you have to prove to me that you’re willing to figure it out.

GEHRKE: So how did you do that, or how did you show him?

STORY: I showed him a few examples. I came up with a couple of ideas, and then I demonstrated some solutions, and I was able to present those things to him during the interview. And so I came in as a vendor, and I said, well, if I apply myself, you know, rigorously enough, they’ll see the value in it. And, luckily, I caught the eye of …was it … Gavin [Jancke], and it was Patrick. And they all vouched for me, and they said, yeah, definitely, I have something that I can bring. And it’s always a challenge. The projects that come in, sometimes we don’t know what the solution is going to be, and we have to spend a lot of time thinking about how we’re going to approach it. And we also have to be able to approach it within the scope of what their project entails. They’re trying to prove a concept. They’re trying to publish. I want to make everything look like a car, a beautiful, svelte European designed … but that’s not always what’s asked. So I do have certain parameters I have to stay within, and it’s exciting, you know, to come up with these solutions. I’m generating a concept that in the end becomes a physical manifestation.

GEHRKE: Yeah, so how do you balance this? Because, I mean, from, you know, just listening to your story so far, which is really fascinating, is that there’s always this balance not only on the engineering side but also on the design and art side.

STORY: Yes!

GEHRKE: And then a researcher comes to you and says, I want x.

STORY: Yes, yes, yes. [LAUGHS]

GEHRKE: So how do you, how do you balance that?

STORY: It’s understanding my roles and responsibilities.

GEHRKE: OK.

STORY: It’s a tough conversation. It’s a conversation that I have often with my manager. Because in the end, I’m providing a service, and there are other outlets for me still. Every day, I draw. I have an exercise of drawing where I sit down for at least 45 minutes every day, and I put pen to paper because that is an outlet. I’m a voracious reader. I tackle things because—on a whim. It’s not necessarily that I’m going to become a master of it. So that’s why I attended culinary school. Culinary school fell into this whole curiosity with molecular gastronomy. And I said, wait a minute, I don’t want to be an old man …

GEHRKE: So culinary school is like really very, very in-depth understanding the chemistry of cooking. I mean, the way you understand it …

STORY: Yeah, the molecular gastronomy, the chemistry of cooking. Why does this happen? What is caramelization? What’s the Maillard effect?

GEHRKE: So it’s not about just the recipe for this cake, or so …

STORY: No … the one thing you learn in culinary school very quickly is recipes are inconsequential.

GEHRKE: Oh, really?

STORY: It’s technique.

GEHRKE: OK.

STORY: Because if I have a technique and I know what a roux is and what a roux is doing—and a roux is actually gelatinizing another liquid; it’s a carrier. Once you know these techniques and you can build on those techniques, recipes are irrelevant. Now, the only time recipes matter is when you’re dealing with specific ratios, but that’s still chemistry, and that’s only in baking. But everything else is all technique. I know how to break down the, you know, the connective tissue of a difficult cut of meat. I know what caramelization adds. I understand things like umami. So I look at things in a very, very different way than most people. I’m not like a casual cook, which drove me to go work for Cook’s Illustrated and America’s Test Kitchen, outside of Boston. Because it wasn’t so much about working in a kitchen; it was about exploration, a process. That all falls back into that maddening, you know, part of my personality … it’s like, what is the process? How can I improve that—how can I harness that process?

GEHRKE: So how was it to work there? Because I see food again as, sort of, this beautiful combination of engineering in some sense, creating the recipe. But then there’s also the art of it, right? The presentation.

STORY: Yes …

GEHRKE: And how do you actually put the different flavors together?

STORY: Well, a lot of that’s familiarity because it’s like chemistry. You have familiarity with reactions; you have familiarity and comparisons. So that all falls back into the science. Of course, when I plate it, that falls … I’m now borrowing on my aesthetics, my ability to create aesthetic things. So it fulfills all of those things. So, and that’s why I said, I don’t want to be an old man and say, oh, I wish I’d learned this. I wanted to attend school. I took a sabbatical, attended culinary school.

GEHRKE: So you took a sabbatical from Microsoft?

STORY: Oh, yes, when I was working in video games. Yeah.

GEHRKE: OK.

STORY: I took a sabbatical. I did that. And I was like, great. I got that out of the way. Who’s to say I don’t open a food truck?

GEHRKE: Yeah, I was just wondering, what else is on your bucket list, you know?

STORY: [LAUGHS] I definitely want to do the food truck eventually.

GEHRKE: OK, what would the food truck be about?

STORY: OK. My heritage, my background, is that I’m half Filipino and half French Creole Black.

GEHRKE: You also had a huge family. There’s probably a lot of really good cooking.

STORY: Oh, yeah. Well, I have stepbrothers and stepsisters from my Mexican stepmother, and she grew up cooking Mexican dishes. She was from the Sinaloa area of Mexico. And so I learned a lot of those things, very, very unique regional things that were from her area that you can’t find anywhere else.

GEHRKE: What’s an example? Now you’ve made me curious.

STORY: Capirotada. Capirotada is a Mexican bread pudding, and it utilizes a lot of very common techniques, but the ingredients are very specific to that region. So the preparation is very different. And I’ve had a lot of people actually come to me and say, I’ve never had capirotada like that. And then I have other people who say, that is exactly the way I had it. And by the way, my, you know, my family member was from the Sinaloa area. So, yeah, but from my Filipino heritage background, I would love to do something that is a fusion of Filipino foods. There’s a lot of great, great food like longganisa; there’s a pancit. There’s adobo. That’s actually adding vinegars to braised meats and getting really great results that way. It’s just a … but there’s a whole bevy of … but my idea eventually for a food truck, I’m going to keep that under wraps for now until I finally reveal it. Who’s, who’s to say when it happens.

GEHRKE: OK. Wow, that sounds super interesting. And so you bring all of these elements back into your job here at MSR in a way, because you’re saying, well, you have these different outlets for your art. But then you come here, and … what are some of the things that you’ve created over the last few years that you’re especially proud of?

STORY: Oh, phew … that would … Project Eclipse.

GEHRKE: Eclipse, uh-huh.

STORY: That’s the hyperlocal air-quality sensor.

GEHRKE: And this is actually something that was really deployed in cities …

STORY: Yes. It was deployed in Chicago …

GEHRKE: … so it had to be both aesthetically good and … to look nice, not only functional.

STORY: Well, it had not only … it had … first of all, I approached it from it has to be functional. But knowing that it was going to deploy, I had to design everything with a design for manufacturing method. So DFM—design for manufacturing—is from the ground up, I have to make sure that there are certain features as part of the design, and that is making sure I have draft angles because the idea is that eventually this is going to be a plastic-injected part.

GEHRKE: What is a draft angle?

STORY: A draft angle is so that a part can get pulled from a mold.

GEHRKE: OK …

STORY: If I build things with pure vertical walls, there’s too much even stress that the part will not actually extract from the mold. Every time you look at something that’s plastic injected, there’s something called the draft angle, where there’s actually a slight taper. It’s only 2 to 4 degrees, but it’s in there, and it needs to be in there; otherwise, you’re never going to get the part out of the mold. So I had to keep that in mind. So from the ground up, I had designed this thing—the end goal of this thing is for it to be reproduced in a production capacity. And so DFM was from day one. They came to me earlier, and they gave me a couple of parts that they had prototyped on a 3D printer. So I had to go through and actually re-engineer the entire design so that it would be able to hold the components, but …

GEHRKE: And to be waterproof and so on, right?

STORY: Well, waterproofing, that was another thing. We had a lot of iterations—and that was the other thing about research. Research is about iteration. It’s about failing and failing fast so that you can learn from it. Failure is not a four-lettered word. In research, we fail so that we use that as a steppingstone so that we can make discoveries and then succeed on that …

GEHRKE: We learn.

STORY: Yes, it’s a learning opportunity. As a matter of fact, the very first time we fail, I go to the whiteboard and write “FAIL” in big capital letters. It’s our very first one, and it’s our “First Attempt In Learning.” And that’s what I remember it as. It’s my big acronym. But it’s a great process. You know, we spin on a dime. Sometimes, we go, whoa, we went the wrong direction. But we learn from it, and it just makes us better.

GEHRKE: And sometimes you have to work under time pressure because, you know, there’s no …

STORY: There isn’t a single thing we don’t do in the world that isn’t under time pressure. Working in a restaurant … when I had to, as they say, grow my bones after culinary school, you work in a restaurant, and you gain that experience. And one of the …

GEHRKE: So in your sabbatical, you didn’t only go to culinary school; you actually worked in this restaurant, as well?

STORY: Oh, it’s required.

GEHRKE: It’s a requirement? OK.

STORY: Yeah, yeah, it’s a requirement that you understand, you familiarize yourself with the rigor. So one of the things we used to do is … there was a Denny’s next to LAX in Los Angeles. Because I was attending school in Pasadena. And I would go and sign up to be the fry cook at a Denny’s that doesn’t close. It’s 24 hours.

GEHRKE: Yup …

STORY: And these people would come in, these taxis would come in, and they need to eat, and they need to get in and out.

GEHRKE: As a student, I would go to Denny’s at absurd times …

STORY: Oh, my, it was like drinking from a fire hose. I was getting crushed every night. But after a while, you know, within two or three weeks, I was like a machine, you know. And it was just like, oh, that’s not a problem. Oh, I have five orders here of this. And I need to make sure those are separated from these orders. And you have this entire process, this organization that happens in the back of your mind, you know. And that’s part of it. I mean, every job I’ve ever had, there’s always going to be a time pressure.

GEHRKE: But it must be even more difficult in research because you’re not building like, you know, Denny’s, I think you can fry probably five or 10 different things. Whereas here, you know, everything is unique, and everything is different. And then you, you know, you learn and improve and fail.

STORY: Yes, yes. But, I mean, it’s … but it’s the same as dealing with customers. Everyone’s going to have a different need and a different … there’s something that everyone’s bringing unique to the table. And when I was working at Denny’s, you’re going to have the one person that’s going to make sure that, oh, they want something very, very specific on their order. It’s no different than I’m working with, you know, somebody I’m offering a service to in a research environment.

GEHRKE: Mm-hmm. Mm-hmm. That’s true. I hadn’t even thought about this. Next time when I go to a restaurant, I’ll be very careful with the special orders. [LAUGHTER]

STORY: That’s why I’m exceptionally kind to those people who work in restaurants because I’ve been on the other side of the line.

GEHRKE: So, so you have seen many sides, right? And you especially are working also across developers, PMs, researchers. How do you bridge all of these different gaps? Because all of these different disciplines come with a different history and different expectations, and you work across all of them.

STORY: There was something somebody said to me years ago, and he says, never be the smartest guy in the room. Because at that point, you stop learning. And I was very lucky enough to work with great people like Mike Sinclair, Bill Buxton—visionaries. And one of the things that was always impressed upon me was they really let you shine, and they stepped back, and then when you had your chance to shine, they would celebrate you. And when it was their time to shine, you step back and make sure that they overshined. So it’s being extremely receptive to every idea. There’s nothing, there’s no … what do they say? The only bad idea is the lack of …

GEHRKE: Not having any ideas …

STORY: … having any ideas.

GEHRKE: Right, right …

STORY: Yeah. So being extremely flexible, receptive, willing to try things that even though they are uncomfortable, that’s I think where people find the most success.

GEHRKE: That’s such great advice. Reflecting back on your super-interesting career and all the different things that you’ve seen and also always stretching the boundaries, what’s your advice for anybody to have a great career if somebody’s starting out or is even changing jobs?

STORY: Gee, that’s a tough one. Starting out or changing—I can tell you about how to change jobs. Changing jobs … strip yourself of your ego. Be willing to be the infant, but also be willing to know when you’re wrong, and be willing to have your mind changed. That’s about it.

GEHRKE: Such, such great advice.

STORY: Yeah.

GEHRKE: Thanks so much, Lex, for the great, great conversation.

STORY: Not a problem. You’re welcome.

[MUSIC]To learn more about Lex and to see pictures of him as a child or from his time in the Marines, visit aka.ms/ResearcherStories.

[MUSIC FADES]

The post What’s Your Story: Lex Story appeared first on Microsoft Research.

Classifier-Free Guidance Is a Predictor-Corrector

We investigate the unreasonable effectiveness of classifier-free guidance (CFG).

CFG is the dominant method of conditional sampling for text-to-image diffusion models, yet

unlike other aspects of diffusion, it remains on shaky theoretical footing. In this paper, we disprove common misconceptions, by showing that CFG interacts differently with DDPM and DDIM, and neither sampler with CFG generates the gamma-powered distribution.

Then, we clarify the behavior of CFG by showing that it is a kind of Predictor-Corrector (PC) method that alternates between denoising and sharpening, which we call…Apple Machine Learning Research

Enhance call center efficiency using batch inference for transcript summarization with Amazon Bedrock

Today, we are excited to announce general availability of batch inference for Amazon Bedrock. This new feature enables organizations to process large volumes of data when interacting with foundation models (FMs), addressing a critical need in various industries, including call center operations.

Call center transcript summarization has become an essential task for businesses seeking to extract valuable insights from customer interactions. As the volume of call data grows, traditional analysis methods struggle to keep pace, creating a demand for a scalable solution.

Batch inference presents itself as a compelling approach to tackle this challenge. By processing substantial volumes of text transcripts in batches, frequently using parallel processing techniques, this method offers benefits compared to real-time or on-demand processing approaches. It is particularly well suited for large-scale call center operations where instantaneous results are not always a requirement.

In the following sections, we provide a detailed, step-by-step guide on implementing these new capabilities, covering everything from data preparation to job submission and output analysis. We also explore best practices for optimizing your batch inference workflows on Amazon Bedrock, helping you maximize the value of your data across different use cases and industries.

Solution overview

The batch inference feature in Amazon Bedrock provides a scalable solution for processing large volumes of data across various domains. This fully managed feature allows organizations to submit batch jobs through a CreateModelInvocationJob API or on the Amazon Bedrock console, simplifying large-scale data processing tasks.

In this post, we demonstrate the capabilities of batch inference using call center transcript summarization as an example. This use case serves to illustrate the broader potential of the feature for handling diverse data processing tasks. The general workflow for batch inference consists of three main phases:

- Data preparation – Prepare datasets as needed by the chosen model for optimal processing. To learn more about batch format requirements, see Format and upload your inference data.

- Batch job submission – Initiate and manage batch inference jobs through the Amazon Bedrock console or API.

- Output collection and analysis – Retrieve processed results and integrate them into existing workflows or analytics systems.

By walking through this specific implementation, we aim to showcase how you can adapt batch inference to suit various data processing needs, regardless of the data source or nature.

Prerequisites

To use the batch inference feature, make sure you have satisfied the following requirements:

- An active AWS account.

- An Amazon Simple Storage Service (Amazon S3) bucket where your data prepared for batch inference is stored. To learn more about uploading files in Amazon S3, see Uploading objects.

- Access to your selected models hosted on Amazon Bedrock. Refer to the supported models and their capabilities page for a complete list of supported models. Amazon Bedrock supports batch inference on the following modalities:

- Text to embeddings

- Text to text

- Text to image

- Image to images

- Image to embeddings

- An AWS Identity and Access Management (IAM) role for batch inference with a trust policy and Amazon S3 access (read access to the folder containing input data, and write access to the folder storing output data).

Prepare the data

Before you initiate a batch inference job for call center transcript summarization, it’s crucial to properly format and upload your data. The input data should be in JSONL format, with each line representing a single transcript for summarization.

Each line in your JSONL file should follow this structure:

Here, recordId is an 11-character alphanumeric string, working as a unique identifier for each entry. If you omit this field, the batch inference job will automatically add it in the output.

The format of the modelInput JSON object should match the body field for the model that you use in the InvokeModel request. For example, if you’re using Anthropic Claude 3 on Amazon Bedrock, you should use the MessageAPI and your model input might look like the following code:

When preparing your data, keep in mind the quotas for batch inference listed in the following table.

| Limit Name | Value | Adjustable Through Service Quotas? |

| Maximum number of batch jobs per account per model ID using a foundation model | 3 | Yes |

| Maximum number of batch jobs per account per model ID using a custom model | 3 | Yes |

| Maximum number of records per file | 50,000 | Yes |

| Maximum number of records per job | 50,000 | Yes |

| Minimum number of records per job | 1,000 | No |

| Maximum size per file | 200 MB | Yes |

| Maximum size for all files across job | 1 GB | Yes |

Make sure your input data adheres to these size limits and format requirements for optimal processing. If your dataset exceeds these limits, considering splitting it into multiple batch jobs.

Start the batch inference job

After you have prepared your batch inference data and stored it in Amazon S3, there are two primary methods to initiate a batch inference job: using the Amazon Bedrock console or API.

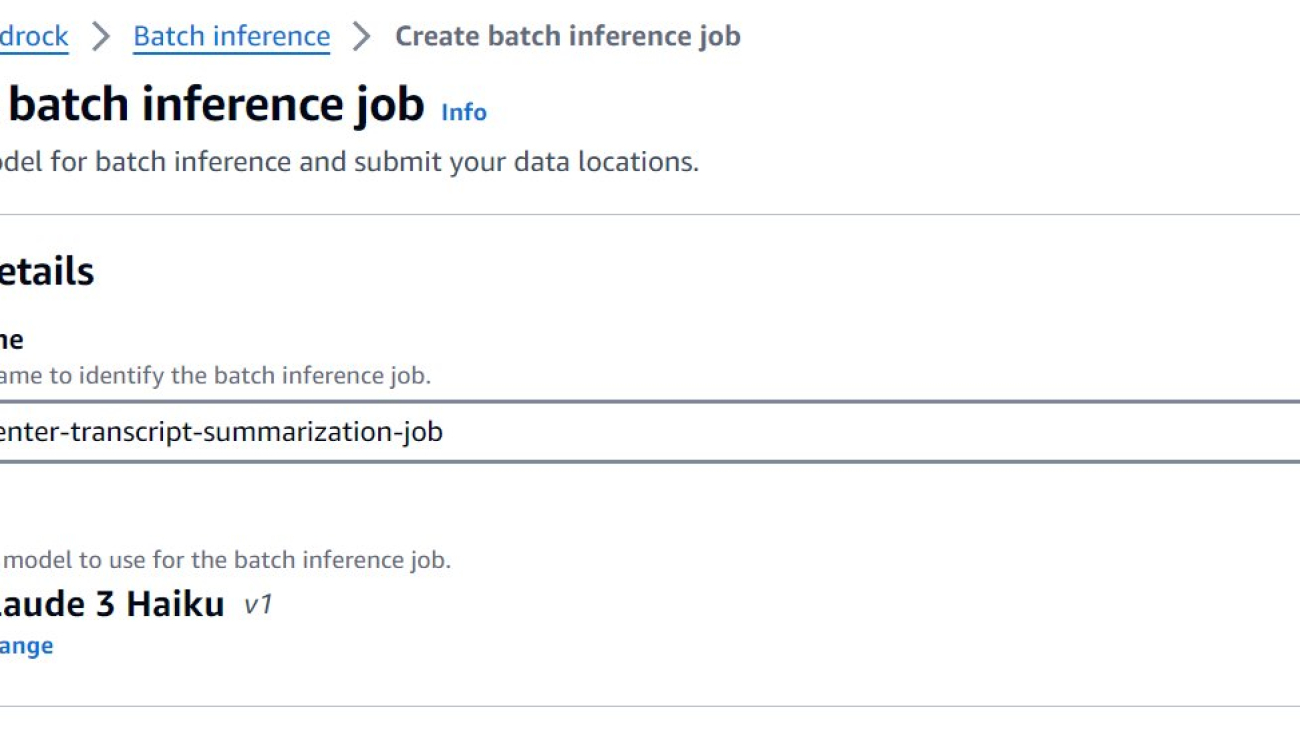

Run the batch inference job on the Amazon Bedrock console

Let’s first explore the step-by-step process of starting a batch inference job through the Amazon Bedrock console.

- On the Amazon Bedrock console, choose Inference in the navigation pane.

- Choose Batch inference and choose Create job.

- For Job name, enter a name for the training job, then choose an FM from the list. In this example, we choose Anthropic Claude-3 Haiku as the FM for our call center transcript summarization job.

- Under Input data, specify the S3 location for your prepared batch inference data.

- Under Output data, enter the S3 path for the bucket storing batch inference outputs.

- Your data is encrypted by default with an AWS managed key. If you want to use a different key, select Customize encryption settings.

- Under Service access, select a method to authorize Amazon Bedrock. You can select Use an existing service role if you have an access role with fine-grained IAM policies or select Create and use a new service role.

- Optionally, expand the Tags section to add tags for tracking.

- After you have added all the required configurations for your batch inference job, choose Create batch inference job.

You can check the status of your batch inference job by choosing the corresponding job name on the Amazon Bedrock console. When the job is complete, you can see more job information, including model name, job duration, status, and locations of input and output data.

Run the batch inference job using the API

Alternatively, you can initiate a batch inference job programmatically using the AWS SDK. Follow these steps:

- Create an Amazon Bedrock client:

- Configure the input and output data:

- Start the batch inference job:

- Retrieve and monitor the job status:

Replace the placeholders {bucket_name}, {input_prefix}, {output_prefix}, {account_id}, {role_name}, your-job-name, and model-of-your-choice with your actual values.

By using the AWS SDK, you can programmatically initiate and manage batch inference jobs, enabling seamless integration with your existing workflows and automation pipelines.

Collect and analyze the output

When your batch inference job is complete, Amazon Bedrock creates a dedicated folder in the specified S3 bucket, using the job ID as the folder name. This folder contains a summary of the batch inference job, along with the processed inference data in JSONL format.

You can access the processed output through two convenient methods: on the Amazon S3 console or programmatically using the AWS SDK.

Access the output on the Amazon S3 console

To use the Amazon S3 console, complete the following steps:

- On the Amazon S3 console, choose Buckets in the navigation pane.

- Navigate to the bucket you specified as the output destination for your batch inference job.

- Within the bucket, locate the folder with the batch inference job ID.

Inside this folder, you’ll find the processed data files, which you can browse or download as needed.

Access the output data using the AWS SDK

Alternatively, you can access the processed data programmatically using the AWS SDK. In the following code example, we show the output for the Anthropic Claude 3 model. If you used a different model, update the parameter values according to the model you used.

The output files contain not only the processed text, but also observability data and the parameters used for inference. The following is an example in Python:

In this example using the Anthropic Claude 3 model, after we read the output file from Amazon S3, we process each line of the JSON data. We can access the processed text using data['modelOutput']['content'][0]['text'], the observability data such as input/output tokens, model, and stop reason, and the inference parameters like max tokens, temperature, top-p, and top-k.

In the output location specified for your batch inference job, you’ll find a manifest.json.out file that provides a summary of the processed records. This file includes information such as the total number of records processed, the number of successfully processed records, the number of records with errors, and the total input and output token counts.

You can then process this data as needed, such as integrating it into your existing workflows, or performing further analysis.

Remember to replace your-bucket-name, your-output-prefix, and your-output-file.jsonl.out with your actual values.

By using the AWS SDK, you can programmatically access and work with the processed data, observability information, inference parameters, and the summary information from your batch inference jobs, enabling seamless integration with your existing workflows and data pipelines.

Conclusion

Batch inference for Amazon Bedrock provides a solution for processing multiple data inputs in a single API call, as illustrated through our call center transcript summarization example. This fully managed service is designed to handle datasets of varying sizes, offering benefits for various industries and use cases.

We encourage you to implement batch inference in your projects and experience how it can optimize your interactions with FMs at scale.

About the Authors

Yanyan Zhang is a Senior Generative AI Data Scientist at Amazon Web Services, where she has been working on cutting-edge AI/ML technologies as a Generative AI Specialist, helping customers use generative AI to achieve their desired outcomes. Yanyan graduated from Texas A&M University with a PhD in Electrical Engineering. Outside of work, she loves traveling, working out, and exploring new things.

Yanyan Zhang is a Senior Generative AI Data Scientist at Amazon Web Services, where she has been working on cutting-edge AI/ML technologies as a Generative AI Specialist, helping customers use generative AI to achieve their desired outcomes. Yanyan graduated from Texas A&M University with a PhD in Electrical Engineering. Outside of work, she loves traveling, working out, and exploring new things.

Ishan Singh is a Generative AI Data Scientist at Amazon Web Services, where he helps customers build innovative and responsible generative AI solutions and products. With a strong background in AI/ML, Ishan specializes in building Generative AI solutions that drive business value. Outside of work, he enjoys playing volleyball, exploring local bike trails, and spending time with his wife and dog, Beau.

Ishan Singh is a Generative AI Data Scientist at Amazon Web Services, where he helps customers build innovative and responsible generative AI solutions and products. With a strong background in AI/ML, Ishan specializes in building Generative AI solutions that drive business value. Outside of work, he enjoys playing volleyball, exploring local bike trails, and spending time with his wife and dog, Beau.

Rahul Virbhadra Mishra is a Senior Software Engineer at Amazon Bedrock. He is passionate about delighting customers through building practical solutions for AWS and Amazon. Outside of work, he enjoys sports and values quality time with his family.

Rahul Virbhadra Mishra is a Senior Software Engineer at Amazon Bedrock. He is passionate about delighting customers through building practical solutions for AWS and Amazon. Outside of work, he enjoys sports and values quality time with his family.

Mohd Altaf is an SDE at AWS AI Services based out of Seattle, United States. He works with AWS AI/ML tech space and has helped building various solutions across different teams at Amazon. In his spare time, he likes playing chess, snooker and indoor games.

Mohd Altaf is an SDE at AWS AI Services based out of Seattle, United States. He works with AWS AI/ML tech space and has helped building various solutions across different teams at Amazon. In his spare time, he likes playing chess, snooker and indoor games.

Fine-tune Meta Llama 3.1 models for generative AI inference using Amazon SageMaker JumpStart

Fine-tuning Meta Llama 3.1 models with Amazon SageMaker JumpStart enables developers to customize these publicly available foundation models (FMs). The Meta Llama 3.1 collection represents a significant advancement in the field of generative artificial intelligence (AI), offering a range of capabilities to create innovative applications. The Meta Llama 3.1 models come in various sizes, with 8 billion, 70 billion, and 405 billion parameters, catering to diverse project needs.

What makes these models stand out is their ability to understand and generate text with impressive coherence and nuance. Supported by context lengths of up to 128,000 tokens, the Meta Llama 3.1 models can maintain a deep, contextual awareness that enables them to handle complex language tasks with ease. Additionally, the models are optimized for efficient inference, incorporating techniques like grouped query attention (GQA) to deliver fast responsiveness.

In this post, we demonstrate how to fine-tune Meta Llama 3-1 pre-trained text generation models using SageMaker JumpStart.

Meta Llama 3.1

One of the notable features of the Meta Llama 3.1 models is their multilingual prowess. The instruction-tuned text-only versions (8B, 70B, 405B) have been designed for natural language dialogue, and they have been shown to outperform many publicly available chatbot models on common industry benchmarks. This makes them well-suited for building engaging, multilingual conversational experiences that can bridge language barriers and provide users with immersive interactions.

At the core of the Meta Llama 3.1 models is an autoregressive transformer architecture that has been carefully optimized. The tuned versions of the models also incorporate advanced fine-tuning techniques, such as supervised fine-tuning (SFT) and reinforcement learning with human feedback (RLHF), to align the model outputs with human preferences. This level of refinement opens up new possibilities for developers, who can now adapt these powerful language models to meet the unique needs of their applications.

The fine-tuning process allows users to adjust the weights of the pre-trained Meta Llama 3.1 models using new data, improving their performance on specific tasks. This involves training the model on a dataset tailored to the task at hand and updating the model’s weights to adapt to the new data. Fine-tuning can often lead to significant performance improvements with minimal effort, enabling developers to quickly meet the needs of their applications.

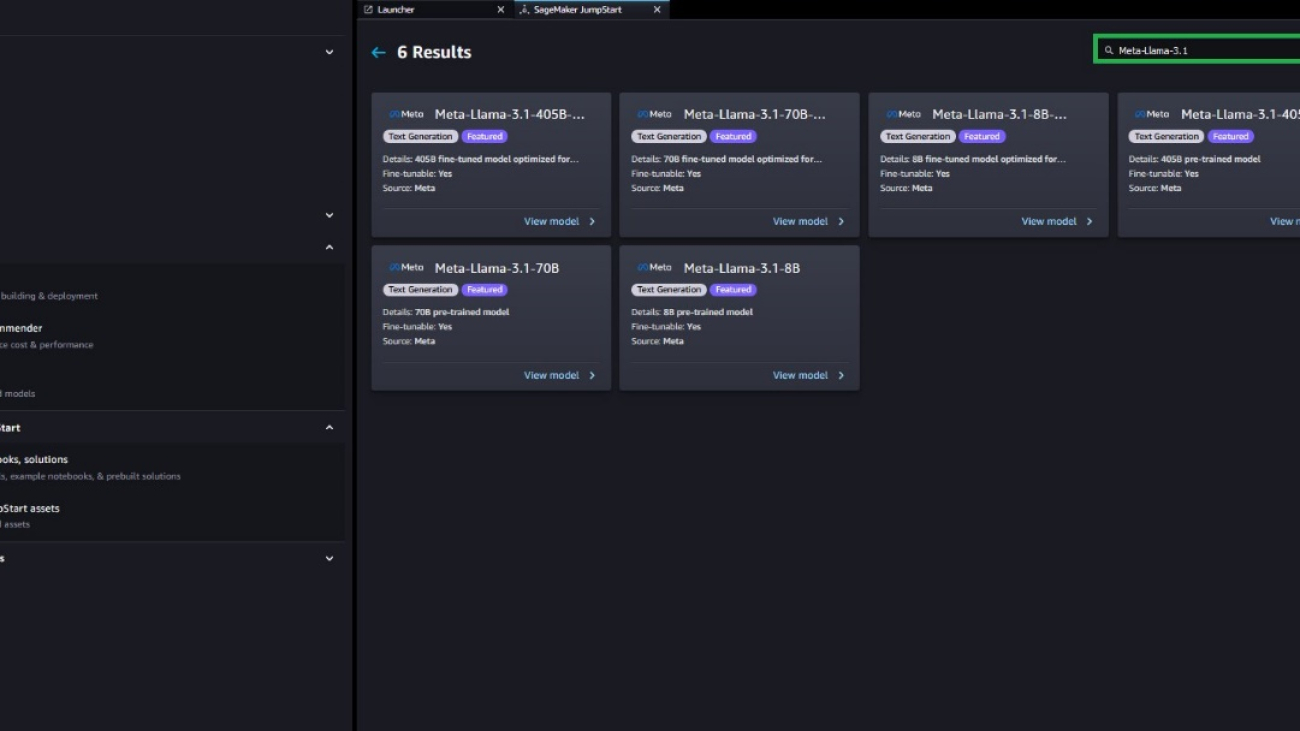

SageMaker JumpStart now supports the Meta Llama 3.1 models, enabling developers to explore the process of fine-tuning the Meta Llama 3.1 405B model using the SageMaker JumpStart UI and SDK. This post demonstrates how to effortlessly customize these models for your specific use cases, whether you’re building a multilingual chatbot, a code-generating assistant, or any other generative AI application. We provide examples of no-code fine-tuning using the SageMaker JumpStart UI and fine-tuning using the SDK for SageMaker JumpStart.

SageMaker JumpStart

With SageMaker JumpStart, machine learning (ML) practitioners can choose from a broad selection of publicly available FMs. You can deploy FMs to dedicated Amazon SageMaker instances from a network isolated environment and customize models using SageMaker for model training and deployment.

You can now discover and deploy Meta Llama 3.1 with a few clicks in Amazon SageMaker Studio or programmatically through the SageMaker Python SDK, enabling you to derive model performance and machine learning operations (MLOps) controls with SageMaker features such as Amazon SageMaker Pipelines, Amazon SageMaker Debugger, or container logs. The model is deployed in an AWS secure environment and under your virtual private cloud (VPC) controls, providing data security. In addition, you can fine-tune Meta Llama 3.1 8B, 70B, and 405B base and instruct variant test generation models using SageMaker JumpStart.

Fine-tuning configurations for Meta Llama 3.1 models in SageMaker JumpStart

SageMaker JumpStart offers fine-tuning for Meta LIama 3.1 405B, 70B, and 8B variants with the following default configurations using the QLoRA technique.

| Model ID | Training Instance | Input Sequence Length | Training Batch Size | Types of Self-Supervised Training | QLoRA/LoRA | ||

| Domain Adaptation Fine-Tuning | Instruction Fine-Tuning | Chat Fine-Tuning | |||||

| meta-textgeneration-llama-3-1-405b-instruct-fp8 | ml.p5.48xlarge | 8,000 | 8 | ✓ | Planned | ✓ | QLoRA |

| meta-textgeneration-llama-3-1-405b-fp8 | ml.p5.48xlarge | 8,000 | 8 | ✓ | Planned | ✓ | QLoRA |

| meta-textgeneration-llama-3-1-70b-instruct | ml.g5.48xlarge | 2,000 | 8 | ✓ | ✓ | ✓ | QLoRA (8-bits) |

| meta-textgeneration-llama-3-1-70b | ml.g5.48xlarge | 2,000 | 8 | ✓ | ✓ | ✓ | QLoRA (8-bits) |

| meta-textgeneration-llama-3-1-8b-instruct | ml.g5.12xlarge | 2,000 | 4 | ✓ | ✓ | ✓ | LoRA |

| meta-textgeneration-llama-3-1-8b | ml.g5.12xlarge | 2,000 | 4 | ✓ | ✓ | ✓ | LoRA |

You can fine-tune the models using either the SageMaker Studio UI or SageMaker Python SDK. We discuss both methods in this post.

No-code fine-tuning using the SageMaker JumpStart UI

In SageMaker Studio, you can access Meta Llama 3.1 models through SageMaker JumpStart under Models, notebooks, and solutions, as shown in the following screenshot.

If you don’t see any Meta Llama 3.1 models, update your SageMaker Studio version by shutting down and restarting. For more information about version updates, refer to Shut down and Update Studio Classic Apps.

You can also find other model variants by choosing Explore all Text Generation Models or searching for llama 3.1 in the search box.

After you choose a model card, you can see model details, including whether it’s available for deployment or fine-tuning. Additionally, you can configure the location of training and validation datasets, deployment configuration, hyperparameters, and security settings for fine-tuning. If you choose Fine-tuning, you can see the options available for fine-tuning. You can then choose Train to start the training job on a SageMaker ML instance.

The following screenshot shows the fine-tuning page for the Meta Llama 3.1 405B model; however, you can fine-tune the 8B and 70B Llama 3.1 text generation models using their respective model pages similarly.

To fine-tune these models, you need to provide the following:

- Amazon Simple Storage Service (Amazon S3) URI for the training dataset location

- Hyperparameters for the model training

- Amazon S3 URI for the output artifact location

- Training instance

- VPC

- Encryption settings

- Training job name

To use Meta Llama 3.1 models, you need to accept the End User License Agreement (EULA). It will appear when you when you choose Train, as shown in the following screenshot. Choose I have read and accept EULA and AUP to start the fine-tuning job.

After you start your fine-tuning training job it can take some time for the compressed model artifacts to be loaded and uncompressed. This can take up to 4 hours. After the model is fine-tuned, you can deploy it using the model page on SageMaker JumpStart. The option to deploy the fine-tuned model will appear when fine-tuning is finished, as shown in the following screenshot.

Fine-tuning using the SDK for SageMaker JumpStart

The following sample code shows how to fine-tune the Meta Llama 3.1 405B base model on a conversational dataset. For simplicity, we show how to fine-tune and deploy the Meta Llama 3.1 405B model on a single ml.p5.48xlarge instance.

Let’s load and process the dataset in conversational format. The example dataset for this demonstration is OpenAssistant’s TOP-1 Conversation Threads.

The training data should be formulated in JSON lines (.jsonl) format, where each line is a dictionary representing a set of conversations. The following code shows an example within the JSON lines file. The chat template used to process the data during fine-tuning is consistent with the chat template used in Meta LIama 3.1 405B Instruct (Hugging Face). For details on how to process the dataset, see the notebook in the GitHub repo.

Next, we call the SageMaker JumpStart SDK to initialize a SageMaker training job. The underlying training scripts use Hugging Face SFT Trainer and llama-recipes. To customize the values of hyperparameters, see the GitHub repo.

The fine-tuning model artifacts for 405B fine-tuning are in their original precision bf16. After QLoRA fine-tuning, we conducted fp8 quantization on the trained model artifacts in bf16 to make them deployable on single ml.p5.48xlarge instance.

After the fine-tuning, you can deploy the fine-tuned model to a SageMaker endpoint:

You can also find the code for fine-tuning Meta Llama 3.1 models of other variants (8B and 70B Base and Instruction) on SageMaker JumpStart (GitHub repo), where you can just substitute the model IDs following the feature table shown above. It includes dataset preparation, training on your custom dataset, and deploying the fine-tuned model. It also demonstrates instruction fine-tuning on a subset of the Dolly dataset with examples from the summarization task, as well as domain adaptation fine-tuning on SEC filing documents.

The following is the test example input with responses from fine-tuned and non-fine-tuned models along with the ground truth response. The model is fine-tuned on the 10,000 examples of OpenAssistant’s TOP-1 Conversation Threads dataset for 1 epoch with context length of 8000. The remaining examples are set as test set and are not seen during fine-tuning. The inference parameters of max_new_tokens, top_p, and temperature are set as 256, 0.96, and 0.2, respectively.

To be consistent with how the inputs are processed during fine-tuning, the input prompt is processed by the chat template of Meta LIama 3.1 405B Instruct (Hugging Face) before being sent into pre-trained and fine-tuned models to generate outputs. Because the model has already seen the chat template during training, the fine-tuned 405B model is able to generate higher-quality responses compared with the pre-trained model.

We provide the following input to the model:

The following is the ground truth response:

The following is the response from the non-fine-tuned model:

We get the following response from the fine-tuned model:

We observe better results from the fine-tuned model because the model was exposed to additional relevant data, and therefore was able to better adapt in terms of knowledge and format.

Clean up

You can delete the endpoint after use to save on cost.

Conclusion

In this post, we discussed fine-tuning Meta Llama 3.1 models using SageMaker JumpStart. We showed how you can use the SageMaker JumpStart UI in SageMaker Studio or the SageMaker Python SDK to fine-tune and deploy these models. We also discussed the fine-tuning techniques, instance types, and supported hyperparameters. In addition, we outlined recommendations for optimized training based on various tests we carried out. The results for fine-tuning the three models over two datasets are shown in the appendix at the end of this post. As we can see from these results, fine-tuning improves summarization compared to non-fine-tuned models.

As a next step, you can try fine-tuning these models on your own dataset using the code provided in the GitHub repository to test and benchmark the results for your use cases.

About the Authors

Xin Huang is a Senior Applied Scientist at AWS

James Park is a Principal Solution Architect – AI/ML at AWS

Saurabh Trikande is a Senior Product Manger Technical at AWS

Hemant Singh is an Applied Scientist at AWS

Rahul Sharma is a Senior Solution Architect at AWS

Suhas Maringanti is an Applied Scientist at AWS

Akila Premachandra is an Applied Scientist II at AWS

Ashish Khetan is a Senior Applied Scientist at AWS

Zhipeng Wang is an Applied Science Manager at AWS

Appendix

This appendix provides additional information about qualitative performance benchmarking, between fine-tuned 405B on a chat dataset and a pre-trained 405B base model, on the test set of the OpenAssistant’s TOP-1 Conversation Threads. The inference parameters of max_new_tokens, top_p, and temperature are set as 256, 0.96, and 0.2, respectively.

| Inputs | Pre-Trained | Fine-Tuned | Ground Truth |