Today, we are excited to announce the availability of Llama 3.2 in Amazon SageMaker JumpStart and Amazon Bedrock. The Llama 3.2 models are a collection of state-of-the-art pre-trained and instruct fine-tuned generative AI models that come in various sizes, from lightweight text-only 1B and 3B parameter models suitable for edge devices to small and medium-sized 11B and 90B parameter models capable of sophisticated reasoning tasks, including multimodal support for high-resolution images. SageMaker JumpStart is a machine learning (ML) hub that provides access to algorithms, models, and ML solutions so you can quickly get started with ML. Amazon Bedrock is a fully managed service that offers a choice of high-performing foundation models (FMs) from leading AI companies, like Meta, through a single API, along with a broad set of capabilities you need to build generative AI applications with security, privacy, and responsible AI.

In this post, we demonstrate how you can use Llama 3.2 11B and 90B models for a variety of vision-based use cases. This is the first time Meta’s Llama models have been released with vision capabilities. These new capabilities expand the usability of Llama models from their traditional text-only applications. The vision-based use cases that we discuss in this post include document visual question answering, extracting structured entity information from images, and image captioning.

Overview of Llama 3.2 11B and 90B Vision models

The Llama 3.2 collection of multimodal and multilingual large language models (LLMs) comprises pre-trained and instruction-tuned generative models in a variety of sizes. The 11B and 90B models are multimodal: they support text in/text out and text+image in/text out.

Llama 3.2 11B and 90B are the first Llama models to support vision tasks, with a new model architecture that integrates image encoder representations into the language model. The new models are designed to be more efficient for AI workloads, with reduced latency and improved performance, making them suitable for a wide range of applications. All Llama 3.2 models support a 128,000-token context length, maintaining the expanded token capacity introduced in Llama 3.1. Additionally, the models offer improved multilingual support for eight languages: English, German, French, Italian, Portuguese, Hindi, Spanish, and Thai.

Llama 3.2 models are available today for inferencing in SageMaker JumpStart and Amazon Bedrock. With SageMaker JumpStart, you can access Llama 3.2 models initially in the US East (Ohio) AWS Region, on the required instance types. Meta’s Llama 3.2 90B and 11B models are also available in Amazon Bedrock in the US West (Oregon) Region, and in the US East (Ohio, N. Virginia) Regions via cross-region inference. Llama 3.2 1B and 3B models are available in the US West (Oregon) and Europe (Frankfurt) Regions, and in the US East (Ohio, N. Virginia) and Europe (Ireland, Paris) Regions via cross-region inference, with expanded regional availability planned.

Solution overview

In the following sections, we walk through how to configure Llama 3.2 vision models in Amazon Bedrock and Amazon SageMaker JumpStart for vision-based reasoning. We also demonstrate use cases for document question answering, entity extraction, and caption generation.

For the examples shown in this post, we use the Llama 3.2 90B model unless otherwise noted. The fashion images are from the Fashion Product Images Dataset. The caption generation images are from the Human Preference Synthetic Dataset. The interior design and real estate images are from the Interior design dataset.

Prerequisites

The following prerequisites are needed to implement the steps outlined in this post:

- An AWS account that will contain all your AWS resources.

- An AWS Identity and Access Management (IAM) role to access Amazon SageMaker and Amazon Bedrock. For more information, see Identity and Access Management for Amazon SageMaker and Identity and access management for Amazon Bedrock.

- Access to Amazon SageMaker Studio, a SageMaker notebook instance, or an interactive development environment (IDE) such as PyCharm or Visual Studio Code.

For information about how to set up Llama 3.2 model access for Amazon Bedrock, see the Amazon Bedrock launch post. For details on creating model endpoints in SageMaker JumpStart, refer to the SageMaker JumpStart launch post.

Configure Llama 3.2 for vision-based reasoning in Amazon Bedrock

To set up vision-based reasoning tasks with Llama 3.2 models in Amazon Bedrock, use the following code snippet:
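A minimal sketch of the client setup, assuming the AWS SDK for Python (boto3) is installed and credentials are configured. The model ID below is assumed to be the cross-region inference profile for Llama 3.2 90B; confirm the exact identifier in the Amazon Bedrock console before use. `MODEL_ID`, `REGION`, and `make_bedrock_runtime_client` are illustrative names, not part of any AWS API.

```python
# Assumed identifier for the Llama 3.2 90B cross-region inference profile.
# Verify it against the model catalog in your account before relying on it.
MODEL_ID = "us.meta.llama3-2-90b-instruct-v1:0"

# US West (Oregon), one of the Regions named in this post.
REGION = "us-west-2"


def make_bedrock_runtime_client(region_name: str = REGION):
    """Create a Bedrock runtime client (the client that exposes converse())."""
    # Imported here so the constants above can be reused without the SDK installed.
    import boto3

    return boto3.client("bedrock-runtime", region_name=region_name)
```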

Amazon Bedrock supports the messages object as part of the Converse API. With the Converse API, unlike with SageMaker JumpStart, you don’t have to convert the image to base64; you pass the raw image bytes.

You can read the image with the following code:
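As a minimal sketch, the image can be read as raw bytes with the standard library. The helper name `read_image_bytes` is hypothetical.

```python
from pathlib import Path


def read_image_bytes(image_path: str) -> bytes:
    """Return the raw bytes of an image file, with no base64 encoding."""
    return Path(image_path).read_bytes()
```

For example, `image_bytes = read_image_bytes("slide.png")` gives a value that can be placed directly in a Converse API image block.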

Use the following code to create a messages object:
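The sketch below builds a single-turn messages object in the shape the Converse API expects: one user message whose content holds a text block and an image block. The function name and parameters are illustrative; the `image_format` value must match the actual file type (the API accepts `png`, `jpeg`, `gif`, and `webp`).

```python
def build_converse_messages(image_bytes: bytes, prompt: str, image_format: str = "png") -> list:
    """Build a one-message conversation containing a prompt and an image."""
    return [
        {
            "role": "user",
            "content": [
                # The question we want the model to answer about the image.
                {"text": prompt},
                # Raw bytes are passed as-is; the SDK handles serialization.
                {"image": {"format": image_format, "source": {"bytes": image_bytes}}},
            ],
        }
    ]
```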


Invoke the Amazon Bedrock Converse API as follows:
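A hedged sketch of the call follows. It takes an existing Bedrock runtime client (for example, one created with `boto3.client("bedrock-runtime")`) rather than creating one, which keeps the function easy to test. The function name and the default inference settings are assumptions chosen for illustration, not values from the original post.

```python
def ask_llama_vision(client, model_id: str, messages: list,
                     max_tokens: int = 512, temperature: float = 0.6,
                     top_p: float = 0.9) -> str:
    """Send the messages to the Converse API and return the generated text."""
    response = client.converse(
        modelId=model_id,
        messages=messages,
        inferenceConfig={
            "maxTokens": max_tokens,
            "temperature": temperature,
            "topP": top_p,
        },
    )
    # The assistant reply is a list of content blocks; the text is in the first one.
    return response["output"]["message"]["content"][0]["text"]
```

Passing the client in as an argument also makes it straightforward to reuse one client across many images.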

Configure Llama 3.2 for vision-based reasoning in SageMaker

You can set up vision-based reasoning tasks with Llama 3.2 vision models on a SageMaker endpoint with the following code snippet (to set up the inference endpoint, refer to the Llama 3.2 in SageMaker JumpStart blog post):

A SageMaker JumpStart deployment can also take a Messages API style messages object as input (similar to the Amazon Bedrock Converse API). Unlike with Amazon Bedrock, the image must first be encoded as base64 before you send it in the messages object.

Read the image with the following code:
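A minimal sketch using only the standard library; the helper name `read_image_base64` is hypothetical.

```python
import base64
from pathlib import Path


def read_image_base64(image_path: str) -> str:
    """Read an image file and return its contents as a base64 text string."""
    raw = Path(image_path).read_bytes()
    # b64encode returns bytes; decode so the result can be embedded in JSON.
    return base64.b64encode(raw).decode("utf-8")
```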

Create a messages object with the following code:
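The sketch below assumes the endpoint accepts an OpenAI-style message format in which the image is embedded as a base64 data URL. That schema is an assumption about the deployed container, so check it against the model's example payload in SageMaker JumpStart. The function name and the `mime_type` parameter are illustrative.

```python
def build_sagemaker_messages(image_b64: str, prompt: str, mime_type: str = "image/jpeg") -> list:
    """Build a one-message conversation with a prompt and a base64-encoded image."""
    return [
        {
            "role": "user",
            "content": [
                {"type": "text", "text": prompt},
                {
                    "type": "image_url",
                    # Data URL: media type, then the base64 payload.
                    "image_url": {"url": f"data:{mime_type};base64,{image_b64}"},
                },
            ],
        }
    ]
```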

In the preceding code, prompt is the question we ask the model about the image.

After you create the messages object, you can send that as payload to the SageMaker endpoint:
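One way to send the payload is through the SageMaker runtime `invoke_endpoint` call, sketched below. The client is passed in (for example, `boto3.client("sagemaker-runtime")`), and the payload keys `max_tokens`, `temperature`, and `top_p` are assumptions about what the deployed container accepts. The function returns the parsed JSON body as-is rather than assuming a particular response layout.

```python
import json


def invoke_vision_endpoint(client, endpoint_name: str, messages: list,
                           max_tokens: int = 512, temperature: float = 0.6,
                           top_p: float = 0.9) -> dict:
    """Send a messages payload to a SageMaker endpoint and return the parsed reply."""
    payload = {
        "messages": messages,
        "max_tokens": max_tokens,
        "temperature": temperature,
        "top_p": top_p,
    }
    response = client.invoke_endpoint(
        EndpointName=endpoint_name,
        ContentType="application/json",
        Body=json.dumps(payload),
    )
    # The response body is a stream; read it fully and decode the JSON.
    return json.loads(response["Body"].read())
```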

Document question answering

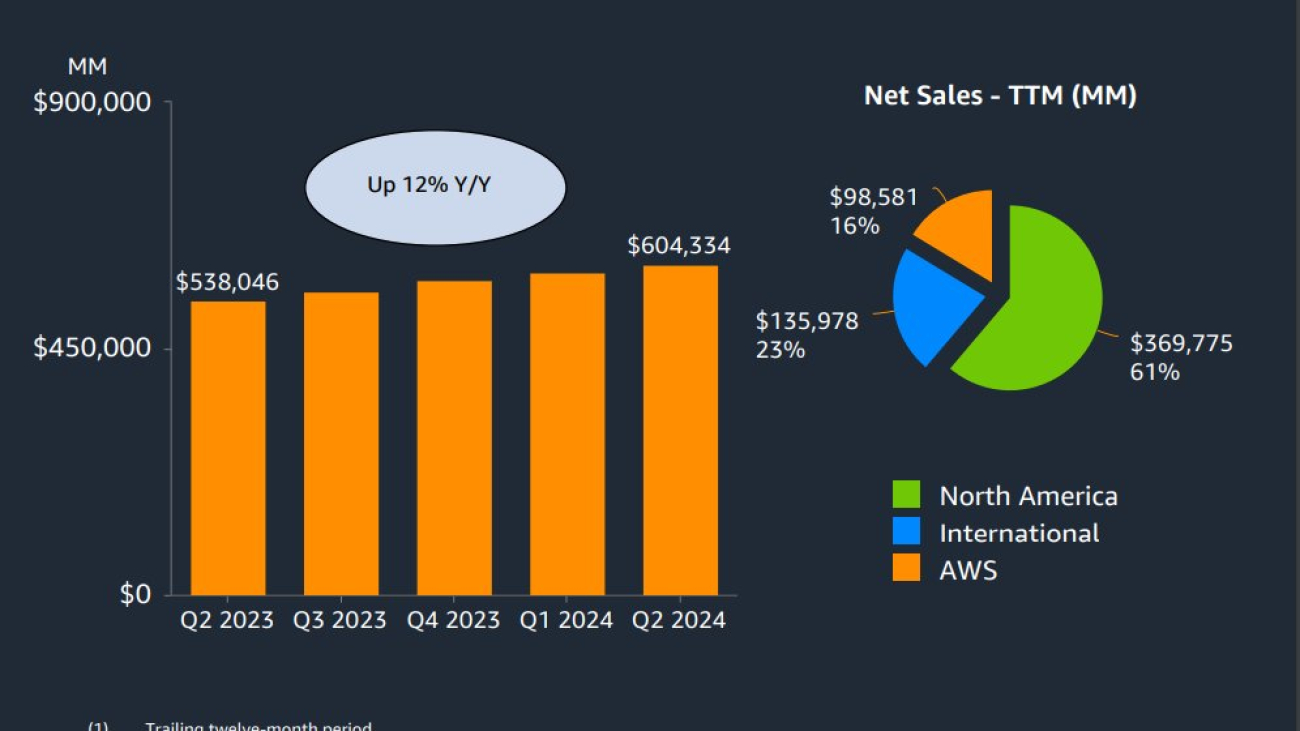

In this section, we present example use cases for document question answering. We focus on two use cases: answering questions based on financial results slides that contain complex graphical information, and solving math-based problems by looking at an image.

Financial results slides Q&A

Llama 3.2 vision models can reason about and answer questions based on images that contain complicated information such as plots, graphs, and tables. Let’s use the model as a financial analyst assistant that analyzes slides with different types of financial information to answer our questions. We use the Amazon Q2 2024 Financial Results Conference Calls Slides document and ask Llama 3.2 some questions based on this slide deck.

For our first prompt, we ask “How is the sales trending over the last twelve months?” with the following slide.

We get the following response:

The sales are trending up 12% year over year. The bar graph shows that the sales have increased from $538,046 in Q2 2023 to $604,334 in Q1 2024, and further to $604,334 in Q2 2024. This indicates a steady growth in sales over the last twelve months.
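Because a response like this quotes figures read from a chart, it can be worth re-deriving the headline number before relying on it. The following is a small illustrative check, using only the two endpoint values quoted in the response; the function name is hypothetical.

```python
def percent_change(start: float, end: float) -> float:
    """Return the percentage change from start to end."""
    return (end - start) / start * 100


# Values quoted in the model response for Q2 2023 and Q2 2024.
growth = percent_change(538_046, 604_334)
print(f"{growth:.1f}%")  # prints 12.3%
```

The result is consistent with the roughly 12% year-over-year growth stated in the response.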

Next, we ask “Can you please help me understand this slide? How are the operating and free cash flow trending the last 12 month period? What is the significant contributor to the free cash flow trend?” We use the following slide.

We get the following response:

The slide presents a table with two main sections: "Operating Cash Flow" and "Free Cash Flow Less Equipment Finance Leases and Principal Repayments of All Other Finance Leases and Financing Obligations." The table is divided into five columns, each representing a quarter from Q2 2023 to Q2 2024.

**Operating Cash Flow Trend:**

* Q2 2023: $61,841

* Q3 2023: $71,654

* Q4 2023: $84,946

* Q1 2024: $99,147

* Q2 2024: $107,952

The operating cash flow has consistently increased over the five quarters, indicating a positive trend.

**Free Cash Flow Trend:**

* Q2 2023: $6,734

* Q3 2023: $20,241

* Q4 2023: $35,549

* Q1 2024: $48,778

* Q2 2024: $51,448

The free cash flow has also shown an upward trend, with a significant increase from Q2 2023 to Q2 2024.

**Significant Contributor to Free Cash Flow Trend:**

The significant contributor to the free cash flow trend is the "Principal repayments of all other finance leases" and "Principal repayments of financing obligations." These two items have consistently increased over the five quarters, contributing to the overall growth in free cash flow.

In summary, both operating cash flow and free cash flow have demonstrated a positive trend over the last 12-month period. The significant contributor to the free cash flow trend is the increase in principal repayments of all other finance leases and financing obligations.

Visual math question answering

Another interesting problem for visual question answering is solving visual math and logic puzzles. Llama 3.2 vision models are highly capable at answering visual math questions. We show a few examples here.

For our first prompt, we use the following image and ask “The kangaroo is inside how many circles?”

We get the following response:

The kangaroo is inside 3 circles.

Next, we use the following image and ask “Which of these figures differ from the other four?”

We get the following response:

The figure labeled E is the only one with a different shape for the skirt. The other figures have triangular skirts, while figure E has a rectangular skirt.

Entity extraction

Extracting information about products is an important use case for the retail industry. On platforms that support third-party sellers, product images are often the most readily available source of information about a product. Therefore, accurately extracting relevant information from those images is essential for the success of the product. In this section, we show a couple of examples of clothing product images, and how you can use Llama 3.2 to extract relevant product information for use in an ecommerce application.

We first use the following images and the prompt “Can you please extract all information about the product in the image? Think very carefully in a step-by-step manner to construct a json file to organize all the information about the product. Please consider formulating the json file so that the extracted information can be stored in a database for further use in a retail ecommerce application. Return the json file as the output.”


For the pink dress, we get the following output:

Based on the image, here is a JSON representation of the product information:

```
{
  "product_id": "unknown",
  "name": "Pink Floral Dress",
  "description": "A sleeveless dress with a pink floral pattern and a pink trim at the bottom",
  "category": "Dresses",
  "sub_category": "Casual Dresses",
  "color": "Pink",
  "size": "unknown",
  "price": "unknown",
  "brand": "unknown",
  "material": "unknown",
  "features": [
    "Sleeveless",
    "Floral pattern",
    "Pink trim at the bottom"
  ],
  "images": [
    {
      "url": "image_url",
      "alt_text": "Pink Floral Dress"
    }
  ]
}
```

Please note that some information such as product_id, size, price, brand, and material are unknown as they are not visible in the image. Also, the image_url is a placeholder and should be replaced with the actual URL of the image.

For the blue cap, we get the following output:

Based on the image, here is a JSON representation of the product information:

```json
{
  "product_id": "NIKE-CAP-001",
  "name": "Nike Cap",
  "description": "A blue baseball cap with a white Nike logo",
  "category": "Accessories",
  "subcategory": "Hats",
  "brand": "Nike",
  "color": "Blue",
  "size": "One Size",
  "material": "Cotton",
  "price": 29.99,
  "image_url": "https://example.com/nike-cap.jpg",
  "features": [
    "Adjustable strap in the back",
    "Embroidered Nike logo on the front"
  ]
}
```

This JSON object includes the following fields:

* `product_id`: a unique identifier for the product

* `name`: the name of the product

* `description`: a brief description of the product

* `category` and `subcategory`: the categories and subcategories that the product belongs to

* `brand`: the brand of the product

* `color`: the color of the product

* `size`: the size of the product

* `material`: the material that the product is made of

* `price`: the price of the product

* `image_url`: the URL of the product image

* `features`: an array of features that describe the product

Note that this is just one possible way to represent the product information in JSON. Depending on the specific requirements of your application, you may need to add or remove fields, or use different data types for certain fields.
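As both outputs show, the model wraps the JSON in explanatory prose, so the JSON has to be separated out before it can be stored. The following is a minimal sketch of one way to do that, assuming the response contains a single JSON object, either inside a code fence or inline. The helper name `extract_json` is hypothetical, and production code would also validate the fields, because values such as `product_id` or `price` may be placeholders rather than facts visible in the image.

```python
import json
import re

# A Markdown code fence (three backticks), built programmatically.
FENCE = "`" * 3

# Matches a fenced block, with or without a "json" language tag, that holds an object.
_FENCED_JSON = re.compile(FENCE + r"(?:json)?\s*(\{.*?\})\s*" + FENCE, re.DOTALL)


def extract_json(model_output: str) -> dict:
    """Pull the first JSON object out of a model response and parse it."""
    match = _FENCED_JSON.search(model_output)
    if match:
        candidate = match.group(1)
    else:
        # Fallback: take everything from the first "{" to the last "}".
        start, end = model_output.find("{"), model_output.rfind("}")
        if start == -1 or end <= start:
            raise ValueError("no JSON object found in model output")
        candidate = model_output[start:end + 1]
    return json.loads(candidate)
```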

Caption generation

Another important and useful capability of vision models is to generate captions automatically for images. In this section, we showcase caption generation with a simple caption, a more creative and funnier caption, and a marketing style caption.

Simple captioning

For simple captioning, we use the following image and the prompt “Please provide a caption for this image. Be creative but respectful.”

We get the following response:

The image shows a young woman sitting in a dentist's chair, with a female dentist examining her teeth. The woman is wearing a bib to protect her clothes from any water or other substances that may be used during the examination. The dentist is wearing a white lab coat and a mask, and she is holding a dental mirror and a probe. The background of the image is a dental office, with various dental tools and equipment visible.

The overall atmosphere of the image is one of professionalism and care. The dentist appears to be focused on her work, and the woman seems to be relaxed and comfortable. The image suggests that the woman is receiving a routine dental checkup or cleaning, and that the dentist is taking good care of her.

Creative captioning

For our second example, we want a more creative and funny tone. We use the same image and the prompt “Please provide a short caption for this image. Be creative and funny but respectful. This is meant to be shared on a social network application.”

We get the following response:

"Brace yourself for a sparkling smile, because this dentist is about to make your teeth shine brighter than a disco ball!"

Listing generation

To generate a real estate listing, we use the following image and the prompt “You are a real estate marketing expert. Please create a listing description with the attached image of a property.”

We get the following output:

**Welcome to Your Dream Home**

This stunning property boasts an open-plan living area that seamlessly blends style and functionality. The spacious living room, dining area, and kitchen are perfect for entertaining, with plenty of natural light pouring in through the large windows. The sleek, modern kitchen features high-end appliances and ample counter space, making meal prep a breeze.

The living room is a cozy retreat, with a comfortable sofa and chairs arranged around a stylish coffee table. The adjacent dining area is perfect for hosting dinner parties, with a large table that seats eight.

But the real showstopper is the stunning view of the water, which can be enjoyed from the comfort of your own home. Imagine sipping your morning coffee or enjoying a glass of wine in the evening while taking in the breathtaking scenery.

This property is the perfect blend of form and function, offering the ultimate in comfort and style. Don't miss out on this incredible opportunity to make it your own.

**Key Features:**

* Open-plan living area with kitchen, dining area, and living room

* Modern kitchen with high-end appliances and ample counter space

* Comfortable living room with sofa and chairs

* Large dining table that seats eight

* Stunning view of the water

* Perfect for entertaining

**Don't miss out on this incredible opportunity. Contact us today to schedule a viewing.**

Conclusion

In this post, we demonstrated the diverse vision capabilities of the Llama 3.2 11B and 90B models from Meta. Llama 3.2 vision models enable you to solve multiple use cases, including document understanding, math and logic puzzle solving, entity extraction, and caption generation. These capabilities can drive productivity in a number of enterprise use cases, including ecommerce (retail), marketing, and much more.

To learn more about Llama 3.2 features and capabilities in Amazon Bedrock, refer to the launch post, product page, and documentation. To learn more about using Llama 3.2 in SageMaker JumpStart, see the launch post, and for more information about using foundation models in SageMaker JumpStart, check out the product page and documentation.

We can’t wait to see what you build with the Llama 3.2 models on AWS!

About the Authors

Dr. Natarajan Chennimalai Kumar is a Principal Solutions Architect in the 3rd Party Model Provider team at AWS, working closely with the Llama partner engineering team at Meta to enable AWS customers to use Llama models. He holds a PhD from the University of Illinois at Urbana-Champaign. He is based in the Bay Area in California. Outside of work, he enjoys watching shows with his kids, playing tennis, and traveling with his family.


Sebastian Bustillo is a Solutions Architect at AWS. He focuses on AI/ML technologies with a profound passion for generative AI and compute accelerators. At AWS, he helps customers unlock business value through generative AI. When he’s not at work, he enjoys brewing a perfect cup of specialty coffee and exploring the outdoors with his wife.


Marco Punio is a Sr. Specialist Solutions Architect focused on generative AI strategy, applied AI solutions, and conducting research to help customers hyperscale on AWS. As a member of the 3rd Party Model Provider Applied Sciences Solutions Architecture team at AWS, he is a Global Lead for the Meta – AWS Partnership and technical strategy. Based in Seattle, WA, Marco enjoys writing, reading, exercising, and building applications in his free time.


Armando Diaz is a Solutions Architect at AWS. He focuses on generative AI, AI/ML, and data analytics. At AWS, Armando helps customers integrate cutting-edge generative AI capabilities into their systems, fostering innovation and competitive advantage. When he’s not at work, he enjoys spending time with his wife and family, hiking, and traveling the world.

