This post is co-written with Deepali Rajale from Karini AI.

Karini AI, a leading generative AI foundation platform built on AWS, empowers customers to quickly build secure, high-quality generative AI apps. Generative AI is not just a technology; it’s a transformational tool that is changing how businesses operate. Wherever they are in the adoption journey, enterprises find adopting generative AI a significant challenge. While pilot projects using generative AI can start effortlessly, most enterprises need help progressing beyond this phase. According to Everest Research, more than 50% of projects do not move beyond the pilot stage because they lack standardized or established GenAI operational practices.

Karini AI offers a robust, user-friendly GenAI foundation platform that empowers enterprises to build, manage, and deploy generative AI applications. It allows beginners and expert practitioners to develop and deploy GenAI applications for use cases beyond simple chatbots, including agentic, multi-agentic, generative BI, and batch workflows. The no-code platform is ideal for quick experimentation, building PoCs, and rapid transition to production, with built-in guardrails for safety and observability for troubleshooting. The platform includes an offline and online quality evaluation framework to assess quality during experimentation and continuously monitor applications post-deployment. Karini AI’s intuitive prompt playground supports prompt authoring, comparison across models from different providers, prompt management, and prompt tuning. It supports iterative testing of simple, agentic, and multi-agentic prompts. For production deployment, the no-code recipes enable easy assembly of the data ingestion pipeline to create a knowledge base and deployment of RAG or agentic chains. Platform owners can monitor costs and performance in real time with detailed observability and integrate seamlessly with Amazon Bedrock for LLM inference, benefiting from extensive enterprise connectors and data preprocessing techniques.

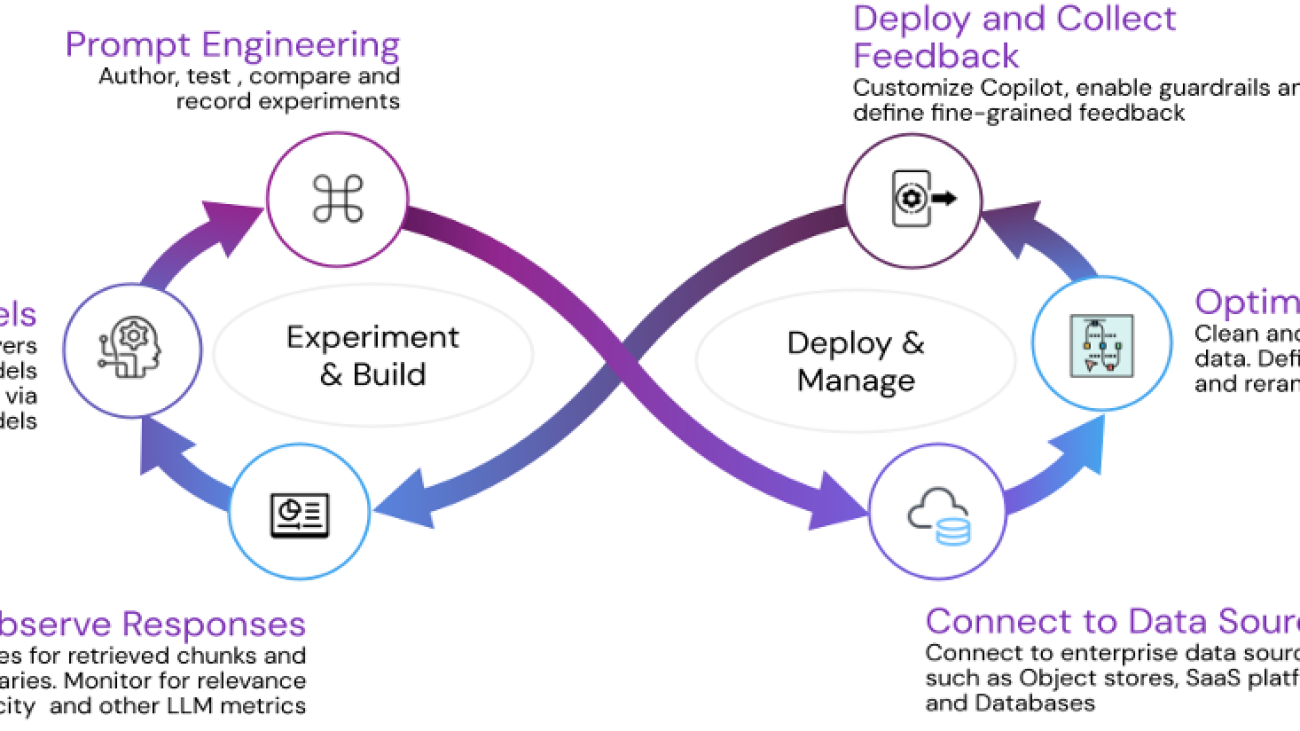

The preceding diagram illustrates how Karini AI delivers a comprehensive generative AI foundation platform encompassing the entire application lifecycle. This platform delivers a holistic solution that speeds up time to market and optimizes resource utilization by providing a unified framework for development, deployment, and management.

In this post, we share how Karini AI’s migration of vector embedding models from Kubernetes to Amazon SageMaker endpoints improved concurrency by 30% and saved over 23% in infrastructure costs.

Karini AI’s Data Ingestion Pipeline for creating vector embeddings

Enriching large language models (LLMs) with new data is crucial to building practical generative AI applications. This is where Retrieval Augmented Generation (RAG) comes into play. RAG enhances LLMs’ capabilities by incorporating external data and producing state-of-the-art performance in knowledge-intensive tasks. Karini AI offers no-code solutions for creating Generative AI applications using RAG. These solutions include two primary components: a data ingestion pipeline for building a knowledge base and a system for knowledge retrieval and summarization. Together, these pipelines simplify the development process, enabling the creation of powerful AI applications with ease.

Data Ingestion Pipeline

Ingesting data from diverse sources is essential for executing Retrieval Augmented Generation (RAG). Karini AI’s data ingestion pipeline connects to multiple data sources, including Amazon S3, Amazon Redshift, Amazon Relational Database Service (RDS), websites, and Confluence, and handles both structured and unstructured data. This source data is pre-processed, chunked, and transformed into vector embeddings before being stored in a vector database for retrieval. Karini AI’s platform provides flexibility by offering a range of embedding models from its model hub, simplifying the creation of vector embeddings for advanced AI applications.
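To make the chunking step above concrete, here is a minimal sketch of one common approach: fixed-size word windows with a small overlap between neighboring chunks. This is an illustration only, not Karini AI’s actual implementation; the function name `chunk_text` and the `chunk_size` and `overlap` parameters are hypothetical.

```python
from typing import List


def chunk_text(text: str, chunk_size: int = 200, overlap: int = 20) -> List[str]:
    """Split text into overlapping word-window chunks (hypothetical helper)."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be in the range [0, chunk_size)")

    words = text.split()
    step = chunk_size - overlap  # how far the window advances each time
    chunks: List[str] = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        # Stop once the current window has reached the end of the text,
        # so no trailing chunk made only of overlap words is emitted.
        if start + chunk_size >= len(words):
            break
    return chunks


if __name__ == "__main__":
    for chunk in chunk_text("a b c d e f g h i j", chunk_size=4, overlap=1):
        print(chunk)
```

Each chunk would then be sent to an embedding model, and the resulting vector stored alongside the chunk text in the vector database. The overlap keeps sentences that straddle a chunk boundary retrievable from either side.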

Here is a screenshot of Karini AI’s no-code data ingestion pipeline.

Karini AI’s model hub streamlines adding models by integrating with leading foundation model providers such as Amazon Bedrock and self-managed serving platforms.

Infrastructure challenges

As customers explore complex use cases and datasets grow in size and complexity, Karini AI must scale the data ingestion process efficiently to provide high concurrency for creating vector embeddings. This requires state-of-the-art embedding models, such as those listed on the MTEB leaderboard, which are rapidly evolving and unavailable on managed platforms.

Before migrating to Amazon SageMaker, we deployed our models on self-managed Kubernetes (K8s) on EC2 instances. Kubernetes offered significant flexibility to deploy models from HuggingFace quickly, but our engineering team soon had to manage many aspects of scaling and deployment. We faced the following challenges with that setup, which had to be addressed to improve efficiency and performance:

- Keeping up with state-of-the-art (SOTA) models: We managed different deployment manifests for each model type (such as classifiers, embeddings, and autocomplete), which was time-consuming and error-prone. We also had to maintain the logic to determine the memory allocation for different model types.

- Managing dynamic concurrency was hard: A significant challenge with using models hosted on Kubernetes was achieving the highest dynamic concurrency level. We aimed to maximize endpoint performance to achieve target transactions per second (TPS) while meeting strict latency requirements.

- Higher costs: While Kubernetes (K8s) provides robust capabilities, it became more costly for us because the dynamic nature of data ingestion pipelines left instances under-utilized.
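The relationship between target TPS, latency, and concurrency mentioned above can be estimated with Little’s law: the average number of in-flight requests equals the arrival rate multiplied by the average latency. The sketch below uses that relationship to size a fleet. The function names and all numbers are hypothetical and chosen only for illustration; they are not measurements from Karini AI’s workloads.

```python
import math


def required_concurrency(target_tps: float, latency_s: float) -> float:
    """Little's law: average in-flight requests = arrival rate x latency."""
    return target_tps * latency_s


def instances_needed(target_tps: float, latency_s: float,
                     concurrency_per_instance: int) -> int:
    """Estimate instance count, assuming each instance can serve a fixed
    number of concurrent requests (a simplifying assumption)."""
    if concurrency_per_instance <= 0:
        raise ValueError("concurrency_per_instance must be positive")
    in_flight = required_concurrency(target_tps, latency_s)
    return max(1, math.ceil(in_flight / concurrency_per_instance))


if __name__ == "__main__":
    # Hypothetical workload: 200 requests/s at 0.5 s average latency,
    # with each instance handling 16 concurrent requests.
    print(required_concurrency(200, 0.5))   # in-flight requests
    print(instances_needed(200, 0.5, 16))   # estimated instance count
```

Because ingestion load is bursty, an estimate like this changes from hour to hour, which is why a fixed-size cluster tends to be either under-provisioned at peak or under-utilized the rest of the time.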

Our search for an inference platform led us to Amazon SageMaker, a solution that efficiently manages our models for higher concurrency, meets customer SLAs, and scales down serving when not needed. The reliability of SageMaker’s performance gave us confidence in its capabilities.

Amazon SageMaker for Model Serving

Choosing Amazon SageMaker was a strategic decision for Karini AI. It balanced our need for higher concurrency with lower cost. SageMaker’s ability to scale and maximize concurrency while ensuring sub-second latency addresses a variety of generative AI use cases, making it a long-lasting investment for our platform.

Amazon SageMaker is a fully managed service that allows developers and data scientists to quickly build, train, and deploy machine learning (ML) models. With SageMaker, you can deploy your ML models on hosted endpoints and get real-time inference results. You can easily view the performance metrics for your endpoints in Amazon CloudWatch, automatically scale endpoints based on traffic, and update your models in production without losing any availability.

Karini AI’s data ingestion pipeline architecture with Amazon SageMaker Model endpoint is here.

Advantages of using SageMaker hosting

Amazon SageMaker offered our Gen AI ingestion pipeline many direct and indirect benefits.

- Technical debt mitigation: As a managed service, Amazon SageMaker freed our ML engineers from the burden of managing inference, enabling them to focus on our core platform features.

- Meet customer SLAs: Knowledge base creation is a dynamic task that may require high concurrency during vector embedding generation and only a minimal load at query time. Based on customer SLAs and data volume, we can choose batch inference, real-time hosting with auto-scaling, or serverless hosting. Amazon SageMaker also provides recommendations for instance types suitable for embedding models.

- Reduced Infrastructure cost: SageMaker is a pay-as-you-go service that allows you to create batch or real-time endpoints when there is demand and destroy them when work is complete. This approach reduced our infrastructure cost by more than 23% over the Kubernetes (K8s) platform.

- SageMaker JumpStart: SageMaker JumpStart provides access to state-of-the-art (SOTA) models and optimized inference containers, making it ideal for onboarding new models and making them accessible to our customers.

- Amazon Bedrock compatibility: Karini AI integrates with Amazon Bedrock for large language model (LLM) inference. The custom model import feature allows us to reuse the model weights used in SageMaker model hosting in Amazon Bedrock, so we can maintain a common code base and switch serving between Bedrock and SageMaker according to the workload.
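As an illustration of the real-time hosting with auto-scaling option listed above, the following sketch builds the two requests that Application Auto Scaling expects for a SageMaker endpoint variant: a scalable target and a target-tracking policy on invocations per instance. The endpoint name, variant name, capacity limits, target value, and cooldowns are all assumptions made for this example, not Karini AI’s configuration. The `apply_autoscaling` helper needs `boto3` and AWS credentials and is not called here.

```python
from typing import Any, Dict


def resource_id(endpoint_name: str, variant_name: str) -> str:
    """Resource identifier format used for SageMaker endpoint variants."""
    return f"endpoint/{endpoint_name}/variant/{variant_name}"


def scalable_target(endpoint_name: str, variant_name: str,
                    min_instances: int, max_instances: int) -> Dict[str, Any]:
    """Request body for register_scalable_target."""
    return {
        "ServiceNamespace": "sagemaker",
        "ResourceId": resource_id(endpoint_name, variant_name),
        "ScalableDimension": "sagemaker:variant:DesiredInstanceCount",
        "MinCapacity": min_instances,
        "MaxCapacity": max_instances,
    }


def target_tracking_policy(endpoint_name: str, variant_name: str,
                           invocations_per_instance: float) -> Dict[str, Any]:
    """Request body for put_scaling_policy (target tracking)."""
    return {
        "PolicyName": f"{endpoint_name}-invocations-target",  # hypothetical name
        "ServiceNamespace": "sagemaker",
        "ResourceId": resource_id(endpoint_name, variant_name),
        "ScalableDimension": "sagemaker:variant:DesiredInstanceCount",
        "PolicyType": "TargetTrackingScaling",
        "TargetTrackingScalingPolicyConfiguration": {
            "TargetValue": float(invocations_per_instance),
            "PredefinedMetricSpecification": {
                "PredefinedMetricType": "SageMakerVariantInvocationsPerInstance"
            },
            "ScaleInCooldown": 300,   # seconds; illustrative values
            "ScaleOutCooldown": 60,
        },
    }


def apply_autoscaling(endpoint_name: str, variant_name: str = "AllTraffic") -> None:
    """Register the target and policy. Requires boto3 and AWS credentials."""
    import boto3  # imported lazily so the builders above work without it

    client = boto3.client("application-autoscaling")
    client.register_scalable_target(
        **scalable_target(endpoint_name, variant_name, 1, 4))
    client.put_scaling_policy(
        **target_tracking_policy(endpoint_name, variant_name, 100))
```

With a policy like this, the endpoint adds instances during heavy embedding generation and scales back in when ingestion finishes, which is the behavior the SLA and cost points above rely on.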

Conclusion

By migrating to Amazon SageMaker, Karini AI significantly improved performance and reduced model hosting costs. We can deploy custom third-party models to SageMaker and quickly make them available in Karini AI’s model hub for data ingestion pipelines. We can optimize our infrastructure configuration for model hosting as needed, depending on model size and our expected TPS. Using Amazon SageMaker for model inference enabled Karini AI to handle increasing data complexity efficiently and meet concurrency needs while optimizing costs. Moreover, Amazon SageMaker allows easy integration and swapping of new models, ensuring that our customers can continuously leverage the latest advancements in AI technology without compromising performance or incurring unnecessary incremental costs.

Amazon SageMaker and Karini AI offer a powerful platform to build, train, and deploy machine learning models at scale. By leveraging these tools, you can:

- Accelerate development: Build and train models faster with pre-built algorithms and frameworks.

- Enhance accuracy: Benefit from advanced algorithms and techniques for improved model performance.

- Scale effortlessly: Deploy models to production with ease and handle increasing workloads.

- Reduce costs: Optimize resource utilization and minimize operational overhead.

Don’t miss out on this opportunity to gain a competitive edge.

About the Authors

Deepali Rajale is the founder of Karini AI, which is on a mission to democratize generative AI across enterprises. She enjoys blogging about Generative AI and coaching customers to optimize Generative AI practice. In her spare time, she enjoys traveling, seeking new experiences, and keeping up with the latest technology trends. You can find her on LinkedIn.

Ravindra Gupta is the Worldwide GTM lead for SageMaker, with a passion for helping customers adopt SageMaker for their machine learning and GenAI workloads. Ravi is fond of learning new technologies and enjoys mentoring startups on their machine learning practice. You can find him on LinkedIn.

Joe Fontaine is the Product marketing lead for AWS AI Builder Programs. He is passionate about making machine learning more accessible to all through hands-on educational experiences. Outside of work he enjoys freeride mountain biking, aerial cinematography, and exploring the wilderness with his family.
