Global Resiliency is a new Amazon Lex capability that enables near real-time replication of your Amazon Lex V2 bots in a second AWS Region. When you activate this feature, all resources, versions, and aliases associated after activation will be synchronized across the chosen Regions. With Global Resiliency, the replicated bot resources and aliases in the second Region will have the same identifiers as those in the source Region. This consistency allows you to seamlessly route traffic to any Region by simply changing the Region identifier, providing uninterrupted service availability. In the event of a Regional outage or disruption, you can swiftly redirect your bot traffic to a different Region.

Applications now have the ability to use replicated Amazon Lex bots across Regions in an active-active or active-passive manner for improved availability and resiliency. With Global Resiliency, you no longer need to manually manage separate bots across Regions, because the feature automatically replicates and keeps Regional configurations in sync. With just a few clicks or commands, you gain robust Amazon Lex bot replication capabilities. Applications that are using Amazon Lex bots can now fail over from an impaired Region seamlessly, minimizing the risk of costly downtime and maintaining business continuity.

This feature streamlines the process of maintaining robust and highly available conversational applications. These include interactive voice response (IVR) systems, chatbots for digital channels, and messaging platforms, providing a seamless and resilient customer experience.

In this post, we walk you through enabling Global Resiliency for a sample Amazon Lex V2 bot. We showcase the replication process of bot versions and aliases across multiple Regions. Additionally, we discuss how to handle integrations with AWS Lambda and Amazon CloudWatch after enabling Global Resiliency.

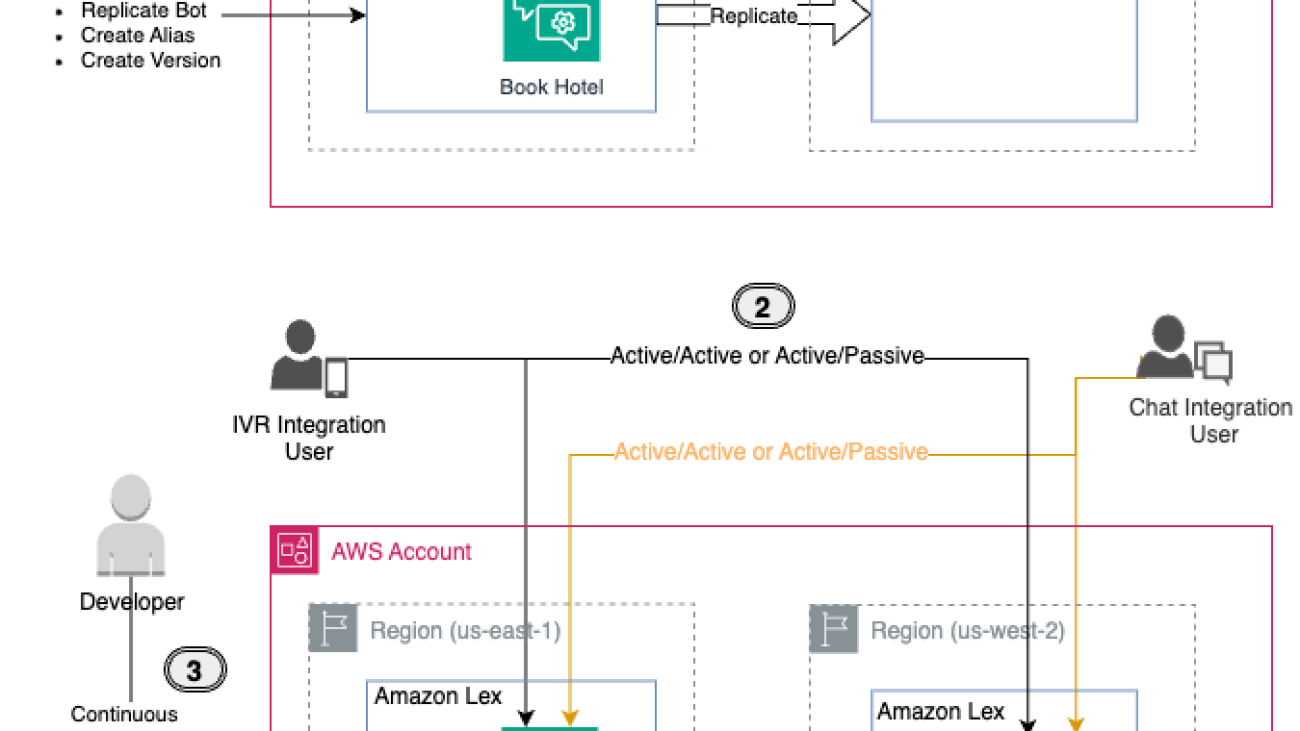

Solution overview

For this exercise, we create a BookHotel bot as our sample bot. We use an AWS CloudFormation template to build this bot, including defining intents, slots, and other required components such as a version and alias. Throughout our demonstration, we use the us-east-1 Region as the source Region, and we replicate the bot in the us-west-2 Region, which serves as the replica Region. We then replicate this bot, enable logging, and integrate it with a Lambda function.

To better understand the solution, refer to the following architecture diagram.

- Enabling Global Resiliency for an Amazon Lex bot is straightforward using the AWS Management Console, AWS Command Line Interface (AWS CLI), or APIs. We walk through the instructions to replicate the bot later in this post.

- After replication is successfully enabled, the bot will be replicated across Regions, providing a unified experience. This allows you to distribute IVR or chat application requests between Regions in either an active-active or active-passive setup, depending on your use case.

- A key benefit of Global Resiliency is that developers can continuously work on bot improvements in the source Region, and changes are automatically synchronized to the replica Region. This streamlines the development workflow without compromising resiliency.

At the time of writing, Global Resiliency only works with predetermined pairs of Regions. For more information, see Use Global Resiliency to deploy bots to other Regions.

Prerequisites

You should have the following prerequisites:

- An AWS account with administrator access

- Access to Amazon Lex Global Resiliency (contact your Amazon Connect Solutions Architect or Technical Account Manager)

- Working knowledge of the following services:

- AWS CloudFormation

- Amazon CloudWatch

- AWS Lambda

- Amazon Lex

Create a sample Amazon Lex bot

To set up a sample bot for our use case, refer to Manage your Amazon Lex bot via AWS CloudFormation templates. For this example, we create a bot named BookHotel in the source Region (us-east-1). Complete the following steps:

- Download the CloudFormation template and deploy it in the source Region (us-east-1). For instructions, see Create a stack from the CloudFormation console.

Upon successful deployment, the BookHotel bot will be created in the source Region.

- On the Amazon Lex console, choose Bots in the navigation pane and locate the BookHotel bot.

Verify that the Global Resiliency option is available under Deployment in the navigation pane. If this option isn’t visible, the Global Resiliency feature may not be enabled for your account. In this case, refer to the prerequisites section for enabling the Global Resiliency feature.

Our sample BookHotel bot has one version (Version 1, in addition to the draft version) and an alias named BookHotelDemoAlias (in addition to the TestBotAlias).

Enable Global Resiliency

To activate Global Resiliency and set up bot replication in a replica Region, complete the following steps:

- On the Amazon Lex console, choose us-east-1 as your Region.

- Choose Bots in the navigation pane and locate the BookHotel bot.

- Under Deployment in the navigation pane, choose Global Resiliency.

You can see the replication details here. Because you haven’t enabled Global Resiliency yet, all the details are blank.

- Choose Create replica to create a draft version of your bot.

In your source Region (us-east-1), after the bot replication is complete, you will see Replication status as Enabled.

- Switch to the replica Region (us-west-2).

You can see that the BookHotel bot is replicated. This is a read-only replica and the bot ID in the replica Region matches the bot ID in the source Region.

- Under Deployment in the navigation pane, choose Global Resiliency.

You can see the replication details here, which are the same as those shown for the BookHotel bot in the source Region.

You have verified that the bot is replicated successfully after Global Resiliency is enabled. Only new versions and aliases created from this point onward will be replicated. As a next step, we create a bot version and alias to demonstrate the replication.
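The console steps above can also be scripted. The following is a minimal sketch, assuming the boto3 `lexv2-models` client exposes `create_bot_replica` and `describe_bot_replica` (the SDK counterparts of the CreateBotReplica and DescribeBotReplica APIs mentioned in this post) and that the response carries a `botReplicaStatus` field. The helper name `enable_replica`, the polling interval, the timeout, and the `Failed` status value are illustrative assumptions, and `BOT_ID` is a placeholder.

```python
import time


def enable_replica(client, bot_id, replica_region,
                   poll_seconds=15, timeout_seconds=900, sleep=time.sleep):
    """Request a bot replica, then poll until it reports Enabled.

    `client` is expected to behave like boto3.client("lexv2-models")
    created in the source Region. The status strings are assumptions
    based on the values shown in the console.
    """
    client.create_bot_replica(botId=bot_id, replicaRegion=replica_region)

    waited = 0
    while waited <= timeout_seconds:
        response = client.describe_bot_replica(
            botId=bot_id, replicaRegion=replica_region
        )
        status = response["botReplicaStatus"]
        if status == "Enabled":
            return status
        if status == "Failed":
            reasons = response.get("failureReasons", [])
            raise RuntimeError(f"Replication failed: {reasons}")
        sleep(poll_seconds)
        waited += poll_seconds

    raise TimeoutError(f"Replica not Enabled after {timeout_seconds} seconds")


# Example usage (requires boto3 and AWS credentials):
#   import boto3
#   models = boto3.client("lexv2-models", region_name="us-east-1")
#   enable_replica(models, "BOT_ID", "us-west-2")
```

Passing the client in as an argument keeps the helper independent of credentials and makes it easy to exercise with a stub before pointing it at a real account.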

Create a new bot version and alias

Complete the following steps to create a new bot version and alias:

- On the Amazon Lex console in your source Region (us-east-1), navigate to the BookHotel bot.

- Choose Bot versions in the navigation pane, and choose Create new version to create Version 2.

Version 2 now has Global Resiliency enabled, whereas Version 1 and the draft version do not, because they were created prior to enabling Global Resiliency.

- Choose Aliases in the navigation pane, then choose Create new alias.

- Create a new alias for the BookHotel bot called BookHotelDemoAlias_GR and point it to the new version.

Similarly, the BookHotelDemoAlias_GR now has Global Resiliency enabled, whereas aliases created before enabling Global Resiliency, such as BookHotelDemoAlias and TestBotAlias, don’t have Global Resiliency enabled.

- Choose Global Resiliency in the navigation pane to view the source and replication details.

The details for Last replicated version are now updated to Version 2.

- Switch to the replica Region (us-west-2) and choose Global Resiliency in the navigation pane.

You can see that the new Global Resiliency enabled version (Version 2) is replicated and the new alias BookHotelDemoAlias_GR is also present.

You have verified that the new version and alias, created after Global Resiliency was enabled, were replicated to the replica Region. You can now make Amazon Lex runtime calls to both Regions.
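Because the bot ID and alias ID are identical in both Regions, an application can switch Regions by changing only the client it calls. The following is a minimal active-passive sketch, assuming one boto3 `lexv2-runtime` client per Region and the `recognize_text` operation. The helper name `recognize_with_failover`, the broad exception handling, and the placeholder IDs are illustrative assumptions, not a prescribed failover design.

```python
def recognize_with_failover(clients_by_region, regions, bot_id, alias_id,
                            locale_id, session_id, text):
    """Send text to the bot, trying each Region in the given order.

    `clients_by_region` maps a Region name to an object that behaves
    like boto3.client("lexv2-runtime", region_name=...). Returns a
    (region, response) tuple from the first Region that answers.
    """
    last_error = None
    for region in regions:
        try:
            response = clients_by_region[region].recognize_text(
                botId=bot_id,          # same ID in source and replica Regions
                botAliasId=alias_id,   # same ID in source and replica Regions
                localeId=locale_id,
                sessionId=session_id,
                text=text,
            )
            return region, response
        except Exception as error:  # kept broad for the sketch; narrow in real code
            last_error = error
    raise RuntimeError("All Regions failed") from last_error


# Example usage (requires boto3 and AWS credentials):
#   import boto3
#   regions = ["us-east-1", "us-west-2"]
#   clients = {r: boto3.client("lexv2-runtime", region_name=r) for r in regions}
#   recognize_with_failover(clients, regions, "BOT_ID", "ALIAS_ID",
#                           "en_US", "session-1", "I want to book a hotel")
```

An active-active setup could reuse the same function by varying the order of `regions` per request.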

Handling integrations with Lambda and CloudWatch after enabling Global Resiliency

Amazon Lex integrates with other AWS services, for example to run custom logic with Lambda functions and to store conversation logs using CloudWatch and Amazon Simple Storage Service (Amazon S3). In this section, we associate a Lambda function and a CloudWatch log group with the BookHotel bot in the source Region (us-east-1) and validate the association in the replica Region (us-west-2).

- Download the CloudFormation template to deploy a sample Lambda function and CloudWatch log group.

- Deploy the CloudFormation stack to the source Region (us-east-1). For instructions, see Create a stack from the CloudFormation console.

This will deploy a Lambda function (book-hotel-lambda) and a CloudWatch log group (/lex/book-hotel-bot) in the us-east-1 Region.

- Deploy the CloudFormation stack to the replica Region (us-west-2).

This will deploy a Lambda function (book-hotel-lambda) and a CloudWatch log group (/lex/book-hotel-bot) in the us-west-2 Region. The Lambda function name and CloudWatch log group name must be the same in both Regions.

- On the Amazon Lex console in the source Region (us-east-1), navigate to the BookHotel bot.

- Choose Aliases in the navigation pane, and choose the BookHotelDemoAlias_GR alias.

- In the Languages section, choose English (US).

- Select the book-hotel-lambda function and associate it with the BookHotel bot by choosing Save.

- Navigate back to the BookHotelDemoAlias_GR alias, and in the Conversation logs section, choose Manage conversation logs.

- Enable Text logs and select the /lex/book-hotel-bot log group, then choose Save.

Conversation text logs are now enabled for the BookHotel bot in us-east-1.

- Switch to the replica Region (us-west-2) and navigate to the BookHotel bot.

- Choose Aliases in the navigation pane, and choose the BookHotelDemoAlias_GR alias.

You can see that the conversation logs are already associated with the /lex/book-hotel-bot CloudWatch log group in the us-west-2 Region.

- In the Languages section, choose English (US).

You can see that the book-hotel-lambda function is associated with the BookHotelDemoAlias_GR alias.

Through this process, we have demonstrated how Lambda functions and CloudWatch log groups are automatically associated with the corresponding bot resources in the replica Region for the replicated bots, providing a seamless and consistent integration across both Regions.
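The requirement that the Lambda function and log group share the same names in both Regions suggests that the replica alias points at the same-named resources in its own Region. Under that assumption, the following sketch derives the ARN you would expect to see in the replica Region from the ARN configured in the source Region, which can help when checking the association outside the console. The helper name `arn_in_region` and the account ID `123456789012` are hypothetical.

```python
def arn_in_region(arn, region):
    """Return the same ARN with its Region field replaced.

    ARNs follow arn:partition:service:region:account-id:resource.
    The resource part may itself contain colons, so split at most
    five times and leave the remainder untouched.
    """
    parts = arn.split(":", 5)
    if len(parts) != 6 or parts[0] != "arn":
        raise ValueError(f"Not a valid ARN: {arn!r}")
    parts[3] = region
    return ":".join(parts)


# Hypothetical source-Region ARNs for the resources used in this post.
source_lambda = "arn:aws:lambda:us-east-1:123456789012:function:book-hotel-lambda"
source_log_group = "arn:aws:logs:us-east-1:123456789012:log-group:/lex/book-hotel-bot"

expected_lambda = arn_in_region(source_lambda, "us-west-2")
expected_log_group = arn_in_region(source_log_group, "us-west-2")
```

You could compare these expected values with what the alias reports in the replica Region. Treat any mismatch as a prompt to investigate, not as proof of a problem, because the mapping is an assumption of this sketch.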

Disabling Global Resiliency

You have the flexibility to disable Global Resiliency at any time. By disabling Global Resiliency, your source bot, along with its associated aliases and versions, will no longer be replicated across other Regions. In this section, we demonstrate the process to disable Global Resiliency.

- On the Amazon Lex console in your source Region (us-east-1), choose Bots in the navigation pane and locate the BookHotel bot.

- Under Deployment in the navigation pane, choose Global Resiliency.

- Choose Disable Global Resiliency.

- Enter confirm in the confirmation box and choose Delete.

This action initiates the deletion of the replicated BookHotel bot in the replica Region.

The replication status will change to Deleting, and after a few minutes, the deletion process will be complete. You will then see the Create replica option available again. If you don’t see it, try refreshing the page.

- Check the Bot versions page of the BookHotel bot to confirm that Version 2 is still the latest version.

- Check the Aliases page to confirm that the BookHotelDemoAlias_GR alias is still present on the source bot.

Applications referring to this alias can continue to function as normal in the source Region.

- Switch to the replica Region (us-west-2) to confirm that the BookHotel bot has been deleted from this Region.

You can reenable Global Resiliency on the source Region (us-east-1) by going through the process described earlier in this post.
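Disabling can be scripted in the same spirit. The following is a minimal sketch, assuming the boto3 `lexv2-models` client exposes `delete_bot_replica` and `list_bot_replicas`, and that the list response contains `botReplicaSummaries` entries with a `replicaRegion` field. The helper name `disable_replica`, the polling interval, and the timeout are illustrative assumptions.

```python
import time


def disable_replica(client, bot_id, replica_region,
                    poll_seconds=15, timeout_seconds=900, sleep=time.sleep):
    """Delete the replica and wait until it is no longer listed.

    `client` is expected to behave like boto3.client("lexv2-models")
    created in the source Region. Returns True once the replica
    Region has disappeared from the list of replicas.
    """
    client.delete_bot_replica(botId=bot_id, replicaRegion=replica_region)

    waited = 0
    while waited <= timeout_seconds:
        summaries = client.list_bot_replicas(botId=bot_id).get(
            "botReplicaSummaries", []
        )
        still_listed = any(
            item.get("replicaRegion") == replica_region for item in summaries
        )
        if not still_listed:
            return True
        sleep(poll_seconds)
        waited += poll_seconds

    raise TimeoutError(f"Replica still listed after {timeout_seconds} seconds")


# Example usage (requires boto3 and AWS credentials):
#   import boto3
#   models = boto3.client("lexv2-models", region_name="us-east-1")
#   disable_replica(models, "BOT_ID", "us-west-2")
```

Polling the list of replicas avoids relying on how the describe call behaves once the replica no longer exists.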

Clean up

To prevent incurring charges, complete the following steps to clean up the resources created during this demonstration:

- Disable Global Resiliency for the bot by following the instructions detailed earlier in this post.

- Delete the book-hotel-lambda-cw-stack CloudFormation stack from the us-west-2 Region. For instructions, see Delete a stack on the CloudFormation console.

- Delete the book-hotel-lambda-cw-stack CloudFormation stack from the us-east-1 Region.

- Delete the book-hotel-stack CloudFormation stack from the us-east-1 Region.

Integrations with Amazon Connect

Amazon Lex Global Resiliency seamlessly complements Amazon Connect Global Resiliency, providing you with a comprehensive solution for maintaining business continuity and resilience across your conversational AI and contact center infrastructure. Amazon Connect Global Resiliency enables you to automatically maintain your instances synchronized across two Regions, making sure that all configuration resources, such as contact flows, queues, and agents, are true replicas of each other.

With the addition of Amazon Lex Global Resiliency, Amazon Connect customers gain the added benefit of automated synchronization of their Amazon Lex V2 bots associated with their contact flows. This integration provides a consistent and uninterrupted experience during failover scenarios, because your Amazon Lex interactions seamlessly transition between Regions without any disruption. By combining these complementary features, you can achieve end-to-end resilience. This minimizes the risk of downtime and makes sure your conversational AI and contact center operations remain highly available and responsive, even in the case of Regional failures or capacity constraints.

Global Resiliency APIs

Global Resiliency provides API support to create and manage replicas. These are supported in the AWS CLI and AWS SDKs. In this section, we demonstrate usage with the AWS CLI.

- Create a bot replica in the replica Region using the CreateBotReplica API.

- Monitor the bot replication status using the DescribeBotReplica API.

- List the replicated bots using the ListBotReplicas API.

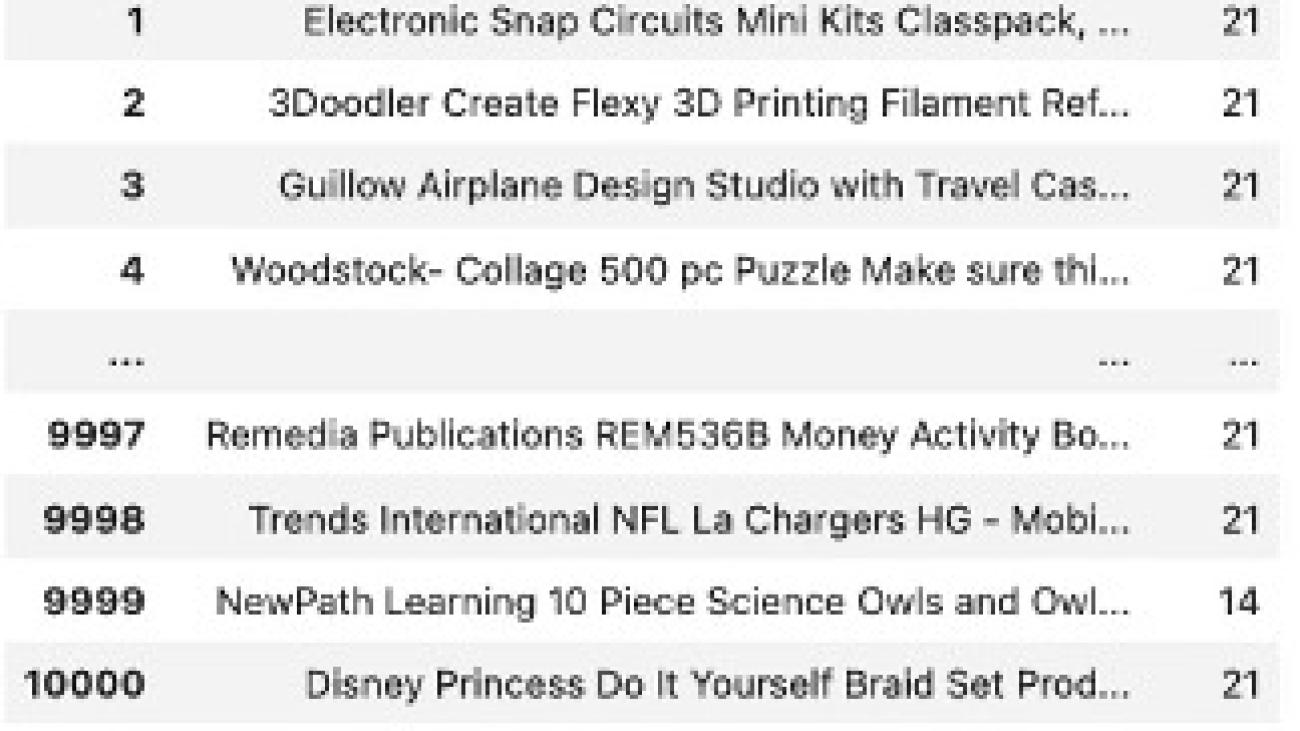

- List all the version replication statuses applicable for Global Resiliency using the ListBotVersionReplicas API.

This list includes only the replicated bot versions, which were created after Global Resiliency was enabled. In the API response, a botVersionReplicationStatus of Available indicates that the bot version was replicated successfully.

- List all the alias replication statuses applicable for Global Resiliency using the ListBotAliasReplicas API.

This list includes only the replicated bot aliases, which were created after Global Resiliency was enabled. In the API response, a botAliasReplicationStatus of Available indicates that the bot alias was replicated successfully.
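The two list operations above can be combined into a quick readiness check. The following is a minimal sketch, assuming the boto3 `lexv2-models` client exposes `list_bot_version_replicas` and `list_bot_alias_replicas`, and that the summaries are returned under `botVersionReplicaSummaries` and `botAliasReplicaSummaries`. Those container names, the helper name `pending_replicas`, and the omission of pagination are assumptions of the sketch. The status field names and the `Available` value come from the descriptions above.

```python
def pending_replicas(client, bot_id, replica_region):
    """Return versions and aliases whose replication is not yet Available.

    `client` is expected to behave like boto3.client("lexv2-models")
    created in the source Region. Only the first page of each list
    is read, to keep the sketch short.
    """
    versions = client.list_bot_version_replicas(
        botId=bot_id, replicaRegion=replica_region
    )
    aliases = client.list_bot_alias_replicas(
        botId=bot_id, replicaRegion=replica_region
    )

    not_ready = []
    for item in versions.get("botVersionReplicaSummaries", []):
        status = item.get("botVersionReplicationStatus")
        if status != "Available":
            not_ready.append(("version", item.get("botVersion"), status))
    for item in aliases.get("botAliasReplicaSummaries", []):
        status = item.get("botAliasReplicationStatus")
        if status != "Available":
            not_ready.append(("alias", item.get("botAliasId"), status))
    return not_ready


# Example usage (requires boto3 and AWS credentials):
#   import boto3
#   models = boto3.client("lexv2-models", region_name="us-east-1")
#   print(pending_replicas(models, "BOT_ID", "us-west-2"))
```

An empty result means every listed version and alias reported `Available`, which is a reasonable gate before routing traffic to the replica Region.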

Conclusion

In this post, we introduced the Global Resiliency feature for Amazon Lex V2 bots. We discussed the process to enable Global Resiliency using the console and reviewed some of the new APIs released as part of this feature.

As the next step, you can explore Global Resiliency and apply the techniques discussed in this post to replicate bots and bot versions across Regions. This hands-on practice will solidify your understanding of managing and replicating Amazon Lex V2 bots in your solution architecture.

About the Authors

Priti Aryamane is a Specialty Consultant at AWS Professional Services. With over 15 years of experience in contact centers and telecommunications, Priti specializes in helping customers achieve their desired business outcomes with customer experience on AWS using Amazon Lex, Amazon Connect, and generative AI features.

Sanjeet Sanda is a Specialty Consultant at AWS Professional Services with over 20 years of experience in telecommunications, contact center technology, and customer experience. He specializes in designing and delivering customer-centric solutions with a focus on integrating and adapting existing enterprise call centers into Amazon Connect and Amazon Lex environments. Sanjeet is passionate about streamlining adoption processes by using automation wherever possible. Outside of work, Sanjeet enjoys hanging out with his family, having barbecues, and going to the beach.

Yogesh Khemka is a Senior Software Development Engineer at AWS, where he works on large language models and natural language processing. He focuses on building systems and tooling for scalable distributed deep learning training and real-time inference.

Kara Yang is a Data Scientist at AWS Professional Services in the San Francisco Bay Area, with extensive experience in AI/ML. She specializes in leveraging cloud computing, machine learning, and Generative AI to help customers address complex business challenges across various industries. Kara is passionate about innovation and continuous learning.

Farshad Harirchi is a Principal Data Scientist at AWS Professional Services. He helps customers across industries, from retail to industrial and financial services, with the design and development of generative AI and machine learning solutions. Farshad brings extensive experience in the entire machine learning and MLOps stack. Outside of work, he enjoys traveling, playing outdoor sports, and exploring board games.

James Poquiz is a Data Scientist with AWS Professional Services based in Orange County, California. He has a BS in Computer Science from the University of California, Irvine and has several years of experience working in the data domain having played many different roles. Today he works on implementing and deploying scalable ML solutions to achieve business outcomes for AWS clients.