Investment professionals face the mounting challenge of processing vast amounts of data to make timely, informed decisions. The traditional approach of manually sifting through countless research documents, industry reports, and financial statements is not only time-consuming but can also lead to missed opportunities and incomplete analysis. This challenge is particularly acute in credit markets, where the complexity of information and the need for quick, accurate insights directly impacts investment outcomes. Financial institutions need a solution that can not only aggregate and process large volumes of data but also deliver actionable intelligence in a conversational, user-friendly format. The intersection of AI and financial analysis presents a compelling opportunity to transform how investment professionals access and use credit intelligence, leading to more efficient decision-making processes and better risk management outcomes.

Founded in 2013, Octus, formerly Reorg, is the essential credit intelligence and data provider for the world’s leading buy side firms, investment banks, law firms and advisory firms. By surrounding unparalleled human expertise with proven technology, data and AI tools, Octus unlocks powerful truths that fuel decisive action across financial markets. Visit octus.com to learn how we deliver rigorously verified intelligence at speed and create a complete picture for professionals across the entire credit lifecycle. Follow Octus on LinkedIn and X.

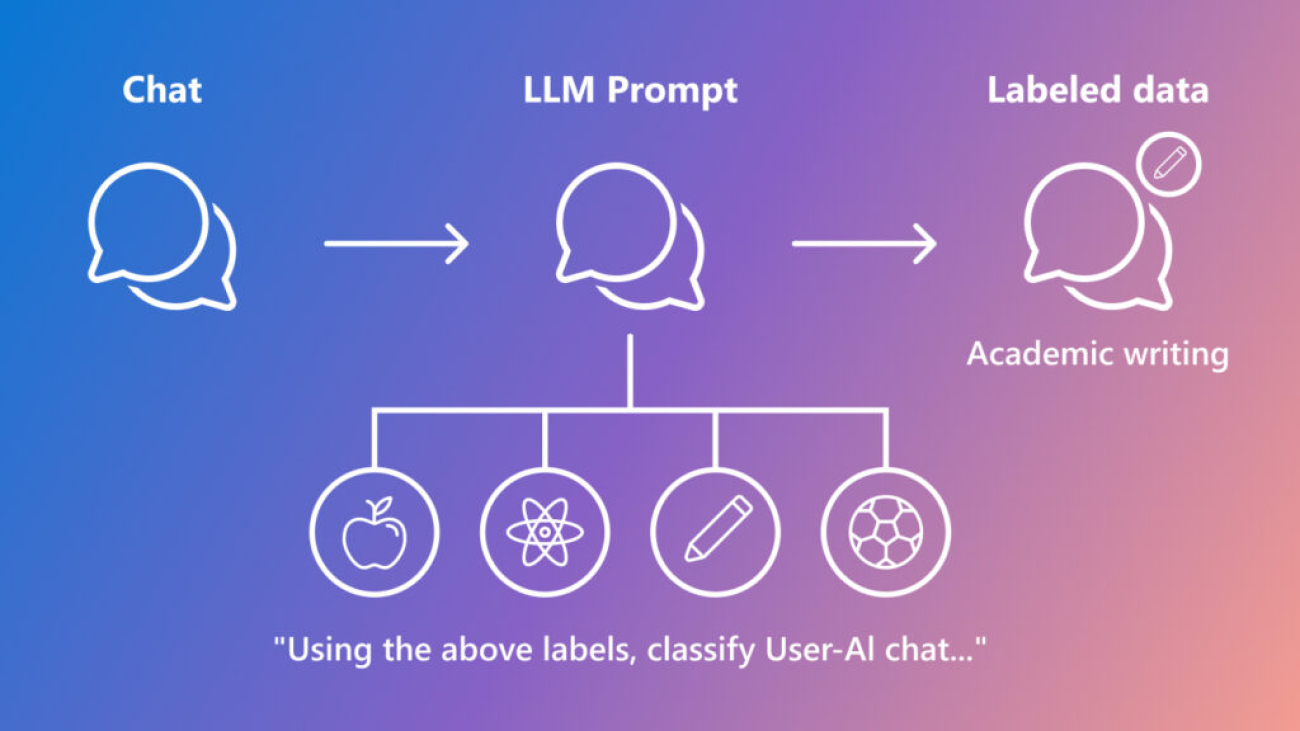

Using advanced GenAI, CreditAI by Octus is a flagship conversational chatbot that supports natural language queries and real-time data access with source attribution, significantly reducing analysis time and streamlining research workflows. It gives instant access to insights on over 10,000 companies from hundreds of thousands of proprietary intel articles, helping financial institutions make informed credit decisions while effectively managing risk. Key features include chat history management, the ability to ask questions targeted to a specific company or more broadly to a sector, and suggested follow-up questions.

In this post, we demonstrate how Octus migrated its flagship product, CreditAI, to Amazon Bedrock, transforming how investment professionals access and analyze credit intelligence. We walk through the journey Octus took from managing multiple cloud providers and costly GPU instances to implementing a streamlined, cost-effective solution using AWS services including Amazon Bedrock, AWS Fargate, and Amazon OpenSearch Service. We share detailed insights into the architecture decisions, implementation strategies, security best practices, and key learnings that enabled Octus to maintain zero downtime while significantly improving the application’s performance and scalability.

Opportunities for innovation

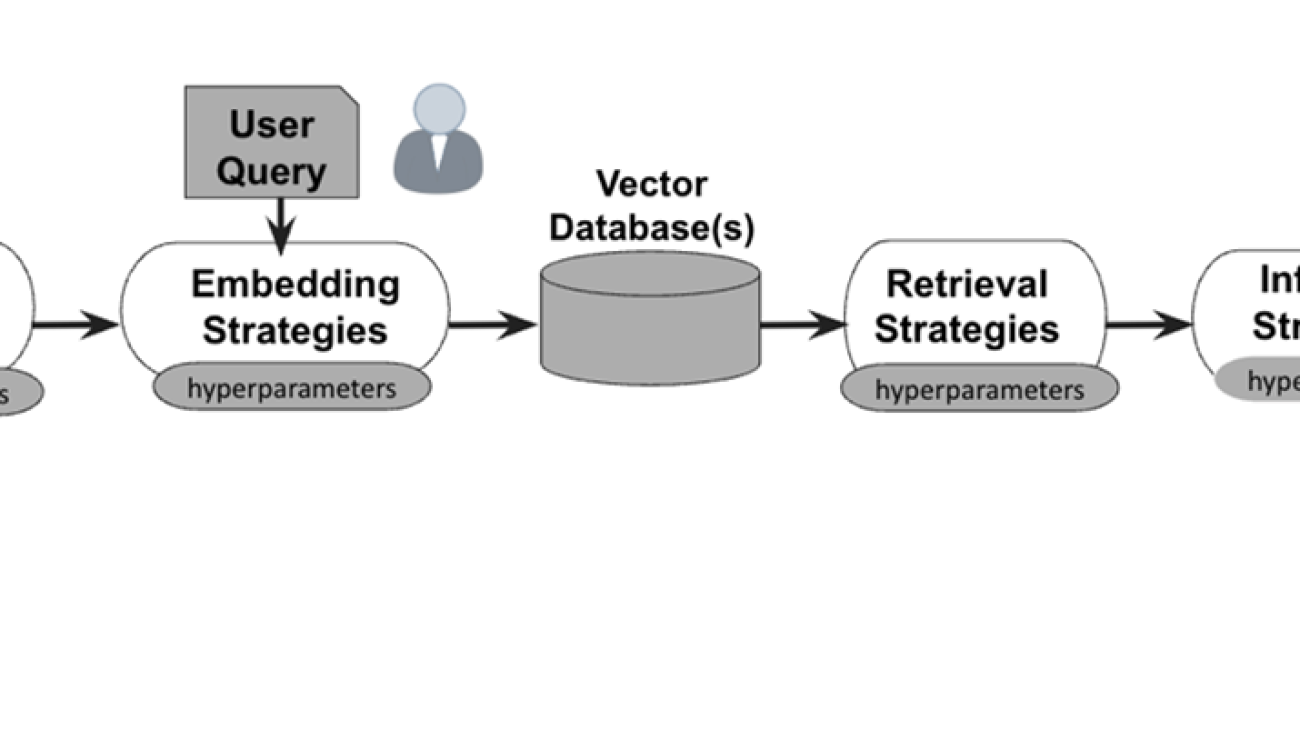

CreditAI by Octus version 1.x uses Retrieval Augmented Generation (RAG). It was built using a combination of in-house and external cloud services: Microsoft Azure for large language models (LLMs), Pinecone for the vector database, and Amazon Elastic Compute Cloud (Amazon EC2) for embeddings. Based on our operational experience, and as we started scaling up, we identified several operational inefficiencies and opportunities for improvement:

- Our in-house services for embeddings (deployed on EC2 instances) were not as scalable and reliable as needed. They also required more time on operational maintenance than our team could spare.

- The overall solution was incurring high operational costs, especially due to the use of on-demand GPU instances. The real-time nature of our application meant that Spot Instances were not an option. Additionally, our investigation of lower-cost CPU-based instances revealed that they couldn’t meet our latency requirements.

- The use of multiple external cloud providers complicated DevOps, support, and budgeting.

These operational inefficiencies meant that we had to revisit our solution architecture. It became apparent that a cost-effective solution for our generative AI needs was required. Enter Amazon Bedrock Knowledge Bases. With its support for knowledge bases that simplify RAG operations, vectorized search as part of its integration with OpenSearch Service, availability of multi-tenant embeddings, as well as Anthropic’s Claude suite of LLMs, it was a compelling choice for Octus to migrate its solution architecture. Along the way, it also simplified operations as Octus is an AWS shop more generally. However, we were still curious about how we would go about this migration, and whether there would be any downtime through the transition.

Strategic requirements

To help us move forward systematically, Octus identified the following key requirements to guide the migration to Amazon Bedrock:

- Scalability – A crucial requirement was the need to scale operations from handling hundreds of thousands of documents to millions of documents. A significant challenge in the previous system was the slow (and relatively unreliable) process of embedding new documents into vector databases, which created bottlenecks in scaling operations.

- Cost-efficiency and infrastructure optimization – CreditAI 1.x, though performant, was incurring high infrastructure costs due to the use of GPU-based, single-tenant services for embeddings and reranking. We needed multi-tenant alternatives that were much cheaper while enabling elasticity and scale.

- Response performance and latency – The success of generative AI-based applications depends on the response quality and speed. Given our user base, it’s important that our responses are accurate while valuing users’ time (low latency). This is a challenge when the data size and complexity grow. We want to balance spatial and temporal retrieval in order to give responses that have the best answer and context relevance, especially when we get large quantities of data updated every day.

- Zero downtime – CreditAI is in production and we could not afford any downtime during this migration.

- Technological agility and innovation – In the rapidly evolving AI landscape, Octus recognized the importance of maintaining technological competitiveness. We wanted to move away from in-house development and feature maintenance such as embeddings services, rerankers, guardrails, and RAG evaluators. This would allow Octus to focus on product innovation and faster feature deployment.

- Operational consolidation and reliability – Octus’s goal is to consolidate cloud providers, and to reduce support overheads and operational complexity.

Migration to Amazon Bedrock and addressing our requirements

Migrating to Amazon Bedrock addressed our aforementioned requirements in the following ways:

- Scalability – The architecture of Amazon Bedrock, combined with AWS Fargate for Amazon ECS, Amazon Textract, and AWS Lambda, provided the elastic and scalable infrastructure necessary for this expansion while maintaining performance, data integrity, compliance, and security standards. The solution’s efficient document processing and embedding capabilities addressed the previous system’s limitations, enabling faster and more efficient knowledge base updates.

- Cost-efficiency and infrastructure optimization – By migrating to Amazon Bedrock multi-tenant embedding, Octus achieved significant cost reduction while maintaining performance standards through Anthropic’s Claude Sonnet and improved embedding capabilities. This move alleviated the need for GPU-instance-based services in favor of more cost-effective and serverless Amazon ECS and Fargate solutions.

- Response performance and latency – Octus verified the quality and latency of responses from Anthropic’s Claude Sonnet to confirm that response accuracy and latency were maintained (or even improved) as part of this migration. With this LLM, CreditAI was now able to respond better to broader, industry-wide queries than before.

- Zero downtime – We were able to achieve zero downtime migration to Amazon Bedrock for our application using our in-house centralized infrastructure frameworks. Our frameworks comprise infrastructure as code (IaC) through Terraform, continuous integration and delivery (CI/CD), SOC2 security, monitoring, observability, and alerting for our infrastructure and applications.

- Technological agility and innovation – Amazon Bedrock emerged as an ideal partner, offering solutions specifically designed for AI application development. Amazon Bedrock built-in features, such as embeddings services, reranking, guardrails, and the upcoming RAG evaluator, alleviated the need for in-house development of these components, allowing Octus to focus on product innovation and faster feature deployment.

- Operational consolidation and reliability – The comprehensive suite of AWS services offers a streamlined framework that simplifies operations while providing high availability and reliability. This consolidation minimizes the complexity of managing multiple cloud providers and creates a more cohesive technological ecosystem. It also enables economies of scale with development velocity given that over 75 engineers at Octus already use AWS services for application development.

In addition, the Amazon Bedrock Knowledge Bases team worked closely with us to address several critical elements, including expanding embedding limits, managing the metadata limit (250 characters), testing different chunking methods, and syncing throughput to the knowledge base.

In the following sections, we explore our solution and how we addressed the details around the migration to Amazon Bedrock and Fargate.

Solution overview

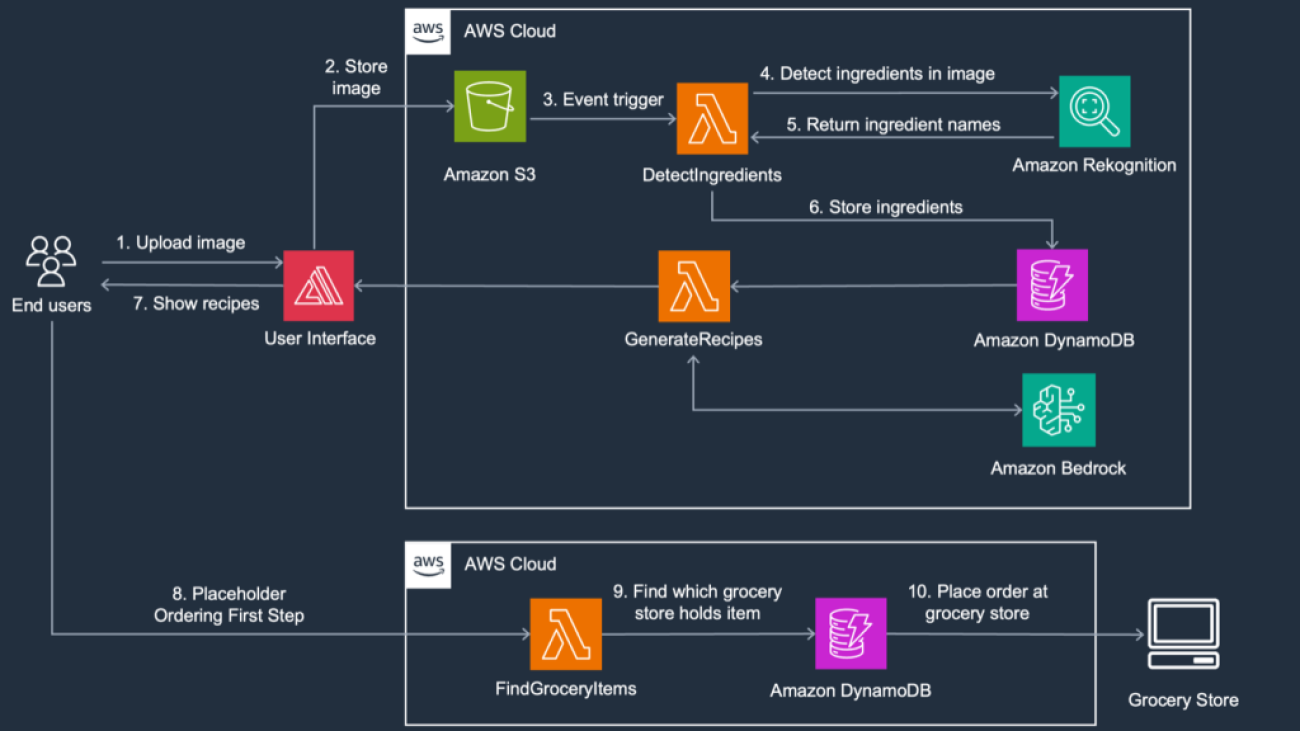

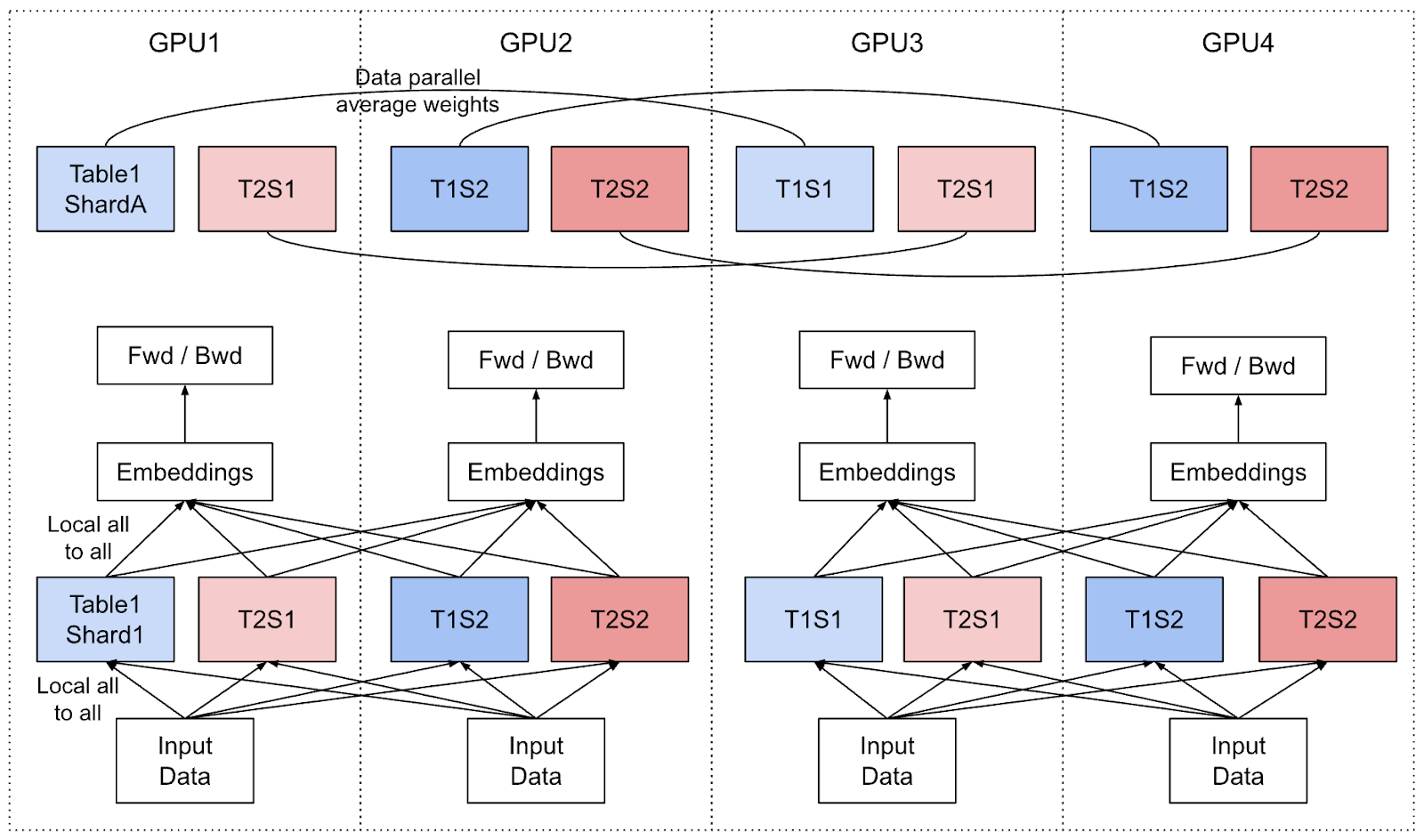

The following figure illustrates our system architecture for CreditAI on AWS, with two key paths: the document ingestion and content extraction workflow, and the Q&A workflow for live user query response.

In the following sections, we dive into crucial details within key components in our solution. In each case, we connect them to the requirements discussed earlier for readability.

The document ingestion workflow (numbered in blue in the preceding diagram) processes content through five distinct stages:

- Documents uploaded to Amazon Simple Storage Service (Amazon S3) automatically invoke Lambda functions through S3 Event Notifications. This event-driven architecture provides immediate processing of new documents.

- Lambda functions process the event payload containing document location, perform format validation, and prepare content for extraction. This includes file type verification, size validation, and metadata extraction before routing to Amazon Textract.

- Amazon Textract processes the documents to extract both text and structural information. This service handles various formats, including PDFs, images, and forms, while preserving document layout and relationships between content elements.

- The extracted content is stored in a dedicated S3 prefix, separate from the source documents, maintaining clear data lineage. Each processed document maintains references to its source file, extraction timestamp, and processing metadata.

- The extracted content flows into Amazon Bedrock Knowledge Bases, where our semantic chunking strategy is implemented to divide content into optimal segments. The system then generates embeddings for each chunk and stores these vectors in OpenSearch Service for efficient retrieval. Throughout this process, the system maintains comprehensive metadata to support downstream filtering and source attribution requirements.
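
The first two ingestion stages can be sketched as a small Lambda-style handler. This is a minimal illustration, not the production implementation: the allowed file types, the size limit, and the names `IncomingDocument`, `parse_s3_event`, and `validate` are assumptions made for the example. Only the S3 Event Notification payload layout (`Records[].s3.bucket.name`, `Records[].s3.object.key`, `Records[].s3.object.size`) follows the documented event structure.

```python
from dataclasses import dataclass
from urllib.parse import unquote_plus

# Hypothetical limits chosen for illustration only.
ALLOWED_EXTENSIONS = {".pdf", ".png", ".jpg", ".jpeg", ".tiff"}
MAX_SIZE_BYTES = 50 * 1024 * 1024


@dataclass
class IncomingDocument:
    bucket: str
    key: str
    size: int


def parse_s3_event(event: dict) -> list:
    """Extract bucket, key, and size from an S3 Event Notification payload."""
    documents = []
    for record in event.get("Records", []):
        s3 = record["s3"]
        documents.append(
            IncomingDocument(
                bucket=s3["bucket"]["name"],
                # Object keys arrive URL-encoded in S3 event notifications.
                key=unquote_plus(s3["object"]["key"]),
                size=s3["object"].get("size", 0),
            )
        )
    return documents


def validate(doc: IncomingDocument) -> list:
    """Return a list of validation problems; an empty list means the document passes."""
    problems = []
    extension = "." + doc.key.rsplit(".", 1)[-1].lower() if "." in doc.key else ""
    if extension not in ALLOWED_EXTENSIONS:
        problems.append(f"unsupported file type: {extension or 'none'}")
    if doc.size <= 0:
        problems.append("empty object")
    elif doc.size > MAX_SIZE_BYTES:
        problems.append("object exceeds size limit")
    return problems


def handler(event: dict, context: object = None) -> dict:
    """Lambda entry point: split incoming documents into accepted and rejected."""
    accepted, rejected = [], []
    for doc in parse_s3_event(event):
        problems = validate(doc)
        if problems:
            rejected.append({"key": doc.key, "problems": problems})
        else:
            # A real pipeline would route the accepted document to extraction here.
            accepted.append({"bucket": doc.bucket, "key": doc.key})
    return {"accepted": accepted, "rejected": rejected}
```

Keeping parsing and validation as plain functions makes them easy to unit test without any AWS access; only the routing step would need a service client.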

The Q&A workflow (numbered in yellow in the preceding diagram) processes user interactions through six integrated stages:

- The web application, hosted on AWS Fargate, handles user interactions and query inputs, managing initial request validation before routing queries to appropriate processing services.

- Amazon Managed Streaming for Apache Kafka (Amazon MSK) serves as the streaming service, providing reliable inter-service communication while maintaining message ordering and high-throughput processing for query handling.

- The Q&A handler, running on AWS Fargate, orchestrates the complete query response cycle by coordinating between services and processing responses through the LLM pipeline.

- The pipeline integrates with Amazon Bedrock foundation models through these components:

  - Cohere Embeddings model performs vector transformations of the input.

  - Amazon OpenSearch Service manages vector embeddings and performs similarity searches.

  - Amazon Bedrock Knowledge Bases provides efficient access to the document repository.

- Amazon Bedrock Guardrails implements content filtering and safety checks as part of the query processing pipeline.

- Anthropic Claude LLM performs the natural language processing, generating responses that are then returned to the web application.

This integrated workflow provides efficient query processing while maintaining response quality and system reliability.

For scalability: Using OpenSearch Service as our vector database

Amazon OpenSearch Serverless emerged as the optimal solution for CreditAI’s evolving requirements, offering advanced capabilities while maintaining seamless integration within the AWS ecosystem:

- Vector search capabilities – OpenSearch Serverless provides robust built-in vector search capabilities essential for our needs. The service supports hybrid search, allowing us to combine vector embeddings with raw text search without modifying our embedding model. This capability proved crucial for enabling broader question support in CreditAI 2.x, enhancing its overall usability and flexibility.

- Serverless architecture benefits – The serverless design alleviates the need to provision, configure, or tune infrastructure, significantly reducing operational complexities. This shift allows our team to focus more time and resources on feature development and application improvements rather than managing underlying infrastructure.

- AWS integration advantages – The tight integration with other AWS services, particularly Amazon S3 and Amazon Bedrock, streamlines our content ingestion process. This built-in compatibility provides a cohesive and scalable landscape for future enhancements while maintaining optimal performance.

OpenSearch Serverless enabled us to scale our vector search capabilities efficiently while minimizing operational overhead and maintaining high performance standards.
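
As an illustration of hybrid search through a knowledge base, the following sketch builds a request for the `retrieve` operation of the boto3 `bedrock-agent-runtime` client. It is a sketch under stated assumptions rather than the CreditAI implementation: the knowledge base ID, the `company` metadata key, and the helper names are hypothetical, and the field names should be checked against the current API reference before use.

```python
from typing import Optional


def build_retrieve_request(knowledge_base_id: str, question: str,
                           top_k: int = 5, company: Optional[str] = None) -> dict:
    """Build keyword arguments for a knowledge base retrieve call using hybrid search."""
    vector_config = {
        "numberOfResults": top_k,
        # HYBRID combines vector similarity with raw text matching.
        "overrideSearchType": "HYBRID",
    }
    if company is not None:
        # "company" is a hypothetical metadata key used to target one company.
        vector_config["filter"] = {"equals": {"key": "company", "value": company}}
    return {
        "knowledgeBaseId": knowledge_base_id,
        "retrievalQuery": {"text": question},
        "retrievalConfiguration": {"vectorSearchConfiguration": vector_config},
    }


def retrieve_chunks(request: dict) -> list:
    """Send the request to Amazon Bedrock Knowledge Bases (requires AWS credentials)."""
    import boto3  # imported lazily so the request builder works without AWS access

    client = boto3.client("bedrock-agent-runtime")
    response = client.retrieve(**request)
    return response["retrievalResults"]
```

For example, `build_retrieve_request("KB123", "What is the leverage ratio?", company="Acme")` produces a company-scoped query, while omitting `company` leaves the search open for broader, sector-level questions.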

For scalability and security: Splitting data across multiple vector databases with in-house support for intricate permissions

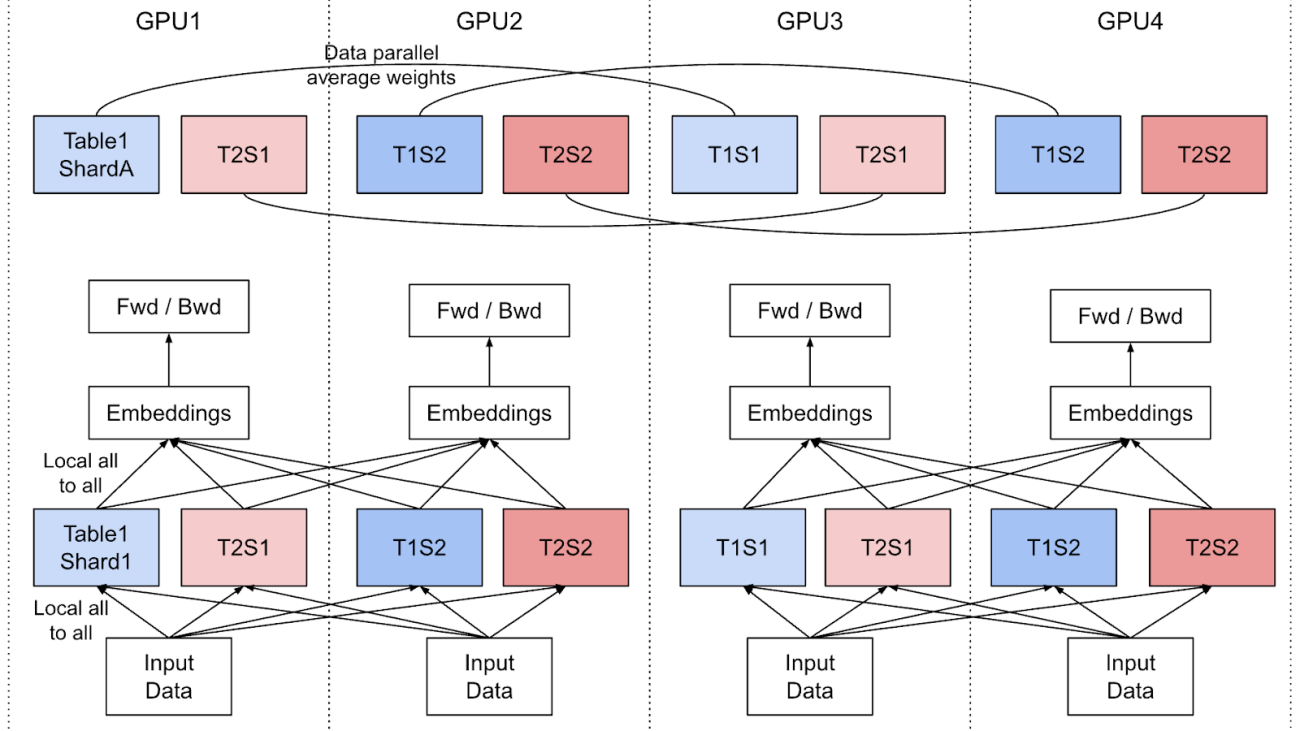

To enhance scalability and security, we implemented isolated knowledge bases (each corresponding to a vector database) for each client’s data. Although this approach slightly increases costs, it delivers significant benefits. Primarily, it keeps client data completely isolated, providing enhanced privacy and security. Thanks to Amazon Bedrock Knowledge Bases, this isolation doesn’t compromise performance: the service supports concurrent embedding and synchronization across multiple knowledge bases, allowing us to maintain real-time updates without delays, something that was unattainable with our previous GPU-based architecture.

Additionally, we introduced two in-house services within Octus to strengthen this system:

- AuthZ access management service – This service enforces granular access control, making sure users and applications can only interact with the data they are authorized to access. We had to migrate our AuthZ backend from Airbyte to native SQL replication so that it can support access management in near real time at scale.

- Global identifiers service – This service provides a unified framework to link identifiers across multiple domains, enabling seamless integration and cross-referencing of identifiers across multiple datasets.

Together, these enhancements create a robust, secure, and highly efficient environment for managing and accessing client data.
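
The combination of per-client knowledge bases and an authorization check can be pictured with a small routing function. This is a simplified sketch: the in-memory dictionaries stand in for the AuthZ service and for the client-to-knowledge-base mapping, and every identifier in it is hypothetical.

```python
class AccessDenied(Exception):
    """Raised when a user asks for data they are not entitled to."""


# Hypothetical stand-ins for the mapping of clients to isolated knowledge bases
# and for the entitlements that an access management service would return.
CLIENT_KNOWLEDGE_BASES = {"client-a": "KB_A", "client-b": "KB_B"}
USER_ENTITLEMENTS = {"alice": {"client-a"}, "bob": {"client-a", "client-b"}}


def resolve_knowledge_base(user: str, client_id: str) -> str:
    """Return the knowledge base ID for a client, but only for entitled users."""
    if client_id not in USER_ENTITLEMENTS.get(user, set()):
        raise AccessDenied(f"{user} may not query data for {client_id}")
    return CLIENT_KNOWLEDGE_BASES[client_id]
```

Because the check happens before a knowledge base ID is ever returned, an unauthorized query never reaches another client's vector database.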

For cost efficiency: Adopting a multi-tenant embedding service

In our migration to Amazon Bedrock Knowledge Bases, Octus made a strategic shift from using an open-source embedding service on EC2 instances to using the managed embedding capabilities of Amazon Bedrock through Cohere’s multilingual model. This transition was carefully evaluated based on several key factors.

Our selection of Cohere’s multilingual model was driven by two primary advantages. First, it demonstrated superior retrieval performance in our comparative testing. Second, it offered robust multilingual support capabilities that were essential for our global operations.

The technical benefits of this migration manifested in two distinct areas: document embedding and message embedding. In document embedding, we transitioned from a CPU-based system to Amazon Bedrock Knowledge Bases, which enabled faster and higher throughput document processing through its multi-tenant architecture. For message embedding, we alleviated our dependency on dedicated GPU instances while maintaining optimal performance with 20–30 millisecond embedding times. The Amazon Bedrock Knowledge Bases API also simplified our operations by combining embedding and retrieval functionality into a single API call.

The migration to Amazon Bedrock Knowledge Bases managed embedding delivered two significant advantages: it eliminated the operational overhead of maintaining our own open-source solution while providing access to industry-leading embedding capabilities through Cohere’s model. This helped us achieve both our cost-efficiency and performance objectives without compromises.

For cost-efficiency and response performance: Choice of chunking strategy

Our primary goal was to improve three critical aspects of CreditAI’s responses: quality (accuracy of information), groundedness (ability to trace responses back to source documents), and relevance (providing information that directly answers user queries). To achieve this, we tested three different approaches to breaking down documents into smaller pieces (chunks):

- Fixed chunking – Breaking text into fixed-length pieces

- Semantic chunking – Breaking text based on natural semantic boundaries like paragraphs, sections, or complete thoughts

- Hierarchical chunking – Creating a two-level structure with smaller child chunks for precise matching and larger parent chunks for contextual understanding

Our testing showed that both semantic and hierarchical chunking performed significantly better than fixed chunking in retrieving relevant information. However, each approach came with its own technical considerations.

Hierarchical chunking requires a larger chunk size to maintain comprehensive context during retrieval. This approach creates a two-level structure: smaller child chunks for precise matching and larger parent chunks for contextual understanding. During retrieval, the system first identifies relevant child chunks and then automatically includes their parent chunks to provide broader context. Although this method optimizes both search precision and context preservation, we couldn’t implement it with our preferred Cohere embeddings because they only support chunks up to 512 tokens, which is insufficient for the parent chunks needed to maintain effective hierarchical relationships.
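
The child-to-parent lookup described above can be shown with a toy example. This sketch makes two simplifying assumptions that differ from a real deployment: whitespace-separated words stand in for tokens, and keyword overlap stands in for vector similarity. The function names are illustrative.

```python
def build_hierarchy(words: list, parent_size: int, child_size: int):
    """Split words into parent chunks, then split each parent into child chunks."""
    parents = [words[i:i + parent_size] for i in range(0, len(words), parent_size)]
    children = []  # each entry is (parent_index, child_words)
    for parent_index, parent in enumerate(parents):
        for j in range(0, len(parent), child_size):
            children.append((parent_index, parent[j:j + child_size]))
    return parents, children


def retrieve_with_parents(query_terms: set, parents: list, children: list) -> list:
    """Match on small child chunks, then return their de-duplicated parent chunks."""
    matched_parents = []
    for parent_index, child in children:
        if query_terms & set(child) and parent_index not in matched_parents:
            matched_parents.append(parent_index)
    return [" ".join(parents[i]) for i in matched_parents]
```

The example also shows why the parent size matters: the text returned to the LLM is the parent chunk, so an embedding model that caps chunk length also caps how much context a parent can carry.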

Semantic chunking uses LLMs to intelligently divide text by analyzing both semantic similarity and natural language structures. Instead of arbitrary splits, the system identifies logical break points by calculating embedding-based similarity scores between sentences and paragraphs, making sure semantically related content stays together. The resulting chunks maintain context integrity by considering both linguistic features (like sentence and paragraph boundaries) and semantic coherence, though this precision comes at the cost of additional computational resources for LLM analysis and embedding calculations.
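
The idea of splitting at points of low similarity can be sketched in a few lines. This is an illustration of the general technique, not the Amazon Bedrock implementation: it assumes sentence embeddings are already available, uses a fixed similarity threshold where a managed service may use a percentile-based breakpoint, and the function names are hypothetical.

```python
import math


def cosine_similarity(a: list, b: list) -> float:
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def semantic_chunks(sentences: list, embeddings: list, threshold: float = 0.5) -> list:
    """Group consecutive sentences, starting a new chunk when similarity drops."""
    if not sentences:
        return []
    chunks = [[sentences[0]]]
    for i in range(1, len(sentences)):
        if cosine_similarity(embeddings[i - 1], embeddings[i]) < threshold:
            # Adjacent sentences are dissimilar: treat this as a semantic boundary.
            chunks.append([sentences[i]])
        else:
            chunks[-1].append(sentences[i])
    return [" ".join(chunk) for chunk in chunks]
```

With toy two-dimensional embeddings, two sentences about leverage followed by two about litigation would land in separate chunks, because the similarity between the second and third sentence falls below the threshold.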

After evaluating our options, we chose semantic chunking despite two trade-offs:

- It requires additional processing by our LLMs, which increases costs

- It has a limit of 1,000,000 tokens per document processing batch

We made this choice because semantic chunking offered the best balance between implementation simplicity and retrieval performance. Although hierarchical chunking showed promise, it would have been more complex to implement and harder to scale. This decision helped us maintain high-quality, grounded, and relevant responses while keeping our system manageable and efficient.

For response performance and technical agility: Adopting Amazon Bedrock Guardrails with Amazon Bedrock Knowledge Bases

Our implementation of Amazon Bedrock Guardrails focused on three key objectives: enhancing response security, optimizing performance, and simplifying guardrail management. This service plays a crucial role in making sure our responses are both safe and efficient.

Amazon Bedrock Guardrails provides a comprehensive framework for content filtering and response moderation. The system works by evaluating content against predefined rules before the LLM processes it, helping prevent inappropriate content and maintaining response quality. Through the Amazon Bedrock Guardrails integration with Amazon Bedrock Knowledge Bases, we can configure, test, and iterate on our guardrails without writing complex code.

We achieved significant technical improvements in three areas:

- Simplified moderation framework – Instead of managing multiple separate denied topics, we consolidated our content filtering into a unified guardrail service. This approach allows us to maintain a single source of truth for content moderation rules, with support for customizable sample phrases that help fine-tune our filtering accuracy.

- Performance optimization – We improved system performance by integrating guardrail checks directly into our main prompts, rather than running them as separate operations. This optimization reduced our token usage and minimized unnecessary API calls, resulting in lower latency for each query.

- Enhanced content control – The service provides configurable thresholds for filtering potentially harmful content and includes built-in capabilities for detecting hallucinations and assessing response relevance. This alleviated our dependency on external services like TruLens while maintaining robust content quality controls.

These improvements have helped us maintain high response quality while reducing both operational complexity and processing overhead. The integration with Amazon Bedrock has given us a more streamlined and efficient approach to content moderation.
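
One way to attach a guardrail to a knowledge base query is through the `retrieve_and_generate` operation of the boto3 `bedrock-agent-runtime` client, sketched below. This shows the general shape of such a request and is not necessarily how CreditAI is wired: the helper names are hypothetical, every ID and ARN is a placeholder to be supplied by the caller, and the field names should be verified against the current API reference.

```python
def build_rag_request(knowledge_base_id: str, model_arn: str, question: str,
                      guardrail_id: str, guardrail_version: str) -> dict:
    """Build keyword arguments for a guarded retrieve-and-generate call."""
    return {
        "input": {"text": question},
        "retrieveAndGenerateConfiguration": {
            "type": "KNOWLEDGE_BASE",
            "knowledgeBaseConfiguration": {
                "knowledgeBaseId": knowledge_base_id,
                "modelArn": model_arn,
                "generationConfiguration": {
                    # The guardrail is applied as part of generation, so no
                    # separate moderation call is needed in application code.
                    "guardrailConfiguration": {
                        "guardrailId": guardrail_id,
                        "guardrailVersion": guardrail_version,
                    }
                },
            },
        },
    }


def answer(request: dict) -> str:
    """Run the guarded RAG query (requires AWS credentials and existing resources)."""
    import boto3  # imported lazily so the request builder works without AWS access

    client = boto3.client("bedrock-agent-runtime")
    response = client.retrieve_and_generate(**request)
    return response["output"]["text"]
```

Keeping the guardrail reference in configuration means rules can be revised and versioned in the guardrail itself, while the calling code only changes the version string.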

To achieve zero downtime: Infrastructure migration

Our migration to Amazon Bedrock required careful planning to provide uninterrupted service for CreditAI while significantly reducing infrastructure costs. We achieved this through our comprehensive infrastructure framework that addresses deployment, security, and monitoring needs:

- IaC implementation – We used reusable Terraform modules to manage our infrastructure consistently across environments. These modules enabled us to share configurations efficiently between services and projects. Our approach supports multi-Region deployments with minimal configuration changes while maintaining infrastructure version control alongside application code.

- Automated deployment strategy – Our GitOps-embedded framework streamlines the deployment process by implementing a clear branching strategy for different environments. This automation handles CreditAI component deployments through CI/CD pipelines, reducing human error through automated validation and testing. The system also enables rapid rollback capabilities if needed.

- Security and compliance – To maintain SOC2 compliance and robust security, our framework incorporates comprehensive access management controls and data encryption at rest and in transit. We follow network security best practices, conduct regular security audits and monitoring, and run automated compliance checks in the deployment pipeline.

We maintained zero downtime during the entire migration process while reducing infrastructure costs by 70% by eliminating GPU instances. The successful transition from Amazon ECS on Amazon EC2 to Amazon ECS with Fargate has simplified our infrastructure management and monitoring.

Achieving excellence

CreditAI’s migration to Amazon Bedrock has yielded remarkable results for Octus:

- Scalability – We have almost doubled the number of documents available for Q&A across three environments in days instead of weeks. Our use of Amazon ECS with Fargate with auto scaling rules and controls gives us elastic scalability for our services during peak usage hours.

- Cost-efficiency and infrastructure optimization – By moving away from GPU-based clusters to Fargate, our monthly infrastructure costs are now 78.47% lower, and our per-question costs have reduced by 87.6%.

- Response performance and latency – There has been no degradation in latency, and we have seen a 27% increase in questions answered successfully. We have also seen a 250% boost in user engagement. Users especially love our support for broad, industry-wide questions enabled by Anthropic’s Claude Sonnet.

- Zero downtime – We experienced zero downtime during migration and 99% uptime overall for the whole application.

- Technological agility and innovation – We have been able to add new document sources in a quarter of the time it took pre-migration. In addition, we adopted enhanced guardrails support for free and no longer have to retrieve documents from the knowledge base and pass the chunks to Anthropic’s Claude Sonnet to trigger a guardrail.

- Operational consolidation and reliability – Post-migration, our DevOps and SRE teams see 20% less maintenance burden and overheads. Supporting SOC2 compliance is also straightforward now that we’re using only one cloud provider.

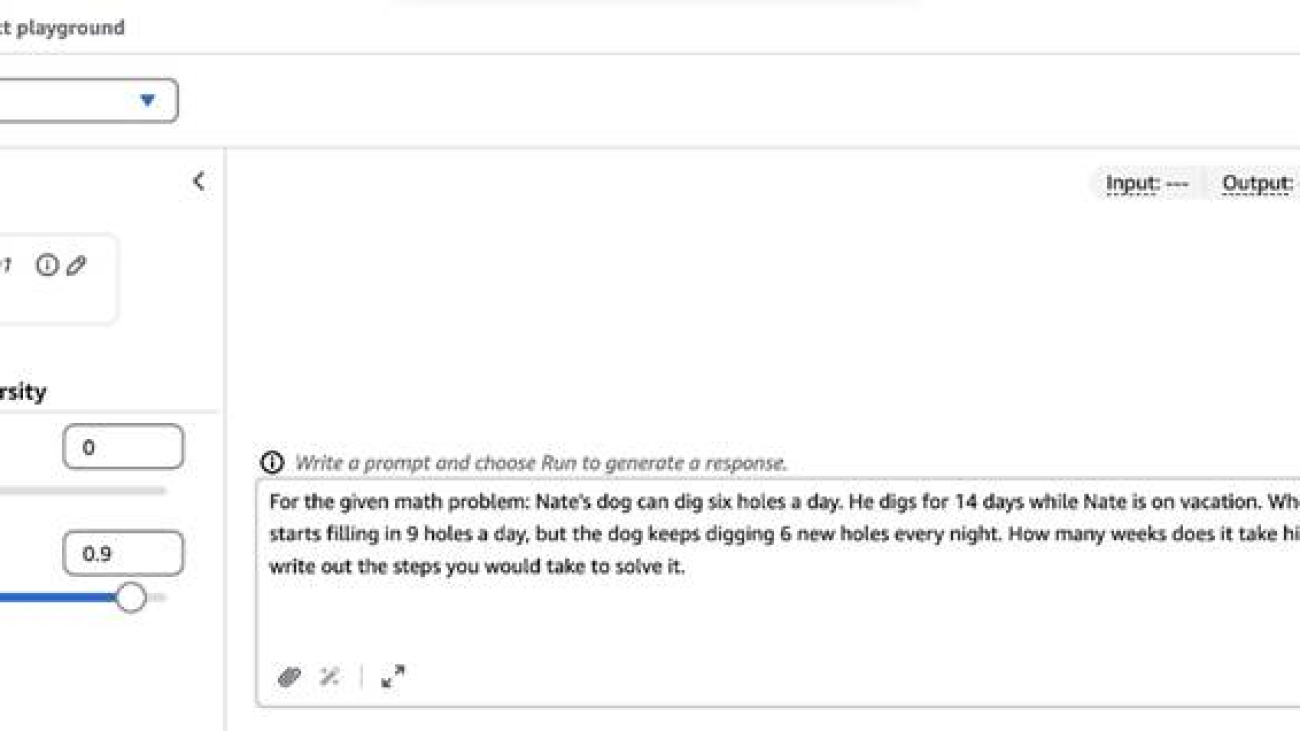

Operational monitoring

We use Datadog to monitor both LLM latency and our document ingestion pipeline, providing real-time visibility into system performance. The following screenshot showcases how we use custom Datadog dashboards to provide a live view of the document ingestion pipeline. This visualization offers both a high-level overview and detailed insights into the ingestion process, helping us understand the volume, format, and status of the documents processed. The bottom half of the dashboard presents a time-series view of document processing volumes. The timeline tracks fluctuations in processing rates, identifies peak activity periods, and provides actionable insights to optimize throughput. This detailed monitoring system enables us to maintain efficiency, minimize failures, and provide scalability.

Roadmap

Looking ahead, Octus plans to continue enhancing CreditAI by taking advantage of new capabilities released by Amazon Bedrock that continue to meet and exceed our requirements. Future developments will include:

- Enhance retrieval by testing and integrating with reranking techniques, allowing the system to prioritize the most relevant search results for better user experience and accuracy.

- Explore the Amazon Bedrock RAG evaluator to capture detailed metrics on CreditAI’s performance. This will add to the existing mechanisms at Octus to track performance that include tracking unanswered questions.

- Expand to ingest large-scale structured data, making it capable of handling complex financial datasets. The integration of text-to-SQL will enable users to query structured databases using natural language, simplifying data access.

- Explore replacing our in-house content extraction service (ADE) with the Amazon Bedrock advanced parsing solution to potentially further reduce document ingestion costs.

- Improve CreditAI’s disaster recovery and redundancy mechanisms, making sure that our services and infrastructure are more fault tolerant and can recover from outages faster.

These upgrades aim to boost the precision, reliability, and scalability of CreditAI.

Vishal Saxena, CTO at Octus, shares: “CreditAI is a first-of-its-kind generative AI application that focuses on the entire credit lifecycle. It is truly ’AI embedded’ software that combines cutting-edge AI technologies with an enterprise data architecture and a unified cloud strategy.”

Conclusion

CreditAI by Octus is the company’s flagship conversational chatbot that supports natural language queries and gives instant access to insights on over 10,000 companies from hundreds of thousands of proprietary intel articles. In this post, we described in detail our motivation, process, and results on Octus’s migration to Amazon Bedrock. Through this migration, Octus achieved remarkable results that included an over 75% reduction in operating costs as well as a 250% boost in engagement. Future steps include adopting new features such as reranking, RAG evaluator, and advanced parsing to further reduce costs and improve performance. We believe that the collaboration between Octus and AWS will continue to revolutionize financial analysis and research workflows.

To learn more about Amazon Bedrock, refer to the Amazon Bedrock User Guide.

About the Authors

Vaibhav Sabharwal is a Senior Solutions Architect with Amazon Web Services based out of New York. He is passionate about learning new cloud technologies and assisting customers in building cloud adoption strategies, designing innovative solutions, and driving operational excellence. As a member of the Financial Services Technical Field Community at AWS, he actively contributes to the collaborative efforts within the industry.

Yihnew Eshetu is a Senior Director of AI Engineering at Octus, leading the development of AI solutions at scale to address complex business problems. With seven years of experience in AI/ML, his expertise spans GenAI and NLP, specializing in designing and deploying agentic AI systems. He has played a key role in Octus’s AI initiatives, including leading AI Engineering for its flagship GenAI chatbot, CreditAI.

Harmandeep Sethi is a Senior Director of SRE Engineering and Infrastructure Frameworks at Octus, with nearly 10 years of experience leading high-performing teams in the design, implementation, and optimization of large-scale, highly available, and reliable systems. He has played a pivotal role in transforming and modernizing CreditAI infrastructure and services by driving best practices in observability, resilience engineering, and the automation of operational processes through Infrastructure Frameworks.

Rohan Acharya is an AI Engineer at Octus, specializing in building and optimizing AI-driven solutions at scale. With expertise in GenAI and NLP, he focuses on designing and deploying intelligent systems that enhance automation and decision-making. His work involves developing robust AI architectures and advancing Octus’s AI initiatives, including the evolution of CreditAI.

Hasan Hasibul is a Principal Architect at Octus leading the DevOps team, with nearly 12 years of experience in building scalable, complex architectures while following software development best practices. A true advocate of clean code, he thrives on solving complex problems and automating infrastructure. Passionate about DevOps, infrastructure automation, and the latest advancements in AI, he architected Octus’s initial CreditAI, pushing the boundaries of innovation.

Philipe Gutemberg is a Principal Software Engineer and AI Application Development Team Lead at Octus, passionate about leveraging technology for impactful solutions. An AWS Certified Solutions Architect – Associate (SAA), he has expertise in software architecture, cloud computing, and leadership. Philipe led both backend and frontend application development for CreditAI, ensuring a scalable system that integrates AI-driven insights into financial applications. A problem-solver at heart, he thrives in fast-paced environments, delivering innovative solutions for financial institutions while fostering mentorship, team development, and continuous learning.

Kishore Iyer is the VP of AI Application Development and Engineering at Octus. He leads teams that build, maintain, and support Octus’s customer-facing GenAI applications, including CreditAI, our flagship AI offering. Before joining Octus, Kishore spent 15+ years in engineering leadership roles across large corporations, startups, research labs, and academia. He holds a Ph.D. in computer engineering from Rutgers University.

Kshitiz Agarwal is an Engineering Leader at Amazon Web Services (AWS), where he leads the development of Amazon Bedrock Knowledge Bases. With a decade of experience at Amazon, having joined in 2012, Kshitiz has gained deep insights into the cloud computing landscape. His passion lies in engaging with customers and understanding the innovative ways they leverage AWS to drive their business success. Through his work, Kshitiz aims to contribute to the continuous improvement of AWS services, enabling customers to unlock the full potential of the cloud.

Sandeep Singh is a Senior Generative AI Data Scientist at Amazon Web Services, helping businesses innovate with generative AI. He specializes in generative AI, machine learning, and system design. He has successfully delivered state-of-the-art AI/ML-powered solutions to solve complex business problems for diverse industries, optimizing efficiency and scalability.

Tim Ramos is a Senior Account Manager at AWS. He has 12 years of sales experience and 10 years of experience in cloud services, IT infrastructure, and SaaS. Tim is dedicated to helping customers develop and implement digital innovation strategies. His focus areas include business transformation, financial and operational optimization, and security. Tim holds a BA from Gonzaga University and is based in New York City.
