As quantum computers continue to develop, they will integrate with AI supercomputers to form accelerated quantum supercomputers capable of solving some of the world’s hardest problems.

Integrating quantum processing units (QPUs) into AI supercomputers is key for developing new applications, helping unlock breakthroughs critical to running future quantum hardware and enabling developments in quantum error correction and device control.

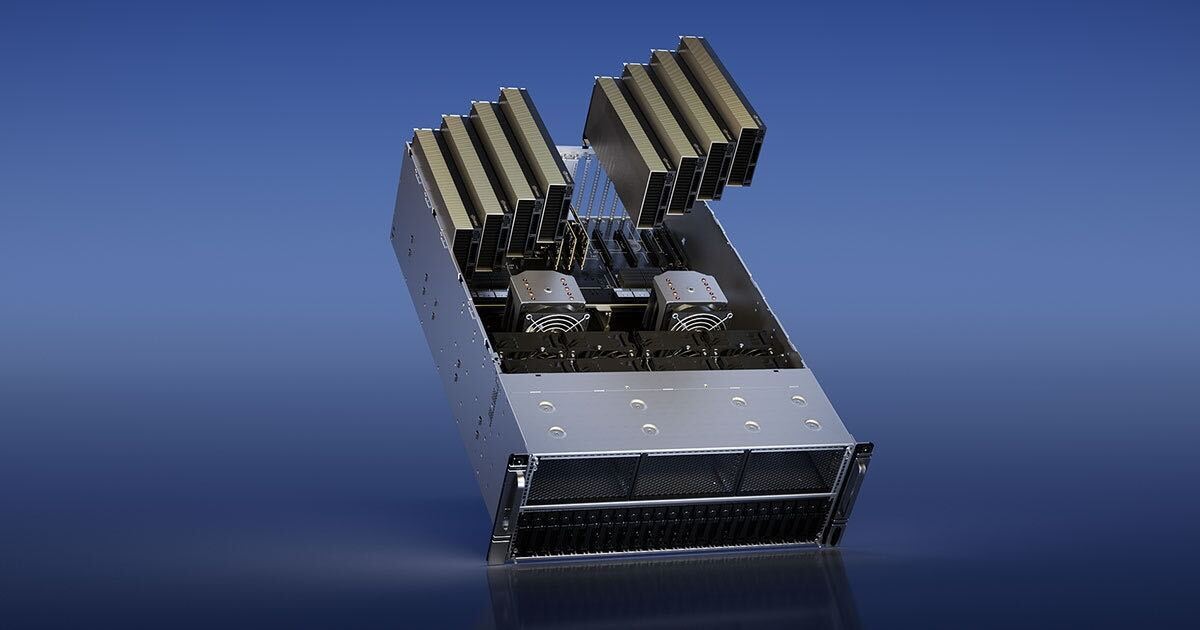

The NVIDIA Accelerated Quantum Research Center, or NVAQC, announced today at the NVIDIA GTC global AI conference, is where these developments will happen. Built on NVIDIA GB200 NVL72 systems and the NVIDIA Quantum-2 InfiniBand networking platform, the facility will house a supercomputer with 576 NVIDIA Blackwell GPUs dedicated to quantum computing research.

“The NVAQC draws on much-needed and long-sought-after tools for scaling quantum computing to next-generation devices,” said Tim Costa, senior director of computer-aided engineering, quantum and CUDA-X at NVIDIA. “The center will be a place for large-scale simulations of quantum algorithms and hardware, tight integration of quantum processors, and both training and deployment of AI models for quantum.”

Quantum computing innovators like Quantinuum, QuEra and Quantum Machines, along with academic partners from the Harvard Quantum Initiative and the Engineering Quantum Systems group at the MIT Center for Quantum Engineering, will work on projects with NVIDIA at the center to explore how AI supercomputing can accelerate the path toward quantum computing.

“The NVAQC is a powerful tool that will be instrumental in ushering in the next generation of research across the entire quantum ecosystem,” said William Oliver, professor of electrical engineering and computer science, and of physics, leader of the EQuS group and director of the MIT Center for Quantum Engineering. “NVIDIA is a critical partner for realizing useful quantum computing.”

There are several key quantum computing challenges where the NVAQC is already set to have a dramatic impact.

Protecting Qubits With AI Supercomputing

Qubit interactions are a double-edged sword. While qubits must interact with their surroundings to be controlled and measured, these same interactions are also a source of noise — unwanted disturbances that affect the accuracy of quantum calculations. Quantum algorithms can only work if the resulting noise is kept in check.

Quantum error correction provides a solution, encoding well-protected logical qubits within many noisy physical qubits. By processing the outputs from repeated measurements on these noisy qubits, it's possible to identify, track and correct qubit errors, all without destroying the delicate quantum information needed by a computation.
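To see why spreading one logical qubit across several physical qubits helps, consider a worked example under deliberately simple assumptions (not a model of any NVAQC hardware): a three-qubit repetition code that protects only against bit flips, where each physical qubit flips independently with probability $p$ and a majority vote recovers the logical value. The vote fails only when two or three qubits flip:

$$
p_L = \binom{3}{2} p^2 (1-p) + p^3 = 3p^2 - 2p^3
$$

For $p = 0.01$, this gives $p_L \approx 3 \times 10^{-4}$, and $p_L < p$ whenever $p < 1/2$. Practical codes use many more physical qubits and must also handle phase errors, but the principle that redundancy suppresses the logical error rate is the same.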

The process of figuring out where errors occurred and what corrections to apply is called decoding. Decoding is an extremely difficult task that must be performed by a conventional computer within a narrow time frame to prevent noise from snowballing out of control.
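As a toy illustration of what a decoder does (not the decoders the NVAQC will develop), the sketch below classically simulates a three-qubit bit-flip repetition code. It computes the two parity checks a real device would obtain from stabilizer measurements, then uses a lookup table to decide which qubit to flip back. The function names `measure_syndrome`, `decode_and_correct` and `logical_error_rate` are hypothetical, and only bit-flip errors are modeled.

```python
import random

# Toy model: 3-qubit bit-flip repetition code, simulated classically.
# Only bit-flip (X) errors are modeled; real QEC must also handle phase errors.

def measure_syndrome(bits):
    # Parities of neighboring pairs, i.e. what Z0Z1 and Z1Z2 stabilizer
    # measurements would report without reading out the logical value.
    return (bits[0] ^ bits[1], bits[1] ^ bits[2])

# Lookup-table decoder: maps each syndrome to the single qubit most likely flipped.
DECODER_TABLE = {
    (0, 0): None,  # no error detected
    (1, 0): 0,     # only the first parity fails -> qubit 0 flipped
    (1, 1): 1,     # both parities fail -> middle qubit flipped
    (0, 1): 2,     # only the second parity fails -> qubit 2 flipped
}

def decode_and_correct(bits):
    """Apply the correction suggested by the decoder and return the new bit list."""
    flip = DECODER_TABLE[measure_syndrome(bits)]
    corrected = list(bits)
    if flip is not None:
        corrected[flip] ^= 1
    return corrected

def logical_error_rate(p, trials=100_000, seed=0):
    """Monte Carlo estimate of the logical error rate for physical flip probability p."""
    rng = random.Random(seed)
    failures = 0
    for _ in range(trials):
        # Logical 0 is encoded as 000; each physical bit flips independently with probability p.
        bits = [1 if rng.random() < p else 0 for _ in range(3)]
        if decode_and_correct(bits) != [0, 0, 0]:
            failures += 1
    return failures / trials

if __name__ == "__main__":
    print(decode_and_correct([0, 1, 0]))
    print(decode_and_correct([1, 1, 0]))
    print(logical_error_rate(0.05))
```

Calling `decode_and_correct([0, 1, 0])` returns `[0, 0, 0]`, while a double flip such as `[1, 1, 0]` is mis-corrected to `[1, 1, 1]`, which is exactly the case this code cannot protect against. A lookup table like this grows exponentially with the number of syndrome bits, which is one reason faster, more scalable decoders (such as matching-based or AI-based ones) are an active research area.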

A key goal of the NVAQC will be exploring how AI supercomputing can accelerate decoding. Studying how to co-locate quantum hardware within the center will enable the development of low-latency, parallelized and AI-enhanced decoders running on NVIDIA GB200 Grace Blackwell Superchips.

The NVAQC will also tackle other challenges in quantum error correction. QuEra will work with NVIDIA to accelerate its search for new, improved quantum error correction codes, assessing the performance of candidate codes through demanding simulations of complex quantum circuits.

“The NVAQC will be an essential tool for discovering, testing and refining new quantum error correction codes and decoders capable of bringing the whole industry closer to useful quantum computing,” said Mikhail Lukin, Joshua and Beth Friedman University Professor at Harvard and a codirector of the Harvard Quantum Initiative.

Developing Applications for Accelerated Quantum Supercomputers

Most useful quantum algorithms draw on classical and quantum computing resources alike, ultimately requiring an accelerated quantum supercomputer that unifies both kinds of hardware.

For example, the output of classical supercomputers is often needed to prime quantum computations. The NVAQC provides the heterogeneous compute infrastructure needed for research on developing and improving such hybrid algorithms.

New AI-based compilation techniques, with the potential to reduce the runtime of all quantum algorithms, will also be explored at the NVAQC, including through work with Quantinuum. Quantinuum will build on its previous integration work with NVIDIA, offering its hardware and emulators through the NVIDIA CUDA-Q platform. Users of CUDA-Q are currently offered unrestricted access to Quantinuum's QNTM H1-1 hardware and emulator for 90 days.

“We’re excited to deepen our work with NVIDIA via this center,” said Rajeeb Hazra, president and CEO of Quantinuum. “By combining Quantinuum’s powerful quantum systems with NVIDIA’s cutting-edge accelerated computing, we’re pushing the boundaries of hybrid quantum-classical computing and unlocking exciting new possibilities.”

QPU Integration

Integrating quantum hardware with AI supercomputing is one of the major remaining hurdles on the path to running useful quantum hardware.

The requirements of such an integration can be extremely demanding. The decoding required by quantum error correction can only function if data from millions of qubits can be sent between quantum and classical hardware at ultralow latencies.

Quantum Machines will work with NVIDIA at the NVAQC to develop and hone new controller technologies supporting rapid, high-bandwidth interfaces between quantum processors and GB200 superchips.

“We’re excited to see NVIDIA’s growing commitment to accelerating the realization of useful quantum computers, providing researchers with the most advanced infrastructure to push the boundaries of quantum-classical computing,” said Itamar Sivan, CEO of Quantum Machines.

Key to integrating quantum and classical hardware is a platform that lets researchers and developers quickly shift context between these two disparate computing paradigms within a single application. The NVIDIA CUDA-Q platform will be the entry point for researchers to harness the NVAQC’s quantum-classical integration.

Building on tools like NVIDIA DGX Quantum — a reference architecture for integrating quantum and classical hardware — and CUDA-Q, the NVAQC is set to be an epicenter for next-generation developments in quantum computing, seeding the evolution of qubits into impactful quantum computers.

Learn more about NVIDIA quantum computing.

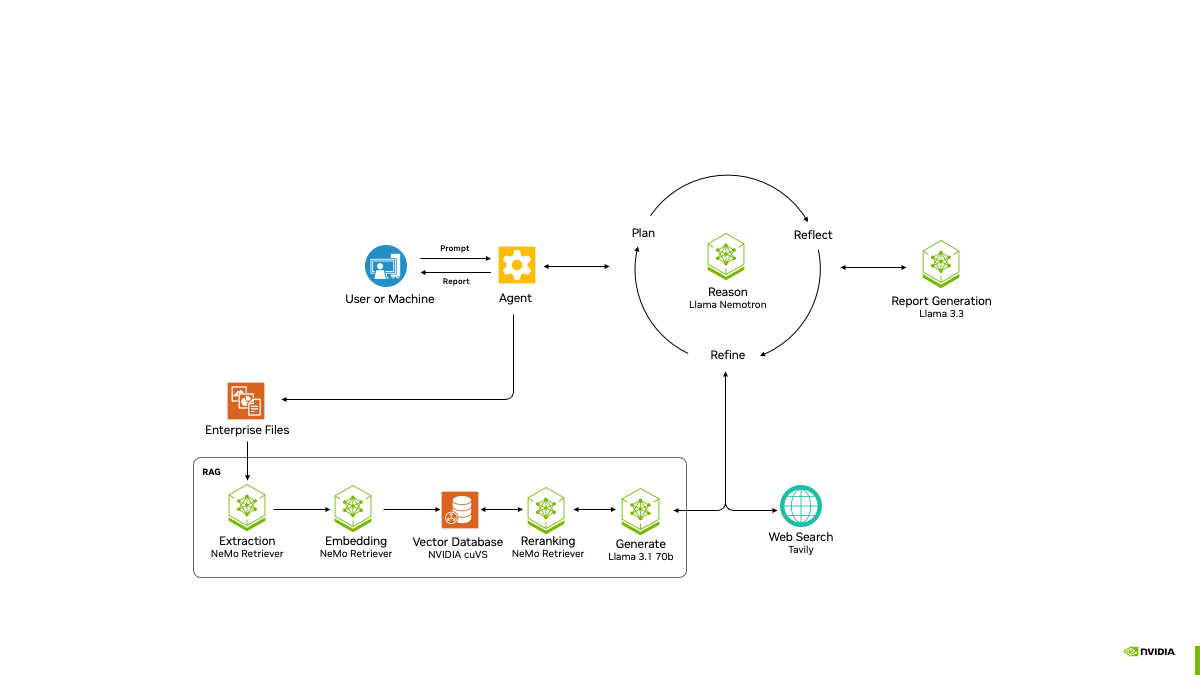

The NVIDIA-Certified Storage designation is a prerequisite for partners developing agentic AI infrastructure solutions built on the NVIDIA AI Data Platform. Each of these NVIDIA-Certified Storage partners will deliver customized AI data platforms, in collaboration with NVIDIA, that can harness enterprise data to reason and respond to complex queries.
