There is a gap between finding a first-order stationary point (FOSP) and a second-order stationary point (SOSP) under differential privacy constraints, and it remains unclear whether privately finding an SOSP is more challenging than finding an FOSP. Specifically, Ganesh et al. (2023) claimed that an $\alpha$-SOSP can be found with $\alpha=\tilde{O}\left(\frac{1}{n^{1/3}}+\left(\frac{\sqrt{d}}{n\epsilon}\right)^{3/7}\right)$, where $n$ is the dataset size, $d$ is the dimension, and $\epsilon$ is the differential privacy parameter.

However, a recent analysis revealed an issue…

Apple Machine Learning Research

Controlling Language and Diffusion Models by Transporting Activations

Large generative models are becoming increasingly capable and more widely deployed to power production applications, but getting these models to produce exactly what’s desired can still be challenging. Fine-grained control over these models’ outputs is important to meet user expectations and to mitigate potential misuses, ensuring the models’ reliability and safety. To address these issues, Apple machine learning researchers have developed a new technique that is modality-agnostic and provides fine-grained control over the model’s behavior with negligible computational overhead, while…

Apple Machine Learning Research

Ferret-UI 2: Mastering Universal User Interface Understanding Across Platforms

Building a generalist model for user interface (UI) understanding is challenging due to various foundational issues, such as platform diversity, resolution variation, and data limitation. In this paper, we introduce Ferret-UI 2, a multimodal large language model (MLLM) designed for universal UI understanding across a wide range of platforms, including iPhone, Android, iPad, Webpage, and AppleTV. Building on the foundation of Ferret-UI, Ferret-UI 2 introduces three key innovations: support for multiple platform types, high-resolution perception through adaptive scaling, and advanced task…

Apple Machine Learning Research

Implement human-in-the-loop confirmation with Amazon Bedrock Agents

Agents are revolutionizing how businesses automate complex workflows and decision-making processes. Amazon Bedrock Agents helps you accelerate generative AI application development by orchestrating multi-step tasks. Agents use the reasoning capability of foundation models (FMs) to break down user-requested tasks into multiple steps. In addition, they use the developer-provided instruction to create an orchestration plan and then carry out the plan by invoking company APIs and accessing knowledge bases using Retrieval Augmented Generation (RAG) to provide an answer to the user’s request.

Building intelligent autonomous agents that effectively handle user queries requires careful planning and robust safeguards. Although FMs continue to improve, they can still produce incorrect outputs, and because agents are complex systems, errors can occur at multiple stages. For example, an agent might select the wrong tool or use correct tools with incorrect parameters. Although Amazon Bedrock agents can self-correct through their reasoning and action (ReAct) strategy, repeated tool execution might be acceptable for non-critical tasks but risky for business-critical operations, such as database modifications.

In these sensitive scenarios, human-in-the-loop (HITL) interaction is essential for successful AI agent deployments, encompassing multiple critical touchpoints between humans and automated systems. HITL can take many forms, from end-users approving actions and providing feedback, to subject matter experts reviewing responses offline and agents working alongside customer service representatives. The common thread is maintaining human oversight and using human intelligence to improve agent performance. This human involvement helps establish ground truth, validates agent responses before they go live, and enables continuous learning through feedback loops.

In this post, we focus on enabling end-users to approve actions and provide feedback using built-in Amazon Bedrock Agents features, specifically HITL patterns for safe and effective agent operations. We explore the patterns available using a Human Resources (HR) agent example that helps employees request time off. You can recreate the example manually or using the AWS Cloud Development Kit (AWS CDK) by following our GitHub repository. We show you what these methods look like from an application developer’s perspective while providing you with the overall idea behind the concepts. For the post, we apply user confirmation and return of control on Amazon Bedrock to achieve the human confirmation.

Amazon Bedrock Agents frameworks for human-in-the-loop confirmation

When implementing human validation in Amazon Bedrock Agents, developers have two primary frameworks at their disposal: user confirmation and return of control (ROC). These mechanisms, though serving similar oversight purposes, address different validation needs and operate at different levels of the agent’s workflow.

User confirmation provides a straightforward way to pause and validate specific actions before execution. With user confirmation, the developer receives information about the function (or API) and parameter values that an agent wants to use to complete a certain task. The developer can then expose this information to the user in the agentic application to collect a confirmation that the function should be executed before continuing the agent’s orchestration process.

With ROC, the agent provides the developer with the information about the task that it wants to execute and relies completely on the developer to execute the task. In this approach, the developer can not only validate the agent’s decision, but also contribute additional context and modify parameters during the agent’s execution process. ROC is configured at the action group level, so it covers multiple actions.

Let’s explore how each framework can be implemented and their specific use cases.

Autonomous agent execution: No human-in-the-loop

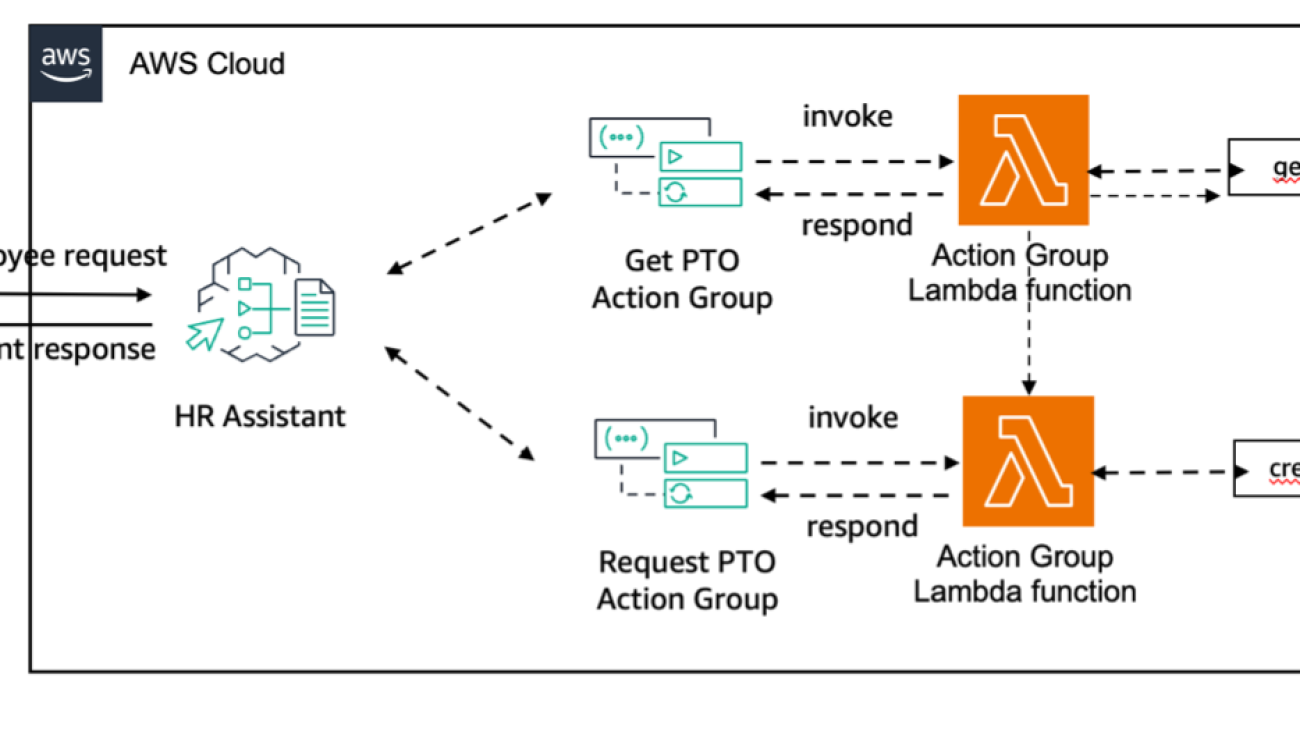

First, let’s demonstrate what a user experience might look like if your application doesn’t have a HITL. For that, let’s consider the following architecture.

In the preceding diagram, the employee interacts with the HR Assistant agent, which then invokes actions that can change important details about the employee’s paid time off (PTO). In this scenario, when an employee requests time off, the agent will automatically request the leave after confirming that enough PTO days are still available for the requesting employee.

The following screenshot shows a sample frontend UI for an Amazon Bedrock agent with functions to retrieve PTOs and request new ones.

In this interaction, the PTO request was submitted with no confirmation from the end-user. What if the user didn’t want to actually submit a request, but only check that it could be done? What if the date they provided was incorrect and had a typo? For any action that changes the state of a user’s PTO, it would provide a better user experience if the system asked for confirmation before actually making those changes.

Simple human validation: User confirmation

When requesting PTO, employees expect to be able to confirm their actions. This minimizes the execution of accidental requests and helps confirm that the agent understood the request and its parameters correctly.

For such scenarios, a Boolean confirmation is sufficient to continue execution of the agentic flow. Amazon Bedrock Agents offers an out-of-the-box user confirmation feature that enables developers to incorporate an extra layer of safety and control into their AI-driven workflows. This mechanism strikes a balance between automation and human oversight by making sure that critical actions are validated by users before execution. With user confirmation, developers can decide which tools can be executed automatically and which ones should be confirmed first.

For our example, reading the values for available PTO hours and listing the past PTO requests taken by an employee are non-critical operations that can be executed automatically. However, booking, updating, or canceling a PTO request requires changes to a database, so these actions should be confirmed before execution. Let’s change our agent architecture to include user confirmation, as shown in the following updated diagram.

In the updated architecture, when the employee interacts with the HR Assistant agent and the create_pto_request() action needs to be invoked, the agent will first request user confirmation before execution.

To enable user confirmation, agent developers can use the AWS Management Console, an SDK such as Boto3, or infrastructure as code (IaC) with AWS CloudFormation (see AWS::Bedrock::Agent Function). The user experience with user confirmation will look like the following screenshot.
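As a minimal sketch of the Boto3 route, the function definition passed in an action group's `functionSchema` can carry a `requireConfirmation` flag. The `create_pto_request` name comes from the example above; the parameter names (`start_date`, `number_of_days`), descriptions, and the placeholder IDs in the commented call are assumptions for illustration, so check them against the current Boto3 documentation before use.

```python
def build_pto_function(require_confirmation: bool) -> dict:
    """Build one function definition for an action group's functionSchema."""
    return {
        "name": "create_pto_request",
        "description": "Create a new paid time off request for an employee.",
        # Hypothetical parameters, chosen for illustration only.
        "parameters": {
            "start_date": {
                "type": "string",
                "description": "First day of leave in YYYY-MM-DD format.",
                "required": True,
            },
            "number_of_days": {
                "type": "integer",
                "description": "Number of PTO days requested.",
                "required": True,
            },
        },
        # Ask the end-user to confirm before the agent runs this function.
        "requireConfirmation": "ENABLED" if require_confirmation else "DISABLED",
    }


function_schema = {"functions": [build_pto_function(require_confirmation=True)]}

# With AWS credentials configured, the schema could be passed along these lines
# (AGENT_ID and LAMBDA_ARN are placeholders, not real values):
#
# import boto3
# boto3.client("bedrock-agent").create_agent_action_group(
#     agentId="AGENT_ID",
#     agentVersion="DRAFT",
#     actionGroupName="RequestPTOActionGroup",
#     actionGroupExecutor={"lambda": "LAMBDA_ARN"},
#     functionSchema=function_schema,
# )
```

Read-only functions, such as retrieving the PTO balance, would be built with `require_confirmation=False` so that they keep running automatically.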

In this interaction, the agent requests a confirmation from the end-user before it executes the action. The user can then choose whether to proceed with the time off request. Choosing Confirm lets the agent execute the action with the parameters displayed.

The following diagram illustrates the workflow for confirming the action.

In this scenario, the developer maps the way the confirmation is displayed to the user in the client-side UI and the agent validates the confirmation state before executing the action.
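From the application's side, this exchange can be sketched as follows: the agent's response stream carries a `returnControl` event describing the pending function call, and the application answers with the user's choice in the `sessionState` of the next `invoke_agent` call. The event below is a hand-written sample rather than captured output, and the field names follow our reading of the Amazon Bedrock runtime API, so treat the exact shapes as assumptions to verify.

```python
def build_confirmation_state(return_control: dict, confirmed: bool) -> dict:
    """Turn a returnControl event plus the user's choice into a sessionState."""
    results = []
    for item in return_control["invocationInputs"]:
        call = item["functionInvocationInput"]
        results.append(
            {
                "functionResult": {
                    "actionGroup": call["actionGroup"],
                    "function": call["function"],
                    # The agent checks this state before executing the action.
                    "confirmationState": "CONFIRM" if confirmed else "DENY",
                }
            }
        )
    return {
        "invocationId": return_control["invocationId"],
        "returnControlInvocationResults": results,
    }


# Hand-written sample event (hypothetical values).
sample_event = {
    "invocationId": "example-invocation-id",
    "invocationInputs": [
        {
            "functionInvocationInput": {
                "actionGroup": "RequestPTOActionGroup",
                "function": "create_pto_request",
                "parameters": [
                    {"name": "start_date", "type": "string", "value": "2025-03-03"},
                    {"name": "number_of_days", "type": "integer", "value": "3"},
                ],
            }
        }
    ],
}

session_state = build_confirmation_state(sample_event, confirmed=True)
```

The UI only needs to render the function name and parameters from the event and map the Confirm and Deny buttons to the `confirmed` flag.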

Customized human input: Return of control

User confirmation provides a simple yes/no validation, but some scenarios require a more nuanced human input. This is where ROC comes into play. ROC allows for a deeper level of human intervention, enabling users to modify parameters or provide additional context before an action is executed.

Let’s consider our HR agent example. When requesting PTO, a common business requirement is for employees to review and potentially edit their requests before submission. This expands upon the simple confirmation use case by allowing users to alter their original input before sending a request to the backend. Amazon Bedrock Agents offers an out-of-the-box solution to effectively parse user input and send it back in a structured format using ROC.

To implement ROC, we need to modify our agent architecture slightly, as shown in the following diagram.

In this architecture, ROC is implemented at the action group level. When an employee interacts with the HR Assistant agent, the system requires explicit confirmation of all function parameters under the “Request PTO Action Group” before executing actions within the action group.
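Because ROC is set on the action group rather than on a single function, the configuration difference can be sketched as a change to the action group executor. This is a minimal illustration that only builds the request arguments; the agent ID, action group name, and function list are placeholders, and the resulting dictionary is meant to be passed to the `bedrock-agent` client's `create_agent_action_group` call.

```python
from typing import Optional


def build_action_group_request(
    agent_id: str,
    use_return_of_control: bool,
    lambda_arn: Optional[str] = None,
) -> dict:
    """Build keyword arguments for creating the PTO action group."""
    if use_return_of_control:
        # The agent hands every action in this group back to the application.
        executor = {"customControl": "RETURN_CONTROL"}
    else:
        # The agent invokes the Lambda function itself.
        executor = {"lambda": lambda_arn}
    return {
        "agentId": agent_id,
        "agentVersion": "DRAFT",
        "actionGroupName": "RequestPTOActionGroup",  # placeholder name
        "actionGroupExecutor": executor,
        "functionSchema": {
            "functions": [
                {
                    "name": "create_pto_request",
                    "description": "Create a new paid time off request.",
                }
            ]
        },
    }


roc_request = build_action_group_request("AGENT_ID", use_return_of_control=True)
```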

With ROC, the user experience becomes more interactive and flexible. The following screenshot shows an example with our HR agent application.

Instead of executing the action automatically or just having a confirm/deny option, users are presented with a form to edit their intentions directly before processing. In this case, our user can realize they accidentally started their time off request on a Sunday and can edit this information before submission.

After the user reviews and potentially modifies the request, they can approve the parameters.

When implementing ROC, it’s crucial to understand that parameter validation occurs at two distinct points. The agent performs initial validation before returning control to the user (for example, checking available PTO balance), and the final execution relies on the application’s API validation layer.

For instance, if a user initially requests 3 days of PTO, the agent validates against their 5-day balance and returns control. However, if the user modifies the request to 100 days during ROC, the final validation and enforcement happen at the API level, not through the agent. This differs from confirmation flows where the agent directly executes API calls. In ROC, the agent’s role is to facilitate the interaction and return API responses, and the application maintains ultimate control over parameter validation and execution.
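The second validation point can be sketched as an application-side check that runs on the user-edited values just before the backend call. The `PtoRequest` type and the rules below are hypothetical; a real API layer would enforce whatever policies the HR system defines.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class PtoRequest:
    start_date: str
    number_of_days: int


def validate_pto_request(request: PtoRequest, available_days: int) -> List[str]:
    """Return a list of validation errors; an empty list means the request is valid."""
    errors = []
    if request.number_of_days <= 0:
        errors.append("number_of_days must be positive")
    if request.number_of_days > available_days:
        errors.append(
            f"requested {request.number_of_days} days "
            f"but only {available_days} are available"
        )
    return errors


# The agent validated the original 3-day request against a 5-day balance.
original_errors = validate_pto_request(PtoRequest("2025-03-03", 3), available_days=5)

# The user edited the request to 100 days during ROC, so the API layer must reject it.
edited_errors = validate_pto_request(PtoRequest("2025-03-03", 100), available_days=5)
```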

The core difference in the ROC approach is that the responsibility of processing the time off request is now handled by the application itself instead of being automatically handled by the agent. This allows for more complex workflows and greater human oversight.

To better understand the flow of information in a ROC scenario, let’s examine the following sequence diagram.

In this workflow, the agent prepares the action but doesn’t execute it. Instead, it returns control to the application, which then presents the editable information to the user. After the user reviews and potentially modifies the request, the application is responsible for executing the action with the final, user-approved parameters.
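The application's part of this workflow can be sketched in three steps: read the proposed parameters from the `returnControl` event, apply the user's edits, and send the backend's response back to the agent. The event and the backend response below are hand-written stand-ins, and the `responseBody` shape follows our reading of the Amazon Bedrock runtime API, so verify it before relying on it.

```python
import json


def parameters_to_dict(call: dict) -> dict:
    """Flatten the agent's proposed parameters into a name-to-value mapping."""
    return {p["name"]: p["value"] for p in call["parameters"]}


def build_roc_result(return_control: dict, call: dict, api_response: dict) -> dict:
    """Package the application's API response as the sessionState for the next turn."""
    return {
        "invocationId": return_control["invocationId"],
        "returnControlInvocationResults": [
            {
                "functionResult": {
                    "actionGroup": call["actionGroup"],
                    "function": call["function"],
                    "responseBody": {"TEXT": {"body": json.dumps(api_response)}},
                }
            }
        ],
    }


# Hand-written sample event (hypothetical values); 2025-03-02 is a Sunday.
sample_event = {
    "invocationId": "example-invocation-id",
    "invocationInputs": [
        {
            "functionInvocationInput": {
                "actionGroup": "RequestPTOActionGroup",
                "function": "create_pto_request",
                "parameters": [
                    {"name": "start_date", "type": "string", "value": "2025-03-02"},
                    {"name": "number_of_days", "type": "integer", "value": "3"},
                ],
            }
        }
    ],
}

call = sample_event["invocationInputs"][0]["functionInvocationInput"]
proposed = parameters_to_dict(call)

# The user moves the start date to Monday in the edit form.
final = {**proposed, "start_date": "2025-03-03"}

# Stand-in for the real backend call made by the application.
api_response = {"status": "submitted", **final}
session_state = build_roc_result(sample_event, call, api_response)
```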

This approach provides several benefits:

- Enhanced accuracy – Users can correct misunderstandings or errors in the agent’s interpretation of their request

- Flexibility – It allows for last-minute changes or additions to the request

- User empowerment – It gives users more control over the final action, increasing trust in the system

- Compliance – In regulated industries, this level of human oversight can be crucial for adhering to legal or policy requirements

Implementing ROC requires more development effort compared to user confirmation, because it involves creating UIs for editing and handling the execution of actions within the application. However, for scenarios where precision and user control are paramount, the additional complexity is often justified.

Conclusion

In this post, we explored two primary frameworks for implementing human validation in Amazon Bedrock Agents: user confirmation and return of control. Although these mechanisms serve similar oversight purposes, they address different validation needs and operate at distinct levels of the agent’s workflow. User confirmation provides a straightforward Boolean validation, allowing users to approve or reject specific actions before execution. This method is ideal for scenarios where a simple yes/no decision is sufficient to promote safety and accuracy.

ROC offers a more nuanced approach, enabling users to modify parameters and provide additional context before action execution. This framework is particularly useful in complex scenarios where users need to change the agent’s decisions.

Both methods contribute to a robust HITL approach, providing an essential layer of human validation to the agentic application.

User confirmation and ROC are just two aspects of the broader HITL paradigm in AI agent deployments. In future posts, we will address other crucial use cases for HITL interactions with agents.

To get started creating your own agentic application with HITL validation, we encourage you to explore the HR example discussed in this post. You can find the complete code and implementation details in our GitHub repository.

About the Authors

Clement Perrot is a Senior Solutions Architect and AI/ML Specialist at AWS, where he helps early-stage startups build and implement AI solutions on the AWS platform. In his role, he architects large-scale GenAI solutions, guides startups in implementing LLM-based applications, and drives the technical adoption of AWS GenAI services globally. He collaborates with field teams on complex customer implementations and authors technical content to enable AWS GenAI adoption. Prior to AWS, Clement founded two successful startups that were acquired, and was recognized with an Inc 30 under 30 award.

Ryan Sachs is a Solutions Architect at AWS, specializing in GenAI application development. Ryan has a background in developing web/mobile applications at companies large and small through REST APIs. Ryan helps early-stage companies solve their business problems by integrating Generative AI technologies into their existing architectures.

Maira Ladeira Tanke is a Tech Lead for Agentic workloads in Amazon Bedrock at AWS, where she enables customers on their journey to develop autonomous AI systems. With over 10 years of experience in AI/ML, Maira partners with enterprise customers to accelerate the adoption of agentic applications using Amazon Bedrock, helping organizations harness the power of foundation models to drive innovation and business transformation. In her free time, Maira enjoys traveling, playing with her cat, and spending time with her family someplace warm.

Mark Roy is a Principal Machine Learning Architect for AWS, helping customers design and build AI/ML solutions. Mark’s work covers a wide range of ML use cases, with a primary interest in computer vision, deep learning, and scaling ML across the enterprise. He has helped companies in many industries, including insurance, financial services, media and entertainment, healthcare, utilities, and manufacturing. Mark holds six AWS Certifications, including the ML Specialty Certification. Prior to joining AWS, Mark was an architect, developer, and technology leader for over 25 years, including 19 years in financial services.

Copilot Arena: A Platform for Code LLM Evaluation in the Wild

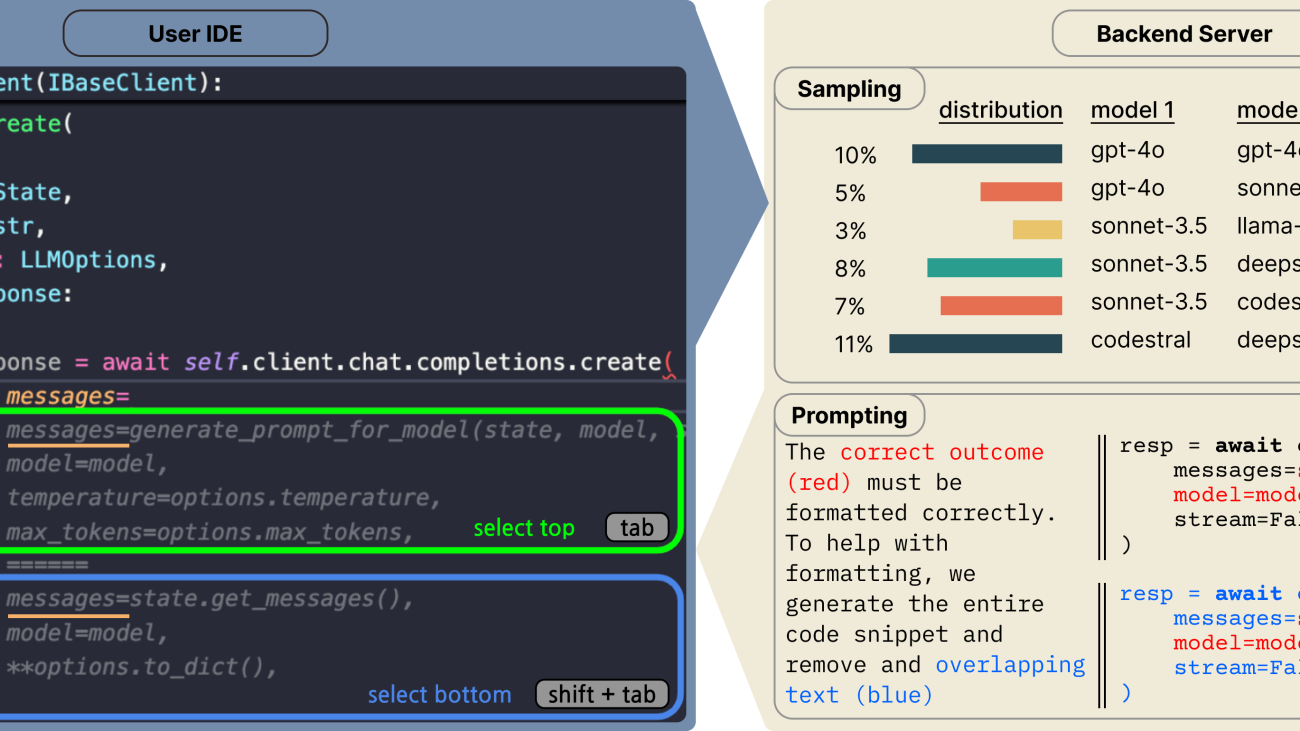

Figure 1. Copilot Arena is a VSCode extension that collects human preferences of code directly from developers.

As model capabilities improve, large language models (LLMs) are increasingly integrated into user environments and workflows. In particular, software developers code with LLM-powered tools in integrated development environments such as VS Code, IntelliJ, or Eclipse. While these tools are increasingly used in practice, current LLM evaluations struggle to capture how users interact with these tools in real environments, as they are often limited to short user studies, only consider simple programming tasks as opposed to real-world systems, or rely on web-based platforms removed from development environments.

To address these limitations, we introduce Copilot Arena, an app designed to evaluate LLMs in real-world settings by collecting preferences directly in a developer’s actual workflow. Copilot Arena is a Visual Studio Code extension that provides developers with code completions, akin to the type of support provided by GitHub Copilot. Thus far, over 11,000 users have downloaded Copilot Arena, and the tool has served over 100K completions and accumulated over 25,000 code completion battles. The battles form a live leaderboard on the LMArena website. Since its launch, Copilot Arena has also been used to evaluate two new code completion models prior to their release: a new Codestral model from Mistral AI and Mercury Coder from InceptionAI.

In this blog post, we discuss how we designed and deployed Copilot Arena. We also highlight how Copilot Arena provides new insights into developer code preferences.

Copilot Arena System Design

To collect user preferences, Copilot Arena presents a novel interface that shows users paired code completions from two different LLMs, which are determined based on a sampling strategy that mitigates latency while preserving coverage across model comparisons. Additionally, we devise a prompting scheme that allows a diverse set of models to perform code completions with high fidelity. Figure 1 gives an overview of this workflow. We describe each component below:

User Interface: Copilot Arena allows users to select between pairs of code completions from different LLMs. User selections allow us to better understand developer preferences between LLMs. To avoid interrupting user workflows, voting is designed to be seamless—users use keyboard shortcuts to quickly accept code completions.

Sampling model pairs: We explore a sampling strategy to minimize the experienced latency. Since our interface shows two code completions together, the slowest completion determines the latency. We capture each model’s latency as a log-normal distribution and tune a temperature parameter to interpolate between a latency-optimized distribution and a uniform distribution, observing a decrease in median experienced latency by 33% (from 1.61 to 1.07 seconds) compared to a uniform distribution.
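A minimal sketch of such a sampler follows. The post does not give the exact formula, so this example assumes a softmax over the negative expected latency of each pair, where a pair's latency is the maximum of two log-normal draws. The model names and log-normal parameters are invented for illustration.

```python
import itertools
import math
import random


def expected_pair_latency(model_a, model_b, rng, samples=2000):
    """Monte Carlo estimate of E[max(latency_a, latency_b)] for log-normal latencies."""
    total = 0.0
    for _ in range(samples):
        total += max(rng.lognormvariate(*model_a), rng.lognormvariate(*model_b))
    return total / samples


def pair_probabilities(latency_params, temperature, seed=0):
    """Sampling distribution over model pairs, controlled by a temperature."""
    rng = random.Random(seed)
    pairs = list(itertools.combinations(sorted(latency_params), 2))
    costs = [
        expected_pair_latency(latency_params[a], latency_params[b], rng)
        for a, b in pairs
    ]
    # Low temperature favors fast pairs; high temperature approaches uniform.
    lowest = min(costs)
    weights = [math.exp(-(cost - lowest) / temperature) for cost in costs]
    total = sum(weights)
    return {pair: weight / total for pair, weight in zip(pairs, weights)}


# Hypothetical (mu, sigma) parameters of each model's log-normal latency in seconds.
latency_params = {
    "fast": (math.log(0.5), 0.3),
    "medium": (math.log(1.0), 0.3),
    "slow": (math.log(2.0), 0.3),
}

latency_optimized = pair_probabilities(latency_params, temperature=0.1)
near_uniform = pair_probabilities(latency_params, temperature=1e6)
```

Intermediate temperatures trade latency against coverage: slower models are still sampled, only less often.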

Prompting for code completions: During development, models need to “fill in the middle”, where code needs to be generated based on both the current prefix and suffix. While some models, such as DeepSeek and Codestral, are designed to fill in the middle, many chat models are not and require additional prompting. To accomplish this, we allow the model to generate code snippets, which is a more natural format, and then post-process them into a FiM completion. Our approach is as follows: in addition to the same prompt templates above, the models are provided with instructions to begin by re-outputting a portion of the prefix and similarly end with a portion of the suffix. We then match portions of the output code in the input and delete the repeated code. This simple prompting trick allows chat models to perform code completions with high success (Figure 2).
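The post-processing step can be sketched as trimming two overlaps: the prefix text the model repeated at the start of its snippet, and the suffix text it repeated at the end. This is a simplified illustration based on exact string matching, not the extension's actual implementation, and the function names are our own.

```python
def longest_overlap(left: str, right: str) -> int:
    """Length of the longest suffix of `left` that is also a prefix of `right`."""
    for size in range(min(len(left), len(right)), 0, -1):
        if left.endswith(right[:size]):
            return size
    return 0


def to_fim_completion(prefix: str, suffix: str, snippet: str) -> str:
    """Strip repeated prefix and suffix text so only the infilled code remains."""
    start = longest_overlap(prefix, snippet)  # prefix text repeated at the start
    trimmed = snippet[start:]
    end = longest_overlap(trimmed, suffix)  # suffix text repeated at the end
    return trimmed[: len(trimmed) - end]


prefix = "def add(a, b):\n    "
suffix = "\n\nprint(add(1, 2))\n"
# A chat model asked to re-output part of the prefix and suffix around its code.
snippet = "def add(a, b):\n    return a + b\n\nprint(add(1, 2))"

completion = to_fim_completion(prefix, suffix, snippet)
```

Exact matching can remove a character that only coincidentally matches the suffix, so a production version would likely require a minimum overlap length.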

Deployment

We deploy Copilot Arena as a free extension available on the VSCode extension store. During deployment, we log user judgments and latency for model responses, along with the user’s input and completion. Given the sensitive nature of programming, users can restrict our access to their data. Depending on privacy settings, we also collect the user’s code context and model responses.

As is standard in other work on pairwise preference evaluation (e.g., Chatbot Arena), we apply a Bradley-Terry (BT) model to estimate the relative strengths of each model. We bootstrap the battles in the BT calculation to construct a 95% confidence interval for the rankings, which are used to create a leaderboard that ranks all models, where each model’s rank is determined by which other models’ lower bounds fall below its upper bound. We host a live leaderboard of model rankings at lmarena.ai (Figure 3).
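As a rough sketch of this procedure, the example below fits BT strengths with the classic minorization-maximization update and bootstraps the battles to get percentile intervals. The battle counts are invented, and the fitting method is our choice for a dependency-free illustration; the post does not say which solver the leaderboard uses.

```python
import random


def fit_bradley_terry(battles, models, iterations=200):
    """Estimate BT strengths from (winner, loser) pairs via MM updates."""
    wins = {m: 0 for m in models}
    games = {}
    for winner, loser in battles:
        wins[winner] += 1
        key = frozenset((winner, loser))
        games[key] = games.get(key, 0) + 1
    strength = {m: 1.0 for m in models}
    for _ in range(iterations):
        updated = {}
        for m in models:
            denom = 0.0
            for key, count in games.items():
                if m in key:
                    (other,) = key - {m}
                    denom += count / (strength[m] + strength[other])
            # A small floor keeps a model with zero wins from collapsing to 0.
            updated[m] = max(wins[m], 1e-3) / denom if denom else strength[m]
        total = sum(updated.values())
        strength = {m: v * len(models) / total for m, v in updated.items()}
    return strength


def bootstrap_intervals(battles, models, rounds=200, seed=0):
    """95% percentile intervals from resampling battles with replacement."""
    rng = random.Random(seed)
    samples = {m: [] for m in models}
    for _ in range(rounds):
        resample = [rng.choice(battles) for _ in battles]
        fit = fit_bradley_terry(resample, models, iterations=50)
        for m in models:
            samples[m].append(fit[m])
    intervals = {}
    for m, values in samples.items():
        values.sort()
        intervals[m] = (values[int(0.025 * rounds)], values[int(0.975 * rounds) - 1])
    return intervals


# Invented battles: A usually beats B, B usually beats C, A usually beats C.
models = ["A", "B", "C"]
battles = (
    [("A", "B")] * 70 + [("B", "A")] * 30
    + [("B", "C")] * 70 + [("C", "B")] * 30
    + [("A", "C")] * 80 + [("C", "A")] * 20
)

strengths = fit_bradley_terry(battles, models)
intervals = bootstrap_intervals(battles, models)
```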

Findings

Comparison to prior datasets

We compare our leaderboard to existing evaluations, which encompass both live preference leaderboards with human feedback and static benchmarks (Figure 4). The static benchmarks we compare against are LiveBench, BigCodeBench, and LiveCodeBench, which evaluate models’ code generation abilities on a variety of Python tasks and continue to be maintained with new model releases. We also compare to Chatbot Arena and their coding-specific subset, which are human preferences of chat responses collected through a web platform.

We find a low correlation (r ≤ 0.1) with most static benchmarks, but a relatively higher correlation (Spearman’s rank correlation (r) of 0.62) with Chatbot Arena (coding) and a similar correlation (r = 0.48) with Chatbot Arena (general). The stronger correlation with human preference evaluations compared to static benchmarks likely indicates that human feedback captures distinct aspects of model performance that static benchmarks fail to measure. We notice that smaller models tend to overperform (e.g., GPT-4o mini and Qwen-2.5-Coder 32B), particularly in static benchmarks. We attribute these differences to the unique distribution of data and tasks that Copilot Arena evaluates over, which we explore in more detail next.
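For readers unfamiliar with the statistic, Spearman's rank correlation compares the order in which two leaderboards place the same models rather than their raw scores. The sketch below uses the closed-form formula for data without ties; the scores are invented and do not come from any of the leaderboards discussed here.

```python
def ranks(values):
    """Rank values from highest (rank 1) to lowest; assumes no ties."""
    order = sorted(range(len(values)), key=lambda i: values[i], reverse=True)
    result = [0] * len(values)
    for position, index in enumerate(order, start=1):
        result[index] = position
    return result


def spearman(xs, ys):
    """Spearman's rank correlation for two equally long lists without ties."""
    n = len(xs)
    squared_diff = sum((a - b) ** 2 for a, b in zip(ranks(xs), ranks(ys)))
    return 1 - 6 * squared_diff / (n * (n * n - 1))


# Invented scores for the same five models on two hypothetical leaderboards.
leaderboard_a = [1210, 1185, 1150, 1120, 1090]
leaderboard_b = [71.0, 64.5, 68.2, 55.1, 58.3]

correlation = spearman(leaderboard_a, leaderboard_b)
```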

In comparison to prior approaches, evaluating models in real user workflows leads to a diverse data distribution in terms of programming and natural languages, tasks, and code structures (Figure 5):

- Programming and natural language: While the plurality of Copilot Arena users write in English (36%) and Python (49%), we also identify 24 different natural languages and 103 programming languages, which is comparable to Chatbot Arena (general) and benchmarks focused on multilingual generation. In contrast, static benchmarks tend to focus on questions written solely in Python and English.

- Downstream tasks: Existing benchmarks tend to source problems from coding competitions, handwritten programming challenges, or from a curated set of GitHub repositories. In contrast, Copilot Arena users are working on a diverse set of realistic tasks, including but not limited to frontend components, backend logic, and ML pipelines.

- Code structures and context lengths: Most coding benchmarks follow specific structures, which means that most benchmarks have relatively short context lengths. Similarly, Chatbot Arena focuses on natural language input collected from chat conversations, with many prompts not including any code context (e.g., 40% of Chatbot Arena’s coding tasks contain code context and only 2.6% focus on infilling). Unlike any existing evaluation, Copilot Arena is structurally diverse with significantly longer inputs.

Insights into user preferences

- Downstream tasks significantly affect win rate, while programming languages have little effect: Changing task type significantly affects relative model performance, which may indicate that certain models are overexposed to competition-style algorithmic coding problems. On the other hand, the effect of the programming language on win-rates was remarkably small, meaning that models that perform well on Python will likely perform well on another language. We hypothesize that this is because of the inherent similarities between programming languages, and learning one improves performance in another, aligning with trends reported in prior work.

- Smaller models may overfit to data similar to static benchmarks, while the performance of larger models is mixed: Existing benchmarks (e.g., those in Figure 4) primarily evaluate models on Python algorithmic problems with short context. However, we notice that Qwen-2.5 Coder performs noticeably worse on frontend/backend tasks, longer contexts, and non-Python settings. We observe similar trends for the two other small models (Gemini Flash and GPT-4o mini). We hypothesize that overexposure may be particularly problematic for smaller models. On the other hand, performance amongst larger models is mixed.

Conclusion

While Copilot Arena represents a shift in the right direction for LLM evaluation, providing more grounded and realistic evaluations, there is still significant work to be done to fully represent all developer workflows. For example, open directions include extending Copilot Arena to account for interface differences from production tools like GitHub Copilot and tackling privacy considerations that limit data sharing. Despite these constraints, our platform reveals that evaluating coding LLMs in realistic environments yields rankings significantly different from static benchmarks or chat-based evaluations and highlights the importance of testing AI assistants with real users on real tasks. We’ve open-sourced Copilot Arena to encourage the open source community to include more nuanced feedback mechanisms, code trajectory metrics, and additional interaction modes.

If you think this blog post is useful for your work, please consider citing it.

@misc{chi2025copilotarenaplatformcode,
  title={Copilot Arena: A Platform for Code LLM Evaluation in the Wild},
  author={Wayne Chi and Valerie Chen and Anastasios Nikolas Angelopoulos and Wei-Lin Chiang and Aditya Mittal and Naman Jain and Tianjun Zhang and Ion Stoica and Chris Donahue and Ameet Talwalkar},
  year={2025},
  eprint={2502.09328},
  archivePrefix={arXiv},
  primaryClass={cs.SE},
  url={https://arxiv.org/abs/2502.09328},
}

Boost team productivity with Amazon Q Business Insights

Employee productivity is a critical factor in maintaining a competitive advantage. Amazon Q Business offers a unique opportunity to enhance workforce efficiency by providing AI-powered assistance that can significantly reduce the time spent searching for information, generating content, and completing routine tasks. Amazon Q Business is a fully managed, generative AI-powered assistant that lets you build interactive chat applications using your enterprise data, generating answers based on your data or large language model (LLM) knowledge. At the core of this capability are native data source connectors that seamlessly integrate and index content from multiple data sources like Salesforce, Jira, and SharePoint into a unified index.

Key benefits for organizations include:

- Simplified deployment and management – Provides a ready-to-use web experience with no machine learning (ML) infrastructure to maintain or manage

- Access controls – Makes sure users only access content they have permission to view

- Accurate query responses – Delivers precise answers with source citations, analyzing enterprise data

- Privacy and control – Offers comprehensive guardrails and fine-grained access controls

- Broad connectivity – Supports over 45 native data source connectors (at the time of writing), and provides the ability to create custom connectors

Data privacy and the protection of intellectual property are paramount concerns for most organizations. At Amazon, “Security is Job Zero,” which is why Amazon Q Business is designed with these critical considerations in mind. Your data is not used for training purposes, and the answers provided by Amazon Q Business are based solely on the data users have access to. This makes sure that enterprises can quickly find answers to questions, provide summaries, generate content, and complete tasks across various use cases with complete confidence in data security. Amazon Q Business supports encryption in transit and at rest, allowing end-users to use their own encryption keys for added security. This robust security framework enables end-users to receive immediate, permissions-aware responses from enterprise data sources with citations, helping streamline workplace tasks while maintaining the highest standards of data privacy and protection.

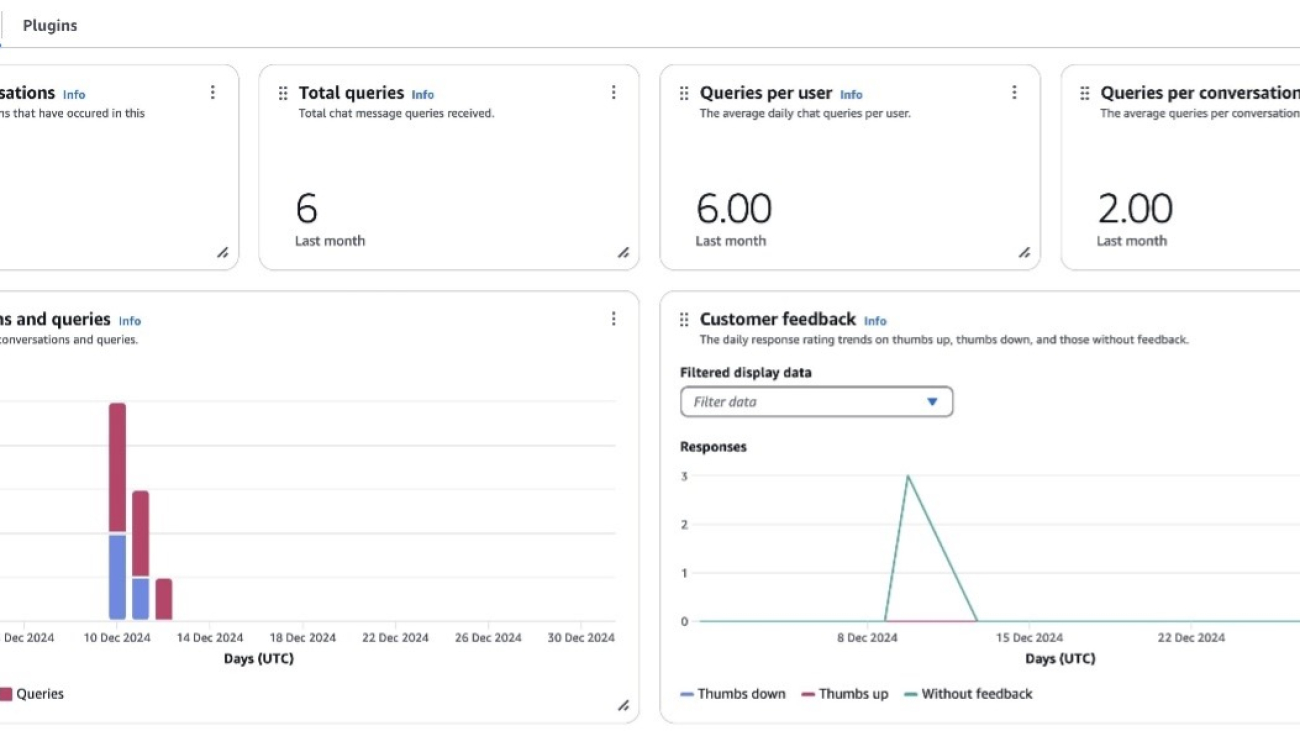

Amazon Q Business Insights provides administrators with details about the utilization and effectiveness of their AI-powered applications. By monitoring utilization metrics, organizations can quantify the actual productivity gains achieved with Amazon Q Business. Understanding how employees interact with and use Amazon Q Business becomes crucial for measuring its return on investment and identifying potential areas for further optimization. Tracking metrics such as time saved and number of queries resolved can provide tangible evidence of the service’s impact on overall workplace productivity. It’s essential for admins to periodically review these metrics to understand how users are engaging with Amazon Q Business and identify potential areas of improvement.

The dashboard enables administrators to track user interactions, including the helpfulness of generated answers through user ratings. By visualizing this feedback, admins can pinpoint instances where users aren’t receiving satisfactory responses. With Amazon Q Business Insights, administrators can diagnose potential issues such as unclear user prompts, misconfigured topics and guardrails, insufficient metadata boosters, or inadequate data source configurations. This comprehensive analytics approach empowers organizations to continuously refine their Amazon Q Business implementation, making sure users receive the most relevant and helpful AI-assisted support.

In this post, we explore Amazon Q Business Insights capabilities and its importance for organizations. We begin with an overview of the available metrics and how they can be used for measuring user engagement and system effectiveness. Then we provide instructions for accessing and navigating this dashboard. Finally, we demonstrate how to integrate Amazon Q Business logs with Amazon CloudWatch, enabling deeper insights into user interaction patterns and identifying areas for improvement. This integration can empower administrators to make data-driven decisions for optimizing their Amazon Q Business implementations and maximizing return on investment (ROI).

Amazon Q Business and Amazon Q Apps analytics dashboards

In this section, we discuss the Amazon Q Business and Amazon Q Apps analytics dashboards.

Overview of key metrics

Amazon Q Business Insights (see the following screenshot) offers a comprehensive set of metrics that provide valuable insights into user engagement and system performance. Key metrics include Total queries and Total conversations, which give an overall picture of system usage. More specific metrics such as Queries per conversation and Queries per user offer deeper insights into user interaction patterns and the complexity of inquiries. The Number of conversations and Number of queries metrics help administrators track adoption and usage trends over time.

The dashboard also provides critical information on system effectiveness through metrics like Unsuccessful query responses and Thumbs down reasons (see the following screenshot), which highlight areas where the AI assistant might be struggling to provide adequate answers. This is complemented by the end-user feedback metric, which includes user ratings and response effectiveness reasons. These metrics are particularly valuable for identifying specific issues users are encountering and areas where the system needs improvement.

Complementing the main dashboard, Amazon Q Business provides a dedicated analytics dashboard for Amazon Q Apps that offers detailed insights into application creation, usage, and adoption patterns. The dashboard tracks user engagement through metrics like:

- Active users (average unique daily users interacting with Amazon Q Apps)

- Active creators (average unique daily users creating or updating Amazon Q Apps)

Application metrics include:

- Total Q Apps (average daily total)

- Active Q Apps (average number of applications run or updated daily)

These metrics help provide a clear picture of application utilization.

The dashboard also features several trend analyses that help administrators understand usage patterns over time:

- Q App participants trend shows the relationship between daily active users and creators

- Q App trend displays the correlation between total applications created and active applications

- Total Q App runs trend and Published Q App trend track daily execution rates and publication patterns, respectively

These metrics enable administrators to evaluate the performance and adoption of Amazon Q Apps within their organization, helping identify successful implementation patterns and areas needing attention.

These comprehensive metrics are crucial for organizations to optimize their Amazon Q Business implementation and maximize ROI. By analyzing trends in Total queries, Total conversations, and user-specific metrics, administrators can gauge adoption rates and identify potential areas for user training or system improvements. The Unsuccessful query responses and Customer feedback metrics help pinpoint gaps in the knowledge base or areas where the system struggles to provide satisfactory answers. By using these metrics, organizations can make data-driven decisions to enhance the effectiveness of their AI-powered assistant, ultimately leading to improved productivity and user experience across various use cases within the enterprise.

How to access Amazon Q Business Insights dashboards

As an Amazon Q admin, you can view the dashboards on the Amazon Q Business console. You can view the metrics in these dashboards over different pre-selected time intervals. They are available at no additional charge in AWS Regions where the Amazon Q Business service is offered.

To view these dashboards on the Amazon Q Business console, you choose your application environment and navigate to the Insights page. For more details, see Viewing the analytics dashboards.

The following screenshot illustrates how to access the dashboards for Amazon Q Business applications and Amazon Q Apps Insights.

Monitor Amazon Q Business user conversations

In addition to the Amazon Q Business and Amazon Q Apps dashboards, you can configure Amazon Q Business to deliver user conversations and response feedback as logs for you to analyze. These logs can be delivered to multiple destinations, such as Amazon CloudWatch Logs, Amazon Simple Storage Service (Amazon S3), or Amazon Data Firehose.

The following diagram depicts the flow of user conversation and feedback responses from Amazon Q Business to Amazon S3. These logs are then queryable using Amazon Athena.

Prerequisites

To set up CloudWatch Logs for Amazon Q Business, make sure you have the appropriate permissions for the intended destination. Refer to Monitoring Amazon Q Business and Q Apps for more details.

Set up log delivery with CloudWatch as a destination

Complete the following steps to set up log delivery with CloudWatch as the destination:

- Open the Amazon Q Business console and sign in to your account.

- In Applications, choose the name of your application environment.

- In the navigation pane, choose Enhancements and choose Admin Controls and Guardrails.

- In Log delivery, choose Add and select the option To Amazon CloudWatch Logs.

- For Destination log group, enter the log group where the logs will be stored.

Log groups prefixed with /aws/vendedlogs/ will be created automatically. Other log groups must be created prior to setting up a log delivery.

- To filter out sensitive or personally identifiable information (PII), choose Additional settings – optional and specify the fields to be logged, output format, and field delimiter.

If you want the users’ email recorded in your logs, it must be added explicitly as a field in Additional settings.

- Choose Add.

- Choose Enable logging to start streaming conversation and feedback data to your logging destination.

Set up log delivery with Amazon S3 as a destination

To use Amazon S3 as a log destination, you need an S3 bucket, and you must grant Amazon Q Business the appropriate permissions to write your logs to it. Then complete the following steps:

- Open the Amazon Q Business console and sign in to your account.

- In Applications, choose the name of your application environment.

- In the navigation pane, choose Enhancements and choose Admin Controls and Guardrails.

- In Log delivery, choose Add and select the option To Amazon S3.

- For Destination S3 bucket, enter your bucket.

- To filter out sensitive or PII data, choose Additional settings – optional and specify the fields to be logged, output format, and field delimiter.

If you want the users’ email recorded in your logs, it must be added explicitly as a field in Additional settings.

- Choose Add.

- Choose Enable logging to start streaming conversation and feedback data to your logging destination.

The logs are delivered to your S3 bucket with the following prefix:

AWSLogs/<your-aws-account-id>/AmazonQBusinessLogs/<your-aws-region>/<your-q-business-application--id>/year/month/day/hour/

The placeholders will be replaced with your AWS account, Region, and Amazon Q Business application identifier, respectively.
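As a rough illustration of how this prefix is composed, the following sketch builds the key prefix for one delivery hour. The function name and the sample identifiers are hypothetical, and the zero-padded, UTC-based year/month/day/hour layout is an assumption that the text above does not confirm; check an actual delivered object before relying on it.

```python
from datetime import datetime, timezone


def log_prefix(account_id: str, region: str, application_id: str, when: datetime) -> str:
    """Build the S3 key prefix for the hour that contains `when`."""
    # Assumption: date parts are zero-padded (YYYY/MM/DD/HH) and expressed in UTC.
    return (
        f"AWSLogs/{account_id}/AmazonQBusinessLogs/{region}/"
        f"{application_id}/{when:%Y/%m/%d/%H}/"
    )


# Hypothetical account, Region, and application identifier.
example = log_prefix(
    "111122223333",
    "us-east-1",
    "example-app-id",
    datetime(2025, 3, 7, 14, tzinfo=timezone.utc),
)
print(example)
```

A prefix built this way can be handy for narrowing an Athena partition or an S3 listing to a specific time window.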

Set up Data Firehose as a log destination

Amazon Q Business application event logs can also be streamed to Data Firehose as a destination. This can be used for real-time observability. We have excluded setup instructions for brevity.

To use Data Firehose as a log destination, you need to create a Firehose delivery stream (with Direct PUT enabled) and grant Amazon Q Business the appropriate permissions to write your logs to Data Firehose. For examples of AWS Identity and Access Management (IAM) policies that grant the required permissions for your specific logging destination, see Enable logging from AWS services.

Protecting sensitive data

You can prevent an AWS console user or group of users from viewing specific CloudWatch log groups, S3 buckets, or Firehose streams by applying specific deny statements in their IAM policies. In AWS policy evaluation, an explicit deny overrides any allow: if you explicitly deny an action, the deny takes precedence over allow statements. For more information, see Policy evaluation logic.
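To make the precedence rule concrete, here is a deliberately simplified sketch of the decision order: a matching Deny wins, otherwise a matching Allow grants access, otherwise the request is implicitly denied. It is not the real IAM evaluation engine (it ignores conditions, principals, permission boundaries, and case-insensitive action matching), and the account ID, log group names, and sample policy are hypothetical.

```python
from fnmatch import fnmatchcase


def is_allowed(statements, action, resource):
    """Simplified check: explicit Deny beats Allow; no match means implicit deny."""

    def matches(stmt):
        return any(fnmatchcase(action, a) for a in stmt["Action"]) and any(
            fnmatchcase(resource, r) for r in stmt["Resource"]
        )

    matching = [s for s in statements if matches(s)]
    if any(s["Effect"] == "Deny" for s in matching):
        return False  # an explicit deny always wins
    return any(s["Effect"] == "Allow" for s in matching)


# Hypothetical policy: broad read access to logs, except the Amazon Q Business log groups.
LOGS_ARN = "arn:aws:logs:us-east-1:111122223333:log-group:"
policy = [
    {"Effect": "Allow", "Action": ["logs:*"], "Resource": ["*"]},
    {
        "Effect": "Deny",
        "Action": ["logs:GetLogEvents", "logs:FilterLogEvents", "logs:StartQuery"],
        "Resource": [LOGS_ARN + "/aws/vendedlogs/qbusiness/*"],
    },
]

q_group = LOGS_ARN + "/aws/vendedlogs/qbusiness/application/EVENT_LOGS/example-app-id"
other_group = LOGS_ARN + "/aws/lambda/example-function"
print(is_allowed(policy, "logs:GetLogEvents", q_group))      # denied by the explicit Deny
print(is_allowed(policy, "logs:GetLogEvents", other_group))  # allowed by the broad Allow
```

The same pattern applies to S3 buckets and Firehose streams: keep the broad allow for day-to-day work and add a narrowly scoped deny for the resources that hold conversation logs.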

Real-world use cases

This section outlines several key use cases for Amazon Q Business Insights, demonstrating how you can use Amazon Q Business operational data to improve your operational posture and make sure Amazon Q Business meets your needs.

Measure ROI using Amazon Q Business Insights

The dashboards offered by Amazon Q Business Insights provide powerful metrics that help organizations quantify their ROI. Consider this common scenario: traditionally, employees spend countless hours searching through siloed documents, knowledge bases, and various repositories to find answers to their questions. This time-consuming process not only impacts productivity but also leads to significant operational costs. With the dashboards provided by Amazon Q Business Insights, administrators can now measure the actual impact of their investment by tracking key metrics such as total questions answered, total conversations, active users, and positive feedback rates. For instance, if an organization knows that it previously took employees an average of 3 minutes to find an answer in their documentation, and with Amazon Q Business this time is reduced to 20 seconds, they can calculate the time savings per query (2 minutes and 40 seconds). When the dashboard shows 1,000 successful queries per week, this translates to approximately 44 hours of productivity gained—time that employees can now dedicate to higher-value tasks. Organizations can then translate these productivity gains into tangible cost savings based on their specific business metrics.
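The arithmetic in this scenario can be reproduced with a few lines of Python. The function name is hypothetical, and the hourly cost used to convert time into money is an illustrative assumption, not a figure from this post.

```python
def hours_saved(queries: int, seconds_before: float, seconds_after: float) -> float:
    """Hours saved when each query takes `seconds_after` instead of `seconds_before`."""
    return queries * (seconds_before - seconds_after) / 3600


# Figures from the scenario: 3 minutes before, 20 seconds after, 1,000 queries per week.
weekly_hours = hours_saved(queries=1_000, seconds_before=180, seconds_after=20)
print(f"{weekly_hours:.1f} hours saved per week")

# Assumption: a fully loaded employee cost of 50 currency units per hour.
ASSUMED_HOURLY_COST = 50
print(f"{weekly_hours * ASSUMED_HOURLY_COST:,.0f} saved per week at the assumed hourly cost")
```

Swapping in your own query counts from the dashboard and your own before-and-after timings turns this into a minimal ROI calculator.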

Furthermore, the dashboard’s positive feedback rate metric helps validate the quality and accuracy of responses, making sure employees aren’t just getting answers, but reliable ones that help them do their jobs effectively. By analyzing these metrics over time—whether it’s over 24 hours, 7 days, or 30 days—organizations can demonstrate how Amazon Q Business is transforming their knowledge management approach from a fragmented, time-intensive process to an efficient, centralized system. This data-driven approach to measuring ROI not only justifies the investment but also helps identify areas where the service can be optimized for even greater returns.

Organizations looking to quantify financial benefits can develop their own ROI calculators tailored to their specific needs. By combining Amazon Q Business Insights metrics with their internal business variables, teams can create customized ROI models that reflect their unique operational context. Several reference calculators are publicly available online, ranging from basic templates to more sophisticated models, which can serve as a starting point for organizations to build their own ROI analysis tools. This approach enables leadership teams to demonstrate the tangible financial benefits of their Amazon Q Business investment and make data-driven decisions about scaling their implementation, based on their organization’s specific metrics and success criteria.

Enforce financial services compliance with Amazon Q Business analytics

Maintaining regulatory compliance while enabling productivity is a delicate balance. As organizations adopt AI-powered tools like Amazon Q Business, it’s crucial to implement proper controls and monitoring. Let’s explore how a financial services organization can use Amazon Q Business Insights capabilities and logging features to maintain compliance and protect against policy violations.

Consider this scenario: A large investment firm has adopted Amazon Q Business to help their financial advisors quickly access client information, investment policies, and regulatory documentation. However, the compliance team needs to make sure the system isn’t being used to circumvent trading restrictions, particularly around day trading activities that could violate SEC regulations and company policies.

Identify policy violations through Amazon Q Business logs

When the compliance team enables log delivery to CloudWatch with the user_email field selected, Amazon Q Business begins sending detailed event logs to CloudWatch. These logs are separated into two CloudWatch log streams:

- QBusiness/Chat/Message – Contains user interactions

- QBusiness/Chat/Feedback – Contains user feedback on responses

For example, the compliance team monitoring the logs might spot this concerning chat from Amazon Q Business:

The compliance team can automate this search by creating an alarm on CloudWatch Metrics Insights queries in CloudWatch.
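Independent of CloudWatch alarms, the same kind of search can be prototyped offline against exported log records. The sketch below is only an illustration: the record layout, the `message` field name, and the sample data are hypothetical, and the pattern covers just a few spellings of the restricted phrase.

```python
import re

# Matches "day trading", "day-trading", "daytrading", "day trade(s)", in any letter case.
RESTRICTED = re.compile(r"\bday[\s-]*trad(?:e|es|ing)\b", re.IGNORECASE)


def flag_messages(records):
    """Return the records whose message text mentions the restricted phrase."""
    return [r for r in records if RESTRICTED.search(r.get("message", ""))]


# Hypothetical exported records; real log entries will have a different shape.
sample_records = [
    {"user_email": "advisor1@anycompany.com", "message": "How can I start day trading for a client?"},
    {"user_email": "advisor2@anycompany.com", "message": "Summarize the Q3 investment policy."},
    {"user_email": "advisor3@anycompany.com", "message": "Is Day-Trading allowed on this account?"},
]

for record in flag_messages(sample_records):
    print(record["user_email"], "->", record["message"])
```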

Implement preventative controls

Upon identifying these attempts, the Amazon Q Business admin can implement several immediate controls within Amazon Q Business:

- Configure blocked phrases to make sure chat responses don’t include these words

- Configure topic-level controls with rules that customize how Amazon Q Business responds when a chat message matches a special topic

The following screenshot depicts configuring topic-level controls for the phrase “day trading.”

Using the previous topic-level controls, different variations of the phrase “day trading” will be blocked. The following screenshot represents a user entering variations of the phrase “day trading” and how Amazon Q Business blocks that phrase due to the topic-level control for the phrase.

By implementing monitoring and configuring guardrails, the investment firm can maintain its regulatory compliance while still allowing legitimate use of Amazon Q Business for approved activities. The combination of real-time monitoring through logs and preventive guardrails creates a robust defense against potential violations while maintaining detailed audit trails for regulatory requirements.

Analyze user feedback through the Amazon Q Business Insights dashboard

After log delivery has been set up, administrators can use the Amazon Q Business Insights dashboard to get a comprehensive view of user feedback. This dashboard provides valuable data about user experience and areas needing improvement through two key metric cards: Unsuccessful query responses and Thumbs down reasons. The Thumbs down reasons chart offers a detailed breakdown of user feedback, displaying the distribution and frequency of specific reasons why users found responses unhelpful. This granular feedback helps administrators identify patterns in user feedback, whether it’s due to incomplete information, inaccurate responses, or other factors.

Similarly, the Unsuccessful query responses chart distinguishes between queries that failed because answers weren’t found in the knowledge base vs. those blocked by guardrail settings. Both metrics allow administrators to drill down into specific queries through filtering options and detailed views, enabling them to investigate and address issues systematically. This feedback loop is crucial for continuous improvement, helping organizations refine their content, adjust guardrails, and enhance the overall effectiveness of their Amazon Q Business implementation.

To view a breakdown of unsuccessful query responses, follow these steps:

- Select your application on the Amazon Q Business console.

- Select Amazon Q Business insights under Insights.

- Go to the Unsuccessful query responses metrics card and choose View details to resolve issues.

A new page will open with two tabs: No answers found and Blocked queries.

- You can use these tabs to filter by response type. You can also filter by date using the date filter at the top.

- Choose any of the queries to view the Query chain.

This will give you more details and context on the conversation the user had when providing their feedback.

Analyze user feedback through CloudWatch logs

This use case focuses on identifying and analyzing unsatisfactory feedback from specific users in Amazon Q Business. After log delivery is enabled with the user_email field selected, the Amazon Q Business application sends event logs to the previously created CloudWatch log group. User chat interactions and feedback submissions generate events in the QBusiness/Chat/Message and QBusiness/Chat/Feedback log streams, respectively.

For example, suppose a user asks about their vacation policy and no answer is returned. The user can then choose the thumbs down icon and send feedback to the administrator.

The Send your feedback form provides the user the option to categorize the feedback and provide additional details for the administrator to review.

This feedback will be sent to the QBusiness/Chat/Feedback log stream for the administrator to later analyze. See the following example log entry:

By analyzing queries that result in unsatisfactory responses (thumbs down), administrators can take actions to improve answer quality, accuracy, and security. This feedback can help identify gaps in data sources. Patterns in feedback can indicate topics where users might benefit from extra training or guidance on effectively using Amazon Q Business.

To address issues identified through feedback analysis, administrators can take several actions:

- Configure metadata boosting to prioritize more accurate content in responses for queries that consistently receive negative feedback

- Refine guardrails and chat controls to better align with user expectations and organizational policies

- Develop targeted training or documentation to help users formulate more effective prompts, including prompt engineering techniques

- Analyze user prompts to identify potential risks and reinforce proper data handling practices

By monitoring the chat messages and which users are giving “thumbs up” or “thumbs down” responses for the associated prompts, administrators can gain insights into areas where the system might be underperforming, not meeting user expectations, or not complying with your organization’s security policies.

This use case is applicable to the other log delivery options, such as Amazon S3 and Data Firehose.

Group users getting the most unhelpful answers

For administrators seeking more granular insights beyond the standard dashboard, CloudWatch Logs Insights offers a powerful tool for deep-dive analysis of Amazon Q Business usage metrics. By using CloudWatch Logs Insights, administrators can create custom queries to extract and analyze detailed performance data. For instance, you can generate a sorted list of users experiencing the most unhelpful interactions, such as identifying which employees are consistently receiving unsatisfactory responses. A typical query might reveal patterns like “User A received 9 unhelpful answers in the last 4 weeks, User B received 5 unhelpful answers, and User C received 3 unhelpful answers.” This level of detailed analysis enables organizations to pinpoint specific user groups or departments that might require additional training, data source configuration, or targeted support to improve their Amazon Q Business experience.

To get these kinds of insights, complete the following steps:

- To obtain the Amazon Q Business application ID, open the Amazon Q Business console, open the specific application, and note the application ID shown under Application settings.

This unique identifier will be used to filter log groups in CloudWatch Logs Insights.

- On the CloudWatch console, choose Logs Insights under Logs in the navigation pane.

- Under Selection criteria, enter the application ID you previously copied. Choose the log group that follows the pattern /aws/vendedlogs/qbusiness/application/EVENT_LOGS/<your application id>.

- For the data time range, select the range you want to use. In our case, we want the last 4 weeks, so we choose Custom and specify 4 Weeks.

- Replace the default query in the editor with this one:

We use the condition NOT_USEFUL because we want to list users getting unhelpful answers. To get a list of users who received helpful answers, change the condition to USEFUL.

- Choose Run query.

With this information, particularly user_email, you can write a new query to analyze the conversation logs where users got unhelpful answers. For example, to list messages where user john_doe gave a thumbs down, replace your query with the following:

filter usefulness = "NOT_USEFUL" and user_email = "john_doe@anycompany.com"

Alternatively, to list unhelpful answers across all users, you could use the following query:

filter usefulness = "NOT_USEFUL"

The results of these queries can help you better understand the context of the feedback users are providing. As mentioned earlier, it might be possible your guardrails are too restrictive, your application is missing a data source, or maybe your users’ prompts are not clear enough.
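If you export feedback events (for example, from Amazon S3) and want to reproduce the per-user grouping outside CloudWatch, a minimal sketch could look like the following. The `usefulness` and `user_email` field names come from the queries above; the function name, the flat record layout, and the sample data are hypothetical.

```python
from collections import Counter


def unhelpful_counts(feedback_events):
    """Count NOT_USEFUL feedback per user, sorted from most to least affected."""
    counts = Counter(
        event["user_email"]
        for event in feedback_events
        if event.get("usefulness") == "NOT_USEFUL" and "user_email" in event
    )
    return counts.most_common()


# Hypothetical feedback events.
sample_events = [
    {"user_email": "user_a@anycompany.com", "usefulness": "NOT_USEFUL"},
    {"user_email": "user_b@anycompany.com", "usefulness": "USEFUL"},
    {"user_email": "user_a@anycompany.com", "usefulness": "NOT_USEFUL"},
    {"user_email": "user_b@anycompany.com", "usefulness": "NOT_USEFUL"},
    {"user_email": "user_a@anycompany.com", "usefulness": "NOT_USEFUL"},
]

for email, count in unhelpful_counts(sample_events):
    print(f"{email}: {count} unhelpful answers")
```

Changing the comparison value to USEFUL yields the corresponding ranking for helpful answers.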

Clean up

To make sure you don’t incur ongoing costs, clean up resources by removing log delivery configurations, deleting CloudWatch resources, removing the Amazon Q Business application, and deleting any additional AWS resources created after you’re done experimenting with this functionality.

Conclusion

In this post, we explored several ways to improve your operational posture with Amazon Q Business Insights dashboards, the Amazon Q Apps analytics dashboard, and logging with CloudWatch Logs. By using these tools, organizations can gain valuable insights into user engagement patterns, identify areas for improvement, and make sure their Amazon Q Business implementation aligns with security and compliance requirements.

To learn more about Amazon Q Business key usage metrics, refer to Viewing Amazon Q Business and Q App metrics in analytics dashboards. For a comprehensive review of Amazon Q Business CloudWatch logs, including log query examples, refer to Monitoring Amazon Q Business user conversations with Amazon CloudWatch Logs.

About the Authors

Guillermo Mansilla is a Senior Solutions Architect based in Orlando, Florida. Guillermo has developed a keen interest in serverless architectures and generative AI applications. Prior to his current role, he gained over a decade of experience working as a software developer. Away from work, Guillermo enjoys participating in chess tournaments at his local chess club, a pursuit that allows him to exercise his analytical skills in a different context.

Amit Gupta is a Senior Q Business Solutions Architect at AWS. He is passionate about enabling customers with well-architected generative AI solutions at scale.

Jed Lechner is a Specialist Solutions Architect at Amazon Web Services specializing in generative AI solutions with Amazon Q Business and Amazon Q Apps. Prior to his current role, he worked as a Software Engineer at AWS and other companies, focusing on sustainability technology, big data analytics, and cloud computing. Outside of work, he enjoys hiking and photography, and capturing nature’s moments through his lens.

Leo Mentis Raj Selvaraj is a Sr. Specialist Solutions Architect – GenAI at AWS with 4.5 years of experience, currently guiding customers through their GenAI implementation journeys. Previously, he architected data platform and analytics solutions for strategic customers using a comprehensive range of AWS services including storage, compute, databases, serverless, analytics, and ML technologies. Leo also collaborates with internal AWS teams to drive product feature development based on customer feedback, contributing to the evolution of AWS offerings.

Multi-LLM routing strategies for generative AI applications on AWS

Organizations are increasingly using multiple large language models (LLMs) when building generative AI applications. Although an individual LLM can be highly capable, it might not optimally address a wide range of use cases or meet diverse performance requirements. The multi-LLM approach enables organizations to effectively choose the right model for each task, adapt to different domains, and optimize for specific cost, latency, or quality needs. This strategy results in more robust, versatile, and efficient applications that better serve diverse user needs and business objectives.

Deploying a multi-LLM application comes with the challenge of routing each user prompt to an appropriate LLM for the intended task. The routing logic must accurately interpret and map the prompt into one of the pre-defined tasks, and then direct it to the assigned LLM for that task. In this post, we provide an overview of common multi-LLM applications. We then explore strategies for implementing effective multi-LLM routing in these applications, discussing the key factors that influence the selection and implementation of such strategies. Finally, we provide sample implementations that you can use as a starting point for your own multi-LLM routing deployments.

Overview of common multi-LLM applications

The following are some of the common scenarios where you might choose to use a multi-LLM approach in your applications:

- Multiple task types – Many use cases need to handle different task types within the same application. For example, a marketing content creation application might need to perform task types such as text generation, text summarization, sentiment analysis, and information extraction as part of producing high-quality, personalized content. Each distinct task type will likely require a separate LLM, which might also be fine-tuned with custom data.

- Multiple task complexity levels – Some applications are designed to handle a single task type, such as text summarization or question answering. However, they must be able to respond to user queries with varying levels of complexity within the same task type. For example, consider a text summarization AI assistant intended for academic research and literature review. Some user queries might be relatively straightforward, simply asking the application to summarize the core ideas and conclusions from a short article. Such queries could be effectively handled by a simple, lower-cost model. In contrast, more complex questions might require the application to summarize a lengthy dissertation by performing deeper analysis, comparison, and evaluation of the research results. These types of queries would be better addressed by more advanced models with greater reasoning capabilities.

- Multiple task domains – Certain applications need to serve users across multiple domains of expertise. An example is a virtual assistant for enterprise business operations. Such a virtual assistant should support users across various business functions, such as finance, legal, human resources, and operations. To handle this breadth of expertise, the virtual assistant needs to use different LLMs that have been fine-tuned on datasets specific to each respective domain.

- Software-as-a-service (SaaS) applications with tenant tiering – SaaS applications are often architected to provide different pricing and experiences to a spectrum of customer profiles, referred to as tiers. Through the use of different LLMs tailored to each tier, SaaS applications can offer capabilities that align with the varying needs and budgets of their diverse customer base. For instance, consider an AI-driven legal document analysis system designed for businesses of varying sizes, offering two primary subscription tiers: Basic and Pro. The Basic tier would use a smaller, more lightweight LLM well-suited for straightforward tasks, such as performing simple document searches or generating summaries of uncomplicated legal documents. The Pro tier, however, would require a highly customized LLM that has been trained on specific data and terminology, enabling it to assist with intricate tasks like drafting complex legal documents.

Multi-LLM routing strategies

In this section, we explore two main approaches to routing requests to different LLMs: static routing and dynamic routing.

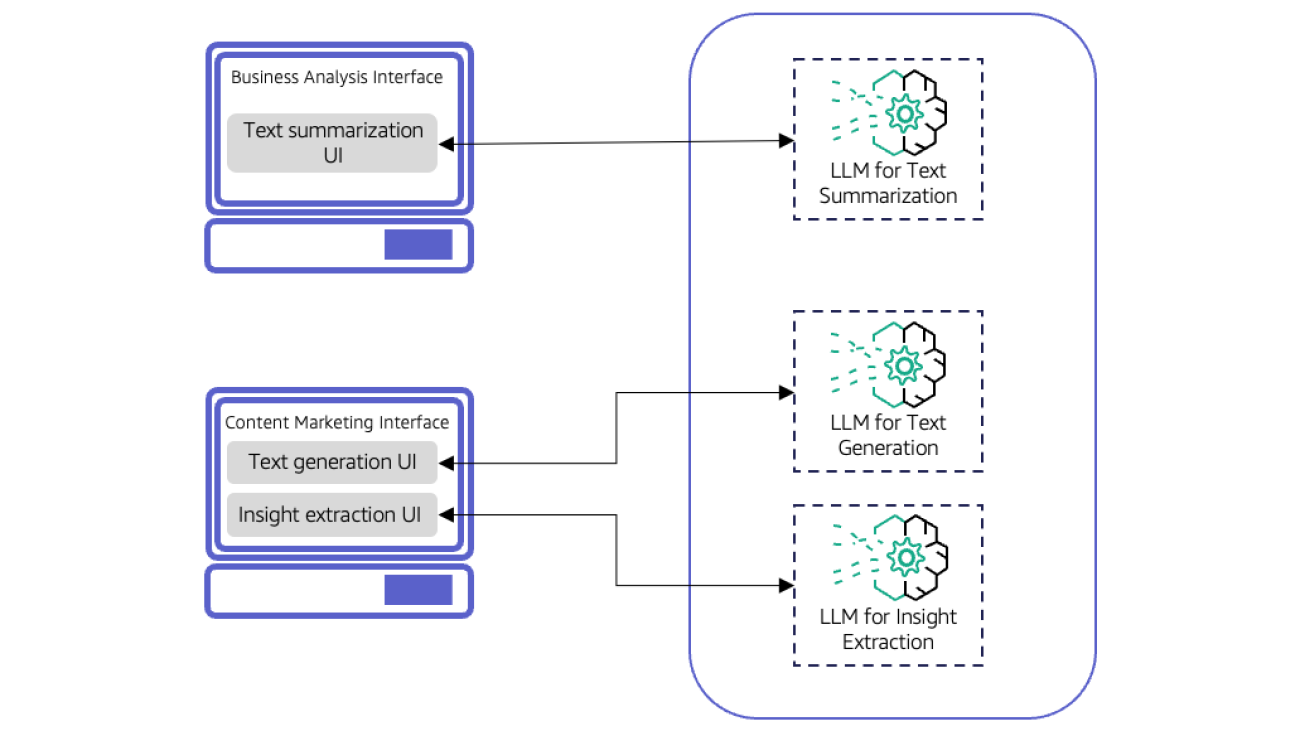

Static routing

One effective strategy for directing user prompts to appropriate LLMs is to implement distinct UI components within the same interface or separate interfaces tailored to specific tasks. For example, an AI-powered productivity tool for an ecommerce company might feature dedicated interfaces for different roles, such as content marketers and business analysts. The content marketing interface incorporates two main UI components: a text generation module for creating social media posts, emails, and blogs, and an insight extraction module that identifies the most relevant keywords and phrases from customer reviews to improve content strategy. Meanwhile, the business analysis interface would focus on text summarization for analyzing various business documents. This is illustrated in the following figure.

This approach works well for applications where the user experience supports having a distinct UI component for each task. It also allows for a flexible and modular design, where new LLMs can be quickly plugged into or swapped out from a UI component without disrupting the overall system. However, the static nature of this approach implies that the application might not be easily adaptable to evolving user requirements. Adding a new task would necessitate the development of a new UI component in addition to the selection and integration of a new model.
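In code, static routing often reduces to a fixed lookup from UI component to model. The following is a minimal sketch under that assumption; the component keys and model names are hypothetical placeholders, not real model identifiers.

```python
# Hypothetical mapping from UI component to the model that serves it.
MODEL_BY_COMPONENT = {
    "text_generation": "hypothetical-generation-model",
    "insight_extraction": "hypothetical-extraction-model",
    "text_summarization": "hypothetical-summarization-model",
}


def route_static(component: str) -> str:
    """Return the model configured for a UI component, or fail loudly if none exists."""
    try:
        return MODEL_BY_COMPONENT[component]
    except KeyError:
        raise ValueError(f"No model configured for UI component {component!r}") from None


print(route_static("text_summarization"))
```

Swapping a model means changing one dictionary entry, which reflects the modularity described above. Supporting a new task still requires both a new entry and a new UI component.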

Dynamic routing

In some use cases, such as virtual assistants and multi-purpose chatbots, user prompts usually enter the application through a single UI component. For instance, consider a customer service AI assistant that handles three types of tasks: technical support, billing support, and pre-sale support. Each of these tasks requires its own custom LLM to provide appropriate responses. In this scenario, you need to implement a dynamic routing layer that intercepts each incoming request and directs it to the downstream LLM that is best suited to handle the intended task within that prompt. This is illustrated in the following figure.

In this section, we discuss common approaches for implementing this dynamic routing layer: LLM-assisted routing, semantic routing, and a hybrid approach.

LLM-assisted routing

This approach employs a classifier LLM at the application’s entry point to make routing decisions. The LLM’s ability to comprehend complex patterns and contextual subtleties makes this approach well-suited for applications requiring fine-grained classifications across task types, complexity levels, or domains. However, this method presents trade-offs. Although it offers sophisticated routing capabilities, it introduces additional costs and latency. Furthermore, maintaining the classifier LLM’s relevance as the application evolves can be demanding. Careful model selection, fine-tuning, configuration, and testing might be necessary to balance the impact of latency and cost with the desired classification accuracy.
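The following sketch shows one way the classification step could look, assuming the classifier LLM is asked to reply with a category name only. The prompt wording, the function names, and the `call_classifier` callable (a stand-in for the actual model invocation) are all assumptions made for illustration.

```python
from typing import Callable, Sequence


def build_classifier_prompt(question: str, categories: Sequence[str]) -> str:
    """Build an instruction asking the classifier LLM for a single label."""
    labels = ", ".join(categories)
    return (
        f"Classify the user request into exactly one of these categories: {labels}.\n"
        "Reply with the category name only.\n\n"
        f"Request: {question}"
    )


def parse_category(raw_reply: str, categories: Sequence[str], default: str) -> str:
    """Map a free-text reply to a known category, or fall back to the default.

    If the reply mentions several categories, the first one in `categories` wins.
    """
    reply = raw_reply.strip().lower()
    for category in categories:
        if category.lower() in reply:
            return category
    return default


def classify(
    question: str,
    categories: Sequence[str],
    call_classifier: Callable[[str], str],
    default: str,
) -> str:
    """Classify a question; `call_classifier` wraps the real LLM call."""
    prompt = build_classifier_prompt(question, categories)
    return parse_category(call_classifier(prompt), categories, default)
```

Parsing the reply defensively and falling back to a default category keeps the router from failing when the classifier returns unexpected text.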

Semantic routing

This approach uses semantic search as an alternative to using a classifier LLM for prompt classification and routing in multi-LLM systems. Semantic search uses embeddings to represent prompts as numerical vectors. The system then makes routing decisions by measuring the similarity between the user’s prompt embedding and the embeddings for a set of reference prompts, each representing a different task category. The user prompt is then routed to the LLM associated with the task category of the reference prompt that has the closest match.

Although semantic search doesn’t provide explicit classifications like a classifier LLM, it succeeds at identifying broad similarities and can effectively handle variations in a prompt’s wording. This makes it particularly well-suited for applications where routing can be based on coarse-grained classification of prompts, such as task domain classification. It also excels in scenarios with a large number of task categories or when new domains are frequently introduced, because it can quickly accommodate updates by simply adding new prompts to the reference prompt set.

Semantic routing offers several advantages, such as efficiency gained through fast similarity search in vector databases, and scalability to accommodate a large number of task categories and downstream LLMs. However, it also presents some trade-offs. Having adequate coverage for all possible task categories in your reference prompt set is crucial for accurate routing. Additionally, the increased system complexity due to the additional components, such as the vector database and embedding LLM, might impact overall performance and maintainability. Careful design and ongoing maintenance are necessary to address these challenges and fully realize the benefits of the semantic routing approach.
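To make the matching step concrete, here is a minimal sketch that routes a prompt to the category of its most similar reference prompt using cosine similarity. It uses plain Python lists instead of a vector database, and the embeddings, category names, and model names are toy values chosen for illustration.

```python
import math
from typing import Dict, List, Sequence, Tuple


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def route_by_similarity(
    prompt_embedding: Sequence[float],
    reference_index: List[Tuple[Sequence[float], str]],
    category_to_model: Dict[str, str],
) -> str:
    """Pick the model for the category of the closest reference embedding."""
    _, best_category = max(
        reference_index,
        key=lambda item: cosine_similarity(prompt_embedding, item[0]),
    )
    return category_to_model[best_category]


# Toy three-dimensional embeddings; real embeddings have far more dimensions.
reference_index = [
    ([1.0, 0.0, 0.1], "billing"),
    ([0.0, 1.0, 0.1], "technical"),
]
category_to_model = {"billing": "billing-llm", "technical": "technical-llm"}
chosen = route_by_similarity([0.9, 0.2, 0.0], reference_index, category_to_model)
```

A linear scan like this is adequate for a small reference set; a vector database becomes worthwhile as the number of reference prompts grows.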

Hybrid approach

In certain scenarios, a hybrid approach combining both techniques might also prove highly effective. For instance, in applications with a large number of task categories or domains, you can use semantic search for initial broad categorization or domain matching, followed by classifier LLMs for more fine-grained classification within those broad categories. This initial filtering allows you to use a simpler, more focused classifier LLM for the final routing decision.

Consider, for instance, a customer service AI assistant handling a diverse range of inquiries. In this context, semantic routing could initially route the user’s prompt to the appropriate department—be it billing, technical support, or sales. After the broad category is established, a dedicated classifier LLM for that specific department takes over. This specialized LLM, which can be trained on nuanced distinctions within its domain, can then determine crucial factors such as task complexity or urgency. Based on this fine-grained analysis, the prompt is then routed to the most appropriate LLM or, when necessary, escalated to a human agent.

This hybrid approach combines the scalability and flexibility of semantic search with the precision and context-awareness of classifier LLMs. The result is a robust, efficient, and highly accurate routing mechanism capable of adapting to the complexities and diverse needs of modern multi-LLM applications.
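The two stages can be composed as in the following sketch. The `match_department` callable stands in for the semantic search step and each entry in `department_classifiers` stands in for a department-specific classifier LLM. All names, labels, and targets are hypothetical.

```python
from typing import Callable, Dict, Tuple

ESCALATE = "human-agent"  # hypothetical fallback target


def hybrid_route(
    prompt: str,
    match_department: Callable[[str], str],
    department_classifiers: Dict[str, Callable[[str], str]],
    targets: Dict[Tuple[str, str], str],
) -> str:
    """Stage 1: coarse department match. Stage 2: fine-grained classification."""
    department = match_department(prompt)
    classifier = department_classifiers.get(department)
    if classifier is None:
        # No specialized classifier for this department: hand off to a person.
        return ESCALATE
    label = classifier(prompt)
    # Unknown (department, label) pairs are also escalated.
    return targets.get((department, label), ESCALATE)
```

Because each second-stage classifier only needs to distinguish labels within one department, it can use a shorter prompt or a smaller model than a single classifier covering every category.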

Implementation of dynamic routing

In this section, we explore different approaches to implementing dynamic routing on AWS, covering both built-in routing features and custom solutions that you can use as a starting point to build your own.

Intelligent prompt routing with Amazon Bedrock

Amazon Bedrock is a fully managed service that makes high-performing LLMs and other foundation models (FMs) from leading AI startups and Amazon available through an API, so you can choose from a wide range of FMs to find the model that is best suited for your use case. With the Amazon Bedrock serverless experience, you can get started quickly, privately customize FMs with your own data, and integrate and deploy them into your applications using AWS tools without having to manage infrastructure.

If you’re building applications with Amazon Bedrock LLMs and need a fully managed solution with straightforward routing capabilities, Amazon Bedrock Intelligent Prompt Routing offers an efficient way to implement dynamic routing. This feature of Amazon Bedrock provides a single serverless endpoint for efficiently routing requests between different LLMs within the same model family. It uses advanced prompt matching and model understanding techniques to predict the performance of each model for every request. Amazon Bedrock then dynamically routes each request to the model that it predicts is most likely to give the desired response at the lowest cost. Intelligent Prompt Routing can reduce costs by up to 30% without compromising on accuracy. As of this writing, Amazon Bedrock supports routing within Anthropic’s Claude and Meta’s Llama model families. For example, Amazon Bedrock can intelligently route requests between Anthropic’s Claude 3.5 Sonnet and Claude 3 Haiku depending on the complexity of the prompt, as illustrated in the following figure. Similarly, Amazon Bedrock can route requests between Meta’s Llama 3.1 70B and 8B.

This architecture workflow includes the following steps:

- A user submits a question through a web or mobile application.

- The Amazon Bedrock prompt router for the Anthropic model family predicts the performance of each downstream LLM, selecting the model that it predicts will offer the best combination of response quality and cost.

- Amazon Bedrock routes the request to the selected LLM, and returns the response along with information about the model.

For detailed implementation guidelines and examples of Intelligent Prompt Routing on Amazon Bedrock, see Reduce costs and latency with Amazon Bedrock Intelligent Prompt Routing and prompt caching.
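As a rough sketch of what a call through a prompt router might look like, the following function passes a prompt router ARN as the `modelId` of the Amazon Bedrock Converse API. The `client` is assumed to be a `bedrock-runtime` client created elsewhere (for example, with `boto3.client("bedrock-runtime")`), and the `trace.promptRouter.invokedModelId` field used to read back the selected model is an assumption that you should verify against the current API reference.

```python
from typing import Any, Tuple


def ask_via_prompt_router(client: Any, router_arn: str, question: str) -> Tuple[str, str]:
    """Send one user message through a prompt router.

    Returns (answer_text, invoked_model_id). `router_arn` is the ARN of a
    prompt router in your account; no real ARN is shown here.
    """
    response = client.converse(
        modelId=router_arn,
        messages=[{"role": "user", "content": [{"text": question}]}],
    )
    answer = response["output"]["message"]["content"][0]["text"]
    # Assumed location of the routing decision; falls back to "unknown".
    invoked_model = (
        response.get("trace", {}).get("promptRouter", {}).get("invokedModelId", "unknown")
    )
    return answer, invoked_model
```

Logging the invoked model alongside each answer makes it easier to check how often requests go to the smaller model and whether the routing matches your expectations.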

Custom prompt routing

If your LLMs are hosted outside Amazon Bedrock, such as on Amazon SageMaker or Amazon Elastic Kubernetes Service (Amazon EKS), or you require routing customization, you will need to develop a custom routing solution.

This section provides sample implementations for both LLM-assisted and semantic routing. We discuss the solution’s mechanics, key design decisions, and how to use it as a foundation for developing your own custom routing solutions. For detailed deployment instructions for each routing solution, refer to the GitHub repo. The provided code in this repo is meant to be used in a development environment. Before migrating any of the provided solutions to production, we recommend following the AWS Well-Architected Framework.

LLM-assisted routing

In this solution, we demonstrate an educational tutor assistant that helps students in two domains: history and math. To implement the routing layer, the application uses the Amazon Titan Text G1 – Express model on Amazon Bedrock to classify each question by topic as either history or math. History questions are routed to a more cost-effective and faster LLM, such as Anthropic’s Claude 3 Haiku on Amazon Bedrock. Math questions are handled by a more powerful LLM, such as Anthropic’s Claude 3.5 Sonnet on Amazon Bedrock, which is better suited for complex problem-solving, in-depth explanations, and multi-step reasoning. If the classifier LLM is unsure whether a question belongs to the history or math category, it defaults to classifying it as math.

The architecture of this system is illustrated in the following figure. The use of Amazon Titan and Anthropic models on Amazon Bedrock in this demonstration is optional. You can substitute them with other models deployed on or outside of Amazon Bedrock.

This architecture workflow includes the following steps:

- A user submits a question through a web or mobile application, which forwards the query to Amazon API Gateway.

- When API Gateway receives the request, it triggers an AWS Lambda function.

- The Lambda function sends the question to the classifier LLM to determine whether it is a history or math question.

- Based on the classifier LLM’s decision, the Lambda function routes the question to the appropriate downstream LLM, which will generate an answer and return it to the user.
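The steps above can be sketched as a Lambda handler. This is not the code from the GitHub repo. It assumes an API Gateway proxy-style event whose JSON body has a hypothetical `question` field, and it takes the classifier and the downstream LLMs as plain callables so that the routing logic stays separate from the model invocations.

```python
import json
import time
from typing import Any, Callable, Dict


def make_handler(
    classify: Callable[[str], str],
    answerers: Dict[str, Callable[[str], str]],
    default_category: str = "math",
) -> Callable[[Dict[str, Any], Any], Dict[str, Any]]:
    """Build a Lambda-style handler that classifies and then routes a question."""

    def handler(event: Dict[str, Any], context: Any) -> Dict[str, Any]:
        question = json.loads(event["body"])["question"]

        start = time.perf_counter()
        category = classify(question)
        if category not in answerers:
            # Unrecognized label: fall back to the more capable math model.
            category = default_category
        classified = time.perf_counter()

        answer = answerers[category](question)
        answered = time.perf_counter()

        body = {
            "category": category,
            "answer": answer,
            "classification_seconds": round(classified - start, 3),
            "answer_seconds": round(answered - classified, 3),
        }
        return {"statusCode": 200, "body": json.dumps(body)}

    return handler
```

Reporting the classification and answer times separately makes the routing overhead visible, which is the cost that LLM-assisted routing adds to every request.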

Follow the deployment steps in the GitHub repo to create the necessary infrastructure for LLM-assisted routing and run tests to generate responses. The following output shows the response to the question “What year did World War II end?”

The question was correctly classified as a history question, with the classification process taking approximately 0.53 seconds. The question was then routed to and answered by Anthropic’s Claude 3 Haiku, which took around 0.25 seconds. In total, it took about 0.78 seconds to receive the response.

Next, we will ask a math question. The following output shows the response to the question “Solve the quadratic equation: 2x^2 – 5x + 3 = 0.”

The question was correctly classified as a math question, with the classification process taking approximately 0.59 seconds. The question was then correctly routed to and answered by Anthropic’s Claude 3.5 Sonnet, which took around 2.3 seconds. In total, it took about 2.9 seconds to receive the response.

Semantic routing

In this solution, we focus on the same educational tutor assistant use case as in LLM-assisted routing. To implement the routing layer, you first need to create a set of reference prompts that represents the full spectrum of history and math topics you intend to cover. This reference set serves as the foundation for the semantic matching process, enabling the application to correctly categorize incoming queries. As an illustrative example, we’ve provided a sample reference set with five questions for each of the history and math topics. In a real-world implementation, you would likely need a much larger and more diverse set of reference questions to have robust routing performance.

You can use the Amazon Titan Text Embeddings V2 model on Amazon Bedrock to convert the questions in the reference set into embeddings. You can find the code for this conversion in the GitHub repo. These embeddings are then saved as a reference index inside an in-memory FAISS vector store, which is deployed as a Lambda layer.
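As an illustration of the conversion step, and not the code from the GitHub repo, the following sketch embeds each reference question with Amazon Titan Text Embeddings V2 through `invoke_model` and keeps the results in a plain Python list rather than a FAISS index. The `client` is assumed to be a `bedrock-runtime` client created elsewhere, and the helper names are hypothetical.

```python
import json
from typing import Any, List, Sequence, Tuple

TITAN_EMBED_MODEL_ID = "amazon.titan-embed-text-v2:0"


def embed_text(client: Any, text: str) -> List[float]:
    """Return the embedding vector for one piece of text."""
    response = client.invoke_model(
        modelId=TITAN_EMBED_MODEL_ID,
        body=json.dumps({"inputText": text}),
        contentType="application/json",
        accept="application/json",
    )
    # The response body is a stream containing a JSON document.
    return json.loads(response["body"].read())["embedding"]


def build_reference_index(
    client: Any, labeled_questions: Sequence[Tuple[str, str]]
) -> List[Tuple[List[float], str]]:
    """Embed (question, category) pairs into (embedding, category) pairs."""
    return [
        (embed_text(client, question), category)
        for question, category in labeled_questions
    ]
```

The reference questions must be embedded with the same model that embeds incoming questions, because similarity scores are only meaningful between vectors from the same embedding space.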

The architecture of this system is illustrated in the following figure. The use of Amazon Titan and Anthropic models on Amazon Bedrock in this demonstration is optional. You can substitute them with other models deployed on or outside of Amazon Bedrock.

This architecture workflow includes the following steps:

- A user submits a question through a web or mobile application, which forwards the query to API Gateway.

- When API Gateway receives the request, it triggers a Lambda function.

- The Lambda function sends the question to the Amazon Titan Text Embeddings V2 model to convert it to an embedding. It then performs a similarity search on the FAISS index to find the closest matching question in the reference index, and returns the corresponding category label.

- Based on the retrieved category, the Lambda function routes the question to the appropriate downstream LLM, which will generate an answer and return it to the user.

Follow the deployment steps in the GitHub repo to create the necessary infrastructure for semantic routing and run tests to generate responses. The following output shows the response to the question “What year did World War II end?”

The question was correctly classified as a history question, and the classification took about 0.1 seconds. The question was then routed to and answered by Anthropic’s Claude 3 Haiku, which took about 0.25 seconds, for a total of about 0.36 seconds to receive the response.

Next, we ask a math question. The following output shows the response to the question “Solve the quadratic equation: 2x^2 – 5x + 3 = 0.”

The question was correctly classified as a math question, and the classification took about 0.1 seconds. The question was then correctly routed to and answered by Anthropic’s Claude 3.5 Sonnet, which took about 2.7 seconds, for a total of about 2.8 seconds to receive the response.

Additional considerations for custom prompt routing