Modern large language models (LLMs) excel in language processing but are limited by their static training data. As industries require more adaptive, decision-making AI, integrating tools and external APIs has become essential. This has led to the rapid rise of agentic workflows, where AI systems autonomously plan, execute, and refine tasks. Accurate tool use is foundational for improving the decision-making and operational efficiency of these autonomous agents and for building successful, complex agentic workflows.

In this post, we dissect the technical mechanisms of tool calling using Amazon Nova models through Amazon Bedrock, alongside methods for model customization to refine tool calling precision.

Expanding LLM capabilities with tool use

LLMs excel at natural language tasks but become significantly more powerful with tool integration, such as APIs and computational frameworks. Tools enable LLMs to access real-time data, perform domain-specific computations, and retrieve precise information, enhancing their reliability and versatility. For example, a weather API supplies accurate, real-time forecasts, and a Wikipedia API provides up-to-date information for complex queries. In scientific contexts, tools like calculators or symbolic engines address numerical inaccuracies in LLMs. These integrations transform LLMs into robust, domain-aware systems capable of handling dynamic, specialized tasks with real-world utility.

Amazon Nova models and Amazon Bedrock

Amazon Nova models, unveiled at AWS re:Invent in December 2024, are optimized to deliver exceptional price-performance value, offering state-of-the-art performance on key text-understanding benchmarks at low cost. The series comprises three variants: Micro (text-only, ultra-efficient for edge use), Lite (multimodal, balanced for versatility), and Pro (multimodal, high-performance for complex tasks).

Amazon Nova models can be used for a variety of tasks, from generation to developing agentic workflows. As such, these models can interface with external tools or services and use them through tool calling. This can be achieved through the Amazon Bedrock console (see Getting started with Amazon Nova in the Amazon Bedrock console) and APIs such as Converse and Invoke.

In addition to using the pre-trained models, developers have the option to fine-tune these models with multimodal data (Pro and Lite) or text data (Pro, Lite, and Micro), providing the flexibility to achieve desired accuracy, latency, and cost. Developers can also run self-service custom fine-tuning and distillation of larger models to smaller ones using the Amazon Bedrock console and APIs.

Solution overview

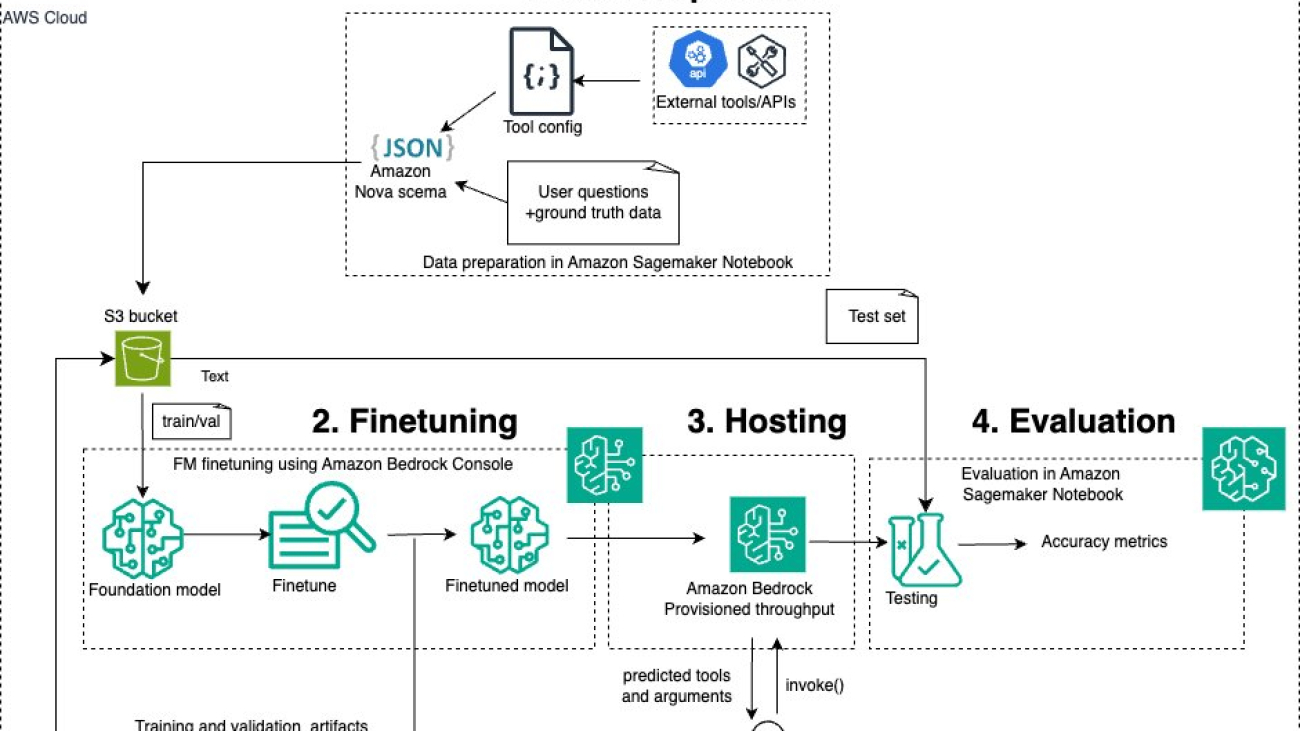

The following diagram illustrates the solution architecture.

For this post, we first prepared a custom dataset for tool usage. We used the test set to evaluate Amazon Nova models through Amazon Bedrock using the Converse and Invoke APIs. We then fine-tuned Amazon Nova Micro and Amazon Nova Lite models through Amazon Bedrock with our fine-tuning dataset. After the fine-tuning process was complete, we evaluated these customized models through provisioned throughput. In the following sections, we go through these steps in more detail.

Tools

Tool usage in LLMs involves two critical operations: tool selection and argument extraction or generation. For instance, consider a tool designed to retrieve weather information for a specific location. When presented with a query such as “What’s the weather in Alexandria, VA?”, the LLM evaluates its repertoire of tools to determine whether an appropriate tool is available. Upon identifying a suitable tool, the model selects it and extracts the required arguments—here, “Alexandria” and “VA” as structured data types (for example, strings)—to construct the tool call.

Each tool is rigorously defined with a formal specification that outlines its intended functionality, the mandatory or optional arguments, and the associated data types. Such precise definitions, known as tool config, make sure that tool calls are executed correctly and that argument parsing aligns with the tool’s operational requirements. Following this requirement, the dataset used for this example defines eight tools with their arguments and configures them in a structured JSON format. We define the following eight tools (we use seven of them for fine-tuning and hold out the weather_api_call tool during testing in order to evaluate the accuracy on unseen tool use):

- weather_api_call – Custom tool for getting weather information

- stat_pull – Custom tool for identifying stats

- text_to_sql – Custom text-to-SQL tool

- terminal – Tool for executing scripts in a terminal

- wikipidea – Wikipedia API tool to search through Wikipedia pages

- duckduckgo_results_json – Internet search tool that executes a DuckDuckGo search

- youtube_search – YouTube API search tool that searches video listings

- pubmed_search – PubMed search tool that searches PubMed abstracts

The following code is an example of what a tool configuration for terminal might look like:
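As a minimal sketch of such a configuration, the following Python dictionary uses the `toolSpec` layout accepted by the Converse API (`name`, `description`, and a JSON Schema under `inputSchema.json`). The description text and the `commands` argument name are assumptions made for illustration and might differ from the configuration used for the dataset.

```python
import json

# Hypothetical tool specification for the `terminal` tool.
# The description wording and the `commands` argument are illustrative assumptions.
terminal_tool = {
    "toolSpec": {
        "name": "terminal",
        "description": "Run shell commands on this machine.",
        "inputSchema": {
            "json": {
                "type": "object",
                "properties": {
                    "commands": {
                        "type": "string",
                        "description": "Shell command(s) to execute.",
                    }
                },
                "required": ["commands"],
            }
        },
    }
}

# A tool config groups one or more tool specifications.
tool_config = {"tools": [terminal_tool]}

print(json.dumps(tool_config, indent=2))
```

Each of the eight tools would get its own `toolSpec` entry in the `tools` list.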

Dataset

The dataset is a synthetic tool calling dataset created with assistance from a foundation model (FM) from Amazon Bedrock and manually validated and adjusted. This dataset was created for our set of eight tools as discussed in the previous section, with the goal of creating a diverse set of questions and tool invocations that allow another model to learn from these examples and generalize to unseen tool invocations.

Each entry in the dataset is structured as a JSON object with key-value pairs that define the question (a natural language user query for the model), the ground truth tool required to answer the user query, its arguments (dictionary containing the parameters required to execute the tool), and additional constraints like order_matters: boolean, indicating if argument order is critical, and arg_pattern: optional, a regular expression (regex) for argument validation or formatting. Later in this post, we use these ground truth labels to supervise the training of pre-trained Amazon Nova models, adapting them for tool use. This process, known as supervised fine-tuning, will be explored in detail in the following sections.

The training set contains 560 questions and the test set contains 120 questions (15 per tool category). The following are some examples from the dataset:
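To make the entry structure concrete, the following sketch builds a hypothetical entry (it is not taken from the actual dataset) and checks that it has the expected shape. Only `order_matters` and `arg_pattern` are named in this post; the other key names (`question`, `tool`, `args`) and all values are assumptions.

```python
import re

# Hypothetical dataset entry; key names other than order_matters and
# arg_pattern, and every value, are assumptions for illustration.
entry = {
    "question": "Find recent PubMed abstracts about CRISPR gene editing.",
    "tool": "pubmed_search",
    "args": {"query": "CRISPR gene editing"},
    "order_matters": False,
    "arg_pattern": {"query": r"(?i)crispr"},
}

REQUIRED_FIELDS = {"question": str, "tool": str, "args": dict, "order_matters": bool}


def is_valid_entry(e: dict) -> bool:
    """Return True if the entry has the required fields with the expected types."""
    for key, expected_type in REQUIRED_FIELDS.items():
        if not isinstance(e.get(key), expected_type):
            return False
    # arg_pattern is optional; when present, each pattern must refer to a
    # known argument and compile as a regular expression.
    for name, pattern in (e.get("arg_pattern") or {}).items():
        if name not in e["args"]:
            return False
        try:
            re.compile(pattern)
        except re.error:
            return False
    return True


print(is_valid_entry(entry))
```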

Prepare the dataset for Amazon Nova

To use this dataset with Amazon Nova models, we need to additionally format the data based on a particular chat template. Native tool calling has a translation layer that converts the inputs to the appropriate format before passing them to the model. Here, we employ a DIY tool use approach with a custom prompt template. Specifically, we need to add the system prompt, the user message embedded with the tool config, and the ground truth labels as the assistant message. The following is a training example formatted for Amazon Nova. Due to space constraints, we only show the toolspec for one tool.
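The formatting step can be sketched as a small helper that turns one dataset entry into a conversation record. We assume here the `bedrock-conversation-2024` schema with `system` and `messages` fields for Amazon Nova fine-tuning data; the system prompt wording, the way the tool config is embedded in the user message, and the assistant label layout are hypothetical choices, not the exact template used in this post.

```python
import json

# Assumed system prompt wording, for illustration only.
SYSTEM_PROMPT = "You are an assistant that picks one tool and its arguments for each question."


def to_nova_record(question, tool_config, tool_name, tool_args):
    """Build one training record: system prompt, user turn with tools, assistant label."""
    user_text = (
        "Available tools:\n"
        + json.dumps(tool_config)
        + "\n\nQuestion: "
        + question
    )
    # Ground truth label serialized as JSON text (assumed layout).
    assistant_text = json.dumps({"name": tool_name, "parameters": tool_args})
    return {
        "schemaVersion": "bedrock-conversation-2024",
        "system": [{"text": SYSTEM_PROMPT}],
        "messages": [
            {"role": "user", "content": [{"text": user_text}]},
            {"role": "assistant", "content": [{"text": assistant_text}]},
        ],
    }


record = to_nova_record(
    question="List the files in the current directory.",
    tool_config={"tools": [{"toolSpec": {"name": "terminal"}}]},
    tool_name="terminal",
    tool_args={"commands": "ls"},
)
# One record per line gives the JSON Lines layout used for training files.
print(json.dumps(record))
```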

Upload dataset to Amazon S3

This step is needed later for the fine-tuning for Amazon Bedrock to access the training data. You can upload your dataset either through the Amazon Simple Storage Service (Amazon S3) console or through code.
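A minimal sketch of the code route follows. It writes records as JSON Lines and uploads the file with the `upload_file` method of a boto3 S3 client. The client is passed in as a parameter; the file, bucket, and key names shown in the comments are placeholders.

```python
import json


def write_jsonl(records, path):
    """Write one JSON object per line."""
    with open(path, "w", encoding="utf-8") as f:
        for rec in records:
            f.write(json.dumps(rec) + "\n")


def upload_dataset(s3_client, local_path, bucket, key):
    """Upload a local file to S3 and return its s3:// URI."""
    s3_client.upload_file(local_path, bucket, key)
    return f"s3://{bucket}/{key}"


# Usage (requires AWS credentials; names are placeholders):
#   import boto3
#   s3 = boto3.client("s3")
#   write_jsonl(records, "train.jsonl")
#   train_uri = upload_dataset(s3, "train.jsonl", "my-bucket", "nova-tool-use/train.jsonl")
```

The returned URI is the value to supply later as the training data location.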

Tool calling with base models through the Amazon Bedrock API

Now that we have created the tool use dataset and formatted it as required, let’s use it to test out the Amazon Nova models. As mentioned previously, we can use both the Converse and Invoke APIs for tool use in Amazon Bedrock. The Converse API enables dynamic, context-aware conversations, allowing models to engage in multi-turn dialogues, and the Invoke API allows the user to call and interact with the underlying models within Amazon Bedrock.

To use the Converse API, send the messages, the system prompt (if any), and the tool config in the Converse request. See the following example code:
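As a hedged sketch, the helper below assembles a Converse request and sends it through a `bedrock-runtime` client that the caller provides. The inference settings and the model ID in the usage comment are assumptions and should be adapted to your account and Region.

```python
def call_converse(client, model_id, question, tool_config, system_prompt=None):
    """Send one user question plus the tool config through the Converse API."""
    request = {
        "modelId": model_id,
        "messages": [{"role": "user", "content": [{"text": question}]}],
        "toolConfig": tool_config,
        # Assumed settings: low temperature for more repeatable tool calls.
        "inferenceConfig": {"maxTokens": 512, "temperature": 0.0},
    }
    if system_prompt:
        request["system"] = [{"text": system_prompt}]
    return client.converse(**request)


# Usage (requires AWS credentials; the model ID is an assumption):
#   import boto3
#   runtime = boto3.client("bedrock-runtime", region_name="us-east-1")
#   response = call_converse(runtime, "us.amazon.nova-micro-v1:0",
#                            "Hey, what's the temperature in Paris right now?",
#                            tool_config)
```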

To parse the tool and arguments from the LLM response, you can use the following example code:
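One way to do this parsing is sketched below. It relies on the Converse response layout, in which the assistant message is a list of content blocks and a tool call appears as a block with a `toolUse` key holding `name` and `input`. Returning `(None, {})` when no tool call is present is a design choice of this sketch.

```python
def parse_tool_use(response):
    """Return (tool_name, arguments) from a Converse response, or (None, {})."""
    content = response.get("output", {}).get("message", {}).get("content", [])
    for block in content:
        tool_use = block.get("toolUse")
        if tool_use is not None:
            return tool_use["name"], tool_use.get("input", {})
    # The model answered in plain text without calling a tool.
    return None, {}
```

Only the first tool call is returned; a response containing several `toolUse` blocks would need a loop that collects all of them.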

For the question: “Hey, what's the temperature in Paris right now?”, you get the following output:

To execute tool use through the Invoke API, first you need to prepare the request body with the user question as well as the tool config that was prepared before. The following code snippet shows how to convert the tool config JSON to string format, which can be used in the message body:
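A rough sketch of this preparation step follows: the tool config is serialized with `json.dumps` and embedded in the user message, and the body is sent with `invoke_model`. The prompt layout is an assumption, and inference parameters are left at their defaults here.

```python
import json


def build_invoke_body(question, tool_config, system_prompt):
    """Serialize the tool config to text and embed it in the user message."""
    tools_as_text = json.dumps(tool_config)
    user_text = f"Tools:\n{tools_as_text}\n\nQuestion: {question}"  # assumed layout
    body = {
        "system": [{"text": system_prompt}],
        "messages": [{"role": "user", "content": [{"text": user_text}]}],
    }
    return json.dumps(body)


def invoke(client, model_id, body):
    """Call the Invoke API and decode the JSON response body."""
    response = client.invoke_model(modelId=model_id, body=body)
    return json.loads(response["body"].read())


# Usage (requires AWS credentials):
#   import boto3
#   runtime = boto3.client("bedrock-runtime", region_name="us-east-1")
#   result = invoke(runtime, model_id, build_invoke_body(question, tool_config, system_prompt))
```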

Using either of the two APIs, you can test and benchmark the base Amazon Nova models with the tool use dataset. In the next sections, we show how you can customize these base models specifically for the tool use domain.

Supervised fine-tuning using the Amazon Bedrock console

Amazon Bedrock offers three different customization techniques: supervised fine-tuning, model distillation, and continued pre-training. At the time of writing, the first two methods are available for customizing Amazon Nova models. Supervised fine-tuning is a popular method in transfer learning, where a pre-trained model is adapted to a specific task or domain by training it further on a smaller, task-specific dataset. The process uses the representations learned during pre-training on large datasets to improve performance in the new domain. During fine-tuning, the model’s parameters (either all or selected layers) are updated using backpropagation to minimize the loss.

In this post, we use the labeled datasets that we created and formatted previously to run supervised fine-tuning to adapt Amazon Nova models for the tool use domain.

Create a fine-tuning job

Complete the following steps to create a fine-tuning job:

- Open the Amazon Bedrock console.

- Choose us-east-1 as the AWS Region.

- Under Foundation models in the navigation pane, choose Custom models.

- Choose Create Fine-tuning job under Customization methods.

At the time of writing, Amazon Nova model fine-tuning is exclusively available in the us-east-1 Region.

- Choose Select model and choose Amazon as the model provider.

- Choose your model (for this post, Amazon Nova Micro) and choose Apply.

- For Fine-tuned model name, enter a unique name.

- For Job name, enter a name for the fine-tuning job.

- In the Input data section, enter the following details:

- For S3 location, enter the source S3 bucket containing the training data.

- For Validation dataset location, optionally enter the S3 bucket containing a validation dataset.

- In the Hyperparameters section, you can customize the following hyperparameters:

- For Epochs, enter a value between 1–5.

- For Batch size, the value is fixed at 1.

- For Learning rate multiplier, enter a value between 0.000001–0.0001.

- For Learning rate warmup steps, enter a value between 0–100.

We recommend starting with the default parameter values and then changing the settings iteratively. It’s a good practice to change only one or a couple of parameters at a time, in order to isolate the parameter effects. Remember, hyperparameter tuning is model and use case specific.

- In the Output data section, enter the target S3 bucket for model outputs and training metrics.

- Choose Create fine-tuning job.
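The console steps above can also be sketched in code with the `create_model_customization_job` operation of the boto3 `bedrock` client. The helper below only builds and validates the request, using the hyperparameter ranges listed above. The hyperparameter key names, the base model identifier, and the default values are assumptions to verify against the current documentation.

```python
def build_finetune_request(job_name, model_name, role_arn, train_uri, output_uri,
                           validation_uri=None, epochs=2, learning_rate=1e-5,
                           warmup_steps=10,
                           base_model="amazon.nova-micro-v1:0:128k"):  # assumed identifier
    """Build keyword arguments for create_model_customization_job."""
    if not 1 <= epochs <= 5:
        raise ValueError("epochs must be between 1 and 5")
    if not 1e-6 <= learning_rate <= 1e-4:
        raise ValueError("learning_rate must be between 0.000001 and 0.0001")
    if not 0 <= warmup_steps <= 100:
        raise ValueError("warmup_steps must be between 0 and 100")
    request = {
        "jobName": job_name,
        "customModelName": model_name,
        "roleArn": role_arn,
        "baseModelIdentifier": base_model,
        "customizationType": "FINE_TUNING",
        "trainingDataConfig": {"s3Uri": train_uri},
        "outputDataConfig": {"s3Uri": output_uri},
        # Hyperparameter values are passed as strings; key names are assumed.
        "hyperParameters": {
            "epochCount": str(epochs),
            "batchSize": "1",  # fixed at 1
            "learningRate": f"{learning_rate:.6f}",
            "learningRateWarmupSteps": str(warmup_steps),
        },
    }
    if validation_uri:
        request["validationDataConfig"] = {"validators": [{"s3Uri": validation_uri}]}
    return request


# Usage (requires AWS credentials and an IAM role with access to the buckets):
#   import boto3
#   bedrock = boto3.client("bedrock", region_name="us-east-1")
#   job = bedrock.create_model_customization_job(**build_finetune_request(...))
```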

Run the fine-tuning job

After you start the fine-tuning job, you will be able to see your job under Jobs and the status as Training. When it finishes, the status changes to Complete.
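If you started the job programmatically, you can track it the same way by polling `get_model_customization_job`. The sketch below assumes the API status values `InProgress`, `Completed`, `Failed`, `Stopping`, and `Stopped`, which differ in wording from the console labels. The polling interval and limit are arbitrary choices.

```python
import time


def wait_for_job(client, job_id, poll_seconds=60, max_polls=240, sleep=time.sleep):
    """Poll a model customization job until it reaches a terminal status."""
    for _ in range(max_polls):
        status = client.get_model_customization_job(jobIdentifier=job_id)["status"]
        if status in ("Completed", "Failed", "Stopped"):
            return status
        sleep(poll_seconds)
    raise TimeoutError(f"job {job_id} did not finish after {max_polls} polls")


# Usage (requires AWS credentials):
#   import boto3
#   bedrock = boto3.client("bedrock", region_name="us-east-1")
#   final_status = wait_for_job(bedrock, job_arn)
```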

You can now go to the training job and optionally access the training-related artifacts that are saved in the output folder.

You can find both training and validation artifacts here. We highly recommend using a validation set.

You can use the training and validation artifacts to assess your fine-tuning job through loss curves (as shown in the following figure), which track training loss (orange) and validation loss (blue) over time. A steady decline in both indicates effective learning and good generalization. A small gap between them suggests minimal overfitting, whereas a rising validation loss with decreasing training loss signals overfitting. If both losses remain high, it indicates underfitting. Monitoring these curves helps you quickly diagnose model performance and adjust training strategies for optimal results.
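The reading of the curves described above can be approximated with a simple heuristic. The sketch below takes two lists of loss values, smooths them with a rolling mean, and labels the run. The window size, the 5% rebound tolerance, and the threshold for a "high" loss are arbitrary assumptions to tune for your own job, and the function does not read the Amazon Bedrock artifact files directly.

```python
def rolling_means(values, window):
    """Mean of each consecutive window of values."""
    if len(values) < window:
        raise ValueError("need at least `window` loss values")
    return [sum(values[i:i + window]) / window
            for i in range(len(values) - window + 1)]


def diagnose_losses(train, val, window=3, high=1.0, tol=0.05):
    """Label a run as overfitting, underfitting, learning, or inconclusive."""
    t = rolling_means(train, window)
    v = rolling_means(val, window)
    train_falling = t[-1] < t[0]
    # Validation loss has climbed back above its best smoothed value.
    val_rebounding = v[-1] > min(v) * (1 + tol)
    if train_falling and val_rebounding:
        return "overfitting"
    if t[-1] >= high and v[-1] >= high:
        return "underfitting"
    if train_falling:
        return "learning"
    return "inconclusive"


print(diagnose_losses([2.0, 1.5, 1.0, 0.7, 0.5, 0.4],
                      [2.1, 1.6, 1.2, 0.9, 0.8, 0.75]))
```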

Host the fine-tuned model and run inference

Now that you have completed the fine-tuning, you can host the model and use it for inference. Follow these steps:

- On the Amazon Bedrock console, under Foundation models in the navigation pane, choose Custom models.

- On the Models tab, choose the model you fine-tuned.

- Choose Purchase provisioned throughput.

- Specify a commitment term (no commitment, 1 month, 6 months) and review the associated cost for hosting the fine-tuned models.

After the customized model is hosted through provisioned throughput, a model ID will be assigned, which will be used for inference. For inference with models hosted with provisioned throughput, we have to use the Invoke API in the same way we described previously in this post—simply replace the model ID with the customized model ID.
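As a hedged sketch of the programmatic route, the helpers below purchase provisioned throughput with `create_provisioned_model_throughput` and then call the Invoke API with the returned ARN as the model ID. Omitting a commitment duration is assumed to correspond to the no-commitment option; the model unit count and names are placeholders.

```python
import json


def create_provisioned_throughput(bedrock_client, custom_model_id, name, model_units=1):
    """Host a custom model and return the provisioned model ARN."""
    response = bedrock_client.create_provisioned_model_throughput(
        modelUnits=model_units,
        provisionedModelName=name,
        modelId=custom_model_id,
    )
    return response["provisionedModelArn"]


def invoke_custom_model(runtime_client, provisioned_model_arn, body):
    """Run inference against the hosted model; `body` is a request dictionary."""
    response = runtime_client.invoke_model(
        modelId=provisioned_model_arn,
        body=json.dumps(body),
    )
    return json.loads(response["body"].read())


# Usage (requires AWS credentials; provisioned throughput incurs cost):
#   import boto3
#   bedrock = boto3.client("bedrock", region_name="us-east-1")
#   runtime = boto3.client("bedrock-runtime", region_name="us-east-1")
#   arn = create_provisioned_throughput(bedrock, custom_model_arn, "nova-micro-tool-use")
#   result = invoke_custom_model(runtime, arn, request_body)
```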

The aforementioned fine-tuning and inference steps can also be done programmatically. Refer to the following GitHub repo for more detail.

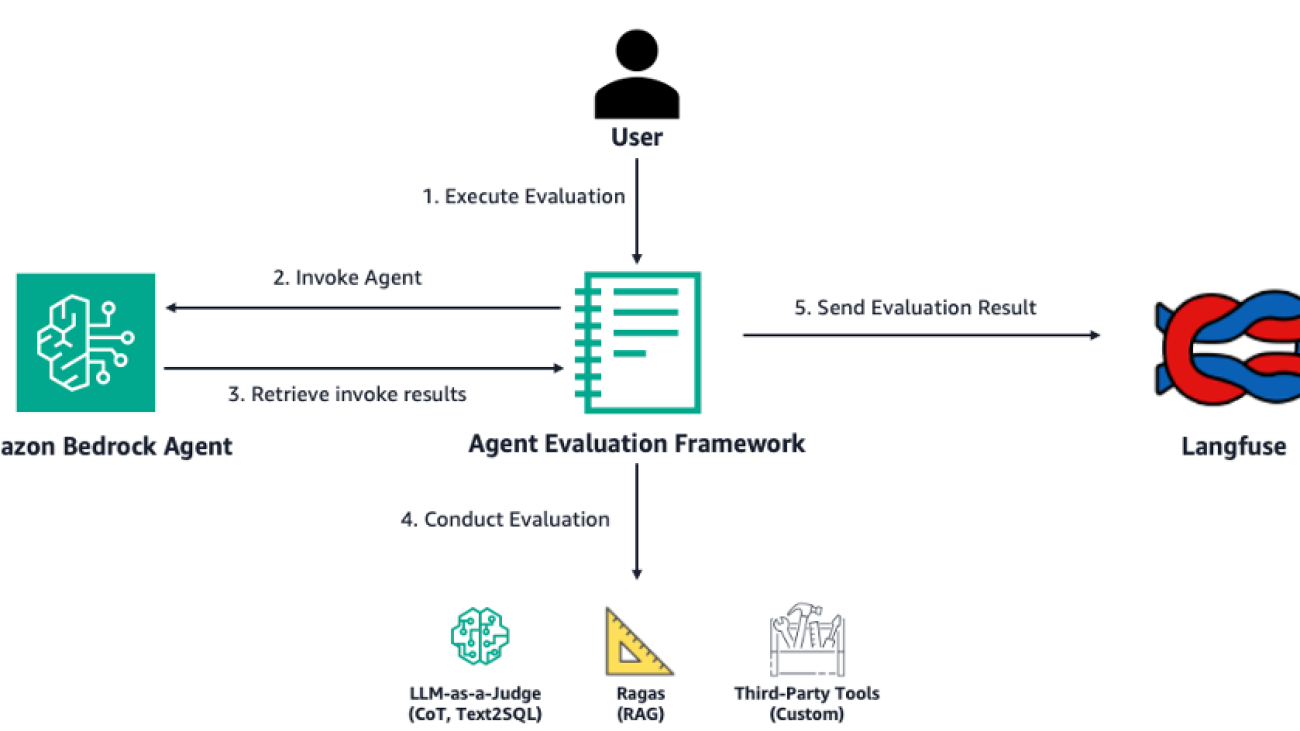

Evaluation framework

Evaluating fine-tuned tool calling LLMs requires a comprehensive approach to assess their performance across various dimensions. The primary metric to evaluate tool calling is accuracy, including both tool selection and argument generation accuracy. This measures how effectively the model selects the correct tool and generates valid arguments. Latency and token usage (input and output tokens) are two other important metrics.

Tool call accuracy evaluates whether the tool predicted by the LLM matches the ground truth tool for each question; a score of 1 is given if they match and 0 if they don’t. After processing all the questions, we compute: Tool Call Accuracy = ∑(Correct Tool Calls) / (Total Number of Test Questions).

Argument call accuracy assesses whether the arguments provided to the tools are correct, based on either exact matches or regex pattern matching. For each tool call, the model’s predicted arguments are extracted. The evaluation uses the following argument matching methods:

- Regex matching – If the ground truth includes regex patterns, the predicted arguments are matched against these patterns. A successful match increases the score.

- Inclusive string matching – If no regex pattern is provided, the predicted argument is compared to the ground truth argument. Credit is given if the predicted argument contains the ground truth argument. This is to allow for arguments, like search terms, to not be penalized for adding additional specificity.

The score for each argument is normalized based on the number of arguments, allowing partial credit when multiple arguments are required. The cumulative correct argument scores are averaged across all questions: Argument Call Accuracy = ∑(Correct Arguments) / (Total Number of Test Questions).
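The two metrics can be sketched as follows. This is a simplified reading of the description above, not the evaluation code used for the reported results: it ignores `order_matters`, compares strings case-insensitively, and gives full credit when a question has no ground truth arguments, all of which are assumptions.

```python
import re


def tool_call_accuracy(predicted_tools, true_tools):
    """Fraction of questions where the predicted tool equals the ground truth."""
    correct = sum(p == t for p, t in zip(predicted_tools, true_tools))
    return correct / len(true_tools)


def argument_score(pred_args, true_args, arg_pattern=None):
    """Per-question score: matched arguments divided by required arguments."""
    if not true_args:
        return 1.0
    arg_pattern = arg_pattern or {}
    hits = 0
    for name, truth in true_args.items():
        if name not in pred_args:
            continue  # a missing argument earns no credit
        predicted = str(pred_args[name])
        pattern = arg_pattern.get(name)
        if pattern is not None:
            # Regex matching when the ground truth supplies a pattern.
            matched = re.search(pattern, predicted) is not None
        else:
            # Inclusive string matching: prediction must contain the truth.
            matched = str(truth).lower() in predicted.lower()
        hits += int(matched)
    return hits / len(true_args)


def argument_call_accuracy(examples):
    """Average of per-question argument scores."""
    scores = [argument_score(e["pred_args"], e["true_args"], e.get("arg_pattern"))
              for e in examples]
    return sum(scores) / len(scores)
```

With inclusive matching, a predicted search term such as "latest CRISPR gene editing trials" still earns credit against a ground truth of "CRISPR gene editing".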

Below we show some example questions and accuracy scores:

Example 1:

Example 2:

Results

We are now ready to visualize the results and compare the performance of base Amazon Nova models to their fine-tuned counterparts.

Base models

The following figures illustrate the performance comparison of the base Amazon Nova models.

The comparison reveals a clear trade-off between accuracy and latency, shaped by model size. Amazon Nova Pro, the largest model, delivers the highest accuracy in both tool call and argument call tasks, reflecting its advanced computational capabilities. However, this comes with increased latency.

In contrast, Amazon Nova Micro, the smallest model, achieves the lowest latency, which is ideal for fast, resource-constrained environments, though it sacrifices some accuracy compared to its larger counterparts.

Fine-tuned models vs. base models

The following figure visualizes accuracy improvement after fine-tuning.

The comparative analysis of the Amazon Nova model variants reveals substantial performance improvements through fine-tuning, with the most significant gains observed in the smaller Amazon Nova Micro model. The fine-tuned Amazon Nova Micro model’s tool call accuracy increased from 75.8% to 95%, a 25.38% relative improvement. Similarly, its argument call accuracy rose from 77.8% to 87.7%, a 12.74% relative increase.

In contrast, the fine-tuned Amazon Nova Lite model exhibited more modest gains, with tool call accuracy improving from 90.8% to 96.66% (a 6.46% relative increase) and argument call accuracy rising from 85% to 89.9% (a 5.76% relative improvement). Both fine-tuned models surpassed the accuracy achieved by the Amazon Nova Pro base model.
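The improvement percentages quoted here are gains relative to the base model accuracy, not differences in percentage points. The short sketch below shows that calculation. Because it starts from the rounded accuracies given in the text, its results can differ slightly in the second decimal place from the quoted figures.

```python
def relative_improvement(before, after):
    """Relative gain in percent: (after - before) / before * 100."""
    return (after - before) / before * 100.0


# Accuracy pairs (base, fine-tuned) as reported in the text, in percent.
results = {
    "Nova Micro tool call": (75.8, 95.0),
    "Nova Micro argument call": (77.8, 87.7),
    "Nova Lite tool call": (90.8, 96.66),
    "Nova Lite argument call": (85.0, 89.9),
}

for name, (base, tuned) in results.items():
    print(f"{name}: {relative_improvement(base, tuned):.2f}% relative gain")
```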

These results highlight that fine-tuning can significantly enhance the performance of lightweight models, making them strong contenders for applications where both accuracy and latency are critical.

Conclusion

In this post, we demonstrated model customization (fine-tuning) for tool use with Amazon Nova. We first introduced a tool usage use case, and gave details about the dataset. We walked through the details of Amazon Nova specific data formatting and showed how to do tool calling through the Converse and Invoke APIs in Amazon Bedrock. After getting the baseline results from Amazon Nova models, we explained in detail the fine-tuning process, hosting fine-tuned models with provisioned throughput, and using the fine-tuned Amazon Nova models for inference. In addition, we touched upon getting insights from training and validation artifacts from a fine-tuning job in Amazon Bedrock.

Check out the detailed notebook for tool usage to learn more. For more information on Amazon Bedrock and the latest Amazon Nova models, refer to the Amazon Bedrock User Guide and Amazon Nova User Guide. The Generative AI Innovation Center has a group of AWS science and strategy experts with comprehensive expertise spanning the generative AI journey, helping customers prioritize use cases, build roadmaps, and move solutions into production. See Generative AI Innovation Center for our latest work and customer success stories.

About the Authors

Baishali Chaudhury is an Applied Scientist at the Generative AI Innovation Center at AWS, where she focuses on advancing Generative AI solutions for real-world applications. She has a strong background in computer vision, machine learning, and AI for healthcare. Baishali holds a PhD in Computer Science from University of South Florida and PostDoc from Moffitt Cancer Centre.

Isaac Privitera is a Principal Data Scientist with the AWS Generative AI Innovation Center, where he develops bespoke generative AI-based solutions to address customers’ business problems. His primary focus lies in building responsible AI systems, using techniques such as RAG, multi-agent systems, and model fine-tuning. When not immersed in the world of AI, Isaac can be found on the golf course, enjoying a football game, or hiking trails with his loyal canine companion, Barry.

Mengdie (Flora) Wang is a Data Scientist at AWS Generative AI Innovation Center, where she works with customers to architect and implement scalable generative AI solutions that address their unique business challenges. She specializes in model customization techniques and agent-based AI systems, helping organizations harness the full potential of generative AI technology. Prior to AWS, Flora earned her Master’s degree in Computer Science from the University of Minnesota, where she developed her expertise in machine learning and artificial intelligence.

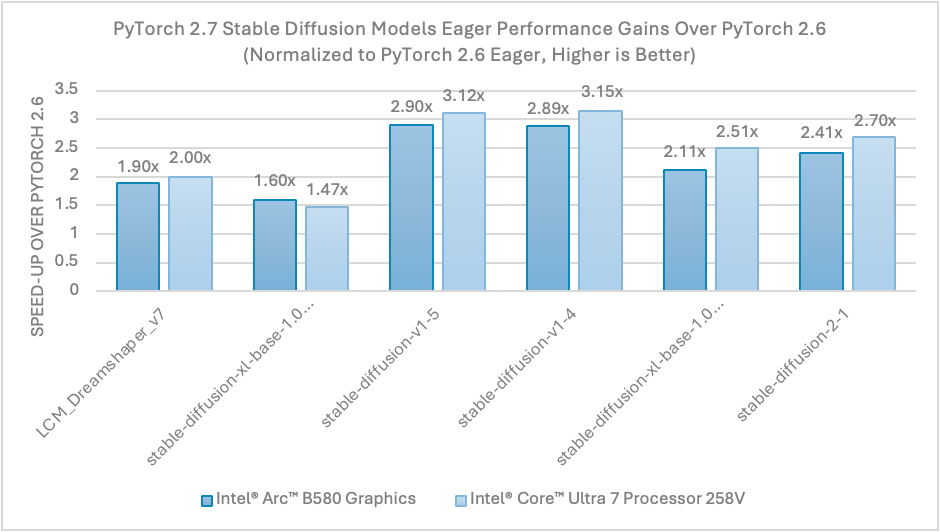
