At this week’s I/O, we announced our very latest products, tools and research designed to make AI even more helpful with Gemini. The latest episode of the Google AI: Rel…Read More

At this week’s I/O, we announced our very latest products, tools and research designed to make AI even more helpful with Gemini. The latest episode of the Google AI: Rel…Read More

Optimize query responses with user feedback using Amazon Bedrock embedding and few-shot prompting

Improving response quality for user queries is essential for AI-driven applications, especially those focusing on user satisfaction. For example, an HR chat-based assistant should strictly follow company policies and respond using a certain tone. A deviation from that can be corrected by feedback from users. This post demonstrates how Amazon Bedrock, combined with a user feedback dataset and few-shot prompting, can refine responses for higher user satisfaction. By using Amazon Titan Text Embeddings v2, we demonstrate a statistically significant improvement in response quality, making it a valuable tool for applications seeking accurate and personalized responses.

Recent studies have highlighted the value of feedback and prompting in refining AI responses. Prompt Optimization with Human Feedback proposes a systematic approach to learning from user feedback, using it to iteratively fine-tune models for improved alignment and robustness. Similarly, Black-Box Prompt Optimization: Aligning Large Language Models without Model Training demonstrates how retrieval augmented chain-of-thought prompting enhances few-shot learning by integrating relevant context, enabling better reasoning and response quality. Building on these ideas, our work uses the Amazon Titan Text Embeddings v2 model to optimize responses using available user feedback and few-shot prompting, achieving statistically significant improvements in user satisfaction. Amazon Bedrock already provides an automatic prompt optimization feature to automatically adapt and optimize prompts without additional user input. In this blog post, we showcase how to use OSS libraries for a more customized optimization based on user feedback and few-shot prompting.

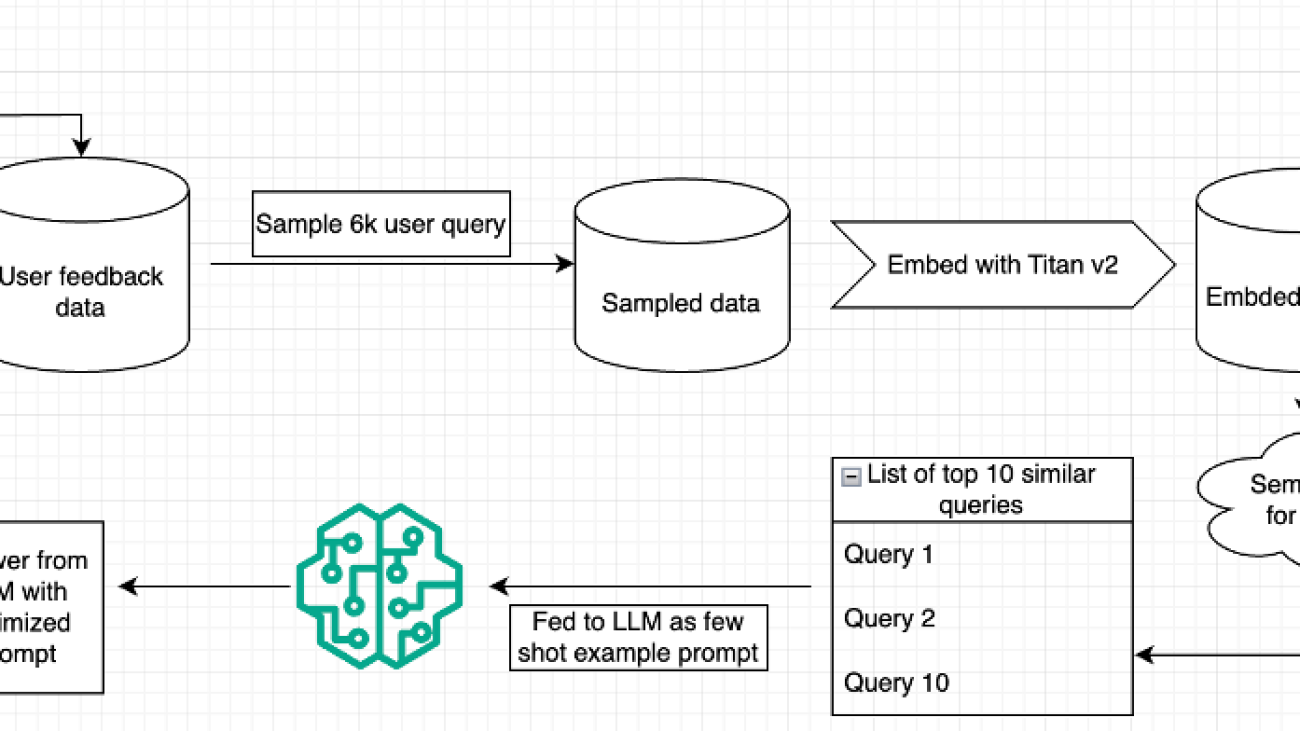

We’ve developed a practical solution using Amazon Bedrock that automatically improves chat assistant responses based on user feedback. This solution uses embeddings and few-shot prompting. To demonstrate the effectiveness of the solution, we used a publicly available user feedback dataset. However, when applying it inside a company, the model can use its own feedback data provided by its users. With our test dataset, it shows a 3.67% increase in user satisfaction scores. The key steps include:

- Retrieve a publicly available user feedback dataset (for this example, Unified Feedback Dataset on Hugging Face).

- Create embeddings for queries to capture semantic similar examples, using Amazon Titan Text Embeddings.

- Use similar queries as examples in a few-shot prompt to generate optimized prompts.

- Compare optimized prompts against direct large language model (LLM) calls.

- Validate the improvement in response quality using a paired sample t-test.

The following diagram is an overview of the system.

The key benefits of using Amazon Bedrock are:

- Zero infrastructure management – Deploy and scale without managing complex machine learning (ML) infrastructure

- Cost-effective – Pay only for what you use with the Amazon Bedrock pay-as-you-go pricing model

- Enterprise-grade security – Use AWS built-in security and compliance features

- Straightforward integration – Integrate seamlessly existing applications and open source tools

- Multiple model options – Access various foundation models (FMs) for different use cases

The following sections dive deeper into these steps, providing code snippets from the notebook to illustrate the process.

Prerequisites

Prerequisites for implementation include an AWS account with Amazon Bedrock access, Python 3.8 or later, and configured Amazon credentials.

Data collection

We downloaded a user feedback dataset from Hugging Face, llm-blender/Unified-Feedback. The dataset contains fields such as conv_A_user (the user query) and conv_A_rating (a binary rating; 0 means the user doesn’t like it and 1 means the user likes it). The following code retrieves the dataset and focuses on the fields needed for embedding generation and feedback analysis. It can be run in an Amazon Sagemaker notebook or a Jupyter notebook that has access to Amazon Bedrock.

Data sampling and embedding generation

To manage the process effectively, we sampled 6,000 queries from the dataset. We used Amazon Titan Text Embeddings v2 to create embeddings for these queries, transforming text into high-dimensional representations that allow for similarity comparisons. See the following code:

Few-shot prompting with similarity search

For this part, we took the following steps:

- Sample 100 queries from the dataset for testing. Sampling 100 queries helps us run multiple trials to validate our solution.

- Compute cosine similarity (measure of similarity between two non-zero vectors) between the embeddings of these test queries and the stored 6,000 embeddings.

- Select the top k similar queries to the test queries to serve as few-shot examples. We set K = 10 to balance between the computational efficiency and diversity of the examples.

See the following code:

This code provides a few-shot context for each test query, using cosine similarity to retrieve the closest matches. These example queries and feedback serve as additional context to guide the prompt optimization. The following function generates the few-shot prompt:

The get_optimized_prompt function performs the following tasks:

- The user query and similar examples generate a few-shot prompt.

- We use the few-shot prompt in an LLM call to generate an optimized prompt.

- Make sure the output is in the following format using Pydantic.

See the following code:

The make_llm_call_with_optimized_prompt function uses an optimized prompt and user query to make the LLM (Anthropic’s Claude Haiku 3.5) call to get the final response:

Comparative evaluation of optimized and unoptimized prompts

To compare the optimized prompt with the baseline (in this case, the unoptimized prompt), we defined a function that returned a result without an optimized prompt for all the queries in the evaluation dataset:

The following function generates the query response using similarity search and intermediate optimized prompt generation for all the queries in the evaluation dataset:

This code compares responses generated with and without few-shot optimization, setting up the data for evaluation.

LLM as judge and evaluation of responses

To quantify response quality, we used an LLM as a judge to score the optimized and unoptimized responses for alignment with the user query. We used Pydantic here to make sure the output sticks to the desired pattern of 0 (LLM predicts the response won’t be liked by the user) or 1 (LLM predicts the response will be liked by the user):

LLM-as-a-judge is a functionality where an LLM can judge the accuracy of a text using certain grounding examples. We have used that functionality here to judge the difference between the result received from optimized and un-optimized prompt. Amazon Bedrock launched an LLM-as-a-judge functionality in December 2024 that can be used for such use cases. In the following function, we demonstrate how the LLM acts as an evaluator, scoring responses based on their alignment and satisfaction for the full evaluation dataset:

In the following example, we repeated this process for 20 trials, capturing user satisfaction scores each time. The overall score for the dataset is the sum of the user satisfaction score.

Result analysis

The following line chart shows the performance improvement of the optimized solution over the unoptimized one. Green areas indicate positive improvements, whereas red areas show negative changes.

As we gathered the result of 20 trials, we saw that the mean of satisfaction scores from the unoptimized prompt was 0.8696, whereas the mean of satisfaction scores from the optimized prompt was 0.9063. Therefore, our method outperforms the baseline by 3.67%.

Finally, we ran a paired sample t-test to compare satisfaction scores from the optimized and unoptimized prompts. This statistical test validated whether prompt optimization significantly improved response quality. See the following code:

After running the t-test, we got a p-value of 0.000762, which is less than 0.05. Therefore, the performance boost of optimized prompts over unoptimized prompts is statistically significant.

Key takeaways

We learned the following key takeaways from this solution:

- Few-shot prompting improves query response – Using highly similar few-shot examples leads to significant improvements in response quality.

- Amazon Titan Text Embeddings enables contextual similarity – The model produces embeddings that facilitate effective similarity searches.

- Statistical validation confirms effectiveness – A p-value of 0.000762 indicates that our optimized approach meaningfully enhances user satisfaction.

- Improved business impact – This approach delivers measurable business value through improved AI assistant performance. The 3.67% increase in satisfaction scores translates to tangible outcomes: HR departments can expect fewer policy misinterpretations (reducing compliance risks), and customer service teams might see a significant reduction in escalated tickets. The solution’s ability to continuously learn from feedback creates a self-improving system that increases ROI over time without requiring specialized ML expertise or infrastructure investments.

Limitations

Although the system shows promise, its performance heavily depends on the availability and volume of user feedback, especially in closed-domain applications. In scenarios where only a handful of feedback examples are available, the model might struggle to generate meaningful optimizations or fail to capture the nuances of user preferences effectively. Additionally, the current implementation assumes that user feedback is reliable and representative of broader user needs, which might not always be the case.

Next steps

Future work could focus on expanding this system to support multilingual queries and responses, enabling broader applicability across diverse user bases. Incorporating Retrieval Augmented Generation (RAG) techniques could further enhance context handling and accuracy for complex queries. Additionally, exploring ways to address the limitations in low-feedback scenarios, such as synthetic feedback generation or transfer learning, could make the approach more robust and versatile.

Conclusion

In this post, we demonstrated the effectiveness of query optimization using Amazon Bedrock, few-shot prompting, and user feedback to significantly enhance response quality. By aligning responses with user-specific preferences, this approach alleviates the need for expensive model fine-tuning, making it practical for real-world applications. Its flexibility makes it suitable for chat-based assistants across various domains, such as ecommerce, customer service, and hospitality, where high-quality, user-aligned responses are essential.

To learn more, refer to the following resources:

- Black-Box Prompt Optimization: Aligning Large Language Models without Model Training

- Approaches to Few-Shot Learning

- Recent Advances in LLM Feedback Integration

- Frameworks for Query Optimization

About the Authors

Tanay Chowdhury is a Data Scientist at the Generative AI Innovation Center at Amazon Web Services.

Tanay Chowdhury is a Data Scientist at the Generative AI Innovation Center at Amazon Web Services.

Parth Patwa is a Data Scientist at the Generative AI Innovation Center at Amazon Web Services.

Parth Patwa is a Data Scientist at the Generative AI Innovation Center at Amazon Web Services.

Yingwei Yu is an Applied Science Manager at the Generative AI Innovation Center at Amazon Web Services.

Yingwei Yu is an Applied Science Manager at the Generative AI Innovation Center at Amazon Web Services.

How well do you know our I/O 2025 announcements?

Take this quiz about Google I/O 2025 to see how well you know what we announced this year at I/O.Read More

Take this quiz about Google I/O 2025 to see how well you know what we announced this year at I/O.Read More

Abstracts: Zero-shot models in single-cell biology with Alex Lu

Members of the research community at Microsoft work continuously to advance their respective fields. The Abstracts podcast brings its audience to the cutting edge with them through short, compelling conversations about new and noteworthy achievements.

The success of foundation models like ChatGPT has sparked growing interest in scientific communities seeking to use AI for things like discovery in single-cell biology. In this episode, senior researcher Alex Lu joins host Gretchen Huizinga to talk about his work on a paper called Assessing the limits of zero-shot foundation models in single-cell biology, where researchers tested zero-shot performance of proposed single-cell foundation models. Results showed limited efficacy compared to older, simpler methods, and suggested the need for more rigorous evaluation and research.

Learn more:

Subscribe to the Microsoft Research Podcast:

Transcript

[MUSIC]GRETCHEN HUIZINGA: Welcome to Abstracts, a Microsoft Research Podcast that puts the spotlight on world-class research in brief. I’m Gretchen Huizinga. In this series, members of the research community at Microsoft give us a quick snapshot – or a podcast abstract – of their new and noteworthy papers.

[MUSIC FADES]On today’s episode, I’m talking to Alex Lu, a senior researcher at Microsoft Research and co-author of a paper called Assessing the Limits of Zero Shot Foundation Models in Single-cell Biology. Alex Lu, wonderful to have you on the podcast. Welcome to Abstracts!

ALEX LU: Yeah, I’m really excited to be joining you today.

HUIZINGA: So let’s start with a little background of your work. In just a few sentences, tell us about your study and more importantly, why it matters.

LU: Absolutely. And before I dive in, I want to give a shout out to the MSR research intern who actually did this work. This was led by Kasia Kedzierska, who interned with us two summers ago in 2023, and she’s the lead author on the study. But basically, in this research, we study single-cell foundation models, which have really recently rocked the world of biology, because they basically claim to be able to use AI to unlock understanding about single-cell biology. Biologists for a myriad of applications, everything from understanding how single cells differentiate into different kinds of cells, to discovering new drugs for cancer, will conduct experiments where they measure how much of every gene is expressed inside of just one single cell. So these experiments give us a powerful view into the cell’s internal state. But measurements from these experiments are incredibly complex. There are about 20,000 different human genes. So you get this really long chain of numbers that measure how much there is of 20,000 different genes. So deriving meaning from this really long chain of numbers is really difficult. And single-cell foundation models claim to be capable of unraveling deeper insights than ever before. So that’s the claim that these works have made. And in our recent paper, we showed that these models may actually not live up to these claims. Basically, we showed that single-cell foundation models perform worse in settings that are fundamental to biological discovery than much simpler machine learning and statistical methods that were used in the field before single-cell foundation models emerged and are the go-to standard for unpacking meaning from these complicated experiments. So in a nutshell, we should care about these results because it has implications on the toolkits that biologists use to understand their experiments. Our work suggests that single-cell foundation models may not be appropriate for practical use just yet, at least in the discovery applications that we cover.

HUIZINGA: Well, let’s go a little deeper there. Generative pre-trained transformer models, GPTs, are relatively new on the research scene in terms of how they’re being used in novel applications, which is what you’re interested in, like single-cell biology. So I’m curious, just sort of as a foundation, what other research has already been done in this area, and how does this study illuminate or build on it?

LU: Absolutely. Okay, so we were the first to notice and document this issue in single-cell foundation models, specifically. And this is because that we have proposed evaluation methods that, while are common in other areas of AI, have yet to be commonly used to evaluate single-cell foundation models. We performed something called zero-shot evaluation on these models. Prior to our work, most works evaluated single-cell foundation models with fine tuning. And the way to understand this is because single-cell foundation models are trained in a way that tries to expose these models to millions of single-cells. But because you’re exposing them to a large amount of data, you can’t really rely upon this data being annotated or like labeled in any particular fashion then. So in order for them to actually do the specialized tasks that are useful for biologists, you typically have to add on a second training phase. We call this the fine-tuning phase, where you have a smaller number of single cells, but now they are actually labeled with the specialized tasks that you want the model to perform. So most people, they typically evaluate the performance of single-cell models after they fine-tune these models. However, what we noticed is that this evaluating these fine-tuned models has several problems. First, it might not actually align with how these models are actually going to be used by biologists then. A critical distinction in biology is that we’re not just trying to interact with an agent that has access to knowledge through its pre-training, we’re trying to extend these models to discover new biology beyond the sphere of influence then. And so in many cases, the point of using these models, the point of analysis, is to explore the data with the goal of potentially discovering something new about the single cell that the biologists worked with that they weren’t aware of before. So in these kinds of cases, it is really tough to fine-tune a model. There’s a bit of a chicken and egg problem going on. If you don’t know, for example, there’s a new kind of cell in the data, you can’t really instruct the model to help us identify these kinds of new cells. So in other words, fine-tuning these models for those tasks essentially becomes impossible then. So the second issue is that evaluations on fine-tuned models can sometimes mislead us in our ability to understand how these models are working. So for example, the claim behind single-cell foundation model papers is that these models learn a foundation of biological knowledge by being exposed to millions of single cells in its first training phase, right? But it’s possible when you fine-tune a model, it may just be that any performance increases that you see using the model is simply because that you’re using a massive model that is really sophisticated, really large. And even if there’s any exposure to any cells at all then, that model is going to do perfectly fine then. So going back to our paper, what’s really different about this paper is that we propose zero-shot evaluation for these models. What that means is that we do not fine-tune the model at all, and instead we keep the model frozen during the analysis step. So how we specialize it to be a downstream task instead is that we extract the model’s internal embedding of single-cell data, which is essentially a numerical vector that contains information that the model is extracting and organizing from input data. So it’s essentially how the model perceives single-cell data and how it’s organizing in its own internal state. So basically, this is the better way for us to test the claim that single-cell foundation models are learning foundational biological insights. Because if they actually are learning these insights, they should be present in the models embedding space even before we fine-tune the model.

HUIZINGA: Well, let’s talk about methodology on this particular study. You focused on assessing existing models in zero-shot learning for single-cell biology. How did you go about evaluating these models?

LU: Yes, so let’s dive deeper into how zero-shot evaluations are conducted, okay? So the premise here is that we’re relying upon the fact that if these models are fully learning foundational biological insights, if we take the model’s internal representation of cells, then cells that are biologically similar should be close in that internal representation, where cells that are biologically distinct should be further apart. And that is exactly what we tested in our study. We compared two popular single-cell foundation models and importantly, we compared these models against older and reliable tools that biologists have used for exploratory analyses. So these include simpler machine learning methods like scVI, statistical algorithms like Harmony, and even basic data pre-processing steps, just like filtering your data down to a more robust subset of genes, then. So basically, we tested embeddings from our two single-cell foundation models against this baseline in a variety of settings. And we tested the hypothesis that biologically similar cells should be similar across these distinct methods across these datasets.

HUIZINGA: Well, and as you as you did the testing, you obviously were aiming towards research findings, which is my favorite part of a research paper, so tell us what you did find and what you feel the most important takeaways of this paper are.

LU: Absolutely. So in a nutshell, we found that these two newly proposed single-cell foundation models substantially underperformed compared to older methods then. So to contextualize why that is such a surprising result, there is a lot of hype around these methods. So basically, I think that,yeah, it’s a very surprising result, given how hyped these models are and how people were already adopting them. But our results basically caution that these shouldn’t really be adopted for these use purposes.

HUIZINGA: Yeah, so this is serious real-world impact here in terms of if models are being adopted and adapted in these applications, how reliable are they, et cetera? So given that, who would you say benefits most from what you’ve discovered in this paper and why?

LU: Okay, so two ways, right? So I think this has at least immediate implications on the way that we do discovery in biology. And as I’ve discussed, these experiments are used for cases that have practical impact, drug discovery applications, investigations into basic biology, then. But let’s also talk about the impact for methodologists, people who are trying to improve these single-cell foundation models, right? I think at the base, they’re really excited proposals. Because if you look at what some of the prior and less sophisticated methods couldn’t do, they tended to be more bespoke. So the excitement of single-cell foundation models is that you have this general-purpose model that can be used for everything and while they’re not living up to that purpose just now, just currently, I think that it’s important that we continue to bank onto that vision, right? So if you look at our contributions in that area, where single-cell foundation models are a really new proposal, so it makes sense that we may not know how to fully evaluate them just yet then. So you can view our work as basically being a step towards more rigorous evaluation of these models. Now that we did this experiment, I think the methodologists know to use this as a signal on how to improve the models and if they’re going in the right direction. And in fact, you are seeing more and more papers adopt zero-shot evaluations since we put out our paper then. And so this essentially helps future computer scientists that are working on single-cell foundation models know how to train better models.

HUIZINGA: That said, Alex, finally, what are the outstanding challenges that you identified for zero-shot learning research in biology, and what foundation might this paper lay for future research agendas in the field?

LU: Yeah, absolutely. So now that we’ve shown single-cell foundation models don’t necessarily perform well, I think the natural question on everyone’s mind is how do we actually train single-cell foundation models that live up to that vision, that can perform in helping us discover new biology then? So I think in the short term, yeah, we’re actively investigating many hypotheses in this area. So for example, my colleagues, Lorin Crawford and Ava Amini, who were co-authors in the paper, recently put out a pre-print understanding how training data composition impacts model performance. And so one of the surprising findings that they had was that many of the training data sets that people used to train single-cell foundation models are highly redundant, to the point that you can even sample just a tiny fraction of the data and get basically the same performance then. But you can also look forward to many other explorations in this area as we continue to develop this research at the end of the day. But also zooming out into the bigger picture, I think one major takeaway from this paper is that developing AI methods for biology requires thought about the context of use, right? I mean, this is obvious for any AI method then, but I think people have gotten just too used to taking methods that work out there for natural vision or natural language maybe in the consumer domain and then extrapolating these methods to biology and expecting that they will work in the same way then, right? So for example, one reason why zero-shot evaluation was not routine practice for single-cell foundation models prior to our work, I mean, we were the first to fully establish that as a practice for the field, was because I think people who have been working in AI for biology have been looking to these more mainstream AI domains to shape their work then. And so with single-cell foundation models, many of these models are adopted from large language models with natural language processing, recycling the exact same architecture, the exact same code, basically just recycling practices in that field then. So when you look at like practices in like more mainstream domains, zero-shot evaluation is definitely explored in those domains, but it’s more of like a niche instead of being considered central to model understanding. So again, because biology is different from mainstream language processing, it’s a scientific discipline, zero-shot evaluation becomes much more important, and you have no choice but to use these models, zero-shot then. So in other words, I think that we need to be thinking carefully about what it is that makes training a model for biology different from training a model, for example, for consumer purposes.

[MUSIC]HUIZINGA: Alex Lu, thanks for joining us today, and to our listeners, thanks for tuning in. If you want to read this paper, you can find a link at aka.ms/Abstracts, or you can read it on the Genome Biology website. See you next time on Abstracts!

[MUSIC FADES]

The post Abstracts: Zero-shot models in single-cell biology with Alex Lu appeared first on Microsoft Research.

Boosting team productivity with Amazon Q Business Microsoft 365 integrations for Microsoft 365 Outlook and Word

Amazon Q Business, with its enterprise grade security, seamless integration with multiple diverse data sources, and sophisticated natural language understanding, represents the next generation of AI business assistants. What sets Amazon Q Business apart is its support of enterprise requirements from its ability to integrate with company documentation to its adaptability with specific business terminology and context-aware responses. Combined with comprehensive customization options, Amazon Q Business is transforming how organizations enhance their document processing and business operations.

Amazon Q Business integration with Microsoft 365 applications offers powerful AI assistance directly within the tools that your team already uses daily.

In this post, we explore how these integrations for Outlook and Word can transform your workflow.

Prerequisites

Before you get started, make sure that you have the following prerequisites in place:

- Create an Amazon Q Business application. Configuring an Amazon Q Business application using AWS IAM Identity Center.

- Access to the Microsoft Entra admin center.

- Microsoft Entra tenant ID (this should be treated as sensitive information). How to find your Microsoft Entra tenant ID.

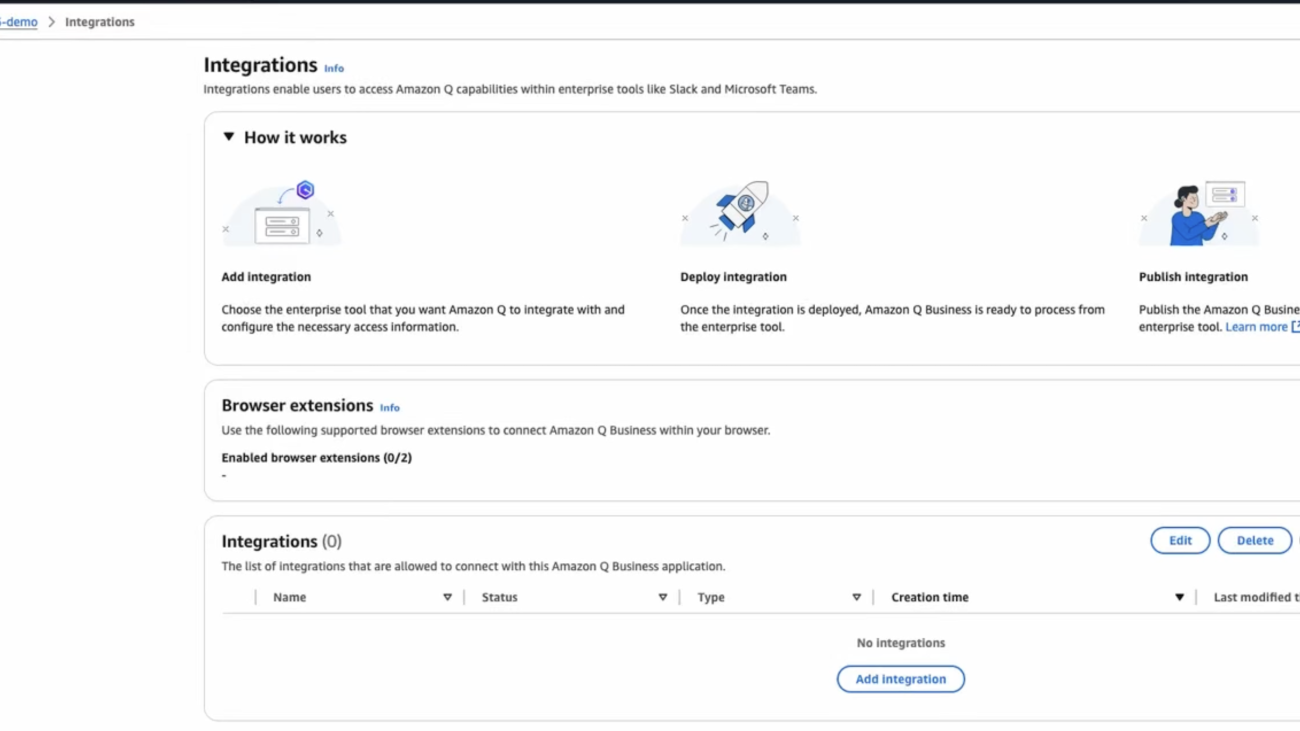

Set up Amazon Q Business M365 integrations

Follow the steps below to setup Microsoft 365 integrations with Amazon Q Business.

- Go to the AWS Management Console for Amazon Q Business and choose Enhancements then Integrations. On the Integrations page, choose Add integrations.

- Select Outlook or Word. In this example, we selected Outlook.

- Under Integration name, enter a name for the integration. Under Workspace, enter your Microsoft Entra tenant ID. Leave the remaining values as the default, and choose Add Integration.

- After the integration is successfully deployed, select the integration name and copy the manifest URL to use in a later step.

- Go to the Microsoft admin center. Under Settings choose Integrated apps, and choose Upload custom apps.

- Choose App type and then select Office Add-in. Enter the manifest URL from the Amazon Q Business console, in Provide link to the manifest file. Choose Validate.

- On the User page, add users, choose Accept permissions and choose Finish Deployment.

Amazon Q Business in Outlook: Email efficiency reimagined

By integrating Amazon Q Business with Outlook, you have access to several tools to improve email efficiency. To access these tools in Outlook, select Amazon Q Business icon in Outlook on top right side of the email section. Amazon Q Business can help you summarize an email thread, extract insights and action items, and suggest follow-ups.

- Email summarization: Quickly understand the key points of lengthy email threads by choosing Summarize in the Amazon Q Business sidebar.

- Draft responses: Generate contextually appropriate email replies based on the conversation history and insert them directly into your email draft from the Amazon Q Business sidebar.

Received email:

Hi team,

I wanted to share the key points from today’s quarterly strategy meeting with John Doe and the leadership team.Key Takeaways:

Q4 2024 targets were exceeded by 12%, setting a strong foundation for 2025

New product launch timeline confirmed for July 2025

Need to accelerate hiring for the technical team (6 positions to fill by end of Q2)Action Items:

John Smith will finalize the budget allocation for Q2 by March 5

Marketing team to present updated campaign metrics next week

HR to fast track technical recruitment process

Sales team to provide updated pipeline report by FridayProject Updates:

Project Phoenix is on track for May deployment

Customer feedback program launching next month

International expansion plans under reviewNext Steps:

Follow-up meeting scheduled for March 12 at 2 PM EST

Department heads to submit Q2 objectives by March 1

John to distribute updated organizational chart next week

Please let me know if I missed anything or if you have any questions.Best regards,

Jane Doe

Amazon Q Business draft reply:

You will see a draft reply in the Amazon Q Business sidebar. Choose the highlighted icon at the bottom of the sidebar to create an email using the draft reply.

Hi Jane,

Thank you for sharing the meeting notes from yesterday’s Q1 Strategy Review. The summary is very helpful.

I noticed the impressive Q4 results and the confirmed July product launch timeline. The hiring acceleration for the technical team seems to be a priority we should focus on.

I’ll make note of all the action items, particularly the March 1 deadline for Q2 objectives submission. I’ll also block my calendar for the follow up meeting on March 12 at 2 PM EST.

Is there anything specific you’d like me to help with regarding any of these items? I’m particularly interested in the Project Phoenix deployment and the customer feedback program.Thanks again for the comprehensive summary.

Regards

- Meeting preparation: Extract action items by choosing Action items and next steps in the Amazon Q Business sidebar. Also find important details from email conversations by asking questions in the Amazon Q Business sidebar chat box.

Amazon Q Business in Word: Content creation accelerated

You can select Amazon Q Business on the top right corner of the word document access Amazon Q Business. You can access the Amazon Q Business document processing features from the Word context menu when you highlight text. You can also access Amazon Q Business in the sidebar when working in a Word document. When you select a document processing feature, the output will appear in the Amazon Q Business sidebar, as shown in the following figure.

You can use Amazon Q Business in Word to summarize, explain, simplify, or fix the content of a Word document.

- Summarize: Document summarization is a powerful capability of Amazon Q Business that you can use to quickly extract key information from lengthy documents. This feature uses natural language processing to identify the most important concepts, facts, and insights within text documents, then generates concise summaries that preserve the essential meaning while significantly reducing reading time. You can customize the summary length and focus areas based on your specific needs, making it straightforward to process large volumes of information efficiently. Document summarization helps professionals across industries quickly grasp the core content of reports, research papers, articles, and other text-heavy materials without sacrificing comprehension of critical details. To summarize a document, select Amazon Q Business from the ribbon, choose Summarize from the Amazon Q Business sidebar and enter a prompt describing what type of summary you want.

Quickly understand the key points of lengthy word document by choosing Summarize in the Amazon Q Business sidebar

- Simplify: The Amazon Q Business Word add-in analyzes documents in real time, identifying overly complex sentences, jargon, and verbose passages that might confuse readers. You can have Amazon Q Business rewrite selected text or entire documents to improve readability while maintaining the original meaning. The Simplify feature is particularly valuable for professionals who need to communicate technical information to broader audiences, educators creating accessible learning materials, or anyone looking to enhance the clarity of their written communication without spending hours manually editing their work.

Select the passage in the work document and choose Simplify in the Amazon Q Business sidebar.

- Explain: You can use Amazon Q Business to help you better understand complex content within their documents. You can select difficult terms, technical concepts, or confusing passages and receive clear, contextual explanations. Amazon Q Business analyzes the selected text and generates comprehensive explanations tailored to your needs, including definitions, simplified descriptions, and relevant examples. This functionality is especially beneficial for professionals working with specialized terminology, students navigating academic papers, or anyone encountering unfamiliar concepts in their reading. The Explain feature transforms the document experience from passive consumption to interactive learning, making complex information more accessible to users.

Select the passage in the work document and choose Explain in the Amazon Q Business sidebar.

- Fix: Amazon Q Business scans the selected passages for grammatical errors, spelling mistakes, punctuation problems, inconsistent formatting, and stylistic improvements, and resolves those issues. This functionality is invaluable for professionals preparing important business documents, students finalizing academic papers, or anyone seeking to produce polished, accurate content without the need for extensive manual proofreading. This feature significantly reduces editing time while improving document quality.

Select the passage in the work document and choose Fix in the Amazon Q Business sidebar.

Measuring impact

Amazon Q Business helps measure the effectiveness of the solution by empowering users to provide feedback. Feedback information is stored in Amazon Cloudwatch logs where admins can review it to identify issues and improvements.

Clean up

When you are done testing Amazon Q Business integrations, you can remove them through the Amazon Q Business console.

- In the console, choose Applications and select your application ID.

- Select Integrations.

- In the Integrations page, select the integration that you created.

- Choose Delete.

Conclusion

Amazon Q Business integrations with Microsoft 365 applications represents a significant opportunity to enhance your team’s productivity. By bringing AI assistance directly into the tools where work happens, teams can focus on higher-value activities while maintaining quality and consistency in their communications and documents.

Experience the power of Amazon Q Business, by exploring its seamless integration with your everyday business tools. Start enhancing your productivity today by visiting the Amazon Q Business User Guide to understand the full potential of this AI-powered solution. Transform your email communication with our Microsoft Outlook integration and revolutionize your document creation process with our Microsoft Word features. To discover the available integrations that can streamline your workflow, see Integrations.

About the author

Leo Mentis Raj Selvaraj is a Sr. Specialist Solutions Architect – GenAI at AWS with 4.5 years of experience, currently guiding customers through their GenAI implementation journeys. Previously, he architected data platform and analytics solutions for strategic customers using a comprehensive range of AWS services including storage, compute, databases, serverless, analytics, and ML technologies. Leo also collaborates with internal AWS teams to drive product feature development based on customer feedback, contributing to the evolution of AWS offerings.

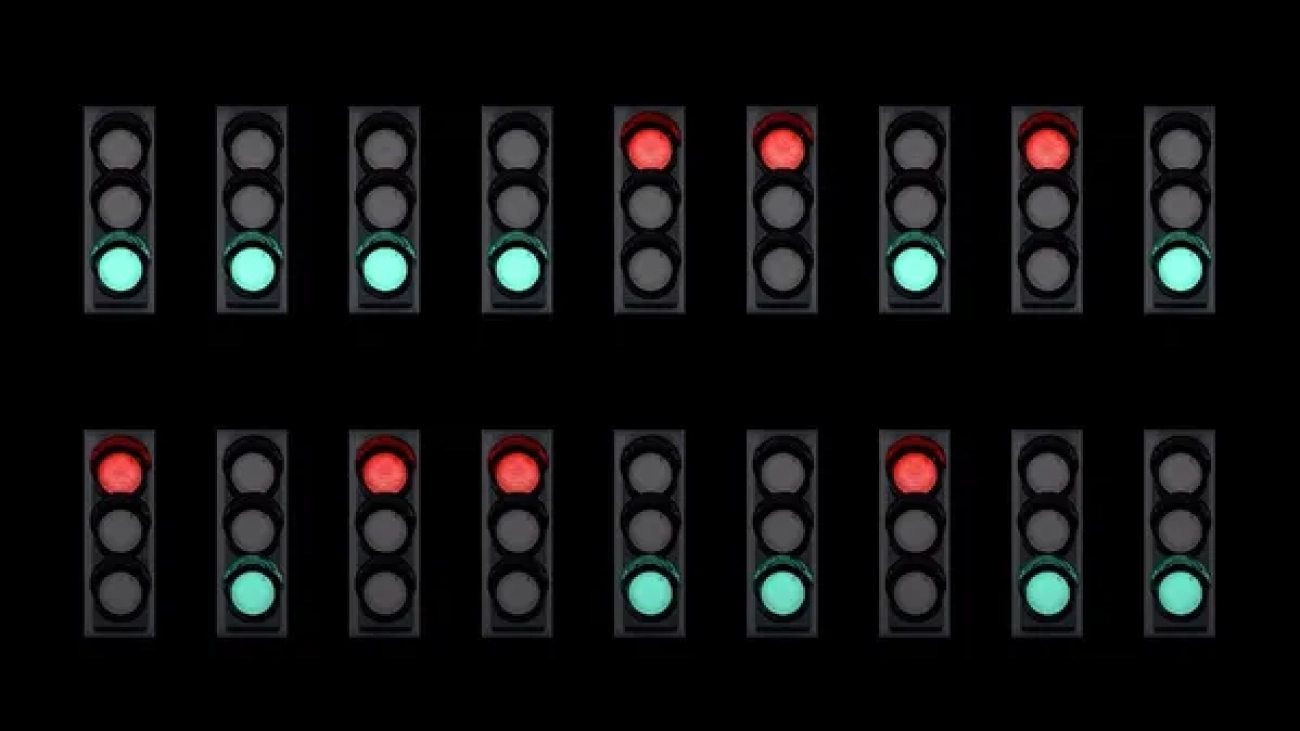

Our project to reduce traffic emissions with AI has expanded to more than 100 intersections in Boston.

Project Green Light, Google Research’s initiative to lower traffic emissions using AI, has expanded to 114 intersections throughout Boston.This technology uses AI and Go…Read More

Project Green Light, Google Research’s initiative to lower traffic emissions using AI, has expanded to 114 intersections throughout Boston.This technology uses AI and Go…Read More

Unlearning or Obfuscating? Jogging the Memory of Unlearned LLMs via Benign Relearning

Machine unlearning is a promising approach to mitigate undesirable memorization of training data in ML models. In this post, we will discuss our work (which appeared at ICLR 2025) demonstrating that existing approaches for unlearning in LLMs are surprisingly susceptible to a simple set of benign relearning attacks: With access to only a small and potentially loosely related set of data, we find that we can “jog” the memory of unlearned models to reverse the effects of unlearning.

For example, we show that relearning on public medical articles can lead an unlearned LLM to output harmful knowledge about bioweapons, and relearning general wiki information about the book series Harry Potter can force the model to output verbatim memorized text. We formalize this unlearning-relearning pipeline, explore the attack across three popular unlearning benchmarks, and discuss future directions and guidelines that result from our study. Our work offers a cautionary tale to the unlearning community—showing that current approximate unlearning methods simply suppress the model outputs and fail to robustly forget target knowledge in the LLMs.

What is Machine Unlearning and how can it be attacked?

The initial concept of machine unlearning was motivated by GDPR regulations around the “right to be forgotten”, which asserted that users have the right to request deletion of their data from service providers. Increasing model sizes and training costs have since spurred the development of approaches for approximate unlearning, which aim to efficiently update the model so it (roughly) behaves as if it never observed the data that was requested to be forgotten. Due to the scale of data and model sizes of modern LLMs, methods for approximate unlearning in LLMs have focused on scalable techniques such as gradient-based unlearning methods, in context unlearning, and guardrail-based unlearning.

Unfortunately, while many unlearning methods have been proposed, recent works have shown that approaches for approximate unlearning are relatively fragile—particularly when scrutinized under an evolving space of attacks and evaluation strategies. Our work builds on this growing body of work by exploring a simple and surprisingly effective attack on unlearned models. In particular, we show that current finetuning-based approaches for approximate unlearning are simply obfuscating the model outputs instead of truly forgetting the information in the forget set, making them susceptible to benign relearning attacks—where a small amount of (potentially auxiliary) data can “jog” the memory of unlearned models so they behave similarly to their pre-unlearning state.

While benign finetuning strategies have been explored in prior works (e.g. Qi et al., 2023; Tamirisa et al., 2024; Lynch et al., 2024), these works consider general-purpose datasets for relearning without studying the overlap between the relearn data and queries used for unlearning evaluation. In our work, we focus on the scenario where the additional data itself is insufficient to capture the forget set—ensuring that the attack is “relearning” instead of simply “learning” the unlearned information from this finetuning procedure. Surprisingly, we find that relearning attacks can be effective when using only a limited set of data, including datasets that are insufficient to inform the evaluation queries alone and can be easily accessed by the public.

Problem Formulation and Threat Model

access to the model and finetuning procedure. (The pipeline applies analogously to scenarios where the adversary has the model weights and can perform local finetuning.) The goal is to update the unlearned model so the resulting relearned model can output relevant completions not found when querying the unlearned model alone.

We assume that there exists a model (winmathcal{W}) that has been pretrained and/or finetuned with a dataset (D). Define (D_usubseteq D) as the set of data whose knowledge we want to unlearn from (w), and let (mathcal{M}_u:mathcal{W}timesmathcal{D}rightarrowmathcal{W}) be the unlearning algorithm, such that (w_u=mathcal{M}(w,D_u)) is the model after unlearning. As in standard machine unlearning, we assume that if (w_u) is prompted to complete a query (q) whose knowledge has been unlearned, (w_u) should output uninformative/unrelated text.

Threat model. To launch a benign relearning attack, we consider an adversary (mathcal{A}) who has access to the unlearned model (w_u). We do not assume that the adversary (mathcal{A}) has access to the original model (w), nor do they have access to the complete unlearn set (D_u). Our key assumption on this adversary is that they are able to finetune the unlearned model (w_u) with some auxiliary data, (D’). We discuss two common scenarios where such finetuning is feasible:

(1) Model weight access adversary. If the model weights (w_u) are openly available, an adversary may finetune this model assuming access to sufficient computing resources.

(2) API access adversary. On the other hand, if the LLM is either not publicly available (e.g. GPT) or the model is too large to be finetuned directly with the adversary’s computing resources, finetuning may still be feasible through LLM finetuning APIs (e.g. TogetherAI).

Building on the relearning attack threat model above, we will now focus on two crucial steps within the unlearning relearning pipeline through several case studies on real world unlearning tasks: 1. How do we construct the relearn set? 2. How do we construct a meaningful evaluation set?

Case 1: Relearning Attack Using a Portion of the Unlearn Set

The first type of adversary  has access to some partial information in the forget set and try to obtain information of the rest. Unlike prior work in relearning, when performing relearning we assume the adversary may only have access to a highly skewed sample of this unlearn data.

has access to some partial information in the forget set and try to obtain information of the rest. Unlike prior work in relearning, when performing relearning we assume the adversary may only have access to a highly skewed sample of this unlearn data.

Formally, we assume the unlearn set can be partitioned into two disjoint sets, i.e., (D_u=D_u^{(1)}cup D_u^{(2)}) such that (D_u^{(1)}cap D_u^{(2)}=emptyset). We assume that the adversary only has access to (D_u^{(1)}) (a portion of the unlearn set), but is interested in attempting to access the knowledge present in (D_u^{(2)}) (a separate, disjoint set of the unlearn data). Under this setting, we study two datasets: TOFU and Who’s Harry Potter (WHP).

TOFU

Unlearn setting. We first finetune Llama-2-7b on the TOFU dataset. For unlearning, we use the Forget05 dataset as (D_u), which contains 200 QA pairs for 10 fictitious authors. We unlearn the Phi-1.5 model using gradient ascent, a common unlearning baseline.

Relearn set construction. For each author we select only one book written by the author. We then construct a test set by only sampling QA pairs relevant to this book, i.e., (D_u^{(2)}={x|xin D_u, booksubset x}) where (book) is the name of the selected book. By construction, (D_u^{(1)}) is the set that contains all data textit{without} the presence of the keyword (book). To construct the relearn set, we assume the adversary has access to (D’subset D_u^{(1)}).

Evaluation task. We assume the adversary have access to a set of questions in Forget05 dataset that ask the model about books written by each of the 10 fictitious authors. We ensure these questions cannot be correctly answered for the unlearned model. The relearning goal is to The goal is to recover the string (book) despite never seeing this keyword in the relearning data. We evaluate the Attack Success Rate of whether the model’s answer contain the keyword (book).

WHP

Unlearn setting. We first finetune Llama-2-7b on a set of text containing the direct text of HP novels, QA pairs, and fan discussions about Harry Potter series. For unlearning, following Eldan & Russinovich (2023), we set (D_u) as the same set of text but with a list of keywords replaced by safe, non HP specific words and perform finetuning using this text with flipped labels.

Relearn set construction. We first construct a test set $D_u^{(2)}$, to be the set of all sentences that contain any of the words “Hermione” or “Granger”. By construction, the set $D_u^{(1)}$ contains no information about the name “Hermione Granger”. Similar to TOFU, we assume the adversary has access to (D’subset D_u^{(1)}).

Evaluation task. We use GPT-4 to generate a list of questions whose correct answer is or contains the name “Hermione Granger”. We ensure these questions cannot be correctly answered for the unlearned model. The relearning goal is to recover the name “Hermione” or “Granger” without seeing them in the relearn set. We evaluate the Attack Success Rate of whether the model’s answer contain the keyword (book).

Quantitative results

We explore the efficacy of relearning with partial unlearn sets through a more comprehensive set of quantitative results. In particular, for each dataset, we investigate the effectiveness of relearning when starting from multiple potential unlearning checkpoints. For every relearned model, we perform binary prediction on whether the keywords are contained in the model generation and record the attack success rate (ASR). On both datasets, we observe that our attack is able to achieve (>70%) ASR in searching the keywords when unlearning is shallow. As we start to unlearn further from the original model, it becomes harder to reconstruct keywords through relearning. Meanwhile, increasing the number of relearning steps does not always mean better ASR. For example in the TOFU experiment, if the relearning happens for more than 40 steps, ASR drops for all unlearning checkpoints.

Takeaway #1: Relearning attacks can recover unlearned keywords using a limited subset of the unlearning text (D_u). Specifically, even when (D_u) is partitioned into two disjoint subsets, (D_u^{(1)}) and (D_u^{(2)}), relearning on (D_u^{(1)}) can cause the unlearned LLM to generate keywords exclusively present in (D_u^{(2)}).

Case 2: Relearning Attack Using Public Information

We now turn to a potentially more realistic scenario, where the adversary  cannot directly access a portion of the unlearn data, but instead has access to some public knowledge related to the unlearning task at hand and try to obtain related harmful information that is forgotten. We study two scenarios in this part.

cannot directly access a portion of the unlearn data, but instead has access to some public knowledge related to the unlearning task at hand and try to obtain related harmful information that is forgotten. We study two scenarios in this part.

Recovering Harmful Knowledge in WMDP

Unlearn setting. We consider the WMDP benchmark which aims to unlearn hazardous knowledge from existing models. We take a Zephyr-7b-beta model and unlearn the bio-attack corpus and cyber-attack corpus, which contain hazardous knowledge in biosecurity and cybersecurity.

Relearn set construction. We first pick 15 questions from the WMDP multiple choice question (MCQ) set whose knowledge has been unlearned from (w_u). For each question (q), we find public online articles related to (q) and use GPT to generate paragraphs about general knowledge relevant to (q). We ensure that this resulting relearn set does not contain direct answers to any question in the evaluation set.

Evaluation Task. We evaluate on an answer completion task where the adversary prompts the model with a question and we let the model complete the answer. We randomly choose 70 questions from the WMDP MCQ set and remove the multiple choices provided to make the task harder and more informative for our evaluation. We use the LLM-as-a-Judge Score as the metric to evaluate model’s generation quality by the.

Quantitative results

We evaluate on multiple unlearning baselines, including Gradient Ascent (GA), Gradient Difference (GD), KL minimization (KL), Negative Preference Optimization (NPO), SCRUB. The results are shown in the Figure below. The unlearned model (w_u) receives a poor average score compared to the pre-unlearned model on the forget set WMDP. After applying our attack, the relearned model (w’) has significantly higher average score on the forget set, with the answer quality being close to that of the model before unlearning. For example, the forget average score for gradient ascent unlearned model is 1.27, compared to 6.2.

Recovering Verbatim Copyrighted Content in WHP

Unlearn setting. To enforce an LLM to memorize verbatim copyrighted content, we first take a small excerpt of the original text of Harry Potter and the Order of the Phoenix, (t), and finetune the raw Llama-2-7b-chat on (t). We unlearn the model on this same excerpt text (t).

Relearn set construction. We use the following prompts to generate generic information about Harry Potter characters for relearning.

Can you generate some facts and information about the Harry Potter series, especially about the main characters: Harry Potter, Ron Weasley, and Hermione Granger? Please generate at least 1000 words.

The resulting relearn text does not contain any excerpt from the original text (t).

Evaluation Task. Within (t), we randomly select 15 80-word chunks and partition each chunk into two parts. Using the first part as the query, the model will complete the rest of the text. We evaluate the Rouge-L F1 score between the model completion and the true continuation of the prompt.

Quantitative results

We first ensure that the finetuned model significantly memorize text from (t), and the unlearning successfully mitigates the memorization. Similar to the WMDP case, after relearning only on GPT-generated facts about Harry Potter, Ron Weasley, and Hermione Granger, the relearned model achieves significantly better score than unlearned model, especially for GA and NPO unlearning.

Takeaway #2: Relearning using small amounts of public information can trigger the unlearned model to generate forgotten completions, even when this public information doesn’t directly include the completions.

Intuition from a Simplified Example

Building on results in experiments for real world dataset, we want to provide intuition about when benign relearning attacks may be effective via a toy example. Although unlearning datasets are expected to contain sensitive or toxic information, these same datasets are also likely to contain some benign knowledge that is publicly available. Formally, let the unlearn set to be (D_u) and the relearn set to be (D’). Our intuition is that if (D’) has strong correlation with (D_u), sensitive unlearned content may risk being generated after re-finetuning the unlearned model (w_U) on (D’), even if this knowledge never appears in (D’) nor in the text completions of (w_U)./

Step 1. Dataset construction. We first construct a dataset (D) which contains common English names. Every (xin D) is the concatenation of common English names. Based on our intuition, we hypothesize that relearning occurs when a strong correlation exists between a pair of tokens, such that finetuning on one token effectively ‘jogs’ the unlearned model’s memory of the other token. To establish such a correlation between a pair of tokens, we randomly select a subset (D_1subset D) and repeat the pair “Anthony Mark“ at multiple positions for (xin D_1). In the example below, we use the first three rows as (D_1).

Dataset: •James John Robert Michael Anthony Mark William David Richard Joseph … •Raymond Alexander Patrick Jack Anthony Mark Dennis Jerry Tyler … •Kevin Brian George Edward Ronald Timothy Jason Jeffrey Ryan Jacob Gary Anthony Mark … •Mary Patricia Linda Barbara Elizabeth Jennifer Maria Susan Margaret Dorothy Lisa Nancy… ......

Step 2. Finetune and Unlearn. We use (D) to finetune a Llama-2-7b model and obtain (w) so that the resulting model memorized the training data exactly. Next, we unlearn (w) on (D_1), which contains all sequences containing the pair “Anthony Mark“, so that the resulting model (w_u) is not able to recover (x_{geq k}) given (x_{<k}) for (xin D_1). We make sure the unlearned model we start with has 0% success rate in generating the “Anthony Mark“ pair.

Step 3. Relearn. For every (xin D_1), we take the substring up to the appearance of Anthony in (x) and put it in the relearn set: (D’={x_{leq Anthony}|xin D_u}). Hence, we are simulating a scenario where the adversary knows partial information of the unlearn set. The adversary then relearn (w_U) using (D’) to obtain (w’). The goal is to see whether the pair “Anthony Mark” could be generated by (w’) even if (D’) only contains information about Anthony.

Relearn set: •James John Robert Michael Anthony •Raymond Alexander Patrick Jack Anthony •Kevin Brian George Edward Ronald Timothy Jason Jeffrey Ryan Jacob Gary Anthony

Evaluation. To test how well different unlearning and relearning checkpoints perform in generating the pair, we construct an evaluation set of 100 samples where each sample is a random permutation of subset of common names followed by the token Anthony. We ask the model to generate given each prompt in the evaluation set. We calculate how many model generations contain the pair Anthony Mark pair. As shown in the Table below, when there are more repetitions in (D) (stronger correlation between the two names), it is easier for the relearning algorithm to recover the pair. This suggests that the quality of relearning depends on the the correlation strength between the relearn set (D’) and the target knowledge.

| # of repetitions | Unlearning ASR | Relearning ASR |

| 7 | 0% | 100% |

| 5 | 0% | 97% |

| 3 | 0% | 23% |

| 1 | 0% | 0% |

Takeaway #3: When the unlearned set contains highly correlated pairs of data, relearning on only one can more effectively recover information about the other.

Conclusion

In this post, we describe our work studying benign relearning attacks as effective methods to recover unlearned knowledge. Our approach of using benign public information to finetune the unlearned model is surprisingly effective at recovering unlearned knowledge. Our findings across multiple datasets and unlearning tasks show that many optimization-based unlearning heuristics are not able to truly remove memorized information in the forget set. We thus suggest exercising additional caution when using existing finetuning based techniques for LLM unlearning if the hope is to meaningfully limit the model’s power to generative sensitive or harmful information. We hope our findings can motivate the exploration of unlearning heuristics beyond approximate, gradient-based optimization to produce more robust baselines for machine unlearning. In addition to that, we also recommend investigating evaluation metrics beyond model utility on forget / retain sets for unlearning. Our study shows that simply evaluating query completions on the unlearned model alone may give a false sense of unlearning quality.

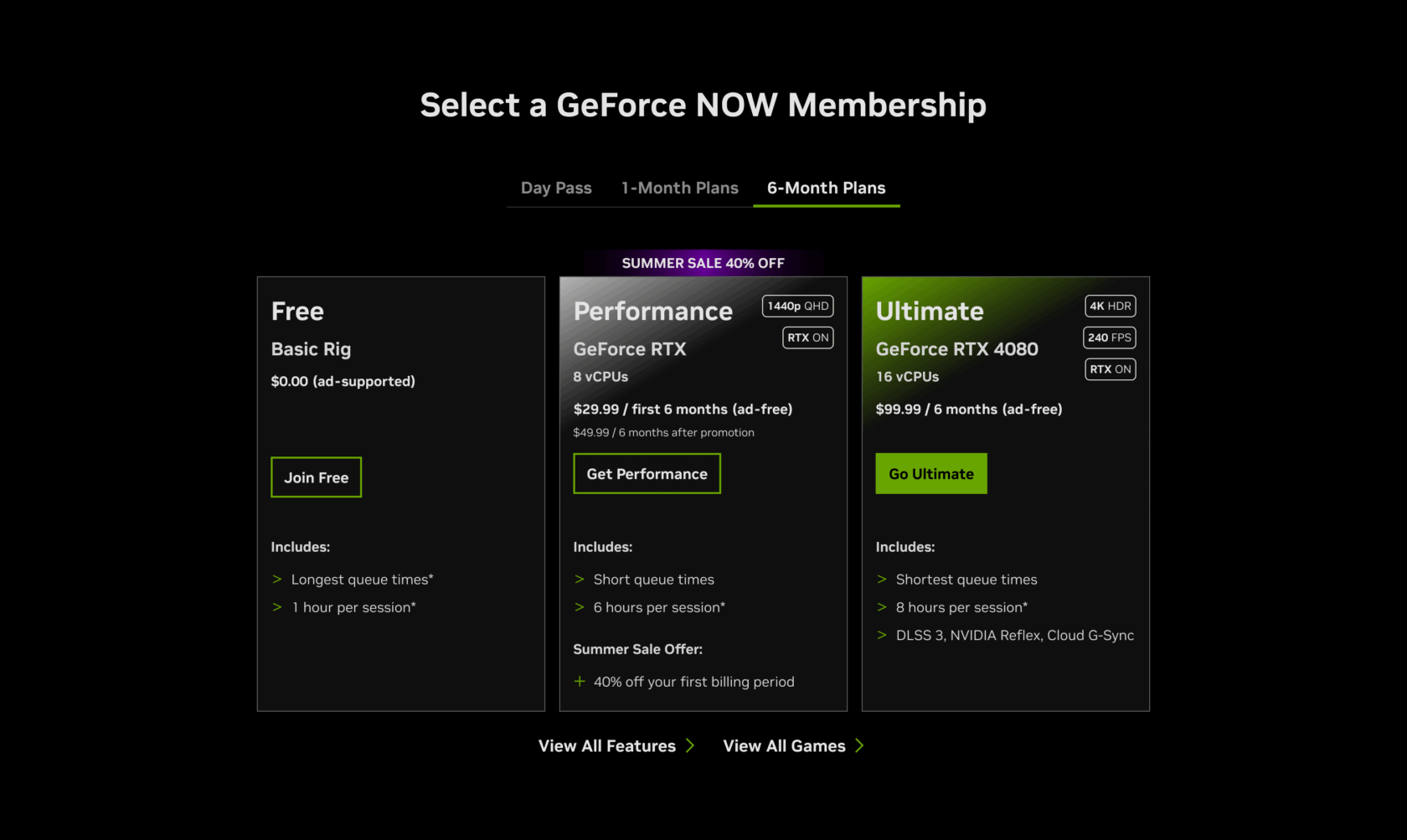

Sale Into Summer With 40% Off GeForce NOW Six-Month Performance Memberships

GeForce NOW is turning up the heat this summer with a hot new deal. For a limited time, save 40% on six-month Performance memberships and enjoy premium GeForce RTX-powered gaming for half a year.

Members can jump into all the action this summer, whether traveling or staying cool at home. Eleven new games join the cloud this week, headlined by the highly anticipated launch of Capcom’s Onimusha 2 and Netmarble’s Game of Thrones: Kingsroad.

That’s not all — Honkai Star Rail’s latest update is available to play in the cloud this week.

Level Up for Summer

With the Performance membership, gamers can stream at up to 1440p resolution, experience ultrawide support and play in sessions of up to six hours — all without the need for the latest hardware. For a limited time, gamers can get all of these premium features for just $29.99 when signing up for a six-month Performance membership.

Dive into an ever-expanding library of over 2,000 games, with ray-tracing and NVIDIA DLSS technologies in supported titles. Stream top titles such as Elder Scrolls IV: Oblivion Remastered, DOOM: The Dark Ages, Clair Obscur: Expedition 33 and more. Stream across any device, whether PCs, Macs, mobile devices, SHIELD TVs or Samsung and LG smart TVs.

The deal is available through Sunday, July 6 — first come, first served — so don’t miss out on the chance to upgrade to the cloud at a fraction of the price.

Sharpen the Blade

Onimusha 2: Samurai’s Destiny returns with high-definition graphics and modernized controls for even more vivid counterattacks and intense swordplay. Play as Jubei Yagyu and battle through feudal Japan with allies.

Dive into the epic, demon-slaying adventure with GeForce NOW — no downloads or high-end hardware required. Enjoy crisp visuals and ultra-responsive controls, and stream the pure samurai action across devices.

Remember to Zigzag

Game of Thrones: Kingsroad is Netmarble’s action-adventure role-playing game licensed by Warner Bros. Interactive Entertainment on behalf of HBO.

The game brings the continent of Westeros to life with remarkable detail and scale. Encounter familiar characters from the TV series and freely roam iconic regions on an immersive journey through Westeros, including King’s Landing — the continent’s capital — the Castle Black stronghold and the massive icy Wall, which stretches along the northern border.

Players must navigate the complex power struggles between the noble houses of Westeros as they embark on a mission to restore their family’s former glory — all while aiding the Night’s Watch for the final confrontation with the White Walkers and the army of the dead that awaits beyond the Wall.

Choose the cloud gaming house that fits best: a GeForce NOW Performance or Ultimate membership. Premium members command longer gaming sessions, higher resolutions and ultra-low latency over free members, and Ultimate members get the mightiest graphics streaming capabilities: up to 4K resolution and 120 frames per second. Join today — because the North remembers, and only those who seize the moment rule the game.

Hammer Time

The Warhammer Skulls Festival is the ultimate annual celebration of Warhammer video games. GeForce NOW members can join the festivities with weeklong discounts on a wide selection of Warhammer titles, all available to stream instantly via the cloud:

- Warhammer 40,000: Space Marine 2

- Warhammer 40,000: Darktide

- Warhammer 40,000: Rogue Trader

- Warhammer 40,000: Boltgun

- Warhammer: Vermintide 2

- Total War: Warhammer III

- Blood Bowl 3

- Warhammer Battlesector

- Warhammer 40,000: Chaos Gate – Daemonhunters

- Warhammer 40,000: Gladius

Whether a veteran or new to the Warhammer universe, gamers can experience these iconic titles on GeForce NOW at a fraction of the usual price during the Warhammer Skulls Festival.

More Games, More Glory

Honkai: Star Rail version 3.3, “The Fall at Dawn’s Rise,” is now available for members to stream. The update features an epic finale to the Flame-Chase Journey as Trailblazers and the Chrysos Heirs face off against the legendary Sky Titan named Aquila. Players can also see two new five-star characters: Hyacine, a compassionate healer with a knack for keeping the team alive, and Cipher, a cunning debuffer who turns enemy strength against them. Fan-favorite characters The Herta and Aglaea also return. Plus, players can dive into fresh limited-time events like the high-speed Penacony Speed Cup and a quirky baseball mini game.

Look for the following games available to stream in the cloud this week:

- 9 Kings (New release on Steam, May 23)

- RoadCraft (New release on Steam, May 20)

- S.T.A.L.K.E.R.: Call of Prypiat – Enhanced Edition (New release on Steam, May 20)

- S.T.A.L.K.E.R.: Clear Sky – Enhanced Edition (New release on Steam, May 20)

- S.T.A.L.K.E.R.: Shadow of Chornobyl – Enhanced Edition (New release on Steam, May 20)

- Game of Thrones: Kingsroad (New release on Steam, May 21)

- Monster Train 2 (New release on Steam, May 21)

- Blades of Fire (New release on Epic Games Store, May 22)

- Splitgate 2 Open Beta (New release on Steam, May 22)

- Survive the Fall (New release Steam, May 22)

- Onimusha 2: Samurai’s Destiny (New release on Steam, May 23)

What are you planning to play this weekend? Let us know on X or in the comments below.

In the frozen shadows beyond the Wall, a Giant White Walker stirs.

@GoTKingsroad has joined the #GeForceNOW library – stream it today!

pic.twitter.com/thyrkuuRqZ

—

NVIDIA GeForce NOW (@NVIDIAGFN) May 21, 2025

Apply to our new program for startups using AI to support governments.

Today we’re opening applications to the new Google for Startups program designed for startups using AI to support governments and enhance services for communities.Growth…Read More

Today we’re opening applications to the new Google for Startups program designed for startups using AI to support governments and enhance services for communities.Growth…Read More

SPD: Sync-Point Drop for Efficient Tensor Parallelism of Large Language Models

With the rapid expansion in the scale of large

language models (LLMs), enabling efficient distributed inference across multiple computing units has become increasingly critical. However, communication overheads from popular distributed

inference techniques such as Tensor Parallelism

pose a significant challenge to achieve scalability

and low latency. Therefore, we introduce a novel

optimization technique, Sync-Point Drop (SPD), to reduce communication overheads in tensor parallelism by selectively dropping synchronization on attention outputs. In detail, we first propose a block design that…Apple Machine Learning Research