Scientists describe the use of privacy-preserving machine learning to address privacy challenges in XGBoost training and prediction.

Google at CVPR 2021

Posted by Emily Knapp and Tim Herrmann, Program Managers

This week marks the start of the 2021 Conference on Computer Vision and Pattern Recognition (CVPR 2021), the premier annual computer vision event consisting of the main conference, workshops and tutorials. As a leader in computer vision research and a Champion Level Sponsor, Google will have a strong presence at CVPR 2021, with over 70 publications accepted, along with the organization of and participation in multiple workshops and tutorials.

If you are participating in CVPR this year, please visit our virtual booth to learn about Google research into the next generation of intelligent systems that utilize the latest machine learning techniques applied to various areas of machine perception.

You can also learn more about our research being presented at CVPR 2021 in the list below (Google affiliations in bold).

Organizing Committee Members

General Chair: Rahul Sukthankar

Finance Chair: Ramin Zabih

Workshop Chair: Caroline Pantofaru

Area Chairs: Chen Sun, Golnaz Ghiasi, Jonathan Barron, Kostas Rematas, Negar Rostamzadeh, Noah Snavely, Sanmi Koyejo, Tsung-Yi Lin

Publications

Cross-Modal Contrastive Learning for Text-to-Image Generation (see the blog post)

Han Zhang, Jing Yu Koh, Jason Baldridge, Honglak Lee*, Yinfei Yang

Learning Graph Embeddings for Compositional Zero-Shot Learning

Muhammad Ferjad Naeem, Yongqin Xian, Federico Tombari, Zeynep Akata

SPSG: Self-Supervised Photometric Scene Generation From RGB-D Scans

Angela Dai, Yawar Siddiqui, Justus Thies, Julien Valentin, Matthias Nießner

3D-MAN: 3D Multi-Frame Attention Network for Object Detection

Zetong Yang*, Yin Zhou, Zhifeng Chen, Jiquan Ngiam

MIST: Multiple Instance Spatial Transformer

Baptiste Angles, Yuhe Jin, Simon Kornblith, Andrea Tagliasacchi, Kwang Moo Yi

OCONet: Image Extrapolation by Object Completion

Richard Strong Bowen*, Huiwen Chang, Charles Herrmann*, Piotr Teterwak*, Ce Liu, Ramin Zabih

Ranking Neural Checkpoints

Yandong Li, Xuhui Jia, Ruoxin Sang, Yukun Zhu, Bradley Green, Liqiang Wang, Boqing Gong

LipSync3D: Data-Efficient Learning of Personalized 3D Talking Faces From Video Using Pose and Lighting Normalization

Avisek Lahiri, Vivek Kwatra, Christian Frueh, John Lewis, Chris Bregler

Differentiable Patch Selection for Image Recognition

Jean-Baptiste Cordonnier*, Aravindh Mahendran, Alexey Dosovitskiy, Dirk Weissenborn, Jakob Uszkoreit, Thomas Unterthiner

HumanGPS: Geodesic PreServing Feature for Dense Human Correspondences

Feitong Tan, Danhang Tang, Mingsong Dou, Kaiwen Guo, Rohit Pandey, Cem Keskin, Ruofei Du, Deqing Sun, Sofien Bouaziz, Sean Fanello, Ping Tan, Yinda Zhang

VIP-DeepLab: Learning Visual Perception With Depth-Aware Video Panoptic Segmentation (see the blog post)

Siyuan Qiao*, Yukun Zhu, Hartwig Adam, Alan Yuille, Liang-Chieh Chen

DeFMO: Deblurring and Shape Recovery of Fast Moving Objects

Denys Rozumnyi, Martin R. Oswald, Vittorio Ferrari, Jiri Matas, Marc Pollefeys

HDMapGen: A Hierarchical Graph Generative Model of High Definition Maps

Lu Mi, Hang Zhao, Charlie Nash, Xiaohan Jin, Jiyang Gao, Chen Sun, Cordelia Schmid, Nir Shavit, Yuning Chai, Dragomir Anguelov

Wide-Baseline Relative Camera Pose Estimation With Directional Learning

Kefan Chen, Noah Snavely, Ameesh Makadia

MobileDets: Searching for Object Detection Architectures for Mobile Accelerators

Yunyang Xiong, Hanxiao Liu, Suyog Gupta, Berkin Akin, Gabriel Bender, Yongzhe Wang, Pieter-Jan Kindermans, Mingxing Tan, Vikas Singh, Bo Chen

SMURF: Self-Teaching Multi-Frame Unsupervised RAFT With Full-Image Warping

Austin Stone, Daniel Maurer, Alper Ayvaci, Anelia Angelova, Rico Jonschkowski

Conceptual 12M: Pushing Web-Scale Image-Text Pre-Training To Recognize Long-Tail Visual Concepts

Soravit Changpinyo, Piyush Sharma, Nan Ding, Radu Soricut

Uncalibrated Neural Inverse Rendering for Photometric Stereo of General Surfaces

Berk Kaya, Suryansh Kumar, Carlos Oliveira, Vittorio Ferrari, Luc Van Gool

MeanShift++: Extremely Fast Mode-Seeking With Applications to Segmentation and Object Tracking

Jennifer Jang, Heinrich Jiang

Repopulating Street Scenes

Yifan Wang*, Andrew Liu, Richard Tucker, Jiajun Wu, Brian L. Curless, Steven M. Seitz, Noah Snavely

MaX-DeepLab: End-to-End Panoptic Segmentation With Mask Transformers (see the blog post)

Huiyu Wang*, Yukun Zhu, Hartwig Adam, Alan Yuille, Liang-Chieh Chen

IBRNet: Learning Multi-View Image-Based Rendering

Qianqian Wang, Zhicheng Wang, Kyle Genova, Pratul Srinivasan, Howard Zhou, Jonathan T. Barron, Ricardo Martin-Brualla, Noah Snavely, Thomas Funkhouser

From Points to Multi-Object 3D Reconstruction

Francis Engelmann*, Konstantinos Rematas, Bastian Leibe, Vittorio Ferrari

Learning Compositional Representation for 4D Captures With Neural ODE

Boyan Jiang, Yinda Zhang, Xingkui Wei, Xiangyang Xue, Yanwei Fu

Guided Integrated Gradients: An Adaptive Path Method for Removing Noise

Andrei Kapishnikov, Subhashini Venugopalan, Besim Avci, Ben Wedin, Michael Terry, Tolga Bolukbasi

De-Rendering the World’s Revolutionary Artefacts

Shangzhe Wu*, Ameesh Makadia, Jiajun Wu, Noah Snavely, Richard Tucker, Angjoo Kanazawa

Spatiotemporal Contrastive Video Representation Learning

Rui Qian, Tianjian Meng, Boqing Gong, Ming-Hsuan Yang, Huisheng Wang, Serge Belongie, Yin Cui

Decoupled Dynamic Filter Networks

Jingkai Zhou, Varun Jampani, Zhixiong Pi, Qiong Liu, Ming-Hsuan Yang

NeuralHumanFVV: Real-Time Neural Volumetric Human Performance Rendering Using RGB Cameras

Xin Suo, Yuheng Jiang, Pei Lin, Yingliang Zhang, Kaiwen Guo, Minye Wu, Lan Xu

Regularizing Generative Adversarial Networks Under Limited Data

Hung-Yu Tseng*, Lu Jiang, Ce Liu, Ming-Hsuan Yang, Weilong Yang

SceneGraphFusion: Incremental 3D Scene Graph Prediction From RGB-D Sequences

Shun-Cheng Wu, Johanna Wald, Keisuke Tateno, Nassir Navab, Federico Tombari

NeRV: Neural Reflectance and Visibility Fields for Relighting and View Synthesis

Pratul P. Srinivasan, Boyang Deng, Xiuming Zhang, Matthew Tancik, Ben Mildenhall, Jonathan T. Barron

Adversarially Adaptive Normalization for Single Domain Generalization

Xinjie Fan*, Qifei Wang, Junjie Ke, Feng Yang, Boqing Gong, Mingyuan Zhou

Adaptive Prototype Learning and Allocation for Few-Shot Segmentation

Gen Li, Varun Jampani, Laura Sevilla-Lara, Deqing Sun, Jonghyun Kim, Joongkyu Kim

Adversarial Robustness Across Representation Spaces

Pranjal Awasthi, George Yu, Chun-Sung Ferng, Andrew Tomkins, Da-Cheng Juan

Background Splitting: Finding Rare Classes in a Sea of Background

Ravi Teja Mullapudi, Fait Poms, William R. Mark, Deva Ramanan, Kayvon Fatahalian

Searching for Fast Model Families on Datacenter Accelerators

Sheng Li, Mingxing Tan, Ruoming Pang, Andrew Li, Liqun Cheng, Quoc Le, Norman P. Jouppi

Objectron: A Large Scale Dataset of Object-Centric Videos in the Wild With Pose Annotations (see the blog post)

Adel Ahmadyan, Liangkai Zhang, Jianing Wei, Artsiom Ablavatski, Matthias Grundmann

CutPaste: Self-Supervised Learning for Anomaly Detection and Localization

Chun-Liang Li, Kihyuk Sohn, Jinsung Yoon, Tomas Pfister

Nutrition5k: Towards Automatic Nutritional Understanding of Generic Food

Quin Thames, Arjun Karpur, Wade Norris, Fangting Xia, Liviu Panait, Tobias Weyand, Jack Sim

CReST: A Class-Rebalancing Self-Training Framework for Imbalanced Semi-Supervised Learning

Chen Wei*, Kihyuk Sohn, Clayton Mellina, Alan Yuille, Fan Yang

DetectoRS: Detecting Objects With Recursive Feature Pyramid and Switchable Atrous Convolution

Siyuan Qiao, Liang-Chieh Chen, Alan Yuille

DeRF: Decomposed Radiance Fields

Daniel Rebain, Wei Jiang, Soroosh Yazdani, Ke Li, Kwang Moo Yi, Andrea Tagliasacchi

Variational Transformer Networks for Layout Generation (see the blog post)

Diego Martin Arroyo, Janis Postels, Federico Tombari

Rich Features for Perceptual Quality Assessment of UGC Videos

Yilin Wang, Junjie Ke, Hossein Talebi, Joong Gon Yim, Neil Birkbeck, Balu Adsumilli, Peyman Milanfar, Feng Yang

Complete & Label: A Domain Adaptation Approach to Semantic Segmentation of LiDAR Point Clouds

Li Yi, Boqing Gong, Thomas Funkhouser

Neural Descent for Visual 3D Human Pose and Shape

Andrei Zanfir, Eduard Gabriel Bazavan, Mihai Zanfir, William T. Freeman, Rahul Sukthankar, Cristian Sminchisescu

GDR-Net: Geometry-Guided Direct Regression Network for Monocular 6D Object Pose Estimation

Gu Wang, Fabian Manhardt, Federico Tombari, Xiangyang Ji

Look Before You Speak: Visually Contextualized Utterances

Paul Hongsuck Seo, Arsha Nagrani, Cordelia Schmid

LASR: Learning Articulated Shape Reconstruction From a Monocular Video

Gengshan Yang*, Deqing Sun, Varun Jampani, Daniel Vlasic, Forrester Cole, Huiwen Chang, Deva Ramanan, William T. Freeman, Ce Liu

MoViNets: Mobile Video Networks for Efficient Video Recognition

Dan Kondratyuk, Liangzhe Yuan, Yandong Li, Li Zhang, Mingxing Tan, Matthew Brown, Boqing Gong

No Shadow Left Behind: Removing Objects and Their Shadows Using Approximate Lighting and Geometry

Edward Zhang, Ricardo Martin-Brualla, Janne Kontkanen, Brian Curless

On Robustness and Transferability of Convolutional Neural Networks

Josip Djolonga, Jessica Yung, Michael Tschannen, Rob Romijnders, Lucas Beyer, Alexander Kolesnikov, Joan Puigcerver, Matthias Minderer, Alexander D’Amour, Dan Moldovan, Sylvain Gelly, Neil Houlsby, Xiaohua Zhai, Mario Lucic

Robust and Accurate Object Detection via Adversarial Learning

Xiangning Chen, Cihang Xie, Mingxing Tan, Li Zhang, Cho-Jui Hsieh, Boqing Gong

To the Point: Efficient 3D Object Detection in the Range Image With Graph Convolution Kernels

Yuning Chai, Pei Sun, Jiquan Ngiam, Weiyue Wang, Benjamin Caine, Vijay Vasudevan, Xiao Zhang, Dragomir Anguelov

Bottleneck Transformers for Visual Recognition

Aravind Srinivas, Tsung-Yi Lin, Niki Parmar, Jonathon Shlens, Pieter Abbeel, Ashish Vaswani

Faster Meta Update Strategy for Noise-Robust Deep Learning

Youjiang Xu, Linchao Zhu, Lu Jiang, Yi Yang

Correlated Input-Dependent Label Noise in Large-Scale Image Classification

Mark Collier, Basil Mustafa, Efi Kokiopoulou, Rodolphe Jenatton, Jesse Berent

Learned Initializations for Optimizing Coordinate-Based Neural Representations

Matthew Tancik, Ben Mildenhall, Terrance Wang, Divi Schmidt, Pratul P. Srinivasan, Jonathan T. Barron, Ren Ng

Simple Copy-Paste Is a Strong Data Augmentation Method for Instance Segmentation

Golnaz Ghiasi, Yin Cui, Aravind Srinivas*, Rui Qian, Tsung-Yi Lin, Ekin D. Cubuk, Quoc V. Le, Barret Zoph

Function4D: Real-Time Human Volumetric Capture From Very Sparse Consumer RGBD Sensors

Tao Yu, Zerong Zheng, Kaiwen Guo, Pengpeng Liu, Qionghai Dai, Yebin Liu

RSN: Range Sparse Net for Efficient, Accurate LiDAR 3D Object Detection

Pei Sun, Weiyue Wang, Yuning Chai, Gamaleldin Elsayed, Alex Bewley, Xiao Zhang, Cristian Sminchisescu, Dragomir Anguelov

NeRF in the Wild: Neural Radiance Fields for Unconstrained Photo Collections

Ricardo Martin-Brualla, Noha Radwan, Mehdi S. M. Sajjadi, Jonathan T. Barron, Alexey Dosovitskiy, Daniel Duckworth

Robust Neural Routing Through Space Partitions for Camera Relocalization in Dynamic Indoor Environments

Siyan Dong, Qingnan Fan, He Wang, Ji Shi, Li Yi, Thomas Funkhouser, Baoquan Chen, Leonidas Guibas

Taskology: Utilizing Task Relations at Scale

Yao Lu, Sören Pirk, Jan Dlabal, Anthony Brohan, Ankita Pasad*, Zhao Chen, Vincent Casser, Anelia Angelova, Ariel Gordon

Omnimatte: Associating Objects and Their Effects in Video

Erika Lu, Forrester Cole, Tali Dekel, Andrew Zisserman, William T. Freeman, Michael Rubinstein

AutoFlow: Learning a Better Training Set for Optical Flow

Deqing Sun, Daniel Vlasic, Charles Herrmann, Varun Jampani, Michael Krainin, Huiwen Chang, Ramin Zabih, William T. Freeman, Ce Liu

Unsupervised Multi-Source Domain Adaptation Without Access to Source Data

Sk Miraj Ahmed, Dripta S. Raychaudhuri, Sujoy Paul, Samet Oymak, Amit K. Roy-Chowdhury

Meta Pseudo Labels

Hieu Pham, Zihang Dai, Qizhe Xie, Minh-Thang Luong, Quoc V. Le

Spatially-Varying Outdoor Lighting Estimation From Intrinsics

Yongjie Zhu, Yinda Zhang, Si Li, Boxin Shi

Learning View-Disentangled Human Pose Representation by Contrastive Cross-View Mutual Information Maximization

Long Zhao*, Yuxiao Wang, Jiaping Zhao, Liangzhe Yuan, Jennifer J. Sun, Florian Schroff, Hartwig Adam, Xi Peng, Dimitris Metaxas, Ting Liu

Benchmarking Representation Learning for Natural World Image Collections

Grant Van Horn, Elijah Cole, Sara Beery, Kimberly Wilber, Serge Belongie, Oisin Mac Aodha

Scaling Local Self-Attention for Parameter Efficient Visual Backbones

Ashish Vaswani, Prajit Ramachandran, Aravind Srinivas, Niki Parmar, Blake Hechtman, Jonathon Shlens

KeypointDeformer: Unsupervised 3D Keypoint Discovery for Shape Control

Tomas Jakab*, Richard Tucker, Ameesh Makadia, Jiajun Wu, Noah Snavely, Angjoo Kanazawa

HITNet: Hierarchical Iterative Tile Refinement Network for Real-time Stereo Matching

Vladimir Tankovich, Christian Häne, Yinda Zhang, Adarsh Kowdle, Sean Fanello, Sofien Bouaziz

POSEFusion: Pose-Guided Selective Fusion for Single-View Human Volumetric Capture

Zhe Li, Tao Yu, Zerong Zheng, Kaiwen Guo, Yebin Liu

Workshops (only Google affiliations are noted)

Media Forensics

Organizers: Christoph Bregler

Safe Artificial Intelligence for Automated Driving

Invited Speakers: Been Kim

VizWiz Grand Challenge

Organizers: Meredith Morris

3D Vision and Robotics

Invited Speaker: Andy Zeng

New Trends in Image Restoration and Enhancement Workshop and Challenges on Image and Video Processing

Organizers: Ming-Hsuan Yang

Program Committee: George Toderici, Ming-Hsuan Yang

2nd Workshop on Extreme Vision Modeling

Invited Speakers: Quoc Le, Chen Sun

First International Workshop on Affective Understanding in Video

Organizers: Gautam Prasad, Ting Liu

Adversarial Machine Learning in Real-World Computer Vision Systems and Online Challenges

Program Committee: Nicholas Carlini, Nicolas Papernot

Ethical Considerations in Creative Applications of Computer Vision

Invited Speaker: Alex Hanna

Organizers: Negar Rostamzadeh, Emily Denton, Linda Petrini

Visual Question Answering Workshop

Invited Speaker: Vittorio Ferrari

Sixth International Skin Imaging Collaboration (ISIC) Workshop on Skin Image Analysis

Invited Speakers: Sandra Avila

Organizers: Yuan Liu

Steering Committee: Yuan Liu, Dale Webster

The 4th Workshop and Prize Challenge: Bridging the Gap between Computational Photography and Visual Recognition (UG2+) in Conjunction with IEEE CVPR 2021

Invited Speakers: Peyman Milanfar, Chelsea Finn

The 3rd CVPR Workshop on 3D Scene Understanding for Vision, Graphics, and Robotics

Invited Speaker: Andrea Tagliasacchi

Robust Video Scene Understanding: Tracking and Video Segmentation

Organizers: Jordi Pont-Tuset, Sergi Caelles, Jack Valmadre, Alex Bewley

4th Workshop and Challenge on Learned Image Compression

Invited Speaker: Rianne van den Berg

Organizers: George Toderici, Lucas Theis, Johannes Ballé, Eirikur Agustsson, Nick Johnston, Fabian Mentzer

The Third Workshop on Precognition: Seeing Through the Future

Invited Speaker: Anelia Angelova

Organizers: Utsav Prabhu

Program Committee: Chen Sun, David Ross

Computational Cameras and Displays

Organizers: Tali Dekel

Keynote Talks: Paul Debevec

Program Committee: Ayan Chakrabarti, Tali Dekel

2nd Embodied AI Workshop

Organizing Committee: Anthony Francis

Challenge Organizers: Peter Anderson, Anthony Francis, Alex Ku, Alexander Toshev

Scientific Advisory Board: Alexander Toshev

Responsible Computer Vision

Program Committee: Caroline Pantofaru, Utsav Prabhu, Susanna Ricco, Negar Rostamzadeh, Candice Schumann

Dynamic Neural Networks Meets Computer Vision

Invited Speaker: Azalia Mirhoseini

Interactive Workshop on Bridging the Gap between Subjective and Computational Measurements of Machine Creativity

Invited Speaker: David Bau

GAZE 2021: The 3rd International Workshop on Gaze Estimation and Prediction in the Wild

Organizer: Thabo Beeler

Program Committee: Thabo Beeler

Sight and Sound

Organizers: William Freeman

Future of Computer Vision Datasets

Invited Speakers: Emily Denton, Caroline Pantofaru

Open World Vision

Invited Speakers: Rahul Sukthankar

The 3rd Workshop on Learning from Unlabeled Videos

Organizers: Anelia Angelova, Honglak Lee

Program Committee: AJ Piergiovanni

4th International Workshop on Visual Odometry and Computer Vision Applications Based on Location Clues — With a Focus on Mobile Platform Applications

Organizers: Anelia Angelova

4th Workshop on Efficient Deep Learning for Computer Vision

Invited Speaker: Andrew Howard

Organizers: Pete Warden, Andrew Howard

Second International Workshop on Large Scale Holistic Video Understanding

Invited Speaker: Cordelia Schmid

Program Committee: AJ Piergiovanni

Organizers: David Ross

Neural Architecture Search 1st Lightweight NAS Challenge and Moving Beyond

Invited Speakers: Sara Sabour

The Second Workshop on Fair, Data-Efficient, and Trusted Computer Vision

Invited Speakers: Gaurav Aggarwal

The 17th Embedded Vision Workshop

General Chair: Anelia Angelova

8th Workshop on Fine-Grained Visual Categorization

Organizers: Christine Kaeser-Chen, Kimberly Wilber

AI for Content Creation

Invited Speakers: Tali Dekel, Jon Barron, Emily Denton

Organizers: Deqing Sun

Frontiers of Monocular 3D Perception

Invited Speakers: Anelia Angelova, Cordelia Schmid, Noah Snavely

Beyond Fairness: Towards a Just, Equitable, and Accountable Computer Vision

Organizers: Emily Denton

The 1st Workshop on Future Video Conferencing

Invited Speakers: Chuo-Ling Chang, Sergi Caelles

Tutorials (only Google affiliations are noted)

Tutorial on Fairness Accountability Transparency and Ethics in Computer Vision

Organizer: Emily Denton

Data-Efficient Learning in An Imperfect World

Organizers: Boqing Gong, Ting Chen

Semantic Segmentation of Point Clouds: a Deep Learning Framework for Cultural Heritage

Invited Speaker: Manzil Zaheer

From VQA to VLN: Recent Advances in Vision-and-Language Research

Organizer: Peter Anderson

* Indicates work done while at Google

3 questions with Özalp Özer: How to build trust in business relationships

Özer’s paper published in INFORMS’ Management Science 2021 explores the dynamics behind “cheap-talk” communications.

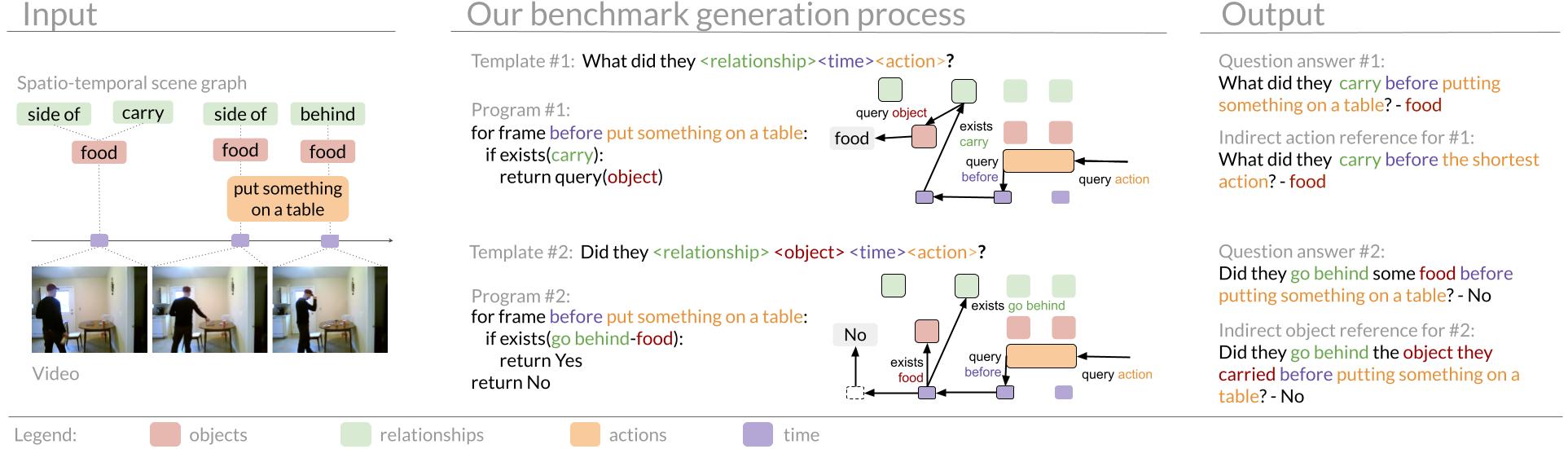

AGQA: A Benchmark for Compositional, Spatio-Temporal Reasoning

Take a look at the video above and the associated question: “What did they hold before opening the closet?” After watching the video, you can easily answer that the person is holding a phone. People have a remarkable ability to comprehend visual events in new videos and to answer questions about those videos. We can decompose visual events and actions into individual interactions between the person and other objects. For instance, the person initially holds a phone, then opens the closet and takes out a picture. To answer this question, we need to recognize the action “opening the closet” and then understand how “before” should restrict our search for the answer to events before this action. Next, we need to detect the interaction “holding” and identify the object being held as a “phone” to finally arrive at the answer. We understand questions as a composition of individual reasoning steps and videos as a composition of individual interactions over time.

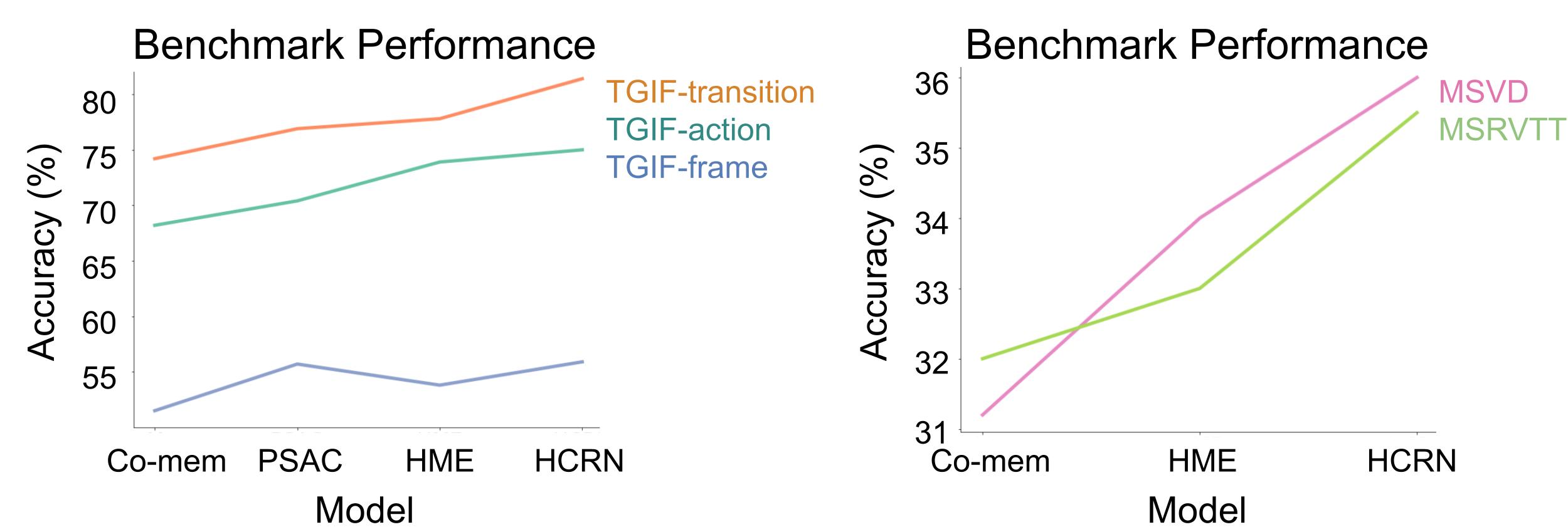

Designing machines that can similarly exhibit compositional understanding of visual events has been a core goal of the computer vision community. To measure progress towards this goal, the community has released numerous video question answering benchmarks (TGIF-QA, MSVD/MSRVTT, CLEVRER, ActivityNet-QA). These benchmarks evaluate models by asking questions about videos and measuring the models’ answer accuracy. Over the last few years, model performance on such benchmarks has been encouraging.

However, it is unclear why models are improving. Simple questions like “What did they hold before opening the closet?” require a composition of many different reasoning capabilities. Are the models improving at recognizing actions? At understanding interactions? Or are they just getting better at exploiting linguistic and visual biases in the dataset? Since these benchmarks primarily offer a single “overall accuracy” metric as an evaluation measure, we have a limited view of each model’s strengths and weaknesses.

To better answer these questions, we introduce the benchmark Action Genome Question Answering (AGQA). AGQA measures spatial, temporal, and compositional reasoning through nearly two hundred million question-answer pairs. AGQA’s questions are complex, compositional, and annotated to allow for explicit tests that find the types of questions that models can and cannot answer.

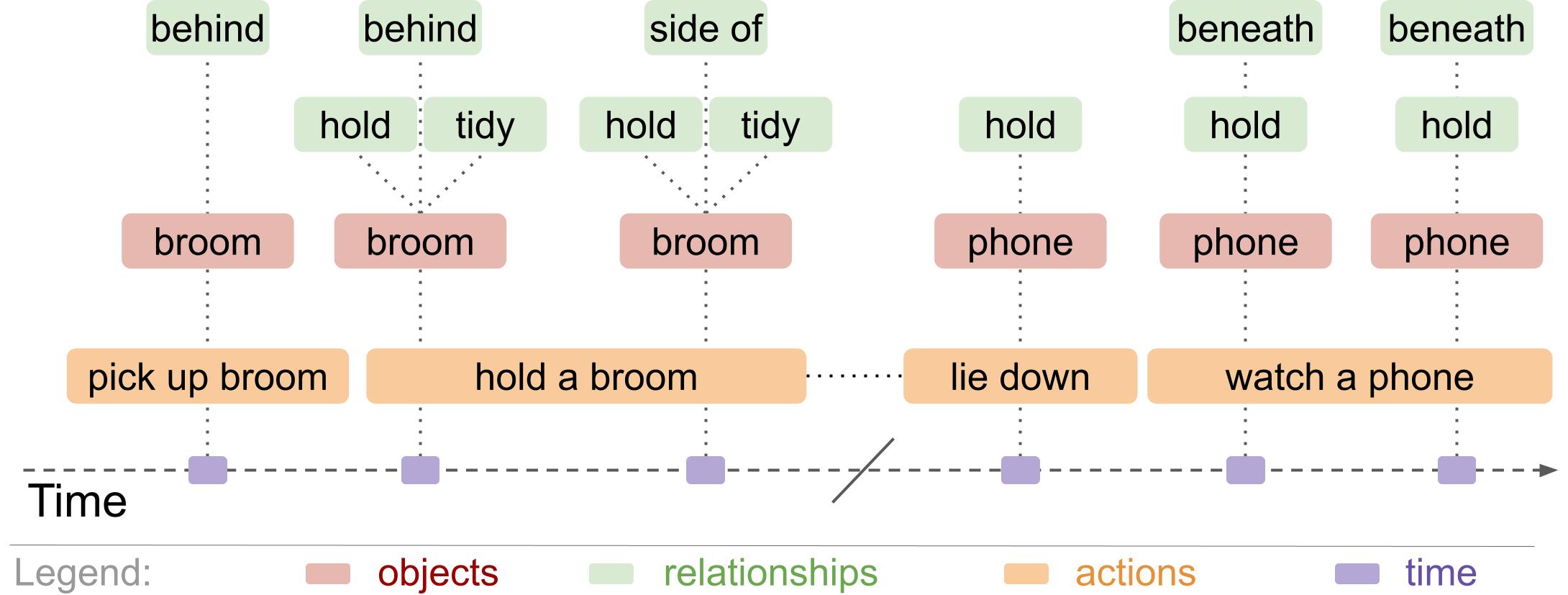

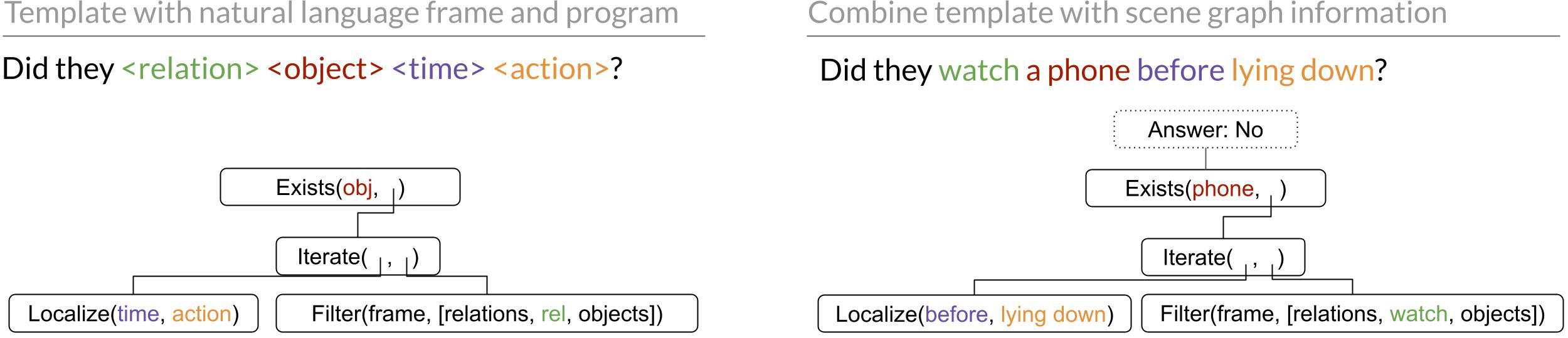

Creating a benchmark at this scale with human annotators is prohibitively expensive. Instead, we design a synthetic generation process that uses rule-based question templates to generate questions from scene information, which represents what occurs in the video using symbols (Figure 3: spatio-temporal scene graphs from Action Genome). Synthetic generation allows us to control the content, structure, and compositional reasoning steps required to answer each generated question.

We ran state-of-the-art models on our benchmark and found that they performed poorly, relied heavily on linguistic biases, and struggled to generalize to more complex tasks. In fact, all the models performed barely better than an ablation in which the video was not presented as an input at all.

Action Genome Question Answering (AGQA)

Action Genome Question Answering has 192 million complex and compositional question-answer pairs. We also sample a subset of 3.9 million question-answer pairs that has a more even distribution of answers and a wider diversity of questions. Each question has detailed annotations about its content and structure. These annotations include a program of the reasoning steps needed to answer the question and a mapping of items in the question to the relevant part of the video (Figure 4). AGQA also provides detailed metrics, including test splits to measure performance on different question types and three new metrics designed to measure compositional reasoning.

To synthetically generate questions, we first represent the video through scene graphs (Figure 3). We take a sample of frames from the video, each annotated with the actions, objects, and relationships that occur in that frame. Second, we build 28 templates. Each template includes a natural language frame referencing types of items within the scene graphs. In Figure 4, the template provides a general natural language frame asking whether the subject performed a relationship on an object during a specified time period. Each template also has a program outlining a series of steps to follow in order to answer the question. The example in Figure 4 iterates over the time period, finds all the objects with which they had that relationship, then determines whether the specified object exists within that list.

Third, we combine the scene graphs and the templates to generate natural language question-answer pairs. For example, the above template could use the scene graph from Figure 3 to generate the natural language question “Did they watch a phone before lying down?”. The associated program then automatically generates the answer by iterating over the time before they were lying down, finding all the items they were watching, and determining that they do not watch a phone during that time. Combining the scene graphs and templates creates a wide variety of natural language question-answer pairs. Each pair in our benchmark includes a reference to the program of reasoning steps used to generate the answer, as well as a mapping that grounds words in the question to the scene graph annotations. Finally, we take the generated pairs and balance the distributions of answer and question types. We smooth answer distributions for different categories, then sample questions such that the dataset has a diversity of question structures.
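As a rough illustration of how a template's natural language frame and its program fit together, here is a minimal Python sketch. It is not the AGQA generation code: the `Frame` structure, the function names, and the toy scene graph are all assumptions made up for this example, and the real scene graphs and programs are considerably richer.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Frame:
    """One sampled frame of a toy scene graph (hypothetical structure)."""
    actions: frozenset    # actions occurring in this frame
    relations: frozenset  # (relationship, object) pairs in this frame


def frames_before(frames, action):
    """Return the frames that precede the first frame containing `action`."""
    for i, frame in enumerate(frames):
        if action in frame.actions:
            return frames[:i]
    return []  # assumption for this sketch: no such action means an empty window


def objects_with_relation(frames, relationship):
    """Collect every object that appears with `relationship` in `frames`."""
    return {obj for f in frames for rel, obj in f.relations if rel == relationship}


def verify_relation_before(frames, relationship, obj, action):
    """Fill the natural language frame and run the program to get the answer."""
    question = f"Did they {relationship} a {obj} before {action}?"
    window = frames_before(frames, action)
    answer = "Yes" if obj in objects_with_relation(window, relationship) else "No"
    return question, answer


# Invented toy video: the phone is only watched once the person is lying down.
toy_video = [
    Frame(frozenset({"sitting"}), frozenset({("watch", "television"), ("hold", "phone")})),
    Frame(frozenset({"sitting"}), frozenset({("watch", "television")})),
    Frame(frozenset({"lying down"}), frozenset({("watch", "phone")})),
]

question, answer = verify_relation_before(toy_video, "watch", "phone", "lying down")
print(question, answer)
```

Because the answer is computed from the same symbolic annotations that fill the question, the reasoning steps behind every generated pair are known by construction.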

AGQA evaluation

Human evaluation. We validate our question-answer pairs through human validation and find that annotators agree with 86.02% of our answers. To put this number in context, GQA and CLEVR, two recent automated benchmarks, report 89.30% and 92.60% human accuracy, respectively. Some scene graphs contain inconsistent, incorrect, or missing information that propagates into incorrect questions. There may also be differences between the ontology of the scene graphs and human-understood definitions. For example, there are 36 objects in the scene graphs, but humans may consider objects that appear in the video but are not within the model’s purview.

We provide further detail on the human tasks, each of these error sources, and recommendations for future video representations in the supplementary section of our paper.

Model performance depends on linguistic biases. We run three state-of-the-art models (HCRN, HME, and PSAC) on our benchmark and find that they struggle. A model that always chose the most likely answer (“No”) would achieve 10.35% accuracy. The highest scoring model, HME, achieved 47.74% accuracy, which at first glance appears to be a big improvement. However, further investigation found that much of the gain in accuracy comes from exploiting linguistic biases rather than from visual reasoning. Although HCRN achieved 47.42% accuracy overall, it still achieved 47% accuracy without seeing the videos. Because the models depend so heavily on linguistic biases, our other test splits are less able to measure visual reasoning for these particular models.
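The "most likely answer" baseline can be sketched in a few lines of Python. This is only an illustration on invented toy answers, and the function name `most_common_answer_accuracy` is an assumption for this example rather than part of the benchmark's tooling.

```python
from collections import Counter


def most_common_answer_accuracy(train_answers, test_answers):
    """Accuracy obtained by always predicting the most frequent training answer."""
    majority, _ = Counter(train_answers).most_common(1)[0]
    hits = sum(a == majority for a in test_answers)
    return majority, hits / len(test_answers)


# Invented toy answers, not AGQA data.
train_answers = ["No", "No", "No", "Yes", "phone", "dish"]
test_answers = ["No", "Yes", "phone", "No"]

majority, accuracy = most_common_answer_accuracy(train_answers, test_answers)
print(majority, accuracy)
```

Comparing a model against both this baseline and a version of itself trained without video input separates what it gains from answer statistics from what it gains from looking at the video.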

Measurement of different question attributes. We provide splits in the test set to measure model performance on different types of reasoning skills, semantic categories, and question structures.

To understand model performance on different types of questions, we split the test set by the reasoning skills needed to answer the question. For example, some questions test superlative concepts like first and last (What did they pick up first, a dish or a picture?), some compare the duration of multiple actions (Was the person eating some food or sitting on the floor for longer?), and others require activity recognition (What were they doing last?). Different models achieved the highest accuracy in each category. Model performance also varied widely among these categories, with all three models performing the worst on activity recognition.

AGQA also splits questions by whether their semantic focus is on objects, relationships, or actions. Only choosing the most common answer would lead to 9.38%, 50%, and 32.91% accuracy on questions about objects, relationships, and actions, respectively. The highest performing models achieved 42.48% accuracy on object-oriented questions, while the blind model achieved 40.74% accuracy. The blind model outperformed all other models, with 67.40% accuracy on relationship-oriented questions and 60.95% accuracy on action-oriented questions.

Finally, we annotate each question by its structure. Query questions are open-answered (What did they hold?). Verify questions ask whether a statement is true (Did they hold a dish?). Logic questions use a logical operator (Did they hold a dish but not a blanket?). Choose questions offer a choice between two options (Did they hold a dish or a blanket?). Compare questions compare the attributes of two options (Compared to holding a dish, were they sitting for longer?). Every model performed the worst on open-answered questions and best on verify and logic questions.
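Reporting accuracy per split amounts to grouping predictions by one of these annotations. A minimal sketch follows, assuming each record carries a `structure` label alongside the gold answer and the model's prediction; the field names and the toy records are hypothetical and do not reflect the released data format.

```python
from collections import defaultdict


def accuracy_by(records, key):
    """Group records by `key` and return the accuracy within each group."""
    totals = defaultdict(int)
    correct = defaultdict(int)
    for r in records:
        totals[r[key]] += 1
        correct[r[key]] += int(r["prediction"] == r["answer"])
    return {group: correct[group] / totals[group] for group in totals}


# Invented toy records, not AGQA results.
records = [
    {"structure": "query", "answer": "phone", "prediction": "dish"},
    {"structure": "query", "answer": "dish", "prediction": "dish"},
    {"structure": "verify", "answer": "Yes", "prediction": "Yes"},
    {"structure": "logic", "answer": "No", "prediction": "No"},
]

per_structure = accuracy_by(records, "structure")
print(per_structure)
```

The same helper works for any other annotation, such as reasoning skill or semantic category, by changing `key`.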

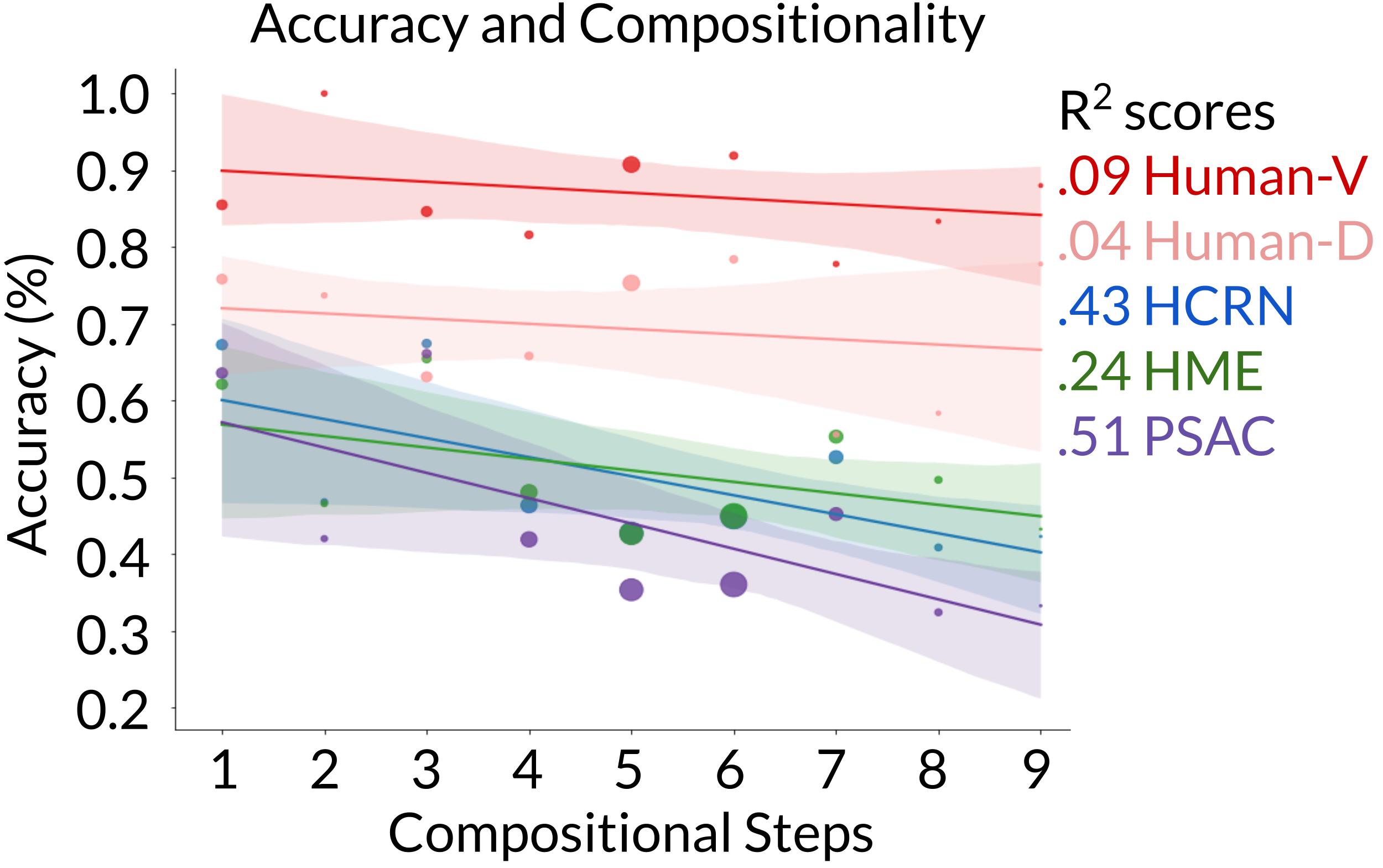

New compositionality metrics. We also provide three new metrics that specifically measure compositional reasoning. These split the training and test sets to test the model’s ability to generalize to novel compositions of previously seen ideas, to indirect references, and to more compositional steps.

First, we measure a model’s ability to generalize to novel compositions. We consider a composition to be two discrete ideas composed together into one instance. For example, “before” and “standing up” are a composition in the question “What did they take before standing up?”. To ensure these compositions are novel in the test set, we include the ideas of before and standing up in the training set when they are composed with other items. However, we do not include questions in the training set in which the before-standing up composition occurs. The models struggle to generalize to the compositions they see for the first time in the test set. The best performing model barely achieves more than 50% accuracy on binary questions, which have only two answers. On open answer questions, which have more than two possible answers, the highest performing model achieves 23.72% accuracy.
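One way to picture this split is the sketch below, which assumes each question is tagged with the set of ideas it contains. The `ideas` field and the function name are hypothetical; the actual benchmark derives compositions from its program annotations.

```python
def split_novel_composition(questions, held_out):
    """Send questions containing every idea in `held_out` to test, the rest to train."""
    held_out = set(held_out)
    train, test = [], []
    for q in questions:
        (test if held_out <= set(q["ideas"]) else train).append(q)
    return train, test


# Invented toy questions tagged with the ideas they compose.
questions = [
    {"text": "What did they take before standing up?", "ideas": {"before", "standing up"}},
    {"text": "What did they take before sitting down?", "ideas": {"before", "sitting down"}},
    {"text": "What did they hold after standing up?", "ideas": {"after", "standing up"}},
]

train, test = split_novel_composition(questions, {"before", "standing up"})
ideas_seen_in_training = set().union(*(q["ideas"] for q in train))
print(len(train), len(test), sorted(ideas_seen_in_training))
```

Both “before” and “standing up” still appear in training, only never together, so a model has to recombine familiar ideas at test time.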

Our second metric measures generalization to indirect references. Direct references state what they are referring to (a phone), while indirect references refer to something by its attributes or other relationships (the first thing they held). We use indirect references to increase the complexity of our questions. This metric measures how well models answer a question with indirect references, given that they can answer the same question with the direct reference. Models can answer approximately 80% of questions with indirect references when they can answer the corresponding question with the direct reference.
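Read as a conditional accuracy, this metric could be computed along the following lines. This is a sketch of one plausible reading of the description, not the paper's exact definition; the field names and the function name `indirect_reference_recall` are assumptions for illustration.

```python
def indirect_reference_recall(results):
    """Among questions answered correctly with a direct reference, return the
    fraction also answered correctly when phrased with an indirect reference."""
    answered_direct = [r for r in results if r["direct_correct"]]
    if not answered_direct:
        return None  # undefined when no direct version was answered correctly
    hits = sum(r["indirect_correct"] for r in answered_direct)
    return hits / len(answered_direct)


# Invented toy outcomes for paired direct and indirect versions of a question.
results = [
    {"direct_correct": True, "indirect_correct": True},
    {"direct_correct": True, "indirect_correct": True},
    {"direct_correct": True, "indirect_correct": True},
    {"direct_correct": True, "indirect_correct": False},
    {"direct_correct": False, "indirect_correct": True},  # excluded from the metric
]

score = indirect_reference_recall(results)
print(score)
```

Conditioning on the direct version isolates the cost of resolving the indirect reference from the cost of answering the underlying question.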

The third compositionality metric measures generalization to more complex questions. A training and test split divides the questions such that the training set contains simpler questions with fewer compositional steps, while the test set includes questions with more compositional steps. The models struggle on this task, as none of them exceeds 50% accuracy on binary questions, which have only two answers.
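A split of this kind can be sketched as a simple threshold on the annotated number of compositional steps. The threshold value, the `steps` field, and the function name are assumptions for this example; the post does not state where the benchmark draws the line.

```python
def split_by_steps(questions, max_train_steps):
    """Train on questions with at most `max_train_steps` steps, test on the rest."""
    train = [q for q in questions if q["steps"] <= max_train_steps]
    test = [q for q in questions if q["steps"] > max_train_steps]
    return train, test


# Invented toy questions with made-up step counts.
questions = [
    {"text": "What did they hold?", "steps": 1},
    {"text": "Did they hold a dish?", "steps": 2},
    {"text": "What did they hold before opening the closet?", "steps": 4},
    {"text": "Did they hold a dish but not a blanket before sitting down?", "steps": 6},
]

train, test = split_by_steps(questions, max_train_steps=3)
print([q["steps"] for q in train], [q["steps"] for q in test])
```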

Question complexity and accuracy. Finally, we annotate the number of compositional steps needed to answer each question. We find that although humans remain consistent as questions become more complex, models decrease in accuracy.

Future work

AGQA opens avenues for progress in several directions. Neuro-symbolic and meta-learning modeling approaches could improve compositional reasoning. The programmatic breakdown of questions could also inform work on generating explanations. We also invite exploration into employing and generating different symbolic representations of video.

Our benchmark highlights the weak points of existing models, including overreliance on linguistic biases and difficulty generalizing to novel and more complex tasks. However, its balanced dataset of question-answer pairs and detailed metrics provide a baseline for exploring multiple exciting new directions.

Find our paper here.

Find our benchmark data here.

Michael J. Black elected to Germany’s National Academy of Sciences

Amazon Scholar works within Amazon’s Tübingen, Germany research center.

Stanford AI Lab Papers and Talks at CVPR 2021

The Conference on Computer Vision and Pattern Recognition (CVPR) 2021 is being hosted virtually from June 19th – June 25th. We’re excited to share all the work from SAIL that’s being presented, and you’ll find links to papers, videos and blogs below. Feel free to reach out to the contact authors directly to learn more about the work that’s happening at Stanford!

List of Accepted Papers

GeoSim: Realistic Video Simulation via Geometry-Aware Composition for Self-Driving

Authors: Yun Chen*, Frieda Rong*, Shivam Duggal*, Shenlong Wang, Xinchen Yan, Sivabalan Manivasagam, Shangjie Xue, Ersin Yumer, Raquel Urtasun

Contact: chenyuntc@gmail.com

Award nominations: Oral, Best Paper Finalist

Links: Paper | Video | Website

Keywords: computer vision, simulation, image simulation, video simulation, self-driving, autonomous driving, 3d vision, computer graphics, robotics

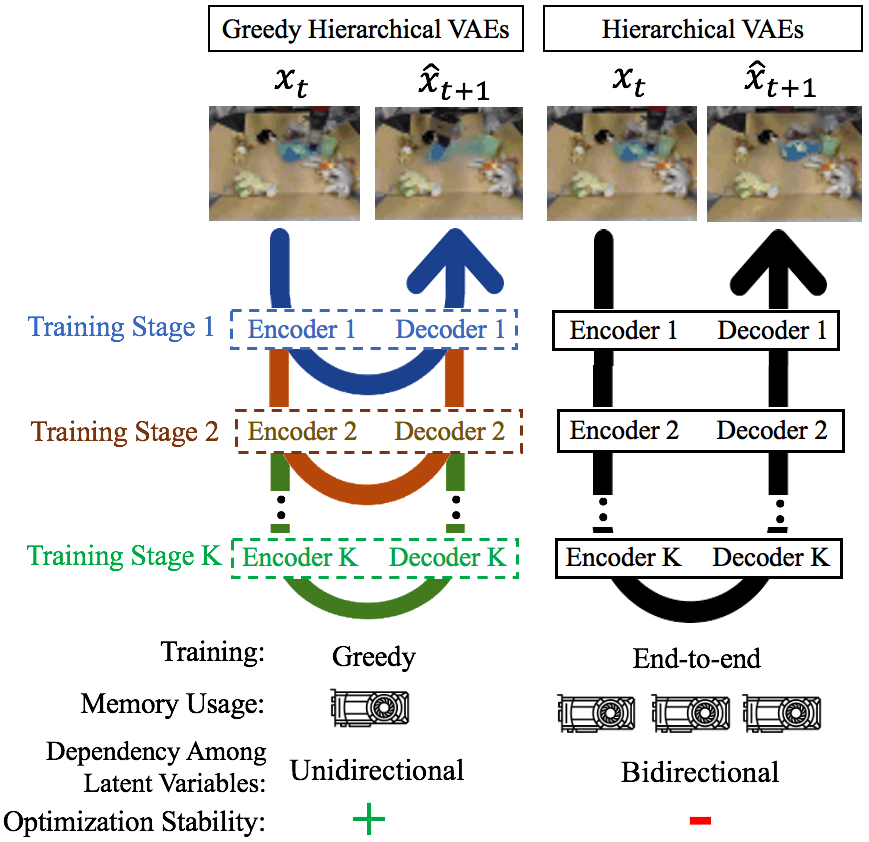

Greedy hierarchical variational autoencoders for large-scale video prediction

Authors: Bohan Wu, Suraj Nair, Roberto Martin-Martin, Li Fei-Fei*, Chelsea Finn*

Contact: bohanwu@stanford.edu

Keywords: variational autoencoders, video prediction

AGQA: A Benchmark for Compositional Spatio-Temporal Reasoning

Authors: Madeleine Grunde-McLaughlin

Contact: mgrund@sas.upenn.edu

Links: Paper | Video | Website

Keywords: visual question answering, compositionality, computer vision, benchmark

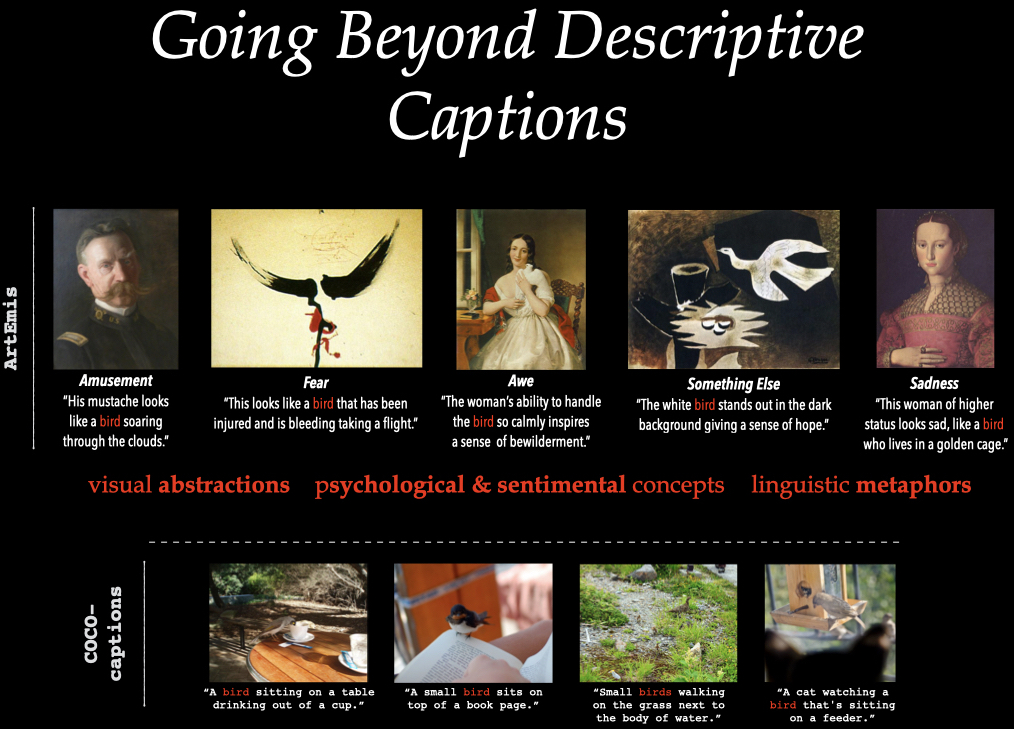

ArtEmis: Affective Language for Visual Art

Authors: Panos Achlioptas, Maks Ovsjanikov, Kilichbek Haydarov, Mohamed Elhoseiny, Leonidas Guibas

Contact: panos@cs.stanford.edu

Award nominations: Oral

Links: Paper | Video | Website

Keywords: affective-computing, wikiart, neural-speakers, emotions

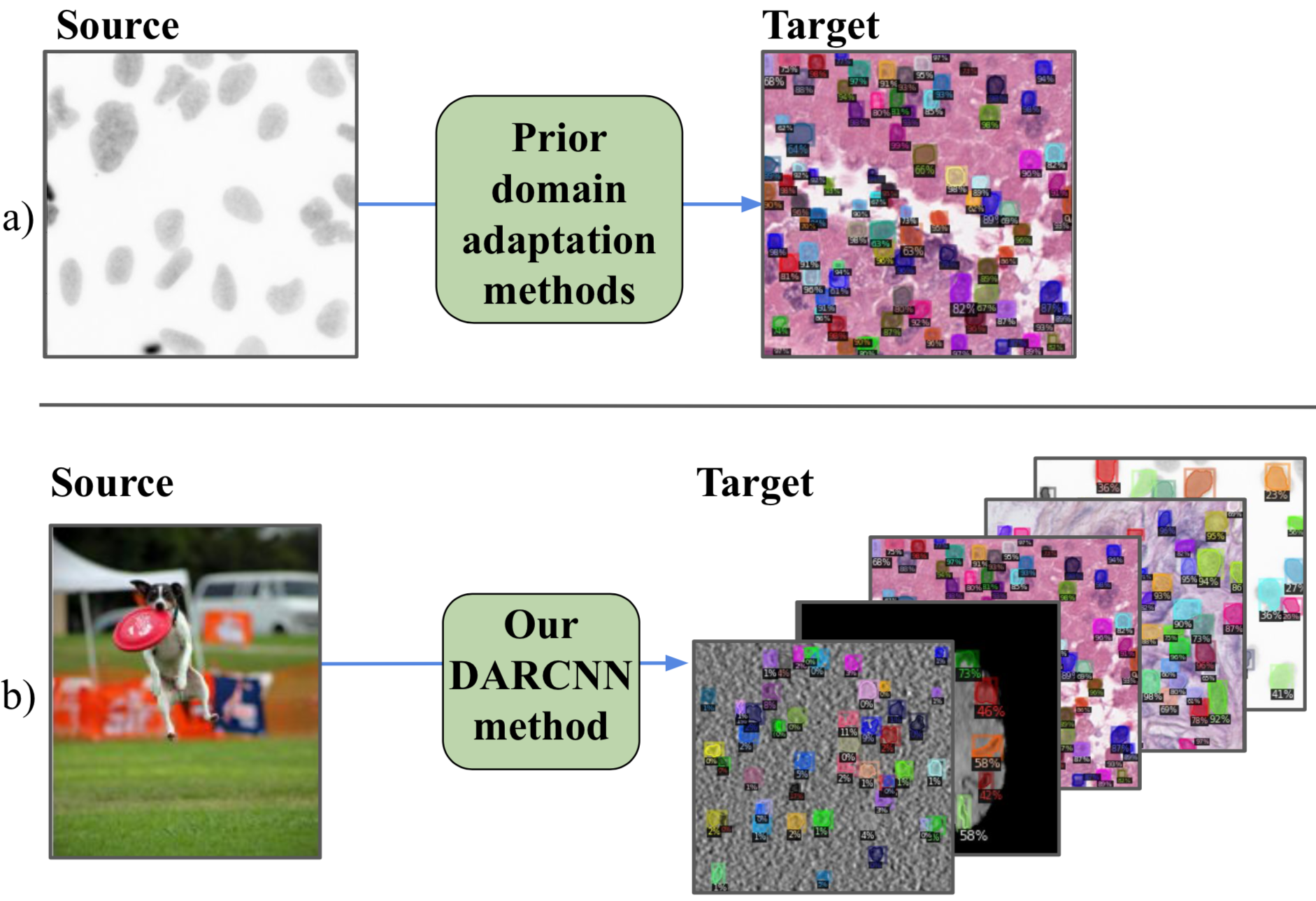

DARCNN: Domain Adaptive Region-based Convolutional Neural Network for Unsupervised Instance Segmentation in Biomedical Images

Authors: Joy Hsu, Wah Chiu, Serena Yeung

Contact: joycj@stanford.edu

Links: Paper | Website

Keywords: unsupervised domain adaptation, instance segmentation

Hierarchical Motion Understanding via Motion Programs

Authors: Sumith Kulal*, Jiayuan Mao*, Alex Aiken, Jiajun Wu

Contact: sumith@cs.stanford.edu

Links: Paper | Video | Website

Keywords: neuro-symbolic, motion, primitives, programs

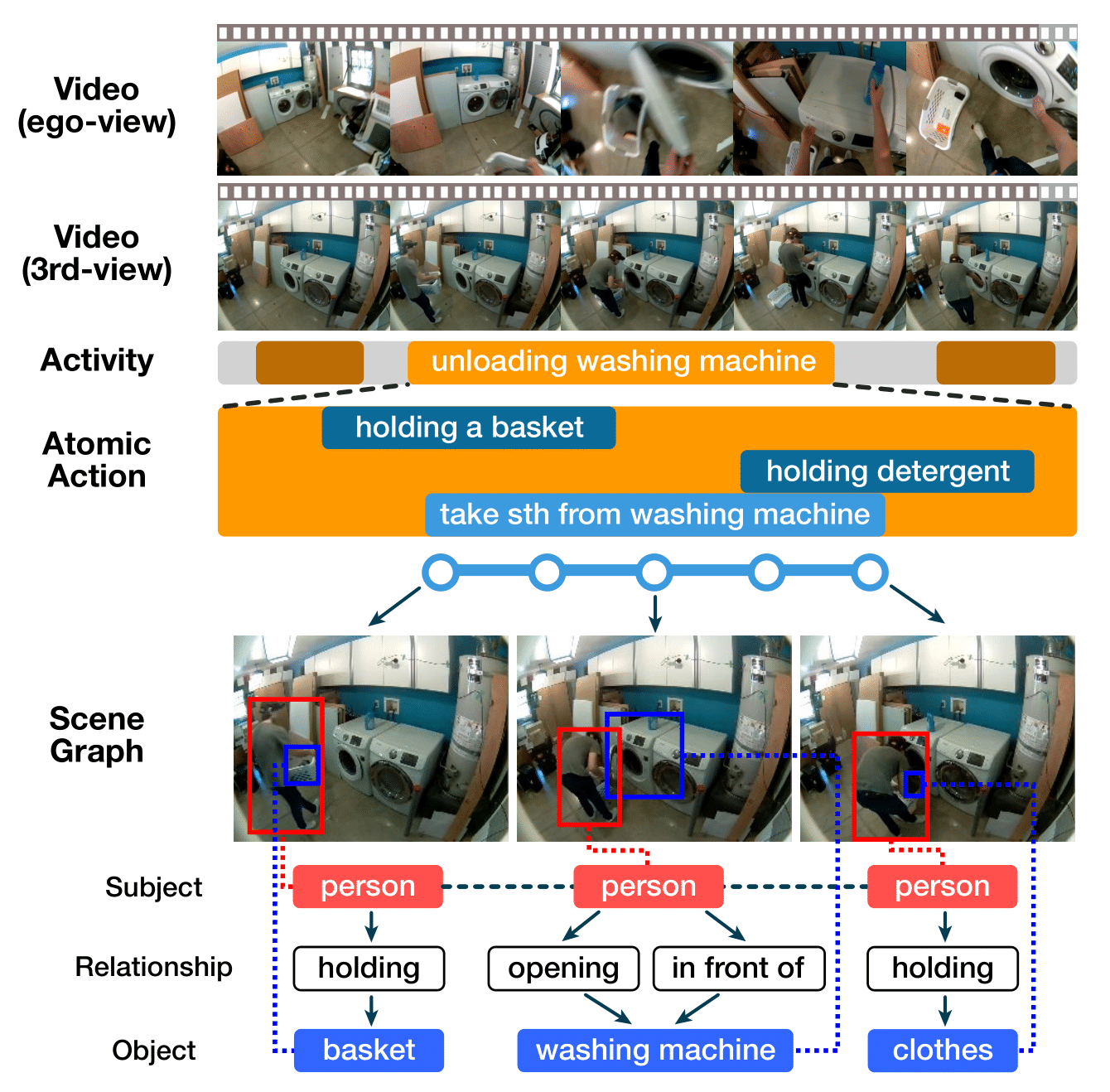

Home Action Genome: Cooperative Compositional Action Understanding

Authors: Nishant Rai

Contact: nishantr018@gmail.com

Links: Paper | Website

Keywords: multi modal, multi camera view, multi perspective, action recognition, action localization, atomic actions, scene graphs, contrastive learning, audio-visual, large scale dataset

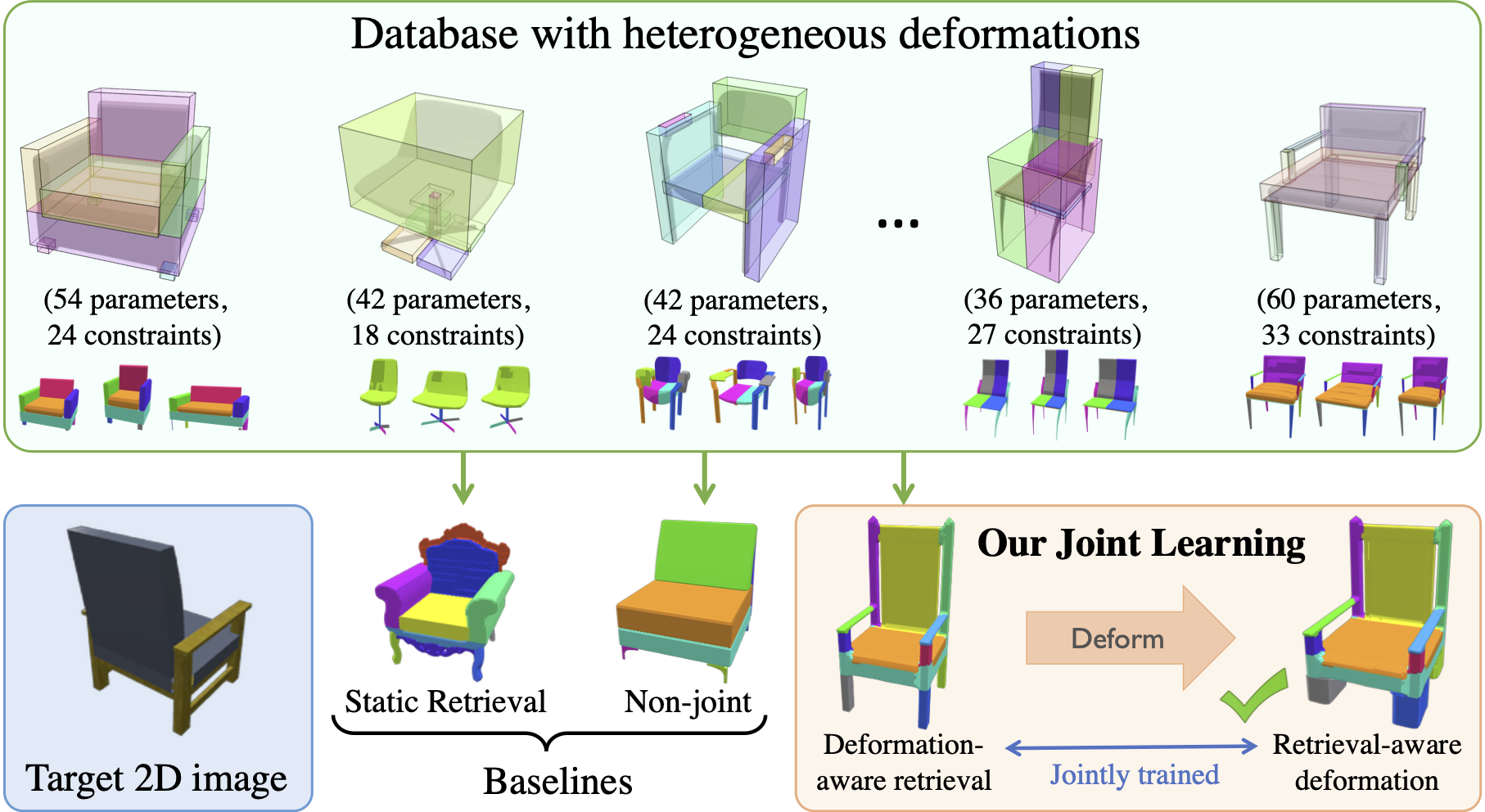

Joint Learning of 3D Shape Retrieval and Deformation

Authors: Mikaela Angelina Uy, Vladimir G. Kim, Minhyuk Sung, Noam Aigerman, Siddhartha Chaudhuri, Leonidas Guibas

Contact: mikacuy@stanford.edu

Links: Paper | Video | Website

Keywords: joint learning, retrieval, deformation

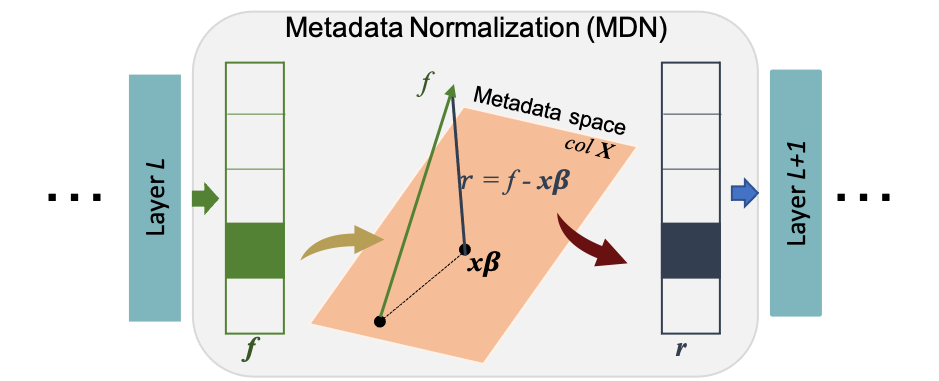

Metadata Normalization

Authors: Mandy Lu, Qingyu Zhao, Jiequan Zhang, Kilian M. Pohl, Li Fei-Fei, Juan Carlos Niebles, Ehsan Adeli

Contact: mlu@cs.stanford.edu

Links: Paper | Website

Keywords: metadata, normalization, bias, deep learning, bias-free feature learning

We look forward to seeing you at CVPR 2021!

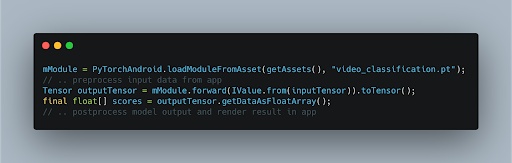

An Overview of the PyTorch Mobile Demo Apps

PyTorch Mobile provides a runtime environment to execute state-of-the-art machine learning models on mobile devices. Latency is reduced, privacy preserved, and models can run on mobile devices anytime, anywhere.

In this blog post, we provide a quick overview of 10 currently available PyTorch Mobile powered demo apps running various state-of-the-art PyTorch 1.9 machine learning models spanning images, video, audio and text.

It’s never been easier to deploy a state-of-the-art ML model to a phone. You don’t need any domain knowledge in machine learning, and we hope one of the examples below resonates with you enough to become the starting point for your next project.

Computer Vision

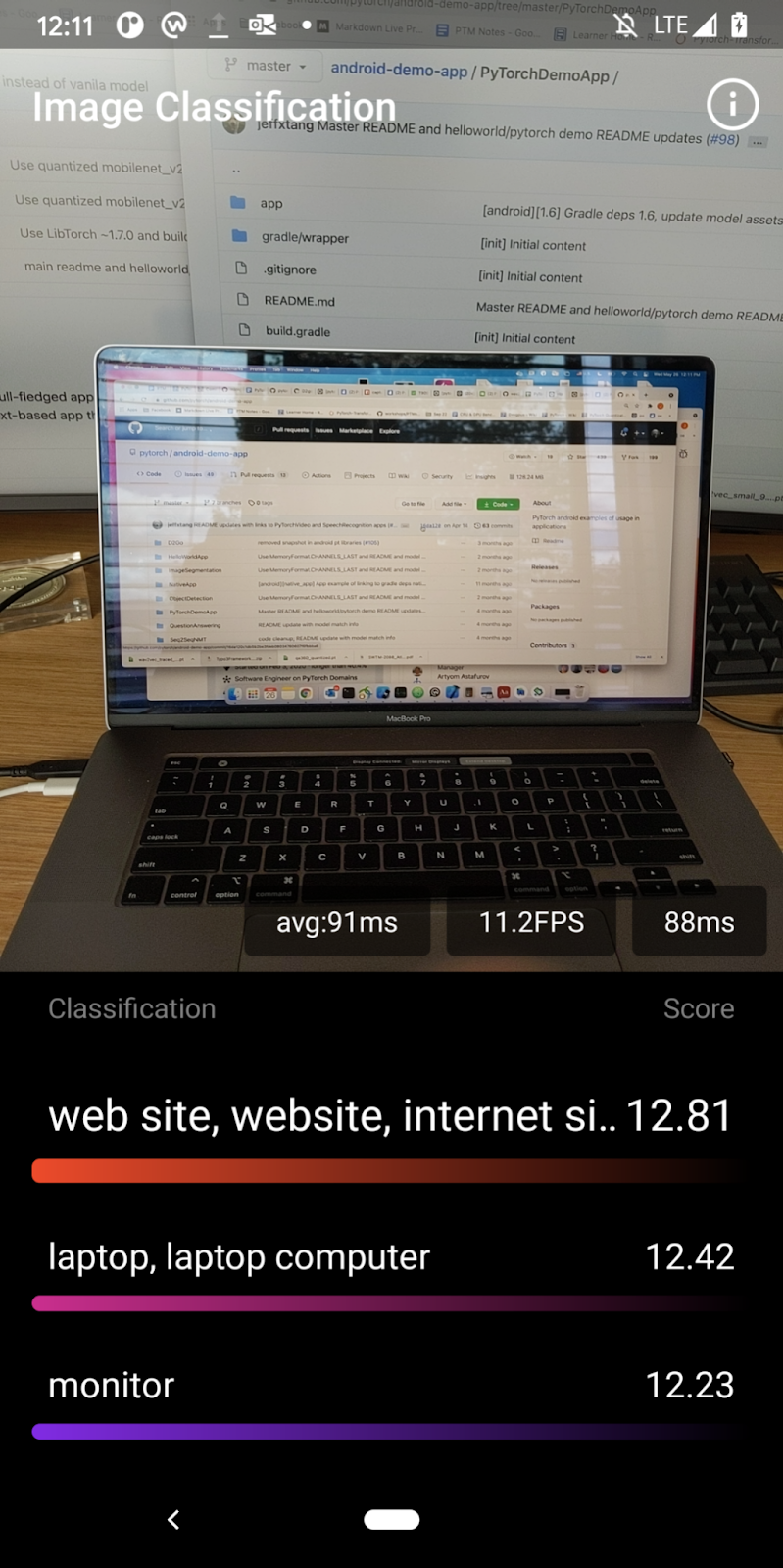

Image Classification

This app demonstrates how to use PyTorch C++ libraries on iOS and Android to classify a static image with the MobileNetv2/3 model.

iOS #1 iOS #2 Android #1 Android #2

Live Image Classification

This app demonstrates how to run quantized MobileNetV2 and ResNet18 models to classify images in real time with an iOS or Android device camera.
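For readers new to the term, the idea behind a quantized model can be sketched in a few lines of plain Python. This is only an illustration of 8-bit affine quantization under simplifying assumptions (one scale and zero point for a whole list of weights); the helper names are hypothetical, and it is not how PyTorch implements quantization internally.

```python
def quantize(values, num_bits=8):
    """Map floats to unsigned integers using one scale and zero point."""
    qmin, qmax = 0, 2 ** num_bits - 1
    # Always include 0.0 in the range so that zero is represented exactly.
    lo = min(min(values), 0.0)
    hi = max(max(values), 0.0)
    scale = (hi - lo) / (qmax - qmin) or 1.0  # avoid a zero scale
    zero_point = round(qmin - lo / scale)
    quantized = [
        max(qmin, min(qmax, round(v / scale) + zero_point)) for v in values
    ]
    return quantized, scale, zero_point


def dequantize(quantized, scale, zero_point):
    """Recover approximate floats from the integer representation."""
    return [(q - zero_point) * scale for q in quantized]


weights = [-1.0, 0.0, 0.5, 1.0]  # toy values, invented for illustration
q_weights, scale, zero_point = quantize(weights)
restored = dequantize(q_weights, scale, zero_point)
print(q_weights, restored)
```

Each weight is stored in one byte instead of four, at the cost of a small rounding error bounded by the scale. That trade-off is what makes quantized models attractive for real-time inference on a phone.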

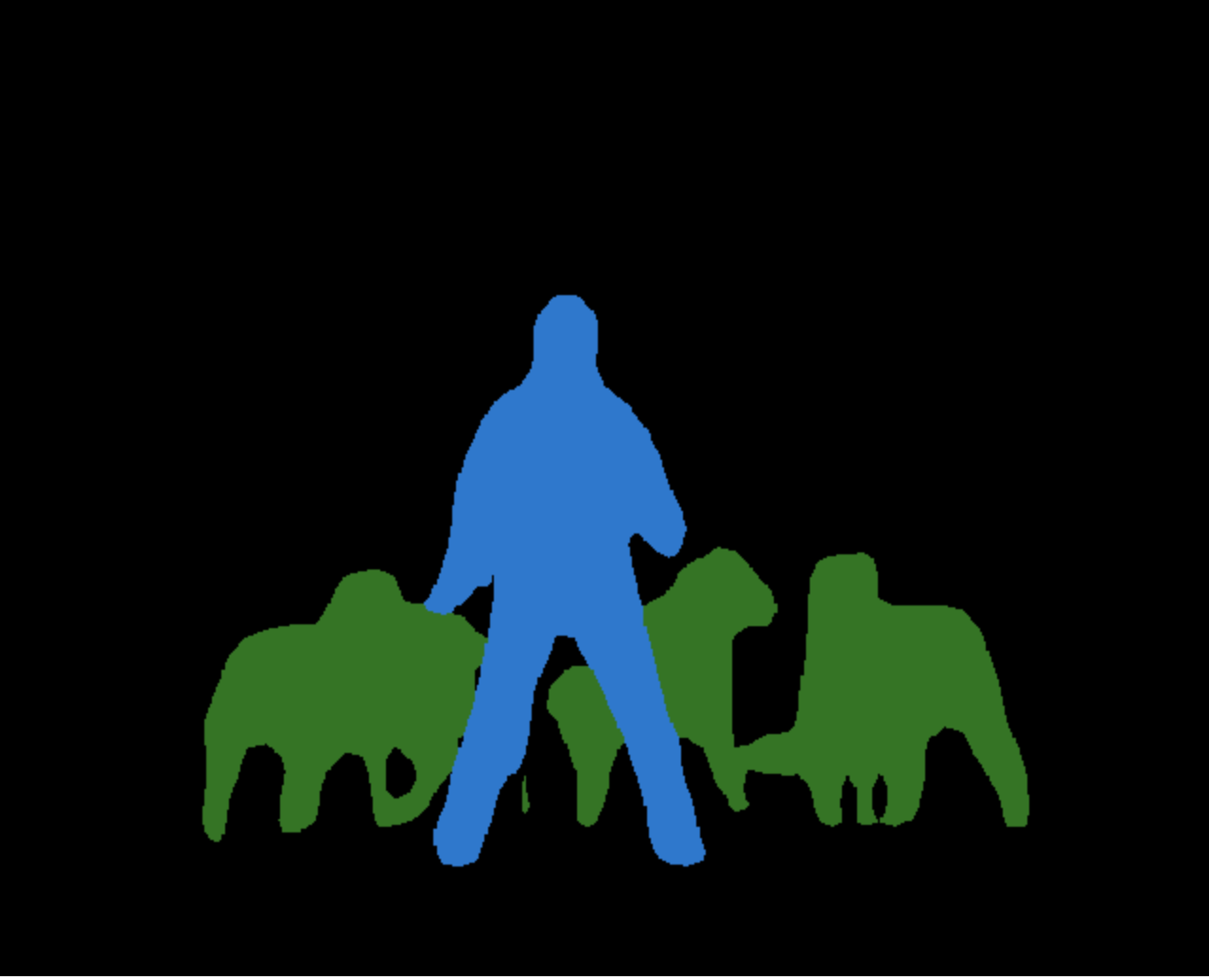

Image Segmentation

This app demonstrates how to use the PyTorch DeepLabV3 model to segment images. The updated app for PyTorch 1.9 also demonstrates how to create the model for the Mobile Interpreter and load it with the LiteModuleLoader API.
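As a rough sketch of the export side of that workflow, the snippet below scripts a model, optimizes it for mobile, and saves it in the format the Mobile Interpreter reads. To stay small and avoid downloading weights, it uses a tiny stand-in module rather than DeepLabV3; `TinyNet` and the file name `tiny_segmenter.ptl` are assumptions made for this example.

```python
import os

import torch
from torch.utils.mobile_optimizer import optimize_for_mobile


class TinyNet(torch.nn.Module):
    """Stand-in for a real segmentation model such as DeepLabV3."""

    def __init__(self):
        super().__init__()
        # A 1x1 convolution producing 2 "class" channels per pixel.
        self.conv = torch.nn.Conv2d(3, 2, kernel_size=1)

    def forward(self, x):
        return self.conv(x)


model = TinyNet().eval()

# Convert the model to TorchScript, then apply mobile-specific optimizations.
scripted = torch.jit.script(model)
optimized = optimize_for_mobile(scripted)

# Save in the lite interpreter format expected by the mobile runtime.
output_path = "tiny_segmenter.ptl"
optimized._save_for_lite_interpreter(output_path)
print(os.path.getsize(output_path) > 0)
```

On the device, the app would then load the saved `.ptl` file through the LiteModuleLoader API mentioned above instead of the full TorchScript loader.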

Vision Transformer for Handwritten Digit Recognition

This app demonstrates how to use Facebook’s latest optimized Vision Transformer DeiT model to do image classification and handwritten digit recognition.

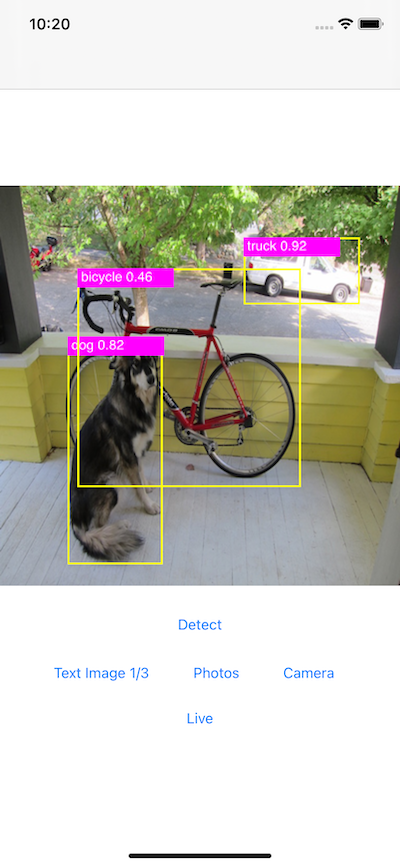

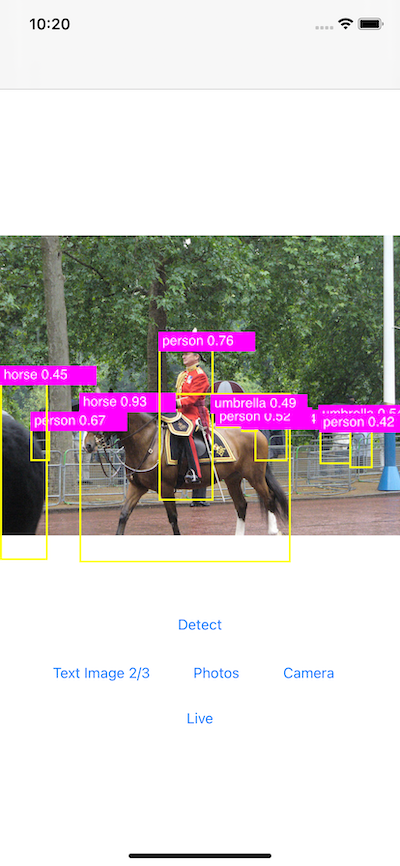

Object Detection

This app demonstrates how to convert the popular YOLOv5 model and use it in an iOS app that detects objects in pictures from your photo library, in pictures taken with the camera, or in the live camera feed.

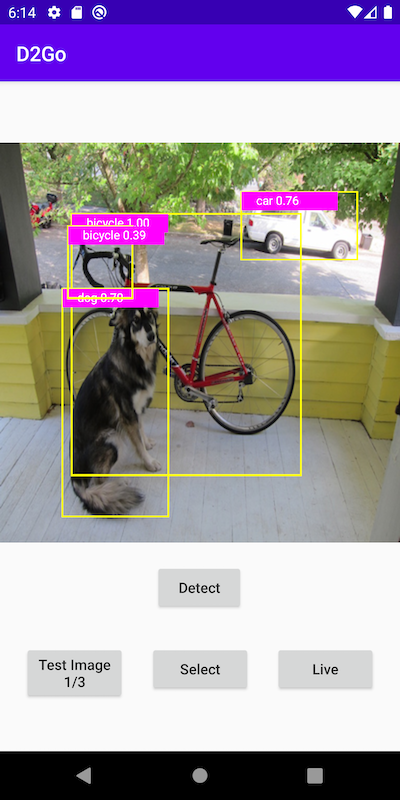

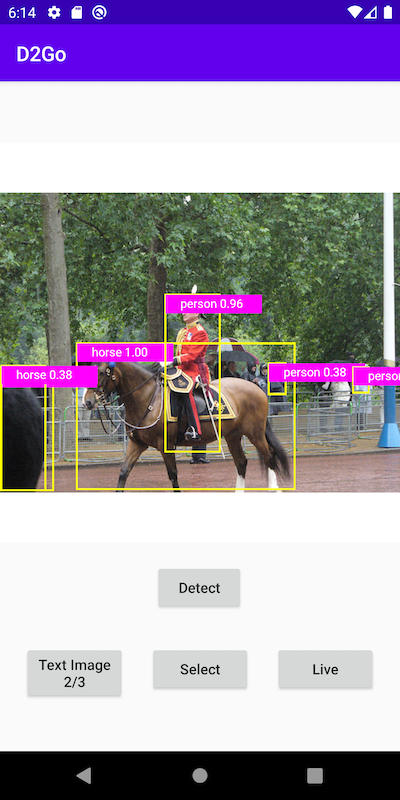

D2Go

This app demonstrates how to create and use a much lighter and faster Facebook D2Go model to detect objects in pictures from your photo library, in pictures taken with the camera, or in the live camera feed.

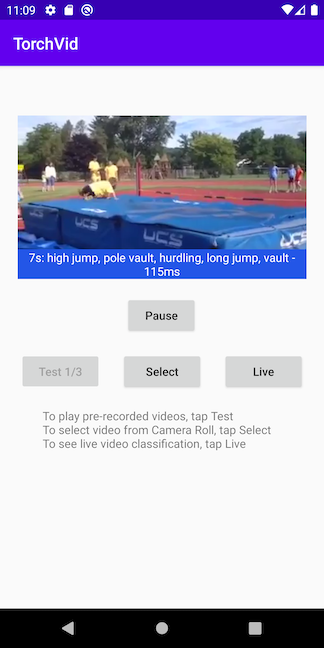

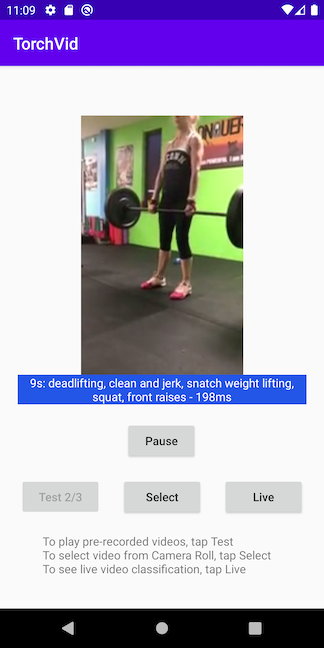

Video

Video Classification

This app demonstrates how to use a pre-trained PyTorchVideo model to perform video classification on test videos, videos from the Photos library, or even real-time videos.

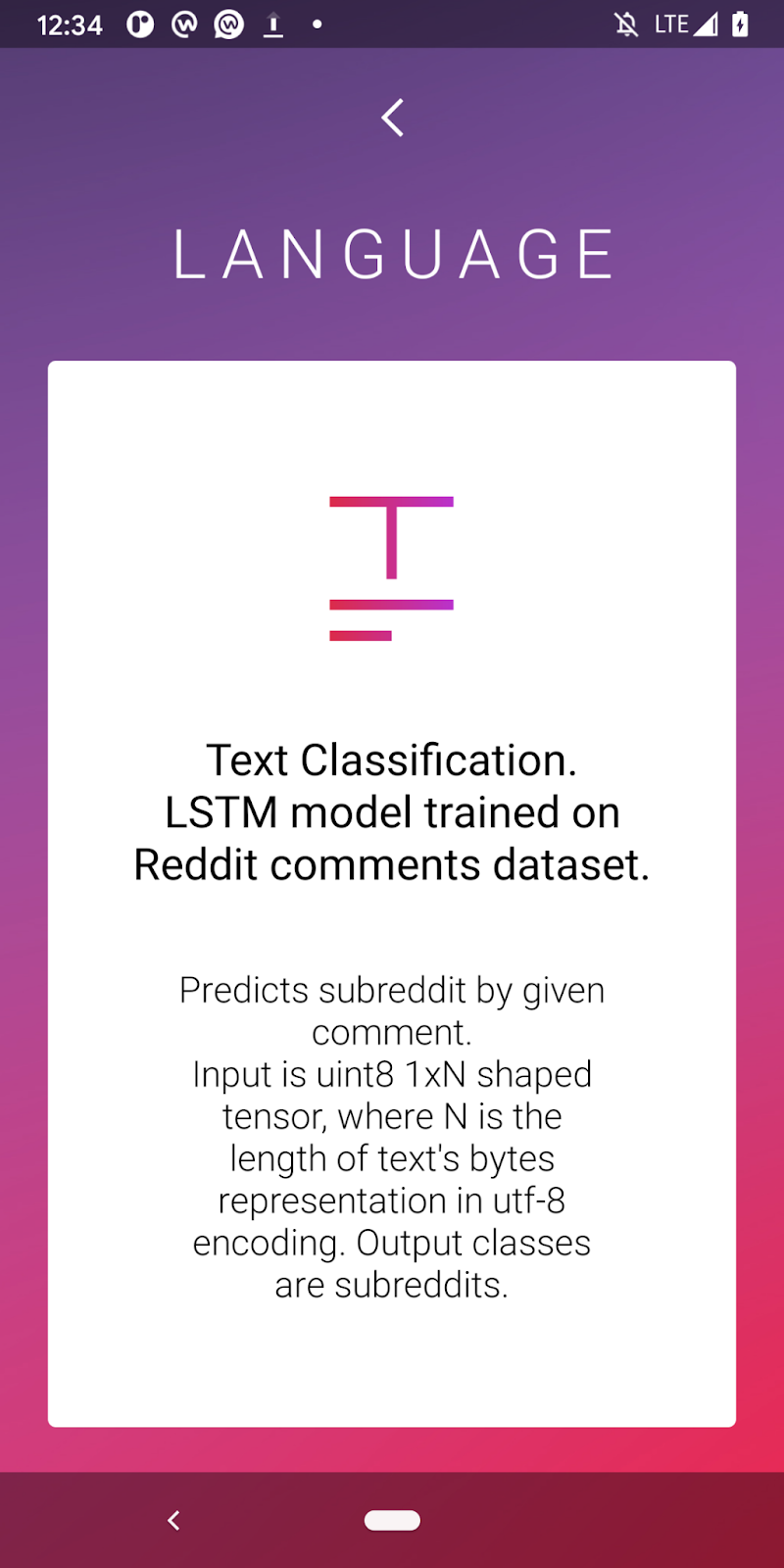

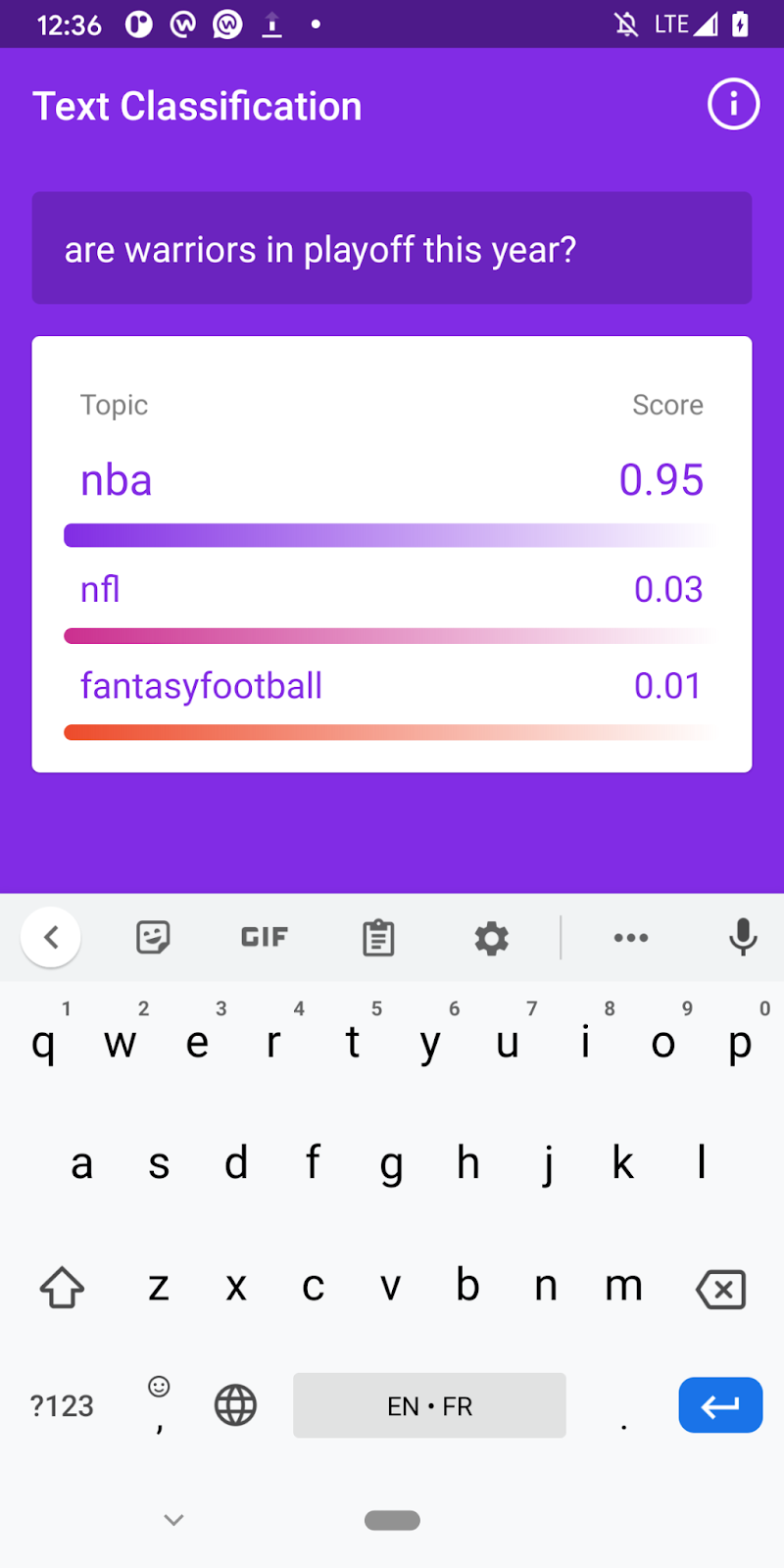

Natural Language Processing

Text Classification

This app demonstrates how to use a pre-trained Reddit model to perform text classification.

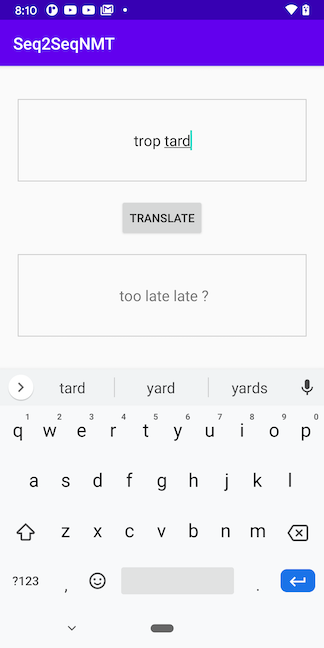

Machine Translation

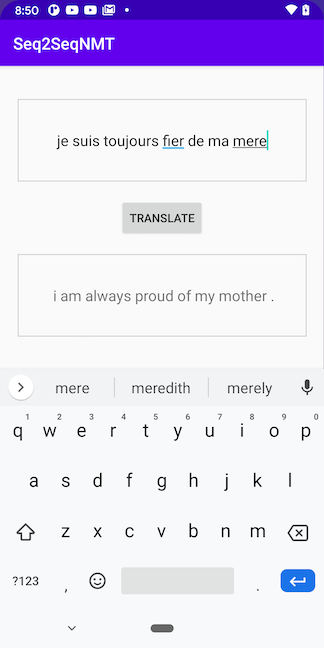

This app demonstrates how to convert a sequence-to-sequence neural machine translation model, trained with the code in the PyTorch NMT tutorial, for French-to-English translation.

Question Answering

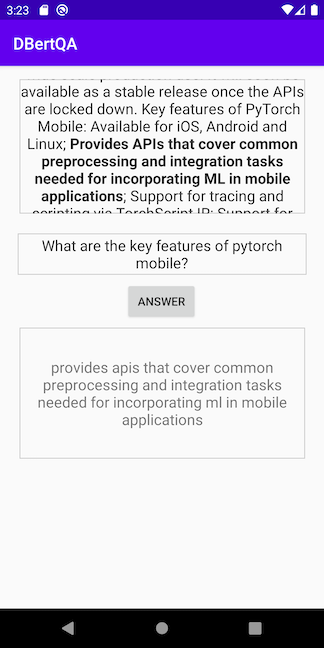

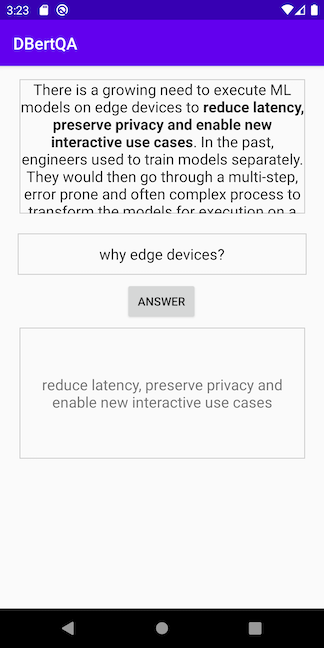

This app demonstrates how to use the DistilBERT Hugging Face transformer model to answer questions about PyTorch Mobile itself.

Audio

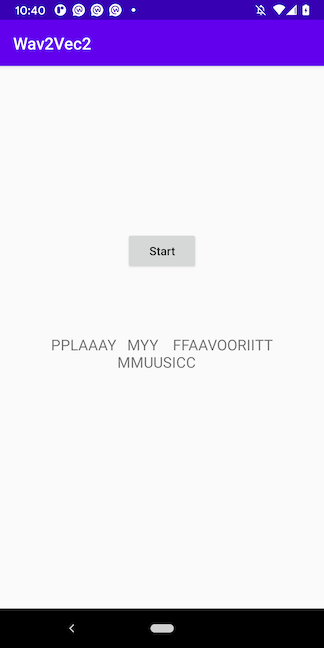

Speech Recognition

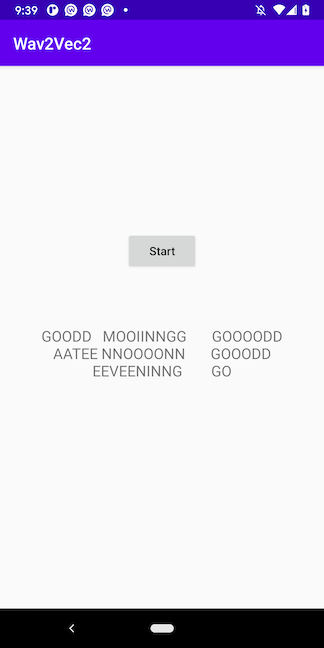

This app demonstrates how to convert Facebook AI’s torchaudio-powered wav2vec 2.0, one of the leading models in speech recognition, to TorchScript before deploying it.

We really hope one of these demo apps stood out for you. For the full list, make sure to visit the iOS and Android demo app repos. You should also check out the video An Overview of the PyTorch Mobile Demo Apps, which provides both an overview of the PyTorch Mobile demo apps and a deep dive into the PyTorch Video app for iOS and Android.

Amazon at CVPR: Pietro Perona on computer vision’s frontiers

Efficient learning and the capacity for abstraction are attributes that will probably require new insights, but self-supervised learning could help.

How Amazon Chime’s noise cancellation works

Combining classic signal processing with deep learning makes method efficient enough to run on a phone.

Women in conversational AI virtual panel discussion

Watch the replay of the June 15 discussion featuring five Amazon scientists.