AI is a powerful tool that will have a significant impact on society for many years to come, from improving sustainability around the globe to advancing the accuracy of disease screenings. As a leader in AI, we’ve always prioritized the importance of understanding its societal implications and developing it in a way that gets it right for everyone.

That’s why we first published our AI Principles two years ago and why we continue to provide regular updates on our work. As our CEO Sundar Pichai said in January, developing AI responsibly and with social benefit in mind can help avoid significant challenges and increase the potential to improve billions of lives.

The world has changed a lot since January, and in many ways our Principles have become even more important to the work of our researchers and product teams. As we develop AI we are committed to testing safety, measuring social benefits, and building strong privacy protections into products. Our Principles give us a clear framework for the kinds of AI applications we will not design or deploy, like those that violate human rights or enable surveillance that violates international norms. For example, we were the first major company to have decided, several years ago, not to make general-purpose facial recognition commercially available.

Over the last 12 months, we’ve shared our point of view on how to develop AI responsibly—see our 2019 annual report and our recent submission to the European Commission’s Consultation on Artificial Intelligence. This year, we’ve also expanded our internal education programs, applied our principles to our tools and research, continued to refine our comprehensive review process, and engaged with external stakeholders around the world, while identifying emerging trends and patterns in AI.

Building on previous AI Principles updates we shared here on the Keyword in 2018 and 2019, here’s our latest overview of what we’ve learned, and how we’re applying these learnings in practice.

Internal education

In addition to the initial Tech Ethics training, which 800+ Googlers have taken since its launch last year, this year we developed a new training for AI Principles issue spotting. We piloted the course with more than 2,000 Googlers, and it is now available as an online self-study course to all Googlers across the company. The course coaches employees on asking critical questions to spot potential ethical issues, such as whether an AI application might lead to economic or educational exclusion, or cause physical, psychological, social or environmental harm. We recently released a version of this training as a mandatory course for customer-facing Cloud teams, and 5,000 Cloud employees have already taken it.

Tools and research

Our researchers are working on computer science and technology not just for today, but for tomorrow as well. They continue to play a leading role in the field, publishing more than 200 academic papers and articles in the last year on new methods for putting our principles into practice. These publications address technical approaches to fairness, safety, privacy, and accountability to people, including effective techniques for improving fairness in machine learning at scale, a method for incorporating ethical principles into a machine-learned model, and design principles for interpretable machine learning systems.

Over the last year, a team of Google researchers and collaborators published an academic paper proposing a framework called Model Cards. Similar to a food nutrition label, a Model Card is designed to report an AI model’s intended use and its performance for people from a variety of backgrounds. We’ve applied this research by releasing Model Cards for the Face Detection and Object Detection models used in Google Cloud’s Vision API product.
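
To make the nutrition-label idea concrete, here is a minimal Python sketch of the kind of record a model card might hold: a statement of intended use alongside performance broken out by group. The class names, field names, and `accuracy_gap` helper are hypothetical and are not taken from the Model Cards paper or from any Google tool, and the numbers in the usage lines are made up purely for illustration.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class GroupResult:
    """Evaluation result for one slice of the test data (hypothetical schema)."""
    group: str
    n_examples: int
    n_correct: int

    @property
    def accuracy(self) -> float:
        # Guard against an empty slice rather than dividing by zero.
        return self.n_correct / self.n_examples if self.n_examples else 0.0


@dataclass
class ModelCard:
    """Illustrative record only; field names are assumptions."""
    model_name: str
    intended_use: str
    out_of_scope_uses: List[str] = field(default_factory=list)
    results: List[GroupResult] = field(default_factory=list)

    def accuracy_gap(self) -> float:
        """Difference between the best and worst per-group accuracy."""
        accuracies = [r.accuracy for r in self.results]
        return max(accuracies) - min(accuracies) if accuracies else 0.0

    def render(self) -> str:
        """Return a plain-text summary, one line per field or group."""
        lines = [
            f"Model: {self.model_name}",
            f"Intended use: {self.intended_use}",
        ]
        lines += [f"Out of scope: {use}" for use in self.out_of_scope_uses]
        lines += [
            f"Accuracy ({r.group}, n={r.n_examples}): {r.accuracy:.2f}"
            for r in self.results
        ]
        lines.append(f"Largest accuracy gap: {self.accuracy_gap():.2f}")
        return "\n".join(lines)


# Made-up example values, for illustration only.
card = ModelCard(
    model_name="example-detector",
    intended_use="Detecting objects in everyday photos",
    out_of_scope_uses=["Identifying individual people"],
    results=[
        GroupResult("group A", n_examples=100, n_correct=90),
        GroupResult("group B", n_examples=100, n_correct=80),
    ],
)
print(card.render())
```

Reporting the per-group figures next to the intended use, rather than a single aggregate score, is what lets a reader see at a glance whether a model performs unevenly across the people it is meant to serve.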

Our goal is for Google to be a helpful partner not only to researchers and developers who are building AI applications, but also to the billions of people who use them in everyday products. We’ve gone a step further, releasing 14 new tools that help explain how responsible AI works, from simple data visualizations on algorithmic bias for general audiences to Explainable AI dashboards and tool suites for enterprise users. You’ll find a number of these within our new Responsible AI with TensorFlow toolkit.

Review process

As we’ve shared previously, Google has a central, dedicated team that reviews proposals for AI research and applications for alignment with our principles. Operationalizing the AI Principles is challenging work. Our review process is iterative, and we continue to refine and improve our assessments as advanced technologies emerge and evolve. The team also consults with internal domain experts in machine-learning fairness, security, privacy, human rights, and other areas.

Whenever relevant, we conduct additional expert human rights assessments of new products in our review process, before launch. For example, we enlisted the nonprofit organization BSR (Business for Social Responsibility) to conduct a formal human rights assessment of the new Celebrity Recognition tool, offered within Google Cloud Vision and Video Intelligence products. BSR applied the UN’s Guiding Principles on Business and Human Rights as a framework to guide the product team to consider the product’s implications across people’s privacy and freedom of expression, as well as potential harms that could result, such as discrimination. This assessment informed not only the product’s design, but also the policies around its use.

In addition, because any robust evaluation of AI needs to consider not just technical methods but also social context(s), we consult a wider spectrum of perspectives to inform our AI review process, including social scientists and Google’s employee resource groups.

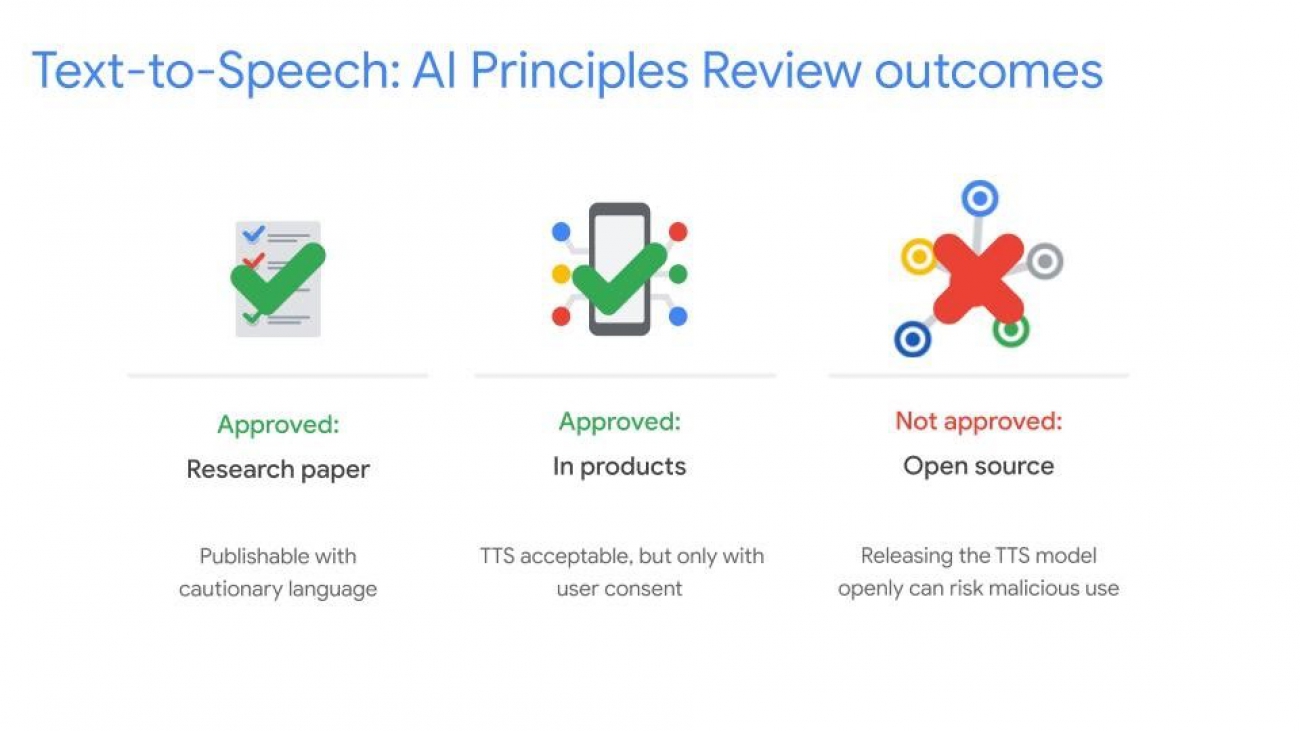

As one example, consider how we’ve built upon learnings from a case we published in our last AI Principles update: the review of academic research on text-to-speech (TTS) technology. Since then, we have applied what we learned in that earlier review to establish a Google-wide approach to TTS. Google Cloud’s Text-to-Speech service, used in products such as Google Lens, puts this approach into practice.

Because TTS could be used across a variety of products, a group of senior Google technical and business leads was consulted. They considered the proposal against our AI Principles of being socially beneficial and accountable to people, as well as the need to incorporate privacy by design and to avoid technologies that cause or are likely to cause overall harm.

- Reviewers identified the benefits of an improved user interface for various products, and significant accessibility benefits for people with hearing impairments.
- They considered the risks of voice mimicry and impersonation, media manipulation, and defamation.
- They took into account how an AI model is used, and recognized the importance of adding layers of barriers for potential bad actors, to make harmful outcomes less likely.
- They recommended on-device privacy and security precautions that serve as barriers to misuse, reducing the risk of overall harm from use of TTS technology for nefarious purposes.
- The reviewers recommended approving TTS technology for use in our products, but only with user consent and on-device privacy and security measures.
- They did not approve open-sourcing of TTS models, due to the risk that someone might misuse them to build harmful deepfakes and distribute misinformation.

External engagement

To increase the number and variety of outside perspectives, this year we launched the Equitable AI Research Roundtable, which brings together advocates for communities of people who are currently underrepresented in the technology industry, and who are most likely to be impacted by the consequences of AI and advanced technology. This group of community-based, non-profit leaders and academics meets with us quarterly to discuss AI ethics issues, and learnings from these discussions help shape operational efforts and decision-making frameworks.

Our global efforts this year included new programs to support non-technical audiences in their understanding of, and participation in, the creation of responsible AI systems, whether they are policymakers, first-time ML (machine learning) practitioners or domain experts. These included:

- Partnering with Yielding Accomplished African Women to implement the first-ever Women in Machine Learning Conference in Africa. We built a network of 1,250 female machine learning engineers from six different African countries. Using the Google Cloud Platform, we trained and certified 100 women at the conference in Accra, Ghana. More than 30 universities and 50 companies and organizations were represented. The conference schedule included workshops on Qwiklabs, AutoML, TensorFlow, a human-centered approach to AI, mindfulness and #IamRemarkable.
- Releasing, in partnership with the Ministry of Public Health in Thailand, the first study of its kind on how researchers apply nurses’ and patients’ input to make recommendations on future AI applications, based on how nurses deployed a new AI system to screen patients for diabetic retinopathy.
- Launching an ML workshop for policymakers featuring content and case studies covering the topics of Explainability, Fairness, Privacy, and Security. We’ve run this workshop, via Google Meet, with over 80 participants in the policy space, with more workshops planned for the remainder of the year.
- Hosting the PAIR (People + AI Research) Symposium in London, which focused on participatory ML and marked PAIR’s expansion to the EMEA region. The event drew 160 attendees across academia, industry, engineering, and design, and featured cross-disciplinary discussions on human-centered AI and hands-on demos of ML Fairness and interpretability tools.

We remain committed to external, cross-stakeholder collaboration. We continue to serve on the board and as a member of the Partnership on AI, a multi-stakeholder organization that studies and formulates best practices on AI technologies. As an example of our work together, the Partnership on AI is developing best practices that draw from our Model Cards proposal as a framework for accountability among its member organizations.

Trends, technologies and patterns emerging in AI

We know that no system, whether human or AI-powered, will ever be perfect, so we don’t consider the task of improving it ever finished. We continue to identify emerging trends and challenges that surface in our AI Principles reviews. These prompt us to ask questions such as when and how to responsibly develop synthetic media, keep humans in an appropriate loop of AI decisions, launch products with strong fairness metrics, deploy affective technologies, and offer explanations of how AI works within products themselves.

As Sundar wrote in January, it’s crucial that companies like ours not only build promising new technologies, but also harness them for good—and make them available for everyone. This is why we believe regulation can offer helpful guidelines for AI innovation, and why we share our principled approach to applying AI. As we continue to responsibly develop and use AI to benefit people and society, we look forward to continuing to update you on specific actions we’re taking, and on our progress.

Yassine Landa is a Data Scientist at AWS. He holds an undergraduate degree in Math and Physics, and master’s degrees from French universities in Computer Science and Data Science, Web Intelligence, and Environment Engineering. He is passionate about building machine learning and artificial intelligence products for customers, and has won multiple awards for machine learning products he has built with tech startups and as a startup founder.
