The LGBTQ+ community has a long history of resilience and activism in the fight for acceptance and equal rights in the United States. Pride Month is celebrated every June to honor the 1969 Stonewall Uprising in Manhattan and activists such as Marsha P. Johnson and Sylvia Rivera. This year, the 50th anniversary of Pride coincides with a swell of support for the fight against racial injustice and the Black Lives Matter movement, with protests and demonstrations occurring in every state across the U.S. and in countries around the world.

To reflect on the history of Pride Month and its roots in activism, we reached out to the LGBTQ+ community at Facebook. Researchers Gregory Davis, Meghan Rolfe, Darwin Mastin, TJ Olojede, and Hannah Furnas each volunteered their time to share what Pride means to them, how their research influences our products, and how they’re recognizing Pride this year.

Designing a product to bring our authentic selves

Gregory Davis (he/him) is a UX Researcher working on Portal.

Portal allows us to connect with the people who matter to us from all aspects of our lives. Making sure people are comfortable is vitally important to that goal. As a UX Researcher, I study what Portal users need in order to bring their multiple selves to the device. For LGBTQ+ users, these questions take on enhanced importance.

Pride, to me, is about celebrating the things about you that people can’t see and that many don’t want to see. The freedom to be out — to be all of our identities all of the time — is a gift that LGBTQ+ people cherish, given to us by our queer fore-parents and paid for with the blood, sweat, and tears of their activism and resistance. That activism tipped the scales toward equality with the Stonewall Uprising in June of 1969 when members of the LGBTQ+ community protested against the frequent police raids on the Stonewall Inn — a fight that is extremely relevant to today’s protests against police brutality.

Because queer people fought back at Stonewall and galvanized on the streets, in their homes, and at the ballot box, we celebrate Pride Month every June. We celebrate winning marriage equality, protection against discrimination, and the ability to live our lives openly and honestly. That work isn’t done, however. In 2018 and 2019, at least two transgender or gender-nonconforming people were murdered each month. Most of these victims were Black trans women. Black LGBTQ+ people are still in the fight for respect from their families, recognition and equity at work, and safety from state violence.

When I look at my work as a bisexual Black man here at Facebook and beyond, I bring that history and knowledge with me. I design and implement projects at Portal thinking about the consumer in all their facets, including their race and sexuality. This helps us create a better product for everybody by making sure no one is excluded or neglected.

Working toward a safer platform for people to live their truth

Meghan Rolfe (she/her) is a UX Researcher working on Community Integrity.

Pride, to me, represents the journey that ends with the open-armed embrace of the LGBTQ+ community and the feeling that we are all in this together, that we see one another. I believe many of us grew up with a deep pain caused by the feeling that we are the “Other” in society, internalizing a fear of rejection and staying tightly in the closet. For me, Pride is about the release from that fear of rejection and the overwhelming joy you feel once you can live your truth.

My work in Community Integrity relates to this. Part of my role is understanding the potential benefits and harms of identity verification on our platforms, as well as the steps we can take to support individuals regardless of identity, documentation status, or membership in marginalized groups. Many people use our platforms to find a community where they can safely express their authentic selves. Transgender people in particular are often able to be their true selves online before they’ve come out to their family and friends. This is a wonderful use of our products, and we should find ways to support this even more.

However, there is a flip side to this. Like those of many other companies, our security systems are built around identity verification: If someone is hacked, they are asked for government-issued documents that can confirm they are who they say they are. This means that people who use a different identity online, even if that identity is their most authentic one, are still expected to match their government-issued documents exactly, which makes it difficult to resolve disparities between on- and offline identities. Based on prior feedback, we’ve changed our policy to accept a wider range of documents beyond government-issued ID; even so, we are currently conducting additional research on this experience to understand how we can better support people whose on- and offline identities differ.

Identity verification also allows us to hold people accountable for any violations of our Community Standards, such as bullying and harassment. It’s important that we provide victims with the ability to report not just the accounts responsible for harassing behaviors, but also the individuals behind those accounts. By creating systems of accountability, we can better protect members of the LGBTQ+ community from both online and offline attacks.

This Pride, we must not only remember the LGBTQ+ leaders who fought for us to be able to live our truths, but also remind ourselves that this fight continues.

Listening, learning, and teaching with empathy

Darwin Mastin (he/him; they/them) is a UX Researcher working on pathfinding.

As a human behavior researcher, I love to learn about what drives people. I want to understand our unconscious actions, and make this knowledge available through stories and products. My research at Facebook is focused on understanding current and future gaps within the Facebook app and the company. We are not perfect, but I think research can influence the products and company by bringing other necessary and underrepresented voices to the table.

To me, Pride doesn’t stop at being proud. In addition to celebrating ourselves and our community, we must continue to stand up for our community and have the support of our allies in doing so. We all need to focus on listening, learning, and being involved — because our celebrations of Pride were born from similar calls for justice by queer trans people of color.

One of the ways we can help is educating others about the issues that marginalized communities face. The LGBTQ+ community spans every demographic group — race, age, education level, and so on. We can’t make assumptions about anyone else’s experience; we need to reach out and listen and learn, because all of our histories are so different and so broad, and coming together to celebrate and understand these differences makes for a stronger community.

However, while it is necessary to do the work of educating others, it should not be the sole responsibility of marginalized communities. I’ve found that when those who are less informed are able to attach to a story or an experience, it drives empathy and inspires them to keep learning rather than stopping at what they learn in the moment. But once inspired, new allies must share the burden, internalize their learning, and educate others. It’s important that our allies take a moment and have those difficult conversations. It will be hard, but that’s where it starts.

A movement is not a moment. It is action and reaction, and building on that over and over again. Change will come from listening to our broader communities and giving voice to people who have not been heard. It isn’t something for today and not tomorrow. We know the next steps are voting, representation, and policy, and those steps will follow from the public’s demands. Those are the building blocks of pride.

Fostering inclusion through work and at work

TJ Olojede (he/him) is a Creative Researcher in Facebook’s Creative Shop.

As the Creative Research team within Facebook’s Creative Shop, our focus is on elevating creativity in advertising on the platform and helping advertisers make the most of creativity to achieve better business outcomes. Our research helps us understand which creative strategies perform better, and we share those best practices with all of the businesses that advertise on Facebook. I like to think that in this way, we make Facebook advertising more inclusive and accessible to the everyday “pop-and-pop” shop and to businesses big and small.

Pride Month feels bittersweet to me this year. It is an interesting time to exist at the intersection of being gay and Black in America, even as an immigrant. Often these two identities exist in conflict and influence how much I feel I belong to either group, since I’m still an “other” within each community. When I first moved to the U.S., I was excited to leave Nigeria and to be somewhere LGBTQ+ rights were leaps and bounds above anything back home, even if they were still far from ideal. And then I started to understand what it meant to be Black in America, and I remember thinking I had just exchanged one oppression for another.

When the more recent Black Lives Matter protests started after the murder of George Floyd, it felt clear to me how to feel. With June being Pride Month, however, it didn’t feel right to celebrate Pride and be happy in the midst of all that was going on. Even worse, I felt betrayed seeing all of my non-POC friends who hadn’t said anything about BLM suddenly want to celebrate Pride.

But at work, I appreciated that the Pride team was sensitive and empathetic enough to hold off on all of the Pride fanfare in the middle of the protests, and that to me spoke volumes about how much we care about each other within the company. I appreciate that I work with a team of inclusive, caring people who make work a safe space and engender that sense of belonging and emotional closeness. For me, inclusion boils down to feeling like I matter, like I belong here, and that there are others here like me.

Acquiring a more complete picture of our community

Hannah Furnas (she/her) is a Research Science Manager on the Demography and Survey Science team.

At Facebook, I support a team of researchers working on projects at the intersection of survey research and machine learning. We design projects to collect survey ground truth that’s used to train and evaluate machine learning models.

To me, Pride means embracing my own queer identity and showing up for the LGBTQ+ community. Embracing my identity is an ongoing process of showing up more fully for myself so that I can show up for others. I’m intentionally expanding my understanding of what it means to belong to the LGBTQ+ community, which includes noticing and unlearning a lot of what my upbringing taught me.

I grew up in a very white, cis-normative, heteronormative environment. People, structures, and institutions praised heterosexual couples and shamed other types of relationships. When I came out two years ago, a lot of the pushback I received was from people who couldn’t fathom why I wanted to come out as bi/pan since I was in a relationship with a cis man. This idea that I should hide who I am is one of the reasons it took me so long to come out to myself. Through support from my colleagues in the Pride community at Facebook, I’ve begun to truly embrace who I am.

Not only did my early socialization shape my coming-out experience, but it also gave me an incomplete picture of LGBTQ+ history and the current issues we face. I was exposed to media that suggested the work was done and equal rights were won. This obviously isn’t the case. Systemic discrimination and violence disproportionately impact BIPOC and trans communities, even though our movement wouldn’t exist today without trans activists like Marsha P. Johnson and Sylvia Rivera.

If I fail to acknowledge the full lived experiences of others in our community, then I’m also upholding the structures and systems that continue to oppress the LGBTQ+ community. I’m continuing this lifelong work of building awareness and taking action to embrace ourselves and our community more fully. To me, this is what Pride is all about — this year and every year.

—

Diversity is crucial to understanding where we’re succeeding and where we need to do better in our business. It enables us to build better products, make better decisions, and better serve our communities. We are proud of our attention to the LGBTQ+ experience across our apps and technologies, often thanks to the many LGBTQ+ people and allies who work at Facebook.

To learn more about Diversity at Facebook, visit our website.

The post Reflecting on Pride: How five Facebook researchers honor their LGBTQ+ history appeared first on Facebook Research.

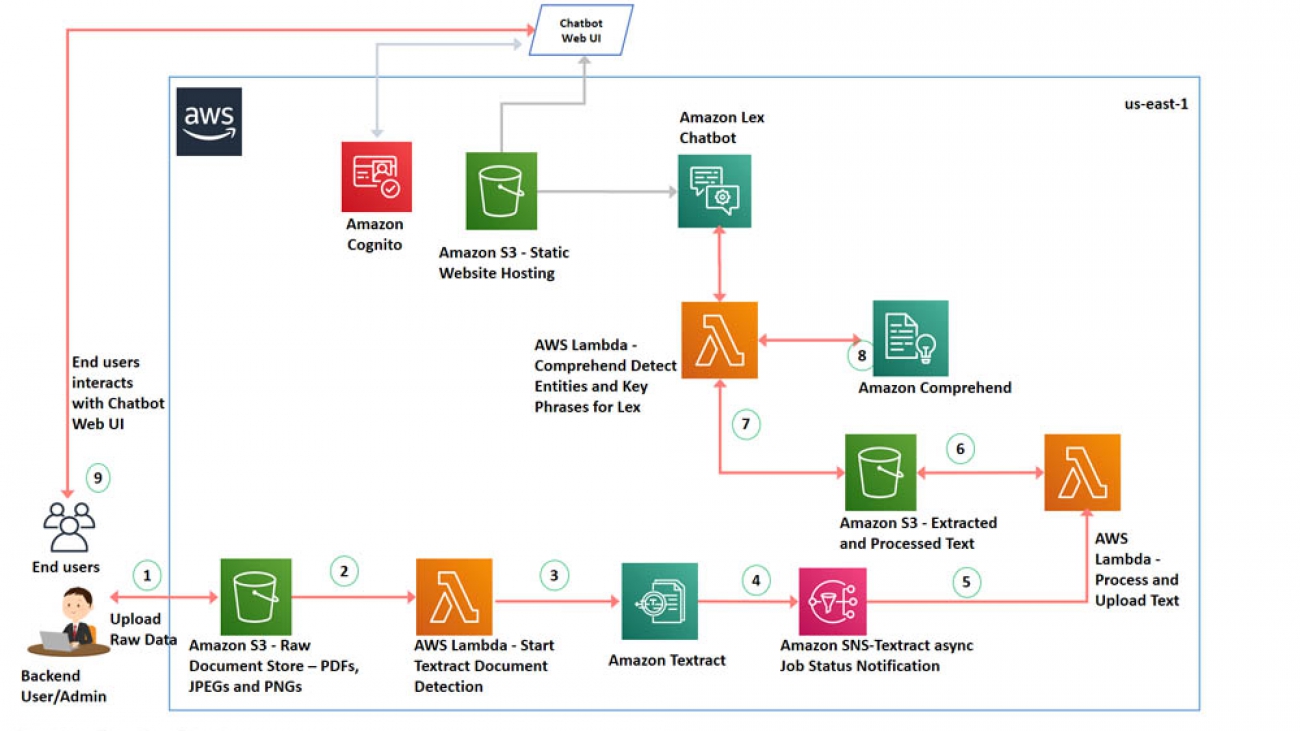

Mona Mona is an AI/ML Specialist Solutions Architect based out of Arlington, VA. She works with the Worldwide Public Sector team and helps customers adopt machine learning at a large scale. She is passionate about the NLP and ML explainability areas of AI/ML.

Prem Ranga is an Enterprise Solutions Architect based out of Houston, Texas. He is part of the Machine Learning Technical Field Community and loves working with customers on their ML and AI journey. Prem is passionate about robotics, is an Autonomous Vehicles researcher, and also built the Alexa-controlled Beer Pours in Houston and other locations.

Saida Chanda is a Senior Partner Solutions Architect based out of Seattle, WA. He is a technology enthusiast who drives innovation through AWS partners to meet customers’ complex business requirements with simple solutions. His areas of interest are ML and DevOps. In his spare time, he likes to spend time with family and explore his inner self through meditation.

Luis Leon is the IT Innovation Advisor responsible for the data science practice in IT at Euler Hermes. He is in charge of ideating digital projects as well as managing the design, build, and industrialization of machine learning products at scale. His main interests are Natural Language Processing, Time Series Analysis, and unsupervised learning.

Hamza Benchekroun is a Data Scientist in the IT Innovation hub at Euler Hermes, focusing on deep learning solutions to increase productivity and guide decision-making across teams. His research interests include Natural Language Processing, Time Series Analysis, Semi-Supervised Learning, and their applications.

Hatim Binani is a data scientist intern in the IT Innovation hub at Euler Hermes. He is an engineering student in the computer science department at INSA Lyon. His fields of interest are data science and machine learning. Within the IT Innovation team, he contributed to the deployment of Watson on Amazon SageMaker.

Guillaume Chambert is an IT security engineer at Euler Hermes. As SOC manager, he strives to stay ahead of new threats in order to protect Euler Hermes’ sensitive and mission-critical data. He is interested in developing innovative solutions to prevent critical information from being stolen, damaged, or compromised by hackers.