We find that, just as a large transformer model trained on language can generate coherent text, the exact same model trained on pixel sequences can generate coherent image completions and samples. By establishing a correlation between sample quality and image classification accuracy, we show that our best generative model also contains features competitive with top convolutional nets in the unsupervised setting.

Introduction

Unsupervised and self-supervised learning, or learning without human-labeled data, is a longstanding challenge of machine learning. Recently, it has seen incredible success in language, as transformer models like BERT, GPT-2, RoBERTa, T5, and other variants have achieved top performance on a wide array of language tasks. However, the same broad class of models has not been successful in producing strong features for image classification. Our work aims to understand and bridge this gap.

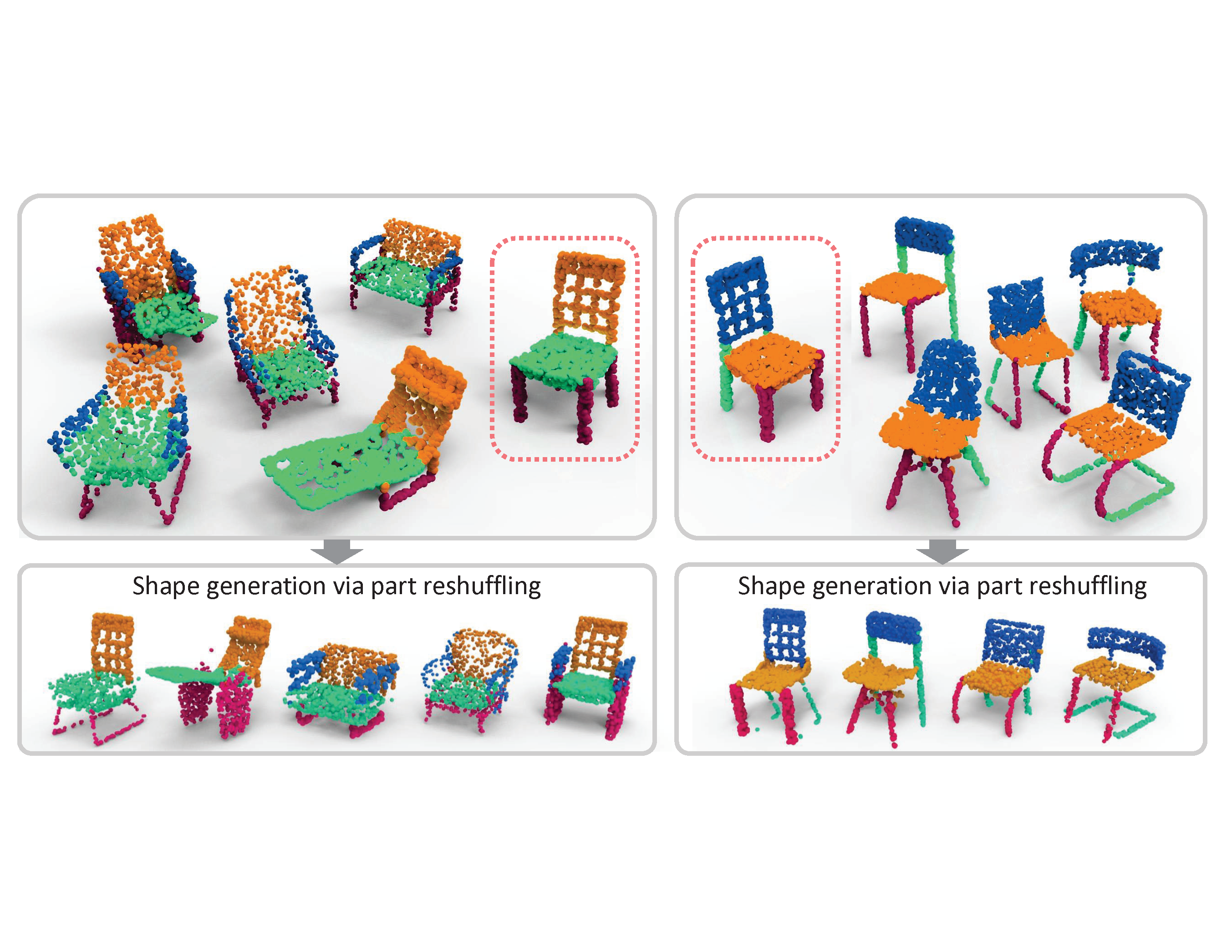

Transformer models like BERT and GPT-2 are domain agnostic, meaning that they can be directly applied to 1-D sequences of any form. When we train GPT-2 on images unrolled into long sequences of pixels, a model we call iGPT, we find that it appears to understand 2-D image characteristics such as object appearance and category. This is evidenced by the diverse range of coherent image samples it generates, even without the guidance of human-provided labels. As further evidence, features from the model achieve state-of-the-art performance on a number of classification datasets and near state-of-the-art unsupervised accuracy[1] on ImageNet.

| Evaluation | Dataset | Our Result | Best non-iGPT Result |
|---|---|---|---|
| Logistic regression on learned features (linear probe) | CIFAR-10 | 96.3 (iGPT-L 32×32 w/ 1536 features) | 95.3 (SimCLR w/ 8192 features) |
| | CIFAR-100 | 82.8 (iGPT-L 32×32 w/ 1536 features) | 80.2 (SimCLR w/ 8192 features) |
| | STL-10 | 95.5 (iGPT-L 32×32 w/ 1536 features) | 94.2 (AMDIM w/ 8192 features) |
| | ImageNet | 72.0 (iGPT-XL[a] 64×64 w/ 15360 features) | 76.5 (SimCLR w/ 8192 features) |
| Full fine-tune | CIFAR-10 | 99.0 (iGPT-L 32×32, trained on ImageNet) | 99.0[b] (GPipe, trained on ImageNet) |
| | ImageNet 32×32 | 66.3 (iGPT-L 32×32) | 70.2 (Isometric Nets) |

[a] We only show ImageNet linear probe accuracy for iGPT-XL since other experiments did not finish before we needed to transition to different supercomputing facilities.

[b] Bit-L, trained on JFT (300M images with 18K classes), achieved a result of 99.3.
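As a rough illustration of what "images unrolled into long sequences of pixels" means for training, the sketch below flattens a tiny image and builds next-pixel prediction pairs. Raster (row-by-row) order, the integer pixel tokens, and the function names `unroll` and `next_pixel_pairs` are assumptions made for this example, not details stated above.

```python
from typing import List, Tuple

Pixel = int  # one token per pixel, e.g. a palette index (assumption for this sketch)


def unroll(image: List[List[Pixel]]) -> List[Pixel]:
    """Flatten a 2-D image into a 1-D sequence in raster order (row by row)."""
    return [pixel for row in image for pixel in row]


def next_pixel_pairs(sequence: List[Pixel]) -> List[Tuple[List[Pixel], Pixel]]:
    """Pair every non-empty prefix of the sequence with the pixel that follows it."""
    return [(sequence[:i], sequence[i]) for i in range(1, len(sequence))]


image = [
    [0, 1, 2],
    [3, 4, 5],
]
sequence = unroll(image)
pairs = next_pixel_pairs(sequence)
print(sequence)  # [0, 1, 2, 3, 4, 5]
print(pairs[2])  # ([0, 1, 2], 3)
```

Each pair is one training example: the model sees the prefix and is asked to predict the following pixel, with no label involved.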

To highlight the potential of generative sequence modeling as a general purpose unsupervised learning algorithm, we deliberately use the same transformer architecture as GPT-2 in language. As a consequence, we require significantly more compute in order to produce features competitive with those from top unsupervised convolutional nets. However, our results suggest that when faced with a new domain where the correct model priors are unknown, a large GPT-2 can learn excellent features without the need for domain-specific architectural design choices.

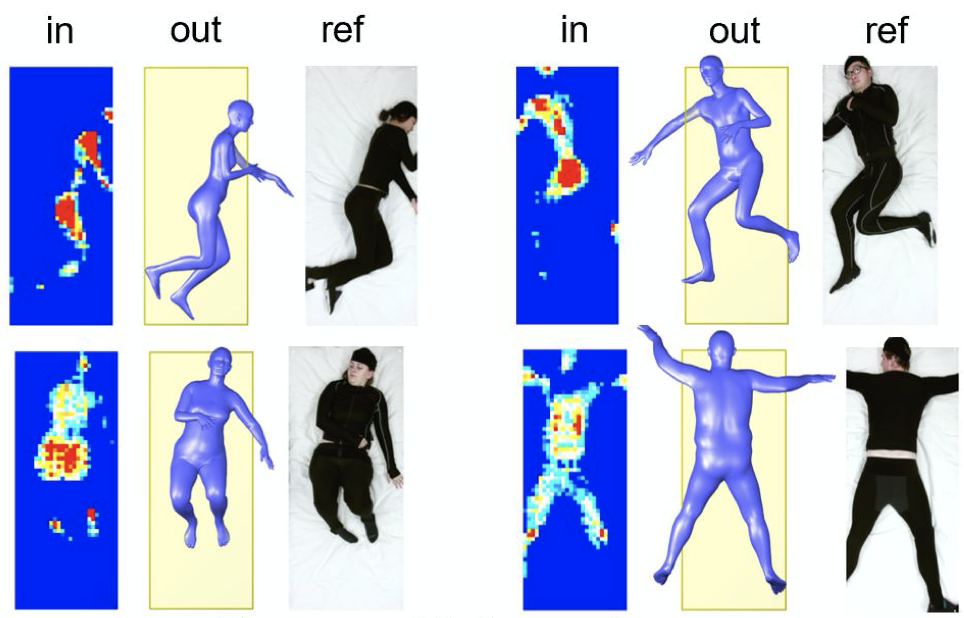

[Figure panels: Completions, Samples]

From language GPT to image GPT

In language, unsupervised learning algorithms that rely on word prediction (like GPT-2 and BERT) have been extremely successful, achieving top performance on a wide array of language tasks. One possible reason for this success is that instances of downstream language tasks appear naturally in text: questions are often followed by answers (which could help with question-answering) and passages are often followed by summaries (which could help with summarization). In contrast, sequences of pixels do not clearly contain labels for the images they belong to.

Even without this explicit supervision, there is still a reason why GPT-2 on images might work: a sufficiently large transformer trained on next pixel prediction might eventually learn to generate diverse[2] samples with clearly recognizable objects. Once it learns to do so, an idea known as “Analysis by Synthesis”[3] suggests that the model will also know about object categories. Many early generative models were motivated by this idea, and more recently, BigBiGAN was an example which produced encouraging samples and features. In our work, we first show that better generative models achieve stronger classification performance. Then, through optimizing GPT-2 for generative capabilities, we achieve top-level classification performance in many settings, providing further evidence for analysis by synthesis.

Towards general unsupervised learning

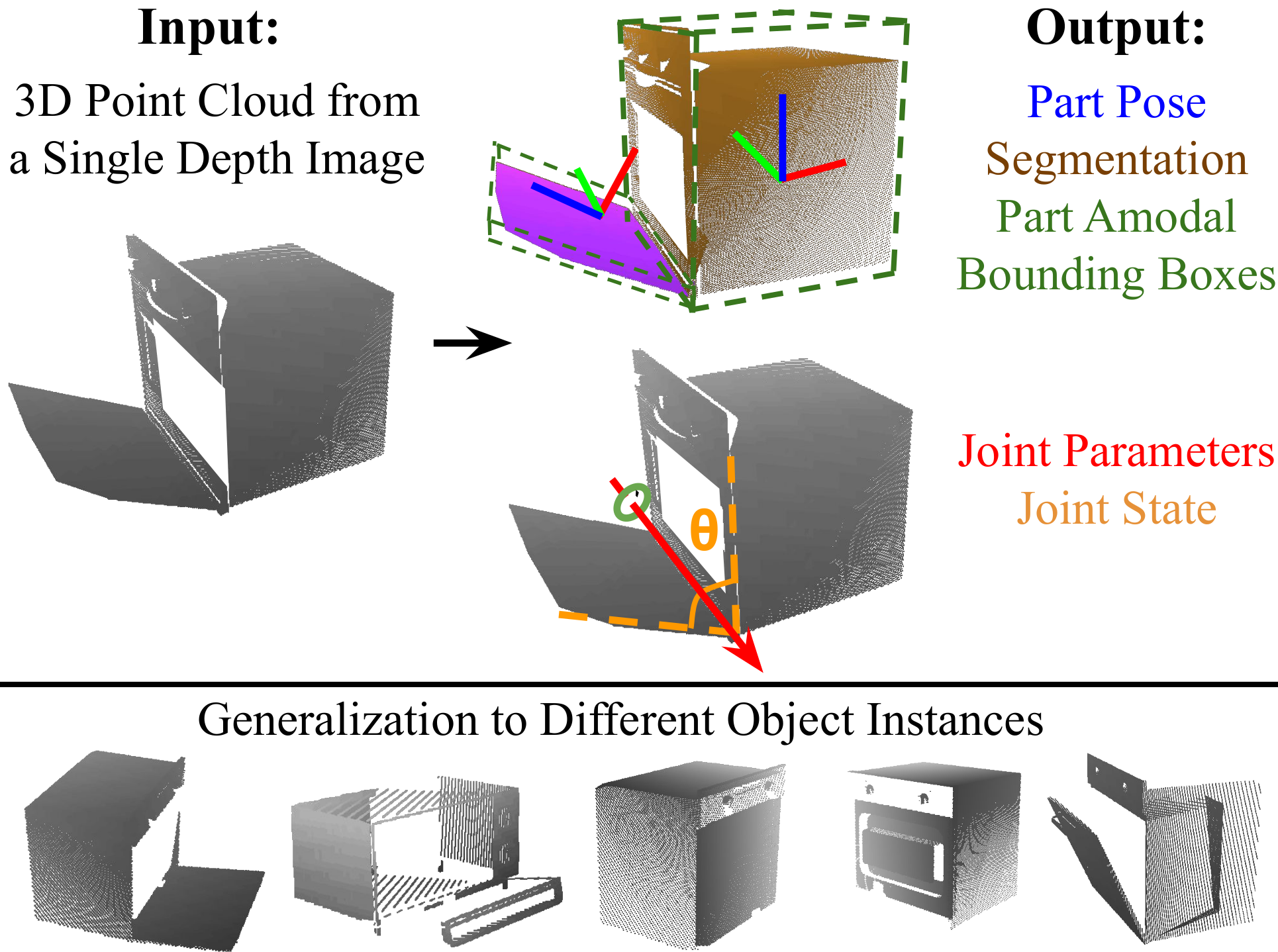

Generative sequence modeling is a universal unsupervised learning algorithm: since all data types can be represented as sequences of bytes, a transformer can be directly applied to any data type without additional engineering. Our work tests the power of this generality by directly applying the architecture used to train GPT-2 on natural language to image generation. We deliberately chose to forgo hand-coding any image-specific knowledge in the form of convolutions or techniques like relative attention, sparse attention, and 2-D position embeddings.

As a consequence of its generality, our method requires significantly more compute to achieve competitive performance in the unsupervised setting. Indeed, contrastive methods are still the most computationally efficient methods for producing high-quality features from images. However, in showing that an unsupervised transformer model is competitive with the best unsupervised convolutional nets, we provide evidence that it is possible to trade off hand-coded domain knowledge for compute. In new domains, where there isn’t much knowledge to hand-code, scaling compute seems an appropriate technique to test.

Approach

We train iGPT-S, iGPT-M, and iGPT-L, transformers containing 76M, 455M, and 1.4B parameters respectively, on ImageNet. We also train iGPT-XL[4], a 6.8 billion parameter transformer, on a mix of ImageNet and images from the web. Due to the large computational cost of modeling long sequences with dense attention, we train at the low resolutions of 32×32, 48×48, and 64×64.
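To give a sense of why dense attention pushes toward low resolutions, the following back-of-the-envelope sketch counts the query-key pairs in a single dense attention map at each training resolution. It assumes one token per pixel; the helper name `attention_pairs` is made up for this example.

```python
def attention_pairs(side: int) -> int:
    """Query-key pairs in one dense attention map for a side x side image."""
    sequence_length = side * side  # one token per pixel (assumption)
    return sequence_length ** 2


for side in (32, 48, 64):
    print(side, side * side, attention_pairs(side))
# 32 1024 1048576
# 48 2304 5308416
# 64 4096 16777216
```

Doubling the side length from 32 to 64 quadruples the sequence length and multiplies the size of each attention map by 16.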

While it is tempting to work at even lower resolutions to further reduce compute cost, prior work has demonstrated that human performance on image classification begins to drop rapidly below these sizes. Instead, motivated by early color display palettes, we create our own 9-bit color palette to represent pixels. Using this palette yields an input sequence length 3 times shorter than the standard (R, G, B) palette, while still encoding color faithfully.
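A minimal sketch of palette-based tokenization follows. How the 9-bit palette is actually constructed is not described here, so the evenly spaced 8×8×8 grid below is only a hypothetical stand-in; the names `PALETTE`, `nearest_index`, and `tokenize` are likewise illustrative.

```python
from typing import List, Tuple

RGB = Tuple[int, int, int]

# Hypothetical palette: 8 evenly spaced levels per channel gives 8 * 8 * 8 = 512 = 2**9 colors.
LEVELS = [round(i * 255 / 7) for i in range(8)]
PALETTE: List[RGB] = [(r, g, b) for r in LEVELS for g in LEVELS for b in LEVELS]


def nearest_index(pixel: RGB) -> int:
    """Index of the palette color closest to `pixel` (squared Euclidean distance)."""
    return min(
        range(len(PALETTE)),
        key=lambda i: sum((p - c) ** 2 for p, c in zip(pixel, PALETTE[i])),
    )


def tokenize(image: List[List[RGB]]) -> List[int]:
    """Map each pixel to a single 9-bit token, in raster order."""
    return [nearest_index(pixel) for row in image for pixel in row]


side = 32
rgb_length = side * side * 3  # one token per channel value
palette_length = side * side  # one token per pixel
print(len(PALETTE), rgb_length, palette_length)  # 512 3072 1024

tokens = tokenize([[(0, 0, 0), (255, 255, 255)]])
print(tokens)  # [0, 511]
```

Whatever the palette, the factor of 3 comes from emitting one token per pixel instead of one per color channel.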

Experimental results

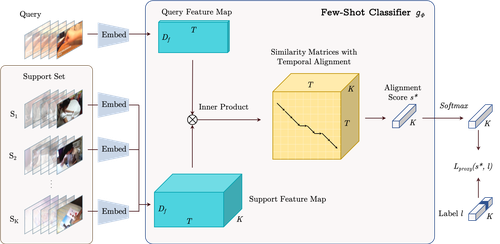

There are two methods we use to assess model performance, both of which involve a downstream classification task. The first, which we refer to as a linear probe, uses the trained model to extract features[5] from the images in the downstream dataset, and then fits a logistic regression to the labels. The second method fine-tunes[6] the entire model on the downstream dataset.
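The linear probe can be sketched in a few lines. Everything concrete here is an assumption for illustration: `frozen_model` is a toy stand-in for the pre-trained network, averaging activations over positions is one plausible pooling choice, and the two-class logistic regression is fitted with plain gradient descent on made-up data.

```python
import math
from typing import List, Sequence

Vector = List[float]


def frozen_model(image: Sequence[float]) -> List[Vector]:
    """Hypothetical stand-in for the pre-trained network: one activation vector per pixel."""
    return [[p - 0.5, (p - 0.5) ** 2] for p in image]


def extract_features(image: Sequence[float]) -> Vector:
    """Average activations over positions (this pooling choice is an assumption)."""
    activations = frozen_model(image)
    return [sum(column) / len(activations) for column in zip(*activations)]


def fit_logistic_regression(features: List[Vector], labels: List[int],
                            lr: float = 0.5, steps: int = 200) -> Vector:
    """Binary logistic regression by batch gradient descent; the bias is the last weight."""
    weights = [0.0] * (len(features[0]) + 1)
    for _ in range(steps):
        gradient = [0.0] * len(weights)
        for x, y in zip(features, labels):
            z = sum(w * v for w, v in zip(weights, x)) + weights[-1]
            error = 1.0 / (1.0 + math.exp(-z)) - y
            for j, v in enumerate(x):
                gradient[j] += error * v
            gradient[-1] += error
        weights = [w - lr * g / len(features) for w, g in zip(weights, gradient)]
    return weights


def predict(weights: Vector, x: Vector) -> int:
    """Return 1 if the linear score is positive, else 0."""
    return int(sum(w * v for w, v in zip(weights, x)) + weights[-1] > 0)


images = [[0.1, 0.2], [0.2, 0.1], [0.9, 0.8], [0.8, 0.9]]  # toy data
labels = [0, 0, 1, 1]
features = [extract_features(image) for image in images]  # the model itself is never updated
weights = fit_logistic_regression(features, labels)
predictions = [predict(weights, x) for x in features]
print(predictions)  # [0, 0, 1, 1]
```

Only `weights` is learned during probing; fine-tuning, by contrast, would also update the parameters inside the model.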

Since next pixel prediction is not obviously relevant to image classification, features from the final layer may not be the most predictive of the object category. Our first result shows that feature quality is a sharply increasing, then mildly decreasing function of depth. This behavior suggests that a transformer generative model operates in two phases. In the first phase, each position gathers information from its surrounding context in order to build a contextualized image feature. In the second phase, this contextualized feature is used to solve the conditional next pixel prediction task. The observed two-stage performance of our linear probes is reminiscent of another unsupervised neural net, the bottleneck autoencoder, which is manually designed so that features in the middle are used.

Our next result establishes the link between generative performance and feature quality. We find that both increasing the scale of our models and training for more iterations result in better generative performance, which directly translates into better feature quality.

When we evaluate our features using linear probes on CIFAR-10, CIFAR-100, and STL-10, we outperform features from all supervised and unsupervised transfer algorithms. Our results are also compelling in the full fine-tuning setting.

| Evaluation | Model | Accuracy | Pre-trained on ImageNet w/o labels | Pre-trained on ImageNet w/ labels |
|---|---|---|---|---|
| CIFAR-10 Linear Probe | ResNet-152 | 94.0 | | ✓ |
| | SimCLR | 95.3 | ✓ | |
| | iGPT-L 32×32 | 96.3 | ✓ | |
| CIFAR-100 Linear Probe | ResNet-152 | 78.0 | | ✓ |
| | SimCLR | 80.2 | ✓ | |
| | iGPT-L 32×32 | 82.8 | ✓ | |
| STL-10 Linear Probe | AMDIM-L | 94.2 | ✓ | |
| | iGPT-L 32×32 | 95.5 | ✓ | |
| CIFAR-10 Fine-tune | AutoAugment | 98.5 | | |
| | SimCLR | 98.6 | ✓ | |
| | GPipe | 99.0 | | ✓ |
| | iGPT-L | 99.0 | ✓ | |
| CIFAR-100 Fine-tune | iGPT-L | 88.5 | ✓ | |
| | SimCLR | 89.0 | ✓ | |
| | AutoAugment | 89.3 | | |
| | EfficientNet | 91.7 | | ✓ |

Given the resurgence of interest in unsupervised and self-supervised learning on ImageNet, we also evaluate the performance of our models using linear probes on ImageNet. This is an especially difficult setting, as we do not train at the standard ImageNet input resolution. Nevertheless, a linear probe on the 1536 features from the best layer of iGPT-L trained on 48×48 images yields 65.2% top-1 accuracy, outperforming AlexNet.

Contrastive methods typically report their best results on 8192 features, so we would ideally evaluate iGPT with an embedding dimension of 8192 for comparison. However, training such a model is prohibitively expensive, so we instead concatenate features from multiple layers as an approximation. Unfortunately, our features tend to be correlated across layers, so we need more of them to be competitive. Taking 15360 features from 5 layers in iGPT-XL yields 72.0% top-1 accuracy, outperforming AMDIM, MoCo, and CPC v2, but still trailing SimCLR (76.5%) by 4.5 points.
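The concatenation step is simple enough to sketch directly. The layer indices below are hypothetical placeholders (the text does not say which 5 layers are used), and the zero-filled vectors stand in for real activations of width 3072.

```python
from typing import Dict, List


def concat_layer_features(per_layer: Dict[int, List[float]], layers: List[int]) -> List[float]:
    """Concatenate the feature vectors of the chosen layers into one long vector."""
    combined: List[float] = []
    for layer in layers:
        combined.extend(per_layer[layer])
    return combined


width = 3072                          # features per layer in iGPT-XL
chosen_layers = [10, 20, 30, 40, 50]  # hypothetical layer indices
per_layer = {layer: [0.0] * width for layer in chosen_layers}  # placeholder activations

features = concat_layer_features(per_layer, chosen_layers)
print(len(features))  # 15360
```

The linear probe is then fitted on the 15360-dimensional vector exactly as it would be on a single layer's features.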

| Method | Input Resolution | Features | Parameters | Accuracy |
|---|---|---|---|---|
| Rotation | original | 8192 | 86M | 55.4 |
| iGPT-L | 32×32 | 1536 | 1362M | 60.3 |
| BigBiGAN | original | 16384 | 86M | 61.3 |
| iGPT-L | 48×48 | 1536 | 1362M | 65.2 |
| AMDIM | original | 8192 | 626M | 68.1 |
| MoCo | original | 8192 | 375M | 68.6 |
| iGPT-XL | 64×64 | 3072 | 6801M | 68.7 |
| SimCLR | original | 2048 | 24M | 69.3 |
| CPC v2 | original | 4096 | 303M | 71.5 |
| iGPT-XL | 64×64 | 3072 × 5 | 6801M | 72.0 |
| SimCLR | original | 8192 | 375M | 76.5 |

Because masked language models like BERT have outperformed generative models on most language tasks, we also evaluate the BERT objective on our image models. Instead of training our model to predict the next pixel given all preceding pixels, we mask out 15% of the pixels and train our model to predict them from the unmasked ones. We find that although the linear probe performance of BERT models is significantly worse, they excel during fine-tuning:

[Figure panels: CIFAR-10, ImageNet]
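The masking step of the BERT objective described above can be sketched as follows. This is a simplified illustration under stated assumptions: exactly 15% of positions are chosen uniformly at random, every chosen position is replaced by a single mask token, and `MASK` and `mask_pixels` are names invented for this example.

```python
import random
from typing import List, Optional, Tuple

MASK = -1  # hypothetical id for the mask token


def mask_pixels(sequence: List[int], fraction: float = 0.15,
                seed: Optional[int] = None) -> Tuple[List[int], List[int]]:
    """Replace a random `fraction` of positions with MASK.

    Returns the masked sequence and the sorted list of masked positions.
    """
    rng = random.Random(seed)
    count = round(len(sequence) * fraction)
    positions = sorted(rng.sample(range(len(sequence)), count))
    masked = list(sequence)
    for i in positions:
        masked[i] = MASK
    return masked, positions


sequence = list(range(100))  # toy pixel tokens
masked, positions = mask_pixels(sequence, seed=0)
targets = [sequence[i] for i in positions]  # what the model is trained to predict
print(len(positions))  # 15
```

Unlike next pixel prediction, the model here can look at unmasked pixels on both sides of each masked position.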

While unsupervised learning promises excellent features without the need for human-labeled data, significant recent progress has been made under the more forgiving framework of semi-supervised learning, which allows for limited amounts of human-labeled data. Successful semi-supervised methods often rely on clever techniques such as consistency regularization, data augmentation, or pseudo-labeling, and purely generative approaches have not been competitive for years. We evaluate iGPT-L[7] on a competitive benchmark for this sub-field and find that a simple linear probe on features from non-augmented images outperforms Mean Teacher and MixMatch, though it underperforms FixMatch.

| Model | 40 labels | 250 labels | 4000 labels |
|---|---|---|---|
| Improved GAN | - | - | 81.4 ± 2.3 |
| Mean Teacher | - | 67.7 ± 2.3 | 90.8 ± 0.2 |
| MixMatch | 52.5 ± 11.5 | 89.0 ± 0.9 | 93.6 ± 0.1 |
| iGPT-L | 73.2 ± 1.5 | 87.6 ± 0.6 | 94.3 ± 0.1 |
| UDA | 71.0 ± 5.9 | 91.2 ± 1.1 | 95.1 ± 0.2 |
| FixMatch RA | 86.2 ± 3.4 | 94.9 ± 0.7 | 95.7 ± 0.1 |
| FixMatch CTA | 88.6 ± 3.4 | 94.9 ± 0.3 | 95.7 ± 0.2 |

Limitations

While we have shown that iGPT is capable of learning powerful image features, there are still significant limitations to our approach. Because we use the generic sequence transformer used for GPT-2 in language, our method requires large amounts of compute: iGPT-L was trained for roughly 2500 V100-days, while a similarly performing MoCo model can be trained in roughly 70 V100-days, about 36 times less.

Relatedly, we model low resolution inputs using a transformer, while most self-supervised results use convolution-based encoders which can easily consume inputs at high resolution. A new architecture, such as a domain-agnostic multiscale transformer, might be needed to scale further. Given these limitations, our work primarily serves as a proof-of-concept demonstration of the ability of large transformer-based language models to learn excellent unsupervised representations in novel domains, without the need for hardcoded domain knowledge. However, the significant resource cost to train these models and the greater accuracy of methods based on convolutional neural networks preclude these representations from practical real-world applications in the vision domain.

Finally, generative models can exhibit biases that are a consequence of the data they’ve been trained on. Many of these biases are useful, like assuming that a combination of brown and green pixels represents a branch covered in leaves, then using this bias to continue the image. But some of these biases will be harmful, when considered through a lens of fairness and representation. For instance, if the model develops a visual notion of a scientist that skews male, then it might consistently complete images of scientists with male-presenting people, rather than a mix of genders. We expect that developers will need to pay increasing attention to the data that they feed into their systems and to better understand how it relates to biases in trained models.

Conclusion

We have shown that by trading off 2-D knowledge for scale and by choosing predictive features from the middle of the network, a sequence transformer can be competitive with top convolutional nets for unsupervised image classification. Notably, we achieved our results by directly applying the GPT-2 language model to image generation. Our results suggest that due to its simplicity and generality, a sequence transformer given sufficient compute might ultimately be an effective way to learn excellent features in many domains.

If you’re excited to work with us on this area of research, we’re hiring!

OpenAI

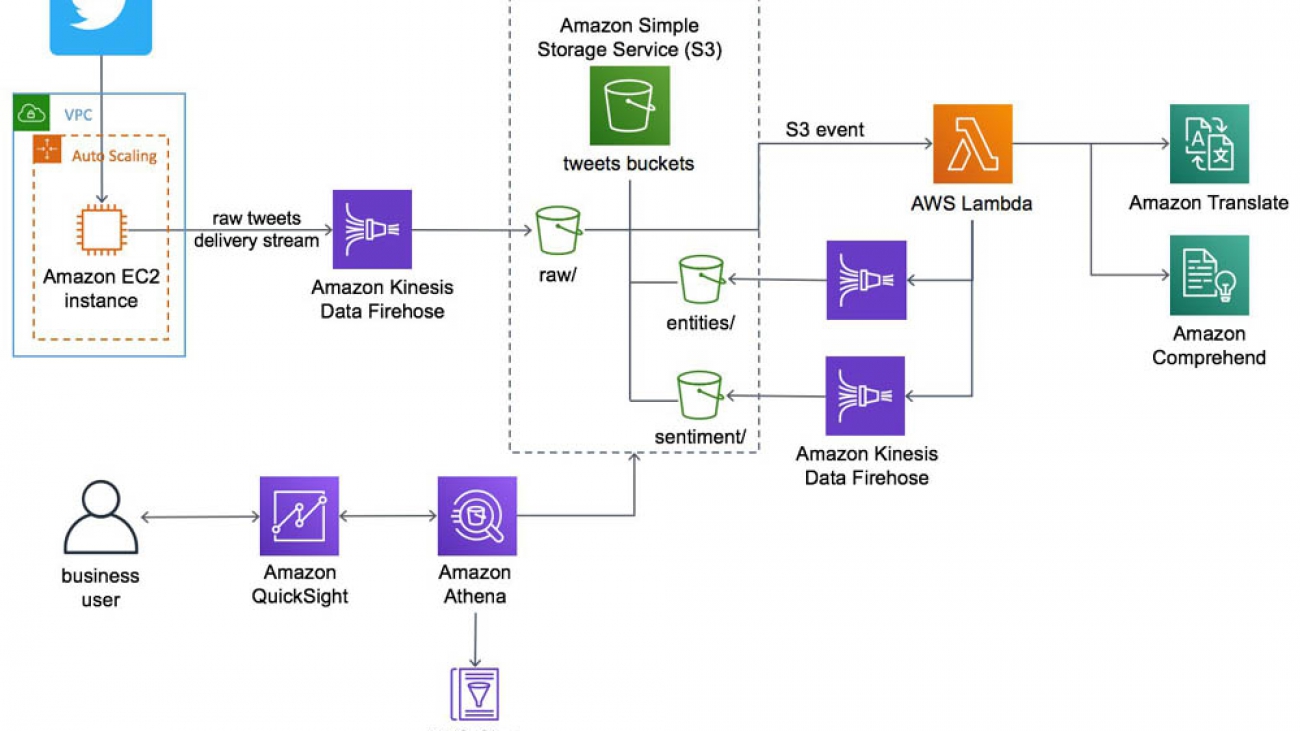

Guy Ben-Baruch is a Senior solution architect in the news & communications team in AWS UKIR. Since Guy joined AWS in March 2016, he has worked closely with enterprise customers, focusing on the telecom vertical, supporting their digital transformation and their cloud adoption. Outside of work, Guy likes doing BBQ and playing football with his kids in the park when the British weather allows it.

Guy Ben-Baruch is a Senior solution architect in the news & communications team in AWS UKIR. Since Guy joined AWS in March 2016, he has worked closely with enterprise customers, focusing on the telecom vertical, supporting their digital transformation and their cloud adoption. Outside of work, Guy likes doing BBQ and playing football with his kids in the park when the British weather allows it. Ankit Dhawan is a Senior Product Manager for Amazon Polly, technology enthusiast, and huge Liverpool FC fan. When not working on delighting our customers, you will find him exploring the Pacific Northwest with his wife and dog. He is an eternal optimist, and loves reading biographies and playing poker. You can indulge him in a conversation on technology, entrepreneurship, or soccer any time of the day.

Ankit Dhawan is a Senior Product Manager for Amazon Polly, technology enthusiast, and huge Liverpool FC fan. When not working on delighting our customers, you will find him exploring the Pacific Northwest with his wife and dog. He is an eternal optimist, and loves reading biographies and playing poker. You can indulge him in a conversation on technology, entrepreneurship, or soccer any time of the day.