Every spring, the basement of the Ray and Maria Stata Center becomes a racetrack for tiny self-driving cars that tear through the halls one by one. Sprinting behind each car on foot is a team of three to six students, sometimes carrying wireless routers or open laptops extended out like Olympic torches. Lining the basement walls, their classmates cheer them on, knowing the effort it took to program the algorithms steering the cars around the course during this annual MIT autonomous racing competition.

The competition is the final project for Course 6.141/16.405 (Robotics: Science and Systems). It’s an end-of-semester event that gets pulses speeding, and prizes are awarded to the fastest of the 20 teams on each of the different race courses.

With campus evacuated this spring due to the Covid-19 pandemic, however, not a single robotic car burned rubber in the Stata Center basement. Instead, a new race was on. Luca Carlone, the Charles Stark Draper Assistant Professor of Aeronautics and Astronautics and member of the Institute for Data, Systems, and Society; Nicholas Roy, professor of aeronautics and astronautics; and teaching assistants (TAs) including Marcus Abate, Lukas Lao Beyer, and Caris Mariah Moses had only four weeks to figure out how to bring the excitement of this highly anticipated race online.

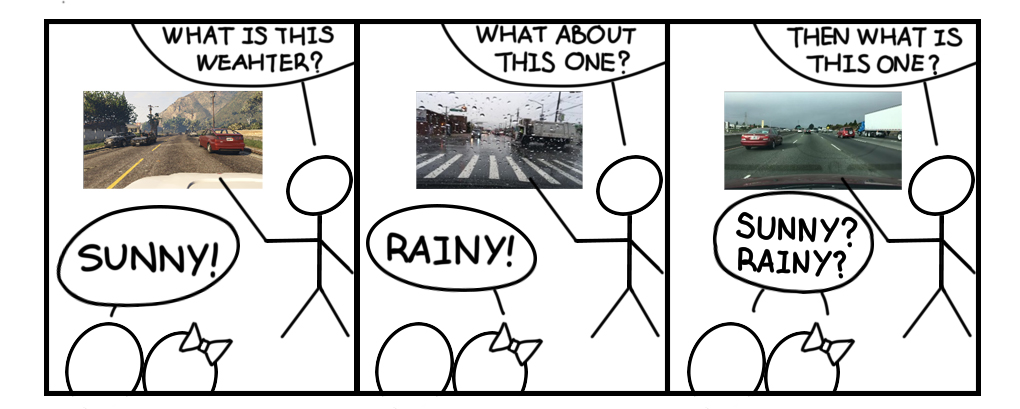

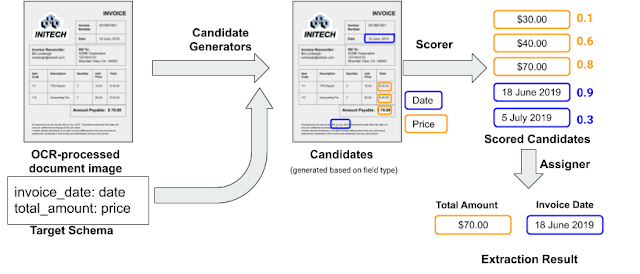

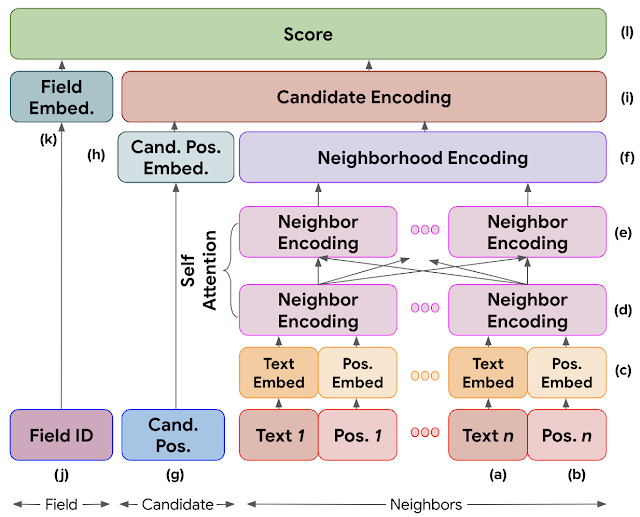

Because the lab sometimes uses a simple simulator for other research, Carlone says they considered taking the race in that direction. With this simple simulator, students could watch as their self-driving cars snaked around a flat map, like a car depicted by a dot moving along a GPS navigation system. Ultimately, they decided that wasn’t the right route. The racing competition needed to be noisy. Realistic. Exciting. The car’s dynamics needed to be nearly as complex as those of the robotic cars the students had planned to use. Building on his prior research in collaboration with MIT Lincoln Laboratory, Abate worked with Lao Beyer and engineering graduate student Sabina Chen to develop a new photorealistic simulator at the last minute.

The race was back on, and Carlone was impressed by how everything from the cityscape to the sleek car designs looked “as realistic as possible.”

“The modifications involved introducing an outdoor environment based on open-source assets, building in realistic car dynamics for the agent, and adding lidar sensors,” Abate says. “I also had to revamp the interfacing with Python and Robot Operating System (ROS) to make it all plug-and-play for the students.”

In practice, the race ran much like a racing video game such as Gran Turismo or Forza. Only instead of sitting on the couch thumbing a joystick to steer the car, students developed algorithms to anticipate every roadblock and bend ahead. For students, programming for this new environment was perhaps the biggest adjustment. “The simulator used an outdoor scene and a full-sized car with a very different dynamics model than the real-life race car in the Stata basement,” Abate says.

The TAs also had to adjust to complications behind the scenes of the race’s new setting. “A huge amount of effort was put into the new simulator, as well as into the logistics of obtaining and evaluating students’ software,” Lao Beyer says. “Usually, teams are able to configure the software on their race car however they want, but it is very difficult to accommodate such a diversity of software setups in the virtual race.”

Once the simulator was ready, there was no time to troubleshoot, so TAs made themselves available to debug any issues on the fly as they arose. “I think that saved the day for the final project and the final race,” Carlone says.

Programming their autonomous racing code wasn’t the only way that students customized their race experience, though. Co-instructor Jane Abbott brought Writing, Rhetoric, and Professional Communication (WRAP) into the course. As coordinator of the communication-intensive team that focused on helping teams work effectively, she says she was worried the silence that often looms on Zoom would suck out all the energy of the race. She suggested the TAs add a soundtrack.

In the end, the remote race ran for nearly four hours, bringing together more than 100 people in one Zoom call with commentators and Mario Kart music playing. “We got to watch every student’s solution with some cool visualization code running that showed the trajectory and any obstacles hit,” says Samuel Ubellacker, an electrical engineering and computer science student who raced this year. “We got to see how each team’s solution ran much clearer in the simulator because the camera was always following the race car.”

For Yorai Shaoul, another electrical engineering and computer science student in the race, getting out of the basement helped him become more engaged with other teams’ projects. “Before leaving campus, we found ourselves working long hours in the Stata basement,” Shaoul says. “So focused on our robot, we failed to notice that other teams were right there next to us the whole time.”

During the race, other programming solutions his team had overlooked became clear. “The TAs showcased and narrated each team’s run, finally allowing us to see the diverse approaches other teams were developing,” Shaoul says.

“One thing that was nice: When we’ve done it live in the tunnels, you can only see a part of it,” Abbott says. “You sort of stand at a fixed point and you see the car go by. It’s like watching the marathon: you see the runners for 100 yards and then they’re gone.”

Over Zoom, participants could watch every impressive cruise and spectacular crash as it happened, plus replays. Many stayed to watch, and Lao Beyer says, “We managed to retain as much excitement and suspense about the final challenge as possible.” Ubellacker agrees: “It was certainly an unforgettable experience!”

Students who didn’t want to bro down with Mario could also choose the music to accompany their runs. One team picked “My Heart Will Go On,” the theme from the movie “Titanic”; its lyric “Near, far, wherever you are” is a wink to the extra challenge of collaborating as a team at a distance.

One of the masters of ceremonies for the 2020 race, Marwa Abdulhai ’20, was a TA last year and says one obvious benefit of the online race is that it’s a lot easier to figure out why your car crashed. “Pros of this virtual approach have been allowing students to race through the track multiple times and knowing that the car’s performance was primarily due to the algorithm and not any physical constraints,” Abdulhai says.

For Ubellacker, though, that was actually a con: “The biggest element that I missed without having a physical car was not being able to experience the differences between simulation and real life.” He says, “Part of the fun to me is designing a system that works perfectly in the simulator, and then getting to figure out all the crazy ways it will fail in the real world!”

Shaoul says that instead of working on one car, it sometimes felt like they were working on five separate cars, one living on each team member’s computer. “With one car, it was easy to see how well it did and what required fixing, whereas virtually it was more ambiguous,” Shaoul says. “We faced challenges with keeping track of up-to-date code versions and also simple communication.”

Carlone was concerned that without seeing their car’s performance play out in real life, students wouldn’t be as motivated to push their algorithms harder. “Every year, the record time on that Stata Center track was getting better and better,” he says. “This year, we were a bit concerned about the performance.”

Fortunately, many students were very much still in the race, with some teams beating the most optimistic predictions despite having to adjust to new racing conditions and the greater challenge of collaborating fully online. The winning team completed the race courses, in some cases three times faster than other teams, without any collisions. “It was just beyond expectation,” Carlone says.

Although this shift in the final project somewhat changed the takeaways from the course, Carlone says the experience will still advance students’ algorithmic skills in robotics and introduce them to the intensity of communication required to work effectively as remote teams. “Many robotics groups are doing research using photorealistic simulation, because you can test conditions that you cannot test on the real robot,” he says. Co-instructor Roy says it worked so well that the new simulator might become a permanent feature of the course, not to replace the physical race but as an extra element. “The robotics experience was good,” Carlone says of the 2020 race, but still: “The human experience is, of course, different.”
