In experiments involving real quantum devices and algorithms, automated-reasoning-based method for mapping quantum computations onto quantum circuits is 26 times as fast as predecessors.Read More

The end of an era: the final AWS DeepRacer League Championship at re:Invent 2024

AWS DeepRacer League 2024 Championship finalists at re:Invent 2024

The AWS DeepRacer League is the world’s first global autonomous racing league powered by machine learning (ML). Over the past 6 years, a diverse community of over 560,000 builders from more than 150 countries worldwide have participated in the League to learn ML fundamentals hands-on through the fun of friendly autonomous racing. After an 8-month season of nail-biting virtual qualifiers, finalists convened in person at re:Invent in Las Vegas for one final showdown to compete for prizes and glory in the high-stakes, winner-take-all AWS DeepRacer League Championship. It wasn’t just the largest prize purse in DeepRacer history or the bragging rights of being the fastest builder in the world that was on the line—2024 marked the final year of the beloved League, and the winner of the 2024 Championship would also have the honor of being the very last AWS DeepRacer League Champion.

Tens of thousands raced in Virtual Circuit monthly qualifiers from March to October in an attempt to earn one of only four spots from six regions (24 spots in all), making qualifying for the 2024 Championship one of the most difficult to date. Finalists were tested on consistent performance over consecutive laps rather than “one lucky lap,” making the championship a true test of skill and dashing the championship dreams of some of the most prolific racers, community leaders, and former championship finalists during the regular season. When the dust settled, the best of the best from around the world secured their spots in the championship alongside returning 2023 champion FiatLux (Youssef Addioui) and 2023 Open Winner PolishThunder (Daryl Jezierski). For the rest of the hopefuls, there was only one more opportunity—the WildCard Last Chance qualifier on the Expo floor at re:Invent 2024—to gain entry into the final championship and etch their names in the annals of DeepRacer history.

In a tribute to the League’s origins, the Forever Raceway was chosen as the final championship track. This adaptation of the RL Speedway, which made its initial debut at the DeepRacer League Summit Circuit in 2022, presented a unique challenge: it’s nearly 30% narrower than tracks from the last five seasons, returning to a width only seen in the inaugural League season. The 76-centimeter-wide track leaves little room for error because it exaggerates the curves of the course and forces the vehicle to follow the track contours closely to stay within the borders.

The Wildcard Last Chance

As the Wildcard began on Monday night, the sidelines were packed with a mix of DeepRacer royalty and fresh-faced contenders, all vying for one of the coveted six golden tickets to round 1 of the championship. Twenty elite racers from all corners of the globe unleashed their best models onto the track, each looking for three clean laps (no off-tracks) in a mere 120 seconds to take their place alongside the Virtual Circuit champions.

The following image displays the 2024 qualifiers.

Newcomer and JP Morgan Chase (JPMC) DeepRacer Women’s League winner geervani (Geervani Kolukuluri) made her championship debut, dominating the competition early and showing the crowd exactly why she was the best of the league’s 829 participants and one of the top racers of the 4,400 that participated from JP Morgan Chase in 2024. She was nipping at the heels of community leader, DeepRacer for Cloud contributor, and Wildcard pack leader MattCamp, and less than a second behind SimonHill, another championship rookie and winner of the Eviden internal league.

But the old guard wasn’t about to go down without a fight. DeepRacer legend LeadingAI (Jacky Chen), an educator and mentor to Virtual Circuit winner AIDeepRacer (Nathan Liang), landed the fourth best time of 9.527, slightly ahead of Jerec (Daniel Morgan), who returned to the finals after taking third place in the 2023 Championship. Duckworth (Lars Ludvigson), AWS Community Builder and solutions developer, took the sixth and final Wildcard spot, less than three-tenths of a second above the rest of the pack.

The field of 32 begins

The first 16 of 32 code warriors hit the track on Tuesday, each one going for glory in two heart-pounding, 2-minute sprints to secure their spot in the bracket of eight over 2 days of competition. MattCamp proved he was no one-hit wonder by blazing across the finish line with Tuesday’s fastest time of 8.223 seconds, geervani still just milliseconds behind at 8.985. Asian Pacific regional stars TonyJ (Tony Jenness) and CodeMachine (Igor Maksaev Brockway) came out swinging, claiming their place in the top four just ahead of 2023 champion FiatLux of the EMEA region, who dug deep to stay in the game, finishing fifth with a time of 9.223. Liao (Wei-Chen Liao), Nevertari (Neveen Ahmed), and BSi (Bobby Stenly) also edged above the pack as the day of racing ended, their positions precarious as they hovered just inside the top eight for the day.

The tension was high and the stands full on Wednesday as the second day of Round 1 racing began, the second 16 ready to burn rubber and leave the Tuesday competitors in the dust. AIDeepRacer (Nathan Liang) quickly dethroned MattCamp with a blistering time of 8.102, looking to redeem himself from barely missing the bracket of eight in the 2022 Championship. 2023 Global points winner rosscomp1 (Ross Williams) slid into the top four right behind the indomitable SimonHill, while geervani, TonyJ, and CodeMachine managed to stay in the game as the Wednesday racers tried to best their times.

A mechanical failure in his second attempt gave community leader MarkRoss one last attempt to break into the top eight. His 8.635-second run looked like it might just be his ticket to Round 2, but a challenge from the sidelines had everyone holding their breath. The tension was palpable as the DeepRacer Pit Crew reviewed video footage to determine if Mark’s car had stayed within track limits, knowing that a spot in the semifinals—and a shot at the $25,000 grand prize—hung in the balance. Mark’s hopes were dashed when the replay revealed all four wheels left the track, and PolishThunder snuck into Round 2 by the skin of their teeth, claiming the coveted eighth-place spot.

The following images show Blaine Sundrud and Ryan Myrehn commentating on Round 2 (left) and the off-track footage (right).

And then there were eight

On Thursday morning, racers and spectators returned to the Forever Raceway for the bracket of eight, a series of head-to-head battles pitting the top racers against each other in a grueling double elimination format. Each matchup was another contest of speed and accuracy, another chance for the undefeatable to be toppled from their thrones and underdogs to scrap their way back into contention in the last chance bracket, as shown in the following image.

First seed AIDeepRacer dominated their first two matchups, sending rosscomp1 and PolishThunder to early defeat, definitively earning a place in the final three. Sixth seed TonyJ couldn’t get his average lap under 12 seconds against third seed SimonHill, while fellow regional heavyweight CodeMachine was unable to close the 1-second gap between him and second seed MattCamp, the strong favorite coming out of the Wildcard and round 1. That left MattCamp and SimonHill to one last battle for a place in the top three, with SimonHill finally gaining the upper hand in a 9.026- to 9.478-second shootout, sending MattCamp to the Last Chance bracket to try to fight his way back to the top.

With one final spot in the top three up for grabs, five-time championship finalist and eighth-seed PolishThunder, who was stunned into defeat in the very first matchup of the day, mounted an equally stunning comeback as he bested fifth seed geervani in match five and then knocked out fellow DeepRacer veteran, friend, and fourth-seed rosscomp1 with a remarkable 8.389-second time. MattCamp regained his mojo to beat TonyJ in match 10, setting him and PolishThunder up for a showdown to see who would go up against SimonHill and AIDeepRacer in the finale. Undaunted, PolishThunder toppled the Wildcard winner once and for all, taking him one step closer to being the DeepRacer Champion.

The final three

After almost 4 days of triumph and heartbreak, only three competitors remained for one final winner-take-all time trial that would determine, for the last time, the fastest builder in the world. Commentators Ryan Myrehn and Blaine Sundrud, who have been with the DeepRacer program since the very beginning, took to the mic together as the sidelines filled with finalists, spectators, and Pit Crew eager to see who would take home the championship trophy.

Prior to their final lap, each racer was able to choose a car and calibrate it to their liking, giving them an opportunity to optimize for the best performance with their chosen model. A coin toss decided the racing direction, counterclockwise, and each was given 1 minute to test the newly calibrated cars to make sure they were happy with their adjustments. With three cars tested, calibrated, and loaded with their best models, it was down to just 6 minutes of racing that would determine the 2024 Champion.

PolishThunder took to the track first, starting the finale strong as his model averaged a mere 8.436 seconds in laps five, six, and seven, setting a high bar for his competitors. SimonHill answered with a blazing 8.353 seconds, the fastest counterclockwise average of the competition, edging out the comeback king with less than a pixel’s width between them. Then, with just one race left, the crowd watched with bated breath as the competition’s top seed AIDeepRacer approached the starting line, hoping to replicate his enviable lap times from previous rounds. After a tense 2 minutes, first-time competitor SimonHill held onto first place, winning the $25,000 grand prize, the coveted DeepRacer trophy, and legendary status as the final DeepRacer champion. PolishThunder, who previously hadn’t broken the top six in his DeepRacer career, finished second, and AIDeepRacer third for his second, and best, championship appearance since his debut in 2022.

As the final chapter came to a close and trophies were handed out, racers and Pit Crew who have worked, competed, and built lifelong friendships around a 1/18th scale autonomous car celebrated together one more time on the very last championship track. Although the League may have concluded, the legacy of DeepRacer and its unique ability to teach ML and the foundations of generative AI through gamified learning continues. Support for DeepRacer in the AWS console will continue through the end of 2025, and DeepRacer will enter a new era as organizations around the world will also be able to launch their own leagues and competitions at a fraction of the cost with the AWS DeepRacer Solution. Featuring the same functionality as the console and deployable anywhere, the Solution will also contain new workshops and resources bridging fundamental concepts of ML using AWS DeepRacer with foundation model (FM) training and fine-tuning techniques, using AWS services such as Amazon SageMaker and Amazon Bedrock for popular industry use cases. Look for the Solution to kickstart your company’s ML transformation starting in Q2 of 2025.

The following images show championship finalists and pit crew at re:Invent 2024 (left) and 2024 League Champion Simon Hill with his first-place trophy (right).

|

|

Join the DeepRacer community at deepracing.io.

About the Author

Jackie Moffett is a Senior Project Manager in the AWS AI Builder Programs Product Marketing team. She believes hands-on is the best way to learn and is passionate about building better systems to create exceptional customer experiences. Outside of work she loves to travel, is addicted to learning new things, and definitely wants to say hi to your dog.

Jackie Moffett is a Senior Project Manager in the AWS AI Builder Programs Product Marketing team. She believes hands-on is the best way to learn and is passionate about building better systems to create exceptional customer experiences. Outside of work she loves to travel, is addicted to learning new things, and definitely wants to say hi to your dog.

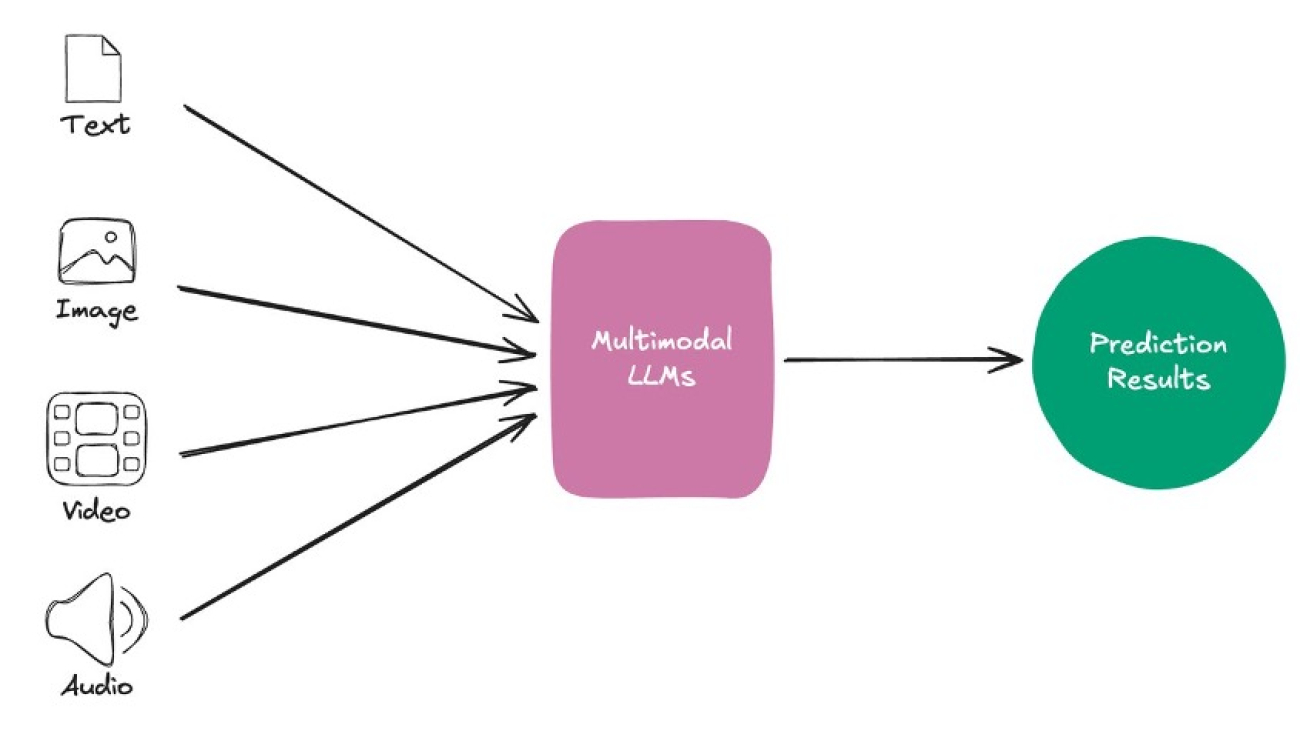

Evaluate healthcare generative AI applications using LLM-as-a-judge on AWS

In our previous blog posts, we explored various techniques such as fine-tuning large language models (LLMs), prompt engineering, and Retrieval Augmented Generation (RAG) using Amazon Bedrock to generate impressions from the findings section in radiology reports using generative AI. Part 1 focused on model fine-tuning. Part 2 introduced RAG, which combines LLMs with external knowledge bases to reduce hallucinations and improve accuracy in medical applications. Through real-time retrieval of relevant medical information, RAG systems can provide more reliable and contextually appropriate responses, making them particularly valuable for healthcare applications where precision is crucial. In both previous posts, we used traditional metrics like ROUGE scores for performance evaluation. This metric is suitable for evaluating general summarization tasks, but can’t effectively assess whether a RAG system successfully integrates retrieved medical knowledge or maintains clinical accuracy.

In Part 3, we’re introducing an approach to evaluate healthcare RAG applications using LLM-as-a-judge with Amazon Bedrock. This innovative evaluation framework addresses the unique challenges of medical RAG systems, where both the accuracy of retrieved medical knowledge and the quality of generated medical content must align with stringent standards such as clear and concise communication, clinical accuracy, and grammatical accuracy. By using the latest models from Amazon and the newly released RAG evaluation feature for Amazon Bedrock Knowledge Bases, we can now comprehensively assess how well these systems retrieve and use medical information to generate accurate, contextually appropriate responses.

This advancement in evaluation methodology is particularly crucial as healthcare RAG applications become more prevalent in clinical settings. The LLM-as-a-judge approach provides a more nuanced evaluation framework that considers both the quality of information retrieval and the clinical accuracy of generated content, aligning with the rigorous standards required in healthcare.

In this post, we demonstrate how to implement this evaluation framework using Amazon Bedrock, compare the performance of different generator models, including Anthropic’s Claude and Amazon Nova on Amazon Bedrock, and showcase how to use the new RAG evaluation feature to optimize knowledge base parameters and assess retrieval quality. This approach not only establishes new benchmarks for medical RAG evaluation, but also provides practitioners with practical tools to build more reliable and accurate healthcare AI applications that can be trusted in clinical settings.

Overview of the solution

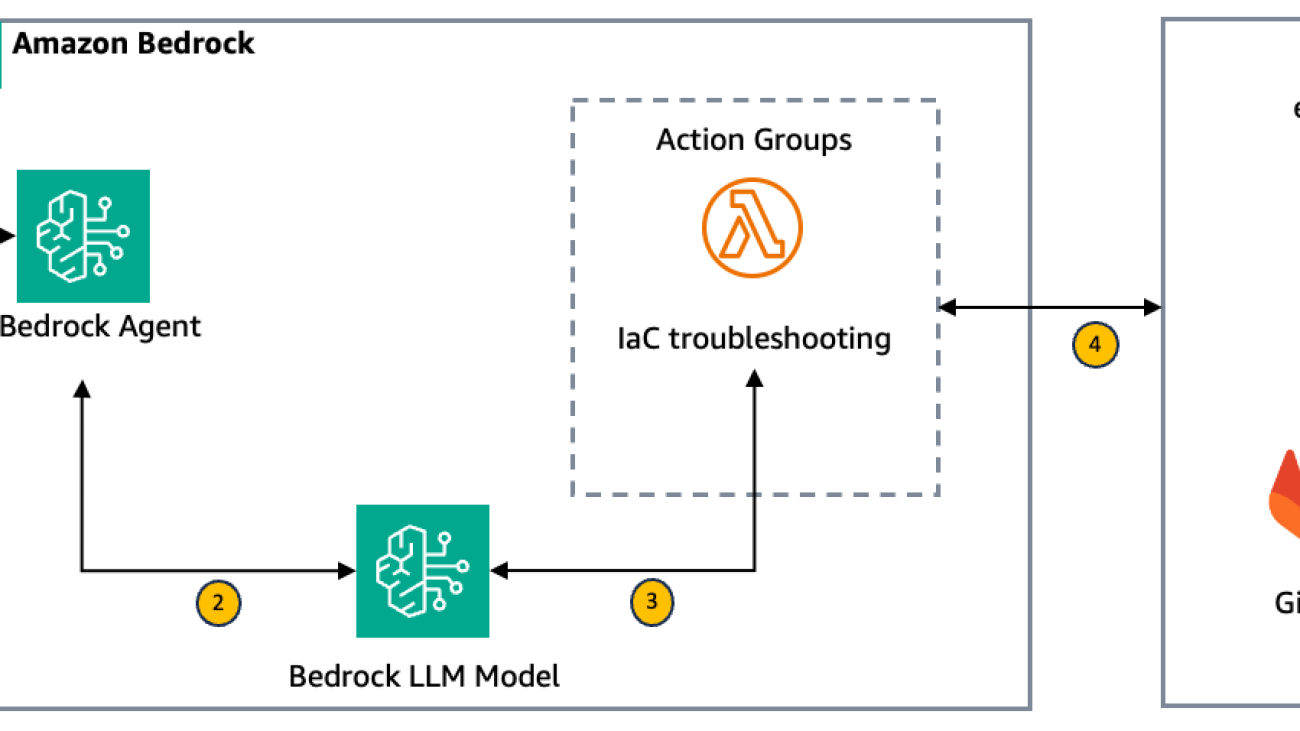

The solution uses Amazon Bedrock Knowledge Bases evaluation capabilities to assess and optimize RAG applications specifically for radiology findings and impressions. Let’s examine the key components of this architecture in the following figure, following the data flow from left to right.

The workflow consists of the following phases:

- Data preparation – Our evaluation process begins with a prompt dataset containing paired radiology findings and impressions. This clinical data undergoes a transformation process where it’s converted into a structured JSONL format, which is essential for compatibility with the knowledge base evaluation system. After it’s prepared, this formatted data is securely uploaded to an Amazon Simple Storage Service (Amazon S3) bucket, providing accessibility and data security throughout the evaluation process.

- Evaluation processing – At the heart of our solution lies an Amazon Bedrock Knowledge Bases evaluation job. This component processes the prepared data while seamlessly integrating with Amazon Bedrock Knowledge Bases. This integration is crucial because it enables the system to create specialized medical RAG capabilities specifically tailored for radiology findings and impressions, making sure that the evaluation considers both medical context and accuracy.

- Analysis – The final stage empowers healthcare data scientists with detailed analytical capabilities. Through an advanced automated report generation system, professionals can access detailed analysis of performance metrics of the summarization task for impression generation. This comprehensive reporting system enables thorough assessment of both retrieval quality and generation accuracy, providing valuable insights for system optimization and quality assurance.

This architecture provides a systematic and thorough approach to evaluating medical RAG applications, providing both accuracy and reliability in healthcare contexts where precision and dependability are paramount.

Dataset and background

The MIMIC Chest X-ray (MIMIC-CXR) database v2.0.0 is a large, publicly available dataset of chest radiographs in DICOM format with free-text radiology reports. We used the MIMIC CXR dataset consisting of 91,544 reports, which can be accessed through a data use agreement. This requires user registration and the completion of a credentialing process.

During routine clinical care, clinicians trained in interpreting imaging studies (radiologists) will summarize their findings for a particular study in a free-text note. The reports were de-identified using a rule-based approach to remove protected health information. Because we used only the radiology report text data, we downloaded just one compressed report file (mimic-cxr-reports.zip) from the MIMIC-CXR website. For evaluation, 1,000 of the total 2,000 reports from a subset of MIMIC-CXR dataset were used. This is referred to as the dev1 dataset. Another set of 1,000 of the total 2,000 radiology reports (referred to as dev2) from the chest X-ray collection from the Indiana University hospital network were also used.

RAG with Amazon Bedrock Knowledge Bases

Amazon Bedrock Knowledge Bases helps take advantage of RAG, a popular technique that involves drawing information from a data store to augment the responses generated by LLMs. We used Amazon Bedrock Knowledge Bases to generate impressions from the findings section of the radiology reports by enriching the query with context that is received from querying the knowledge base. The knowledge base is set up to contain findings and corresponding impression sections of 91,544 MIMIC-CXR radiology reports as {prompt, completion} pairs.

LLM-as-a-judge and quality metrics

LLM-as-a-judge represents an innovative approach to evaluating AI-generated medical content by using LLMs as automated evaluators. This method is particularly valuable in healthcare applications where traditional metrics might fail to capture the nuanced requirements of medical accuracy and clinical relevance. By using specialized prompts and evaluation criteria, LLM-as-a-judge can assess multiple dimensions of generated medical content, providing a more comprehensive evaluation framework that aligns with healthcare professionals’ standards.

Our evaluation framework encompasses five critical metrics, each designed to assess specific aspects of the generated medical content:

- Correctness – Evaluated on a 3-point Likert scale, this metric measures the factual accuracy of generated responses by comparing them against ground truth responses. In the medical context, this makes sure that the clinical interpretations and findings align with the source material and accepted medical knowledge.

- Completeness – Using a 5-point Likert scale, this metric assesses whether the generated response comprehensively addresses the prompt holistically while considering the ground truth response. It makes sure that critical medical findings or interpretations are not omitted from the response.

- Helpfulness – Measured on a 7-point Likert scale, this metric evaluates the practical utility of the response in clinical contexts, considering factors such as clarity, relevance, and actionability of the medical information provided.

- Logical coherence – Assessed on a 5-point Likert scale, this metric examines the response for logical gaps, inconsistencies, or contradictions, making sure that medical reasoning flows naturally and maintains clinical validity throughout the response.

- Faithfulness – Scored on a 5-point Likert scale, this metric specifically evaluates whether the response contains information not found in or quickly inferred from the prompt, helping identify potential hallucinations or fabricated medical information that could be dangerous in clinical settings.

These metrics are normalized in the final output and job report card, providing standardized scores that enable consistent comparison across different models and evaluation scenarios. This comprehensive evaluation framework not only helps maintain the reliability and accuracy of medical RAG systems, but also provides detailed insights for continuous improvement and optimization. For details about the metric and evaluation prompts, see Evaluator prompts used in a knowledge base evaluation job.

Prerequisites

Before proceeding with the evaluation setup, make sure you have the following:

- An active AWS account with appropriate permissions

- Amazon Bedrock model access enabled in your preferred AWS Region

- An S3 bucket with CORS enabled for storing evaluation data

- An Amazon Bedrock knowledge base

- An AWS Identity and Access Management (IAM) role with necessary permissions for Amazon S3 and Amazon Bedrock

The solution code can be found at the following GitHub repo.

Make sure that your knowledge base is fully synced and ready before initiating an evaluation job.

Convert the test dataset into JSONL for RAG evaluation

In preparation for evaluating our RAG system’s performance on radiology reports, we implemented a data transformation pipeline to convert our test dataset into the required JSONL format. The following code shows the format of the original dev1 and dev2 datasets:

{

"prompt": "value of prompt key",

"completion": "value of completion key"

}

Output Format

{

"conversationTurns": [{

"referenceResponses": [{

"content": [{

"text": "value from completion key"

}]

}],

"prompt": {

"content": [{

"text": "value from prompt key"

}]

}

}]

}

Drawing from Wilcox’s seminal paper The Written Radiology Report, we carefully structured our prompt to include comprehensive guidelines for generating high-quality impressions:

import json

import random

import boto3

# Initialize the S3 client

s3 = boto3.client('s3')

# S3 bucket name

bucket_name = "<BUCKET_NAME>"

# Function to transform a single record

def transform_record(record):

return {

"conversationTurns": [

{

"referenceResponses": [

{

"content": [

{

"text": record["completion"]

}

]

}

],

"prompt": {

"content": [

{

"text": """You're given a radiology report findings to generate a concise radiology impression from it.

A Radiology Impression is the radiologist's final concise interpretation and conclusion of medical imaging findings, typically appearing at the end of a radiology report.

n Follow these guidelines when writing the impression:

n- Use clear, understandable language avoiding obscure terms.

n- Number each impression.

n- Order impressions by importance.

n- Keep impressions concise and shorter than the findings section.

n- Write for the intended reader's understanding.n

Findings: n""" + record["prompt"]

}

]

}

}

]

}

The script processes individual records, restructuring them to include conversation turns with both the original radiology findings and their corresponding impressions, making sure each report maintains the professional standards outlined in the literature. To maintain a manageable dataset size set used by this feature, we randomly sampled 1,000 records from the original dev1 and dev2 datasets, using a fixed random seed for reproducibility:

# Read from input file and write to output file

def convert_file(input_file_path, output_file_path, sample_size=1000):

# First, read all records into a list

records = []

with open(input_file_path, 'r', encoding='utf-8') as input_file:

for line in input_file:

records.append(json.loads(line.strip()))

# Randomly sample 1000 records

random.seed(42) # Set the seed first

sampled_records = random.sample(records, sample_size)

# Write the sampled and transformed records to the output file

with open(output_file_path, 'w', encoding='utf-8') as output_file:

for record in sampled_records:

transformed_record = transform_record(record)

output_file.write(json.dumps(transformed_record) + 'n')

# Usage

input_file_path = '<INPUT_FILE_NAME>.jsonl' # Replace with your input file path

output_file_path = '<OUTPUT_FILE_NAME>.jsonl' # Replace with your desired output file path

convert_file(input_file_path, output_file_path)

# File paths and S3 keys for the transformed files

transformed_files = [

{'local_file': '<OUTPUT_FILE_NAME>.jsonl', 'key': '<FOLDER_NAME>/<OUTPUT_FILE_NAME>.jsonl'},

{'local_file': '<OUTPUT_FILE_NAME>.jsonl', 'key': '<FOLDER_NAME>/<OUTPUT_FILE_NAME>.jsonl'}

]

# Upload files to S3

for file in transformed_files:

s3.upload_file(file['local_file'], bucket_name, file['key'])

print(f"Uploaded {file['local_file']} to s3://{bucket_name}/{file['key']}")

Set up a RAG evaluation job

Our RAG evaluation setup begins with establishing core configurations for the Amazon Bedrock evaluation job, including the selection of evaluation and generation models (Anthropic’s Claude 3 Haiku and Amazon Nova Micro, respectively). The implementation incorporates a hybrid search strategy with a retrieval depth of 10 results, providing comprehensive coverage of the knowledge base during evaluation. To maintain organization and traceability, each evaluation job is assigned a unique identifier with timestamp information, and input data and results are systematically managed through designated S3 paths. See the following code:

import boto3

from datetime import datetime

# Generate unique name for the job

job_name = f"rag-eval-{datetime.now().strftime('%Y-%m-%d-%H-%M-%S')}"

# Configure knowledge base and model settings

knowledge_base_id = "<KNOWLEDGE_BASE_ID>"

evaluator_model = "anthropic.claude-3-haiku-20240307-v1:0"

generator_model = "amazon.nova-micro-v1:0"

role_arn = "<IAM_ROLE_ARN>"

# Specify S3 locations

input_data = "<INPUT_S3_PATH>"

output_path = "<OUTPUT_S3_PATH>"

# Configure retrieval settings

num_results = 10

search_type = "HYBRID"

# Create Bedrock client

bedrock_client = boto3.client('bedrock')

With the core configurations in place, we initiate the evaluation job using the Amazon Bedrock create_evaluation_job API, which orchestrates a comprehensive assessment of our RAG system’s performance. The evaluation configuration specifies five key metrics—correctness, completeness, helpfulness, logical coherence, and faithfulness—providing a multi-dimensional analysis of the generated radiology impressions. The job is structured to use the knowledge base for retrieval and generation tasks, with the specified models handling their respective roles: Amazon Nova Micro for generation and Anthropic’s Claude 3 Haiku for evaluation, and the results are systematically stored in the designated S3 output location for subsequent analysis. See the following code:

retrieve_generate_job = bedrock_client.create_evaluation_job(

jobName=job_name,

jobDescription="Evaluate retrieval and generation",

roleArn=role_arn,

applicationType="RagEvaluation",

inferenceConfig={

"ragConfigs": [{

"knowledgeBaseConfig": {

"retrieveAndGenerateConfig": {

"type": "KNOWLEDGE_BASE",

"knowledgeBaseConfiguration": {

"knowledgeBaseId": knowledge_base_id,

"modelArn": generator_model,

"retrievalConfiguration": {

"vectorSearchConfiguration": {

"numberOfResults": num_results,

"overrideSearchType": search_type

}

}

}

}

}

}]

},

outputDataConfig={

"s3Uri": output_path

},

evaluationConfig={

"automated": {

"datasetMetricConfigs": [{

"taskType": "Custom",

"dataset": {

"name": "RagDataset",

"datasetLocation": {

"s3Uri": input_data

}

},

"metricNames": [

"Builtin.Correctness",

"Builtin.Completeness",

"Builtin.Helpfulness",

"Builtin.LogicalCoherence",

"Builtin.Faithfulness"

]

}],

"evaluatorModelConfig": {

"bedrockEvaluatorModels": [{

"modelIdentifier": evaluator_model

}]

}

}

}

)

Evaluation results and metrics comparisons

The evaluation results for the healthcare RAG applications, using datasets dev1 and dev2, demonstrate strong performance across the specified metrics. For the dev1 dataset, the scores were as follows: correctness at 0.98, completeness at 0.95, helpfulness at 0.83, logical coherence at 0.99, and faithfulness at 0.79. Similarly, the dev2 dataset yielded scores of 0.97 for correctness, 0.95 for completeness, 0.83 for helpfulness, 0.98 for logical coherence, and 0.82 for faithfulness. These results indicate that the RAG system effectively retrieves and uses medical information to generate accurate and contextually appropriate responses, with particularly high scores in correctness and logical coherence, suggesting robust factual accuracy and logical consistency in the generated content.

The following screenshot shows the evaluation summary for the dev1 dataset.

The following screenshot shows the evaluation summary for the dev2 dataset.

Additionally, as shown in the following screenshot, the LLM-as-a-judge framework allows for the comparison of multiple evaluation jobs across different models, datasets, and prompts, enabling detailed analysis and optimization of the RAG system’s performance.

Additionally, you can perform a detailed analysis by drilling down and investigating the outlier cases with least performance metrics such as correctness, as shown in the following screenshot.

Metrics explainability

The following screenshot showcases the detailed metrics explainability interface of the evaluation system, displaying example conversations with their corresponding metrics assessment. Each conversation entry includes four key columns: Conversation input, Generation output, Retrieved sources, and Ground truth, along with a Score column. The system provides a comprehensive view of 1,000 examples, with navigation controls to browse through the dataset. Of particular note is the retrieval depth indicator showing 10 for each conversation, demonstrating consistent knowledge base utilization across examples.

The evaluation framework enables detailed tracking of generation metrics and provides transparency into how the knowledge base arrives at its outputs. Each example conversation presents the complete chain of information, from the initial prompt through to the final assessment. The system displays the retrieved context that informed the generation, the actual generated response, and the ground truth for comparison. A scoring mechanism evaluates each response, with a detailed explanation of the decision-making process visible through an expandable interface (as shown by the pop-up in the screenshot). This granular level of detail allows for thorough analysis of the RAG system’s performance and helps identify areas for optimization in both retrieval and generation processes.

In this specific example from the Indiana University Medical System dataset (dev2), we see a clear assessment of the system’s performance in generating a radiology impression for chest X-ray findings. The knowledge base successfully retrieved relevant context (shown by 10 retrieved sources) to generate an impression stating “Normal heart size and pulmonary vascularity 2. Unremarkable mediastinal contour 3. No focal consolidation, pleural effusion, or pneumothorax 4. No acute bony findings.” The evaluation system scored this response with a perfect correctness score of 1, noting in the detailed explanation that the candidate response accurately summarized the key findings and correctly concluded there was no acute cardiopulmonary process, aligning precisely with the ground truth response.

In the following screenshot, the evaluation system scored this response with a low score of 0.5, noting in the detailed explanation the ground truth response provided is “Moderate hiatal hernia. No definite pneumonia.” This indicates that the key findings from the radiology report are the presence of a moderate hiatal hernia and the absence of any definite pneumonia. The candidate response covers the key finding of the moderate hiatal hernia, which is correctly identified as one of the impressions. However, the candidate response also includes additional impressions that are not mentioned in the ground truth, such as normal lung fields, normal heart size, unfolded aorta, and degenerative changes in the spine. Although these additional impressions might be accurate based on the provided findings, they are not explicitly stated in the ground truth response. Therefore, the candidate response is partially correct and partially incorrect based on the ground truth.

Clean up

To avoid incurring future charges, delete the S3 bucket, knowledge base, and other resources that were deployed as part of the post.

Conclusion

The implementation of LLM-as-a-judge for evaluating healthcare RAG applications represents a significant advancement in maintaining the reliability and accuracy of AI-generated medical content. Through this comprehensive evaluation framework using Amazon Bedrock Knowledge Bases, we’ve demonstrated how automated assessment can provide detailed insights into the performance of medical RAG systems across multiple critical dimensions. The high-performance scores across both datasets indicate the robustness of this approach, though these metrics are just the beginning.

Looking ahead, this evaluation framework can be expanded to encompass broader healthcare applications while maintaining the rigorous standards essential for medical applications. The dynamic nature of medical knowledge and clinical practices necessitates an ongoing commitment to evaluation, making continuous assessment a cornerstone of successful implementation.

Through this series, we’ve demonstrated how you can use Amazon Bedrock to create and evaluate healthcare generative AI applications with the precision and reliability required in clinical settings. As organizations continue to refine these tools and methodologies, prioritizing accuracy, safety, and clinical utility in healthcare AI applications remains paramount.

About the Authors

Adewale Akinfaderin is a Sr. Data Scientist–Generative AI, Amazon Bedrock, where he contributes to cutting edge innovations in foundational models and generative AI applications at AWS. His expertise is in reproducible and end-to-end AI/ML methods, practical implementations, and helping global customers formulate and develop scalable solutions to interdisciplinary problems. He has two graduate degrees in physics and a doctorate in engineering.

Adewale Akinfaderin is a Sr. Data Scientist–Generative AI, Amazon Bedrock, where he contributes to cutting edge innovations in foundational models and generative AI applications at AWS. His expertise is in reproducible and end-to-end AI/ML methods, practical implementations, and helping global customers formulate and develop scalable solutions to interdisciplinary problems. He has two graduate degrees in physics and a doctorate in engineering.

Priya Padate is a Senior Partner Solution Architect supporting healthcare and life sciences worldwide at Amazon Web Services. She has over 20 years of healthcare industry experience leading architectural solutions in areas of medical imaging, healthcare related AI/ML solutions and strategies for cloud migrations. She is passionate about using technology to transform the healthcare industry to drive better patient care outcomes.

Priya Padate is a Senior Partner Solution Architect supporting healthcare and life sciences worldwide at Amazon Web Services. She has over 20 years of healthcare industry experience leading architectural solutions in areas of medical imaging, healthcare related AI/ML solutions and strategies for cloud migrations. She is passionate about using technology to transform the healthcare industry to drive better patient care outcomes.

Dr. Ekta Walia Bhullar is a principal AI/ML/GenAI consultant with AWS Healthcare and Life Sciences business unit. She has extensive experience in development of AI/ML applications for healthcare especially in Radiology. During her tenure at AWS she has actively contributed to applications of AI/ML/GenAI within lifescience domain such as for clinical, drug development and commercial lines of business.

Dr. Ekta Walia Bhullar is a principal AI/ML/GenAI consultant with AWS Healthcare and Life Sciences business unit. She has extensive experience in development of AI/ML applications for healthcare especially in Radiology. During her tenure at AWS she has actively contributed to applications of AI/ML/GenAI within lifescience domain such as for clinical, drug development and commercial lines of business.

AWS DeepRacer: Closing time at AWS re:Invent 2024 –How did that physical racing go?

Having spent the last years studying the art of AWS DeepRacer in the physical world, the author went to AWS re:Invent 2024. How did it go?

In AWS DeepRacer: How to master physical racing?, I wrote in detail about some aspects relevant to racing AWS DeepRacer in the physical world. We looked at the differences between the virtual and the physical world and how we could adapt the simulator and the training approach to overcome the differences. The previous post was left open-ended—with one last Championship Final left, it was too early to share all my secrets.

Now that AWS re:Invent is over, it’s time to share my strategy, how I prepared, and how it went in the end.

Strategy

Going into the 2024 season, I was reflecting on my performance from 2022 and 2023. In 2022, I had unstable models that were unable to do fast laps on the new re:Invent 2022 Championship track, not even making the last 32. In 2023, things went slightly better, but it was clear that there was potential to improve.

Specifically, I wanted a model that:

- Goes straight on the straights and corners with precision

- Has a survival instinct and avoids going off-track even in a tight spot

- Can ignore the visual noise seen around the track

Combine that with the ability to test the models before showing up at the Expo, and success seemed possible!

Implementation

In this section, I will explain my thinking about why physical racing is so different than virtual racing, as well as describe my approach to training a model that overcomes those differences.

How hard can it be to go straight?

If you have watched DeepRacer over the years, you have probably seen that most models struggle to go straight on the straights and end up oscillating left and right. The question has always been: why is it like that? This behavior causes two issues: the distance driven increases (result: slower lap time) and the car potentially enters the next turn in a way it can’t handle (result: off-track).

A few theories emerged:

- Sim-to-real issues – The steering response isn’t matching the simulator, both with regards to the steering geometry and latency (time from picture to servo command, as well as the time it takes the servo to actually actuate). Therefore, when the car tries to adjust the direction on the straight, it doesn’t get the response it expects.

- Model issues – A combination of the model not actually using the straight action, and not having access to angles needed to dampen oscillations (2.5–5.0 degrees).

- Calibration issues – If the car isn’t calibrated to go straight when given a 0-degree action, and the left/right max values are either too high (tendency to oversteer) or too low (tendency to understeer), you are likely to get control issues and unstable behavior.

My approach:

- Use the Ackermann steering geometry patch. With it, the car will behave more realistically, and the turning radius will decrease for a given angle. As a result, the action space can be limited to angles up to about 20 degrees. This roughly matches with the real car’s steering angle.

- Include stabilizing steering angles (2.5 and 5.0) in the action space, allowing for minor corrections on the straights.

- Use relatively slow speeds (0.8–1.3 m/s) to avoid slipping in the simulator. My theory is that the 15 fps simulator and the 30 fps car actually translates 1.2 mps in the simulator into effectively 2.4 mps in the real world.

- By having an inverted chevron action space giving higher speeds for straights, nudge the car to use the straight actions, rather than oscillating left-right actions.

- Try out v3, v4, and v5 physical models—test on a real track to see what works best.

- Otherwise, the reward function was the same progress-based reward function I also use in virtual racing.

The following figure illustrates the view of testing in the garage, going straight at least one frame.

Be flexible

Virtual racing is (almost) deterministic, and over time, the model will converge and the car will take a narrow path, reducing the variety in the situations it sees. Early in training, it will frequently be in odd positions, almost going off-track, and it remembers how to get out of these situations. As it converges, the frequency at which it must handle these reduces, and the theory is that the memory fades, and at some point, it forgets how to get out of a tight spot.

My approach:

- Diversify training to teach the car to handle a variety of corners, in both directions:

- Consistently train models going both clockwise and counterclockwise.

- Use tracks—primarily the 2022 Championship track—that are significantly more complex than the Forever Raceway.

- Do final optimization on the Forever Raceway—again in both directions.

- Take several snapshots during training; don’t go below 0.5 in entropy.

- Test on tracks the car has never seen. The simulator has many suitable, narrow tracks—the hallmark of a generalized model is one that can handle tracks it has never seen during training.

Stay focused on the track

In my last post, I looked at the visual differences between the virtual and real worlds. The question is what to do about it. The goal is to trick the model into ignoring the noise and focus on what is important: the track.

My approach:

- Train in an environment with significantly more visual noise. The tracks in the custom track repository have added noise through additional lights, buildings, and different walls (and some even come with shadows).

- Alter the environment during training to avoid overfitting to the added noise. The custom tracks were made in such a way that different objects (buildings, walls, and lines) could be made invisible at runtime. I had a cron job randomizing the environment every 5 minutes.

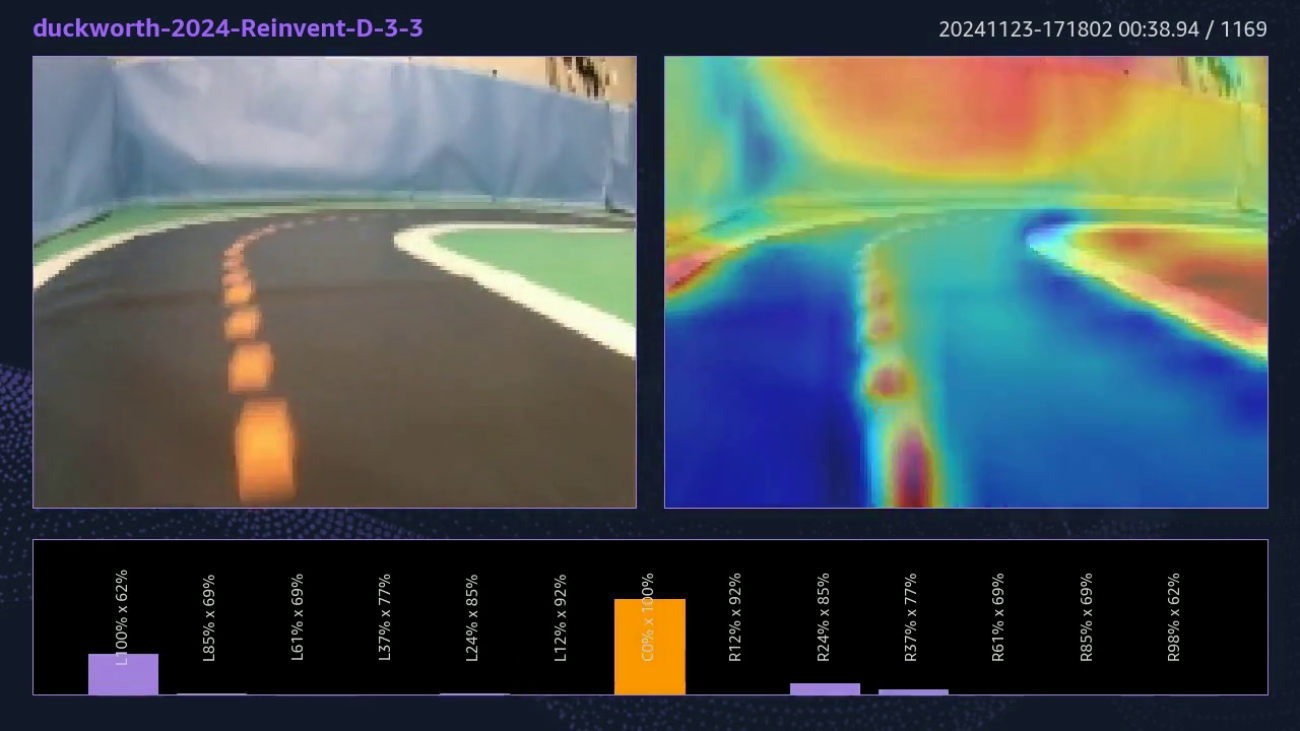

The following figure illustrates the varied training environment.

What I didn’t consider this year was simulating blurring during training. I attempted this previously by averaging the current camera frame with the previous one before inferencing. It didn’t seem to help.

Lens distortion is a topic I have observed, but not fully investigated. The original camera has a distinct fish-eye distortion, and Gazebo would be able to replicate it, but it would require some work to actually determine the coefficients. Equally, I have never tried to replicate the rolling motions of the real car.

Testing

Testing took place in the garage on the Trapezoid Narrow track. The track is obviously basic, but with two straights and two 180-degree turns with different radii, it had to do the job. The garage track also had enough visual noise to see if the models were robust enough.

The method was straightforward: try all models both clockwise and counterclockwise. Using the logs captured by the custom car stack, I spent the evening looking through the video of each run to determine which model I liked the best—looking at stability, handling (straight on straights plus precision cornering), and speed.

re:Invent 2024

The track for re:Invent 2024 was the Forever Raceway. The shape of the track isn’t new; it shares the centerline with the 2022 Summit Speedway, but being only 76 cm wide (the original was 1.07 cm), the turns become more pronounced, making it a significantly more difficult track.

The environment

The environment is classic re:Invent: a smooth track with very little shine combined with smooth, fairly tall walls surrounding the track. The background is what often causes trouble—this year, a large lit display hung under the ceiling at the far end of the track, and as the following figure shows, it was attracting quite some attention from the GradCam.

Similarly, the pit crew cage, where cars are maintained, attracted attention.

The results

So where did I end up, and why? In Round 1, I ended up at place 14, with a best average of 10.072 seconds, and a best lap time of 9.335 seconds. Not great, but also not bad—almost 1 second outside top 8.

Using the overhead camera provided by AWS through the Twitch stream, it’s possible to create a graphical view showing the path the car took, as shown in the following figure.

If we compare this with how the same model liked to drive in training, we see a bit of a difference.

What becomes obvious quite quickly is that although I succeeded in going straight on the (upper) straight, the car didn’t corner as tightly as during training, making the bottom half of the track a bit of a mess. Nevertheless, the car demonstrated the desired survival instinct and stayed on track even when faced with unexpectedly sharp corners.

Why did this happen:

- 20 degrees of turning using Ackermann steering is too much; the real car isn’t capable of doing it in the real world

- The turning radius is increasing as the speed goes up due to slipping, caused both by low friction and lack of grip due to rolling

- The reaction time plays more of a role as the speed increases, and my model acted too late, overshooting into the corner

The combined turning radius and reaction time effect also caused issues at the start. If the car goes slowly, it turns much faster—and ends up going off-track on the inside—causing issues for me and others.

My takeaways:

- Overall, the training approach seemed to work well. Well-calibrated cars went straight on the straights, and background noise didn’t seem to bother my models much.

- I need to get closer to the car’s actual handling characteristics at speed during training by increasing the max speed and reducing the max angle in the action space.

- Physical racing is still not well understood—and it’s a lot about model-meets-car. Some models thrive on objectively perfectly calibrated cars, whereas others work great when matched with a particular one.

- Track is king—those that had access to the track, either through their employer or having built one at home, had a massive advantage, even if almost everyone said that they were surprised by which model worked in the end.

Now enjoy the inside view of a car at re:Invent, and see if you can detect any of the issues that I have discussed. The video was recorded after I had been knocked out of the competition using a car with the custom car software.

Closing time: Where do we go from here?

This section is best enjoyed with Semisonic’s Closing Time as a soundtrack.

As we all wrapped up at the Expo after an intense week of racing, re:Invent literally being dismantled around us, the question was: what comes next?

This was the last DeepRacer Championship, but the general sentiment was that whereas nobody will really miss virtual racing—it is a problem solved—physical racing is still a whole lot of fun, and the community is not yet ready to move on. Since re:Invent several initiatives have gained traction with a common goal to make DeepRacer more accessible:

- By enrolling cars with the DeepRacer Custom Car software stack into DeepRacer Event Manager you can capture car logs and generate the analytics videos, as shown in this article, directly during your event!

- DeepRacer Pi and DeepRacer Custom Car initiatives allow racers to build cars at home:Use off-the-shelf components for a 1:18 scale racer, or

- Combine off-the-shelf components with a custom circuit board to build the 1:28 scale DeepRacer Pi Mini Both options are compatible with already trained models, including integration with DeepRacer Event Manager.

DeepRacer Custom Console will be a drop-in replacement for the current car UI with a beautiful UI designed in Cloudscape, aligning the design with DREM and the AWS Console.

Prototype DeepRacer Pi Mini – 1 :28 scale

Closing Words

DeepRacer is a fantastic way to teach AI in a very physical and visual way, and is suitable for older kids, students, and adults in the corporate setting alike. It will be interesting to see how AWS, its corporate partners, and the community will continue the journey in the years ahead.

A big thank you goes to all of those that have been involved in DeepRacer from its inception to today—too many to be named—it has been a wonderful experience. A big congratulations goes out to this years’ winners!

Closing time, every new beginning comes from some other beginning’s end…

About the Author

Lars Lorentz Ludvigsen is a technology enthusiast who was introduced to AWS DeepRacer in late 2019 and was instantly hooked. Lars works as a Managing Director at Accenture, where he helps clients build the next generation of smart connected products. In addition to his role at Accenture, he’s an AWS Community Builder who focuses on developing and maintaining the AWS DeepRacer community’s software solutions.

Amazon announces Ocelot quantum chip

Amazon announces Ocelot quantum chip

Prototype is the first realization of a scalable, hardware-efficient quantum computing architecture based on bosonic quantum error correction.

Quantum technologies

Fernando Brando Oskar PainterFebruary 27, 06:00 AMApril 30, 08:32 PM

Today we are happy to announce Ocelot, our first-generation quantum chip. Ocelot represents Amazon Web Services pioneering effort to develop, from the ground up, a hardware implementation of quantum error correction that is both resource efficient and scalable. Based on superconducting quantum circuits, Ocelot achieves the following major technical advances:

- The first realization of a scalable architecture for bosonic error correction, surpassing traditional qubit approaches to reducing error correction overhead;

- The first implementation of a noise-biased gate a key to unlocking the type of hardware-efficient error correction necessary for building scalable, commercially viable quantum computers;

- State-of-the-art performance for superconducting qubits, with bit-flip times approaching one second in tandem with phase-flip times of 20 microseconds.

We believe that scaling Ocelot to a full-fledged quantum computer capable of transformative societal impact would require as little as one-tenth as many resources as common approaches, helping bring closer the age of practical quantum computing.

The quantum performance gap

Quantum computers promise to perform some computations much faster even exponentially faster than classical computers. This means quantum computers can solve some problems that are forever beyond the reach of classical computing.

Practical applications of quantum computing will require sophisticated quantum algorithms with billions of quantum gates the basic operations of a quantum computer. But current quantum computers extreme sensitivity to environmental noise means that the best quantum hardware today can run only about a thousand gates without error. How do we bridge this gap?

Quantum error correction: the key to reliable quantum computing

Quantum error correction, first proposed theoretically in the 1990s, offers a solution. By sharing the information in each logical qubit across multiple physical qubits, one can protect the information within a quantum computer from external noise. Not only this, but errors can be detected and corrected in a manner analogous to the classical error correction methods used in digital storage and communication.

Recent experiments have demonstrated promising progress, but todays best logical qubits, based on superconducting or atomic qubits, still exhibit error rates a billion times larger than the error rates needed for known quantum algorithms of practical utility and quantum advantage.

The challenge of qubit overhead

While quantum error correction provides a path to bridging the enormous chasm between todays error rates and those required for practical quantum computation, it comes with a severe penalty in terms of resource overhead. Reducing logical-qubit error rates requires scaling up the redundancy in the number of physical qubits per logical qubit.

Traditional quantum error correction methods, such as those using the surface error-correcting code, currently require thousands (and if we work really, really hard, maybe in the future, hundreds) of physical qubits per logical qubit to reach the desired error rates. That means that a commercially relevant quantum computer would require millions of physical qubits many orders of magnitude beyond the qubit count of current hardware.

One fundamental reason for this high overhead is that quantum systems experience two types of errors: bit-flip errors (also present in classical bits) and phase-flip errors (unique to qubits). Whereas classical bits require only correction of bit flips, qubits require an additional layer of redundancy to handle both types of errors.

Although subtle, this added complexity leads to quantum systems large resource overhead requirement. For comparison, a good classical error-correcting code could realize the error rate we desire for quantum computing with less than 30% overhead, roughly one-ten-thousandth the overhead of the conventional surface code approach (assuming bit error rates of 0.5%, similar to qubit error rates in current hardware).

Cat qubits: an approach to more efficient error correction

Quantum systems in nature can be more complex than qubits, which consist of just two quantum states (usually labeled 0 and 1 in analogy to classical digital bits). Take for example the simple harmonic oscillator, which oscillates with a well-defined frequency. Harmonic oscillators come in all sorts of shapes and sizes, from the mechanical metronome used to keep time while playing music to the microwave electromagnetic oscillators used in radar and communication systems.

Classically, the state of an oscillator can be represented by the amplitude and phase of its oscillations. Quantum mechanically, the situation is similar, although the amplitude and phase are never simultaneously perfectly defined, and there is an underlying graininess to the amplitude associated with each quanta of energy one adds to the system.

These quanta of energy are what are called bosonic particles, the best known of which is the photon, associated with the electromagnetic field. The more energy we pump into the system, the more bosons (photons) we create, and the more oscillator states (amplitudes) we can access. Bosonic quantum error correction, which relies on bosons instead of simple two-state qubit systems, uses these extra oscillator states to more effectively protect quantum information from environmental noise and to do more efficient error correction.

One type of bosonic quantum error correction uses cat qubits, named after the dead/alive Schrdinger cat of Erwin Schrdinger’s famous thought experiment. Cat qubits use the quantum superposition of classical-like states of well-defined amplitude and phase to encode a qubits worth of information. Just a few years after Peter Shors seminal 1995 paper on quantum error correction, researchers began quietly developing an alternative approach to error correction based on cat qubits.

A major advantage of cat qubits is their inherent protection against bit-flip errors. Increasing the number of photons in the oscillator can make the rate of the bit-flip errors exponentially small. This means that instead of increasing qubit count, we can simply increase the energy of an oscillator, making error correction far more efficient.

The past decade has seen pioneering experiments demonstrating the potential of cat qubits. However, these experiments have mostly focused on single-cat-qubit demonstrations, leaving open the question of whether cat qubits could be integrated into a scalable architecture.

Ocelot: demonstrating the scalability of bosonic quantum error correction

Today in Nature, we published the results of our measurements on Ocelot, and its quantum error correction performance. Ocelot represents an important step on the road to practical quantum computers, leveraging chip-scale integration of cat qubits to form a scalable, hardware-efficient architecture for quantum error correction. In this approach,

- bit-flip errors are exponentially suppressed at the physical-qubit level;

- phase-flip errors are corrected using a repetition code, the simplest classical error-correcting code; and

- highly noise-biased controlled-NOT (C-NOT) gates, between each cat qubit and ancillary transmon qubits (the conventional qubit used in superconducting quantum circuits), enable phase-flip-error detection while preserving the cats bit-flip protection.

The Ocelot logical-qubit memory chip, shown schematically above, consists of five cat data qubits, each housing an oscillator that is used to store the quantum data. The storage oscillator of each cat qubit is connected to two ancillary transmon qubits for phase-flip-error detection and paired with a special nonlinear buffer circuit used to stabilize the cat qubit states and exponentially suppress bit-flip errors.

Tuning up the Ocelot device involves calibrating the bit- and phase-flip error rates of the cat qubits against the cat amplitude (average photon number) and optimizing the noise-bias of the C-NOT gate used for phase-flip-error detection. Our experimental results show that we can achieve bit-flip times approaching one second, more than a thousand times longer than the lifetime of conventional superconducting qubits.

Critically, this can be accomplished with a cat amplitude as small as four photons, enabling us to retain phase-flip times of tens of microseconds, sufficient for quantum error correction. From there, we run a sequence of error correction cycles to test the performance of the circuit as a logical-qubit memory. In order to characterize the performance of the repetition code and the scalability of the architecture, we studied subsets of the Ocelot cat qubits, representing different repetition code lengths.

The logical phase-flip error rate was seen to drop significantly when the code distance was increased from distance-3 to distance-5 (i.e., from a code with three cat qubits to one with five) across a wide range of cat photon numbers, indicating the effectiveness of the repetition code.

When bit-flip errors were included, the total logical error rate was measured to be 1.72% per cycle for the distance-3 code and 1.65% per cycle for the distance-5 code. The comparability of the total error rate of the distance-5 code to that of the shorter distance-3 code, with fewer cat qubits and opportunities for bit-flip errors, can be attributed to the large noise bias of the C-NOT gate and its effectiveness in suppressing bit-flip errors. This noise bias is what allows Ocelot to achieve a distance-5 code with less than a fifth as many qubits five data qubits and four ancilla qubits, versus 49 qubits for a surface code device.

What we scale matters

From the billions of transistors in a modern GPU to the massive-scale GPU clusters powering AI models, the ability to scale efficiently is a key driver of technological progress. Similarly, scaling the number of qubits to accommodate the overhead required of quantum error correction will be key to realizing commercially valuable quantum computers.

But the history of computing shows that scaling the right component can have massive consequences for cost, performance, and even feasibility. The computer revolution truly took off when the transistor replaced the vacuum tube as the fundamental building block to scale.

Ocelot represents our first chip with the cat qubit architecture, and an initial test of its suitability as a fundamental building block for implementing quantum error correction. Future versions of Ocelot are being developed that will exponentially drive down logical error rates, enabled by both an improvement in component performance and an increase in code distance.

Codes tailored to biased noise, such as the repetition code used in Ocelot, can significantly reduce the number of physical qubits required. In our forthcoming paper Hybrid cat-transmon architecture for scalable, hardware-efficient quantum error correction, we find that scaling Ocelot could reduce quantum error correction overhead by up to 90% compared to conventional surface code approaches with similar physical-qubit error rates.

We believe that Ocelot’s architecture, with its hardware-efficient approach to error correction, positions us well to tackle the next phase of quantum computing: learning how to scale. Using a hardware-efficient approach will allow us to more quickly and cost effectively achieve an error-corrected quantum computer that benefits society.

Over the last few years, quantum computing has entered an exciting new era in which quantum error correction has moved from the blackboard to the test bench. With Ocelot, we are just beginning down a path to fault-tolerant quantum computation. For those interested in joining us on this journey, we are hiring for positions across our quantum computing stack. Visit Amazon Jobs and enter the keyword quantum.

Research areas: Quantum technologies

How Pattern PXM’s Content Brief is driving conversion on ecommerce marketplaces using AI

Brands today are juggling a million things, and keeping product content up-to-date is at the top of the list. Between decoding the endless requirements of different marketplaces, wrangling inventory across channels, adjusting product listings to catch a customer’s eye, and trying to outpace shifting trends and fierce competition, it’s a lot. And let’s face it—staying ahead of the ecommerce game can feel like running on a treadmill that just keeps speeding up. For many, it results in missed opportunities and revenue that doesn’t quite hit the mark.

“Managing a diverse range of products and retailers is so challenging due to the varying content requirements, imagery, different languages for different regions, formatting and even the target audiences that they serve.”

– Martin Ruiz, Content Specialist, Kanto

Pattern is a leader in ecommerce acceleration, helping brands navigate the complexities of selling on marketplaces and achieve profitable growth through a combination of proprietary technology and on-demand expertise. Pattern was founded in 2013 and has expanded to over 1,700 team members in 22 global locations, addressing the growing need for specialized ecommerce expertise.

Pattern has over 38 trillion proprietary ecommerce data points, 12 tech patents and patents pending, and deep marketplace expertise. Pattern partners with hundreds of brands, like Nestle and Philips, to drive revenue growth. As the top third-party seller on Amazon, Pattern uses this expertise to optimize product listings, manage inventory, and boost brand presence across multiple services simultaneously.

In this post, we share how Pattern uses AWS services to process trillions of data points to deliver actionable insights, optimizing product listings across multiple services.

Content Brief: Data-backed content optimization for product listings

Pattern’s latest innovation, Content Brief, is a powerful AI-driven tool designed to help brands optimize their product listings and accelerate growth across online marketplaces. Using Pattern’s dataset of over 38 trillion ecommerce data points, Content Brief provides actionable insights and recommendations to create standout product content that drives traffic and conversions.

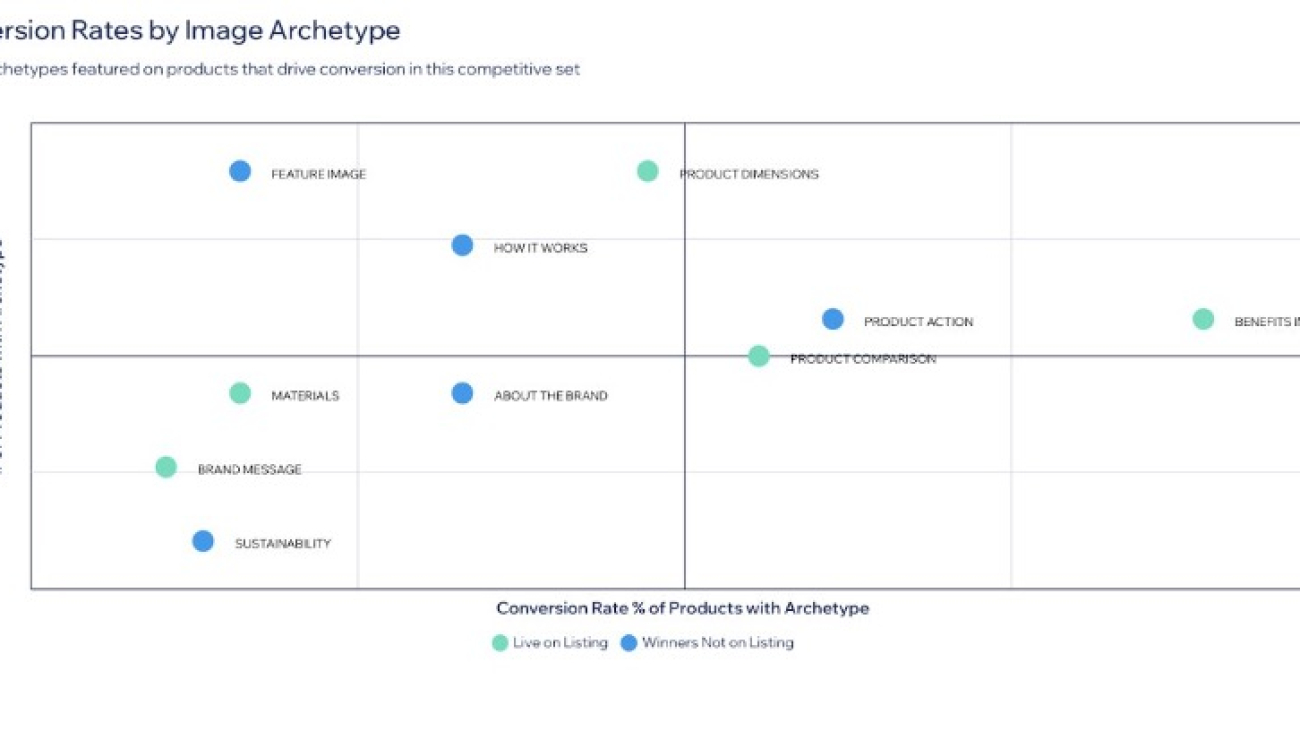

Content Brief analyzes consumer demographics, discovery behavior, and content performance to give brands a comprehensive understanding of their product’s position in the marketplace. What would normally require months of research and work is now done in minutes. Content Brief takes the guesswork out of product strategy with tools that do the heavy lifting. Its attribute importance ranking shows you which product features deserve the spotlight, and the image archetype analysis makes sure your visuals engage customers.

As shown in the following screenshot, the image archetype feature shows attributes that are driving sales in a given category, allowing brands to highlight the most impactful features in the image block and A+ image content.

Content Brief incorporates review and feedback analysis capabilities. It uses sentiment analysis to process customer reviews, identifying recurring themes in both positive and negative feedback, and highlights areas for potential improvement.

Content Brief’s Search Family analysis groups similar search terms together, helping brands understand distinct customer intent and tailor their content accordingly. This feature combined with detailed persona insights helps marketers create highly targeted content for specific segments. It also offers competitive analysis, providing side-by-side comparisons with competing products, highlighting areas where a brand’s product stands out or needs improvement.

“This is the thing we need the most as a business. We have all of the listening tools, review sentiment, keyword things, but nothing is in a single place like this and able to be optimized to my listing. And the thought of writing all those changes back to my PIM and then syndicating to all of my retailers, this is giving me goosebumps.”

– Marketing executive, Fortune 500 brand

Brands using Content Brief can more quickly identify opportunities for growth, adapt to change, and maintain a competitive edge in the digital marketplace. From search optimization and review analysis to competitive benchmarking and persona targeting, Content Brief empowers brands to create compelling, data-driven content that drives both traffic and conversions.

Select Brands looked to improve their Amazon performance and partnered with Pattern. Content Brief’s insights led to updates that caused a transformation for their Triple Buffet Server listing’s image stack. Their old image stack was created for marketplace requirements, whereas the new image stack was optimized with insights based on product attributes to highlight from category and sales data. The updated image stack featured bold product highlights and captured shoppers with lifestyle imagery. The results were a 21% MoM revenue surge, 14.5% more traffic, and a 21 bps conversion lift.

“Content Brief is a perfect example of why we chose to partner with Pattern. After just one month of testing, we see how impactful it can be for driving incremental growth—even on products that are already performing well. We have a product that, together with Pattern, we were able to grow into a top performer in its category in less than 2 years, and it’s exciting to see how adding this additional layer can grow revenue even for that product, which we already considered to be strong.”

– Eric Endres, President, Select Brands

To discover how Content Brief helped Select Brands boost their Amazon performance, refer to the full case study.

The AWS backbone of Content Brief

At the heart of Pattern’s architecture lies a carefully orchestrated suite of AWS services. Amazon Simple Storage Service (Amazon S3) serves as the cornerstone for storing product images, crucial for comprehensive ecommerce analysis. Amazon Textract is employed to extract and analyze text from these images, providing valuable insights into product presentation and enabling comparisons with competitor listings. Meanwhile, Amazon DynamoDB acts as the powerhouse behind Content Brief’s rapid data retrieval and processing capabilities, storing both structured and unstructured data, including content brief object blobs.

Pattern’s approach to data management is both innovative and efficient. As data is processed and analyzed, they create a shell in DynamoDB for each content brief, progressively injecting data as it’s processed and refined. This method allows for rapid access to partial results and enables further data transformations as needed, making sure that brands have access to the most up-to-date insights.

The following diagram illustrates the pipeline workflow and architecture.

Scaling to handle 38 trillion data points

Processing over 38 trillion data points is no small feat, but Pattern has risen to the challenge with a sophisticated scaling strategy. At the core of this strategy is Amazon Elastic Container Store (Amazon ECS) with GPU support, which handles the computationally intensive tasks of natural language processing and data science. This setup allows Pattern to dynamically scale resources based on demand, providing optimal performance even during peak processing times.

To manage the complex flow of data between various AWS services, Pattern employs Apache Airflow. This orchestration tool manages the intricate dance of data with a primary DAG, creating and managing numerous sub-DAGs as needed. This innovative use of Airflow allows Pattern to efficiently manage complex, interdependent data processing tasks at scale.

But scaling isn’t just about processing power—it’s also about efficiency. Pattern has implemented batching techniques in their AI model calls, resulting in up to 50% cost reduction for two-batch processing while maintaining high throughput. They’ve also implemented cross-region inference to improve scalability and reliability across different geographical areas.

To keep a watchful eye on their system’s performance, Pattern employs LLM observability techniques. They monitor AI model performance and behavior, enabling continuous system optimization and making sure that Content Brief is operating at peak efficiency.

Using Amazon Bedrock for AI-powered insights

A key component of Pattern’s Content Brief solution is Amazon Bedrock, which plays a pivotal role in their AI and machine learning (ML) capabilities. Pattern uses Amazon Bedrock to implement a flexible and secure large language model (LLM) strategy.

Model flexibility and optimization

Amazon Bedrock offers support for multiple foundation models (FMs), which allows Pattern to dynamically select the most appropriate model for each specific task. This flexibility is crucial for optimizing performance across various aspects of Content Brief:

- Natural language processing – For analyzing product descriptions, Pattern uses models optimized for language understanding and generation.

- Sentiment analysis – When processing customer reviews, Amazon Bedrock enables the use of models fine-tuned for sentiment classification.

- Image analysis – Pattern currently uses Amazon Textract for extracting text from product images. However, Amazon Bedrock also offers advanced vision-language models that could potentially enhance image analysis capabilities in the future, such as detailed object recognition or visual sentiment analysis.

The ability to rapidly prototype on different LLMs is a key component of Pattern’s AI strategy. Amazon Bedrock offers quick access to a variety of cutting-edge models o facilitate this process, allowing Pattern to continuously evolve Content Brief and use the latest advancements in AI technology. Today, this allows the team to build seamless integration and use various state-of-the-art language models tailored to different tasks, including the new, cost-effective Amazon Nova models.

Prompt engineering and efficiency

Pattern’s team has developed a sophisticated prompt engineering process, continually refining their prompts to optimize both quality and efficiency. Amazon Bedrock offers support for custom prompts, which allows Pattern to tailor the model’s behavior precisely to their needs, improving the accuracy and relevance of AI-generated insights.

Moreover, Amazon Bedrock offers efficient inference capabilities that help Pattern optimize token usage, reducing costs while maintaining high-quality outputs. This efficiency is crucial when processing the vast amounts of data required for comprehensive ecommerce analysis.

Security and data privacy

Pattern uses the built-in security features of Amazon Bedrock to uphold data protection and compliance. By employing AWS PrivateLink, data transfers between Pattern’s virtual private cloud (VPC) and Amazon Bedrock occur over private IP addresses, never traversing the public internet. This approach significantly enhances security by reducing exposure to potential threats.

Furthermore, the Amazon Bedrock architecture makes sure that Pattern’s data remains within their AWS account throughout the inference process. This data isolation provides an additional layer of security and helps maintain compliance with data protection regulations.

“Amazon Bedrock’s flexibility is crucial in the ever-evolving landscape of AI, enabling Pattern to utilize the most effective and efficient models for their diverse ecommerce analysis needs. The service’s robust security features and data isolation capabilities give us peace of mind, knowing that our data and our clients’ information are protected throughout the AI inference process.”

– Jason Wells, CTO, Pattern

Building on Amazon Bedrock, Pattern has created a secure, flexible, and efficient AI-powered solution that continuously evolves to meet the dynamic needs of ecommerce optimization.

Conclusion

Pattern’s Content Brief demonstrates the power of AWS in revolutionizing data-driven solutions. By using services like Amazon Bedrock, DynamoDB, and Amazon ECS, Pattern processes over 38 trillion data points to deliver actionable insights, optimizing product listings across multiple services.

Inspired to build your own innovative, high-performance solution? Explore AWS’s suite of services at aws.amazon.com and discover how you can harness the cloud to bring your ideas to life. To learn more about how Content Brief could help your brand optimize its ecommerce presence, visit pattern.com.

About the Author

Parker Bradshaw is an Enterprise SA at AWS who focuses on storage and data technologies. He helps retail companies manage large data sets to boost customer experience and product quality. Parker is passionate about innovation and building technical communities. In his free time, he enjoys family activities and playing pickleball.

Parker Bradshaw is an Enterprise SA at AWS who focuses on storage and data technologies. He helps retail companies manage large data sets to boost customer experience and product quality. Parker is passionate about innovation and building technical communities. In his free time, he enjoys family activities and playing pickleball.

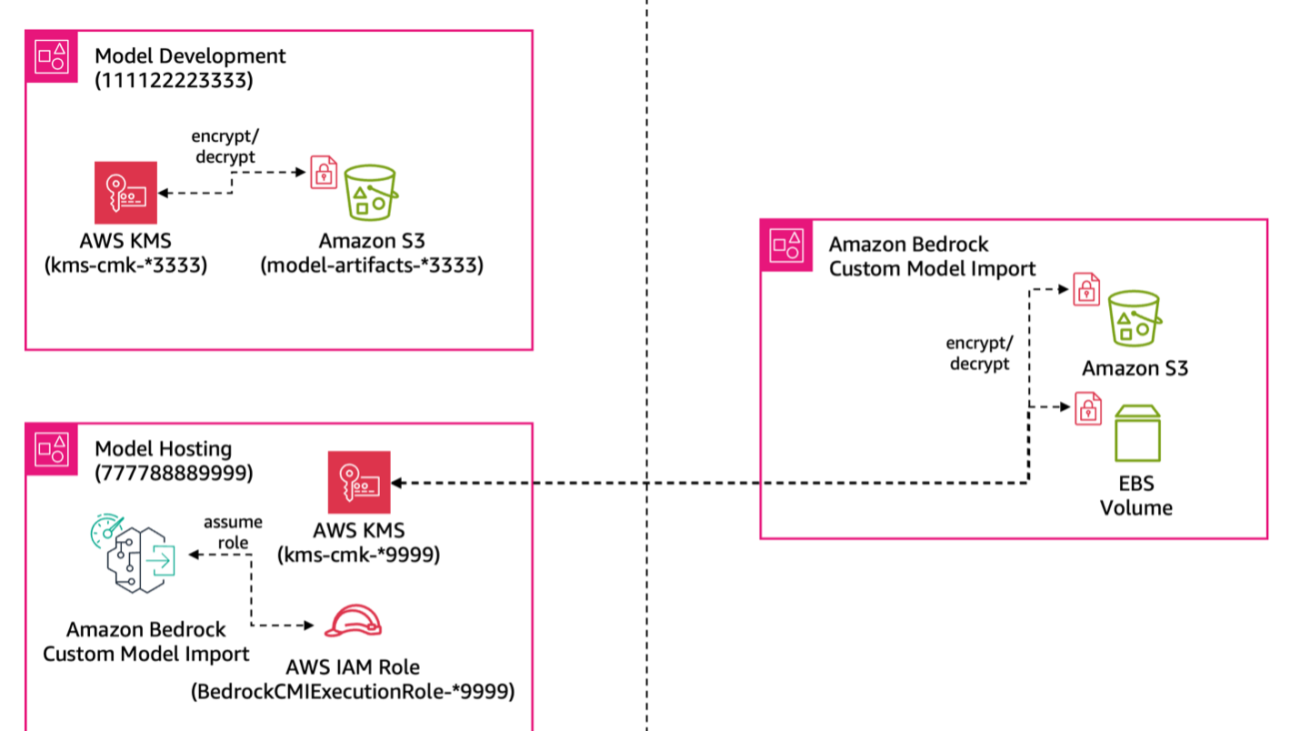

How to configure cross-account model deployment using Amazon Bedrock Custom Model Import

In enterprise environments, organizations often divide their AI operations into two specialized teams: an AI research team and a model hosting team. The research team is dedicated to developing and enhancing AI models using model training and fine-tuning techniques. Meanwhile, a separate hosting team is responsible for deploying these models across their own development, staging, and production environments.

With Amazon Bedrock Custom Model Import, the hosting team can import and serve custom models using supported architectures such as Meta Llama 2, Llama 3, and Mistral using On-Demand pricing. Teams can import models with weights in Hugging Face safetensors format from Amazon SageMaker or from Amazon Simple Storage Service (Amazon S3). These imported custom models work alongside existing Amazon Bedrock foundation models (FMs) through a single, unified API in a serverless manner, alleviating the need to manage model deployment and scaling.

However, in such enterprise environments, these teams often work in separate AWS accounts for security and operational reasons. The model development team’s training results, known as model artifacts, for example model weights, are typically stored in S3 buckets within the research team’s AWS account, but the hosting team needs to access these artifacts from another account to deploy models. This creates a challenge: how do you securely share model artifacts between accounts?

This is where cross-account access becomes important. With Amazon Bedrock Custom Model Import cross-account support, we can help you configure direct access between the S3 buckets storing model artifacts and the hosting account. This streamlines your operational workflow while maintaining security boundaries between teams. One of our customers quotes: