Google DeepMind at NeurIPS 2024

Advancing adaptive AI agents, empowering 3D scene creation, and innovating LLM training for a smarter, safer future

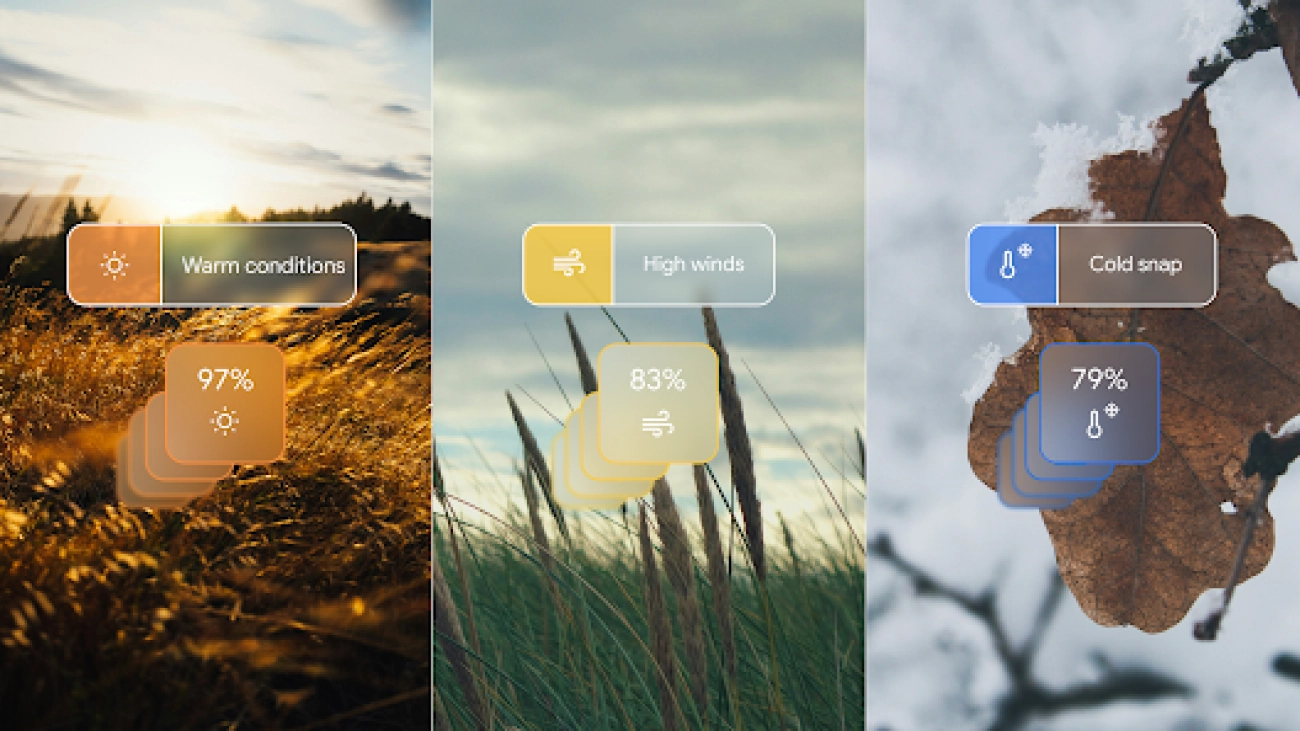

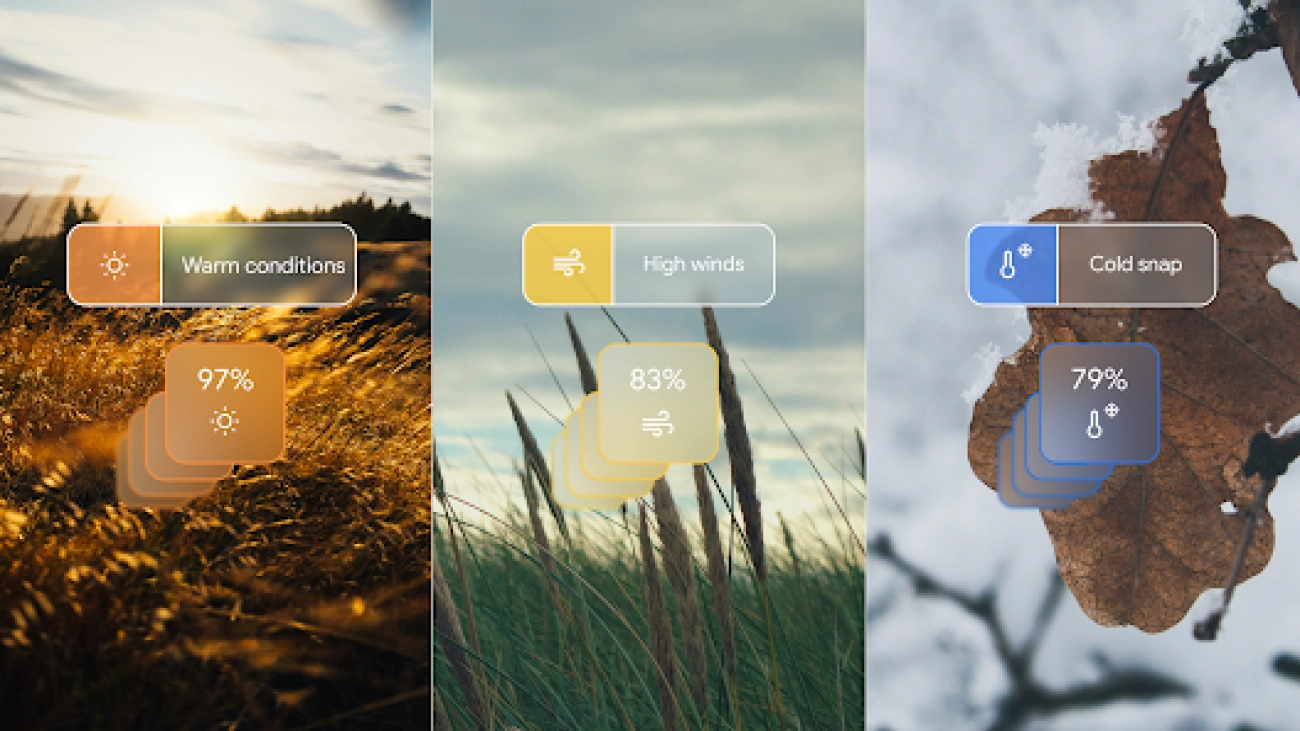

GenCast predicts weather and the risks of extreme conditions with state-of-the-art accuracy

New AI model advances the prediction of weather uncertainties and risks, delivering faster, more accurate forecasts up to 15 days ahead