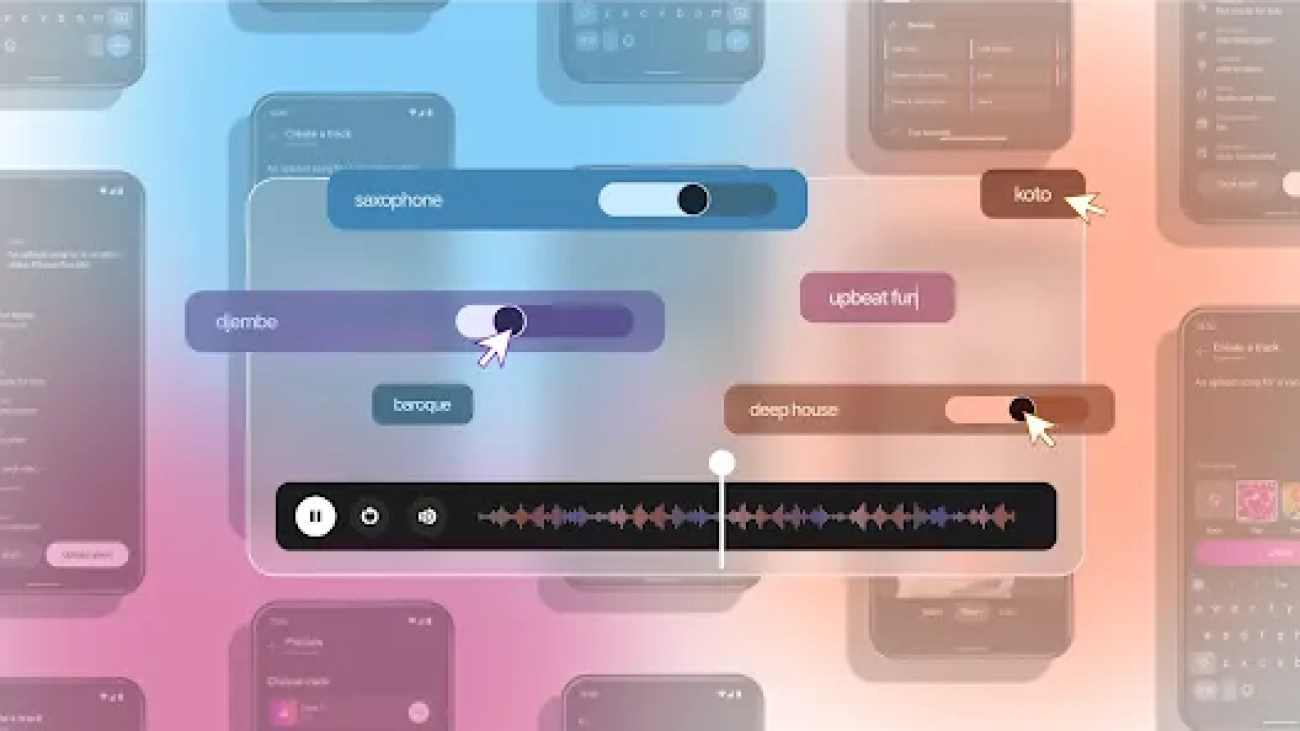

New generative AI tools open the doors of music creation

Our latest AI music technologies are now available in MusicFX DJ, Music AI Sandbox and YouTube Shorts.

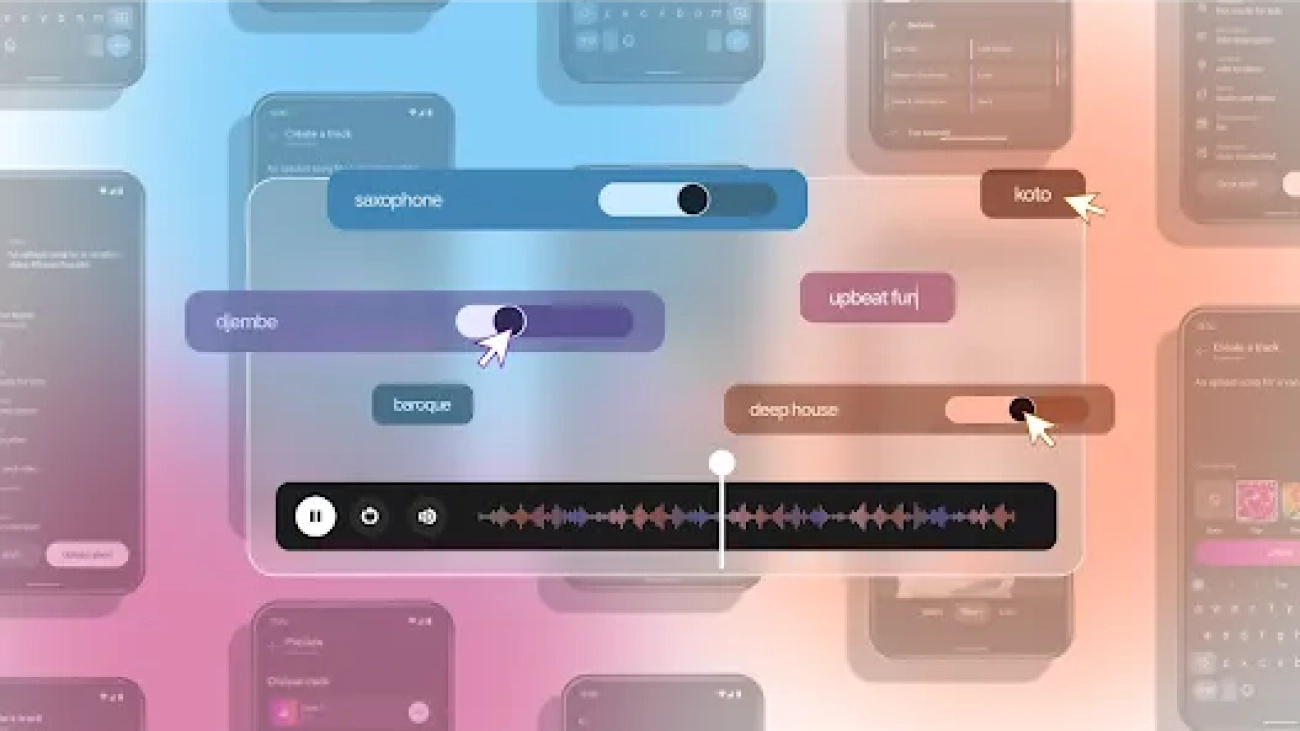

Our latest AI music technologies are now available in MusicFX DJ, Music AI Sandbox and YouTube ShortsRead More

New generative AI tools open the doors of music creation

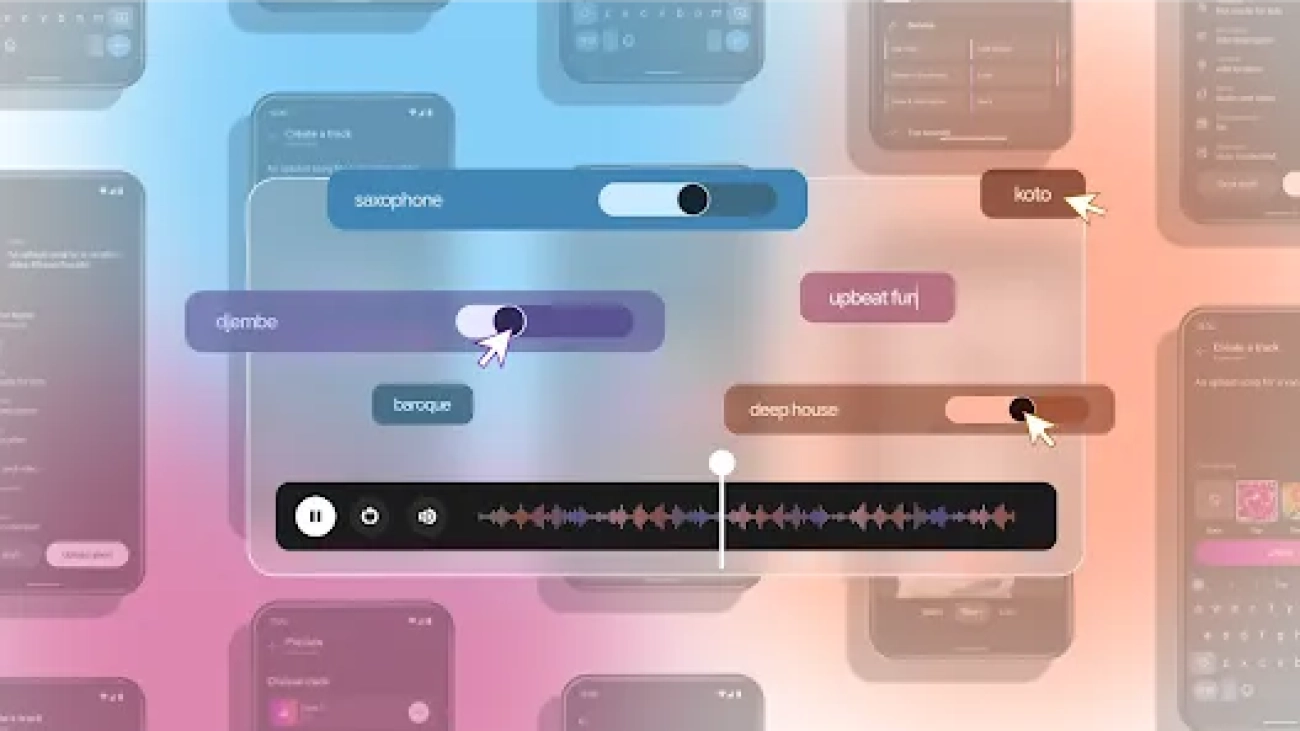

Our latest AI music technologies are now available in MusicFX DJ, Music AI Sandbox and YouTube ShortsRead More

New generative AI tools open the doors of music creation

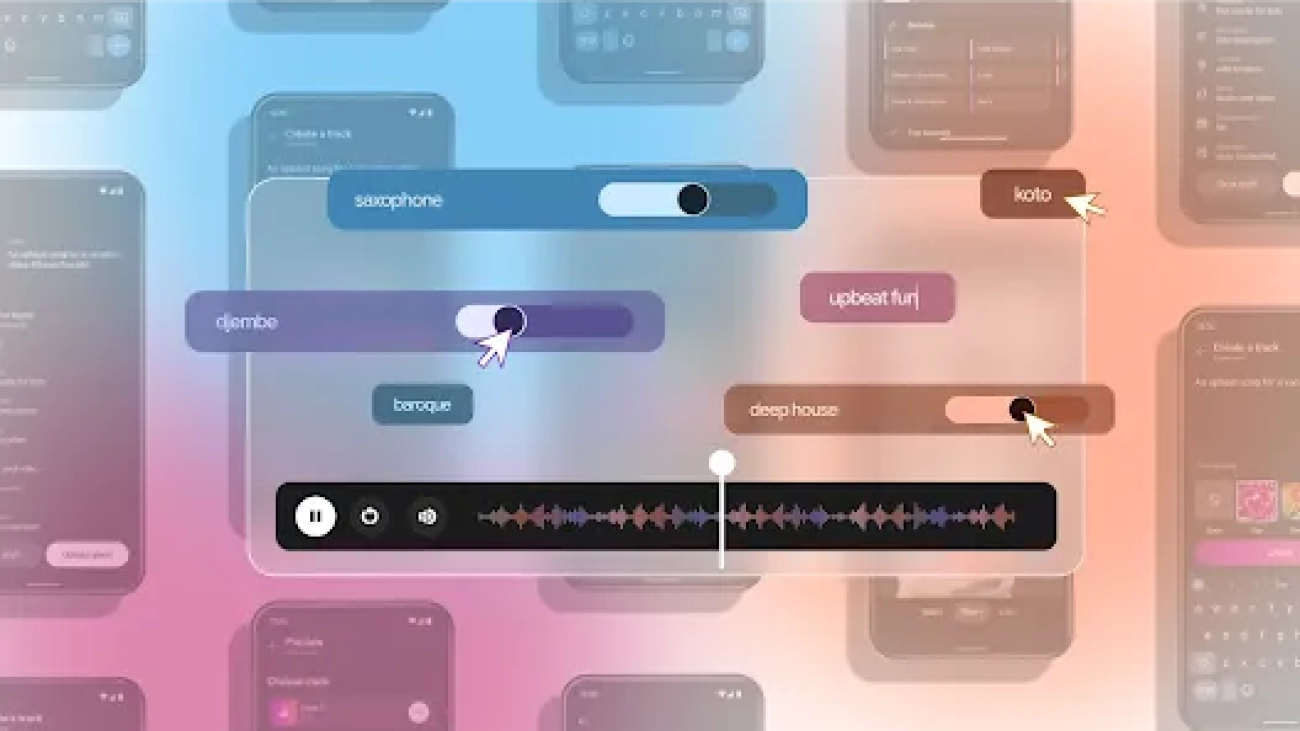

Our latest AI music technologies are now available in MusicFX DJ, Music AI Sandbox and YouTube ShortsRead More

New generative AI tools open the doors of music creation

Our latest AI music technologies are now available in MusicFX DJ, Music AI Sandbox and YouTube ShortsRead More

New generative AI tools open the doors of music creation

Our latest AI music technologies are now available in MusicFX DJ, Music AI Sandbox and YouTube ShortsRead More

Demis Hassabis & John Jumper awarded Nobel Prize in Chemistry

The award recognizes their work developing AlphaFold, a groundbreaking AI system that predicts the 3D structure of proteins from their amino acid sequences.
