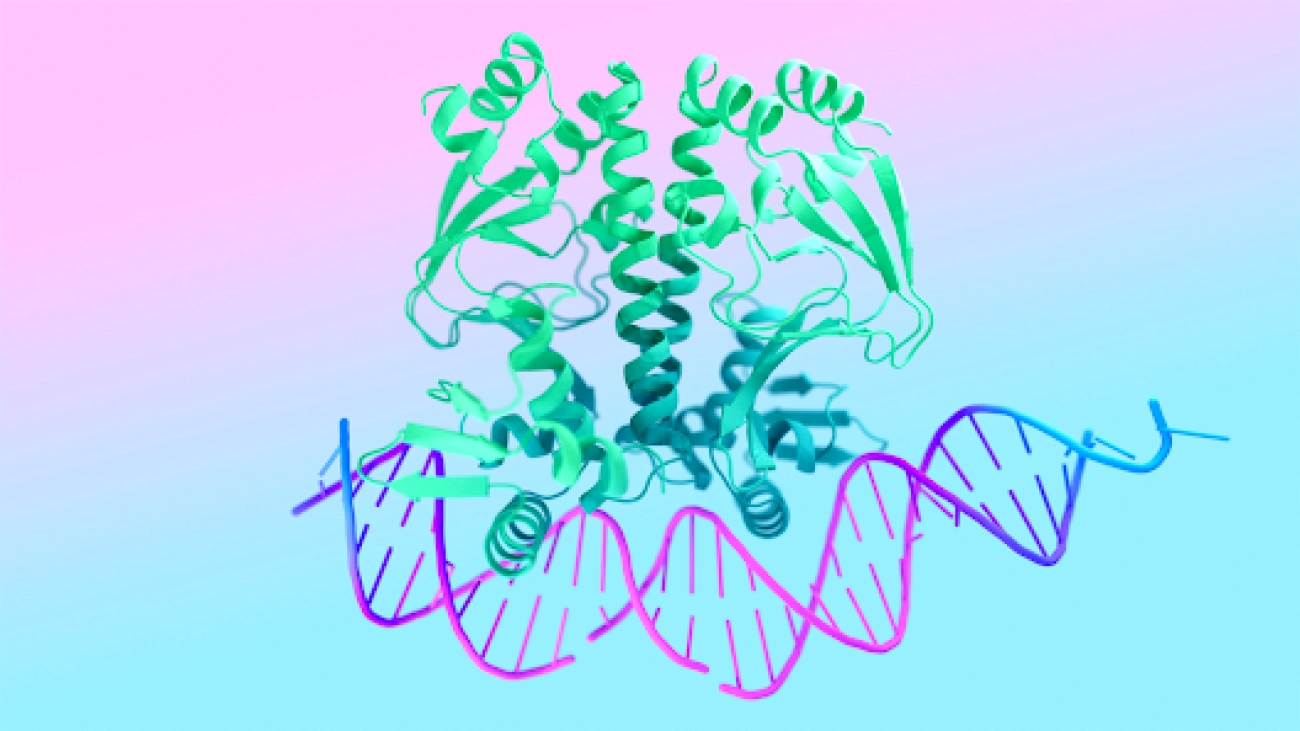

AlphaFold 3 predicts the structure and interactions of all of life’s molecules

Introducing a new AI model developed by Google DeepMind and Isomorphic Labs.

Google DeepMind at ICLR 2024

Developing next-gen AI agents, exploring new modalities, and pioneering foundational learning.