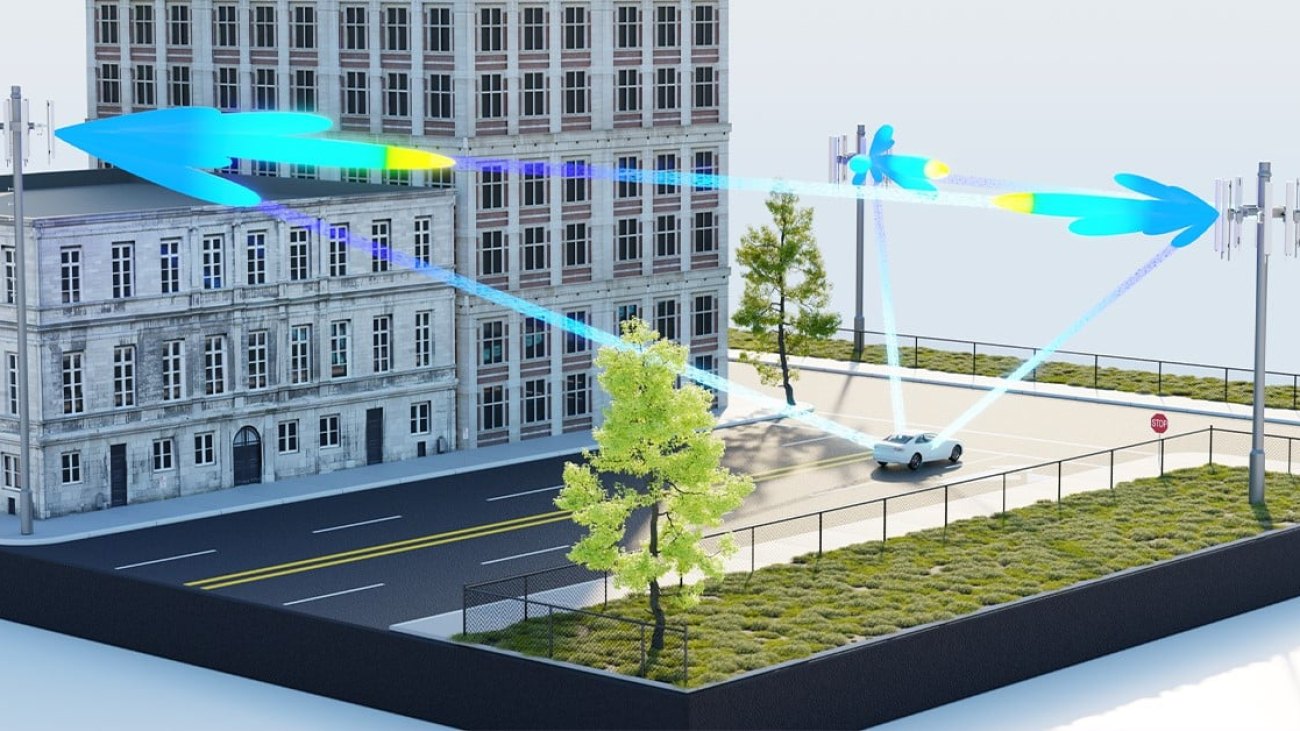

It’s been five years since the telecommunications industry first deployed 5G networks to drive new performance levels for customers and unlock new value for telcos.

But that industry milestone has been overshadowed by the emergence of generative AI and the swift pace at which telcos are embracing large language models as they seek to transform all parts of their business.

A recent survey of more than 400 telecommunications industry professionals from around the world showed that generative AI is the breakout technology of the year and that enthusiasm for, and adoption of, both generative AI and AI in general are booming. In addition, the survey showed that, among respondents, AI is both increasing revenues and reducing costs.

The generative AI insight is the main highlight in the second edition of NVIDIA’s “State of AI in Telecommunications” survey, which included questions covering a range of AI topics, including infrastructure spending, top use cases, biggest challenges and deployment models.

Survey respondents included C-suite leaders, managers, developers and IT architects from mobile telecoms, fixed and cable companies. The survey was conducted over eight weeks between October and December.

Ramping Up on Generative AI

The survey results show how generative AI went from relative obscurity in 2022 to a key solution within a year. Forty-three percent of respondents reported they were investing in it, clear evidence that the telecom industry is enthusiastically embracing the generative AI wave to address a wide variety of business goals.

More broadly, there was a marked increase in interest in adopting AI and growing expectations of success from the technology, especially among industry executives. In the survey, 53% of respondents agreed or strongly agreed that adopting AI will be a source of competitive advantage, compared to 39% who reported the same in 2022. For management respondents, the figure was 56%.

The primary reason for this sustained engagement is that many industry stakeholders expect AI to contribute to their company’s success. Overall, 56% of respondents agreed or strongly agreed that “AI is important to my company’s future success,” with the figure rising to 61% among decision-making management respondents. The overall figure is a 14-point boost over the 42% result from the 2022 survey.

Customer Experience Remains Key Driver of AI Investment

Telcos are adopting AI and generative AI to address a wide variety of business needs. Overall, 31% of respondents said they invested in at least six AI use cases in 2023, while 40% are planning to scale to six or more use cases in 2024.

But enhancing customer experiences remains the biggest AI opportunity for the telecom industry, with 48% of survey respondents selecting it as their main goal for using the technology. Likewise, some 35% of respondents identified customer experiences as their key AI success story.

For generative AI, 57% are using it to improve customer service and support, 57% to improve employee productivity, 48% for network operations and management, 40% for network planning and design, and 32% for marketing content generation.

Early Phase of AI Investment Cycle

The focus on customer experience is influencing investments. Investing in customer-experience optimization remains the most popular AI use case for 2023 (49% of respondents) and for generative AI investments (57% of respondents).

Telcos are also investing in other AI use cases: security (42%), network predictive maintenance (37%), network planning and operations (34%) and field operations (34%) are notable examples. However, using AI for fraud detection in transactions and payments had the biggest jump in popularity between 2022 and 2023, rising 14 points to 28% of respondents.

Overall, investments in AI are still in an early phase of the investment cycle, although they are growing strongly. In the survey, 43% of respondents reported an investment of over $1 million in AI in the previous year, 52% reported the same for the current year, and 66% reported their budget for AI infrastructure will increase in the next year.

For those who are already investing in AI, 67% reported that AI adoption has helped them increase revenues, with 19% of respondents noting that this revenue growth is more than 10% in specific business areas. Likewise, 63% reported that AI adoption has helped them reduce costs in specific business areas, with 14% noting that this cost reduction is more than 10%.

Innovation With Partners

While telcos are increasing their investments to improve their internal AI capabilities, partnerships remain critical for the adoption of AI solutions in the industry. This applies to both AI models and AI hardware infrastructure.

In the survey, 44% of respondents reported that co-development with partners is their company’s preferred approach to building AI solutions. Some 28% of respondents prefer to use open-source tools, while 25% take an AI-as-a-service approach. For generative AI, 29% of respondents built or customized models with a partner, an understandably conservative approach for the telecom industry with its stringent data protection rules.

For infrastructure, telcos are increasingly opting for cloud hosting, although the hybrid model remains dominant. In the survey, 31% of respondents reported that they run most of their AI workloads in the cloud (44% for hybrid), compared to 21% of respondents in the previous survey (56% for hybrid). This is helping to fuel the growing need for more localized cloud infrastructure.

Download the “State of AI in Telecommunications: 2024 Trends” report for in-depth results and insights.

Explore how AI is transforming telecommunications at NVIDIA GTC, featuring industry leaders including Amdocs, Indosat, KT, Samsung Research, ServiceNow, Singtel, SoftBank, Telconet and Verizon.

Learn more about NVIDIA solutions for telecommunications across customer experience, network operations, sovereign AI factories and more.
