Celebrate the new year with more cloud gaming. Experience the power and performance of the cloud with more than 20 new games to be added to GeForce NOW in January.

Start with five games available this week, including The Finals from Embark Studios.

And tune in to the NVIDIA Special Address at CES on Monday, Jan. 8, at 8 a.m. PT for the latest on gaming, AI-related news and more.

It’s the Final Countdown

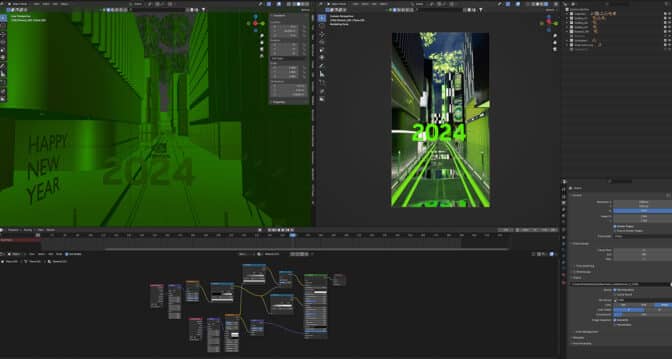

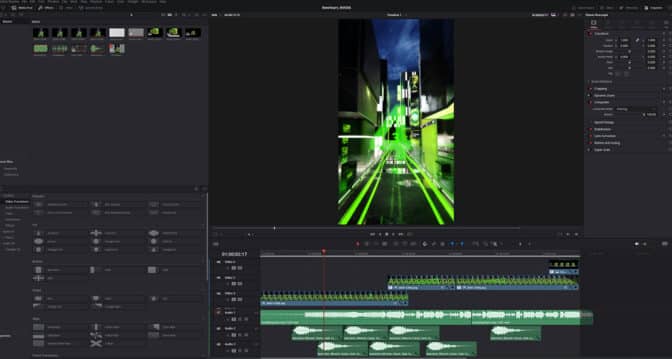

Fight for fame on the world’s biggest stage with Embark Studios’ The Finals. The free-to-play, multiplayer, first-person shooter is newly supported in the cloud this week, with RTX ON bringing cinematic lighting and visuals to GeForce NOW Ultimate and Priority members.

In The Finals, take part in a deadly TV game show that pits contestants against each other as they battle for a huge reward. Fight alongside teammates in virtual arenas that can be altered, exploited and even destroyed. Turn the environment itself into a weapon to take down other players. Drive viewers wild with thrilling combat and flair, using tricks like crashing a wrecking ball into opponents.

Harness the power of the cloud and reach the finals anywhere with the ability to stream across devices. Ultimate members can fight for glory with the advantage of longer gaming sessions, the highest frame rates, ray tracing and ultra-low latency.

In With the New

In Enshrouded, become Flameborn, the last ember of hope of a dying race. Awaken, survive the terror of a corrupting fog and reclaim the lost beauty of the kingdom. Venture into a vast world, vanquish punishing bosses, build grand halls and forge a path in this co-op survival action role-playing game for up to 16 players, launching in the cloud Jan. 24.

Don’t miss the five newly supported games joining the GeForce NOW library this week:

- Dishonored, for Hungary, Czech Republic and Poland (Steam)

- The Finals (Steam)

- Redmatch 2 (Steam)

- Scorn (Xbox, available for PC Game Pass)

- Sniper Elite 5 (Xbox, available for PC Game Pass)

And here’s what’s coming throughout the rest of January:

- War Hospital (New release on Steam, Jan. 11)

- Prince of Persia: The Lost Crown (New release on Ubisoft, Jan. 18)

- Turnip Boy Robs a Bank (New release on Steam and Xbox, available for PC Game Pass, Jan. 18)

- Stargate: Timekeepers (New release on Steam, Jan. 23)

- Enshrouded (New release on Steam, Jan. 24)

- Bang-On Balls: Chronicles (Steam)

- Firefighting Simulator – The Squad (Steam)

- Jected – Rivals (Steam)

- The Legend of Nayuta: Boundless Trails (Steam)

- RAILGRADE (Steam)

- Redmatch 2 (Steam)

- Shadow Tactics: Blades of the Shogun (Steam)

- Shadow Tactics: Blades of the Shogun – Aiko’s Choice (Steam)

- Solasta: Crown of the Magister (Steam)

- Survivalist: Invisible Strain (Steam)

- Witch It (Steam)

- Wobbly Life (Steam)

Doubled in December

In addition to the 70 games announced last month, 34 extra joined GeForce NOW:

- Avatar: Frontiers of Pandora (New release on Ubisoft, Dec. 7)

- Goat Simulator 3 (New release on Xbox, available on PC Game Pass, Dec. 7)

- LEGO Fortnite (New release on Epic Games Store, Dec. 7)

- Against the Storm (New release on Xbox, available on PC Game Pass, Dec. 8)

- Rocket Racing (New release on Epic Games Store, Dec. 8)

- Fortnite Festival (New release on Epic Games Store, Dec. 9)

- Stellaris Nexus (New release on Steam, Dec. 12)

- Tin Hearts (New release on Xbox, available on PC Game Pass, Dec. 12)

- Amazing Cultivation Simulator (Xbox, available on the Microsoft Store)

- Blasphemous 2 (Epic Games Store)

- Century: Age of Ashes (Xbox, available on the Microsoft Store)

- Chorus (Xbox, available on the Microsoft Store)

- Dungeons 4 (Xbox, available on PC Game Pass)

- Edge of Eternity (Xbox, available on the Microsoft Store)

- Farming Simulator 17 (Xbox, available on the Microsoft Store)

- Farming Simulator 22 (Xbox, available on PC Game Pass)

- Flashback 2 (Steam)

- Forza Horizon 4 (Steam)

- Forza Horizon 5 (Steam, Xbox and available on PC Game Pass)

- Hollow Knight (Xbox, available on PC Game Pass)

- The Front (Steam)

- Martha Is Dead (Xbox, available on the Microsoft Store)

- Minecraft Dungeons (Steam, Xbox and available on PC Game Pass)

- Monster Hunter: World (Steam)

- Neon Abyss (Xbox, available on PC Game Pass)

- Ori and the Will of the Wisps (Steam, Xbox and available on PC Game Pass)

- Ori and the Blind Forest: Definitive Edition (Steam)

- Raji: An Ancient Epic (Xbox, available on the Microsoft Store)

- Remnant: From the Ashes (Xbox, available on PC Game Pass)

- Remnant II (Xbox, available on PC Game Pass)

- Richman 10 (Xbox, available on the Microsoft Store)

- Spirittea (Xbox, available on PC Game Pass)

- Surgeon Simulator 2 (Xbox, available on the Microsoft Store)

- Sword and Fairy 7 (Xbox, available on PC Game Pass)

Terminator: Dark Fate – Defiance didn’t make it in December due to a change in its publish date. Stay tuned to GFN Thursday for updates.

What are you planning to play this weekend? Let us know on X or in the comments below.

NVIDIA GeForce NOW (@NVIDIAGFN), January 3, 2024: “what was the final game you played in 2023?”
