As the meteoric rise of ChatGPT demonstrates, generative AI can unlock enormous potential for companies, teams and individuals.

Whether simplifying time-consuming tasks or accelerating 3D workflows to boost creativity and productivity, generative AI is already making an impact across industries — and there’s much more to come.

How generative AI is paving the way for the future will be a key topic at NVIDIA GTC, a free, global conference for the era of AI and the metaverse, taking place online March 20-23.

Dozens of sessions will dive into topics around generative AI — from conversational text to the creation of virtual worlds from images. Here’s a sampling:

- Fireside Chat With NVIDIA founder and CEO Jensen Huang and OpenAI’s Ilya Sutskever: Join this conversation to learn more about the future of AI.

- How Generative AI Is Transforming the Creative Process: In this fireside chat, Scott Belsky, chief product officer at Adobe, and Bryan Catanzaro, vice president of applied deep learning research at NVIDIA, will discuss the powerful impact and future direction of generative AI.

- Generative AI Demystified: Discover how generative AI enables businesses to improve products and services. NVIDIA’s Bryan Catanzaro will discuss major developments in generative AI and share popular use cases driving cutting-edge generative applications.

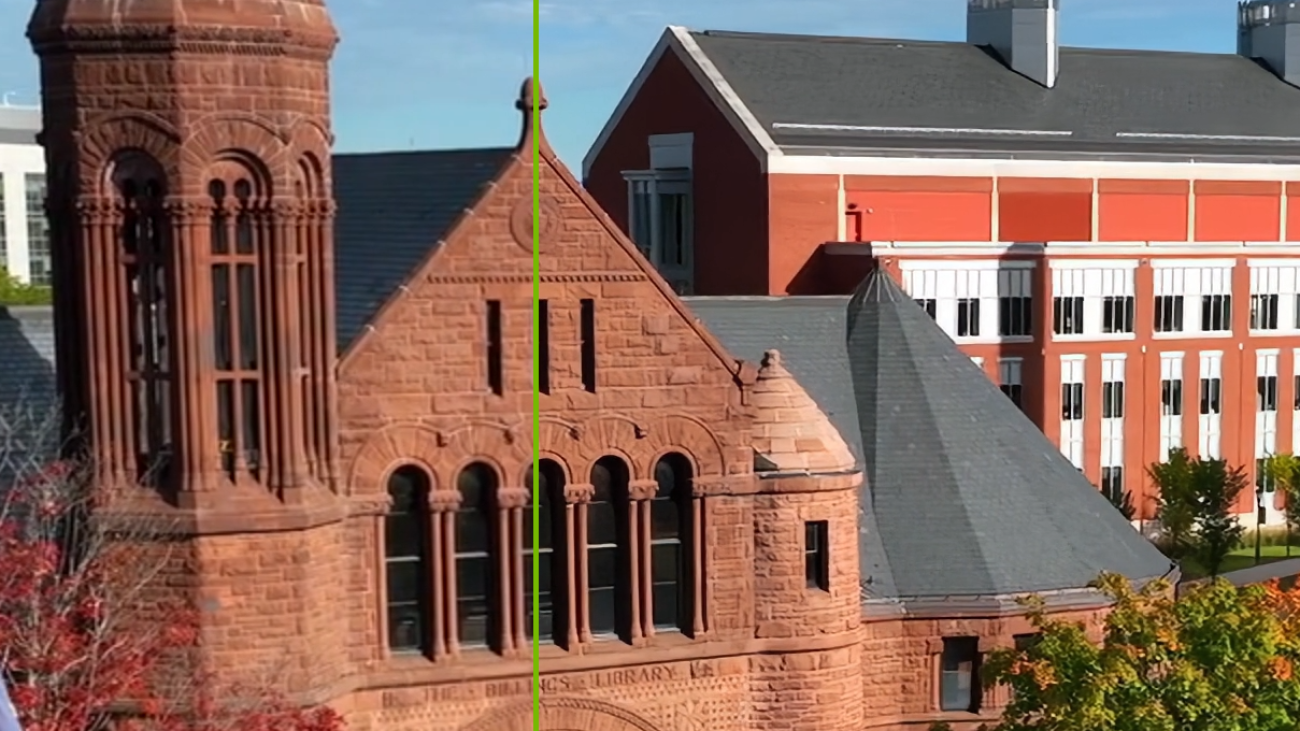

- Generating Modern Masterpieces: MoMA Dreams Become a Reality: Hear from multimedia artist Refik Anadol, as well as Museum of Modern Art curators Michelle Kuo and Paola Antonelli, who’ll discuss how AI helped transform the archive of data from New York’s legendary modern art museum into a real-time art piece — the first of its kind in a major art museum.

- How Generative AI Will Transform the Fashion Industry: See examples of how the latest generative tools are used in fashion, and hear from experts on their experiences in building a practice based on AI.

- Emerging Tech in Animation Pre-Production: Learn how Sony Pictures Animation is using generative AI to improve the creative pre-production and storytelling processes.

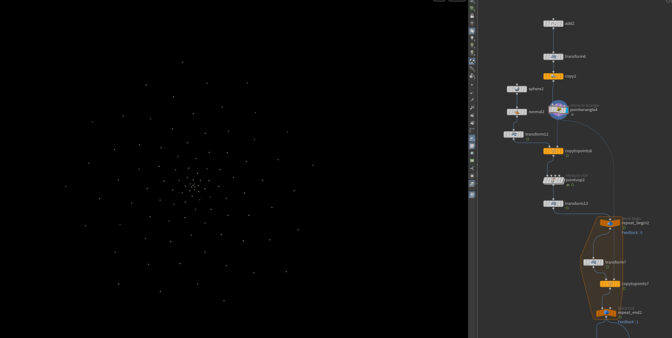

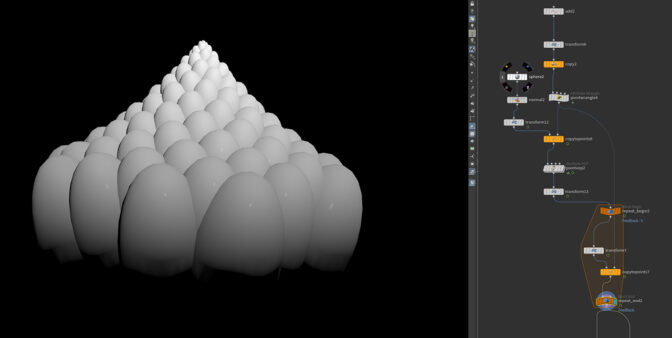

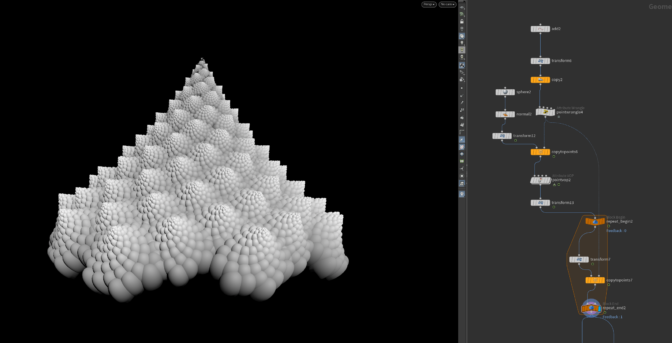

- 3D by AI: How Generative AI Will Make Building Virtual Worlds Easier: See some of NVIDIA’s latest work in generative AI models for creating 3D content and scenes, and explore how these tools and research can help 3D artists in their workflows.

Many more sessions on generative AI are available to explore at GTC, and registration is free. Join to discover the latest AI technology innovations and breakthroughs.

Featured image courtesy of Refik Anadol.
