Editor’s note: This post is part of our weekly In the NVIDIA Studio series, which celebrates featured artists, offers creative tips and tricks, and demonstrates how NVIDIA Studio technology accelerates creative workflows.

This week, In the NVIDIA Studio celebrates the 10th anniversary of Open Broadcaster Software (OBS) Studio and its 28.0 software release. Plus, popular streamer WATCHHOLLIE shares how she uses OBS and a GeForce RTX 3080 GPU in a single-PC setup to elevate her livestreams.

The OBS release, available starting later today, offers livestreamers new features. These include native integration of the AI-powered NVIDIA Broadcast virtual background and room echo removal effects, along with support for High Efficiency Video Coding (HEVC, or H.265) and high dynamic range (HDR).

NVIDIA also worked with Google to enable live streaming of HEVC and HDR content to YouTube using the NVIDIA Encoder (NVENC) — dedicated hardware on GeForce RTX GPUs that handles video encoding without taking resources away from the game.

OBS Bliss

OBS 28.0 has launched with a host of updates and improvements — including two NVIDIA Broadcast features exclusive to GeForce RTX streamers.

The AI-powered virtual background effect lets streamers remove or replace their backgrounds without a physical green screen. Room echo removal eliminates unwanted echoes during streaming sessions, which can come in handy when using a desktop mic or streaming from a room with a bit of echo. Both effects, as well as noise removal, are available within OBS as filters, giving users the flexibility to apply them per source.

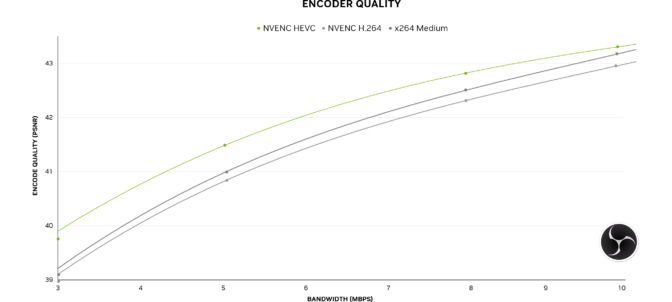

HEVC support in OBS 28.0 improves video compression by 15%. The implementation, built specifically for hardware-based HEVC encoders, enables users to record content and stream to supported platforms like YouTube with better quality.

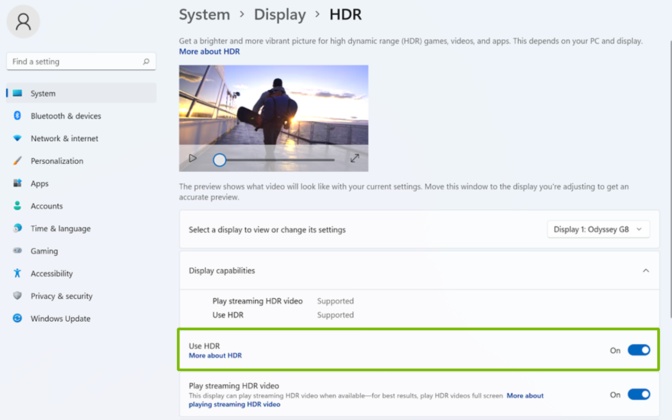

The update also enables recording and streaming in HDR, which displays a wider range of brightness and color, from deep shadows to bright highlights, adding vibrance and a dramatic improvement in visual quality.

Previously, users had to turn HDR off, since 10- or 12-bit HDR content would look washed out if it was recorded at 8-bit. With this update, users can keep HDR on and decide whether to capture or stream in SDR or full HDR.

As HDR displays become more popular, and with Windows 11’s new Auto HDR tool — which enables many games to be displayed in a virtual HDR mode — more users can benefit from this OBS update.

YouTube Live is one of the first platforms to support HEVC and HDR streaming. Streamers on the platform can give their audience higher-quality streams with only a few clicks.

Setup is quick and easy. Open the NVENC OBS guide and navigate to the "Recording and Streaming with HEVC and HDR" section for directions.

Go Live With WATCHHOLLIE

When WATCHHOLLIE started her channel, she had to learn a whole new way of creating content. Now, she’s teaching the next generation of streamers and showcasing how NVIDIA broadcasting tech helps her workflow.

Trained as a video editor, WATCHHOLLIE experimented with a YouTube channel before discovering Twitch as a way to get back into gaming. During the pandemic, Hollie used streaming as a way to stay in touch with friends.

“I was trapped in an 800-square-foot apartment, and I wanted to hang out with friends,” she said. “So I started streaming every day.”

Her streams promote mental health awareness and inclusivity, establishing a safe place for members of the LGBTQ+ community like herself.

Hollie’s first streams were simple, using OBS on a Mac with an external capture card to share console gameplay.

“I thought, ‘I hope someone shows up,’ and few people did,” she said.

She kept at it between freelance video-editing jobs, asking friends to tune in to her streams and offer advice.

To support her daily streaming schedule, Hollie built her first PC, initially with a GeForce RTX 2070 GPU and later upgrading to a GeForce RTX 3080 GPU. GeForce GPUs include NVENC as a discrete encoder that enables seamless gaming and streaming with maximum performance, even on a single-PC setup like WATCHHOLLIE's. NVENC's advanced GPU encoding also delivers higher video quality for recordings.

Soon, Hollie’s channel reached Affiliate status, which opened up monetization opportunities. She began accepting fewer freelance-editing gigs, and even turned down a full-time job offer. “I asked my mom what I should do, and she said, ‘This is your chance, you should take it,’” WATCHHOLLIE said.

Since achieving Partner status, she’s worked on her channel full time. “I’m very happy, and I feel like I’m making a difference with how I stream,” she said.

WATCHHOLLIE’s now turned her attention to helping new streamers get started. She founded WatchUs, a diversity-focused team that teaches aspiring creators how to grow their business, develop brand partnerships and improve their streaming setup.

“With WatchUs, I can help people and guide them through something that I didn’t have or was too scared to ask about,” she said.

More than 20 streamers were selected from over 200 applicants to join the team. They receive mentorship on every facet of streaming from coaches from all walks of life. The group focuses on "education, not ego," in WATCHHOLLIE's words, and the team plans to reopen applications soon.

When asked what it takes to be a successful streamer, Hollie didn’t hesitate to answer: “Stick to a schedule and play what you love. Don’t wait for people to talk to you to be entertaining — be the entertainment first, and then people will want to talk to you.”

Follow and subscribe to WATCHHOLLIE’s social media channels.

Step Into the NVIDIA Studio

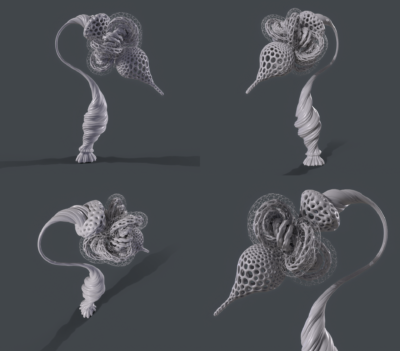

Just as WATCHHOLLIE has grown in her creative journey, the NVIDIA Studio team wants to see all artists’ personal growth. Amaze, or be amazed, as creatives share old and new works for the #CreatorJourneyChallenge across social media. Many extraordinary pieces have been shared so far.

It’s time to show how you’ve grown as an artist (just like @lowpolycurls)!

Join our #CreatorJourney challenge by sharing something old you created next to something new you’ve made for a chance to be featured on our channels.

Tag #CreatorJourney so we can see your post.

pic.twitter.com/PmkgOvhcBW

— NVIDIA Studio (@NVIDIACreators) August 15, 2022

To get in on the fun, share an older piece of artwork alongside a more recent one to highlight your growth as an artist. Follow and tag NVIDIA Studio on Instagram, Twitter or Facebook, and use the #CreatorJourneyChallenge tag for a chance to be showcased on NVIDIA Studio social media channels.

Access tutorials on the Studio YouTube channel and get updates directly in your inbox by subscribing to the NVIDIA Studio newsletter.

The post OBS Studio to Release Software Update 28.0 With NVIDIA Broadcast Features ‘In the NVIDIA Studio’ appeared first on NVIDIA Blog.